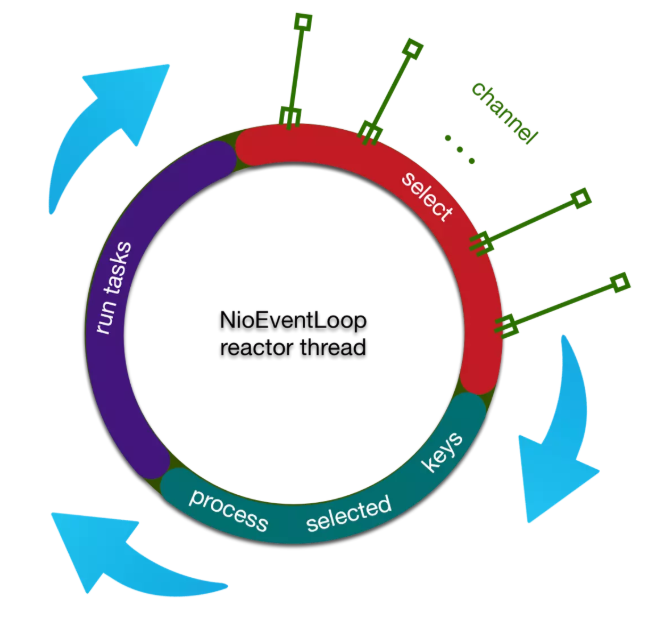

The previous two blog posts (netty source code analysis: unveiling reactor threads (1) and netty source code analysis: unveiling reactor threads (2)) described the first two steps of netty's reactor thread. Let's use the picture above to review:

A brief recap of the reactor thread trilogy:

1. Poll for IO events

2. Handle IO events

3. Process the task queue

Today we cover the last part of the trilogy, processing the task queue, which is the purple part in the figure above.

After reading this article, you will understand netty's asynchronous task mechanism and how it processes scheduled tasks. These details will help you write better netty applications.

Common usage scenarios of tasks in netty

We will analyze three typical task usage scenarios.

1. User-defined common tasks

    ctx.channel().eventLoop().execute(new Runnable() {
        @Override
        public void run() {
        }
    });

Let's follow the execute method and look at the key points

    @Override
    public void execute(Runnable task) {
        // ...
        addTask(task);
        // ...
    }

The execute method calls the addTask method

    protected void addTask(Runnable task) {
        if (task == null) {
            throw new NullPointerException("task");
        }
        if (!offerTask(task)) {
            reject(task);
        }
    }

addTask in turn calls offerTask. If the offer fails, it calls reject, which throws an exception directly through the default rejectedExecutionHandler.

    protected final void reject(Runnable task) {
        rejectedExecutionHandler.rejected(task, this);
    }

    final boolean offerTask(Runnable task) {
        if (isShutdown()) {
            reject();
        }
        return taskQueue.offer(task);
    }

Once we reach offerTask, the task has essentially landed: netty keeps tasks in a taskQueue. So what exactly is this taskQueue?

Let's see where taskQueue is defined and initialized

    private final Queue<Runnable> taskQueue;

    protected SingleThreadEventExecutor(EventExecutorGroup parent, Executor executor,
                                        boolean addTaskWakesUp, int maxPendingTasks,
                                        RejectedExecutionHandler rejectedHandler) {
        ...
        taskQueue = newTaskQueue(this.maxPendingTasks);
        ...
    }

    protected Queue<Runnable> newTaskQueue(int maxPendingTasks) {
        return new LinkedBlockingQueue<Runnable>(maxPendingTasks);
    }

In NioEventLoop, however, the task queue is an mpsc queue by default, because NioEventLoop overrides newTaskQueue to return one instead of the LinkedBlockingQueue shown above. mpsc stands for "multi producer single consumer". With an mpsc queue, netty can easily gather tasks from external threads and execute them serially on the single reactor thread. The same task queue pattern also suits applications such as multi-threaded data reporting with timed aggregation.
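To make the "multi producer single consumer" idea concrete, here is a minimal JDK-only sketch. It is not netty code: the class name MpscSketch and the method runDemo are hypothetical, and a ConcurrentLinkedQueue stands in for netty's mpsc queue. Several producer threads offer tasks, and one consumer thread runs them serially, so the counter needs no lock.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical sketch: many producers, one consumer, tasks executed serially.
final class MpscSketch {

    /** Returns the counter value after every produced task ran on the single consumer thread. */
    static int runDemo(int producers, int tasksPerProducer) {
        // Assumption: ConcurrentLinkedQueue stands in for netty's mpsc queue.
        Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<>();
        int[] counter = {0}; // only ever mutated by the consumer thread
        int expected = producers * tasksPerProducer;

        // Producers: external threads that only enqueue work.
        for (int p = 0; p < producers; p++) {
            new Thread(() -> {
                for (int i = 0; i < tasksPerProducer; i++) {
                    taskQueue.offer(() -> counter[0]++);
                }
            }).start();
        }

        // Single consumer: plays the role of the reactor thread.
        Thread consumer = new Thread(() -> {
            int executed = 0;
            while (executed < expected) {
                Runnable task = taskQueue.poll();
                if (task == null) {
                    Thread.yield(); // nothing queued yet
                } else {
                    task.run();
                    executed++;
                }
            }
        });
        consumer.start();
        try {
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter[0];
    }
}
```

Calling MpscSketch.runDemo(4, 1000) should return 4000, because every increment happens on the consumer thread even though four threads submitted the work.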

In the scenario discussed in this section, all code runs in the reactor thread, so every call to inEventLoop() returns true. Since everything executes in the reactor thread, the mpsc queue does not really come into play here. In the second scenario, mpsc shows its strength.

2. Calling channel methods from a non-reactor thread

    // non-reactor thread
    channel.write(...)

This case is common in push systems. Typically, a business thread looks up the channel reference that corresponds to a user's identifier and then calls a write method to push a message to that user, which brings us to this scenario.

The call chain of the channel.write() family of methods will be analyzed in a separate article. For now, we only need to know that a write call eventually reaches the following method

AbstractChannelHandlerContext.java

    private void write(Object msg, boolean flush, ChannelPromise promise) {
        AbstractChannelHandlerContext next = findContextOutbound();
        final Object m = pipeline.touch(msg, next);
        EventExecutor executor = next.executor();
        if (executor.inEventLoop()) {
            if (flush) {
                next.invokeWriteAndFlush(m, promise);
            } else {
                next.invokeWrite(m, promise);
            }
        } else {
            AbstractWriteTask task;
            if (flush) {
                task = WriteAndFlushTask.newInstance(next, m, promise);
            } else {
                task = WriteTask.newInstance(next, m, promise);
            }
            safeExecute(executor, task, promise, m);
        }
    }

When an external thread calls write, executor.inEventLoop() returns false, so execution goes straight into the else branch, wraps the write in a WriteTask (this is a write rather than a writeAndFlush, so the flush parameter is false), and then calls the safeExecute method.

    private static void safeExecute(EventExecutor executor, Runnable runnable, ChannelPromise promise, Object msg) {
        try {
            executor.execute(runnable);
        } // ...
    }

From here the call chain is the same as in the first scenario, but there is one obvious difference between the two. In the first scenario, the thread that starts the call chain is the reactor thread; in the second, it is a user thread, and there may be many user threads. Concurrent writes to the taskQueue from multiple threads obviously raise thread synchronization problems, so this is the scenario where netty's mpsc queue earns its place.
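The inEventLoop() branching can be sketched with the JDK alone. The class below is a hypothetical stand-in, not netty's implementation: MiniEventLoop, doWrite, and the thread name "mini-reactor" are made up for illustration, and a LinkedBlockingQueue is used instead of an mpsc queue. A write from the loop's own thread runs immediately; a write from any other thread is wrapped in a task and queued.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch of "run directly if in the loop thread, otherwise enqueue".
final class MiniEventLoop {
    private final BlockingQueue<Runnable> taskQueue = new LinkedBlockingQueue<>();
    private final Thread thread = new Thread(this::run, "mini-reactor");

    MiniEventLoop() {
        thread.setDaemon(true);
        thread.start();
    }

    // The single loop thread drains the queue and runs tasks serially.
    private void run() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                taskQueue.take().run();
            }
        } catch (InterruptedException e) {
            // interrupted by shutdown(): leave the loop
        }
    }

    boolean inEventLoop() {
        return Thread.currentThread() == thread;
    }

    /** Completes the returned future with the name of the thread that performed the write. */
    CompletableFuture<String> write(String msg) {
        CompletableFuture<String> promise = new CompletableFuture<>();
        if (inEventLoop()) {
            doWrite(msg, promise);                        // already on the loop thread
        } else {
            taskQueue.offer(() -> doWrite(msg, promise)); // hand over to the loop thread
        }
        return promise;
    }

    private void doWrite(String msg, CompletableFuture<String> promise) {
        promise.complete(Thread.currentThread().getName() + " wrote " + msg);
    }

    void shutdown() {
        thread.interrupt();
    }
}
```

If the main thread calls new MiniEventLoop().write("hello").join(), the result should be "mini-reactor wrote hello": the caller was an external thread, yet the write itself ran on the loop thread.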

3. User-defined scheduled tasks

    ctx.channel().eventLoop().schedule(new Runnable() {
        @Override
        public void run() {
        }
    }, 60, TimeUnit.SECONDS);

The third scenario is scheduled task logic. The most commonly used method is the one above: execute a task after a certain delay

Let's follow the schedule method

    @Override
    public ScheduledFuture<?> schedule(Runnable command, long delay, TimeUnit unit) {
        ...
        return schedule(new ScheduledFutureTask<Void>(
                this, command, null, ScheduledFutureTask.deadlineNanos(unit.toNanos(delay))));
    }

Through ScheduledFutureTask, the user-defined task is wrapped once more into a netty internal task

    <V> ScheduledFuture<V> schedule(final ScheduledFutureTask<V> task) {
        if (inEventLoop()) {
            scheduledTaskQueue().add(task);
        } ...
        return task;
    }

This looks familiar. For non-scheduled tasks, netty lands tasks in an mpsc queue. Is there a similar queue for scheduled tasks? With that question in mind, let's move on

    Queue<ScheduledFutureTask<?>> scheduledTaskQueue() {
        if (scheduledTaskQueue == null) {
            scheduledTaskQueue = new PriorityQueue<ScheduledFutureTask<?>>();
        }
        return scheduledTaskQueue;
    }

Sure enough, scheduledTaskQueue() returns a priority queue, and add is then called to put the scheduled task into it. But why can a plain priority queue be used here without worrying about multithreaded concurrency?

Because in the scenario we are discussing, the call chain starts in the reactor thread, so there is no multi-threaded concurrency.

But what if a user schedules a task from outside the reactor thread? Such scenarios are rare, but netty, as an extremely robust high-performance IO framework, must take them into account.

To handle this, when schedule is called from an external thread, netty wraps the logic of adding the scheduled task into an ordinary task. The only job of that ordinary task is to add the scheduled task, rather than being the scheduled task itself, which brings us back to the second scenario. In this way, access to the PriorityQueue stays single-threaded: only the reactor thread touches it.

The complete schedule method

    <V> ScheduledFuture<V> schedule(final ScheduledFutureTask<V> task) {
        if (inEventLoop()) {
            scheduledTaskQueue().add(task);
        } else {
            // Enter scenario 2: further encapsulate the task
            execute(new Runnable() {
                @Override
                public void run() {
                    scheduledTaskQueue().add(task);
                }
            });
        }
        return task;
    }

While reading source code details, we should keep asking why. It also helps keep us from dozing off while reading. For example, why are scheduled tasks stored in a priority queue? We can think about the characteristics of a priority queue before looking at the source code

A priority queue keeps its elements in a certain order, so the elements must be comparable. Since each element here is a scheduled task, scheduled tasks must be comparable. So what is there to compare?

Each task has a deadline for its next execution, and deadlines can be compared. When two deadlines are equal, the order in which the tasks were added can be compared as well.

With this conjecture in mind, let's study ScheduledFutureTask and extract the key parts

    final class ScheduledFutureTask<V> extends PromiseTask<V> implements ScheduledFuture<V> {
        private static final AtomicLong nextTaskId = new AtomicLong();
        private static final long START_TIME = System.nanoTime();

        static long nanoTime() {
            return System.nanoTime() - START_TIME;
        }

        private final long id = nextTaskId.getAndIncrement();
        /* 0 - no repeat, >0 - repeat at fixed rate, <0 - repeat with fixed delay */
        private final long periodNanos;

        @Override
        public int compareTo(Delayed o) {
            //...
        }

        // Reduced code
        @Override
        public void run() {
        }
    }

Here we spot the compareTo method at a glance. Jumping to the implemented interface (cmd+u) shows that it comes from the Comparable interface

    public int compareTo(Delayed o) {
        if (this == o) {
            return 0;
        }

        ScheduledFutureTask<?> that = (ScheduledFutureTask<?>) o;
        long d = deadlineNanos() - that.deadlineNanos();
        if (d < 0) {
            return -1;
        } else if (d > 0) {
            return 1;
        } else if (id < that.id) {
            return -1;
        } else if (id == that.id) {
            throw new Error();
        } else {
            return 1;
        }
    }

Inside the method body, we see that two scheduled tasks are compared by deadline first. When the deadlines are equal, the ids are compared, that is, the order in which the tasks were added. If the ids are equal too, an Error is thrown

In this way, when scheduled tasks are executed, the task with the earliest deadline is guaranteed to run first
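The ordering rule can be tried out in isolation with a plain java.util.PriorityQueue. The sketch below is a simplified, hypothetical model (DeadlineTask, its name field, and drainOrder are invented for illustration). It compares by deadline and then by insertion id, like the method above, but uses Long.compare for the id and leaves out the Error thrown for duplicate ids.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical model of a scheduled task ordered by (deadline, insertion id).
final class DeadlineTask implements Comparable<DeadlineTask> {
    private static final AtomicLong NEXT_ID = new AtomicLong();

    final long id = NEXT_ID.getAndIncrement(); // grows with creation order
    final long deadlineNanos;
    final String name;

    DeadlineTask(String name, long deadlineNanos) {
        this.name = name;
        this.deadlineNanos = deadlineNanos;
    }

    @Override
    public int compareTo(DeadlineTask that) {
        if (this == that) {
            return 0;
        }
        long d = deadlineNanos - that.deadlineNanos;
        if (d != 0) {
            return d < 0 ? -1 : 1;        // earlier deadline first
        }
        return Long.compare(id, that.id); // same deadline: earlier insertion first
    }

    /** Adds four tasks in scrambled order and returns the names in polling order. */
    static List<String> drainOrder() {
        PriorityQueue<DeadlineTask> queue = new PriorityQueue<>();
        queue.add(new DeadlineTask("late", 300));
        queue.add(new DeadlineTask("early-first", 100));
        queue.add(new DeadlineTask("early-second", 100));
        queue.add(new DeadlineTask("middle", 200));

        List<String> order = new ArrayList<>();
        for (DeadlineTask t; (t = queue.poll()) != null; ) {
            order.add(t.name);
        }
        return order;
    }
}
```

DeadlineTask.drainOrder() returns [early-first, early-second, middle, late]: the two tasks with deadline 100 come out first, in the order they were created.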

Next, let's see how netty ensures the execution of the various kinds of scheduled tasks. There are three types of scheduled tasks in netty

1. Execute once after a delay

2. Execute repeatedly at a fixed rate

3. Execute repeatedly, each run starting a fixed delay after the previous run finishes

Netty uses the periodNanos field to distinguish these three cases, as its own comment says

    /* 0 - no repeat, >0 - repeat at fixed rate, <0 - repeat with fixed delay */
    private final long periodNanos;

With this background in mind, let's look at how netty handles these three types of scheduled tasks

    @Override
    public void run() {
        assert executor().inEventLoop();
        try {
            if (periodNanos == 0) {
                if (setUncancellableInternal()) {
                    V result = task.call();
                    setSuccessInternal(result);
                }
            } else {
                // check if is done as it may was cancelled
                if (!isCancelled()) {
                    task.call();
                    if (!executor().isShutdown()) {
                        long p = periodNanos;
                        if (p > 0) {
                            deadlineNanos += p;
                        } else {
                            deadlineNanos = nanoTime() - p;
                        }
                        if (!isCancelled()) {
                            // scheduledTaskQueue can never be null as we lazy init it before submit the task!
                            Queue<ScheduledFutureTask<?>> scheduledTaskQueue =
                                    ((AbstractScheduledEventExecutor) executor()).scheduledTaskQueue;
                            assert scheduledTaskQueue != null;
                            scheduledTaskQueue.add(this);
                        }
                    }
                }
            }
        } catch (Throwable cause) {
            setFailureInternal(cause);
        }
    }

if (periodNanos == 0) corresponds to a scheduled task that runs once after a delay. After it runs, the task is finished.

Otherwise, execution enters the else block, which runs the task first and then distinguishes the task type. If periodNanos is greater than 0, the task runs at a fixed rate regardless of how long each run takes, so the next deadline is the current deadline plus the interval periodNanos. Otherwise, the task runs again a fixed delay after each run finishes, so the next deadline is the current time plus the interval; because p is negative here, - p adds a positive interval. Finally, the task object is added to the queue again, which implements the repeated execution
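The two deadline formulas are easy to confuse, so here is a tiny worked sketch. PeriodSketch and nextDeadline are hypothetical names; the method only mirrors the arithmetic of the branch above, taking the current time as a parameter instead of calling nanoTime().

```java
// Hypothetical helper that mirrors the deadline arithmetic of the run() method above.
final class PeriodSketch {

    /**
     * @param currentDeadline deadline of the run that just finished
     * @param now             current time when the run finished
     * @param periodNanos     >0 for fixed rate, <0 for fixed delay
     */
    static long nextDeadline(long currentDeadline, long now, long periodNanos) {
        if (periodNanos > 0) {
            // Fixed rate: measured from the previous deadline, independent of run duration.
            return currentDeadline + periodNanos;
        }
        // Fixed delay: measured from now; periodNanos is negative, so subtracting adds.
        return now - periodNanos;
    }
}
```

Suppose a task had deadline 100, finished at time 130, and uses an interval of 50. With fixed rate (periodNanos = 50) the next deadline is 100 + 50 = 150. With fixed delay (periodNanos = -50) it is 130 - (-50) = 180.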

Now that we understand how netty adds tasks internally, we can look at how the third part of the reactor thread schedules them

Task scheduling of the reactor thread

First, we start from the outermost code

    runAllTasks(long timeoutNanos);

As the name suggests, this line takes out tasks and runs them within a bounded period of time. timeoutNanos is the maximum time this method may spend. Why does netty do this? If the reactor thread stayed here for too long, IO events that cannot be handled in the meantime would pile up (see the first two steps of the reactor thread), and a large number of client requests would be blocked. Therefore, by default, netty limits how long it spends executing its internal task queue

OK, let's follow it

    /**
     * Poll all tasks from the task queue and run them via {@link Runnable#run()} method. This method stops running
     * the tasks in the task queue and returns if it ran longer than {@code timeoutNanos}.
     */
    protected boolean runAllTasks(long timeoutNanos) {
        fetchFromScheduledTaskQueue();
        Runnable task = pollTask();
        if (task == null) {
            afterRunningAllTasks();
            return false;
        }

        final long deadline = ScheduledFutureTask.nanoTime() + timeoutNanos;
        long runTasks = 0;
        long lastExecutionTime;
        for (;;) {
            safeExecute(task);

            runTasks ++;

            // Check timeout every 64 tasks because nanoTime() is relatively expensive.
            // XXX: Hard-coded value - will make it configurable if it is really a problem.
            if ((runTasks & 0x3F) == 0) {
                lastExecutionTime = ScheduledFutureTask.nanoTime();
                if (lastExecutionTime >= deadline) {
                    break;
                }
            }

            task = pollTask();
            if (task == null) {
                lastExecutionTime = ScheduledFutureTask.nanoTime();
                break;
            }
        }

        afterRunningAllTasks();
        this.lastExecutionTime = lastExecutionTime;
        return true;
    }

This code is the complete logic the reactor uses to execute tasks. It can be broken down into the following steps

1. Transfer scheduled tasks from scheduledTaskQueue to taskQueue (the mpsc queue)

2. Calculate the deadline of this task cycle

3. Execute tasks

4. Finish up

Let's analyze these steps one by one

Transfer scheduled tasks from scheduledTaskQueue to taskQueue (the mpsc queue)

First, the fetchFromScheduledTaskQueue() method is called to transfer the expired scheduled tasks to the mpsc queue

    private boolean fetchFromScheduledTaskQueue() {
        long nanoTime = AbstractScheduledEventExecutor.nanoTime();
        Runnable scheduledTask = pollScheduledTask(nanoTime);
        while (scheduledTask != null) {
            if (!taskQueue.offer(scheduledTask)) {
                // No space left in the task queue add it back to the scheduledTaskQueue so we pick it up again.
                scheduledTaskQueue().add((ScheduledFutureTask<?>) scheduledTask);
                return false;
            }
            scheduledTask = pollScheduledTask(nanoTime);
        }
        return true;
    }

As you can see, netty is very careful when transferring tasks from the scheduledTaskQueue to the taskQueue. When a task cannot be offered to the taskQueue, it must be added back to the scheduledTaskQueue it was taken from

The logic for pulling a scheduled task from the scheduledTaskQueue is as follows. The nanoTime parameter is the current time (strictly speaking, the number of nanoseconds elapsed since the ScheduledFutureTask class was loaded)

    protected final Runnable pollScheduledTask(long nanoTime) {
        assert inEventLoop();

        Queue<ScheduledFutureTask<?>> scheduledTaskQueue = this.scheduledTaskQueue;
        ScheduledFutureTask<?> scheduledTask = scheduledTaskQueue == null ? null : scheduledTaskQueue.peek();
        if (scheduledTask == null) {
            return null;
        }

        if (scheduledTask.deadlineNanos() <= nanoTime) {
            scheduledTaskQueue.remove();
            return scheduledTask;
        }
        return null;
    }

As you can see, pollScheduledTask returns a task only when the deadline of the task at the head of the queue has been reached
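The transfer step, including the "add it back when the task queue is full" branch, can be modelled with JDK collections. This is a hypothetical simplification: TransferSketch and fetchExpired are invented names, and each task is represented only by its deadline (a Long). A bounded ArrayBlockingQueue is used by the caller so that offer can actually fail.

```java
import java.util.PriorityQueue;
import java.util.Queue;

// Hypothetical model: tasks are represented by their deadlines only.
final class TransferSketch {

    /**
     * Moves every entry whose deadline is <= now from the scheduled queue to the task queue.
     * Returns false if the task queue refused an entry; that entry is put back first.
     */
    static boolean fetchExpired(PriorityQueue<Long> scheduled, Queue<Long> taskQueue, long now) {
        Long head;
        // peek() exposes the earliest deadline without removing it.
        while ((head = scheduled.peek()) != null && head <= now) {
            scheduled.remove();
            if (!taskQueue.offer(head)) {
                // No space left: return the entry so a later cycle picks it up again.
                scheduled.add(head);
                return false;
            }
        }
        return true;
    }
}
```

With scheduled deadlines 10, 20, 30, 50, a task queue of capacity 2 and now = 30, the call moves 10 and 20, fails to offer 30, puts it back, and returns false. The scheduled queue then still holds 30 and 50.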

Calculate the deadline of this task cycle

    protected boolean runAllTasks(long timeoutNanos) {
        fetchFromScheduledTaskQueue();
        Runnable task = pollTask();
        if (task == null) {
            afterRunningAllTasks();
            return false;
        }

        final long deadline = ScheduledFutureTask.nanoTime() + timeoutNanos;
        long runTasks = 0;
        long lastExecutionTime;
        ...
    }

This step polls the first task and computes the deadline of the current task cycle from the timeoutNanos passed in by the reactor thread. runTasks and lastExecutionTime keep track of the execution state as the loop runs

Execute tasks

    protected boolean runAllTasks(long timeoutNanos) {
        ...
        for (;;) {
            safeExecute(task);

            runTasks ++;

            // Check timeout every 64 tasks because nanoTime() is relatively expensive.
            // XXX: Hard-coded value - will make it configurable if it is really a problem.
            if ((runTasks & 0x3F) == 0) {
                lastExecutionTime = ScheduledFutureTask.nanoTime();
                if (lastExecutionTime >= deadline) {
                    break;
                }
            }

            task = pollTask();
            if (task == null) {
                lastExecutionTime = ScheduledFutureTask.nanoTime();
                break;
            }
        }

        afterRunningAllTasks();
        this.lastExecutionTime = lastExecutionTime;
        return true;
    }

This step is the core code netty uses to execute all tasks.

First, safeExecute is called to run the task safely: any exception is caught and logged instead of being propagated

    protected static void safeExecute(Runnable task) {
        try {
            task.run();
        } catch (Throwable t) {
            logger.warn("A task raised an exception. Task: {}", task, t);
        }
    }

The loop around it, once more:

    for (;;) {
        safeExecute(task);

        runTasks ++;

        // Check timeout every 64 tasks because nanoTime() is relatively expensive.
        // XXX: Hard-coded value - will make it configurable if it is really a problem.
        if ((runTasks & 0x3F) == 0) {
            lastExecutionTime = ScheduledFutureTask.nanoTime();
            if (lastExecutionTime >= deadline) {
                break;
            }
        }

        task = pollTask();
        if (task == null) {
            lastExecutionTime = ScheduledFutureTask.nanoTime();
            break;
        }
    }

Each executed task increments runTasks. Every 64 tasks (that is, whenever runTasks & 0x3F is 0), netty checks whether the current time has passed the deadline of this task cycle. If it has, the loop breaks; otherwise execution continues. This shows how much care netty puts into performance: if the task queue holds a large number of small tasks, checking the deadline after every single task would be relatively inefficient
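One consequence of the batched check is that the time budget is only enforced at multiples of 64 tasks. The following JDK-only sketch shows this; BatchedDeadlineSketch, runAllTasks, and queueOf are hypothetical names, System.nanoTime() replaces ScheduledFutureTask.nanoTime(), and the exception handling and tail tasks of the real method are left out.

```java
import java.util.ArrayDeque;
import java.util.Queue;

// Hypothetical sketch of "check the deadline only every 64 tasks".
final class BatchedDeadlineSketch {

    /** Runs queued tasks until the queue is empty or the budget is exceeded; returns how many ran. */
    static long runAllTasks(Queue<Runnable> taskQueue, long timeoutNanos) {
        Runnable task = taskQueue.poll();
        if (task == null) {
            return 0;
        }
        final long deadline = System.nanoTime() + timeoutNanos;
        long runTasks = 0;
        for (;;) {
            task.run();
            runTasks++;
            // Read the clock only when the low 6 bits are zero, i.e. every 64th task.
            if ((runTasks & 0x3F) == 0 && System.nanoTime() - deadline >= 0) {
                break;
            }
            task = taskQueue.poll();
            if (task == null) {
                break;
            }
        }
        return runTasks;
    }

    /** Builds a queue of n no-op tasks. */
    static Queue<Runnable> queueOf(int n) {
        Queue<Runnable> queue = new ArrayDeque<>();
        for (int i = 0; i < n; i++) {
            queue.add(() -> { });
        }
        return queue;
    }
}
```

With a budget of 0 nanoseconds, runAllTasks(queueOf(200), 0) still runs 64 tasks before the first clock check stops it, and runAllTasks(queueOf(10), 0) runs all 10 because the check is never reached. The trade-off is fewer clock reads in exchange for a coarser timeout.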

Finishing up

    afterRunningAllTasks();
    this.lastExecutionTime = lastExecutionTime;

The finishing work is very simple. It calls the afterRunningAllTasks method

    @Override
    protected void afterRunningAllTasks() {
        runAllTasksFrom(tailTasks);
    }

NioEventLoop can add finishing tasks to tailTasks through the executeAfterEventLoopIteration method of its parent class SingleThreadEventLoop. For example, if you want to measure how long each task cycle takes, you can call this method

    @UnstableApi
    public final void executeAfterEventLoopIteration(Runnable task) {
        ObjectUtil.checkNotNull(task, "task");
        if (isShutdown()) {
            reject();
        }

        if (!tailTasks.offer(task)) {
            reject(task);
        }

        if (wakesUpForTask(task)) {
            wakeup(inEventLoop());
        }
    }

this.lastExecutionTime = lastExecutionTime; simply records the time of the last task execution. When I searched for references to this field, I found only assignments and no reads; I may raise an issue with the netty project about it another day

That basically wraps up the third part of the reactor thread. If you found this easy to read, congratulations: you are now quite familiar with netty's task mechanism. And congratulations to me as well, for managing to explain these mechanisms clearly. Let's sum up one last time, in the form of tips

1. When the current reactor thread calls into its own eventLoop, the operation is executed directly. Otherwise, it is added to the task queue and executed later

2. netty's internal tasks are divided into ordinary tasks and scheduled tasks, which land in the MpscQueue and the PriorityQueue respectively

3. netty transfers expired scheduled tasks from the PriorityQueue to the MpscQueue before each task cycle

4. netty checks whether to exit the task cycle every 64 tasks