RocketMQ Source Analysis: NameServer

Source analysis covers a lot of ground. We cannot read all of RocketMQ's source code, so we focus on interpreting the core code.

The series covers the following parts of the source code:

1. RocketMQ source analysis: overall architecture
2. RocketMQ source analysis: NameServer
3. RocketMQ source analysis: Remoting
4. RocketMQ source analysis: Producer
5. RocketMQ source analysis: Store
6. RocketMQ source analysis: Consumer
7. RocketMQ source analysis: memory mapping
8. RocketMQ source analysis: synchronous and asynchronous data synchronization mechanisms
9. RocketMQ source analysis: transaction messages
10. RocketMQ source analysis: message filtering and retry

Read the source with these questions in mind:

- How are NameServer, Broker, Producer and Consumer connected to each other?
- When are the Producer and Consumer connections established?
- What information does NameServer store, and how does it store it?
- Is a Topic persisted in NameServer or in the Broker?

RocketMQ Overall Architecture and Connectivity

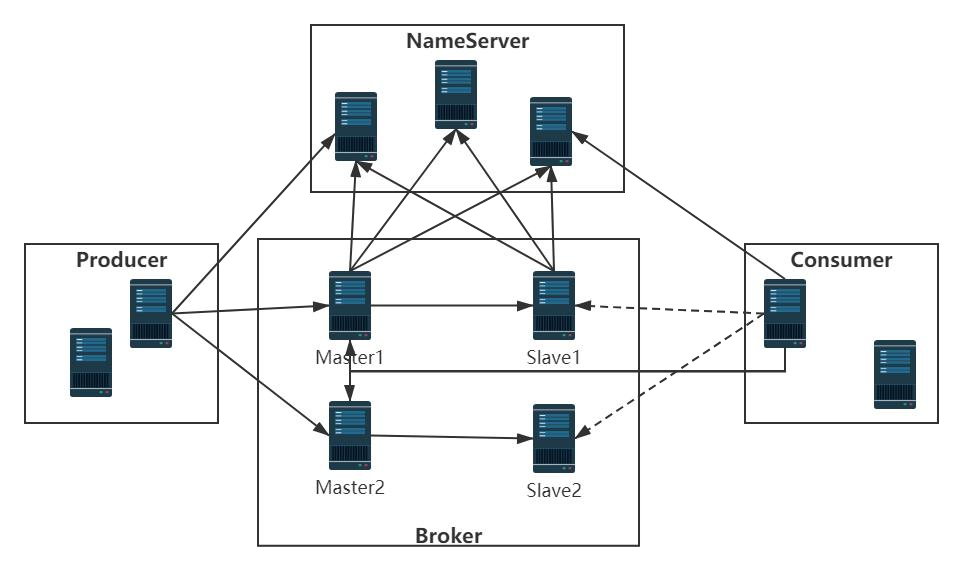

The figure above shows a typical two-master, two-slave deployment together with producers and consumers.

NameServer nodes in the cluster do not communicate with each other.

- Each Broker registers with, and sends heartbeats to, every NameServer in the cluster.
- A producer maintains a long-lived connection to one NameServer in the cluster at a time.
- A producer maintains long-lived connections to all master Brokers.
- A consumer maintains a long-lived connection to one NameServer in the cluster at a time.
- A consumer maintains long-lived connections to all Brokers.

RocketMQ Core Components and Overall Process

The top-level directories of rocketmq-all-4.8.0-source-release are:

.github, acl, broker, client, common, dev, distribution, docs, example, filter, logappender, logging, namesrv, openmessaging, remoting, srvutil, store, style, target, test, tools

RocketMQ's source tree looks large, but broken down by component it has only a few core entry points:

- NamesrvController#start
- BrokerStartup#main
- DefaultMQProducerImpl#start
- DefaultMQProducerImpl#send(Message)
- DefaultMQPushConsumerImpl#start

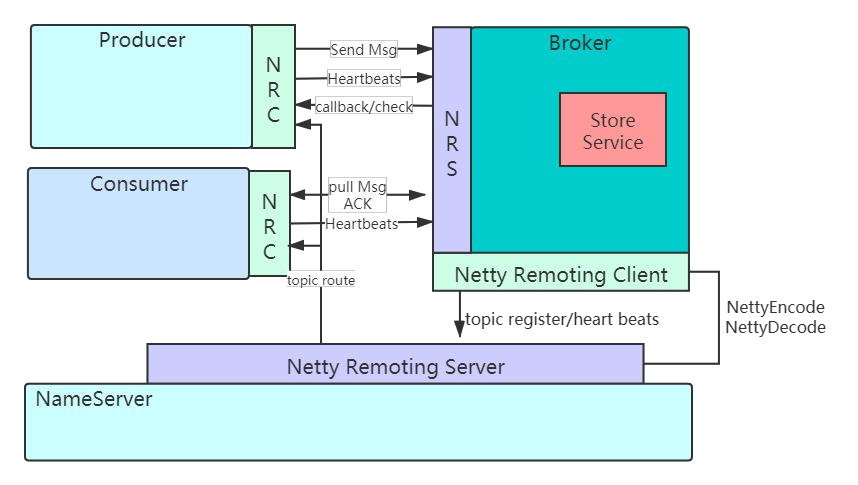

NameServer

The naming service. It handles Broker registration updates and route discovery.

NameServer provides Topic routing information to message producers and consumers. Besides storing the basic routing information, NameServer also manages Broker nodes, including route registration and route removal.

Producer and Consumer

The Java implementation of the MQ client, covering both producers and consumers.

Broker

Receives requests from producers and consumers and calls the store layer to process messages. The Broker is the basic unit of HA and supports both synchronous and asynchronous dual-write modes.

Store

The storage layer implementation, including the index service and the high-availability (HA) service.

NettyRemotingServer and NettyRemotingClient

The underlying communication layer built on Netty. All interactions between services go through this module, which is split into a server side and a client side.

NameServer Source Analysis

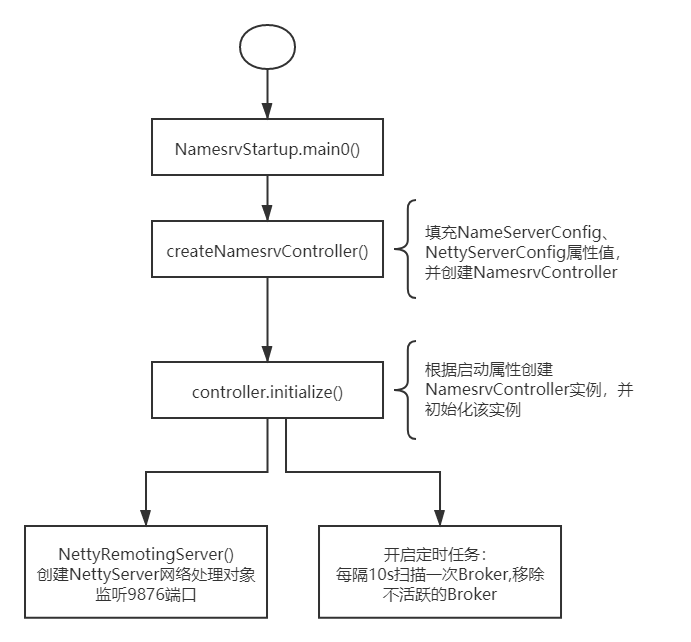

NameServer Startup Process Summary

Start process

As the startup code shows, NameServer is started on its own, independently of the other components.

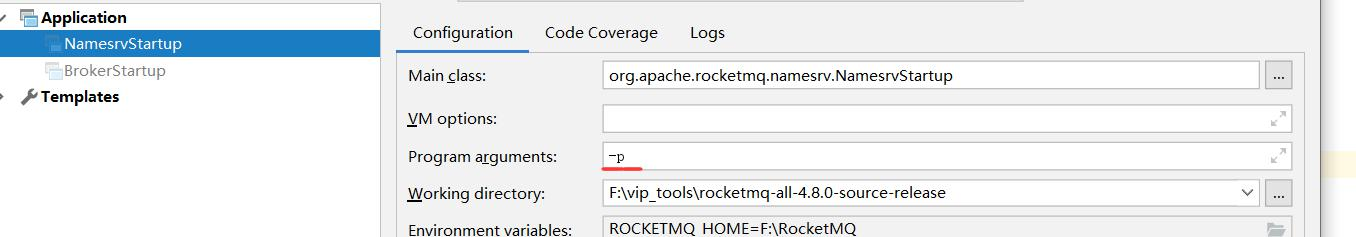

Entry class: **NamesrvStartup**, which creates the **NamesrvController**. Core call chain: **main() -> main0() -> createNamesrvController() -> start() -> initialize()**, where initialize() is invoked on the NamesrvController.

Step One

Parse the configuration file, populate the properties of NamesrvConfig and NettyServerConfig, and create the NamesrvController.

The createNamesrvController method in NamesrvStartup:

public static NamesrvController createNamesrvController(String[] args) throws IOException, JoranException {

System.setProperty(RemotingCommand.REMOTING_VERSION_KEY, Integer.toString(MQVersion.CURRENT_VERSION));

//PackageConflictDetect.detectFastjson();

//Create NamesrvConfig

final NamesrvConfig namesrvConfig = new NamesrvConfig();

//Create NettyServerConfig

final NettyServerConfig nettyServerConfig = new NettyServerConfig();

//Set Startup Port Number

nettyServerConfig.setListenPort(9876);

//Parse the -c startup option (path to a configuration file)

if (commandLine.hasOption('c')) {

String file = commandLine.getOptionValue('c');

if (file != null) {

InputStream in = new BufferedInputStream(new FileInputStream(file));

properties = new Properties();

properties.load(in);

MixAll.properties2Object(properties, namesrvConfig);

MixAll.properties2Object(properties, nettyServerConfig);

namesrvConfig.setConfigStorePath(file);

System.out.printf("load config properties file OK, %s%n", file);

in.close();

}

}

//Parse the -p startup option: print all configuration values, then exit

if (commandLine.hasOption('p')) {

InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_CONSOLE_NAME);

MixAll.printObjectProperties(console, namesrvConfig);

MixAll.printObjectProperties(console, nettyServerConfig);

System.exit(0);

}

//Copy the remaining command-line options into namesrvConfig

MixAll.properties2Object(ServerUtil.commandLine2Properties(commandLine), namesrvConfig);

log = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_LOGGER_NAME);

MixAll.printObjectProperties(log, namesrvConfig);

MixAll.printObjectProperties(log, nettyServerConfig);

//todo create the NamesrvController

final NamesrvController controller = new NamesrvController(namesrvConfig, nettyServerConfig);

// remember all configs to prevent discard

controller.getConfiguration().registerConfig(properties);

return controller;

} // Abridged: the parsing of args into the static fields commandLine and properties is omitted

As the code shows, starting NameServer with the -p option prints all of its configuration parameters and then exits.

The same parameters are also written to the startup log, because printObjectProperties is called with the NameServer logger.
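The code relies on MixAll.properties2Object to copy values from the -c file and the command line onto the config objects. The sketch below shows the idea behind that helper in a hypothetical, simplified form; it is not RocketMQ's code. It assumes every option maps to a public one-argument setXxx method that takes a String, int, long or boolean. DemoConfig is an illustrative stand-in for NamesrvConfig.

```java
import java.lang.reflect.Method;
import java.util.Properties;

// Hypothetical config class standing in for NamesrvConfig / NettyServerConfig.
class DemoConfig {
    private int listenPort = 9876;
    private String rocketmqHome = "";
    private boolean orderMessageEnable = false;

    public void setListenPort(int listenPort) { this.listenPort = listenPort; }
    public void setRocketmqHome(String rocketmqHome) { this.rocketmqHome = rocketmqHome; }
    public void setOrderMessageEnable(boolean enable) { this.orderMessageEnable = enable; }

    public int getListenPort() { return listenPort; }
    public String getRocketmqHome() { return rocketmqHome; }
    public boolean isOrderMessageEnable() { return orderMessageEnable; }
}

// Hypothetical, simplified take on the idea behind MixAll.properties2Object.
final class PropertiesBinder {
    private PropertiesBinder() {}

    // For every public one-argument setter "setXxx", read key "xxx" and convert the value.
    static void bind(Properties props, Object target) {
        for (Method m : target.getClass().getMethods()) {
            String name = m.getName();
            if (!name.startsWith("set") || name.length() <= 3 || m.getParameterCount() != 1) {
                continue;
            }
            String key = Character.toLowerCase(name.charAt(3)) + name.substring(4);
            String raw = props.getProperty(key);
            if (raw == null) {
                continue; // key absent: keep the default value
            }
            Class<?> type = m.getParameterTypes()[0];
            Object value;
            if (type == String.class) {
                value = raw;
            } else if (type == int.class || type == Integer.class) {
                value = Integer.parseInt(raw.trim());
            } else if (type == long.class || type == Long.class) {
                value = Long.parseLong(raw.trim());
            } else if (type == boolean.class || type == Boolean.class) {
                value = Boolean.parseBoolean(raw.trim());
            } else {
                continue; // other parameter types are not handled in this sketch
            }
            try {
                m.invoke(target, value);
            } catch (ReflectiveOperationException e) {
                throw new IllegalArgumentException("cannot set " + key, e);
            }
        }
    }
}
```

With this approach, any key without a matching setter is silently ignored. Any option that is absent keeps the default already assigned in the config class, which is why the code can set `listenPort` to 9876 before loading the file.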

Step Two

Create and initialize the NamesrvController instance from the startup properties. NamesrvController is NameServer's core controller, and it starts two scheduled tasks:

1. Every 10 s, scan the Brokers and remove inactive ones.
2. Every 10 minutes, print the KV configuration.

The initialize() method of NamesrvController:

public boolean initialize() {

//Load KV Configuration

this.kvConfigManager.load();

//Create NettyServer Network Processing Object

this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.brokerHousekeepingService);

//Create the thread pool that executes remoting requests

this.remotingExecutor = Executors.newFixedThreadPool(nettyServerConfig.getServerWorkerThreads(),

new ThreadFactoryImpl("RemotingExecutorThread_"));

this.registerProcessor();

//Scheduled task: every 10 s (after a 5 s initial delay), scan for inactive Brokers

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

//todo remove Brokers whose heartbeats have expired

NamesrvController.this.routeInfoManager.scanNotActiveBroker();

}

}, 5, 10, TimeUnit.SECONDS);

//Turn on timer tasks: Print KV configuration every 10 minutes

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

NamesrvController.this.kvConfigManager.printAllPeriodically();

}

}, 1, 10, TimeUnit.MINUTES);

...

return true;

}

Step Three

Register a JVM shutdown hook so that the thread pools are closed and resources are released before the process exits.

//Shutdown hook

Runtime.getRuntime().addShutdownHook(new ShutdownHookThread(log, new Callable<Void>() {

@Override

public Void call() throws Exception {

controller.shutdown();

return null;

}

}));
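RocketMQ wraps the shutdown callback in its own ShutdownHookThread class. The sketch below is a hypothetical stand-in built on plain java.lang.Thread, written in the same spirit. It runs the callback at most once and shuts an executor down with a bounded wait. The class name OnceShutdownHook and the timeout values are assumptions for illustration.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical shutdown hook: the callback runs at most once,
// even if run() is invoked more than once.
class OnceShutdownHook extends Thread {
    private final AtomicBoolean done = new AtomicBoolean(false);
    private final Callable<?> callback;

    OnceShutdownHook(Callable<?> callback) {
        super("ShutdownHook");
        this.callback = callback;
    }

    @Override
    public void run() {
        if (!done.compareAndSet(false, true)) {
            return; // already executed once
        }
        try {
            callback.call();
        } catch (Exception e) {
            System.err.println("shutdown callback failed: " + e);
        }
    }

    boolean hasRun() {
        return done.get();
    }

    // Stop accepting new tasks, then wait a bounded time for running tasks to finish.
    static void shutdownQuietly(ExecutorService pool, long timeoutMillis) {
        pool.shutdown();
        try {
            if (!pool.awaitTermination(timeoutMillis, TimeUnit.MILLISECONDS)) {
                pool.shutdownNow();
            }
        } catch (InterruptedException e) {
            pool.shutdownNow();
            Thread.currentThread().interrupt();
        }
    }
}
```

Usage would look like `Runtime.getRuntime().addShutdownHook(new OnceShutdownHook(() -> { OnceShutdownHook.shutdownQuietly(pool, 3000); return null; }));`. The once-only guard matters when the same cleanup can also be triggered explicitly before the JVM exits.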

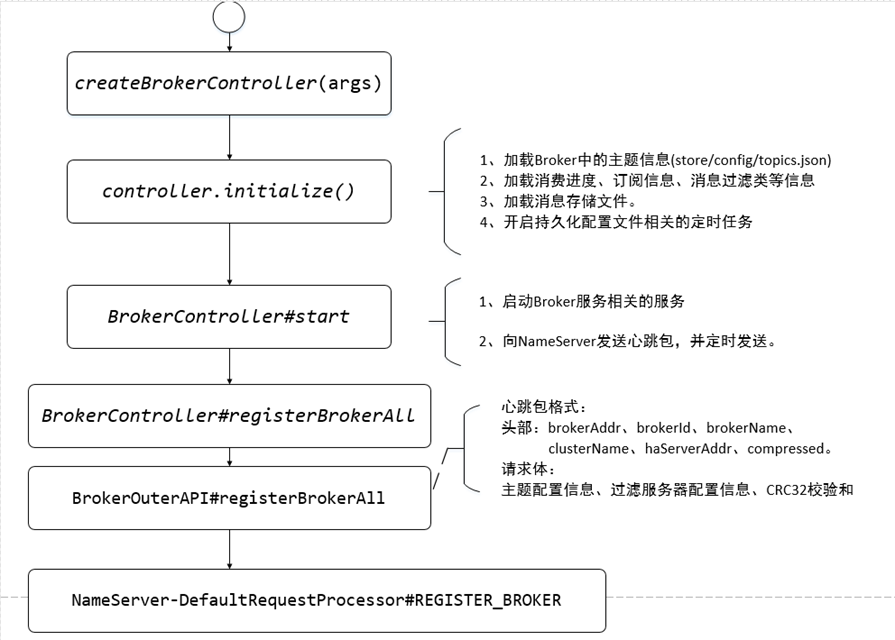

Broker Startup Process Summary

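Entry class: BrokerStartup, which creates the BrokerController

Core flow: the Broker periodically registers with (sends heartbeats to) NameServer. The scheduled task below is started in BrokerController#start:

//todo the Broker sends a heartbeat packet to NameServer every 30 s (by default)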

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

//todo sends a heartbeat packet to NameServer

BrokerController.this.registerBrokerAll(true, false, brokerConfig.isForceRegister());

} catch (Throwable e) {

log.error("registerBrokerAll Exception", e);

}

}

}, 1000 * 10, Math.max(10000, Math.min(brokerConfig.getRegisterNameServerPeriod(), 60000)), TimeUnit.MILLISECONDS);
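The first registration runs 10 s after start. The period expression `Math.max(10000, Math.min(brokerConfig.getRegisterNameServerPeriod(), 60000))` clamps the configured interval into the range 10 s to 60 s. The tiny hypothetical helper below isolates that expression so the clamping is explicit. Per the text, the default interval is 30 s.

```java
// Hypothetical helper that isolates the period expression used when scheduling registerBrokerAll.
final class RegisterPeriod {
    static final long MIN_PERIOD_MS = 10_000;
    static final long MAX_PERIOD_MS = 60_000;

    private RegisterPeriod() {}

    // Clamp the configured period into [10 s, 60 s].
    static long effectivePeriodMillis(long configuredMillis) {
        return Math.max(MIN_PERIOD_MS, Math.min(configuredMillis, MAX_PERIOD_MS));
    }

    public static void main(String[] args) {
        System.out.println(effectivePeriodMillis(30_000));  // 30000
        System.out.println(effectivePeriodMillis(5_000));   // 10000
        System.out.println(effectivePeriodMillis(120_000)); // 60000
    }
}
```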

public synchronized void registerBrokerAll(final boolean checkOrderConfig, boolean oneway, boolean forceRegister) {

TopicConfigSerializeWrapper topicConfigWrapper = this.getTopicConfigManager().buildTopicConfigSerializeWrapper();

...

//todo send heartbeat packets to all NameServers when forceRegister is set or needRegister() returns true

if (forceRegister || needRegister(this.brokerConfig.getBrokerClusterName(),

this.getBrokerAddr(),

this.brokerConfig.getBrokerName(),

this.brokerConfig.getBrokerId(),

this.brokerConfig.getRegisterBrokerTimeoutMills())) {

//todo needs to send a heartbeat packet to the server (NameServer)

doRegisterBrokerAll(checkOrderConfig, oneway, topicConfigWrapper);

}

}

private void doRegisterBrokerAll(boolean checkOrderConfig, boolean oneway,

TopicConfigSerializeWrapper topicConfigWrapper) {

//todo delegate to BrokerOuterAPI, which sends the registration to every NameServer

List<RegisterBrokerResult> registerBrokerResultList = this.brokerOuterAPI.registerBrokerAll(

this.brokerConfig.getBrokerClusterName(),

this.getBrokerAddr(),

this.brokerConfig.getBrokerName(),

this.brokerConfig.getBrokerId(),

this.getHAServerAddr(),

topicConfigWrapper,

this.filterServerManager.buildNewFilterServerList(),

oneway,

this.brokerConfig.getRegisterBrokerTimeoutMills(),

this.brokerConfig.isCompressedRegister());

//todo handle the result NameServer returns for this periodic registration (mainly used to record the master and HA server addresses)

if (registerBrokerResultList.size() > 0) {

RegisterBrokerResult registerBrokerResult = registerBrokerResultList.get(0);

if (registerBrokerResult != null) {

if (this.updateMasterHAServerAddrPeriodically && registerBrokerResult.getHaServerAddr() != null) {

this.messageStore.updateHaMasterAddress(registerBrokerResult.getHaServerAddr());

}

this.slaveSynchronize.setMasterAddr(registerBrokerResult.getMasterAddr());

if (checkOrderConfig) {

this.getTopicConfigManager().updateOrderTopicConfig(registerBrokerResult.getKvTable());

}

}

}

}

public List<RegisterBrokerResult> registerBrokerAll(..., final boolean compressed) {

final List<RegisterBrokerResult> registerBrokerResultList = new CopyOnWriteArrayList<>();

List<String> nameServerAddressList = this.remotingClient.getNameServerAddressList();

if (nameServerAddressList != null && nameServerAddressList.size() > 0) {

//todo heartbeat packet format:

//Header: brokerAddr, brokerId, brokerName,

//clusterName, haServerAddr, compressed, bodyCrc32 (CRC32 of the body). Body:

//topic configuration and filter server list

final RegisterBrokerRequestHeader requestHeader = new RegisterBrokerRequestHeader();

requestHeader.setBrokerAddr(brokerAddr);

requestHeader.setBrokerId(brokerId);

requestHeader.setBrokerName(brokerName);

requestHeader.setClusterName(clusterName);

requestHeader.setHaServerAddr(haServerAddr);

requestHeader.setCompressed(compressed);

RegisterBrokerBody requestBody = new RegisterBrokerBody();

requestBody.setTopicConfigSerializeWrapper(topicConfigWrapper);

requestBody.setFilterServerList(filterServerList);

final byte[] body = requestBody.encode(compressed);

final int bodyCrc32 = UtilAll.crc32(body);

requestHeader.setBodyCrc32(bodyCrc32);

//todo register with all NameServers in parallel and wait on a CountDownLatch

//todo the latch count equals the number of NameServers

final CountDownLatch countDownLatch = new CountDownLatch(nameServerAddressList.size());

for (final String namesrvAddr : nameServerAddressList) {

brokerOuterExecutor.execute(new Runnable() {

@Override

public void run() {

try {

RegisterBrokerResult result = registerBroker(namesrvAddr, oneway, timeoutMills, requestHeader, body);

if (result != null) {

registerBrokerResultList.add(result);

}

log.info("register broker[{}]to name server {} OK", brokerId, namesrvAddr);

} catch (Exception e) {

log.warn("registerBroker Exception, {}", namesrvAddr, e);

} finally {

countDownLatch.countDown(); // count down on success or failure

}

}

});

}

try {

countDownLatch.await(timeoutMills, TimeUnit.MILLISECONDS);

} catch (InterruptedException e) {

}

}

return registerBrokerResultList;

}

private RegisterBrokerResult registerBroker(...) throws RemotingCommandException, MQBrokerException, RemotingConnectException, RemotingSendRequestException, RemotingTimeoutException,

InterruptedException {

//todo wrap the data in a REGISTER_BROKER command; on NameServer, DefaultRequestProcessor dispatches it by RequestCode

RemotingCommand request = RemotingCommand.createRequestCommand(RequestCode.REGISTER_BROKER, requestHeader);

request.setBody(body);

if (oneway) { // oneway processing

...

RemotingCommand response = this.remotingClient.invokeSync(namesrvAddr, request, timeoutMills);

assert response != null;

switch (response.getCode()) {

case ResponseCode.SUCCESS: {

RegisterBrokerResponseHeader responseHeader =

(RegisterBrokerResponseHeader) response.decodeCommandCustomHeader(RegisterBrokerResponseHeader.class);

RegisterBrokerResult result = new RegisterBrokerResult();

result.setMasterAddr(responseHeader.getMasterAddr());

result.setHaServerAddr(responseHeader.getHaServerAddr());

if (response.getBody() != null) {

result.setKvTable(KVTable.decode(response.getBody(), KVTable.class));

}

return result;

}

default:

break;

}

throw new MQBrokerException(response.getCode(), response.getRemark(), requestHeader == null ? null : requestHeader.getBrokerAddr());

}
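The parallel registration in registerBrokerAll follows a common fan-out pattern. It submits one task per NameServer, sizes a CountDownLatch to the number of NameServers, and waits on the latch with a bounded timeout. The hypothetical sketch below reproduces just that pattern, with a pluggable register function in place of the real remoting call. One difference from the original: the sketch returns a snapshot copy of the results, whereas the original returns its shared CopyOnWriteArrayList.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

// Hypothetical sketch of the fan-out in BrokerOuterAPI#registerBrokerAll.
final class FanOut {
    private FanOut() {}

    // Run `register` for every address in parallel, wait at most timeoutMillis,
    // and return a snapshot of the non-null results collected by then.
    static <R> List<R> registerAll(List<String> addrs, Function<String, R> register,
                                   ExecutorService pool, long timeoutMillis) {
        List<R> results = new CopyOnWriteArrayList<>(); // written by several worker threads
        CountDownLatch latch = new CountDownLatch(addrs.size());
        for (String addr : addrs) {
            pool.execute(() -> {
                try {
                    R r = register.apply(addr);
                    if (r != null) {
                        results.add(r);
                    }
                } catch (RuntimeException e) {
                    System.err.println("register to " + addr + " failed: " + e.getMessage());
                } finally {
                    latch.countDown(); // always count down, on success or failure
                }
            });
        }
        try {
            latch.await(timeoutMillis, TimeUnit.MILLISECONDS); // returns early once all tasks finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(results);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(3);
        List<String> ok = registerAll(List.of("ns1:9876", "ns2:9876", "down:9876"), addr -> {
            if (addr.startsWith("down")) {
                throw new IllegalStateException("connection refused");
            }
            return "registered at " + addr;
        }, pool, 3000);
        System.out.println(ok.size()); // 2
        pool.shutdown();
    }
}
```

Counting down in `finally` is the key design choice: a failing or slow NameServer can never keep the caller waiting beyond the timeout.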

On the NameServer side, the request is handled by DefaultRequestProcessor#processRequest:

public RemotingCommand processRequest(ChannelHandlerContext ctx, RemotingCommand request) throws RemotingCommandException {

if (ctx != null) {

...

}

switch (request.getCode()) {

...

//Request to register Broker information

case RequestCode.REGISTER_BROKER:

Version brokerVersion = MQVersion.value2Version(request.getVersion());

if (brokerVersion.ordinal() >= MQVersion.Version.V3_0_11.ordinal()) {

return this.registerBrokerWithFilterServer(ctx, request);

} else {

//Register Broker Information

return this.registerBroker(ctx, request);

}

case RequestCode.UNREGISTER_BROKER:

return this.unregisterBroker(ctx, request);

//todo producers get routing information from NameServer

case RequestCode.GET_ROUTEINFO_BY_TOPIC:

return this.getRouteInfoByTopic(ctx, request);

...

default:

break;

}

return null;

}

public RemotingCommand registerBroker(ChannelHandlerContext ctx,

RemotingCommand request) throws RemotingCommandException {

...

//todo core call: RouteInfoManager.registerBroker(), which updates the route tables while holding the write lock

RegisterBrokerResult result = this.namesrvController.getRouteInfoManager().registerBroker(

requestHeader.getClusterName(),

requestHeader.getBrokerAddr(),

requestHeader.getBrokerName(),

requestHeader.getBrokerId(),

requestHeader.getHaServerAddr(),

topicConfigWrapper,

null,

ctx.channel()

);

responseHeader.setHaServerAddr(result.getHaServerAddr());

responseHeader.setMasterAddr(result.getMasterAddr());

byte[] jsonValue = this.namesrvController.getKvConfigManager().getKVListByNamespace(NamesrvUtil.NAMESPACE_ORDER_TOPIC_CONFIG);

response.setBody(jsonValue);

response.setCode(ResponseCode.SUCCESS);

response.setRemark(null);

return response;

}
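On the Broker side, registerBrokerAll puts a CRC32 of the encoded body into the header field bodyCrc32. The purpose of such a checksum is to let the receiver detect a corrupted body. The sketch below is hedged and uses the JDK's java.util.zip.CRC32. The 0x7FFFFFFF mask is an assumption about how RocketMQ's UtilAll.crc32 behaves. Whether and where NameServer verifies the checksum in a given version should also be checked in the source.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

// Hypothetical checksum helper for a registration body.
// The 0x7FFFFFFF mask (keeps the int non-negative) is an assumption about UtilAll.crc32.
final class BodyChecksum {
    private BodyChecksum() {}

    // Sender side: compute the value that goes into the header's bodyCrc32 field.
    static int crc32(byte[] body) {
        CRC32 crc = new CRC32();
        crc.update(body, 0, body.length);
        return (int) (crc.getValue() & 0x7FFFFFFF);
    }

    // Receiver side: recompute over the received body and compare with the header value.
    static boolean matches(byte[] body, int headerCrc32) {
        return crc32(body) == headerCrc32;
    }

    public static void main(String[] args) {
        byte[] body = "{\"topicConfigTable\":{}}".getBytes(StandardCharsets.UTF_8);
        int sent = crc32(body);
        body[0] ^= 1; // simulate a single corrupted bit in transit
        System.out.println(matches(body, sent)); // false
    }
}
```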

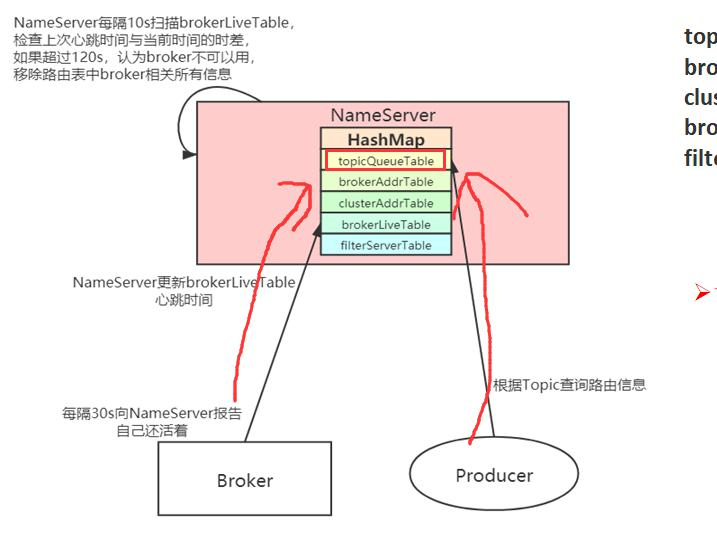

Topic Routing Registration and Removal Mechanism

Route registration and discovery (a read-write lock keeps message sending highly concurrent)

Routing information is read whenever a message is sent and is updated periodically by the Brokers, so the route table is:

1. Read frequently by producers when they send messages.
2. Written by each Broker's scheduled task (every 30 s).

To keep message sending highly concurrent while remaining thread-safe, NameServer maintains a pair of locks: a read lock and a write lock. Separating reads from writes gives much higher concurrency than an ordinary exclusive lock, because readers do not block one another.
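Here is a minimal sketch of the locking pattern just described, assuming a single ReentrantReadWriteLock that guards plain HashMaps, as RouteInfoManager does. RouteTable and its methods are hypothetical names. To keep the example short, it uses lock() instead of the lockInterruptibly() seen in the source.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical route table: a plain HashMap guarded by one read-write lock.
final class RouteTable {
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    private final Map<String, List<String>> topicToBrokers = new HashMap<>();

    // Writer path (Broker heartbeat): exclusive, so heartbeats are applied one at a time.
    void update(String topic, List<String> brokers) {
        lock.writeLock().lock();
        try {
            topicToBrokers.put(topic, new ArrayList<>(brokers));
        } finally {
            lock.writeLock().unlock();
        }
    }

    // Reader path (producer route lookup): many threads may hold the read lock at once.
    List<String> lookup(String topic) {
        lock.readLock().lock();
        try {
            List<String> brokers = topicToBrokers.get(topic);
            return brokers == null ? List.of() : new ArrayList<>(brokers); // copy out under the lock
        } finally {
            lock.readLock().unlock();
        }
    }
}
```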

Write lock

Every 30 seconds, each Broker reports to NameServer that it is still alive, and the report carries a lot of information. A write lock is used here because the data ends up in NameServer's in-memory tables, which are plain HashMaps.

//Core method for registering a Broker; NameServer's main job is service registration and discovery

public RegisterBrokerResult registerBroker(...) {

RegisterBrokerResult result = new RegisterBrokerResult();

try {

try {

//Register routing information using write locks

this.lock.writeLock().lockInterruptibly();

//Maintain clusterAddrTable

Set<String> brokerNames = this.clusterAddrTable.get(clusterName);

if (null == brokerNames) {

brokerNames = new HashSet<String>();

this.clusterAddrTable.put(clusterName, brokerNames);

}

brokerNames.add(brokerName);

boolean registerFirst = false;

//Maintain brokerAddrTable

BrokerData brokerData = this.brokerAddrTable.get(brokerName);

//First registration creates brokerData

if (null == brokerData) {

registerFirst = true;

brokerData = new BrokerData(clusterName, brokerName, new HashMap<Long, String>());

this.brokerAddrTable.put(brokerName, brokerData);

}

//Update the Broker's address map (this also runs for Brokers that registered before)

Map<Long, String> brokerAddrsMap = brokerData.getBrokerAddrs();

//Switch slave to master: first remove <1, IP:PORT> in namesrv, then add <0, IP:PORT>

//The same IP:PORT must only have one record in brokerAddrTable

Iterator<Entry<Long, String>> it = brokerAddrsMap.entrySet().iterator();

while (it.hasNext()) {

...

}

String oldAddr = brokerData.getBrokerAddrs().put(brokerId, brokerAddr);

registerFirst = registerFirst || (null == oldAddr);

//Maintain topicQueueTable

if (null != topicConfigWrapper

&& MixAll.MASTER_ID == brokerId) {

...

}

...

} finally {

this.lock.writeLock().unlock();

}

} catch (Exception e) {

log.error("registerBroker Exception", e);

}

return result;

}
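The comments on the elided loop state the rule that matters: a given IP:PORT appears only once per Broker name. As a result, a slave promoted to master (brokerId 1 becoming 0) replaces its old entry instead of being listed twice. The hypothetical sketch below models only clusterAddrTable and brokerAddrTable and leaves locking out. The real method holds the write lock throughout.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical, simplified model of two RouteInfoManager tables (locking omitted).
final class BrokerRegistry {
    // clusterName -> brokerNames
    final Map<String, Set<String>> clusterAddrTable = new HashMap<>();
    // brokerName -> (brokerId -> "IP:PORT"); brokerId 0 is the master
    final Map<String, Map<Long, String>> brokerAddrTable = new HashMap<>();

    // Returns true when this is a first registration of the Broker name or of this id.
    boolean register(String clusterName, String brokerName, long brokerId, String brokerAddr) {
        clusterAddrTable.computeIfAbsent(clusterName, k -> new HashSet<>()).add(brokerName);

        boolean registerFirst = !brokerAddrTable.containsKey(brokerName);
        Map<Long, String> addrs = brokerAddrTable.computeIfAbsent(brokerName, k -> new HashMap<>());

        // Slave-to-master switch: drop an entry with the same address under a different id,
        // so one IP:PORT is recorded only once.
        addrs.entrySet().removeIf(e -> brokerAddr.equals(e.getValue()) && e.getKey() != brokerId);

        String oldAddr = addrs.put(brokerId, brokerAddr);
        return registerFirst || oldAddr == null;
    }
}
```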

Read Lock

When a producer sends a message, it needs routing information from NameServer, and serving that request takes the read lock.

// MQClientAPIImpl.java

public TopicRouteData getTopicRouteInfoFromNameServer(final String topic, final long timeoutMillis,

boolean allowTopicNotExist)

throws MQClientException, InterruptedException, RemotingTimeoutException, RemotingSendRequestException, RemotingConnectException {

GetRouteInfoRequestHeader requestHeader = new GetRouteInfoRequestHeader();

requestHeader.setTopic(topic);

//todo producers get routing information from NameServer

RemotingCommand request = RemotingCommand.createRequestCommand(RequestCode.GET_ROUTEINFO_BY_TOPIC, requestHeader);

RemotingCommand response = this.remotingClient.invokeSync(null, request, timeoutMillis); // a null address means "send to a NameServer"

... // response handling omitted

}

// DefaultRequestProcessor.processRequest(): This is the code Namesrv uses to process requests

public RemotingCommand processRequest(ChannelHandlerContext ctx,

RemotingCommand request) throws RemotingCommandException {

...

switch (request.getCode()) {

...

//todo producers get routing information from NameServer

case RequestCode.GET_ROUTEINFO_BY_TOPIC:

return this.getRouteInfoByTopic(ctx, request);

}

...

}

// DefaultRequestProcessor.getRouteInfoByTopic()

public RemotingCommand getRouteInfoByTopic(ChannelHandlerContext ctx,

RemotingCommand request) throws RemotingCommandException {

final RemotingCommand response = RemotingCommand.createResponseCommand(null);

final GetRouteInfoRequestHeader requestHeader =

(GetRouteInfoRequestHeader) request.decodeCommandCustomHeader(GetRouteInfoRequestHeader.class);

//todo look up the topic's route data with RouteInfoManager.pickupTopicRouteData()

TopicRouteData topicRouteData = this.namesrvController.getRouteInfoManager().pickupTopicRouteData(requestHeader.getTopic());

... // build the response from topicRouteData

}

// RouteInfoManager.pickupTopicRouteData()

//Return routing table from NameServer (read lock used)

public TopicRouteData pickupTopicRouteData(final String topic) {

TopicRouteData topicRouteData = new TopicRouteData();

// ...

try {

try {

//Read Lock

this.lock.readLock().lockInterruptibly();

List<QueueData> queueDataList = this.topicQueueTable.get(topic);

... // remainder omitted; the read lock is released in a finally block

Design highlights

Each Broker sends a heartbeat packet to NameServer every 30 seconds, and every heartbeat updates that Broker's state. At the same time, producers read the Broker state each time they send a message, and they read it far more often. The Broker state is therefore written periodically but read frequently. To maximize concurrency, especially on the send path, NameServer uses a read-write lock, which suits read-heavy, write-light workloads.

For each heartbeat it receives, NameServer updates the Broker's state in brokerLiveTable and in the route tables (topicQueueTable, brokerAddrTable, brokerLiveTable, filterServerTable). These tables are guarded by a read-write lock, which lets multiple message senders (Producers) read concurrently and keeps message sending highly concurrent. NameServer, however, applies only one Broker heartbeat at a time, and concurrent heartbeat requests are executed serially. This is a classic use case for read-write locks; see any reference on concurrent programming for more detail.
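The claim that producers can read concurrently while heartbeats are applied one at a time follows directly from ReentrantReadWriteLock's semantics. The small demo below uses a hypothetical class and only the standard JDK. It checks the claim with tryLock(): while one thread holds the read lock, a second reader gets in, but a writer does not.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// While one thread holds the read lock, another thread can also acquire the read lock
// but cannot acquire the write lock.
final class ReadWriteDemo {
    private ReadWriteDemo() {}

    // Returns {secondReaderAcquired, writerAcquired} as observed by a second thread.
    static boolean[] probeWhileReading() {
        ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        boolean[] observed = new boolean[2];
        lock.readLock().lock(); // "producer 1" is reading the route table
        try {
            Thread other = new Thread(() -> {
                if (lock.readLock().tryLock()) {  // "producer 2" reads concurrently
                    observed[0] = true;
                    lock.readLock().unlock();
                }
                if (lock.writeLock().tryLock()) { // a "Broker heartbeat" wants to write
                    observed[1] = true;
                    lock.writeLock().unlock();
                }
            });
            other.start();
            other.join(); // join() also makes the writes to observed[] visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.readLock().unlock();
        }
        return observed;
    }

    public static void main(String[] args) {
        boolean[] r = probeWhileReading();
        System.out.println("second reader: " + r[0] + ", writer: " + r[1]); // second reader: true, writer: false
    }
}
```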

Route removal mechanism

Each Broker sends a heartbeat packet to NameServer every 30 seconds. The heartbeat contains the BrokerId, the Broker address, the Broker name, the name of the cluster the Broker belongs to, and the list of filter servers associated with the Broker. If a Broker goes down, however, NameServer stops receiving its heartbeats. How does NameServer remove such dead Brokers? It scans the brokerLiveTable status table. If a Broker's lastUpdateTimestamp is more than 120 seconds older than the current time, the Broker is considered dead. NameServer then removes the Broker, closes the connection to it, and updates topicQueueTable, brokerAddrTable, brokerLiveTable and filterServerTable.

RocketMQ has two triggers for deleting routing information:

- NameServer periodically scans brokerLiveTable and compares each Broker's last heartbeat time with the current system time. If the gap exceeds 120 s, the Broker is removed.
- A Broker sends an unregisterBroker request when it shuts down normally.

Both triggers delete routes the same way: the Broker's information is removed from the related route tables.

public boolean initialize() {

...

//Scheduled task: every 10 s, scan for inactive Brokers

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

//todo remove Brokers whose heartbeats have expired

NamesrvController.this.routeInfoManager.scanNotActiveBroker();

}

}, 5, 10, TimeUnit.SECONDS);

}

//Broker is considered invalid if it exceeds 120s

public void scanNotActiveBroker() {

//Get brokerLiveTable

Iterator<Entry<String, BrokerLiveInfo>> it = this.brokerLiveTable.entrySet().iterator();

//Traversing brokerLiveTable

while (it.hasNext()) {

Entry<String, BrokerLiveInfo> next = it.next();

long last = next.getValue().getLastUpdateTimestamp();

//If the last heartbeat is more than 120 s old

if ((last + BROKER_CHANNEL_EXPIRED_TIME) < System.currentTimeMillis()) {

//Close Connection

RemotingUtil.closeChannel(next.getValue().getChannel());

//Remove broker

it.remove();

log.warn("The broker channel expired, {} {}ms", next.getKey(), BROKER_CHANNEL_EXPIRED_TIME);

//Maintain routing tables (update routing tables)

this.onChannelDestroy(next.getKey(), next.getValue().getChannel());

}

}

}
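The expiry check can be sketched with the clock passed in as a parameter, which makes it easy to test. In this hypothetical version, LivenessScanner and its simplified table (address to last-heartbeat time) stand in for brokerLiveTable and BrokerLiveInfo. Closing the channel and cleaning the other route tables are left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of scanNotActiveBroker with the current time passed in.
final class LivenessScanner {
    static final long BROKER_CHANNEL_EXPIRED_TIME = 1000 * 60 * 2; // 120 s, per the text

    // brokerAddr -> time (ms) of the last heartbeat; stands in for brokerLiveTable
    final Map<String, Long> lastUpdate = new HashMap<>();

    void onHeartbeat(String brokerAddr, long nowMillis) {
        lastUpdate.put(brokerAddr, nowMillis);
    }

    // Remove and return every Broker whose last heartbeat is older than the threshold.
    List<String> scanNotActive(long nowMillis) {
        List<String> expired = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastUpdate.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (entry.getValue() + BROKER_CHANNEL_EXPIRED_TIME < nowMillis) {
                expired.add(entry.getKey());
                it.remove(); // the real code also closes the channel and calls onChannelDestroy
            }
        }
        return expired;
    }
}
```

The scan runs every 10 s, so a Broker that stops sending heartbeats is removed roughly 120 to 130 s after its last heartbeat.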

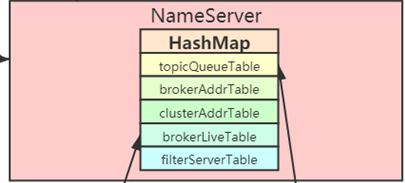

Storage of NameServer

NameServer stores the following information:

// RouteInfoManager.java

// Read-write lock guarding all of the tables below

private final ReadWriteLock lock = new ReentrantReadWriteLock();

// Topic -> message queue routing info; producers load-balance over it when sending messages

private final HashMap<String/* topic */, List<QueueData>> topicQueueTable;

// Broker base info: brokerName, cluster name, and master/slave Broker addresses

private final HashMap<String/* brokerName */, BrokerData> brokerAddrTable;

// Broker cluster info: the names of all Brokers in each cluster

private final HashMap<String/* clusterName */, Set<String/* brokerName */>> clusterAddrTable;

// Broker liveness info, replaced each time NameServer receives a heartbeat packet

private final HashMap<String/* brokerAddr */, BrokerLiveInfo> brokerLiveTable;

// The FilterServer list on each Broker, used for class-mode message filtering

private final HashMap<String/* brokerAddr */, List<String>/* Filter Server */> filterServerTable;

NameServer's implementation is **memory based: NameServer does not persist routing information**. Persistence is left to the Broker.
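All routing state lives in these HashMaps, so answering a producer's route query is essentially an in-memory join of topicQueueTable with brokerAddrTable. The sketch below is a hypothetical, heavily simplified version of pickupTopicRouteData. It has no locking, no filter servers, and stripped-down table types; the real QueueData and BrokerData carry more fields.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified route lookup over in-memory tables.
final class RouteLookup {
    // topic -> names of the Brokers hosting its queues (simplified topicQueueTable)
    final Map<String, List<String>> topicQueueTable = new HashMap<>();
    // brokerName -> (brokerId -> "IP:PORT") (simplified brokerAddrTable)
    final Map<String, Map<Long, String>> brokerAddrTable = new HashMap<>();

    // Join the two tables: brokerName -> addresses for every Broker serving the topic.
    // Returns null when the topic is unknown, which the caller treats as "no route".
    Map<String, Map<Long, String>> pickup(String topic) {
        List<String> brokerNames = topicQueueTable.get(topic);
        if (brokerNames == null) {
            return null;
        }
        Map<String, Map<Long, String>> route = new HashMap<>();
        for (String brokerName : brokerNames) {
            Map<Long, String> addrs = brokerAddrTable.get(brokerName);
            if (addrs != null) {
                route.put(brokerName, new HashMap<>(addrs)); // copy so callers cannot mutate the table
            }
        }
        return route;
    }
}
```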

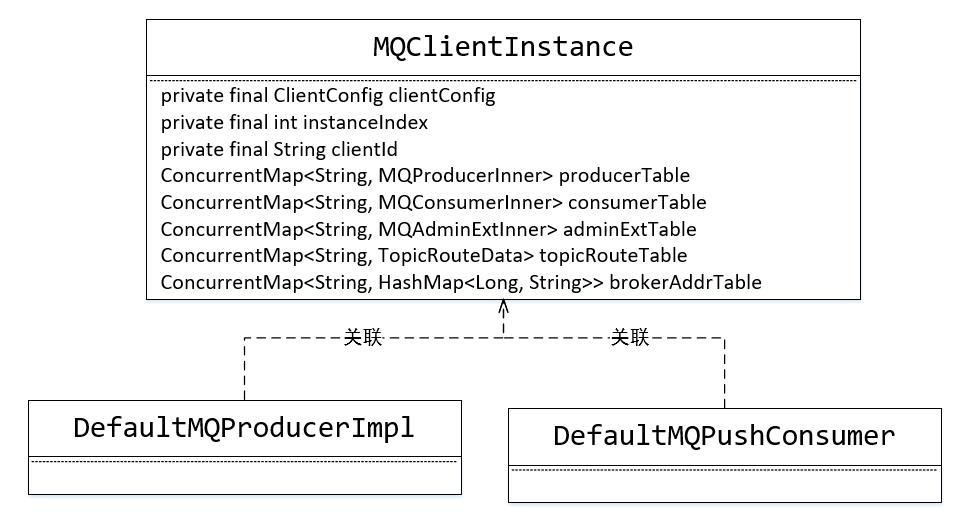

Client Startup Core Process

DefaultMQProducer is the default implementation of MQProducer. It extends the ClientConfig class (the client configuration class) and implements the MQProducer interface. It lets you configure settings such as the send timeout (sendMsgTimeout), the producer group (producerGroup), the maximum message size, and whether message compression is enabled.

In RocketMQ, both the message producer and the message consumer are clients.

Each client is backed by an MQClientInstance, and each ClientConfig corresponds to one instance.

Different producers and consumers therefore share one MQClientInstance if they use the same client configuration (ClientConfig). MQClientInstance is not meant for external use: users never instantiate it themselves. Nor is it created directly with new. Instead, it is obtained through MQClientManager, a singleton that uses eager ("hungry-man") initialization to guarantee thread safety. MQClientManager's role is to provide MQClientInstance objects, which RocketMQ treats as reusable: as long as the client's identifying parameters are the same, the same MQClientInstance is reused. Here is the source code:

// Instantiate message producer Producer

DefaultMQProducer producer = new DefaultMQProducer("AsyncProducer");

// Set the address of NameServer

producer.setNamesrvAddr("127.0.0.1:9876");//localhost

// Start Producer Instance

producer.start();

public void start() throws MQClientException {

this.setProducerGroup(withNamespace(this.producerGroup));

//todo the core of the Producer startup process is defaultMQProducerImpl.start()

this.defaultMQProducerImpl.start();

if (null != traceDispatcher) {

try {

traceDispatcher.start(this.getNamesrvAddr(), this.getAccessChannel());

} catch (MQClientException e) {

log.warn("trace dispatcher start failed ", e);

}

}

}

//todo core of the Producer startup process (DefaultMQProducerImpl#start)

public void start(final boolean startFactory) throws MQClientException {

switch (this.serviceState) {

//todo the first call to start() always takes this branch

case CREATE_JUST:

this.serviceState = ServiceState.START_FAILED;

//Check if the producer group meets the requirements

this.checkConfig();

//Change instanceName to the process ID (when it is still the default)

if (!this.defaultMQProducer.getProducerGroup().equals(MixAll.CLIENT_INNER_PRODUCER_GROUP)) {

this.defaultMQProducer.changeInstanceNameToPID();

}

//todo gets MQ client instance

//There is only one instance of MQClientManager in the entire JVM, maintaining an MQClientInstance cache table

//ConcurrentMap<String/* clientId */, MQClientInstance> factoryTable = new ConcurrentHashMap<String,MQClientInstance>();

//Only one MQClientInstance will be created for the same clientId.

//MQClientInstance encapsulates the RocketMQ Network Processing API and is the network channel through which message producers and consumers interact with NameServer and Broker

this.mQClientFactory =

MQClientManager.getInstance().

getOrCreateMQClientInstance(this.defaultMQProducer, rpcHook); // Core Code

//Register current producer into MQClientInstance management to facilitate subsequent network requests

boolean registerOK = mQClientFactory.registerProducer(this.defaultMQProducer.getProducerGroup(), this);

if (!registerOK) {

this.serviceState = ServiceState.CREATE_JUST;

throw new MQClientException("The producer group[" + this.defaultMQProducer.getProducerGroup()

+ "] has been created before, specify another name please." + FAQUrl.suggestTodo(FAQUrl.GROUP_NAME_DUPLICATE_URL),

null);

}

this.topicPublishInfoTable.put(this.defaultMQProducer.getCreateTopicKey(), new TopicPublishInfo());

//Start Producer

if (startFactory) {

//todo ultimately calls MQClientInstance

mQClientFactory.start();

}

...

}

public MQClientInstance getOrCreateMQClientInstance(final ClientConfig clientConfig) {

return getOrCreateMQClientInstance(clientConfig, null);

}

public MQClientInstance getOrCreateMQClientInstance(final ClientConfig clientConfig, RPCHook rpcHook) {

// Build Client ID

String clientId = clientConfig.buildMQClientId();

//Look up the client instance by client ID

MQClientInstance instance = this.factoryTable.get(clientId);

//If the instance is empty, create a new instance and add it to the instance table

if (null == instance) {

instance =

new MQClientInstance(clientConfig.cloneClientConfig(),

this.factoryIndexGenerator.getAndIncrement(), clientId, rpcHook);

MQClientInstance prev = this.factoryTable.putIfAbsent(clientId, instance);

if (prev != null) {

instance = prev;

log.warn("Returned Previous MQClientInstance for clientId:[{}]", clientId);

} else {

log.info("Created new MQClientInstance for clientId:[{}]", clientId);

}

}

return instance;

}
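The same idea can be sketched with standard JDK types. The sketch combines an eagerly initialized singleton with a per-clientId cache. ClientManager and ClientInstance are hypothetical stand-ins for MQClientManager and MQClientInstance. The sketch uses ConcurrentHashMap.computeIfAbsent in place of the get-then-putIfAbsent sequence shown above.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for MQClientInstance.
final class ClientInstance {
    final String clientId;
    final int index;

    ClientInstance(String clientId, int index) {
        this.clientId = clientId;
        this.index = index;
    }
}

// Hypothetical stand-in for MQClientManager.
final class ClientManager {
    // Eager ("hungry") singleton: created during class initialization, which the JVM makes thread-safe.
    private static final ClientManager INSTANCE = new ClientManager();

    private final ConcurrentMap<String, ClientInstance> factoryTable = new ConcurrentHashMap<>();
    private final AtomicInteger indexGenerator = new AtomicInteger();

    private ClientManager() {}

    static ClientManager getInstance() {
        return INSTANCE;
    }

    // computeIfAbsent creates at most one instance per clientId, even under contention.
    ClientInstance getOrCreate(String clientId) {
        return factoryTable.computeIfAbsent(clientId,
                id -> new ClientInstance(id, indexGenerator.getAndIncrement()));
    }
}
```

The two approaches trade off differently. The putIfAbsent version may construct a throwaway instance when two threads race, but it still publishes only one. computeIfAbsent avoids the throwaway but holds an internal map lock while the instance is constructed.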

Clients whose ClientConfig parameters are the same reuse one MQClientInstance, so keep the relationship between your clients and MQClientInstance in mind when writing your program. How does RocketMQ decide which clients share an MQClientInstance? It builds a clientId string of the form IP@instanceName@unitName:

- IP is the client's IP address.
- instanceName can be set through an API on both Producer and Consumer. If it is not set, it defaults to the client's PID.
- unitName defaults to null. You can set it with DefaultMQProducer#setUnitName(), which is inherited from ClientConfig.

// IP@instanceName@unitName: reuse if same

public String buildMQClientId() {

StringBuilder sb = new StringBuilder();

sb.append(this.getClientIP());

sb.append("@");

sb.append(this.getInstanceName());

if (!UtilAll.isBlank(this.unitName)) { // todo unitName is null unless explicitly set

sb.append("@");

sb.append(this.unitName);

}

return sb.toString();

}
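The clientId rule can be restated as a small pure function. This is a hypothetical sketch, not ClientConfig#buildMQClientId itself. It treats a null or whitespace-only unitName as unset, mirroring the UtilAll.isBlank check above.

```java
// Hypothetical restatement of the clientId rule: IP@instanceName, plus @unitName when set.
final class ClientIds {
    private ClientIds() {}

    static String build(String clientIp, String instanceName, String unitName) {
        StringBuilder sb = new StringBuilder(clientIp).append('@').append(instanceName);
        if (unitName != null && !unitName.trim().isEmpty()) { // blank unitName is ignored
            sb.append('@').append(unitName);
        }
        return sb.toString();
    }
}
```

For example, two producers in the same process that leave instanceName at its default both produce the same IP@PID string, so they end up sharing one MQClientInstance.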

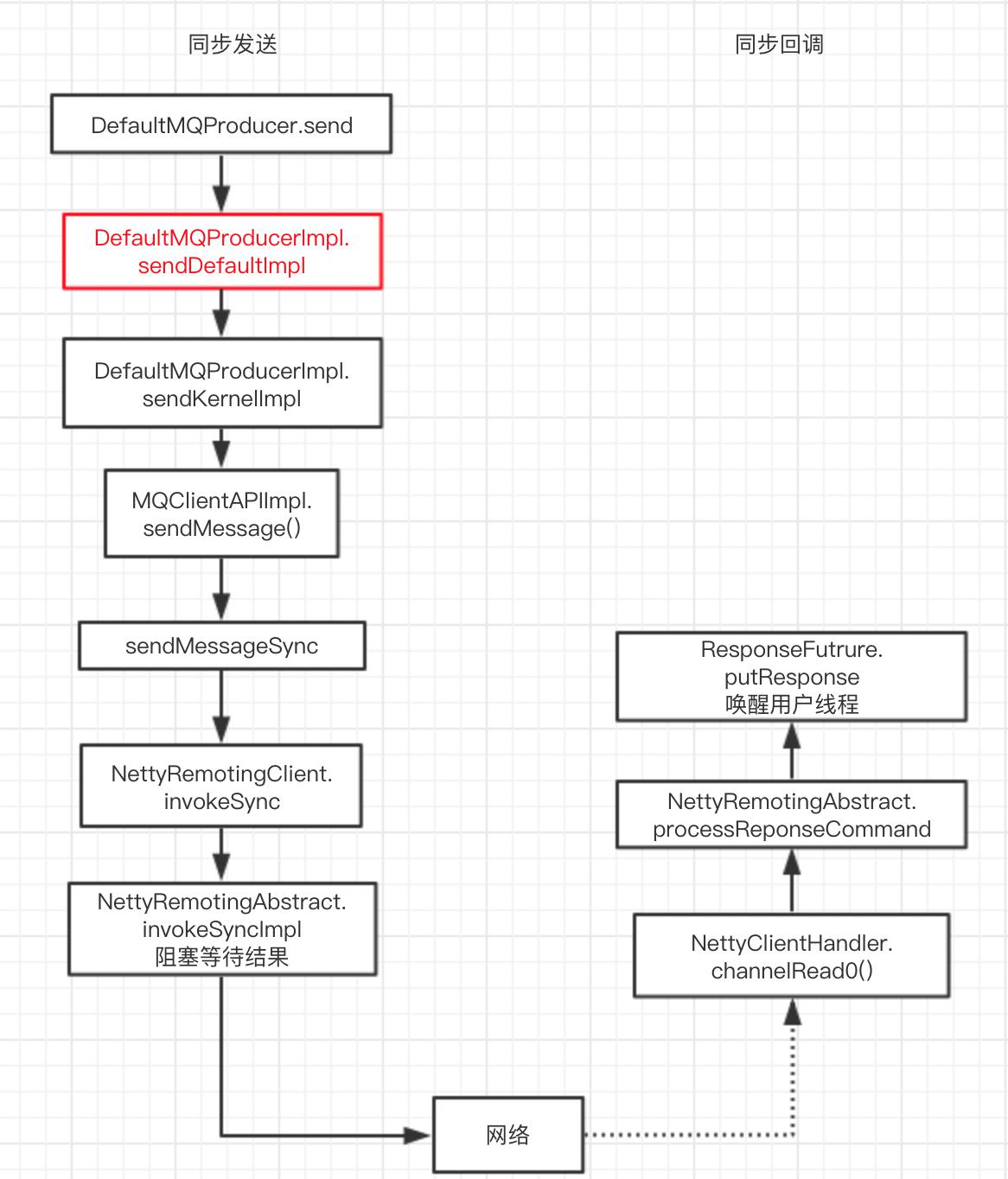

When to establish a client connection

**Note:** connections in MQClientInstance are created on demand, that is, only when the client actually needs to exchange data with a server.

public static void main(String[] args) throws Exception {

...

// Start Producer Instance

producer.start();

// Is the connection made at start() or at send()? At send()

producer.send(msg, new SendCallback() { // an asynchronous send takes an extra callback argument

// onSuccess / onException omitted

});

...

}

// DefaultMQProducer.java

public void send(Message msg,SendCallback sendCallback) {

msg.setTopic(withNamespace(msg.getTopic()));

this.defaultMQProducerImpl.send(msg, sendCallback);

}

// NettyRemotingClient.java

public RemotingCommand invokeSync(String addr, final RemotingCommand request, long timeoutMillis)

throws InterruptedException, RemotingConnectException, RemotingSendRequestException, RemotingTimeoutException {

long beginStartTime = System.currentTimeMillis();

//todo Create a connection here

final Channel channel = this.getAndCreateChannel(addr);

if (channel != null && channel.isActive()) { ... } // request sending omitted

}

private Channel getAndCreateChannel(final String addr) throws RemotingConnectException, InterruptedException {

if (null == addr) {

return getAndCreateNameserverChannel();

}

ChannelWrapper cw = this.channelTables.get(addr);

if (cw != null && cw.isOK()) {

return cw.getChannel();

}

return this.createChannel(addr);

}
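The lazy, cached connection pattern in getAndCreateChannel can be sketched without Netty. FakeChannel and the connector function below are hypothetical stand-ins for a Netty Channel and a real connect call. The sketch shows two things: nothing connects until the first request, and an inactive cached channel gets replaced. The NameServer branch (a null address) is left out.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Function;

// Hypothetical sketch of lazy, cached connection creation.
final class ChannelCache {
    // Stand-in for a Netty Channel.
    static final class FakeChannel {
        final String addr;
        volatile boolean active = true;

        FakeChannel(String addr) {
            this.addr = addr;
        }
    }

    private final ConcurrentMap<String, FakeChannel> channelTables = new ConcurrentHashMap<>();
    private final Function<String, FakeChannel> connector;

    ChannelCache(Function<String, FakeChannel> connector) {
        this.connector = connector;
    }

    // Reuse a healthy cached channel; otherwise connect now, on first use rather than at startup.
    FakeChannel getAndCreate(String addr) {
        FakeChannel cached = channelTables.get(addr);
        if (cached != null && cached.active) {
            return cached; // fast path, no locking
        }
        // Slow path: compute() replaces a missing or inactive channel atomically per address.
        return channelTables.compute(addr, (k, existing) ->
                existing != null && existing.active ? existing : connector.apply(k));
    }
}
```

Using compute() keeps the sketch short, but it blocks other updates to the same map bin while connecting. The original createChannel appears to guard this step with its own lock instead.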