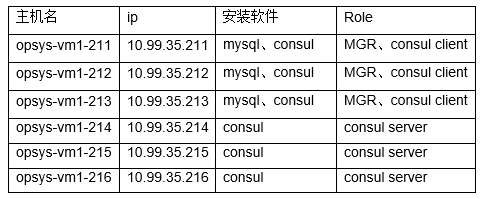

To fully simulate a complete set of consumer + Mgr environment, we may need to configure the following servers:

Three serve as the consumer server, and the other three serve as MySQL servers in MGR single master mode.

1, Build Mysql MGR:

Download the new version of mysql 8.0.13

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-8.0.13-linux-glibc2.12-x86_64.tar.xz

Use the uuid gen command to generate UUIDs:

uuidgen b71ed720-3219-4cb9-8349-0ecc04be979b

Modify hosts

10.99.35.211 opsys-vm1-211 10.99.35.212 opsys-vm2-212 10.99.35.213 opsys-vm3-213

Install mysql-8.0.13

cd /apps tar xvf mysql-8.0.13-linux-glibc2.12-x86_64.tar tar xvf mysql-8.0.13-linux-glibc2.12-x86_64.tar.xz mv mysql-8.0.13-linux-glibc2.12-x86_64 mysql-8.0.13 mkdir -p /data/mysql/3307 chown -R mysql:mysql /data/mysql/3307 cd /apps/mysql-8.0.13 vim my_3307.cnf --> my_3307.cnf Here's an example at the end bin/mysqld --defaults-file=/apps/mysql-8.0.13/my_3307.cnf --initialize --lc_messages_dir=/apps/mysql-8.0.13/share nohup bin/mysqld_safe --defaults-file=/apps/mysql-8.0.13/my_3307.cnf & /apps/mysql-8.0.13/bin/mysql -uroot -p -S /data/mysql/3307/mysql.sock alter user root@localhost identified by 'aspire@123-'; install plugin group_replication soname 'group_replication.so'; RESET MASTER

Configure start MGR

Log in to opsys-vm1-211, configure and start mgr

set sql_log_bin=0; CREATE USER repluser@'%' IDENTIFIED WITH 'mysql_native_password' BY '123qwe'; GRANT REPLICATION SLAVE ON *.* TO 'repluser'@'%'; FLUSH PRIVILEGES; set sql_log_bin=1; CHANGE MASTER TO MASTER_USER='repluser',MASTER_PASSWORD='123qwe' FOR CHANNEL 'group_replication_recovery'; #The group replication bootstrap group parameter is only enabled on the first started MGR instance. Other instances do not operate set global group_replication_bootstrap_group=ON; START GROUP_REPLICATION; set global group_replication_bootstrap_group=OFF; SELECT * FROM performance_schema.replication_group_members;

Log in to opsys-vm1-212, opsys-vm1-213, configure and start mgr

set sql_log_bin=0; CREATE USER repluser@'%' IDENTIFIED WITH 'mysql_native_password' BY '123qwe'; GRANT REPLICATION SLAVE ON *.* TO 'repluser'@'%'; FLUSH PRIVILEGES; set sql_log_bin=1; CHANGE MASTER TO MASTER_USER='repluser',MASTER_PASSWORD='123qwe' FOR CHANNEL 'group_replication_recovery'; START GROUP_REPLICATION; SELECT * FROM performance_schema.replication_group_members;

So far, mgr is completed

mysql [(none)]> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | +---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+ | group_replication_applier | 98297c82-7b91-11e9-8774-000c292741d1 | opsys-vm1-211 | 3307 | ONLINE | PRIMARY | 8.0.13 | | group_replication_applier | 9b1373e9-7b91-11e9-a067-000c2985a8a2 | opsys-vm3-213 | 3307 | ONLINE | SECONDARY | 8.0.13 | | group_replication_applier | 9b9fa56c-7b91-11e9-b8a6-000c29f9538d | opsys-vm2-212 | 3307 | ONLINE | SECONDARY | 8.0.13 | +---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+

2, Consumer service deployment

The consumer server is the three servers:

10.99.35.214 10.99.35.215 10.99.35.216

The installation of consumer is very easy from https://www.consul.io/downloads.html After downloading here, you can use it after decompressing. It's just a binary file, and none of the others. I use version 1.4.4 of consumer. After downloading the file, extract it to / usr/local/bin. It's ready to use. Don't rely on anything.

unzip consul_1.4.4_linux_amd64.zip mv consul /usr/local/bin/

All three servers above are installed. All three machines create directories, which are used to store configuration files and data.

mkdir -p /etc/consul.d/ mkdir -p /data/consul_cluster/ mkdir -p /data/consul_cluster/scripts

The configuration of the main server requires a server.json. Here is an important concept: domain. Here is tk, which is the domain we belong to. Access is provided by the way of domain. For each machine, the advertise [addr] is based on the actual IP address of each machine. Here is the configuration of 10.99.35.214 server. The configuration of 10.99.35.215 and 10.99.35.216 can be done in a similar way.

vim /etc/consul.d/server.json

{

"addresses": {

"http": "0.0.0.0",

"dns": "0.0.0.0"

},

"bind_addr": "0.0.0.0",

"advertise_addr": "10.99.35.214",

"bootstrap_expect": 3,

"datacenter": "syk-mgrdb",

"data_dir": "/data/consul_cluster",

"dns_config": {

"allow_stale": true,

"max_stale": "87600h",

"node_ttl": "0s",

"service_ttl": {

"*": "0s"

}

},

"domain": "tk",

"enable_syslog": false,

"leave_on_terminate": false,

"log_level": "info",

"node_name": "opsys-vm4-214",

"node_meta": {

"location": "B1 in test"

},

"performance": {

"raft_multiplier": 1

},

"ports": {

"http": 8500,

"dns": 53

},

"reconnect_timeout": "72h",

"retry_join": [

"10.99.35.215",

"10.99.35.216"

],

"retry_interval": "10s",

"server": true,

"skip_leave_on_interrupt": true,

"ui": true

}

nohup consul agent -config-dir=/etc/consul.d > /data/consul_cluster/consul.log &For the client, you need a client.json, where you need to configure all the consumer servers. Therefore, you also need to create directories on three mysql servers, which are used to store configuration files and data.

mkdir -p /etc/consul.d/ mkdir -p /data/consul_cluster/ mkdir -p /data/consul_cluster/scripts

For each machine, the advertise [addr] is based on the actual IP address of each machine. Here is the configuration of 10.99.35.211 server. The configuration of 10.99.35.212 and 10.99.35.213 can be done in a similar way.

vim /etc/consul.d/client.json

{

"addresses": {

"http": "0.0.0.0",

"dns": "0.0.0.0"

},

"bind_addr": "0.0.0.0",

"advertise_addr": "10.99.35.211",

"datacenter": "syk-mgrdb",

"data_dir": "/data/consul_cluster",

"enable_script_checks": true,

"enable_syslog": false,

"leave_on_terminate": true,

"log_level": "info",

"node_name": "opsys-vm1-211",

"node_meta": {

"location": "B1 in test"

},

"ports": {

"dns": 53,

"http": 8500

},

"rejoin_after_leave": true,

"retry_join": [

"10.99.35.214",

"10.99.35.215",

"10.99.35.216"

],

"retry_interval": "10s",

"skip_leave_on_interrupt": false

}

nohup consul agent -config-dir=/etc/consul.d > /data/consul_cluster/consul.log &

[root@opsys-vm4-214 consul.d]# consul members -http-addr='10.99.35.214:8500'

Node Address Status Type Build Protocol DC Segment

opsys-vm4-214 10.99.35.214:8301 alive server 1.4.4 2 syk-mgrdb <all>

opsys-vm5-215 10.99.35.215:8301 alive server 1.4.4 2 syk-mgrdb <all>

opsys-vm6-216 10.99.35.216:8301 alive server 1.4.4 2 syk-mgrdb <all>

opsys-vm1-211 10.99.35.211:8301 alive client 1.4.4 2 syk-mgrdb <default>

opsys-vm2-212 10.99.35.212:8301 alive client 1.4.4 2 syk-mgrdb <default>

opsys-vm3-213 10.99.35.213:8301 alive client 1.4.4 2 syk-mgrdb <default>Write detection primay script and detection slave script on consumer client 10.99.35.211, 10.99.35.212 and 10.99.35.213

[root@opsys-vm2-212 consul_cluster]# cat /data/consul_cluster/scripts/check_mgr_primary.sh

#!/bin/bash

port=$1

user="root"

passwod="aspire@123-"

#comm="/usr/local/mysql_5.7.23/bin/mysql -u$user -h 127.0.0.1 -P $port "

comm="/apps/mysql-8.0.13/bin/mysql -u$user -P $port -p$passwod -S /data/mysql/3307/mysql.sock"

value=`$comm -Nse "select 1"`

primary_member=`$comm -Nse "select variable_value from performance_schema.global_status WHERE VARIABLE_NAME= 'group_replication_primary_member'"`

server_uuid=`$comm -Nse "select variable_value from performance_schema.global_variables where VARIABLE_NAME='server_uuid';"`

if [ -z $value ]

then

echo "mysql $port is down....."

exit 2

fi

# Judge node status

node_state=`$comm -Nse "select MEMBER_STATE from performance_schema.replication_group_members where MEMBER_ID='$server_uuid'"`

if [ $node_state != "ONLINE" ]

then

echo "MySQL $port state is not online...."

exit 2

fi

# Determine whether it is the master node

if [[ $server_uuid == $primary_member ]]

then

echo "MySQL $port Instance is master ........"

exit 0

else

echo "MySQL $port Instance is slave ........"

exit 2

fi

#end

[root@opsys-vm2-212 consul_cluster]# cat /data/consul_cluster/scripts/check_mgr_secondary.sh

#!/bin/bash

port=$1

user="root"

passwod="aspire@123-"

#comm="/usr/local/mysql_5.7.23/bin/mysql -u$user -h 127.0.0.1 -P $port "

comm="/apps/mysql-8.0.13/bin/mysql -u$user -P $port -p$passwod -S /data/mysql/3307/mysql.sock"

value=`$comm -Nse "select 1"`

primary_member=`$comm -Nse "select variable_value from performance_schema.global_status WHERE VARIABLE_NAME= 'group_replication_primary_member'"`

server_uuid=`$comm -Nse "select variable_value from performance_schema.global_variables where VARIABLE_NAME='server_uuid';"`

# Judge whether mysql survives

if [ -z $value ]

then

echo "mysql $port is down....."

exit 2

fi

# Judge node status

node_state=`$comm -Nse "select MEMBER_STATE from performance_schema.replication_group_members where MEMBER_ID='$server_uuid'"`

if [ $node_state != "ONLINE" ]

then

echo "MySQL $port state is not online...."

exit 2

fi

# Determine whether it is the master node

if [[ $server_uuid != $primary_member ]]

then

echo "MySQL $port Instance is slave ........"

exit 0

else

node_num=`$comm -Nse "select count(*) from performance_schema.replication_group_members"`

# Judge if there is no slave node, the master node also registers the slave role service.

if [ $node_num -eq 1 ]

then

echo "MySQL $port Instance is slave ........"

exit 0

else

echo "MySQL $port Instance is master ........"

exit 2

fi

fi

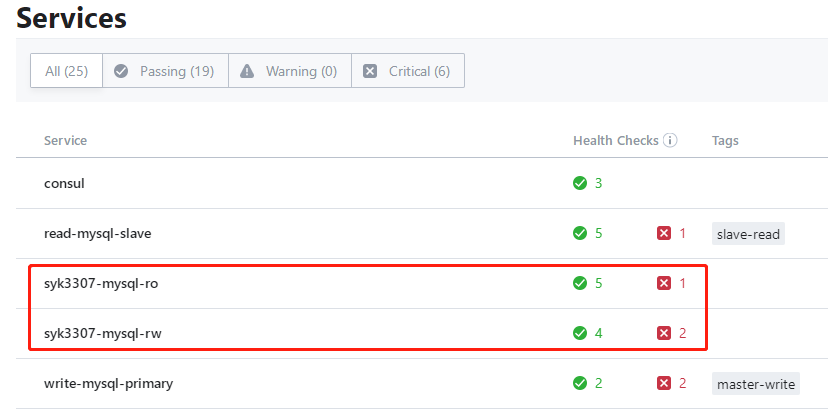

#endVisit the web page of the consumer server http://10.99.35.214:8500/ui/

3, MGR+Consul high availability implementation

Check the MGR cluster status to see which is the primary node

mysql [(none)]> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | +---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+ | group_replication_applier | 98297c82-7b91-11e9-8774-000c292741d1 | opsys-vm1-211 | 3307 | ONLINE | PRIMARY | 8.0.13 | | group_replication_applier | 9b1373e9-7b91-11e9-a067-000c2985a8a2 | opsys-vm3-213 | 3307 | ONLINE | SECONDARY | 8.0.13 | | group_replication_applier | 9b9fa56c-7b91-11e9-b8a6-000c29f9538d | opsys-vm2-212 | 3307 | ONLINE | SECONDARY | 8.0.13 | +---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+ 3 rows in set (0.00 sec)

Connect the json script found by the service on the consumer client 10.99.35.211, 10.99.35.212 and 10.99.35.213, and reload. All three machines need the corresponding json script to check whether mysql is the master or slave. The configuration file can be used by modifying the corresponding IP address

Detect master

[root@opsys-vm1-211 consul.d]# cat test_mgr_write.db.json

{

"services": [

{

"id": "syk3307_mgr_rw",

"name": "syk3307-mysql-rw",

"address": "",

"port": 3307,

"enable_tag_override": false,

"checks": [

{

"id": "syk3307_mgr_rw-check-01",

"name": "MySQL Write Check",

"args": ["/data/consul_cluster/scripts/check_mgr_primary.sh","3307"],

"interval": "15s",

"timeout": "1s",

"service_id": "syk3307_mgr_rw"

}

]

}

]

}Detect slave

[root@opsys-vm1-211 consul.d]# cat test_mgr_read.db.json

{

"services": [

{

"id": "syk3307_mgr_ro",

"name": "syk3307-mysql-ro",

"address": "",

"port": 3307,

"enable_tag_override": false,

"checks": [

{

"id": "syk3307_mgr_ro-check-02",

"name": "MySQL Write Check",

"args": ["/data/consul_cluster/scripts/check_mgr_secondary.sh","3307"],

"interval": "15s",

"timeout": "1s",

"service_id": "syk3307_mgr_ro"

}

]

}

]

}reload consul

[root@opsys-vm1-211 shell]# consul reload Configuration reload triggered

You can see from the user interface of the consumer server that the three mysql mgr clusters have been registered to the consumer service

Note: since there are master and slave detection scripts on each mysql server, and mysql server can only be master or slave, there are failed detection. Master detection has only one success, and slave detection has only one failure

Connection test

[root@opsys-35-209 ~]# cat /etc/resolv.conf # Generated by NetworkManager nameserver 10.99.35.214 nameserver 10.99.35.215 nameserver 10.99.35.216 [root@opsys-35-209 ~]# dig @10.99.35.214 -p 53 syk3307-mysql-rw.service.tk ; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.68.rc1.el6_10.3 <<>> @10.99.35.214 -p 53 syk3307-mysql-rw.service.tk ; (1 server found) ;; global options: +cmd ;; Got answer: ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 47308 ;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 2 ;; WARNING: recursion requested but not available ;; QUESTION SECTION: ;syk3307-mysql-rw.service.tk. IN A ;; ANSWER SECTION: syk3307-mysql-rw.service.tk. 0 IN A 10.99.35.211 ;; ADDITIONAL SECTION: syk3307-mysql-rw.service.tk. 0 IN TXT "location=B1 in test" syk3307-mysql-rw.service.tk. 0 IN TXT "consul-network-segment=" ;; Query time: 1 msec ;; SERVER: 10.99.35.214#53(10.99.35.214) ;; WHEN: Thu Apr 30 10:53:06 2020 ;; MSG SIZE rcvd: 129 [root@opsys-35-209 ~]# dig @10.99.35.214 -p 53 syk3307-mysql-ro.service.tk ; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.68.rc1.el6_10.3 <<>> @10.99.35.214 -p 53 syk3307-mysql-ro.service.tk ; (1 server found) ;; global options: +cmd ;; Got answer: ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 34863 ;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 4 ;; WARNING: recursion requested but not available ;; QUESTION SECTION: ;syk3307-mysql-ro.service.tk. IN A ;; ANSWER SECTION: syk3307-mysql-ro.service.tk. 0 IN A 10.99.35.212 syk3307-mysql-ro.service.tk. 0 IN A 10.99.35.213 ;; ADDITIONAL SECTION: syk3307-mysql-ro.service.tk. 0 IN TXT "location=B1 in test" syk3307-mysql-ro.service.tk. 0 IN TXT "consul-network-segment=" syk3307-mysql-ro.service.tk. 0 IN TXT "consul-network-segment=" syk3307-mysql-ro.service.tk. 0 IN TXT "location=B1 in test" ;; Query time: 1 msec ;; SERVER: 10.99.35.214#53(10.99.35.214) ;; WHEN: Thu Apr 30 10:53:32 2020 ;; MSG SIZE rcvd: 213

So far: the implementation of mysql mgr cluster + consumer for high availability cluster has been completed

The mysql configuration file is as follows:

[root@opsys-vm1-211 mysql-8.0.13]# cat my_3307.cnf [mysql] port = 3307 socket = /data/mysql/3307/mysql.sock default-character-set=utf8mb4 [mysqld] user=mysql server-id = 352113307 datadir=/data/mysql/3307 log-error = /data/mysql/3307/mysql_error.log default_storage_engine=InnoDB character_set_server=utf8mb4 log_bin_trust_function_creators=OFF federated open_files_limit = 10240 port = 3307 socket = /data/mysql/3307/mysql.sock back_log = 600 max_connections = 5000 max_connect_errors = 1000 lower_case_table_names=1 table_open_cache = 2048 thread_cache_size = 8 thread_stack = 192K sort_buffer_size = 8M join_buffer_size = 8M read_buffer_size = 2M read_rnd_buffer_size = 16M max_allowed_packet = 32M bulk_insert_buffer_size = 128M max_heap_table_size = 64M tmp_table_size = 64M binlog_cache_size = 1M log-slave-updates log-bin = binlog log-bin-index=binlog.index binlog_format = row sync_binlog = 1 max_binlog_size = 128M key_buffer_size = 128M myisam_sort_buffer_size = 64M myisam_max_sort_file_size = 64M myisam_repair_threads = 1 innodb_flush_method=O_DIRECT innodb_file_per_table=1 innodb_strict_mode=1 innodb_buffer_pool_size = 4G innodb_data_home_dir = /data/mysql/3307 innodb_data_file_path = ibdata1:100M:autoextend innodb_max_dirty_pages_pct = 90 innodb_lock_wait_timeout = 2 innodb_log_buffer_size = 8M innodb_log_group_home_dir = /data/mysql/3307 innodb_log_file_size = 64M innodb_log_files_in_group = 3 innodb_flush_log_at_trx_commit = 1 enforce-gtid-consistency=true gtid-mode=on master_info_repository="TABLE" relay_log_info_repository="TABLE" relay_log_recovery=1 skip_slave_start log_slave_updates=1 relay_log=mysql-relay-bin binlog_checksum=NONE transaction_write_set_extraction=XXHASH64 loose-group_replication_group_name="b71ed720-3219-4cb9-8349-0ecc04be979b" loose-group_replication_start_on_boot=off loose-group_replication_local_address= "10.99.35.211:33071" loose-group_replication_group_seeds= "10.99.35.211:33071,10.99.35.212:33071,10.99.35.213:33071" loose-group_replication_bootstrap_group= off [mysqld_safe] open-files-limit = 10240 [client] default-character-set= utf8mb4 #end