Blog Outline:

- 1, PXC introduction

- 2, Deploy PXC cluster

1, PXC introduction

Reference resources: Official Percona

PXC is an open source MySQL high availability solution, which integrates Percona Server and Xtrabackup with Galera library to realize synchronous multi master replication. High availability solutions based on Galera mainly include MariaDB Galera Cluster (MGC) and Percona XtraDB Cluster (PXC). At present, PXC architecture is used more and more mature in the production environment. Compared with the traditional master-slave cluster architecture MHA and dual master, the most prominent feature of PXC is to solve the long criticized replication delay problem, which can basically achieve real-time synchronization. And the relationship between nodes is equal. Galera Cluster is also a multi master architecture. PXC is a synchronous replication implemented in the storage engine layer, not an asynchronous replication, so its data consistency is quite high.

Its working principle is as follows:

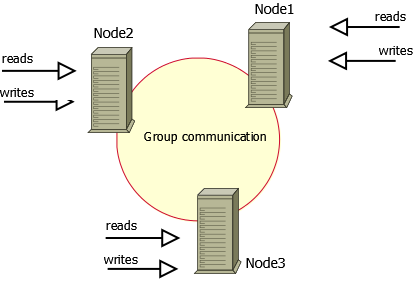

In order to build PXC architecture, at least three MySQL instances are needed to form a cluster. The three instances are not master-slave mode, but each is master-slave mode. Therefore, the relationship between the three instances is peer-to-peer, regardless of master-slave mode. This is also called multi master architecture. When the client reads and writes, which instance is connected is the same, and the data read is the same. After writing to any instance, the cluster will Synchronize the newly written data to other instances. This architecture does not share any data. It is a highly redundant MySQL Cluster Architecture.

1. Advantages and disadvantages of PXC

Advantage:

- It realizes the high availability of MySQL Cluster and the strong consistency of data.

- The real multi node read-write cluster scheme is completed.

- It improves the problem of master-slave replication delay and basically achieves real-time synchronization.

- The newly added nodes can automatically synchronize data without manual backup in advance, which is convenient for maintenance.

- Because it is a multi node write, database failover is easy.

Disadvantages:

- Adding a new node is expensive because when you add a new node, you must copy the complete data set from one of the existing nodes. If the data in the existing database is 100GB, you must copy 100GB.

- Any updated transaction needs to pass the global verification before it can be executed on other nodes. The cluster performance is limited by the worst node, the so-called short board effect (bucket law).

- Because of the need to ensure data consistency, PXC uses the real-time storage engine layer to achieve synchronous replication, so when multiple nodes write concurrently, the problem of lock conflict is more serious.

- There is a problem of write expansion, and write operations will occur on all nodes. PXC is not recommended for scenarios with large write load.

- Only Innodb storage engine is supported.

2. PXC working principle

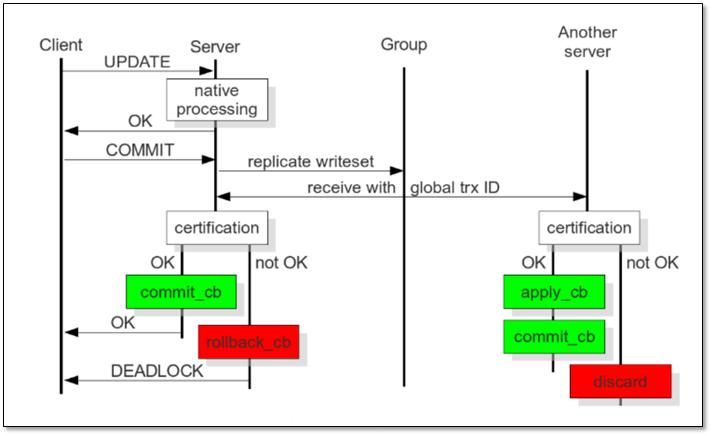

The operation flow of PXC is generally as follows: first, before the client submits the transaction to the write node requesting connection, the node broadcasts the replication writeset to be generated, then obtains the global transaction ID and transmits it to other nodes. After the other nodes merge the data through the certification, they find that there is no conflict data, and then they perform the apply and commit operations. Otherwise, they discard the transaction.

After the current node (the write node requested by the client) passes the verification, it performs the commit ﹣ CB operation and returns OK to the client. If the verification fails, roll back [CB].

There must be at least three nodes in the PXC cluster of the production line. If one of the nodes fails to pass the verification and there is a data conflict, the way to take at this time is to kick the nodes with inconsistent data out of the cluster, and it will automatically execute the shutdown command to automatically shut down.

3. Important concepts in PXC

First of all, the number of nodes in the cluster should be standardized. The number of nodes in the whole cluster should be controlled within the range of at least 3 and at most 8. At least three of them are to prevent brain crack, because only two nodes can cause brain crack. The manifestation of cleft brain is to output any command, and the returned result is unkown command.

When a new node wants to join the PXC cluster, it is necessary to select a doer node from each node in the cluster as the contributor of the total data. PXC has two types of node data transmission, one is called SST full transmission, the other is called IST incremental transmission. There are three modes of SST transmission: XtraBackup, mysqldump and rsync, while only XtraBackup is available for incremental transmission. Generally, when the amount of data is small, SST can be used as the full amount transmission, but only XtraBackup mode is used.

In the cluster, nodes will switch states due to new node joining or failure, synchronization failure, etc. the meanings of these states are listed below:

- open: node started successfully, try to connect to the cluster

- primary: the node is already in the cluster. When a new node joins the cluster, selecting donor for data synchronization will generate a state.

- joiner: the node is in a state waiting to receive the synchronization data file.

- joined: the node has completed data synchronization, trying to keep pace with the progress of other nodes in the cluster.

- synced: the state in which the node normally provides services, indicating that synchronization has been completed and is consistent with the progress of the cluster.

- Doer: the node is in the state of providing full data for the newly added node.

2, Deploy PXC cluster

1. Environmental preparation

| OS | host name | IP |

|---|---|---|

| Cent OS 7.5 | pxc-01 | 192.168.20.2 |

| Cent OS 7.5 | pxc-02 | 192.168.20.3 |

| Cent OS 7.5 | pxc-03 | 192.168.20.4 |

Note: all hosts do not need to install MySQL service in advance. MySQL service is included in PXC source package.

Note: for all operations in 2-8 summary, all three nodes need to be executed. If there is anything that doesn't need to be executed on all three nodes, I'll explain it in particular.

2. Installation dependency

[root@pxc-01 ~]# yum install -y libev lsof perl-Compress-Raw-Bzip2 perl-Compress-Raw-Zlib perl-DBD-MySQL perl-DBI perl-Digest perl-Digest-MD5 perl-IO-Compress perl-Net-Daemon perl-PlRPC socat openssl openssl-devel

3. Uninstall Mariadb

[root@pxc-01 ~]# yum -y erase mariadb && rpm -qa | grep mariadb | xargs rpm -e --nodeps

4. Download the required packages (xtraBackup, percona xtradb cluster and qpress)

[root@pxc-01 bin]# wget https://www.percona.com/downloads/Percona-XtraBackup-2.4/Percona-XtraBackup-2.4.18/binary/redhat/7/x86_64/percona-xtrabackup-24-2.4.18-1.el7.x86_64.rpm [root@pxc-01 bin]# wget http://www.quicklz.com/qpress-11-linux-x64.tar #The above package may report error 406 when using wget. Open the link with the browser to download and upload to the server. [root@pxc-01 bin]# wget https://www.percona.com/downloads/Percona-XtraDB-Cluster-LATEST/Percona-XtraDB-Cluster-5.7.28-31.41/binary/tarball/Percona-XtraDB-Cluster-5.7.28-rel31-31.41.1.Linux.x86_64.ssl101.tar.gz #This package will take a little longer to download. Wait patiently.

5. Configure the qpress command and install xtrabackup

[root@pxc-01 bin]# tar xf qpress-11-linux-x64.tar -C /usr/bin [root@pxc-01 bin]# rpm -ivh percona-xtrabackup-24-2.4.18-1.el7.x86_64.rpm

6. Deploy PXC services

#Create MySQL system user [root@pxc-01 bin]# useradd -M -r -s /bin/false mysql #Unpacking [root@pxc-01 bin]# tar zxf Percona-XtraDB-Cluster-5.7.28-rel31-31.41.1.Linux.x86_64.ssl101.tar.gz #Move to specified directory [root@pxc-01 bin]# mv Percona-XtraDB-Cluster-5.7.28-rel31-31.41.1.Linux.x86_64.ssl101 /usr/local/mysql #Create the data directory and change the directory owner group to MySQL [root@pxc-01 bin]# mkdir -p /usr/local/mysql/data [root@pxc-01 bin]# chown -R mysql.mysql /usr/local/mysql/ #Configure global variables for MySQL commands [root@pxc-01 bin]# echo "export PATH=/usr/local/mysql/bin:$PATH" >> /etc/profile [root@pxc-01 bin]# source /etc/profile

7. Define the profile of MySQL service

1) The complete configuration file of pxc-01 is as follows:

[root@pxc-01 bin]# cat /etc/my.cnf # Example: Percona XtraDB Cluster 5.7 [client] port = 3306 socket = /tmp/mysql.sock [mysql] prompt="\u@\h \R:\m:\s[\d]> " no-auto-rehash [mysqld] user = mysql port = 3306 basedir = /usr/local/mysql datadir = /usr/local/mysql/data socket = /tmp/mysql.sock pid-file = db.pid character-set-server = utf8mb4 skip_name_resolve = 1 open_files_limit = 65535 back_log = 1024 max_connections = 512 max_connect_errors = 1000000 table_open_cache = 1024 table_definition_cache = 1024 table_open_cache_instances = 64 thread_stack = 512K external-locking = FALSE max_allowed_packet = 32M sort_buffer_size = 4M join_buffer_size = 4M thread_cache_size = 768 #query_cache_size = 0 #query_cache_type = 0 interactive_timeout = 600 wait_timeout = 600 tmp_table_size = 32M max_heap_table_size = 32M slow_query_log = 1 slow_query_log_file = /usr/local/mysql/data/slow.log log-error = /usr/local/mysql/data/error.log long_query_time = 0.1 server-id = 1813306 log-bin = /usr/local/mysql/data/mysql-bin sync_binlog = 1 binlog_cache_size = 4M max_binlog_cache_size = 1G max_binlog_size = 1G expire_logs_days = 7 master_info_repository = TABLE relay_log_info_repository = TABLE gtid_mode = on enforce_gtid_consistency = 1 log_slave_updates binlog_format = row #For Galera to work correctly, binlog format should be ROW relay_log_recovery = 1 relay-log-purge = 1 key_buffer_size = 32M read_buffer_size = 8M read_rnd_buffer_size = 4M bulk_insert_buffer_size = 64M lock_wait_timeout = 3600 explicit_defaults_for_timestamp = 1 innodb_thread_concurrency = 0 innodb_sync_spin_loops = 100 innodb_spin_wait_delay = 30 transaction_isolation = REPEATABLE-READ innodb_buffer_pool_size = 1024M innodb_buffer_pool_instances = 8 innodb_buffer_pool_load_at_startup = 1 innodb_buffer_pool_dump_at_shutdown = 1 innodb_data_file_path = ibdata1:1G:autoextend innodb_flush_log_at_trx_commit = 1 innodb_log_buffer_size = 32M innodb_log_file_size = 2G innodb_log_files_in_group = 2 #innodb_max_undo_log_size = 4G innodb_io_capacity = 2000 innodb_io_capacity_max = 4000 innodb_flush_neighbors = 0 innodb_write_io_threads = 4 innodb_read_io_threads = 4 innodb_purge_threads = 4 innodb_page_cleaners = 4 innodb_open_files = 65535 innodb_max_dirty_pages_pct = 50 innodb_flush_method = O_DIRECT innodb_lru_scan_depth = 4000 innodb_checksum_algorithm = crc32 #innodb_file_format = Barracuda #innodb_file_format_max = Barracuda innodb_lock_wait_timeout = 10 innodb_rollback_on_timeout = 1 innodb_print_all_deadlocks = 1 innodb_file_per_table = 1 innodb_online_alter_log_max_size = 4G internal_tmp_disk_storage_engine = InnoDB innodb_stats_on_metadata = 0 # pxc is about database optimization, most of which are optional configurations. pxc is about the following parameters wsrep_provider=/usr/local/mysql/lib/libgalera_smm.so wsrep_provider_options="gcache.size=2G" wsrep_cluster_name=my_pxc_cluster wsrep_cluster_address=gcomm://192.168.20.2,192.168.20.3,192.168.20.4 wsrep_node_name=pxc01 wsrep_node_address=192.168.20.2 wsrep_sst_method=xtrabackup-v2 wsrep_sst_auth=sst:pwd@123 pxc_strict_mode=ENFORCING default_storage_engine=InnoDB # myisam storage engine can only be used in test environment innodb_autoinc_lock_mode=2 # This InnoDB auto incremental lock mode is enforced by Galera [mysqldump] quick max_allowed_packet = 32M

Some parameters related to PXC are explained as follows:

- Wsrep? Provider: Specifies the path of the required plug-in (the plug-in is available by default).

- wsrep_provider_options="gcache.size=1G": the size of Gcache ring buffer is pre allocated at startup, and the default is 10M. There are other options for this configuration: gcache.size represents the size used to cache the incremental information of the write set. Its default size is 128MB, which is set by the wsrep provider options variable parameter. It is recommended to adjust to 2G-4G range, enough space for more incremental information to be cached.

gcache.mem_size represents the size of the memory cache in gcache, which can improve the performance of the whole cluster.

gcache.page_size can be understood as writing the write set to the disk file directly if the memory is not enough (gcache is not enough). - Wsrep? Cluster? Name: this parameter defines the logical cluster name of the node. All nodes participating in the cluster must specify the same name.

- Wsrep cluster address: use this parameter to determine the IP address of other nodes in the cluster. Currently, the only back-end mode that supports production is gcomm.

- Wsrep? Node? Name: this parameter is used to set the logical name of the node itself.

- Wsrep > node > address: used to specify the IP address of the current node.

- Wsrep? SST? Method: used to specify the method of data synchronization. xtrabackup-v2 is recommended

- wsrep_sst_auth: define the user and password to use when SST replicates data (the database node must have the specified user and password)

- pxc_strict_mode: strict mode. It is officially recommended that the value of this parameter is encoding. With this configuration, any table created in the database must have a primary key, otherwise data cannot be written.

For more detailed explanation of PXC parameters, please move to: MySQL wsrep options , there will be surprises. At the same time, if you want to modify the above configuration items, it is recommended to see the detailed option explanation.

2) The complete configuration file of pxc-02 node is as follows:

Compared with pxc-01 node, there are only three points that can't be the same, which are marked as follows

[root@pxc-02 src]# cat /etc/my.cnf # Example: Percona XtraDB Cluster 5.7 [client] port = 3306 socket = /tmp/mysql.sock [mysql] prompt="\u@\h \R:\m:\s[\d]> " no-auto-rehash [mysqld] user = mysql port = 3306 basedir = /usr/local/mysql datadir = /usr/local/mysql/data socket = /tmp/mysql.sock pid-file = db.pid character-set-server = utf8mb4 skip_name_resolve = 1 open_files_limit = 65535 back_log = 1024 max_connections = 512 max_connect_errors = 1000000 table_open_cache = 1024 table_definition_cache = 1024 table_open_cache_instances = 64 thread_stack = 512K external-locking = FALSE max_allowed_packet = 32M sort_buffer_size = 4M join_buffer_size = 4M thread_cache_size = 768 #query_cache_size = 0 #query_cache_type = 0 interactive_timeout = 600 wait_timeout = 600 tmp_table_size = 32M max_heap_table_size = 32M slow_query_log = 1 slow_query_log_file = /usr/local/mysql/data/slow.log log-error = /usr/local/mysql/data/error.log long_query_time = 0.1 server-id = 1813307 # Server ID cannot be the same log-bin = /usr/local/mysql/data/mysql-bin sync_binlog = 1 binlog_cache_size = 4M max_binlog_cache_size = 1G max_binlog_size = 1G expire_logs_days = 7 master_info_repository = TABLE relay_log_info_repository = TABLE gtid_mode = on enforce_gtid_consistency = 1 log_slave_updates binlog_format = row relay_log_recovery = 1 relay-log-purge = 1 key_buffer_size = 32M read_buffer_size = 8M read_rnd_buffer_size = 4M bulk_insert_buffer_size = 64M lock_wait_timeout = 3600 explicit_defaults_for_timestamp = 1 innodb_thread_concurrency = 0 innodb_sync_spin_loops = 100 innodb_spin_wait_delay = 30 transaction_isolation = REPEATABLE-READ innodb_buffer_pool_size = 1024M innodb_buffer_pool_instances = 8 innodb_buffer_pool_load_at_startup = 1 innodb_buffer_pool_dump_at_shutdown = 1 innodb_data_file_path = ibdata1:1G:autoextend innodb_flush_log_at_trx_commit = 1 innodb_log_buffer_size = 32M innodb_log_file_size = 2G innodb_log_files_in_group = 2 #innodb_max_undo_log_size = 4G innodb_io_capacity = 2000 innodb_io_capacity_max = 4000 innodb_flush_neighbors = 0 innodb_write_io_threads = 4 innodb_read_io_threads = 4 innodb_purge_threads = 4 innodb_page_cleaners = 4 innodb_open_files = 65535 innodb_max_dirty_pages_pct = 50 innodb_flush_method = O_DIRECT innodb_lru_scan_depth = 4000 innodb_checksum_algorithm = crc32 #innodb_file_format = Barracuda #innodb_file_format_max = Barracuda innodb_lock_wait_timeout = 10 innodb_rollback_on_timeout = 1 innodb_print_all_deadlocks = 1 innodb_file_per_table = 1 innodb_online_alter_log_max_size = 4G internal_tmp_disk_storage_engine = InnoDB innodb_stats_on_metadata = 0 # PXC wsrep_provider=/usr/local/mysql/lib/libgalera_smm.so wsrep_provider_options="gcache.size=2G" wsrep_cluster_name=my_pxc_cluster wsrep_cluster_address=gcomm://192.168.20.2,192.168.20.3,192.168.20.4 wsrep_node_name=pxc02 # The logical name of a node cannot be the same wsrep_node_address=192.168.20.3 #The IP address here is local, of course, it's not the same wsrep_sst_method=xtrabackup-v2 wsrep_sst_auth=sst:pwd@123 pxc_strict_mode=ENFORCING default_storage_engine=InnoDB innodb_autoinc_lock_mode=2 [mysqldump] quick max_allowed_packet = 32M

3) The complete configuration file of pxc-03 is as follows:

Similarly, the three configurations that cannot be the same are the server ID, the logical name of the node, and the IP address of the node.

[root@pxc-03 src]# cat /etc/my.cnf # Example: Percona XtraDB Cluster 5.7 [client] port = 3306 socket = /tmp/mysql.sock [mysql] prompt="\u@\h \R:\m:\s[\d]> " no-auto-rehash [mysqld] user = mysql port = 3306 basedir = /usr/local/mysql datadir = /usr/local/mysql/data socket = /tmp/mysql.sock pid-file = db.pid character-set-server = utf8mb4 skip_name_resolve = 1 open_files_limit = 65535 back_log = 1024 max_connections = 512 max_connect_errors = 1000000 table_open_cache = 1024 table_definition_cache = 1024 table_open_cache_instances = 64 thread_stack = 512K external-locking = FALSE max_allowed_packet = 32M sort_buffer_size = 4M join_buffer_size = 4M thread_cache_size = 768 #query_cache_size = 0 #query_cache_type = 0 interactive_timeout = 600 wait_timeout = 600 tmp_table_size = 32M max_heap_table_size = 32M slow_query_log = 1 slow_query_log_file = /usr/local/mysql/data/slow.log log-error = /usr/local/mysql/data/error.log long_query_time = 0.1 server-id = 1813308 log-bin = /usr/local/mysql/data/mysql-bin sync_binlog = 1 binlog_cache_size = 4M max_binlog_cache_size = 1G max_binlog_size = 1G expire_logs_days = 7 master_info_repository = TABLE relay_log_info_repository = TABLE gtid_mode = on enforce_gtid_consistency = 1 log_slave_updates binlog_format = row relay_log_recovery = 1 relay-log-purge = 1 key_buffer_size = 32M read_buffer_size = 8M read_rnd_buffer_size = 4M bulk_insert_buffer_size = 64M lock_wait_timeout = 3600 explicit_defaults_for_timestamp = 1 innodb_thread_concurrency = 0 innodb_sync_spin_loops = 100 innodb_spin_wait_delay = 30 transaction_isolation = REPEATABLE-READ innodb_buffer_pool_size = 1024M innodb_buffer_pool_instances = 8 innodb_buffer_pool_load_at_startup = 1 innodb_buffer_pool_dump_at_shutdown = 1 innodb_data_file_path = ibdata1:1G:autoextend innodb_flush_log_at_trx_commit = 1 innodb_log_buffer_size = 32M innodb_log_file_size = 2G innodb_log_files_in_group = 2 #innodb_max_undo_log_size = 4G innodb_io_capacity = 2000 innodb_io_capacity_max = 4000 innodb_flush_neighbors = 0 innodb_write_io_threads = 4 innodb_read_io_threads = 4 innodb_purge_threads = 4 innodb_page_cleaners = 4 innodb_open_files = 65535 innodb_max_dirty_pages_pct = 50 innodb_flush_method = O_DIRECT innodb_lru_scan_depth = 4000 innodb_checksum_algorithm = crc32 #innodb_file_format = Barracuda #innodb_file_format_max = Barracuda innodb_lock_wait_timeout = 10 innodb_rollback_on_timeout = 1 innodb_print_all_deadlocks = 1 innodb_file_per_table = 1 innodb_online_alter_log_max_size = 4G internal_tmp_disk_storage_engine = InnoDB innodb_stats_on_metadata = 0 # PXC wsrep_provider=/usr/local/mysql/lib/libgalera_smm.so wsrep_provider_options="gcache.size=2G" wsrep_cluster_name=my_pxc_cluster wsrep_cluster_address=gcomm://192.168.20.2,192.168.20.3,192.168.20.4 wsrep_node_name=pxc03 wsrep_node_address=192.168.20.4 wsrep_sst_method=xtrabackup-v2 wsrep_sst_auth=sst:pwd@123 pxc_strict_mode=ENFORCING default_storage_engine=InnoDB innodb_autoinc_lock_mode=2 [mysqldump] quick max_allowed_packet = 32M

8. Each node initializes MySQL service and opens firewall related ports

#Initialize MySQL service

[root@pxc-01 ~]# mysqld --defaults-file=/etc/my.cnf --user=mysql --basedir=/usr/local/mysql/ --datadir=/usr/local/mysql/data/ --initialize

#Open firewall related ports

[root@pxc-01 ~]# firewall-cmd --permanent --add-port={3306,4567,4444,4568}/tcp

success

[root@pxc-01 ~]# firewall-cmd --reload

[root@pxc-01 ~]# firewall-cmd --list-all

public (active)

target: default

icmp-block-inversion: no

interfaces: ens33

sources:

services: ssh dhcpv6-client

ports: 3306/tcp 4567/tcp 4444/tcp 4568/tcp #Confirm that the port has been added

protocols:

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:

The relevant port information is as follows:

- mysql service port: 3306

- Cluster communication port: 4567

- SST (state snapshot transfer) port: 4444

- IST (Incremental State Transfer) port: 4568

Note: the next operations, if there is no special declaration, are performed on the pxc-01 node.

9. Boot pxc-01 node to initialize cluster

[root@pxc-01 ~]# mysqld --defaults-file=/etc/my.cnf --wsrep_new_cluster &

[root@pxc-01 ~]# ss -anptl | grep mysql

LISTEN 0 128 *:4567 *:* users:(("mysqld",pid=61739,fd=11))

LISTEN 0 128 :::3306 :::* users:(("mysqld",pid=61739,fd=31))

10. View the initial password on the pxc01 host and log in to MySQL

# View initial password [root@pxc-01 ~]# grep password /usr/local/mysql/data/error.log 2020-02-26T13:11:29.770228Z 1 [Note] A temporary password is generated for root@localhost: j?wh;jn=7uB. #Log in to the database, because there are special characters, you need to enclose the password in single quotation marks [root@pxc-01 ~]# mysql -uroot -p'j?wh;jn=7uB.' #Modify the initial password of the root user to perform other operations. root@localhost 21:47: [(none)]> alter user root@localhost identified by '123.com'; # Create the sst account specified in the configuration file. The password must be the same as that specified in the configuration file. root@localhost 21:47: [(none)]> grant all privileges on *.* to sst@localhost identified by 'pwd@123';

11. Add other nodes to the cluster

Note: the following operations need to be performed on each node to be added to the pxc-01 cluster. Here, I perform them on the pxc-02 and pxc-03 nodes respectively.

[root@pxc-02 src]# mysqld --defaults-file=/etc/my.cnf &

[1] 61731

[root@pxc-02 src]# ss -anltp | grep mysql #At this moment, the newly added nodes are synchronizing data from the nodes in the cluster

LISTEN 0 128 *:4567 *:* users:(("mysqld",pid=61731,fd=11))

[root@pxc-02 src]# ss -anltp | grep mysql

#Wait patiently for a while. When it is found that port 3306 is listening, it means that the data has been synchronized and the node has joined the cluster

LISTEN 0 128 *:4567 *:* users:(("mysqld",pid=61731,fd=11))

LISTEN 0 128 :::3306 :::* users:(("mysqld",pid=61731,fd=36))

#After the node is started successfully, you can directly use the root password set by the pxc-01 node to log in to the database

#Because the database information of pxc-01 node has been synchronized to the local database node.

[root@pxc-02 src]# mysql -uroot -p123.com12. Verify replication capabilities

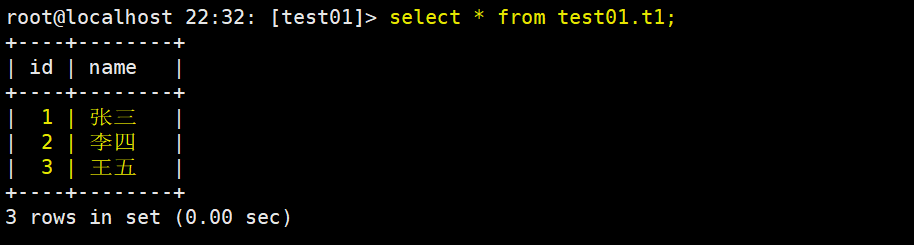

stay pxc-01 Node creation library and table [root@pxc-01 ~]# mysql -uroot -p123.com root@localhost 22:23: [(none)]> create database test01; root@localhost 22:24: [(none)]> use test01 root@localhost 22:31: [test01]> create table t1(id int primary key,name varchar(10)); # Insert a piece of data on three nodes respectively # pxc-01: root@localhost 22:31: [test01]> insert into t1(id,name) values(1,'Zhang San'); # pxc-02: root@localhost 22:33: [(none)]> insert into test01.t1(id,name) values(2,'Li Si'); # pxc-03: root@localhost 22:33: [(none)]> insert into test01.t1(id,name) values(3,'Wang Wu');

Finally, to view the newly created table at any node, its data should be as follows:

13. Add MySQL as system service

After being added as a system service, you don't need to use mysqld command to start and stop the service later, just use systemctl start mysqld command.

#Copy script file [root@pxc-01 ~]# cp /usr/local/mysql/support-files/mysql.server /etc/init.d/mysqld [root@pxc-01 ~]# chkconfig --add mysqld #Add as system service [root@pxc-01 ~]# chkconfig mysqld on #Add power on self start

So far, all three nodes have joined the cluster. If you want to add other nodes to the cluster in the future, you only need to configure the same as pxc-02 or pxc-03 nodes (pxc-01 node is slightly different because it is the first node in the cluster).

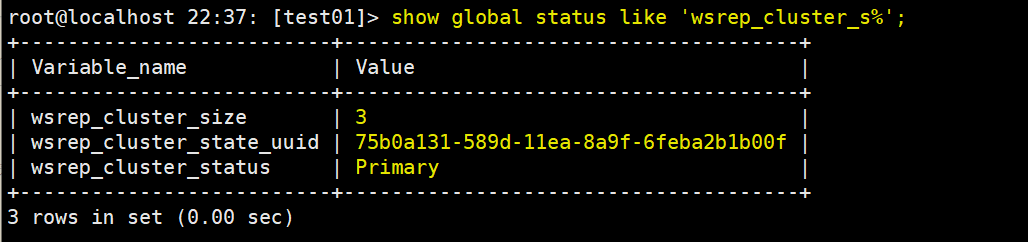

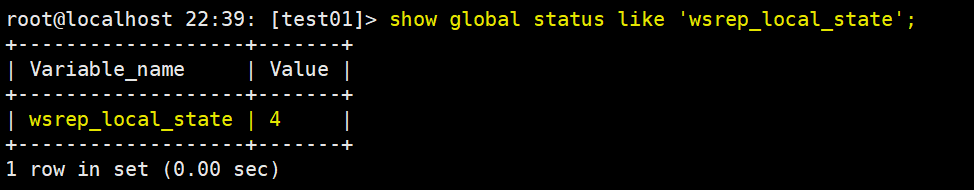

14. View cluster status

The above parameters are explained as follows:

- Wsrep? Cluster? Size: the number of nodes in the current cluster.

- Wsrep? Cluster? State? UUID: the UUID of the cluster. The UUID value in the same cluster must be consistent.

- Wsrep cluster status: the status of a cluster. A value of primary indicates normal. The following is an explanation of the different state values:

- open: the node starts successfully and attempts to connect to the cluster.

- primary: the node is already in the cluster. When a new node joins the cluster, selecting donor for data synchronization will generate a state.

- joiner: the node is in a state waiting to receive the synchronization data file.

- joined: the node has completed data synchronization, trying to keep pace with the progress of other nodes in the cluster.

- synced: the state in which the node normally provides services, indicating that synchronization has been completed and is consistent with the progress of the cluster.

- Doer: the node is in the state of providing full data for the newly added node.

Wsrep? Local? State: displays the current node status. A value of 4 indicates normal. There are four values for this state:

- Joining: indicates that the node is joining the cluster

- Doer: the node is in the state of providing full data for the newly added node.

- Joined: the current node has successfully joined the cluster.

- synced: the current node is in synchronization with each node in the cluster.