This article source code: GitHub. Click here || GitEE. Click here

I. Detailed Configuration

Scenario Description: MySQL tables synchronize with Elastic Search search engines in a full and incremental manner.

1. Download Content

- Elastic search version 6.3.2

- logstash Version 6.3.2

- mysql-connector-java-5.1.13.jar

2. Core configuration

- Path: / usr/local/logstash

- New configuration directory: sync-config

1) Configuration Full Text

/usr/local/logstash/sync-config/cicadaes.conf

input {

stdin {}

jdbc {

jdbc_connection_string => "jdbc:mysql://127.0.0.1:3306/cicada?characterEncoding=utf8"

jdbc_user => "root"

jdbc_password => "root123"

jdbc_driver_library => "/usr/local/logstash/sync-config/mysql-connector-java-5.1.13.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

jdbc_default_timezone => "Asia/Shanghai"

statement_filepath => "/usr/local/logstash/sync-config/user_sql.sql"

schedule => "* * * * *"

type => "User"

lowercase_column_names => false

record_last_run => true

use_column_value => true

tracking_column => "updateTime"

tracking_column_type => "timestamp"

last_run_metadata_path => "/usr/local/logstash/sync-config/user_last_time"

clean_run => false

}

jdbc {

jdbc_connection_string => "jdbc:mysql://127.0.0.1:3306/cicada?characterEncoding=utf8"

jdbc_user => "root"

jdbc_password => "root123"

jdbc_driver_library => "/usr/local/logstash/sync-config/mysql-connector-java-5.1.13.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

jdbc_default_timezone => "Asia/Shanghai"

statement_filepath => "/usr/local/logstash/sync-config/log_sql.sql"

schedule => "* * * * *"

type => "Log"

lowercase_column_names => false

record_last_run => true

use_column_value => true

tracking_column => "updateTime"

tracking_column_type => "timestamp"

last_run_metadata_path => "/usr/local/logstash/sync-config/log_last_time"

clean_run => false

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

}

output {

if [type] == "User" {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "cicada_user_search"

document_type => "user_search_index"

}

}

if [type] == "Log" {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "cicada_log_search"

document_type => "log_search_index"

}

}

}2) SQL file

- user_sql.sql

SELECT

id,

user_name userName,

user_phone userPhone,

create_time createTime,

update_time updateTime

FROM c_user

WHERE update_time > : sql_last_value- log_sql.sql

SELECT

id,

param_value paramValue,

request_ip requestIp,

create_time createTime,

update_time updateTime

FROM c_log

WHERE update_time > : sql_last_value3) Description of configuration parameters

- input parameter

statement_filepath: Read the location of the SQL statement schedule: Here the configuration executes every minute type: type, the identifier written to ES lowercase_column_names: Are fields lowercase? record_last_run: Record the last execution time use_column_value: Use column values tracking_column: Distinguish incremental data based on the updateTime field written to ES tracking_column_type: The distinguished field type

- output parameter

hosts: ES service address Index: Index name, understand database name by analogy document_type: Type name, analogy understanding table name

3. Start the process

/usr/local/logstash/bin/logstash -f /usr/local/logstash/sync-config/cicadaes.conf

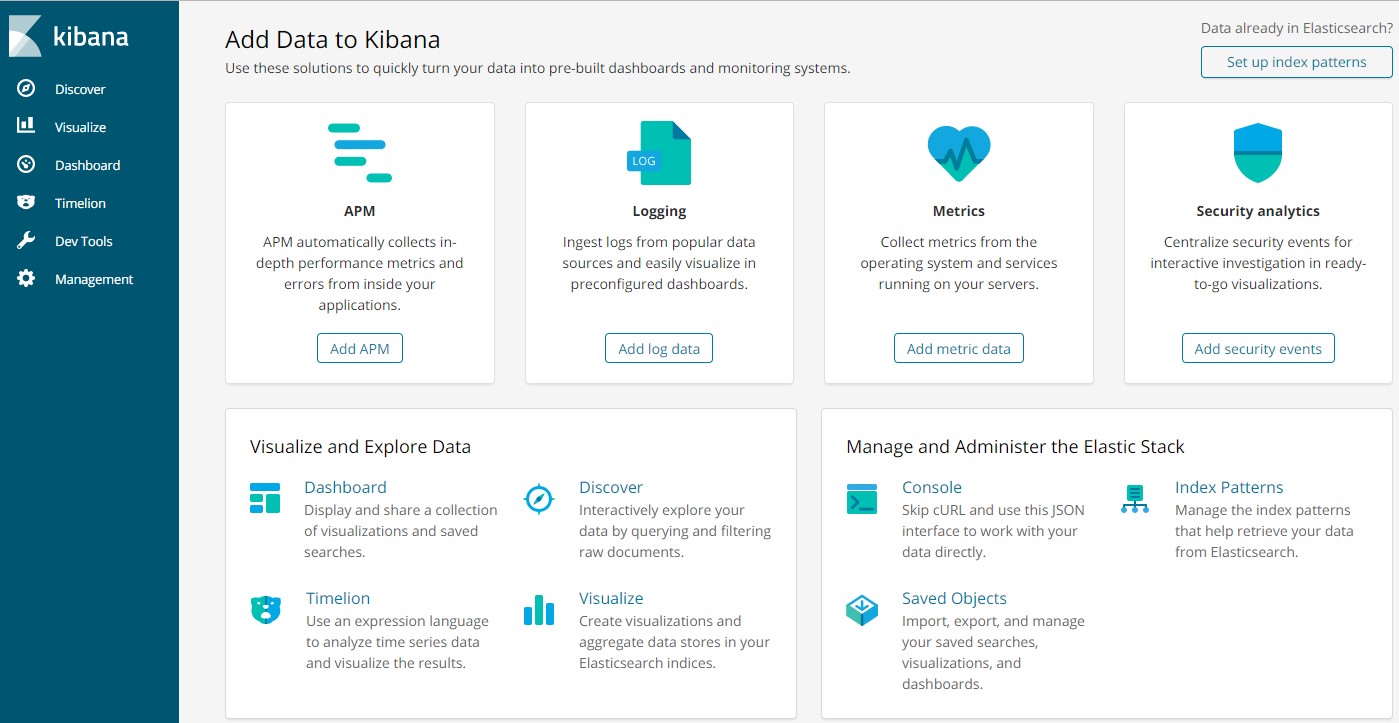

II. ES Client Tool

1. Download Software

kibana-6.3.2-windows-x86_64

2. Modify configuration

kibana-6.3.2-windows-x86_64\config\kibana.yml

Add configuration:

elasticsearch.url: "http://10.1.20.105:9200"

3. Double-click Start

kibana-6.3.2-windows-x86_64\bin\kibana.bat

4. Access Address

http://localhost:5601

3. Source code address

GitHub·address https://github.com/cicadasmile/linux-system-base GitEE·address https://gitee.com/cicadasmile/linux-system-base