A recent project required importing about 1.5 million rows into a MySQL database, spread across six tables. The largest table holds about 1 million rows.

Because this was a one-time import that would rarely be run again, I planned to write a small program with plain JDBC, package it as a jar, and run it on the server.

Once the first version was written, I built the jar with Maven. By default the resulting jar does not contain third-party jars such as the MySQL driver, and it has no main-class entry for java -jar. To fix both, add the following plugin to pom.xml:

    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-assembly-plugin</artifactId>
        <version>2.5.5</version>
        <configuration>
          <archive>
            <manifest><!-- Specify the jar's main class -->
              <mainClass>com.jd.TransferData</mainClass>
            </manifest>
          </archive>
          <descriptorRefs><!-- Bundle the dependencies into the jar -->
            <descriptorRef>jar-with-dependencies</descriptorRef>
          </descriptorRefs>
        </configuration>
        <executions>
          <execution>
            <id>make-assembly</id>
            <phase>package</phase>
            <goals>
              <goal>single</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
    </plugins>

The program is short. The first version looked like this:

    import java.io.BufferedReader;
    import java.io.InputStream;
    import java.io.InputStreamReader;
    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;
    import java.util.Properties;

    public class TransferData {

        public static void main(String[] args) throws Exception {
            Properties p = readProperties();
            Connection connection = getConnection(p);
            insertTable(connection, "table1");
            /*insertTable(connection, "table2");
            insertTable(connection, "table3");
            insertTable(connection, "table4");
            insertTable(connection, "table5");
            insertTable(connection, "table6");*/
            connection.close();
        }

        public static void insertTable(Connection connection, String tableName) throws Exception {
            InputStream inputStream = TransferData.class.getResourceAsStream("/" + tableName + ".txt");
            BufferedReader reader = new BufferedReader(new InputStreamReader(inputStream));
            String line;
            while ((line = reader.readLine()) != null) {
                // Each data line is spliced directly into the SQL text.
                StringBuilder sb = new StringBuilder(100).append("insert into ").append(tableName)
                        .append(" values (").append(line).append(")");
                // NOTE: a new PreparedStatement per row, never closed (the cause of the trouble described below).
                PreparedStatement preparedStatement = connection.prepareStatement(sb.toString());
                preparedStatement.executeUpdate();
                connection.commit();
            }
            reader.close();
        }

        public static Properties readProperties() throws Exception {
            Properties properties = new Properties();
            properties.load(TransferData.class.getResourceAsStream("/jdbc.properties"));
            //properties.list(System.out);
            return properties;
        }

        public static Connection getConnection(Properties p) throws Exception {
            Class.forName(p.getProperty("jdbc.driverClass"));
            Connection connection = DriverManager.getConnection(p.getProperty("jdbc.jdbcUrl"),
                    p.getProperty("jdbc.username"), p.getProperty("jdbc.password"));
            connection.setAutoCommit(false);
            return connection;
        }
    }

It first reads the JDBC configuration from the properties file and creates a connection from it, then reads each table's data file and inserts the rows one by one. The largest file is about 140 MB, so I expected no memory pressure from reading it. A bit over a million rows is not much, so I didn't bother with batch inserts; I figured it would be done within a few hours at worst.
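The program expects a jdbc.properties file on the classpath, which this post does not show. A minimal sketch of its shape, using the four keys that getConnection reads, might look like the following. Every value is a made-up placeholder (host, schema, credentials), and com.mysql.jdbc.Driver is only an assumption based on the Connector/J 5.1-style class names that turn up in the heap dump later.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class JdbcPropertiesSketch {

    // Hypothetical contents of jdbc.properties; every value is a placeholder.
    static final String EXAMPLE =
            "jdbc.driverClass=com.mysql.jdbc.Driver\n"
          + "jdbc.jdbcUrl=jdbc:mysql://localhost:3306/example_db\n"
          + "jdbc.username=example_user\n"
          + "jdbc.password=example_password\n";

    // Parses properties text with the same Properties.load logic used for the file.
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    public static void main(String[] args) {
        Properties p = parse(EXAMPLE);
        System.out.println(p.getProperty("jdbc.jdbcUrl"));
    }
}
```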

However, when I ran it on the server against the pre-release database, the program was inexplicably killed after about 2 minutes. This happened several times. I asked the ops team, who told me that the Docker container's memory had just filled up and the operating system had killed the process.

This surprised me. I had no idea how the memory had filled up, or what made the JVM request memory so greedily. I then followed the ops team's suggestion and capped the JVM heap:

    java -Xms400m -Xmx400m -jar transferdata-1.0-SNAPSHOT-jar-with-dependencies.jar

Sure enough, it was no longer killed. But after more than an hour only a little over 200,000 rows had been imported, and it kept getting slower, down to just over 10 inserts per second. The tables have no indexes.

At that rate the import would take forever. From what I found online, inserting a few million rows should be trivial for MySQL, so the problem had to be in my program. I ran the program locally to investigate.
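A quick back-of-the-envelope estimate shows how hopeless that rate was. The sketch below uses only the rough figures from this post (about 1.5 million rows in total, about 200,000 imported so far, about 10 rows per second); the class and method names are made up for illustration.

```java
class ImportEtaSketch {

    // Hours needed to insert the remaining rows at a constant rate.
    static double remainingHours(long totalRows, long doneRows, double rowsPerSecond) {
        return (totalRows - doneRows) / rowsPerSecond / 3600.0;
    }

    public static void main(String[] args) {
        double hours = remainingHours(1_500_000L, 200_000L, 10.0);
        System.out.printf("about %.0f more hours%n", hours);
    }
}
```

With these inputs the remaining 1.3 million rows would need roughly 36 more hours, and that assumes the rate stops falling, which it had not.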

Attach jconsole to the running process to observe heap usage:

The heap kept growing over time, although locally the process was not killed by the operating system (possibly because of differences between Windows and Linux, or because of Docker). I was curious about what was accumulating in the heap.
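jconsole reads these numbers over JMX, and the same figures are available from inside the process. Where attaching jconsole is awkward, for example inside a container, a minimal sketch like the one below could log heap usage from the import loop. It relies only on the standard java.lang.management API; the class and method names are hypothetical and not part of the original program.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

class HeapLogSketch {

    // Returns a one-line summary of current heap usage in megabytes.
    static String heapSummary() {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        long mb = 1024L * 1024L;
        // getMax() returns -1 when no maximum is defined.
        String max = heap.getMax() < 0 ? "undefined" : (heap.getMax() / mb) + "MB";
        return "heap used=" + (heap.getUsed() / mb) + "MB"
                + " committed=" + (heap.getCommitted() / mb) + "MB"
                + " max=" + max;
    }

    public static void main(String[] args) {
        System.out.println(heapSummary());
    }
}
```

Calling heapSummary() every few thousand rows should reveal the same steady climb that jconsole displayed.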

Take a heap snapshot with jmap:

    jmap -dump:format=b,file=<filename> <pid>

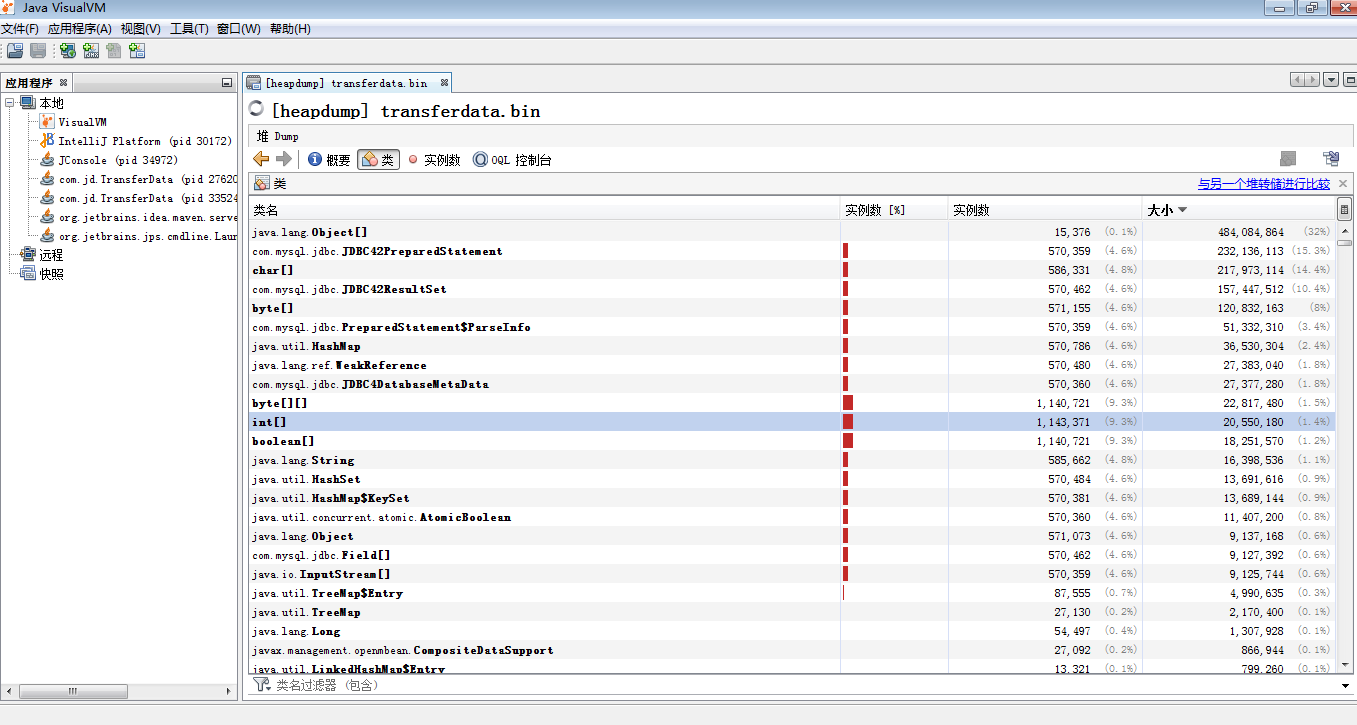

Analyze the dump file with jvisualvm:

The dump contained a huge number of JDBC objects, such as JDBC42PreparedStatement and JDBC42ResultSet. Together they added up to several million instances.

Clearly I was misusing JDBC. I consulted an architect in our department and finally worked out what was wrong:

1. I created too many PreparedStatements: one per iteration of the while loop, and I never closed any of them. A single connection is used from start to finish, so the longer the program runs, the more iterations execute, and the unclosed PreparedStatements stay cached in memory and take up more and more space. Not only does the database client (my program) hold a large number of PreparedStatements, the database server also has its own caching mechanisms, so this many PreparedStatements can put considerable pressure on the server as well. In addition, jconsole showed frequent, large swings in heap usage, which indicates that GC was running often; to some extent the GC threads were taking CPU time away from my program, which is another reason the inserts got slower and slower.

2. I did not take advantage of what PreparedStatement is for. A PreparedStatement is a precompiled statement that uses ? placeholders in the parameter positions, so each execution only needs to set the parameters at the given positions, and one PreparedStatement can be reused many times. As the PreparedStatement Javadoc puts it:

"An object that represents a precompiled SQL statement. A SQL statement is precompiled and stored in a PreparedStatement object. This object can then be used to efficiently execute this statement multiple times."

3. Using the JDBC batch insert interface is, in theory, much more efficient than inserting one row at a time.
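Point 1 can be made concrete without a database. The sketch below uses a stand-in Connection built with java.lang.reflect.Proxy. This is hypothetical scaffolding, not a real driver, and all it does is count how many statements have been prepared but not yet closed. It compares the per-row pattern of the first version with a loop that closes each statement.

```java
import java.lang.reflect.Proxy;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.concurrent.atomic.AtomicInteger;

class StatementLeakSketch {

    // Stand-in connection (not a real driver): counts statements that are still open.
    static Connection countingConnection(AtomicInteger open) {
        return (Connection) Proxy.newProxyInstance(
                Connection.class.getClassLoader(),
                new Class<?>[] {Connection.class},
                (proxy, method, args) -> {
                    if (!method.getName().equals("prepareStatement")) return null;
                    open.incrementAndGet();
                    return Proxy.newProxyInstance(
                            PreparedStatement.class.getClassLoader(),
                            new Class<?>[] {PreparedStatement.class},
                            (p, m, a) -> {
                                if (m.getName().equals("close")) open.decrementAndGet();
                                // executeUpdate returns an int, so it must not return null.
                                return m.getName().equals("executeUpdate") ? (Object) 1 : null;
                            });
                });
    }

    // Pattern of the first version: a new statement per row, never closed.
    static int openAfterLeakyLoop(int rows) {
        AtomicInteger open = new AtomicInteger();
        Connection connection = countingConnection(open);
        try {
            for (int i = 0; i < rows; i++) {
                PreparedStatement ps = connection.prepareStatement("insert into t values (" + i + ")");
                ps.executeUpdate();
            }
        } catch (SQLException e) {
            throw new IllegalStateException(e);
        }
        return open.get();
    }

    // Minimal fix: try-with-resources closes each statement before the next row.
    static int openAfterClosingLoop(int rows) {
        AtomicInteger open = new AtomicInteger();
        Connection connection = countingConnection(open);
        try {
            for (int i = 0; i < rows; i++) {
                try (PreparedStatement ps = connection.prepareStatement("insert into t values (" + i + ")")) {
                    ps.executeUpdate();
                }
            }
        } catch (SQLException e) {
            throw new IllegalStateException(e);
        }
        return open.get();
    }

    public static void main(String[] args) {
        System.out.println(openAfterLeakyLoop(1000));   // prints 1000
        System.out.println(openAfterClosingLoop(1000)); // prints 0
    }
}
```

Closing each statement stops the accumulation, but it still prepares a new statement for every row. Points 2 and 3 go further by reusing a single statement and batching the rows.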

The revised insertTable method:

    public static void insertTable(Properties p, String tableName) throws Exception {
        // table_quote is defined elsewhere in the class (not shown here); it is assumed to map
        // each table name to its parameterized INSERT statement.
        String sql = table_quote.get(tableName);
        // try-with-resources closes the statement, reader and connection even if an insert fails
        try (Connection connection = getConnection(p);
             BufferedReader reader = new BufferedReader(new InputStreamReader(
                     TransferData.class.getResourceAsStream("/" + tableName + ".txt")));
             PreparedStatement preparedStatement = connection.prepareStatement(sql)) {
            String line;
            int linecount = 1;
            while ((line = reader.readLine()) != null) {
                // strip the quotes and bind each value to its placeholder
                String[] params = line.replaceAll("'", "").split(",");
                for (int i = 0; i < params.length; i++) {
                    preparedStatement.setObject(i + 1, params[i].trim());
                }
                preparedStatement.addBatch();
                // send and commit every 1000 rows
                if (linecount % 1000 == 0) {
                    preparedStatement.executeBatch();
                    connection.commit();
                }
                linecount++;
            }
            // flush the last, partial batch
            preparedStatement.executeBatch();
            connection.commit();
        } catch (Exception e) {
            e.printStackTrace();
            throw e;
        }
    }

The finished program ran on the server and imported all the data in about two minutes.
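The revised insertTable looks up its SQL in table_quote, which this post does not show. One plausible way to fill such a map is sketched below. The helper names and the column count are hypothetical. The parser simply mirrors the replaceAll/split logic of insertTable, so it shares its limitations: a comma or quote inside a value breaks the split, and trailing empty fields are dropped.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

class InsertSqlSketch {

    // Builds "insert into <table> values (?, ?, ...)" with one placeholder per column.
    static String insertSql(String tableName, int columnCount) {
        if (columnCount < 1) {
            throw new IllegalArgumentException("columnCount must be at least 1");
        }
        String placeholders = String.join(", ", Collections.nCopies(columnCount, "?"));
        return "insert into " + tableName + " values (" + placeholders + ")";
    }

    // Splits one data line into trimmed parameter values, dropping single quotes.
    static String[] parseLine(String line) {
        String[] params = line.replace("'", "").split(",");
        for (int i = 0; i < params.length; i++) {
            params[i] = params[i].trim();
        }
        return params;
    }

    public static void main(String[] args) {
        Map<String, String> tableQuote = new HashMap<>();
        tableQuote.put("table1", insertSql("table1", 3)); // 3 columns is a made-up example
        System.out.println(tableQuote.get("table1"));
        System.out.println(Arrays.toString(parseLine("1, 'alice', 'beijing'")));
    }
}
```

Here the first line printed is insert into table1 values (?, ?, ?) and the second is [1, alice, beijing].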

Notes:

Kill all Java processes (without root privileges, kill only succeeds for processes owned by the current user):

    ps -ef | grep java | grep -v grep | awk '{print $2}' | xargs kill

Start the Java process with nohup in the background so that it keeps running even after Ctrl+C or after the terminal is closed:

    nohup java -Xms400m -Xmx400m -jar transferdata-1.0-SNAPSHOT-jar-with-dependencies.jar &