MyBatis Cache

MyBatis has a first-level cache and a second-level cache. Why does MyBatis use two caching mechanisms? Let's move on to today's topic with that question in mind.

MyBatis Level 1 Cache

When MyBatis executes the same SQL with the same parameters, it does not call the database repeatedly; it only needs to take the result out of the cache. As long as the session and the other related conditions stay the same, multiple identical requests actually hit the database only once, which can greatly reduce the cost of querying data. But in actual development the first-level cache is rarely used. Why?

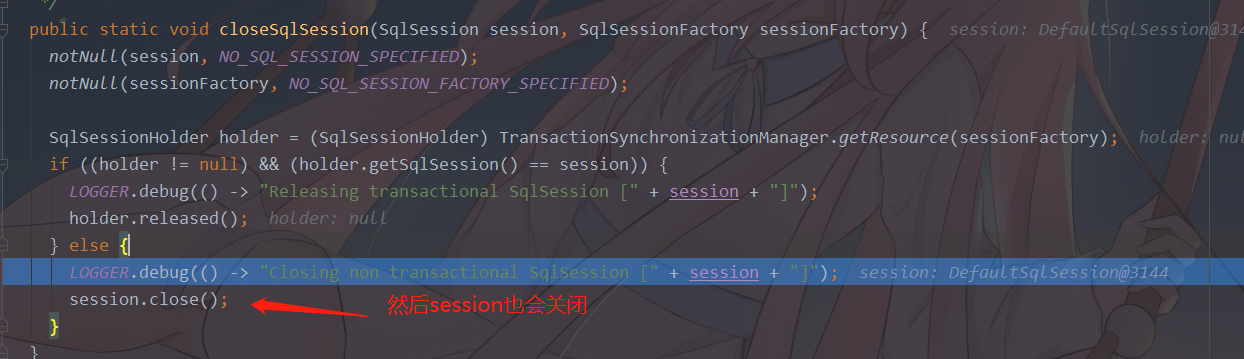

The first-level cache exists only within the current session and is unavailable outside it. We often use MyBatis with Spring, and by default each SQL statement in Spring runs in its own transaction; after successful execution the transaction is committed and the session is closed.

Tip: As mentioned earlier, a session holds only one transaction, so by default Spring opens a new session each time it calls MyBatis. If we add @Transactional to the method, the calls inside it share one transaction and one session, so the first-level cache can be used.

Interpret Level 1 Cache

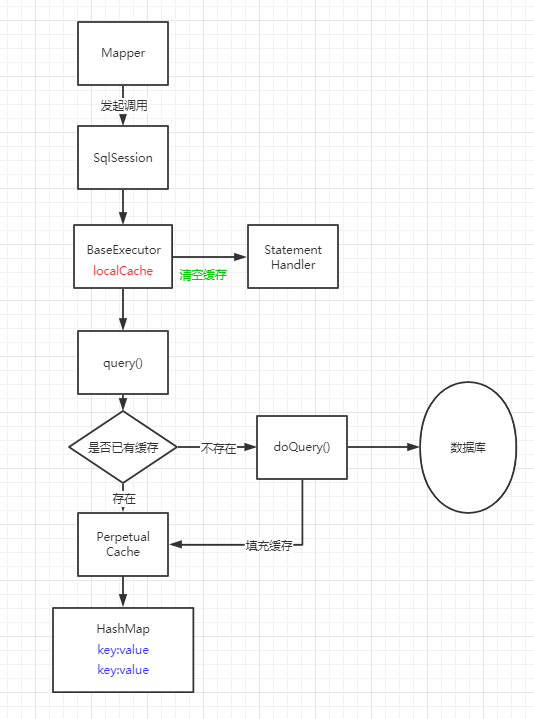

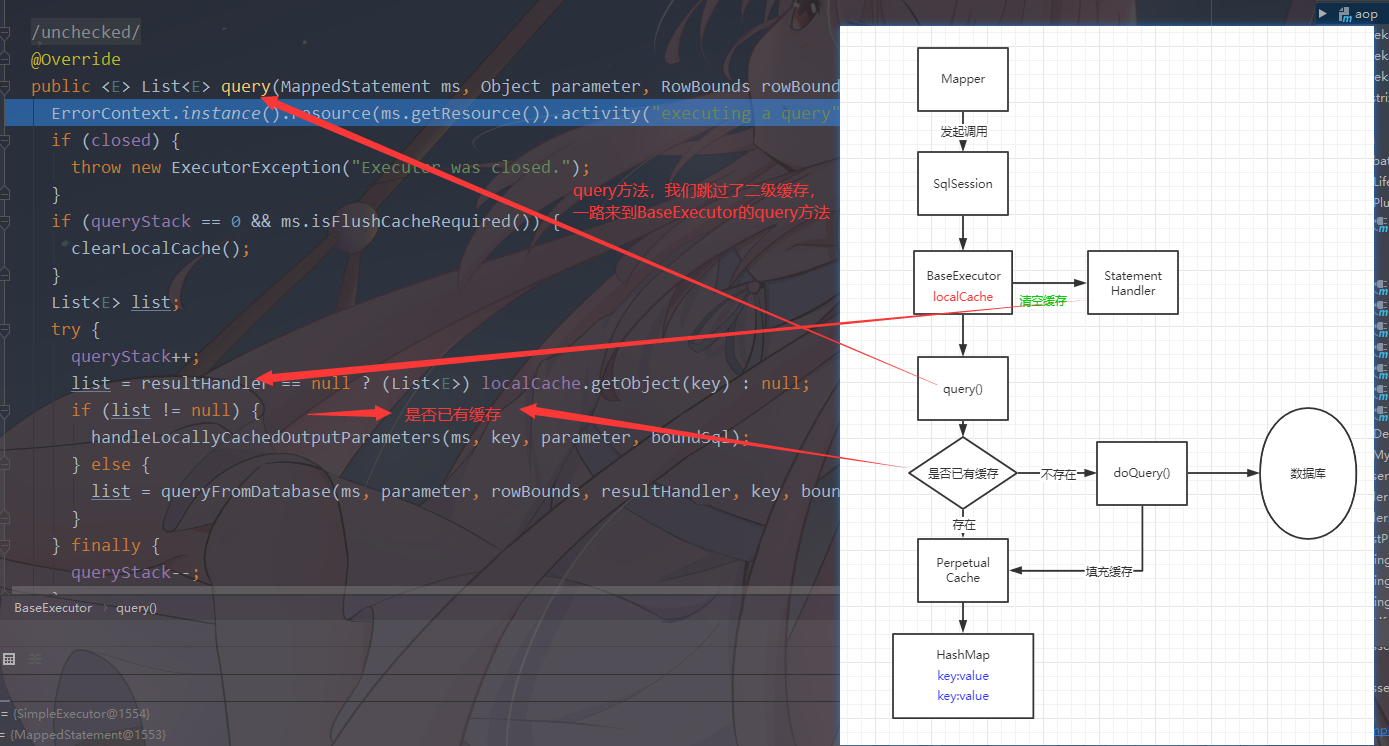

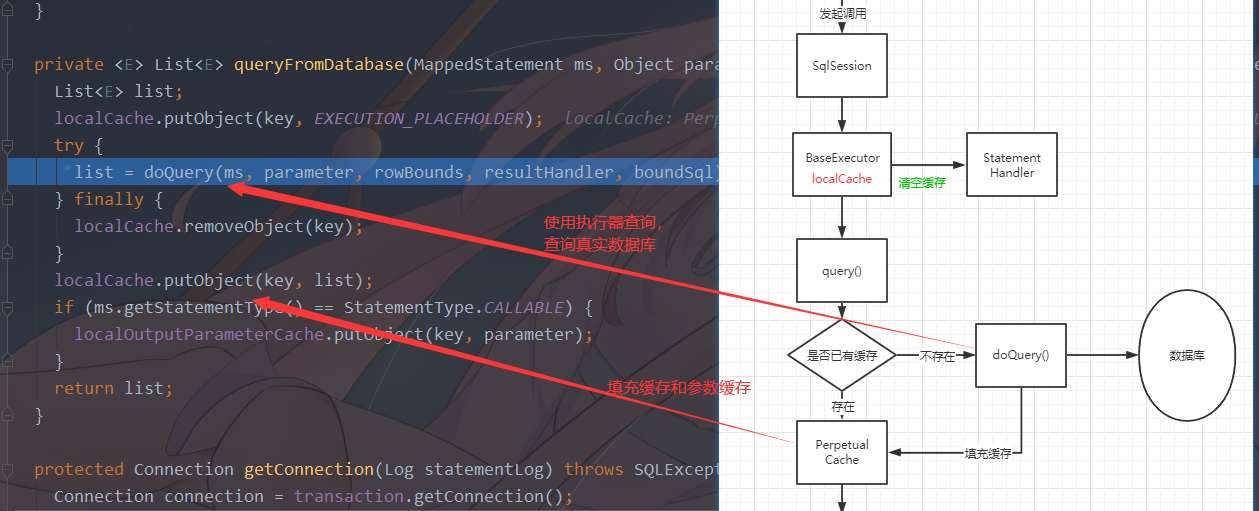

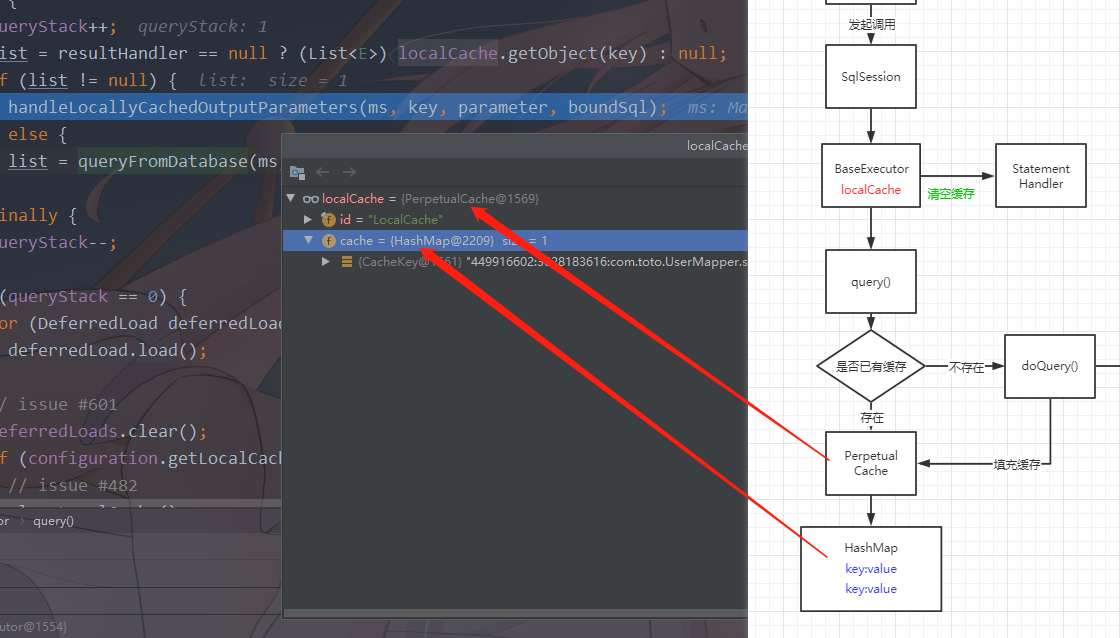

The first-level cache logic lives in BaseExecutor. The main logic is as follows:

    // Source: BaseExecutor, around line 140
    public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds,
            ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
        ErrorContext.instance().resource(ms.getResource()).activity("executing a query").object(ms.getId());
        if (closed) {
            throw new ExecutorException("Executor was closed.");
        }
        // The flushCache setting decides whether the cache must be cleared; it is configurable.
        // queryStack == 0 means this is not a recursive call.
        if (queryStack == 0 && ms.isFlushCacheRequired()) {
            clearLocalCache();
        }
        List<E> list;
        try {
            // Incremented so that recursive calls do not clear the local cache.
            queryStack++;
            // Look in the local cache by CacheKey first; if a ResultHandler is set,
            // the first-level cache is not used.
            list = resultHandler == null ? (List<E>) localCache.getObject(key) : null;
            if (list != null) {
                // Cache hit: process the localOutputParameterCache.
                handleLocallyCachedOutputParameters(ms, key, parameter, boundSql);
            } else {
                // Cache miss: query the database.
                list = queryFromDatabase(ms, parameter, rowBounds, resultHandler, key, boundSql);
            }
        } finally {
            queryStack--;
        }
        if (queryStack == 0) {
            // Load every element in the deferred-load queue.
            for (DeferredLoad deferredLoad : deferredLoads) {
                deferredLoad.load();
            }
            // Empty the deferred-load queue.
            // issue #601
            deferredLoads.clear();
            if (configuration.getLocalCacheScope() == LocalCacheScope.STATEMENT) {
                // issue #482
                // If the cache scope is STATEMENT, clear the first-level cache and the parameter cache.
                clearLocalCache();
            }
        }
        return list;
    }

Hit Scenarios for Level 1 Cache

Runtime Requirements:

1. Must be in the same session

2. SQL statements and parameters must be the same

3. Same statementId

4. RowBounds are the same (that is, the paging properties passed in)

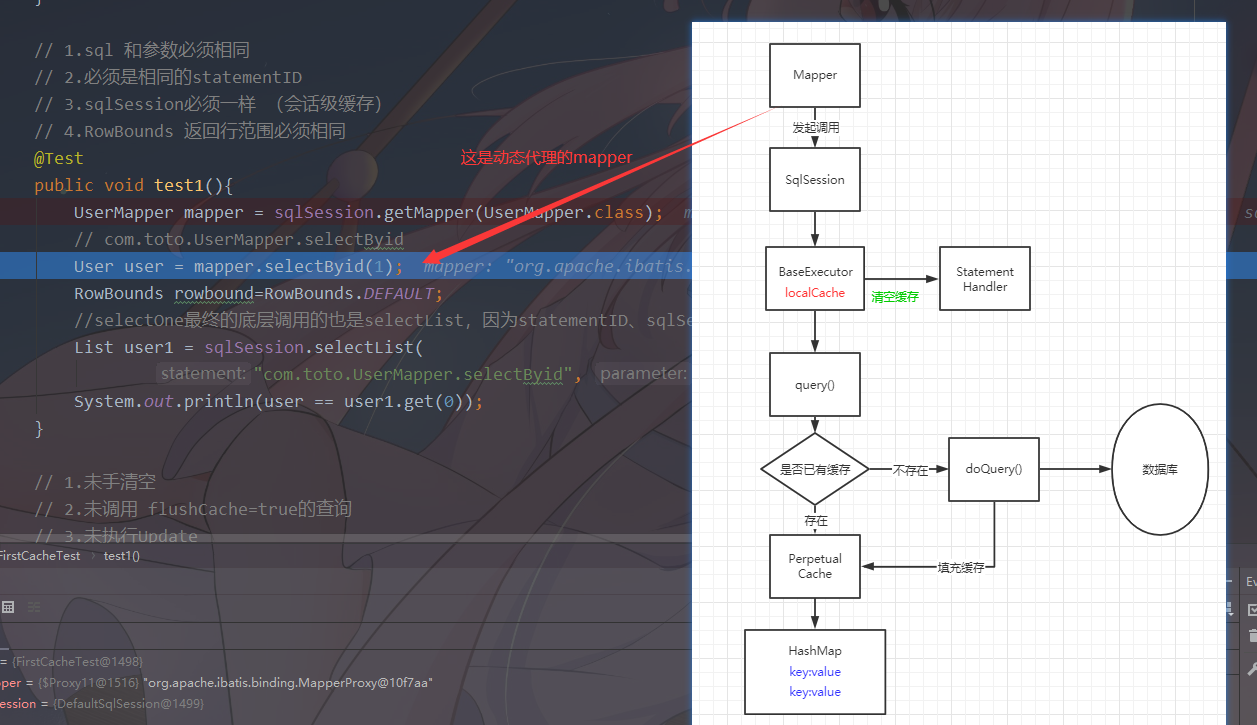

    // 1. The SQL and parameters must be the same
    // 2. The statementId must be the same
    // 3. The SqlSession must be the same (session-level cache)
    // 4. The RowBounds (returned row range) must be the same
    @Test
    public void test1() {
        UserMapper mapper = sqlSession.getMapper(UserMapper.class);
        // com.toto.UserMapper.selectByid
        User user = mapper.selectByid(1);
        RowBounds rowbound = RowBounds.DEFAULT;
        // selectOne ultimately calls selectList as well. Because the statementId, sqlSession,
        // rowbound, SQL, and parameters are all the same, the first-level cache is hit.
        List<User> user1 = sqlSession.selectList("com.toto.UserMapper.selectByid", 1, rowbound);
        System.out.println(user == user1.get(0));
    }

Output results:

Preparing: select * from db_user where id=?

Parameters: 1(Integer)

Columns: id, name, password, status, nickname, createTime

Row: 1, admin, admin, 1, Administrator Big Brother, 2016-08-07 14:07:10

Total: 1

Cache Hit Ratio [com.toto.UserMapper]: 0.0

true

Conditions related to operation and configuration:

1. No commit or rollback of the current transaction, and no manual clearing of the cache; commit and rollback both empty the cache, just like clearing it by hand

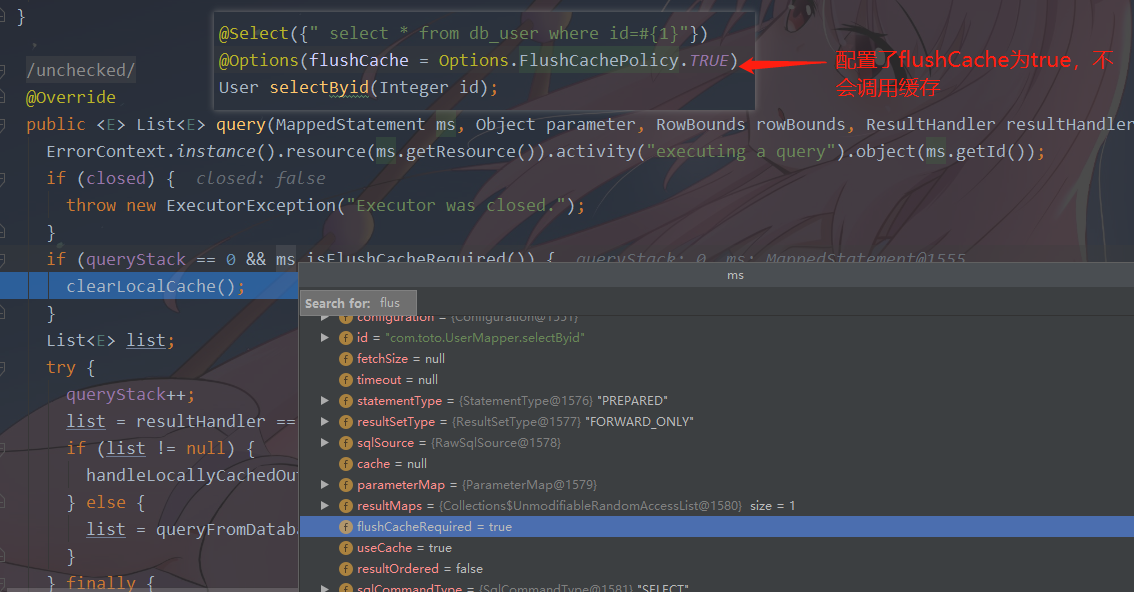

2. flushCache=true is not configured on the query; leave it at the default. If you configure true, the query code shown above clears the cache first, as illustrated below

3. No update has been executed

4. Cache scope is not STATEMENT

    // 1. The cache has not been cleared manually
    // 2. No query with flushCache=true has been called
    // 3. No update has been executed
    // 4. The cache scope is not STATEMENT
    @Test
    public void test2() {
        // The session life cycle is short
        UserMapper mapper = sqlSession.getMapper(UserMapper.class);
        User user = mapper.selectByid3(1);
        sqlSession.commit();   // This calls clearCache()
        sqlSession.rollback(); // This calls clearCache()
        // mapper.setNickName(1, "Administrator's younger brother"); // would also clear the cache
        User user1 = mapper.selectByid3(1); // Data consistency issues
        System.out.println(user == user1);
    }
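To make the hit and clear behavior concrete, here is a minimal sketch of a session-scoped cache. It is not MyBatis code: the class `LocalCacheSketch`, its `query` and `clearLocalCache` methods, and the string cache key are all assumptions made for illustration, and the `Function` passed in merely stands in for the database.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical, simplified stand-in for a session-scoped (level 1) cache. */
class LocalCacheSketch {
    private final Map<String, Object> localCache = new HashMap<>();
    private final Function<String, Object> database; // stands in for the real database query
    int databaseCalls = 0;

    LocalCacheSketch(Function<String, Object> database) {
        this.database = database;
    }

    /** Returns the cached object for the key, or loads and caches it on a miss. */
    Object query(String cacheKey) {
        Object cached = localCache.get(cacheKey);
        if (cached != null) {
            return cached; // hit: the very same instance is returned
        }
        databaseCalls++;
        Object loaded = database.apply(cacheKey);
        localCache.put(cacheKey, loaded);
        return loaded;
    }

    /** Mimics what commit, rollback, and update do to the session cache. */
    void clearLocalCache() {
        localCache.clear();
    }

    public static void main(String[] args) {
        LocalCacheSketch session = new LocalCacheSketch(key -> new Object());
        Object first = session.query("selectByid:1");
        Object second = session.query("selectByid:1");
        System.out.println(first == second); // true: second call was served from the cache

        session.clearLocalCache();
        Object third = session.query("selectByid:1");
        System.out.println(first == third);  // false: the cache was emptied in between
    }
}
```

This is why the tests above compare results with `==`: a hit hands back the same object, while a clear in between forces a fresh load.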

Level 1 Cache Source Parsing

I'll start with the diagrams I've sorted out (too ugly to spray, just look at them):

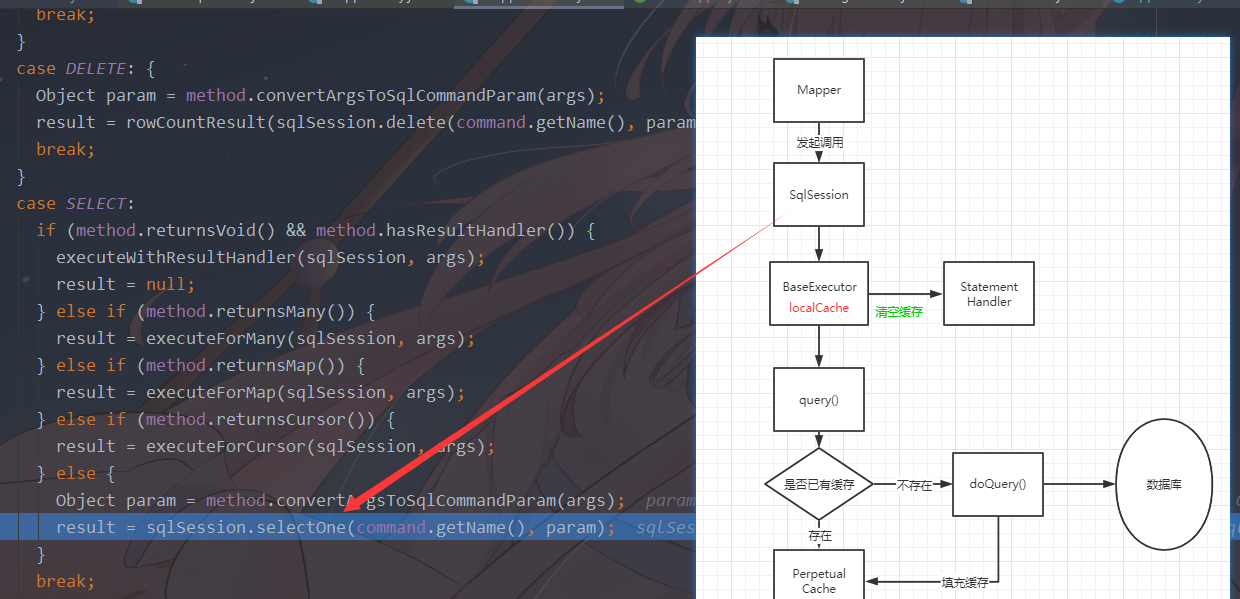

I follow the process to read the key code (multi-picture warning):

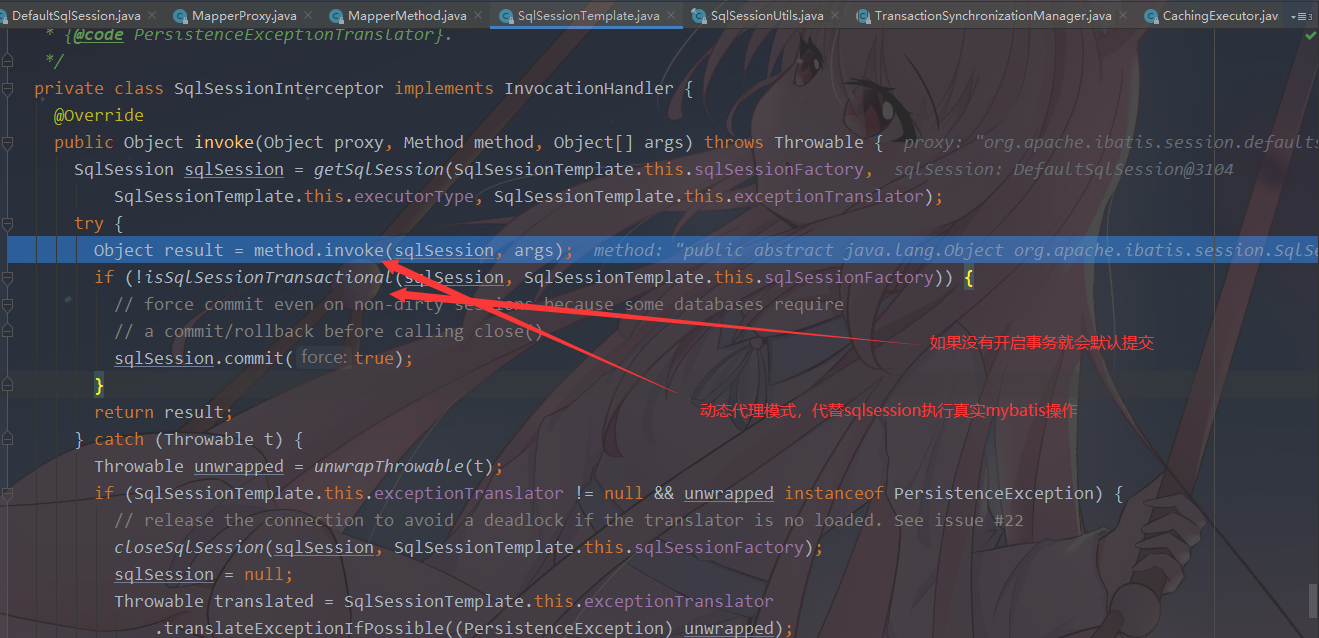

What Spring and Mybatis Level 1 Cache Have to Say

I mentioned earlier that adding a transaction makes the MyBatis first-level cache usable under Spring. Why?

Let's take a quick look at the process

    @Test
    public void testBySpring() {
        ClassPathXmlApplicationContext context = new ClassPathXmlApplicationContext("spring.xml");
        UserMapper mapper = context.getBean(UserMapper.class);
        // MyBatis dynamic proxy chain:
        // mapper -> SqlSessionTemplate -> SqlSessionInterceptor -> SqlSessionFactory
        DataSourceTransactionManager transactionManager =
                (DataSourceTransactionManager) context.getBean("txManager");
        // Open a transaction manually
        TransactionStatus status = transactionManager
                .getTransaction(new DefaultTransactionDefinition());
        // Normally each call constructs a new session
        User user = mapper.selectByid(1);
        // Once a transaction is open, the original session is not closed and the same
        // transaction is still in use, so the first-level cache is used
        User user1 = mapper.selectByid(1);
        System.out.println(user == user1);
    }

Summary

1. The cache is tied to the session; hits can only happen within the same session

2. The query conditions matter: the SQL, its parameters, the paging properties (RowBounds), and the related configuration all take part in the match

3. Commit, rollback, and update operations clear the cache

MyBatis generates its cache key from the following parts: [mappedStatementId + offset + limit + SQL + queryParams + environment], which together produce a hash code. That is why the query conditions are so important.
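As a rough illustration of how those six parts decide a hit, here is a simplified key class. It is a sketch under assumptions, not MyBatis's real CacheKey: the class name `SimpleCacheKey`, the constructor shape, and the environment id `"dev"` are invented for the example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Objects;

/** Hypothetical, simplified cache key; not MyBatis's real CacheKey class. */
final class SimpleCacheKey {
    private final String statementId;
    private final int offset;
    private final int limit;
    private final String sql;
    private final String environmentId;
    private final List<Object> params;

    SimpleCacheKey(String statementId, int offset, int limit, String sql,
                   String environmentId, Object... params) {
        this.statementId = statementId;
        this.offset = offset;
        this.limit = limit;
        this.sql = sql;
        this.environmentId = environmentId;
        this.params = Arrays.asList(params.clone()); // defensive copy of the arguments
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) {
            return true;
        }
        if (!(other instanceof SimpleCacheKey)) {
            return false;
        }
        SimpleCacheKey that = (SimpleCacheKey) other;
        // Every part must match for two queries to share a cache entry.
        return offset == that.offset
                && limit == that.limit
                && statementId.equals(that.statementId)
                && sql.equals(that.sql)
                && environmentId.equals(that.environmentId)
                && params.equals(that.params);
    }

    @Override
    public int hashCode() {
        return Objects.hash(statementId, offset, limit, sql, environmentId, params);
    }

    public static void main(String[] args) {
        String id = "com.toto.UserMapper.selectByid";
        String sql = "select * from db_user where id=?";
        // offset 0 and limit Integer.MAX_VALUE correspond to RowBounds.DEFAULT
        SimpleCacheKey a = new SimpleCacheKey(id, 0, Integer.MAX_VALUE, sql, "dev", 1);
        SimpleCacheKey b = new SimpleCacheKey(id, 0, Integer.MAX_VALUE, sql, "dev", 1);
        SimpleCacheKey otherParam = new SimpleCacheKey(id, 0, Integer.MAX_VALUE, sql, "dev", 2);
        SimpleCacheKey otherPage = new SimpleCacheKey(id, 0, 10, sql, "dev", 1);

        System.out.println(a.equals(b));          // true
        System.out.println(a.equals(otherParam)); // false
        System.out.println(a.equals(otherPage));  // false
    }
}
```

Changing any single part, such as the parameter value or the paging limit, yields a different key and therefore a cache miss.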

Mybatis Level 2 Cache

The second-level cache is an application-level cache that can be shared across sessions and threads. It is generally used for query caching on tables that are rarely modified, and it is consulted before the first-level cache when querying.

Complete solution

1. Memory Storage: Easy to implement, faster, but not persistent, with limited capacity

2. Hard disk storage: can be persisted and has a large capacity, but access is slower than memory

3. Distributed storage: in distributed scenarios, keeping the cache in the disk or memory of a single server causes some common problems; the cache can instead be stored in Redis, which is one of the solutions for distributed scenarios.

Cache Overflow Elimination Mechanism

When the cache is full, entries have to be cleaned up; the algorithm that decides what to clean up is the overflow elimination (eviction) mechanism.

Common algorithms are:

1. FIFO: first in, first out

2. LRU: least recently used

3. WeakReference: weak reference

Wrap the cached object in a weak reference; when the Java GC runs, the object is reclaimed regardless of how much memory is free

4. SoftReference: soft reference

Similar to weak references; the difference is that the GC reclaims softly referenced objects only when memory is low
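As one possible illustration of the LRU policy from the list above, the JDK's `LinkedHashMap` can be switched to access order and told to drop its eldest entry once a capacity is exceeded. The class name `LruSketch` and the capacity of 2 are assumptions for the example; this is a sketch of the technique, not the implementation MyBatis ships.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Minimal LRU map: the least recently accessed entry is evicted first. */
class LruSketch<K, V> extends LinkedHashMap<K, V> {
    private static final long serialVersionUID = 1L;
    private final int capacity;

    LruSketch(int capacity) {
        super(16, 0.75f, true); // true = iterate in access order, not insertion order
        this.capacity = capacity;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Called after every put; returning true drops the least recently used entry.
        return size() > capacity;
    }

    public static void main(String[] args) {
        LruSketch<String, Integer> cache = new LruSketch<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // "a" becomes the most recently used entry
        cache.put("c", 3); // over capacity: "b" is now the eldest and is evicted
        System.out.println(cache.keySet()); // [a, c]
    }
}
```

A FIFO policy would differ only in ignoring reads: with insertion order, "a" would have been evicted instead of "b".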

Thread safety of the cache

The second-level cache is shared, so other threads will use it too. For threads to read and write safely, a thread must be able to modify the data it obtained from the cache without affecting the original cached data. The usual approach is to serialize the cached object and hand out a deep copy. This requires the entity classes we cache to be serializable (implement Serializable); otherwise a "cannot be serialized" error occurs.

Tip: The storage types we commonly use basically all implement the Serializable interface, such as HashMap, ArrayList, String...
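The deep copy through serialization described above can be sketched with plain JDK streams. The helper name `deepCopy` and the class `SerializedCopySketch` are assumptions for illustration; the point is only that the caller receives an independent copy.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;

/** Sketch of copying a cached value by serializing and deserializing it. */
class SerializedCopySketch {

    static <T extends Serializable> T deepCopy(T value) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(value);
            }
            try (ObjectInputStream in =
                         new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                @SuppressWarnings("unchecked")
                T copy = (T) in.readObject();
                return copy;
            }
        } catch (IOException | ClassNotFoundException e) {
            // Thrown, for example, when a nested field does not implement Serializable.
            throw new IllegalStateException("value could not be copied by serialization", e);
        }
    }

    public static void main(String[] args) {
        ArrayList<String> cached = new ArrayList<>();
        cached.add("admin");

        ArrayList<String> copy = deepCopy(cached);
        copy.add("guest"); // only the copy changes

        System.out.println(cached); // [admin]
        System.out.println(copy);   // [admin, guest]
    }
}
```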

MyBatis Level 2 Cache Solution

How does MyBatis implement its second-level caching solution?

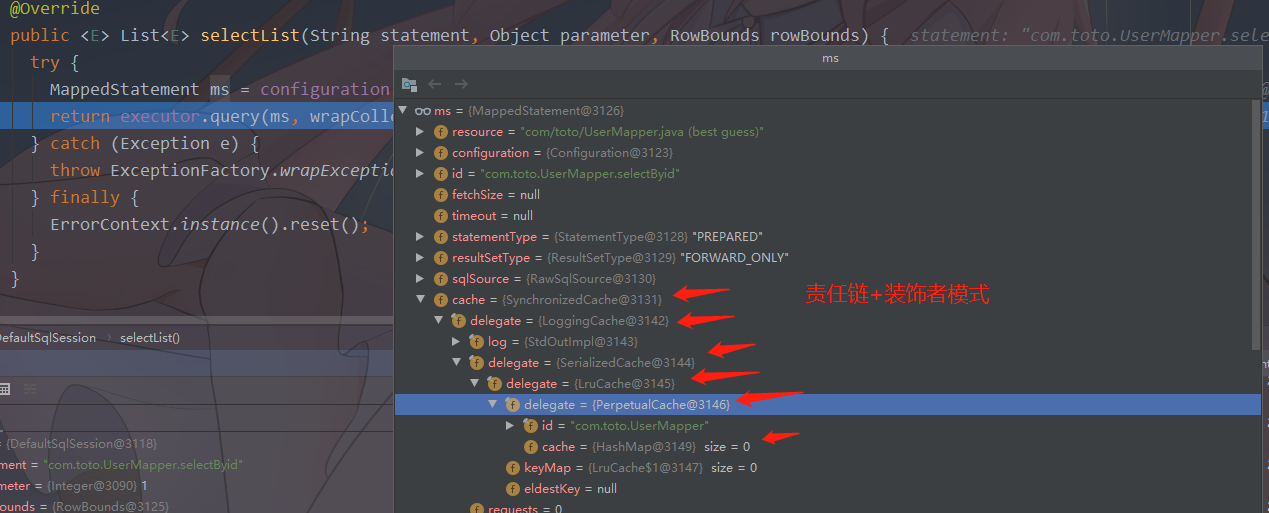

MyBatis uses a chain-of-responsibility design. It first defines the most basic common methods of a cache, all declared in the Cache interface: putting an entry, getting an entry, clearing the cache, and getting the number of entries. Each of the features discussed above is then implemented by its own component class, and the components are strung together using the decorator pattern combined with the chain-of-responsibility pattern. When a basic cache operation is performed, it is passed down through the chain from one cache to the next.

What are the advantages of this design pattern?

Single responsibility: each node handles only its own logic and ignores everything else

Strong extensibility: nodes can be added, removed, or reordered as needed, which keeps the design flexible

Loose coupling: nodes do not depend directly on one another; they depend only on the top-level Cache interface
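A small sketch may help show how such a decorator chain fits together. Everything here is hypothetical: the `SimpleCache` interface and the `MapStore`, `FifoLimit`, and `HitCounting` classes are invented stand-ins, not the real MyBatis Cache interface or its decorators.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical decorator chain; names do not match the real MyBatis classes. */
class DecoratorChainSketch {

    interface SimpleCache {
        void put(Object key, Object value);
        Object get(Object key);
        Object remove(Object key);
    }

    /** Innermost node: plain in-memory storage. */
    static class MapStore implements SimpleCache {
        private final Map<Object, Object> map = new HashMap<>();
        public void put(Object key, Object value) { map.put(key, value); }
        public Object get(Object key) { return map.get(key); }
        public Object remove(Object key) { return map.remove(key); }
    }

    /** Adds FIFO eviction and passes everything else down the chain. */
    static class FifoLimit implements SimpleCache {
        private final SimpleCache delegate;
        private final Deque<Object> keys = new ArrayDeque<>();
        private final int capacity;

        FifoLimit(SimpleCache delegate, int capacity) {
            this.delegate = delegate;
            this.capacity = capacity;
        }

        public void put(Object key, Object value) {
            keys.addLast(key);
            if (keys.size() > capacity) {
                delegate.remove(keys.removeFirst()); // evict the oldest key
            }
            delegate.put(key, value);
        }
        public Object get(Object key) { return delegate.get(key); }
        public Object remove(Object key) { return delegate.remove(key); }
    }

    /** Adds hit statistics and passes everything else down the chain. */
    static class HitCounting implements SimpleCache {
        private final SimpleCache delegate;
        int requests = 0;
        int hits = 0;

        HitCounting(SimpleCache delegate) { this.delegate = delegate; }

        public void put(Object key, Object value) { delegate.put(key, value); }
        public Object get(Object key) {
            requests++;
            Object value = delegate.get(key);
            if (value != null) {
                hits++;
            }
            return value;
        }
        public Object remove(Object key) { return delegate.remove(key); }
    }

    public static void main(String[] args) {
        HitCounting cache = new HitCounting(new FifoLimit(new MapStore(), 2));
        cache.put("a", 1);
        cache.put("b", 2);
        cache.put("c", 3); // capacity 2: "a" is evicted
        System.out.println(cache.get("a")); // null
        System.out.println(cache.get("c")); // 3
        System.out.println(cache.hits + "/" + cache.requests); // 1/2
    }
}
```

Each node knows only the interface of the node below it, so swapping the eviction node or inserting another decorator does not touch the others.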

A detailed description of each cache component would take a longer article, which I will dig into later. The caches provided by MyBatis live under the org.apache.ibatis.cache package, where the first-level cache uses impl and the second-level cache uses decorators.

Secondary Cache Open Conditions

Unlike the first-level cache, the second-level cache is not turned on by default. It can be declared with @CacheNamespace or specified per MappedStatement. Once declared, the cache belongs to that mapper alone; mappers that do not declare one cannot use it. However, if multiple mappers need to share the same cache space, they can reference it through @CacheNamespaceRef.

With XML mappers, you add a second-level cache to the mapper with the <cache/> tag, and reference an existing cache space with the <cache-ref/> tag.

Here are some of the parameters we can configure when using caching:

| Configuration Name | Configuration Description |
|---|---|
| implementation | Storage implementation class for the cache; by default entries are kept in memory in PerpetualCache's HashMap |
| eviction | Overflow elimination (eviction) implementation class; the default is LRU, which removes the least recently used entry |
| flushInterval | Interval of the timer that empties the cache; not set by default, so the cache is never emptied on a timer. A flush empties the whole cache, not a single entry |
| size | Cache capacity; beyond it, entries are evicted by the algorithm specified by eviction |
| readWrite | true (the default) hands each caller a serialized copy, so cached objects can be read and written safely; false makes all threads operate on the same object |
| blocking | Adds a blocking lock to each key's access to prevent cache breakdown |
| properties | Additional parameters for the components above; each key corresponds to a field name in the component |

For example:

    @CacheNamespace(implementation = MybatisRedisCache.class, eviction = FifoCache.class)

    <cache type="com.toto.MybatisRedisCache" eviction="LRU" size="1024" readOnly="false"/>

Secondary Cache Hit Criteria

Second-level cache hits are similar to first-level cache hits, except that the session must have been committed and the sessions involved may be different. The hit criteria are listed below.

1. The session has been committed

2. The SQL statement and parameters are the same

3. Same StatementId

4. RowBounds are the same

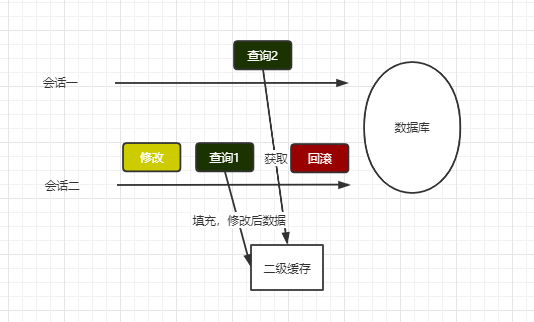

Why does the second-level cache require the transaction to be committed? Let me draw a diagram to show that:

If a session's uncommitted changes were visible in the second-level cache, other sessions could see dirty reads. To avoid this, changes to the second-level cache, including clearing it, take effect only after the session commits normally. So where are the changes kept before the commit? Is there a temporary storage area?

Secondary Cache Structure

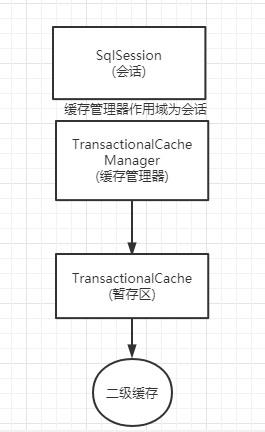

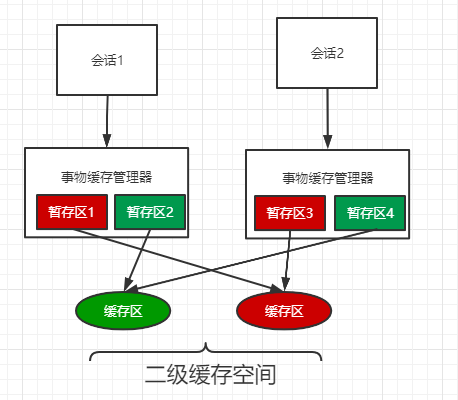

So that the second-level cache changes only after the session commits, MyBatis sets up staging areas for each session, one per cache space. Changes the current session makes to a cache space are stored in the corresponding staging area, and are written from the staging area to the real cache space only after the session commits. To manage these staging areas uniformly, each session has its own transaction cache manager, so the staging area here is also called the transaction cache.

Figure:

Here is a picture of the transaction caches and staging areas in a two-session scenario:

Secondary Cache Execution Process

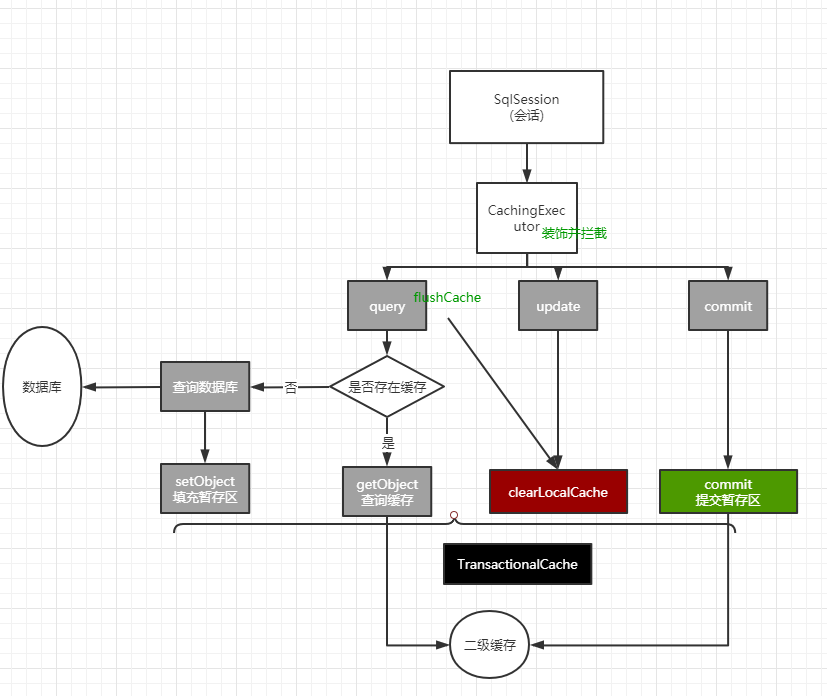

Originally a session performs its SQL calls through an Executor. Here CachingExecutor, based on the decorator pattern, intercepts those SQL calls and embeds the second-level cache logic.

Query operation

When a session calls query(), a cache key is composed from data such as the query statement and its parameters, and MyBatis then tries to read the data from the second-level cache. If the data is found, it is returned directly; otherwise the decorated Executor is called to query the database, and the result is placed in the corresponding staging area.

Figure: none yet (the figure and accompanying code will be added later)

Update operation update

When an update operation is performed, the cache is emptied before the update is executed. The cleanup here applies to the staging area only; a clear flag is recorded so that the second-level cache space is cleared based on that flag when the session commits. Likewise, if flushCache=true is configured on a query, the same operation is performed.

Figure: none yet (the figure and accompanying code will be added later)

Commit operation

When a session commits, all changes held in that session's staging areas are written to the corresponding second-level cache space.
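The query, update, and commit steps above can be sketched together in plain Java. This is a simplified model under stated assumptions, not the CachingExecutor source: the class `SecondLevelFlowSketch`, its method names, the string keys, and the `Function` standing in for the decorated Executor are all hypothetical, and the first-level cache is left out on purpose.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical model of one session working against a shared level 2 cache. */
class SecondLevelFlowSketch {
    private final Map<String, Object> sharedCache;        // level 2 cache shared by all sessions
    private final Function<String, Object> delegate;      // stands in for the decorated Executor
    private final Map<String, Object> staged = new HashMap<>(); // this session's staging area
    private boolean clearOnCommit = false;

    SecondLevelFlowSketch(Map<String, Object> sharedCache, Function<String, Object> delegate) {
        this.sharedCache = sharedCache;
        this.delegate = delegate;
    }

    /** Query: read the shared cache first, otherwise load and stage the result. */
    Object query(String key) {
        Object cached = clearOnCommit ? null : sharedCache.get(key);
        if (cached != null) {
            return cached;
        }
        Object loaded = delegate.apply(key);
        staged.put(key, loaded); // invisible to other sessions until commit
        return loaded;
    }

    /** Update: empty the staging area and remember to clear the shared cache on commit. */
    void update() {
        staged.clear();
        clearOnCommit = true;
    }

    /** Commit: apply the clear flag, then publish the staged entries. */
    void commit() {
        if (clearOnCommit) {
            sharedCache.clear();
        }
        sharedCache.putAll(staged);
        reset();
    }

    /** Rollback: discard everything staged by this session. */
    void rollback() {
        reset();
    }

    private void reset() {
        staged.clear();
        clearOnCommit = false;
    }

    public static void main(String[] args) {
        Map<String, Object> shared = new HashMap<>(); // single-threaded demo only
        int[] dbCalls = {0};
        Function<String, Object> database = key -> {
            dbCalls[0]++;
            return "row-for-" + key;
        };
        SecondLevelFlowSketch a = new SecondLevelFlowSketch(shared, database);
        SecondLevelFlowSketch b = new SecondLevelFlowSketch(shared, database);
        SecondLevelFlowSketch c = new SecondLevelFlowSketch(shared, database);

        a.query("user:1");
        b.query("user:1");              // a has not committed, so b misses
        System.out.println(dbCalls[0]); // 2

        a.commit();
        c.query("user:1");              // served from the shared cache
        System.out.println(dbCalls[0]); // 2

        a.update();
        a.commit();                     // the clear flag empties the shared cache
        c.query("user:1");
        System.out.println(dbCalls[0]); // 3
    }
}
```

Keeping writes in a per-session staging area is what prevents one session's uncommitted results from leaking to another.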

Figure: none yet (the figure and accompanying code will be added later)

Flowchart of Level 1 and Level 2 Caches

Figure: none yet (the figure and accompanying code will be added later)