Ahhh o(>_<)o!!! My first neural network actually ran and produced a result!

After learning about simple neural network models, I got curious today and built an extremely simple neural network myself. Well, no, no, no: strictly speaking, I just computed it directly from the formulas.

For details, please refer to my blog.

This is the link.

This code is based on the derivation in my previous blog post (click me) and implements the derived formulas directly. I'm a beginner and my skills are limited, so please don't laugh at me.
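
For reference, the formulas the code implements can be summarised as below. This is a sketch in the code's own layout: $w_1,\dots,w_8$ stand for `weight[0]`…`weight[7]`, $x_1, x_2$ for `init`, $t_1, t_2$ for the target `out`, $b_1, b_2$ for `b`, and $\alpha$ for `alph`. It assumes the squared-error loss $E = \tfrac12\sum_k (t_k - \mathrm{out}_{ok})^2$, which is the loss whose gradient the backpropagation function computes; the biases are held fixed, as in the code.

$$
\begin{aligned}
\mathrm{net}_{h1} &= w_1 x_1 + w_2 x_2 + b_1, \qquad \mathrm{net}_{h2} = w_3 x_1 + w_4 x_2 + b_1,\\
\mathrm{out}_{hj} &= \sigma(\mathrm{net}_{hj}), \qquad \sigma(z) = \frac{1}{1+e^{-z}},\\
\mathrm{net}_{o1} &= w_5\,\mathrm{out}_{h1} + w_6\,\mathrm{out}_{h2} + b_2, \qquad \mathrm{net}_{o2} = w_7\,\mathrm{out}_{h1} + w_8\,\mathrm{out}_{h2} + b_2,\\
\mathrm{out}_{ok} &= \sigma(\mathrm{net}_{ok}), \qquad E = \tfrac12 \sum_{k=1}^{2} (t_k - \mathrm{out}_{ok})^2,\\[4pt]
\delta_{ok} &= \frac{\partial E}{\partial \mathrm{net}_{ok}} = -(t_k - \mathrm{out}_{ok})\,\mathrm{out}_{ok}(1 - \mathrm{out}_{ok}),\\
\frac{\partial E}{\partial w_5} &= \delta_{o1}\,\mathrm{out}_{h1},\quad \frac{\partial E}{\partial w_6} = \delta_{o1}\,\mathrm{out}_{h2},\quad \frac{\partial E}{\partial w_7} = \delta_{o2}\,\mathrm{out}_{h1},\quad \frac{\partial E}{\partial w_8} = \delta_{o2}\,\mathrm{out}_{h2},\\
\delta_{h1} &= (\delta_{o1} w_5 + \delta_{o2} w_7)\,\mathrm{out}_{h1}(1 - \mathrm{out}_{h1}), \qquad \delta_{h2} = (\delta_{o1} w_6 + \delta_{o2} w_8)\,\mathrm{out}_{h2}(1 - \mathrm{out}_{h2}),\\
\frac{\partial E}{\partial w_1} &= \delta_{h1} x_1,\quad \frac{\partial E}{\partial w_2} = \delta_{h1} x_2,\quad \frac{\partial E}{\partial w_3} = \delta_{h2} x_1,\quad \frac{\partial E}{\partial w_4} = \delta_{h2} x_2,\\[4pt]
w_i &\leftarrow w_i - \alpha\,\frac{\partial E}{\partial w_i}, \qquad \alpha = 0.5.
\end{aligned}
$$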

    import numpy as np
    import matplotlib.pyplot as plt
    # %matplotlib inline   # Jupyter-only magic; uncomment when running in a notebook

    # Activation functions and their derivatives
    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    def d_sigmoid(y):
        # derivative of sigmoid, written in terms of its output y = sigmoid(x)
        return y * (1 - y)

    def tanh(x):
        return np.tanh(x)

    def d_tanh(x):
        return 1.0 - np.tanh(x) ** 2

    # Parameter settings
    alph = 0.5    # learning rate
    esp = 0.01    # error threshold for optional early stopping
    step = 5000   # number of iterations

    # Forward propagation (qianxiangchuanbo)
    def qianxiangchuanbo(init, weight, b):
        # Input layer -> hidden layer: weighted input sums, then sigmoid outputs
        neth = [weight[0]*init[0] + weight[1]*init[1] + b[0],
                weight[2]*init[0] + weight[3]*init[1] + b[0]]
        outh = [sigmoid(neth[0]), sigmoid(neth[1])]
        # Hidden layer -> output layer: weighted input sums, then sigmoid outputs
        neto = [weight[4]*outh[0] + weight[5]*outh[1] + b[1],
                weight[6]*outh[0] + weight[7]*outh[1] + b[1]]
        outo = [sigmoid(neto[0]), sigmoid(neto[1])]
        return neth, outh, neto, outo

    # Backpropagation (fanxiangchuanbo): gradients of E = 1/2 * sum((out - outo)^2)
    def fanxiangchuanbo(init, out, weight, outo, outh):
        # Delta of each output neuron, dE/dneto (uses the target out, not the input init)
        q = [-(out[0] - outo[0]) * outo[0] * (1 - outo[0]),
             -(out[1] - outo[1]) * outo[1] * (1 - outo[1])]
        # Hidden layer -> input layer: sum the error arriving at each hidden neuron,
        # then multiply by the sigmoid derivative (the parentheses matter here)
        dh0 = (q[0]*weight[4] + q[1]*weight[6]) * outh[0] * (1 - outh[0])
        dh1 = (q[0]*weight[5] + q[1]*weight[7]) * outh[1] * (1 - outh[1])
        w1 = dh0 * init[0]
        w2 = dh0 * init[1]
        w3 = dh1 * init[0]
        w4 = dh1 * init[1]
        # Output layer -> hidden layer
        w5 = q[0] * outh[0]
        w6 = q[0] * outh[1]
        w7 = q[1] * outh[0]
        w8 = q[1] * outh[1]
        return [w1, w2, w3, w4, w5, w6, w7, w8]

    init = [0.05, 0.10]    # input set
    out = [0.01, 0.99]     # real (target) output set
    weight = [0.15, 0.20, 0.25, 0.30, 0.40, 0.45, 0.50, 0.55]
    b = [0.35, 0.60]       # bias terms (kept fixed)

    count = 0
    result = []
    while True:
        count = count + 1
        neth, outh, neto, outo = qianxiangchuanbo(init, weight, b)
        e = abs(out[0] - outo[0]) + abs(out[1] - outo[1])   # total absolute error, for plotting
        result.append(e)
        gd_weight = fanxiangchuanbo(init, out, weight, outo, outh)
        for i in range(len(weight)):
            weight[i] = weight[i] - alph * gd_weight[i]   # gradient descent step
        # if e < esp:
        #     break
        if count >= step:
            break

    plt.plot(result, '.')   # one point per iteration
    plt.show()
    print(weight)
    print(out)
    print(outo)
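
One way to double-check hand-derived gradients like these is a numerical gradient check: nudge each weight by a small ε and compare the finite-difference slope of the loss with the analytic gradient. The sketch below does this for the same 2-2-2 sigmoid network, rewritten with NumPy matrices instead of scalar lists. The names `forward`, `loss`, `analytic_grads` and `numeric_grads` are hypothetical helpers, and the loss is assumed to be $E = \tfrac12\sum(t - \mathrm{out})^2$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, W2, b):
    # Hypothetical matrix layout: row j holds the weights into neuron j, so
    # W1 = [[w1, w2], [w3, w4]] and W2 = [[w5, w6], [w7, w8]] in the blog's numbering.
    out_h = sigmoid(W1 @ x + b[0])
    out_o = sigmoid(W2 @ out_h + b[1])
    return out_h, out_o

def loss(x, t, W1, W2, b):
    _, out_o = forward(x, W1, W2, b)
    return 0.5 * np.sum((t - out_o) ** 2)

def analytic_grads(x, t, W1, W2, b):
    out_h, out_o = forward(x, W1, W2, b)
    delta_o = -(t - out_o) * out_o * (1 - out_o)           # dE/dnet_o
    delta_h = (W2.T @ delta_o) * out_h * (1 - out_h)       # dE/dnet_h
    return np.outer(delta_h, x), np.outer(delta_o, out_h)  # dE/dW1, dE/dW2

def numeric_grads(x, t, W1, W2, b, eps=1e-6):
    grads = []
    for W in (W1, W2):
        G = np.zeros_like(W)
        for idx in np.ndindex(W.shape):
            old = W[idx]
            W[idx] = old + eps
            plus = loss(x, t, W1, W2, b)
            W[idx] = old - eps
            minus = loss(x, t, W1, W2, b)
            W[idx] = old                          # restore the weight
            G[idx] = (plus - minus) / (2 * eps)   # central difference
        grads.append(G)
    return grads

x = np.array([0.05, 0.10])
t = np.array([0.01, 0.99])
W1 = np.array([[0.15, 0.20], [0.25, 0.30]])
W2 = np.array([[0.40, 0.45], [0.50, 0.55]])
b = np.array([0.35, 0.60])

gA1, gA2 = analytic_grads(x, t, W1, W2, b)
gN1, gN2 = numeric_grads(x, t, W1, W2, b)
# Both maximum differences should be close to zero if the formulas are right
print(np.max(np.abs(gA1 - gN1)), np.max(np.abs(gA2 - gN2)))
```

If a term is dropped or a parenthesis is misplaced in the backpropagation formulas, the mismatch shows up immediately in these differences.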

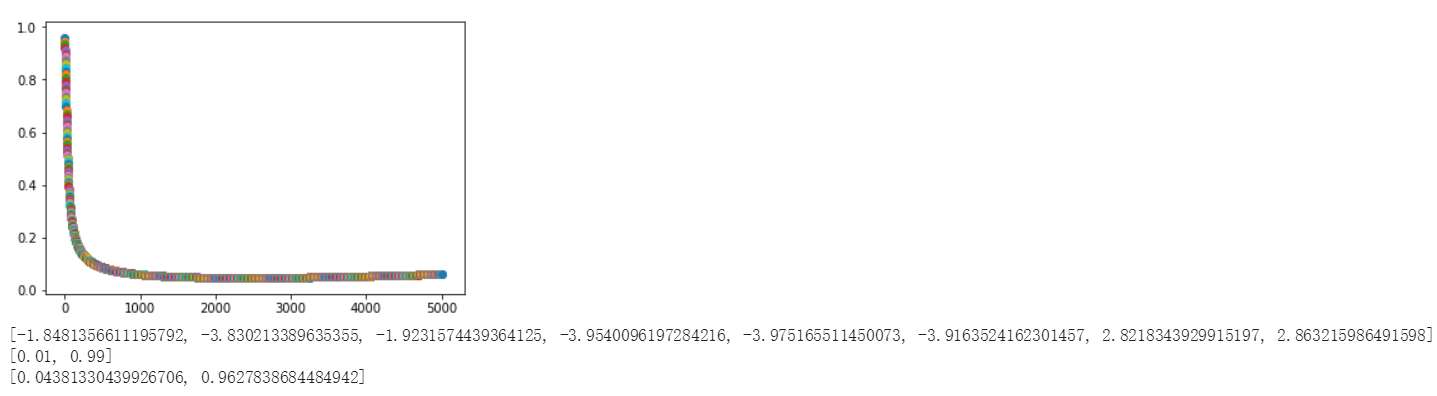

The final result of the run is shown in the figure above.


In the run shown above, the code stops after only 5000 iterations, so the output is still noticeably far from the target values. If you are interested, try increasing the number of iterations, or stop once a target accuracy is reached instead (the commented-out `esp` check in the code).
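
A minimal sketch of the "control accuracy" option: stop as soon as the error drops below a threshold (the role `esp` plays in the commented-out check), and keep an iteration cap as a safety net. The helper name `train` and its default values are hypothetical; the update rule is the same plain gradient descent as in the code above, with the biases held fixed.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(x, t, W1, W2, b, alph=0.5, esp=0.01, step=100000):
    """Gradient descent on E = 1/2*sum((t - out)^2).

    Stops when the absolute error sum(|t - out|) drops below esp,
    or after `step` iterations, whichever comes first."""
    W1, W2 = W1.copy(), W2.copy()   # do not modify the caller's arrays
    errors = []
    for count in range(1, step + 1):
        out_h = sigmoid(W1 @ x + b[0])
        out_o = sigmoid(W2 @ out_h + b[1])
        e = np.sum(np.abs(t - out_o))   # same error measure as the blog's e
        errors.append(e)
        if e < esp:                     # accuracy reached: stop early
            break
        delta_o = -(t - out_o) * out_o * (1 - out_o)
        delta_h = (W2.T @ delta_o) * out_h * (1 - out_h)   # uses W2 before its update
        W2 -= alph * np.outer(delta_o, out_h)
        W1 -= alph * np.outer(delta_h, x)
    return W1, W2, out_o, errors

x = np.array([0.05, 0.10])
t = np.array([0.01, 0.99])
W1 = np.array([[0.15, 0.20], [0.25, 0.30]])
W2 = np.array([[0.40, 0.45], [0.50, 0.55]])
b = np.array([0.35, 0.60])

W1_new, W2_new, out_o, errors = train(x, t, W1, W2, b)
print(len(errors), errors[-1], out_o)
```

Stopping on `esp` rather than a fixed count means the loop runs only as long as needed, while `step` keeps it from running forever if the threshold is never reached.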