Mutex

Critical section

In concurrent programming, when part of a program accesses or modifies shared state concurrently, that part must be protected to avoid unexpected results caused by concurrent access. The protected part of the program is called the critical section.

A critical section can guard a single shared resource or a whole set of shared resources, such as access to a database, operations on a shared data structure, use of an I/O device, or calls on connections in a connection pool.

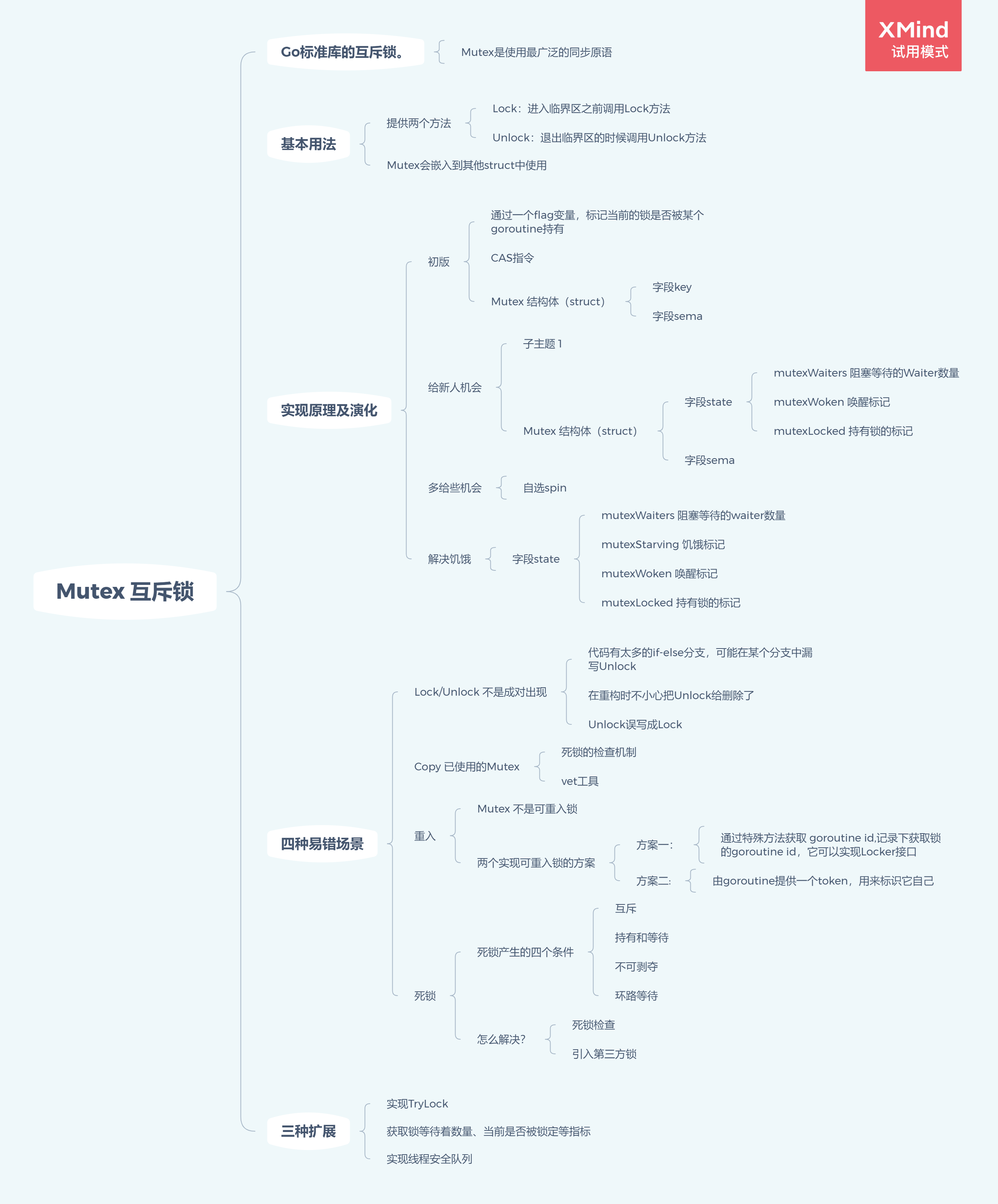

Mutex (mutual exclusion lock)

Mutex is the most widely used synchronization primitive (also called a concurrency primitive).

Scenarios for synchronization primitives

- Shared resources: reading and writing shared resources concurrently causes data races, so Mutex, RWMutex, and other concurrency primitives are needed to protect them.

- Task scheduling: goroutines must execute according to some rule, with waiting or dependency relationships between them; WaitGroup or Channel is usually used.

- Messaging: exchanging information and data safely between goroutines, usually with Channel.

Note:

Mutex itself does not record which goroutine holds the lock, and Unlock does not check the caller, so any goroutine can call Unlock and release the mutex, even one that never locked it.

When using Mutex, you must make sure that a goroutine never releases a lock it does not hold. Follow the principle "whoever locks it, unlocks it".
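The following sketch shows why the rule has to be enforced by the programmer: one goroutine locks the mutex and a different goroutine unlocks it, and the runtime accepts this. It assumes Go 1.18 or later for `TryLock`; the helper name `unlockFromOtherGoroutine` is hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// unlockFromOtherGoroutine locks mu in the calling goroutine and unlocks it
// from a different goroutine. sync.Mutex records no owner, so this runs
// without error, which is why "who locks, unlocks" is only a convention.
func unlockFromOtherGoroutine() bool {
	var mu sync.Mutex
	mu.Lock()

	done := make(chan struct{})
	go func() {
		mu.Unlock() // released by a goroutine that never called Lock
		close(done)
	}()
	<-done

	// The mutex is free again, so TryLock (Go 1.18+) succeeds.
	ok := mu.TryLock()
	if ok {
		mu.Unlock()
	}
	return ok
}

func main() {
	fmt.Println(unlockFromOtherGoroutine()) // true
}
```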

Basic Usage

Mutex provides two methods, Lock and Unlock: call Lock before entering the critical section and Unlock after leaving it.

When a goroutine calls Lock while the lock is held by another goroutine, the call blocks until the lock is released and the calling goroutine has acquired ownership of it.

```go
func (m *Mutex) Lock()
func (m *Mutex) Unlock()
```

After the lock is released, which of the waiting goroutines gets the Mutex first?

Reference: https://golang.org/src/sync/mutex.go

Mutex has two modes: normal mode and starvation mode.

- In normal mode, waiters queue in FIFO order. If no newly arriving goroutine competes with the waiter at the head of the queue, that waiter gets the lock; if new goroutines do compete, they have a good chance of winning, because they are already running on a CPU.

- If a waiter fails to acquire the lock for more than 1 ms, it switches the Mutex to starvation mode. In starvation mode, ownership of the lock is handed directly from the unlocking goroutine to the waiter at the head of the queue, and newly arriving goroutines do not try to take the lock; they go straight to the tail of the queue.

- When a waiter receives the lock in starvation mode, it switches the Mutex back to normal mode if either of the following holds:

  - It is the last waiter in the queue.

  - It waited for the lock for less than 1 ms.

CAS

CAS (compare-and-swap) is the foundation of Mutex and the other synchronization primitives.

CAS is an atomic instruction supported by the CPU; its atomicity is guaranteed at the hardware level.

The CAS instruction compares a given value with the value at a memory address and, if they are equal, replaces the value at that address with a new value. Atomicity guarantees that the comparison is always made against the latest value: if another thread modified the value in the meantime, the CAS fails and reports failure.

Evolution of the Mutex implementation

First Edition

A flag variable marks whether the lock is currently held by a goroutine.

If the flag is 1, the lock is already held and other competing goroutines can only wait.

If the flag is 0, a goroutine can set it to 1 with CAS, marking the lock as held by the current goroutine.

```go
// CAS operation; the sync/atomic package did not exist yet at that time.
// These three functions were provided by the runtime, so they have no Go body here.
func cas(val *int32, old, new int32) bool

func semacquire(*int32)

func semrelease(*int32)

// The Mutex struct contains two fields
type Mutex struct {
	key  int32 // whether the lock is held (> 0 means held)
	sema int32 // semaphore used to block and wake goroutines
}

// xadd adds delta to *val, retrying the CAS until it succeeds,
// and returns the new value.
func xadd(val *int32, delta int32) (new int32) {
	for {
		v := *val
		if cas(val, v, v+delta) {
			return v + delta
		}
	}
	panic("unreached")
}

// Lock requests the lock.
func (m *Mutex) Lock() {
	// Increment key; if the result is 1, the lock was free and is now ours.
	// If it is greater than 1, the mutex is already held.
	if xadd(&m.key, 1) == 1 {
		return
	}
	// Did not get the lock: sleep on the semaphore.
	// When woken, this goroutine already owns the lock.
	semacquire(&m.sema)
}

func (m *Mutex) Unlock() {
	// Decrement key; if the result is 0 there are no waiters.
	// Otherwise, a waiter needs to be woken.
	if xadd(&m.key, -1) == 0 {
		return
	}
	semrelease(&m.sema) // wake one blocked goroutine
}
```

The Mutex struct contains two fields:

- key: a flag indicating whether the lock is held by a goroutine. If key is greater than or equal to 1, the lock is held. Because every Lock call increments it, key also counts waiters: key minus 1 is the number of goroutines waiting for the lock.

- sema: a semaphore used to put waiting goroutines to sleep and to wake them up.

Problems with the first edition:

Goroutines requesting the lock acquire it strictly in queue order. This looks fair, but it is not optimal for performance: if the lock could be handed to a goroutine that is currently running on a CPU, no context switch would be needed, which can perform better under high concurrency.

Giving new goroutines a chance

Adjusted on June 30, 2011

The Mutex implementation:

```go
type Mutex struct {
	state int32
	sema  uint32
}

const (
	mutexLocked      = 1 << iota // 1: the mutex is locked
	mutexWoken                   // 2: a woken goroutine is competing for the lock
	mutexWaiterShift = iota      // 2: the waiter count starts at bit 2
)
```

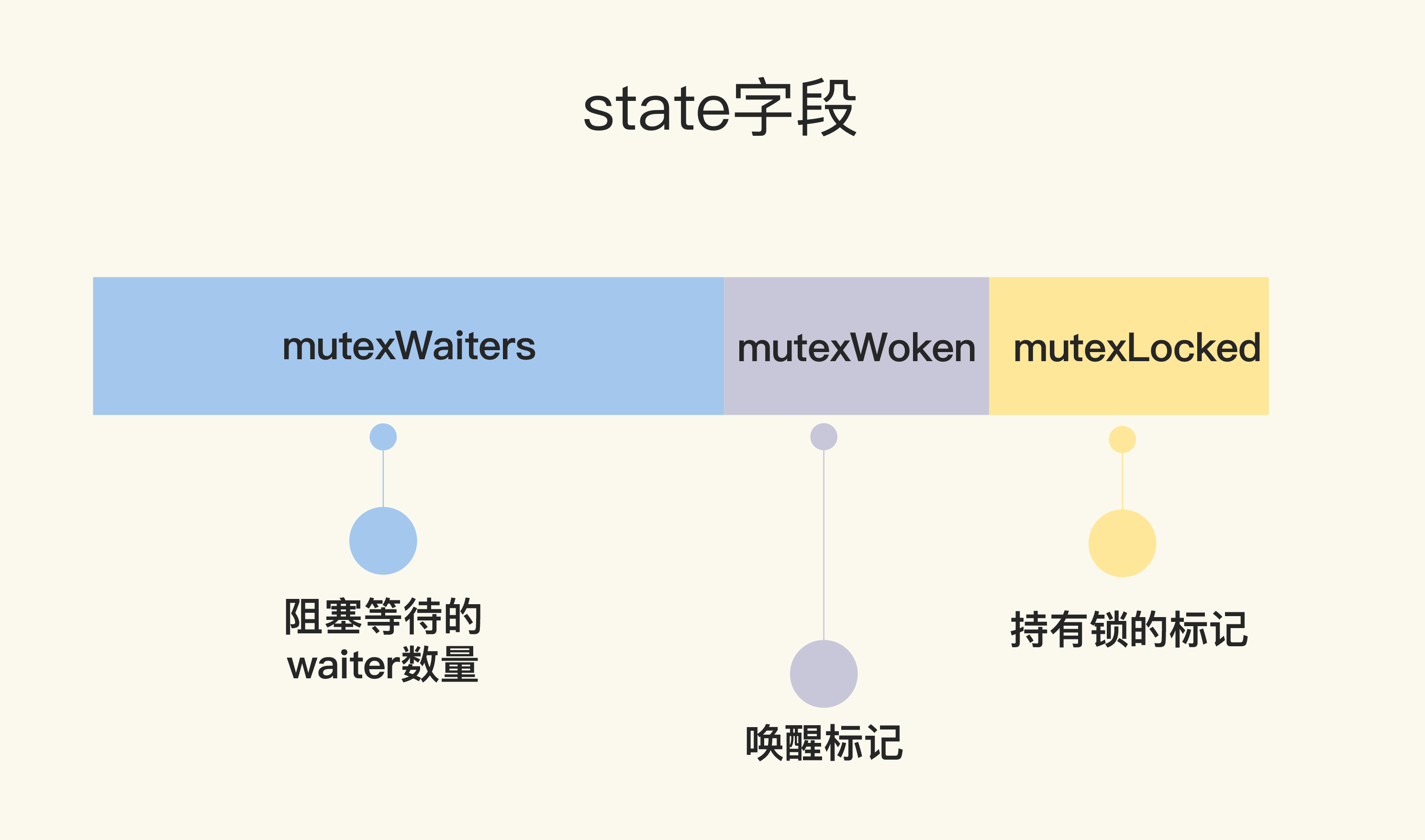

Mutex still contains two fields, but the first field has been renamed to state and its meaning has changed.

state is a composite field that packs several pieces of information into one word, so the mutex needs as little memory as possible:

- The first (lowest) bit indicates whether the lock is held.

- The second bit indicates whether a woken goroutine exists.

- The remaining bits hold the number of goroutines waiting for the lock (at most 2^(32-2)-1, which covers the vast majority of needs).

Lock method

```go
func (m *Mutex) Lock() {
	// Fast path: lucky case, grab an unlocked mutex directly
	if atomic.CompareAndSwapInt32(&m.state, 0, mutexLocked) {
		return
	}

	awoke := false
	for {
		old := m.state
		new := old | mutexLocked // tentatively set the locked bit, hoping to grab the lock below
		if old&mutexLocked != 0 {
			// Already locked: increment the waiter count instead
			// (1<<mutexWaiterShift adds one to the bits above bit 1)
			new = old + 1<<mutexWaiterShift
		}
		if awoke {
			// This goroutine was woken, so clear the woken flag in either case
			new &^= mutexWoken
		}
		if atomic.CompareAndSwapInt32(&m.state, old, new) { // CAS in the new state
			if old&mutexLocked == 0 { // the old state was unlocked: we now hold the lock
				break
			}
			runtime.Semacquire(&m.sema) // otherwise sleep on the semaphore until woken
			awoke = true
		}
	}
}
```

There are two kinds of goroutines requesting the lock: new goroutines requesting it for the first time, and woken goroutines that were waiting for it.

The lock also has two states: locked and unlocked.

| Requesting goroutine | Lock currently held | Lock currently free |
|---|---|---|
| New goroutine | waiter++; sleep | Acquire the lock |
| Woken goroutine | waiter++; clear the mutexWoken flag; sleep again and rejoin the wait queue | Clear mutexWoken; acquire the lock |

Releasing the lock, the Unlock method:

```go
func (m *Mutex) Unlock() {
	// Fast path: drop lock bit. state changes here, and the lock is released
	new := atomic.AddInt32(&m.state, -mutexLocked)
	if (new+mutexLocked)&mutexLocked == 0 {
		panic("sync: unlock of unlocked mutex") // the mutex was not locked
	}

	old := new
	for {
		// Return directly if:
		// - there are no waiters (old>>mutexWaiterShift == 0), or
		// - a goroutine has already been woken, or another goroutine
		//   has already grabbed the lock (old&(mutexLocked|mutexWoken) != 0)
		if old>>mutexWaiterShift == 0 || old&(mutexLocked|mutexWoken) != 0 {
			return
		}
		// Take one waiter off the count and set the woken flag
		new = (old - 1<<mutexWaiterShift) | mutexWoken
		if atomic.CompareAndSwapInt32(&m.state, old, new) {
			runtime.Semrelease(&m.sema) // wake one waiter
			return
		}
		old = m.state
	}
}
```

Changes compared with the original design:

New goroutines also get a chance to acquire the lock, breaking strict first-come, first-served ordering.

Giving more chances

February 20, 2015

If a new goroutine or a woken goroutine fails to acquire the lock on its first attempt, it spins, repeatedly checking whether the lock has been released. After a bounded number of spins (decided by the runtime), it falls back to the original logic.

```go
func (m *Mutex) Lock() {
	// Fast path: lucky case, the lock was free
	if atomic.CompareAndSwapInt32(&m.state, 0, mutexLocked) {
		if raceenabled {
			raceAcquire(unsafe.Pointer(m))
		}
		return
	}

	awoke := false
	iter := 0
	for { // new and woken goroutines alike keep trying to get the lock
		old := m.state           // save the current state
		new := old | mutexLocked // the new state sets the locked bit
		if old&mutexLocked != 0 { // the lock has not been released yet
			// Check whether spinning is still allowed. When the critical section
			// is short and the lock is released quickly, a spinning goroutine can
			// grab it without going through sleep and wake-up.
			if runtime_canSpin(iter) {
				// Set the woken flag while spinning. Once it is set, Unlock sees
				// a woken goroutine and does not wake another waiter, which would
				// only add more goroutines competing for the lock.
				if !awoke && old&mutexWoken == 0 && old>>mutexWaiterShift != 0 &&
					atomic.CompareAndSwapInt32(&m.state, old, old|mutexWoken) {
					awoke = true
				}
				runtime_doSpin()
				iter++
				continue
			}
			new = old + 1<<mutexWaiterShift
		}
		if awoke {
			if new&mutexWoken == 0 {
				panic("sync: inconsistent mutex state")
			}
			new &^= mutexWoken // the new state clears the woken flag
		}
		if atomic.CompareAndSwapInt32(&m.state, old, new) {
			if old&mutexLocked == 0 { // the old state was unlocked, so we now hold the lock
				break
			}
			runtime_Semacquire(&m.sema) // sleep
			awoke = true
			iter = 0
		}
	}

	if raceenabled {
		raceAcquire(unsafe.Pointer(m))
	}
}
```

Because new goroutines compete too, they may win the lock every time; in extreme cases, waiting goroutines never acquire it. This is starvation.

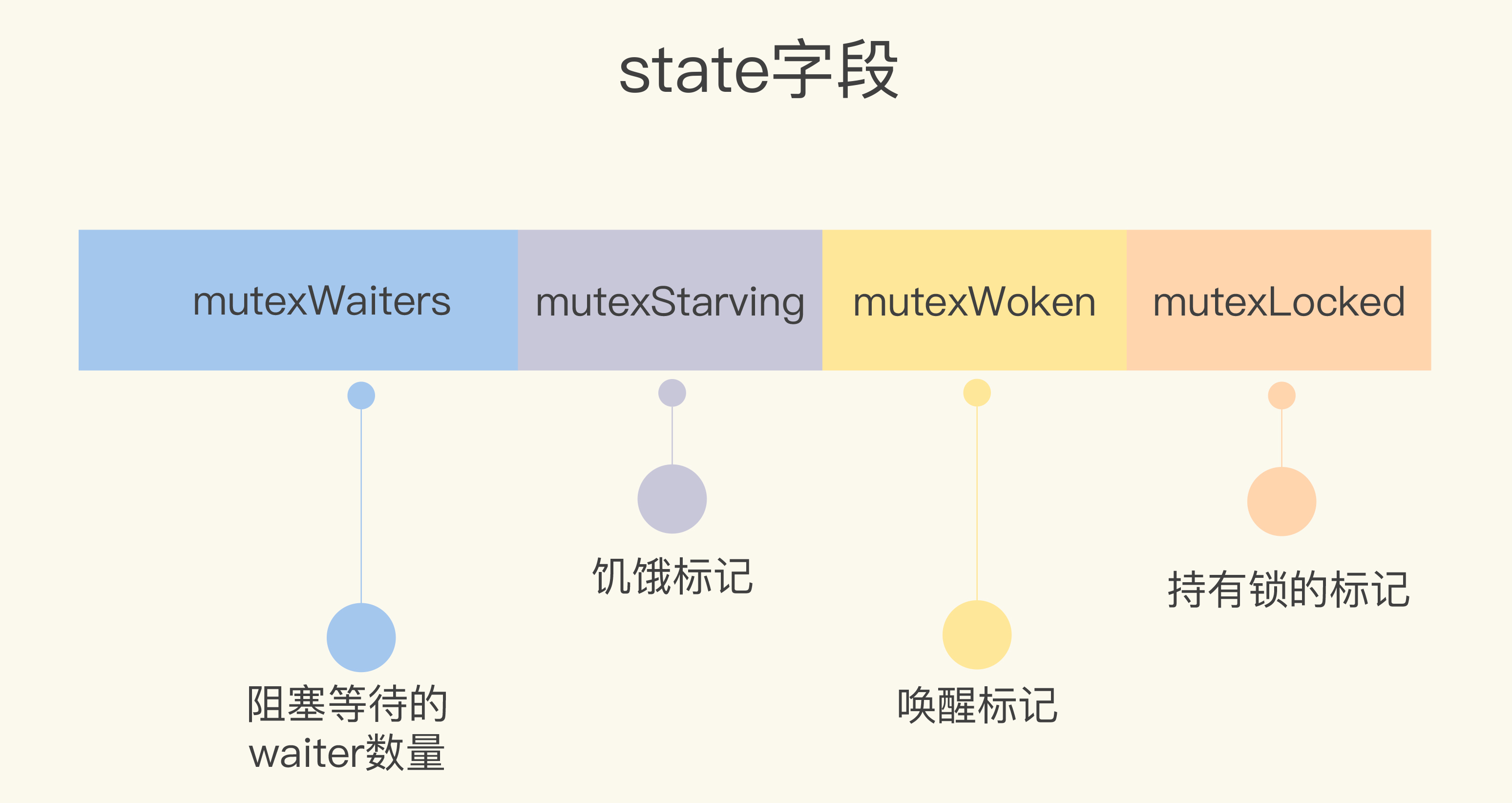

Fixing starvation

In 2016, Mutex gained a starvation mode (released in Go 1.9), which makes the lock fairer by bounding unfair waiting with a 1 ms threshold. The change also fixed a significant bug: a woken goroutine that lost the race was always put at the end of the wait queue, which made waits even more unfair.

In 2018, the Go developers split the fast path and the slow path into separate methods so that the fast path can be inlined (for more on inlining, see how Go 1.14 improved performance by inlining defer).

In 2019, when Unlock hands the lock to a waiter in starvation mode, the scheduler runs that waiter with higher priority, so the new owner gets to run sooner.

```go
func (m *Mutex) Lock() {
	// Fast path: lucky case, grab an unlocked mutex
	if atomic.CompareAndSwapInt32(&m.state, 0, mutexLocked) {
		if race.Enabled {
			race.Acquire(unsafe.Pointer(m))
		}
		return
	}
	// Slow path: spin, queue up, or take over the lock in starvation mode
	m.lockSlow()
}

func (m *Mutex) lockSlow() {
	var waitStartTime int64 // when this goroutine started waiting (0 = not yet)
	starving := false       // whether this goroutine is starving
	awoke := false          // whether this goroutine was woken
	iter := 0               // number of spins so far
	old := m.state          // snapshot of the current lock state
	for {
		// Spin only if both conditions hold:
		// 1. The mutex is locked but not starving
		//    (old&(mutexLocked|mutexStarving) == mutexLocked). In starvation
		//    mode spinning is useless: ownership goes straight to the first waiter.
		// 2. runtime_canSpin allows it: multicore, low load, spin limit not reached.
		// Keep spinning until the lock is released, the mutex starves,
		// or spinning is no longer allowed.
		if old&(mutexLocked|mutexStarving) == mutexLocked && runtime_canSpin(iter) {
			// If no goroutine is marked woken yet, set the woken flag and remember
			// that we did, so Unlock does not wake other blocked goroutines
			if !awoke && old&mutexWoken == 0 && old>>mutexWaiterShift != 0 &&
				atomic.CompareAndSwapInt32(&m.state, old, old|mutexWoken) {
				awoke = true
			}
			runtime_doSpin()
			iter++
			old = m.state
			continue
		}
		new := old
		// Only try to take the lock if the mutex is not starving;
		// in starvation mode new arrivals must queue
		if old&mutexStarving == 0 {
			new |= mutexLocked
		}
		// If locked or starving, this goroutine will queue: one more waiter
		if old&(mutexLocked|mutexStarving) != 0 {
			new += 1 << mutexWaiterShift
		}
		// If this goroutine is starving and the mutex is still locked,
		// switch the mutex to starvation mode
		if starving && old&mutexLocked != 0 {
			new |= mutexStarving
		}
		if awoke {
			if new&mutexWoken == 0 {
				throw("sync: inconsistent mutex state")
			}
			// The new state clears the woken flag
			new &^= mutexWoken
		}
		// Try to install the new state
		if atomic.CompareAndSwapInt32(&m.state, old, new) {
			// The old state was neither locked nor starving: the lock is ours
			if old&(mutexLocked|mutexStarving) == 0 {
				break // locked the mutex with CAS
			}
			// Otherwise wait. If we have waited before, queue at the front (LIFO)
			queueLifo := waitStartTime != 0
			if waitStartTime == 0 {
				waitStartTime = runtime_nanotime()
			}
			// Block until woken
			runtime_SemacquireMutex(&m.sema, queueLifo, 1)
			// After waking, check whether this goroutine has waited too long
			starving = starving || runtime_nanotime()-waitStartTime > starvationThresholdNs
			old = m.state
			// In starvation mode the lock was handed to us: take it and return
			if old&mutexStarving != 0 {
				if old&(mutexLocked|mutexWoken) != 0 || old>>mutexWaiterShift == 0 {
					throw("sync: inconsistent mutex state")
				}
				// Set the locked bit and remove ourselves from the waiter count
				delta := int32(mutexLocked - 1<<mutexWaiterShift)
				if !starving || old>>mutexWaiterShift == 1 {
					// Exit starvation mode.
					// Critical to do it here and consider wait time.
					// Starvation mode is so inefficient, that two goroutines
					// can go lock-step infinitely once they switch mutex
					// to starvation mode.
					delta -= mutexStarving
				}
				atomic.AddInt32(&m.state, delta)
				break
			}
			// Normal mode: we were woken and compete for the lock again
			awoke = true
			iter = 0
		} else {
			old = m.state
		}
	}

	if race.Enabled {
		race.Acquire(unsafe.Pointer(m))
	}
}

func (m *Mutex) Unlock() {
	if race.Enabled {
		_ = m.state
		race.Release(unsafe.Pointer(m))
	}

	// Fast path: drop lock bit.
	new := atomic.AddInt32(&m.state, -mutexLocked)
	if new != 0 {
		// Outlined slow path to allow inlining the fast path.
		// To hide unlockSlow during tracing we skip one extra frame when tracing GoUnblock.
		m.unlockSlow(new)
	}
}

func (m *Mutex) unlockSlow(new int32) {
	if (new+mutexLocked)&mutexLocked == 0 {
		throw("sync: unlock of unlocked mutex")
	}
	if new&mutexStarving == 0 {
		// Normal mode: wake a waiter only if there is one and no goroutine
		// is already woken, holding the lock, or starving
		old := new
		for {
			if old>>mutexWaiterShift == 0 || old&(mutexLocked|mutexWoken|mutexStarving) != 0 {
				return
			}
			new = (old - 1<<mutexWaiterShift) | mutexWoken
			if atomic.CompareAndSwapInt32(&m.state, old, new) {
				runtime_Semrelease(&m.sema, false, 1)
				return
			}
			old = m.state
		}
	} else {
		// In starvation mode, hand ownership directly to the first waiter in the queue
		runtime_Semrelease(&m.sema, true, 1)
	}
}
```

Four common Mutex mistakes

Lock and Unlock not used in pairs

If Lock and Unlock calls are not paired, the result is either a deadlock or a crash from calling Unlock on an unlocked Mutex ("sync: unlock of unlocked mutex").

Common cases:

- The code has many if-else branches, and an Unlock is missing from one of them (a real case: a missed Unlock in Kubernetes).

- A Lock or Unlock call was deleted during refactoring.

- Unlock was mistakenly written as Lock (a real case in google grpc).
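A common way to avoid the first two mistakes is to place `defer mu.Unlock()` immediately after `mu.Lock()`, so every return path releases the lock. The sketch below uses a hypothetical `Inventory` type whose method returns from several branches:

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

type Inventory struct {
	mu    sync.Mutex
	stock map[string]int
}

// Take returns from several branches; the deferred Unlock right after Lock
// guarantees that every one of them releases the mutex.
func (inv *Inventory) Take(item string, n int) error {
	inv.mu.Lock()
	defer inv.mu.Unlock()

	have, ok := inv.stock[item]
	if !ok {
		return errors.New("unknown item")
	}
	if have < n {
		return errors.New("not enough stock")
	}
	inv.stock[item] = have - n
	return nil
}

func main() {
	inv := &Inventory{stock: map[string]int{"apple": 2}}
	fmt.Println(inv.Take("pear", 1))  // unknown item
	fmt.Println(inv.Take("apple", 5)) // not enough stock
	fmt.Println(inv.Take("apple", 2)) // <nil>
}
```

If any early return skipped the Unlock, the next call to Take would block forever.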

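Copying a Mutex that is in use

Synchronization primitives in package sync must not be copied after first use.

Mutex is a stateful object: its state field records the lock's state, so a copy carries that state with it. For example, a copy of a locked Mutex is already locked.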

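```go
package main

import (
	"fmt"
	"sync"
)

type Counter struct {
	sync.Mutex
	Count int
}

func main() {
	var c Counter
	c.Lock()
	defer c.Unlock()
	c.Count++
	foo(c) // copies the Counter, including its Mutex, which is currently locked
}

// Counter is passed by value here, so foo receives a copy of the locked Mutex
func foo(c Counter) {
	c.Lock() // blocks forever: the copied Mutex is already locked
	defer c.Unlock()
	fmt.Println("in foo")
}
```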

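Detection: at run time this program fails with "fatal error: all goroutines are asleep - deadlock!". The go vet tool's copylocks check catches the mistake statically by reporting that a lock is passed by value.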

Reentrancy

Reentrant lock:

When a thread requests a lock that no other thread owns, it acquires the lock; if another thread then requests the lock, that thread blocks and waits. However, if the thread that already owns the lock requests it again, it does not block but returns successfully.

Mutex is not reentrant:

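```go
package main

import (
	"fmt"
	"sync"
)

func foo(l sync.Locker) {
	fmt.Println("in foo")
	l.Lock()
	bar(l)
	l.Unlock()
}

func bar(l sync.Locker) {
	l.Lock() // deadlocks: l is already held by this same goroutine in foo
	fmt.Println("in bar")
	l.Unlock()
}

func main() {
	l := &sync.Mutex{}
	foo(l)
}
```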

How to implement a reentrant lock

- Obtain the goroutine id with a hack and record the id of the goroutine holding the lock. This approach can implement the Locker interface.

- Have the goroutine pass a token identifying itself when calling Lock/Unlock, instead of hacking out the goroutine id. However, this does not satisfy the Locker interface.

Deadlock

A deadlock occurs when two or more processes, competing for shared resources, end up waiting for each other so that none of them can proceed without outside intervention. The system is then said to be deadlocked. Deadlock requires four conditions to hold at the same time:

- Mutual exclusion: at least one resource is held exclusively; other threads must wait until it is released.

- Hold and wait: a goroutine holds one resource while requesting resources held by other goroutines.

- No preemption: a resource can only be released by the goroutine holding it.

- Circular wait: there is a set of waiting processes P = {P1, P2, ..., PN} in which P1 waits for a resource held by P2, P2 waits for one held by P3, and so on, until PN waits for one held by P1, forming a cycle.