This article mainly introduces the use of MongoDB's bulkWrite() method by comparing batch and non batch operations. Incidentally, it is compared with relational database MySQL to compare the efficiency of these two different types of databases. If you just want to learn how to use bulkWrite(), just look at the first part.

Test environment: win7 ultimate, 16G memory, i3 processor, MongoDB3.0.2, mysql5.0

1, MongoDB batch operation

MongoDB's data operations are divided into Read Operations and Write Operations. Read Operations includes query operations and Write Operations includes delete, insert, replace and update operations. MongoDB provides the client to execute Write Operations in bulk, that is, batch Write Operations. In the java driver, corresponding to the bulkWrite() method of MongoCollection, let's take a look at the method signature:

BulkWriteResult com.mongodb.client.MongoCollection.bulkWrite(List<? extends WriteModel<? extends Document>> requests)

This method requires to pass in a List collection. The element type in the collection is WriteModel, which represents a base class model that can be used for batch write operations. It has the following subclasses: DeleteManyModel, DeleteOneModel, InsertOneModel, ReplaceOneModel, UpdateManyModel, UpdateOneModel. From the name, it corresponds to delete, insert, replace Update several operations. This method returns a BulkWriteResult object, which represents a successful batch write operation result and encapsulates the status information of the operation result, such as the number of records inserted, updated and deleted.

1. Insert operation

(1) . batch insert

The code is as follows: this method receives a set parameter that contains the Document object to be inserted, traverses the collection, constructs the InsertOneModel object using Document, each InsertOneModel instance represents an operation to insert a single Document, then adds the instance to the List set, calling bulkWrite() method. Pass in the list collection that stores all insertion operations to complete batch insertion.

public void bulkWriteInsert(List<Document> documents){

List<WriteModel<Document>> requests = new ArrayList<WriteModel<Document>>();

for (Document document : documents) {

//Construct an operation model for inserting a single document

InsertOneModel<Document> iom = new InsertOneModel<Document>(document);

requests.add(iom);

}

BulkWriteResult bulkWriteResult = collection.bulkWrite(requests);

System.out.println(bulkWriteResult.toString());

}Test: let's test it through a main function. First, we construct 100 thousand Product entity objects, convert them into json strings using a tool class, then parse them into Document objects, save them into a list collection, and then invoke the method written above to test the insertion time of the 100 thousand objects.

TestMongoDB instance = TestMongoDB.getInstance();

ArrayList<Document> documents = new ArrayList<Document>();

for (int i = 0; i < 100000; i++) {

Product product = new Product(i,"book","Kite Runner",22.5);

//Convert java objects to json strings

String jsonProduct = JsonParseUtil.getJsonString4JavaPOJO(product);

//Parsing json strings into Document objects

Document docProduct = Document.parse(jsonProduct);

documents.add(docProduct);

}

System.out.println("Start inserting data...");

long startInsert = System.currentTimeMillis();

instance.bulkWriteInsert(documents);

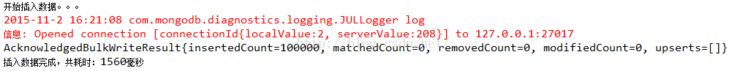

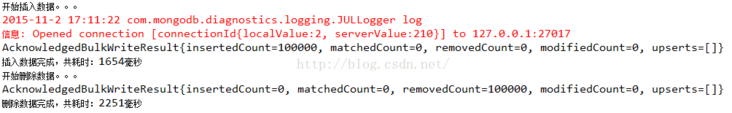

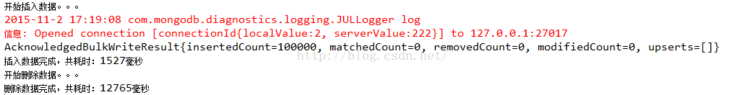

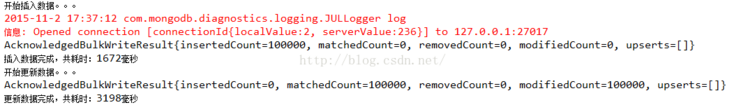

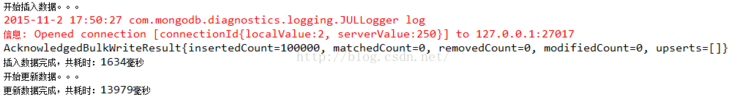

System.out.println("Insert data completed, total time:"+(System.currentTimeMillis() - startInsert)+"millisecond");Results: 1560 MS, multiple tests were basically about 1.5 seconds

(2) . insert item by item

Next, by inserting 100000 data in non batch, the method is as follows:

public void insertOneByOne(List<Document> documents) throws ParseException{

for (Document document : documents){

collection.insertOne(document);

}

}Test: 100000 pieces of data

System.out.println("Start inserting data...");

long startInsert = System.currentTimeMillis();

instance.insertOneByOne(documents);

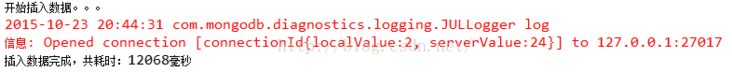

System.out.println("Insert data completed, total time:"+(System.currentTimeMillis() - startInsert)+"millisecond");Results: 12068 MS, the gap was very large. It can be seen that the efficiency of MongoDB batch insertion is much higher than that of item by item data insertion.

Supplement:

The insertMany() method of MongoCollection is equivalent to the bulkWrite() method. The test time is about the same and no mapping is needed.

public void insertMany(List<Document> documents) throws ParseException{

//Equivalent to bulkWrite() method

collection.insertMany(documents);

}2. Delete operation

(1) . batch deletion

If you master batch insertion and batch deletion, you can draw a ladle according to the gourd. The construction of DeleteOneModel requires a Bson type parameter to represent a deletion operation. Here, the subclass Document of Bson class is used. Here's the point. The deletion condition here uses the Document's_ ID field, which is automatically generated after the Document is inserted into the database. Before inserting the database, document.get("_id") is null. If other conditions such as productId are used, the index should be added to the productId field after the Document is inserted into the collection

collection.createIndex(new Document("productId", 1));Because with the increase of the amount of collection data, the search will be more time-consuming. Adding an index is to improve the search efficiency and speed up the deletion efficiency. In addition, it is worth mentioning that DeleteOneModel means to delete at most one record matching the criteria, and DeleteManyModel means to delete all records matching the criteria. To prevent deleting multiple records at once, DeleteOneModel is used here to ensure that only one record is deleted in one operation. Of course, it is impossible to match multiple records because_ id is unique.

public void bulkWriteDelete(List<Document> documents){

List<WriteModel<Document>> requests = new ArrayList<WriteModel<Document>>();

for (Document document : documents) {

//Delete condition

Document queryDocument = new Document("_id",document.get("_id"));

//Construct an operation model for deleting a single document,

DeleteOneModel<Document> dom = new DeleteOneModel<Document>(queryDocument);

requests.add(dom);

}

BulkWriteResult bulkWriteResult = collection.bulkWrite(requests);

System.out.println(bulkWriteResult.toString());

}Test: 100000 pieces of data

System.out.println("Start deleting data...");

long startDelete = System.currentTimeMillis();

instance.bulkWriteDelete(documents);

System.out.println("Data deletion completed, total time:"+(System.currentTimeMillis() - startDelete)+"millisecond");Result: 2251 MS

(2) . delete item by item

Let's take a look at deletion in non batch

public void deleteOneByOne(List<Document> documents){

for (Document document : documents) {

Document queryDocument = new Document("_id",document.get("_id"));

DeleteResult deleteResult = collection.deleteOne(queryDocument);

}

}Test: 100000 pieces of data

System.out.println("Start deleting data...");

long startDelete = System.currentTimeMillis();

instance.deleteOneByOne(documents);

System.out.println("Data deletion completed, total time:"+(System.currentTimeMillis() - startDelete)+"millisecond");Results: 12765 milliseconds, much lower than the efficiency of batch deletion

3. Update operation

(1) . batch update

Let's take another look at batch update. There are two types: UpdateOneModel and UpdateManyModel. The difference is that the former updates one record of matching conditions, and the latter updates all records of matching conditions. For ReplaceOneModel, it means replacement operation, which is also classified as update. Now take UpdateOneModel as an example. The UpdateOneModel construction method receives three parameters. The first parameter is the query criteria, the second parameter is the content to be updated, and the third parameter is optional UpdateOptions. If it is not filled in, it will automatically help you create a new one, which represents the action when the batch update operation does not match the query criteria. Its user attribute value defaults to false and does nothing, true means to insert a new Document into the database. The new Document is a combination of query Document and update Document, but if it is a replacement operation, the new Document is the replacement Document.

There will be a question here: is this different from the result of replacement after matching the query criteria? The difference is_ id field, the name of the new Document inserted when the query criteria are not matched_ The id is new, and the replacement operation is successfully performed_ The id is old.

public void bulkWriteUpdate(List<Document> documents){

List<WriteModel<Document>> requests = new ArrayList<WriteModel<Document>>();

for (Document document : documents) {

//update criteria

Document queryDocument = new Document("_id",document.get("_id"));

//Update the content and change the price of the book

Document updateDocument = new Document("$set",new Document("price","30.6"));

//Construct an operation model to update a single document

UpdateOneModel<Document> uom = new UpdateOneModel<Document>(queryDocument,updateDocument,new UpdateOptions().upsert(false));

//UpdateOptions represents the action when the batch update operation does not match the query criteria. The default is false and does nothing. When true, it means to insert a new Document into the database. It is a combination of the query part and the update part

requests.add(uom);

}

BulkWriteResult bulkWriteResult = collection.bulkWrite(requests);

System.out.println(bulkWriteResult.toString());

}Test: 100000 pieces of data

System.out.println("Start updating data...");

long startUpdate = System.currentTimeMillis();

instance.bulkWriteUpdate(documents);

System.out.println("Data update completed, total time:"+(System.currentTimeMillis() - startUpdate)+"millisecond");Result: 3198 Ms

(2) . update item by item

Compare updates in non batch

public void updateOneByOne(List<Document> documents){

for (Document document : documents) {

Document queryDocument = new Document("_id",document.get("_id"));

Document updateDocument = new Document("$set",new Document("price","30.6"));

UpdateResult UpdateResult = collection.updateOne(queryDocument, updateDocument);

}

}Test: 100000 pieces of data

System.out.println("Start updating data...");

long startUpdate = System.currentTimeMillis();

instance.updateOneByOne(documents);

System.out.println("Data update completed, total time:"+(System.currentTimeMillis() - startUpdate)+"millisecond");Results: 13979 MS, which is much less efficient than batch update

4. Mixed batch operation

The bulkWrite() method can batch process different types of write operations. The code is as follows:

public void bulkWriteMix(){

List<WriteModel<Document>> requests = new ArrayList<WriteModel<Document>>();

InsertOneModel<Document> iom = new InsertOneModel<Document>(new Document("name","kobe"));

UpdateManyModel<Document> umm = new UpdateManyModel<Document>(new Document("name","kobe"),

new Document("$set",new Document("name","James")),new UpdateOptions().upsert(true));

DeleteManyModel<Document> dmm = new DeleteManyModel<Document>(new Document("name","James"));

requests.add(iom);

requests.add(umm);

requests.add(dmm);

BulkWriteResult bulkWriteResult = collection.bulkWrite(requests);

System.out.println(bulkWriteResult.toString());

}

Note: updateMany() and deleteMany() are different from insertMany(). They are not batch operations, but represent updating (deleting) all data matching conditions.

2, Performance comparison with MySQL

1. Insert operation

(1) , batch insert

Like MongoDB, the Product entity object is also inserted. The code is as follows

public void insertBatch(ArrayList<Product> list) throws Exception{

Connection conn = DBUtil.getConnection();

try {

PreparedStatement pst = conn.prepareStatement("insert into t_product value(?,?,?,?)");

int count = 1;

for (Product product : list) {

pst.setInt(1, product.getProductId());

pst.setString(2, product.getCategory());

pst.setString(3, product.getName());

pst.setDouble(4, product.getPrice());

pst.addBatch();

if(count % 1000 == 0){

pst.executeBatch();

pst.clearBatch();//Every 1000 sql batches are processed, and then the parameters in PreparedStatement are set to be empty, which can also improve efficiency and prevent excessive parameter accumulation and transaction timeout, but the actual test effect is not obvious

}

count++;

}

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

}

DBUtil.closeConnection(conn);

}JDBC automatically submits transactions by default. Remember to add the following line of code after obtaining the connection to turn off automatic transaction submission.

connection.setAutoCommit(false);

Test: 100000 pieces of data

public static void main(String[] args) throws Exception {

TestMysql test = new TestMysql();

ArrayList<Product> list = new ArrayList<Product>();

for (int i = 0; i < 1000; i++) {

Product product = new Product(i, "book", "Kite Runner", 20.5);

list.add(product);

}

System.out.println("MYSQL Start inserting data...");

long insertStart = System.currentTimeMillis();

test.insertBatch(list);

System.out.println("MYSQL Insert data completed, total time:"+(System.currentTimeMillis() - insertStart)+"millisecond");

}Results: 7389 milliseconds, about 7 seconds after multiple tests

(2) . insert item by item

Let's look at mysql insert one by one. The code is as follows:

public void insertOneByOne(ArrayList<Product> list) throws Exception{

Connection conn = DBUtil.getConnection();

try {

for (Product product : list) {

PreparedStatement pst = conn.prepareStatement("insert into t_product value(?,?,?,?)");

pst.setInt(1, product.getProductId());

pst.setString(2, product.getCategory());

pst.setString(3, product.getName());

pst.setDouble(4, product.getPrice());

pst.executeUpdate();

//conn.commit();// With this sentence, each insert commits a transaction, and the result will be very time-consuming

}

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

}

DBUtil.closeConnection(conn);

}Test: 100000 records

System.out.println("MYSQL Start inserting data...");

long insertStart = System.currentTimeMillis();

test.insertOneByOne(list);

System.out.println("MYSQL Insert data completed, total time:"+(System.currentTimeMillis() - insertStart)+"millisecond");Results: 8921 milliseconds, more than 1 second slower than batch.

2. Delete operation

(1) , batch delete

The where condition for deletion is productId. There is no primary key added when creating the table. The deletion is abnormally slow. After checking for a long time, I don't know why. Remember to add a primary key. The primary key has an index by default, so that all records can be matched faster.

public void deleteBatch(ArrayList<Product> list) throws Exception{

Connection conn = DBUtil.getConnection();

try {

PreparedStatement pst = conn.prepareStatement("delete from t_product where id = ?");//Query by primary key, otherwise the whole table traversal is very slow

int count = 1;

for (Product product : list) {

pst.setInt(1, product.getProductId());

pst.addBatch();

if(count % 1000 == 0){

pst.executeBatch();

pst.clearBatch();

}

count++;

}

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

}

DBUtil.closeConnection(conn);

}Test: 100000 pieces of data

System.out.println("MYSQL Start deleting data...");

long deleteStart = System.currentTimeMillis();

test.deleteBatch(list);

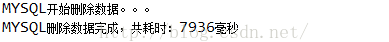

System.out.println("MYSQL Data deletion completed, total time:"+(System.currentTimeMillis() - deleteStart)+"millisecond");Result: 7936 Ms

(2) . delete item by item

The code is as follows

public void deleteOneByOne(ArrayList<Product> list) throws Exception{

Connection conn = DBUtil.getConnection();

PreparedStatement pst = null;

try {

for (Product product : list) {

pst = conn.prepareStatement("delete from t_product where id = ?");

pst.setInt(1, product.getProductId());

pst.executeUpdate();

//conn.commit();// With this sentence, each insert commits a transaction, and the result will be very time-consuming

}

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

}

DBUtil.closeConnection(conn);

}Test: 100000 pieces of data

System.out.println("MYSQL Start deleting data...");

long deleteStart = System.currentTimeMillis();

test.deleteOneByOne(list);

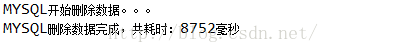

System.out.println("MYSQL Data deletion completed, total time:"+(System.currentTimeMillis() - deleteStart)+"millisecond");Results: 8752 MS, about one second slower than batch deletion

3. Update operation

(1) , batch update

The code is as follows

public void updateBatch(ArrayList<Product> list) throws Exception{

Connection conn = DBUtil.getConnection();

try {

PreparedStatement pst = conn.prepareStatement("update t_product set price=31.5 where id=?");

int count = 1;

for (Product product : list) {

pst.setInt(1, product.getProductId());

pst.addBatch();

if(count % 1000 == 0){

pst.executeBatch();

pst.clearBatch();//Every 1000 sql batches are processed, and then the parameters in PreparedStatement are set to be empty, which can also improve efficiency and prevent excessive parameter accumulation and transaction timeout, but the actual test effect is not obvious

}

count++;

}

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

}

DBUtil.closeConnection(conn);

}Test: 100000 pieces of data

System.out.println("MYSQL Start updating data...");

long updateStart = System.currentTimeMillis();

test.updateBatch(list);

System.out.println("MYSQL Data update completed, total time:"+(System.currentTimeMillis() - updateStart)+"millisecond");Result: 8611 Ms

(2) . update item by item

The code is as follows

public void updateOneByOne(ArrayList<Product> list) throws Exception{

Connection conn = DBUtil.getConnection();

try {

for (Product product : list) {

PreparedStatement pst = conn.prepareStatement("update t_product set price=30.5 where id=?");

pst.setInt(1, product.getProductId());

pst.executeUpdate();

//conn.commit();// With this sentence, each insert commits a transaction, and the result will be very time-consuming

}

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

}

DBUtil.closeConnection(conn);

}Test: 100000 pieces of data

System.out.println("MYSQL Start updating data...");

long updateStart = System.currentTimeMillis();

test.updateOneByOne(list);

System.out.println("MYSQL Data update completed, total time:"+(System.currentTimeMillis() - updateStart)+"millisecond");Results: 9430 milliseconds, about 1 second slower than batch update

3, Summary

This paper mainly introduces the use of bulkWrite() method, that is, the batch write operation of MongoDB. Through experiments, it can be seen that MongoDB uses bulkWrite() method to write a large amount of data, which is much more efficient than using conventional methods. The article also introduces the comparison between batch and non batch under several mysql write operations. It can be seen that their batch processing method is faster than non batch processing, but it is not as obvious as MongoDB.

For the comparison between MongoDB and MYSQL, in batch operation, MongoDB insertion, deletion and update are faster than mysql, while in non batch operation, MongoDB insertion, deletion and update are slower than mysql. Of course, it is only a preliminary conclusion. There is no comparison of 100, 1000, 10000 or greater data and monitoring of CPU memory usage. Those who are interested can try.

Original link: https://blog.csdn.net/u014513...

Copyright notice: This is the original article of CSDN blogger "wind tree seed", which follows the CC 4.0 BY-SA copyright agreement. Please attach the original source link and this notice for reprint.

Recent hot article recommendations:

1.1000 + Java interview questions and answers (2021 latest version)

2.Stop playing if/ else on the full screen. Try the strategy mode. It's really fragrant!!

3.what the fuck! What is the new syntax of xx ≠ null in Java?

4.Spring Boot 2.6 was officially released, a wave of new features..

5.Java development manual (Songshan version) is the latest release. Download it quickly!

Feel good, don't forget to like + forward!