Common replica set architectures

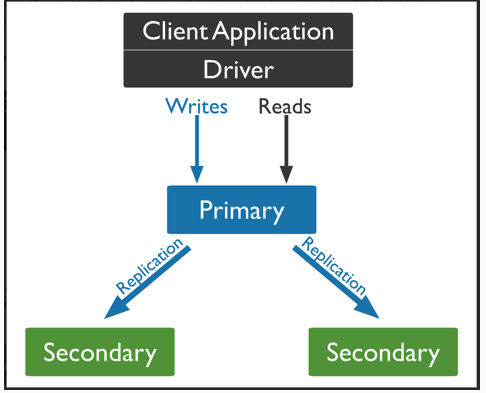

The most common architecture in production environments is the replica set, which can be thought of as one master (primary) and multiple slaves (secondaries).

Below: one master and two slaves

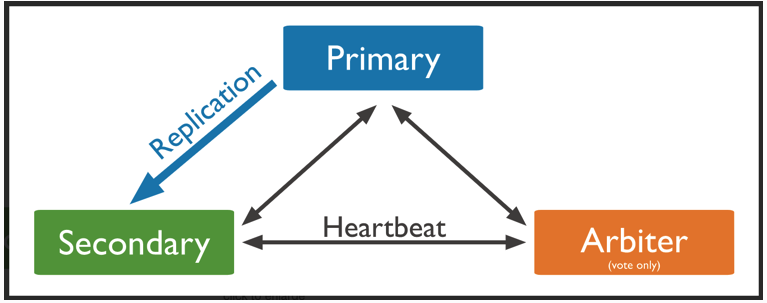

Below: one master, one slave, and one arbiter. This is the layout built in the rest of this article.
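Both layouts have three voting members, so they need the same majority to elect a primary. The difference is that an arbiter stores no data. The sketch below works through that arithmetic in plain JavaScript that runs under Node.js. It assumes every member keeps the default of one vote. The function name `electionMath` is hypothetical.

```javascript
// Election arithmetic for a replica set topology.
// Assumption: every member has the default votes: 1.
function electionMath({ dataBearing, arbiters }) {
  const voting = dataBearing + arbiters;
  // A primary needs a strict majority of the voting members.
  const majority = Math.floor(voting / 2) + 1;
  return {
    voting,
    majority,
    // Upper bound on members that can be down while a majority is still reachable.
    toleratedFailures: voting - majority,
    // Arbiters store no data, so only data-bearing members hold copies.
    dataCopies: dataBearing,
  };
}

console.log("1 master + 2 slaves:", electionMath({ dataBearing: 3, arbiters: 0 }));
console.log("1 master + 1 slave + 1 arbiter:", electionMath({ dataBearing: 2, arbiters: 1 }));
console.log("2 members, no arbiter:", electionMath({ dataBearing: 2, arbiters: 0 }));
```

The two-member case shows why three members is the practical minimum. With two voting members the majority is two, so losing either one leaves no member that can become primary.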

Server information:

Each of the three machines has 2 CPU cores, 16 GB of memory, and a 100 GB storage disk.

"host" : "10.1.1.159:27020"

"host" : "10.1.1.77:27020"

"host" : "10.1.1.178:27020"
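Each machine has 16 GB of RAM, which matters for the `cacheSizeGB: 8` setting in the configuration file used later. The MongoDB documentation gives the default WiredTiger internal cache as the larger of 50% of (RAM - 1 GB) or 256 MB. The sketch below computes that default in plain JavaScript. The even split across instances in `perInstanceCacheGB` is a hypothetical rule of thumb, not an official formula.

```javascript
// Default WiredTiger internal cache size as documented by MongoDB:
// the larger of 50% of (RAM - 1 GB) or 256 MB (0.25 GB).
function defaultWiredTigerCacheGB(ramGB) {
  return Math.max(0.5 * (ramGB - 1), 0.25);
}

// Hypothetical rule of thumb (not an official formula): when several mongod
// instances share one host, divide that budget evenly and set cacheSizeGB
// to the result so the instances do not compete for memory.
function perInstanceCacheGB(ramGB, instances) {
  if (!Number.isInteger(instances) || instances < 1) {
    throw new RangeError("instances must be a positive integer");
  }
  return defaultWiredTigerCacheGB(ramGB) / instances;
}

console.log(defaultWiredTigerCacheGB(16)); // 7.5
console.log(perInstanceCacheGB(16, 2));    // 3.75
```

On these 16 GB machines the default is 7.5 GB, which is close to the 8 GB set explicitly in the configuration file.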

1. Set up the first machine:

[root@10-1-1-159 ~]# wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-rhel70-4.2.1.tgz

[root@10-1-1-159 ~]# tar -zxvf mongodb-linux-x86_64-rhel70-4.2.1.tgz -C /data/

[root@10-1-1-159 ~]# mkdir /data/mongodb/{data,logs,pid,conf} -p

Configuration file:

[root@10-1-1-159 ~]# cat /data/mongodb/conf/mongodb.conf

systemLog:
  destination: file
  logAppend: true
  path: /data/mongodb/logs/mongod.log
storage:
  dbPath: /data/mongodb/data
  journal:
    enabled: true
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 8 # With one instance per machine, the default can be kept. With several instances on one machine, set this explicitly so they do not take each other's memory
      directoryForIndexes: true
processManagement:
  fork: true
  pidFilePath: /data/mongodb/pid/mongod.pid
net:
  port: 27020
  bindIp: 10.1.1.159,localhost # Change to this machine's own IP address
  maxIncomingConnections: 5000
#security:
#  keyFile: /data/mongodb/conf/keyfile
#  authorization: enabled
replication:
#  oplogSizeMB: 1024
  replSetName: rs02

2. Copy the installation and configuration to the other two machines, then change bindIp in each copy to that machine's own IP:

[root@10-1-1-159 ~]# scp -r /data/ root@10.1.1.77:/data/

[root@10-1-1-159 ~]# scp -r /data/ root@10.1.1.178:/data/
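The copied mongodb.conf still contains `bindIp: 10.1.1.159,localhost`. Each copy therefore has to be edited to use that machine's own address, as the comment in the file says. Below is a minimal sketch of that edit in plain JavaScript for Node.js. `withBindIp` is a hypothetical helper, and it assumes the single-line bindIp form shown above.

```javascript
// Hypothetical helper: return a copy of mongodb.conf whose bindIp lists the
// given host's own address instead of 10.1.1.159.
// Assumption: the single-line form "bindIp: <ip>,localhost" used in this
// article; only the first address in the list is replaced, and any trailing
// comment on the line is kept.
function withBindIp(confText, hostIp) {
  const pattern = /^([ \t]*bindIp:[ \t]*)[^,#\s]+/m;
  if (!pattern.test(confText)) {
    throw new Error("no bindIp line found");
  }
  return confText.replace(pattern, (match, prefix) => prefix + hostIp);
}

// Excerpt of the net section from the configuration file above.
const template = [
  "net:",
  "  port: 27020",
  "  bindIp: 10.1.1.159,localhost # Change to local IP address",
  "  maxIncomingConnections: 5000",
].join("\n");

for (const ip of ["10.1.1.77", "10.1.1.178"]) {
  console.log(`--- ${ip} ---\n${withBindIp(template, ip)}`);
}
```

On a real host you would read /data/mongodb/conf/mongodb.conf with `fs.readFileSync`, pass the text through the helper, and write it back. Editing the one line by hand works just as well for three machines.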

Directory structure:

[root@10-1-1-178 data]# tree mongodb
mongodb
├── conf
│   └── mongodb.conf
├── data
├── logs
└── pid

3. Run the following on each of the three machines:

groupadd mongod

useradd -g mongod mongod

yum install -y libcurl openssl glibc

cd /data

ln -s mongodb-linux-x86_64-rhel70-4.2.1 mongodb-4.2

chown -R mongod:mongod /data

sudo -u mongod /data/mongodb-4.2/bin/mongod -f /data/mongodb/conf/mongodb.conf

To configure a replica set:

// The replica set name rs02 must match replSetName in the configuration file
config = { _id: "rs02", members: [
  { _id: 0, host: "10.1.1.159:27020", priority: 90 },
  { _id: 1, host: "10.1.1.77:27020", priority: 90 },
  { _id: 2, host: "10.1.1.178:27020", arbiterOnly: true }
] }

Initialize the replica set:

rs.initiate(config);
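The member list is only data, so the config document can also be generated from the host list under "Server information". The sketch below does this in plain JavaScript. `buildReplSetConfig` and its options are hypothetical. It assumes, as in this article, that the last host is the arbiter and that both data-bearing members share priority 90.

```javascript
// Hypothetical helper: build the rs.initiate() config document from a host list.
// Assumptions (matching this article): the last host is the arbiter and every
// data-bearing member gets the same priority.
function buildReplSetConfig(setName, hosts, { priority = 90, arbiterLast = true } = {}) {
  if (new Set(hosts).size !== hosts.length) {
    throw new Error("duplicate host in member list");
  }
  const members = hosts.map((host, i) => {
    const isArbiter = arbiterLast && i === hosts.length - 1;
    // Member _id values are simply the position in the list.
    return isArbiter ? { _id: i, host, arbiterOnly: true } : { _id: i, host, priority };
  });
  // _id must equal replSetName in mongodb.conf (rs02 here).
  return { _id: setName, members };
}

const config = buildReplSetConfig("rs02", [
  "10.1.1.159:27020",
  "10.1.1.77:27020",
  "10.1.1.178:27020",
]);
console.log(JSON.stringify(config, null, 2));
```

The printed object is a valid JavaScript literal that can be pasted into the mongo shell as `config`. Generating it saves typing each host:port by hand, which is where mistakes such as a wrong port tend to creep in.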

4. On one of the machines, connect with the mongo shell and run the commands above:

[root@10-1-1-159 ~]# /data/mongodb-4.2/bin/mongo 10.1.1.159:27020

> use admin

switched to db admin

> config = { _id:"rs02", members:[

... {_id:0,host:"10.1.1.159:27020",priority:90},

... {_id:1,host:"10.1.1.77:27020",priority:90},

... {_id:2,host:"10.1.1.178:27020",arbiterOnly:true}

... ]

... }

{

"_id" : "rs02",

"members" : [

{

"_id" : 0,

"host" : "10.1.1.159:27020",

"priority" : 90

},

{

"_id" : 1,

"host" : "10.1.1.77:27020",

"priority" : 90

},

{

"_id" : 2,

"host" : "10.1.1.178:27020",

"arbiterOnly" : true

}

]

}

>

> rs.initiate(config);   // initialize the replica set

{

"ok" : 1,

"operationTime" : Timestamp(1583907929, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1583907929, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

5. View node status

rs02:PRIMARY> rs.status()

{

"set" : "rs02",

"date" : ISODate("2020-03-13T07:11:09.427Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "10.1.1.159:27020",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", #Master node

"uptime" : 185477,

"optime" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-03-13T07:11:05Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1583907939, 1),

"electionDate" : ISODate("2020-03-11T06:25:39Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "10.1.1.77:27020",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY", #Slave node

"uptime" : 175540,

"optime" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1584083465, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-03-13T07:11:05Z"),

"optimeDurableDate" : ISODate("2020-03-13T07:11:05Z"),

"lastHeartbeat" : ISODate("2020-03-13T07:11:08.712Z"),

"lastHeartbeatRecv" : ISODate("2020-03-13T07:11:08.711Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "10.1.1.159:27020",

"syncSourceHost" : "10.1.1.159:27020",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "10.1.1.178:27020",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER", #Arbitration node

"uptime" : 175540,

"lastHeartbeat" : ISODate("2020-03-13T07:11:08.712Z"),

"lastHeartbeatRecv" : ISODate("2020-03-13T07:11:08.711Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1584083465, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1584083465, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

rs02:PRIMARY>

6. Current replica set status:

10.1.1.178:27020 ARBITER (arbiter node)

10.1.1.77:27020 SECONDARY (slave node)

10.1.1.159:27020 PRIMARY (master node)
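The three-line summary above can also be produced from rs.status() output in code. The sketch below is plain JavaScript. `summarizeMembers` and `findPrimary` are hypothetical helpers, and `sampleStatus` is trimmed from the rs.status() output shown above. The functions use only plain JavaScript, so they should also work in the mongo shell with `rs.status()` passed as the argument.

```javascript
// Hypothetical helpers for condensing rs.status() output.
function summarizeMembers(status) {
  return status.members.map((m) =>
    // A stopped member stays in the list; its health field drops to 0.
    m.health === 1 ? `${m.name} ${m.stateStr}` : `${m.name} ${m.stateStr} (health: ${m.health})`
  );
}

function findPrimary(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  return primary ? primary.name : null;
}

// Trimmed from the rs.status() output above.
const sampleStatus = {
  set: "rs02",
  members: [
    { _id: 0, name: "10.1.1.159:27020", health: 1, state: 1, stateStr: "PRIMARY" },
    { _id: 1, name: "10.1.1.77:27020", health: 1, state: 2, stateStr: "SECONDARY" },
    { _id: 2, name: "10.1.1.178:27020", health: 1, state: 7, stateStr: "ARBITER" },
  ],
};

summarizeMembers(sampleStatus).forEach((line) => console.log(line));
console.log("primary:", findPrimary(sampleStatus));
```

Check health alongside stateStr after a failure. A stopped member still appears in the members array, so its presence alone does not mean it is reachable.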

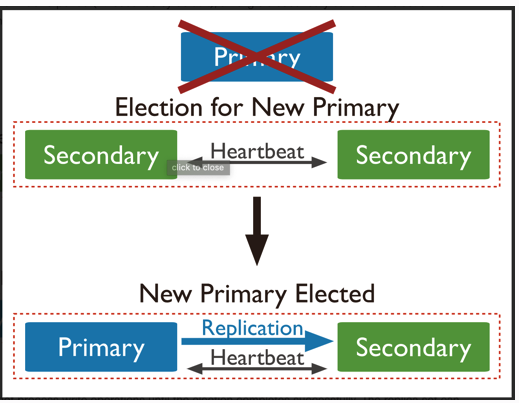

Next, we insert some data, run a few queries, and then stop the primary node.

Log of the arbiter node:

The log shows that when node 10.1.1.159 goes down, a new election is held and another member takes over: "Member 10.1.1.77:27010 is now in state PRIMARY".

2020-03-18T14:34:53.636+0800 I NETWORK [conn9] end connection 10.1.1.159:49160 (1 connection now open)
2020-03-18T14:34:54.465+0800 I CONNPOOL [Replication] dropping unhealthy pooled connection to 10.1.1.159:27010
2020-03-18T14:34:54.465+0800 I CONNPOOL [Replication] after drop, pool was empty, going to spawn some connections
2020-03-18T14:34:54.465+0800 I ASIO [Replication] Connecting to 10.1.1.159:27010
......
2020-03-18T14:35:02.473+0800 I ASIO [Replication] Failed to connect to 10.1.1.159:27010 - HostUnreachable: Error connecting to 10.1.1.159:27010 :: caused by :: Connection refused
2020-03-18T14:35:02.473+0800 I CONNPOOL [Replication] Dropping all pooled connections to 10.1.1.159:27010 due to HostUnreachable: Error connecting to 10.1.1.159:27010 :: caused by :: Connection refused
2020-03-18T14:35:02.473+0800 I REPL_HB [replexec-8] Error in heartbeat (requestId: 662) to 10.1.1.159:27010, response status: HostUnreachable: Error connecting to 10.1.1.159:27010 :: caused by :: Connection refused
2020-03-18T14:35:04.463+0800 I REPL [replexec-5] Member 10.1.1.77:27010 is now in state PRIMARY
2020-03-18T14:35:04.473+0800 I ASIO [Replication] Connecting to 10.1.1.159:27010
2020-03-18T14:35:04.473+0800 I ASIO [Replication] Failed to connect to 10.1.1.159:27010 - HostUnreachable: Error connecting to 10.1.1.159:27010 :: caused by :: Connection refused
2020-03-18T14:35:04.473+0800 I CONNPOOL [Replication] Dropping all pooled connections to 10.1.1.159:27010 due to HostUnreachable: Error connecting to 10.1.1.159:27010 :: caused by :: Connection refused
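To find the failover moment in a long log, it is enough to look for the "is now in state" entries. The sketch below extracts them in plain JavaScript. `stateChanges` is a hypothetical helper. Its regular expression assumes the 4.2-era plain-text log format shown above. MongoDB 4.4 and later write structured JSON logs, which this pattern will not match.

```javascript
// Hypothetical helper: extract "Member <host> is now in state <STATE>" events
// from mongod log text. Assumption: 4.2-style plain-text lines of the form
// "<timestamp> I REPL [thread] message"; newer JSON logs will not match.
function stateChanges(logText) {
  const re = /(\d{4}-\d{2}-\d{2}T[\d:.]+[+-]\d{4})\s+I\s+REPL\s+\[[^\]]+\]\s+Member (\S+) is now in state (\w+)/g;
  const events = [];
  let m;
  while ((m = re.exec(logText)) !== null) {
    events.push({ time: m[1], member: m[2], state: m[3] });
  }
  return events;
}

// Two entries copied from the arbiter log above.
const excerpt = [
  "2020-03-18T14:35:02.473+0800 I REPL_HB [replexec-8] Error in heartbeat (requestId: 662) to 10.1.1.159:27010, response status: HostUnreachable: Error connecting to 10.1.1.159:27010 :: caused by :: Connection refused",
  "2020-03-18T14:35:04.463+0800 I REPL [replexec-5] Member 10.1.1.77:27010 is now in state PRIMARY",
].join("\n");

console.log(stateChanges(excerpt));
```

Applied to the full log above, this finds a single event: 10.1.1.77:27010 becoming PRIMARY at 14:35:04.463. That is roughly 11 seconds after the first entry at 14:34:53.636.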

The architecture is as follows:

The replica set is now built. Testing shows that with three members (the minimum for automatic failover), the failure of any single node does not interrupt normal reads and writes.
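The "any single node" claim can be checked member by member. The sketch below is simplified and written in plain JavaScript. `canElectPrimary` is hypothetical, and it makes three assumptions: every member has one vote, a primary needs a strict majority of all voting members, and only a data-bearing member with priority above 0 can be elected. It ignores details such as replication lag and catch-up.

```javascript
// Simplified failover check (assumptions: one vote per member, a primary needs a
// strict majority of all voting members, and only a data-bearing member with
// priority > 0 can become primary; replication lag and catch-up are ignored).
function canElectPrimary(members, downHost) {
  const up = members.filter((m) => m.host !== downHost);
  const majority = Math.floor(members.length / 2) + 1;
  // Data-bearing members default to priority 1 when none is given.
  const hasCandidate = up.some(
    (m) => !m.arbiterOnly && (m.priority === undefined ? 1 : m.priority) > 0
  );
  return up.length >= majority && hasCandidate;
}

// The rs02 members from rs.initiate() above.
const rs02 = [
  { host: "10.1.1.159:27020", priority: 90 },
  { host: "10.1.1.77:27020", priority: 90 },
  { host: "10.1.1.178:27020", arbiterOnly: true },
];

for (const m of rs02) {
  console.log(`${m.host} down -> primary still available: ${canElectPrimary(rs02, m.host)}`);
}
```

For rs02, every single-node failure still leaves a primary. A two-member set without an arbiter fails the same check, because the one remaining member is not a majority.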

In the next chapter, we will add users.