Welcome to my official account, reply to 001 Google programming specification.

O_o >_< o_O O_o ~_~ o_O

Hello, I'm Jizhi horizon. This paper introduces the method of compiling darknet and yolo training on ubuntu.

1. Compiling darknet

1.1 compiling opencv

I won't say much about the installation of cuda and cudnn. For the compilation of opencv, please refer to what I wrote before< [experience sharing] opencv method of compiling / cross compiling in x86, aarch64 and arm32 environments >, which records the method of compiling opencv on x86, aarch64 and arm32 platforms, which is concise and effective.

1.2 compiling darknet

clone Source Code:

git clone https://github.com/AlexeyAB/darknet.git cd darknet

modify Makefile and open gpu, opencv and openmp:

GPU=1 CUDNN=1 CUDNN_HALF=1 OPENCV=1 AVX=0 OPENMP=1 LIBSO=1 ZED_CAMERA=0 ZED_CAMERA_v2_8=0

then start compiling, which is very simple:

make -j32

after that, verify whether the installation is successful:

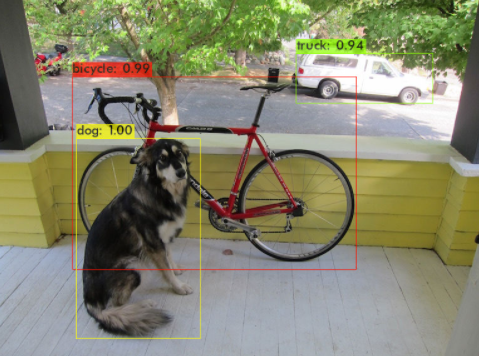

./darknet detect cfg/yolov3.cfg cfg/yolov3.weights data/dog.jpg

of course, yolov3.weights needs to be downloaded and transmitted by yourself: https://pjreddie.com/media/files/yolov3.weights

after running successfully, a classic detection diagram predictions.jpg will be generated in the < Darknet Path > Directory:

2. Yolo training

2.1 making VOC dataset

you can make your own data set in VOC format, or you can directly use VOC data for training.

for how to make VOC format data, please refer to my article:< [experience sharing] target detection VOC format data set production >, the introduction was quite detailed.

2.2 Yolo training

after having the data set, get the model structure file and pre training weight, and you can start a happy alchemy journey. In fact, many model structure files have been provided in the CFG folder, such as yolov3.cfg, yolov3-tiny.cfg, yolov4.cfg, yolov4-tiny.cfg, etc. you only need to find the corresponding pre training weight, such as:

- yolov3.cfg - > darknet53.conv.74 transfer: https://pjreddie.com/media/files/darknet53.conv.74

- yolov3-tiny.cfg - > yolov3-tiny.conv.11 transfer: https://drive.google.com/file/d/18v36esoXCh-PsOKwyP2GWrpYDptDY8Zf/view?usp=sharing

- yolov4-tiny.cfg - > yolov4-tiny.conv.29 transfer: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-tiny.conv.29

- yolov4.cfg - > yolov4.conv.137 transfer: https://drive.google.com/open?id=1JKF-bdIklxOOVy-2Cr5qdvjgGpmGfcbp

next, let's take yolov4 as an example and start our pleasant training trip.

this is a non desktop environment, so - dont is added_ Show pass parameters.

./darknet detector train cfg/voc.data cfg/yolov4.cfg yolov4.conv.137 -dont_show

from the above command,. / darknet detector train is fixed, others:

- cfg/voc.data: transfer training data;

- cfg/yolov4.cfg: structure of training model;

- yolov4.conv.137: pre training weight

the above command to execute training is very clear. Take a look at voc.data:

classes= 20 # Number of target detection categories train = /home/pjreddie/data/voc/train.txt # Training data set valid = /home/pjreddie/data/voc/test.txt # Test data set names = data/voc.names # Category name backup = /home/pjreddie/backup/ # Intermediate weight backup directory during training

in. cfg, we can also make some changes according to our own training, mainly some parameters in [net]:

[net] batch=64 # batch settings subdivisions=32 # Each time the data transferred into the batch/subdivision is insufficient, increase the parameter if the gpu memory is insufficient # Training width=608 # Picture width height=608 # Picture high channels=3 # Number of channels momentum=0.949 # Momentum, the influence gradient decreases to the optimal value velocity decay=0.0005 # Weight attenuation regular term to prevent overfitting angle=0 # Increase training samples by rotation angle saturation = 1.5 # Increase training samples by adjusting image saturation exposure = 1.5 # Increase the training samples by adjusting the exposure hue=.1 # Increase the training samples by adjusting the tone learning_rate=0.0013 # Learning rate, which is an important parameter, determines the speed of training convergence and whether it can achieve good results burn_in=1000 # The learning rate setting is related. When it is less than the parameter, there is a way to update it. When it is greater than the parameter, the policy update method is adopted max_batches = 500500 # Stop training when the training batch reaches this participation policy=steps # Learning rate adjustment strategy steps=400000,450000 # step and scales are used together, which means that the learning rate decreases by 10 times when it reaches 400000 and 450000 respectively, because it converges slowly later scales=.1,.1 #cutmix=1 # cutmix transform is a way of data enhancement mosaic=1 # mosaic transform is a way of data enhancement

in addition to these, if you are training your own dataset, the number of detected categories may not be the official 20. Therefore, some modifications need to be made to the yolo layer. Take one of the yolo layers:

... [convolutional] size=1 stride=1 pad=1 filters=75 # filters = 3*(classes+5), which needs to be modified according to the number of your classes activation=linear [yolo] mask = 6,7,8 anchors = 12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401 classes=20 # Number of detection categories num=9 jitter=.3 ignore_thresh = .7 truth_thresh = 1 random=1 scale_x_y = 1.05 iou_thresh=0.213 cls_normalizer=1.0 iou_normalizer=0.07 iou_loss=ciou nms_kind=greedynms beta_nms=0.6 max_delta=5

anchors in yolo layer also need to be modified for different detection tasks. For example, for the detection person, the anchor frame needs to be thin and tall, such as the detection vehicle, which may prefer the narrow and wide anchor frame. Then, some parameters such as confidence threshold and nms threshold also need to be adjusted when training.

then explain why you need to modify the filters of one layer of convolution on yolo. I'm in this article< [experience sharing] analyze the pointer offset logic of darknet entry_index >Some analysis has been done. From the data layout of yolo layer:

(1) according to four dimensions [N, C, H, W], N is batch, C is 3 * (5 + classes), and H / W is feature_map height and width. It needs to be explained that C, C = 3 * (1 + 4 + classes), where 1 represents the confidence, 4 is the location information of the detection box, and classes is the number of categories, that is, each category gives a detection score, and multiplying 3 means that each grid has 3 anchor boxes. In this way, the four-dimensional data arrangement accepted by yolo layer is formed, that is, the output data arrangement of the previous layer of yolo;

(2) as for the output of yolo layer, one-dimensional dynamic array will be used in darknet to store the data of yolo layer. Here is how to convert four-dimensional data into one-dimensional data. This is done in darknet. Assuming that the four-dimensional data is [n, c, h, w] and the corresponding index of each dimension is [n, c, h, w], then the expansion is n*C*H*W + c*H*W + h*W + w, which is stored in * output according to this logic.

in this way, looking back, it should be better to understand why the filters of the convolution layer on yolo are 3 * (classes + 5).

well, let's start training, or perform:

./darknet detector train cfg/voc.data cfg/yolov4.cfg yolov4.conv.137 -dont_show

if you need to save the training log, you can do this:

./darknet detector train cfg/voc.data cfg/yolov4l.cfg yolov4.conv.137 2>1 | tee visualization/train_yolov4.log

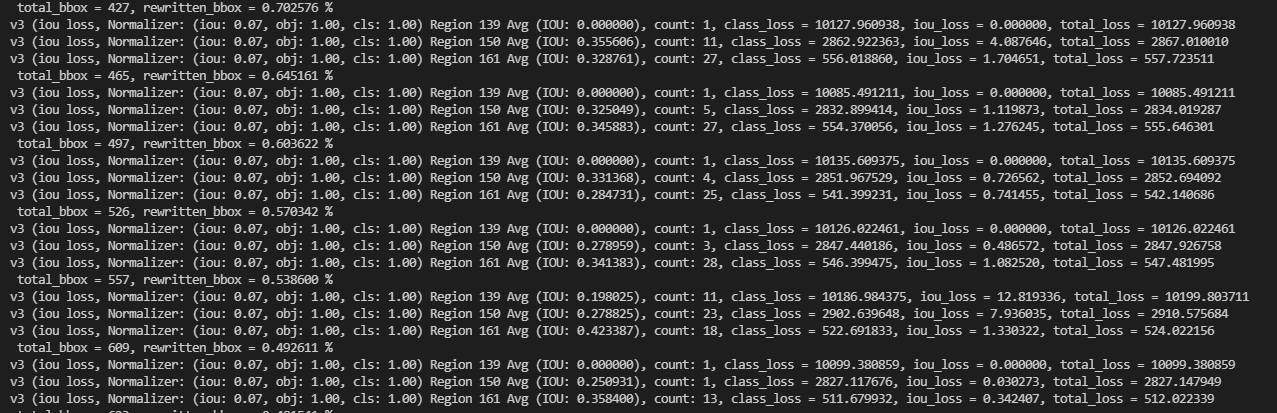

the console will output the training log:

after the training, the final and intermediate weight files obtained from the training will be saved in backup = /home/pjreddie/backup /. If the effect is satisfactory, it can be deployed. For target detection, the indicator to measure the effect is generally map.

well, the above share the methods of compiling darknet on ubuntu and training yolo. I hope my sharing can be a little helpful to your learning.

[official account transmission]

[model training] ubuntu compiling Darknet and YOLO training

Scan the bottom two dimensional code to focus on my WeChat official account, and get more AI experience sharing. Let's welcome AI with the ultimate + geek mentality.