Work in progress; to be completed...

Article Contents

- Experimental environment

- pacemaker+corosync for high availability

- Deploy the MFS master on server1 and server4

- Configure the HighAvailability yum repositories on server1 and server4

- Install pacemaker+corosync on server1 and server4

- Set up passwordless SSH between server1 and server4

- Install the resource management tool on server1 and server4 and start its service

- Create and start the MFS cluster on server1

- View cluster status

- Check and resolve errors

- Creating Cluster Resources and Adding VIP

- Test

Experimental environment

| Host (IP) | Service |
|---|---|
| server1 (172.25.11.1) | mfsmaster, corosync+pacemaker |
| server2 (172.25.11.2) | chunk server |
| server3 (172.25.11.3) | chunk server |
| server4 (172.25.11.4) | mfsmaster, corosync+pacemaker |
| Physical host (172.25.11.250) | client |

pacemaker+corosync for high availability

Deploy the MFS master on server1 and server4

- The steps below use server1 as the example; the installation on server4 is identical.

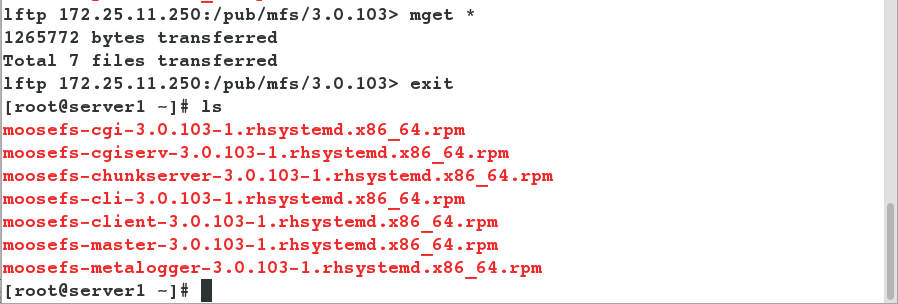

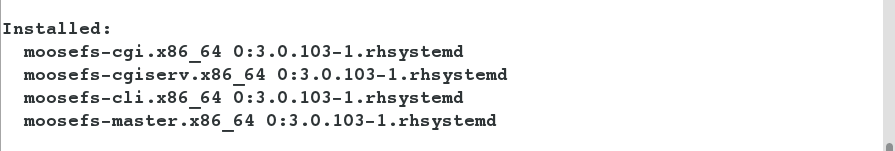

- Download and install.

[root@server1 ~]# ls
moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm
moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm
moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm
moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm
moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm
moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm
moosefs-metalogger-3.0.103-1.rhsystemd.x86_64.rpm
[root@server1 ~]# yum install moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm -y

- Add name resolution on server1 so that the name mfsmaster resolves to it.

[root@server1 ~]# vim /etc/hosts
172.25.11.1 server1 mfsmaster

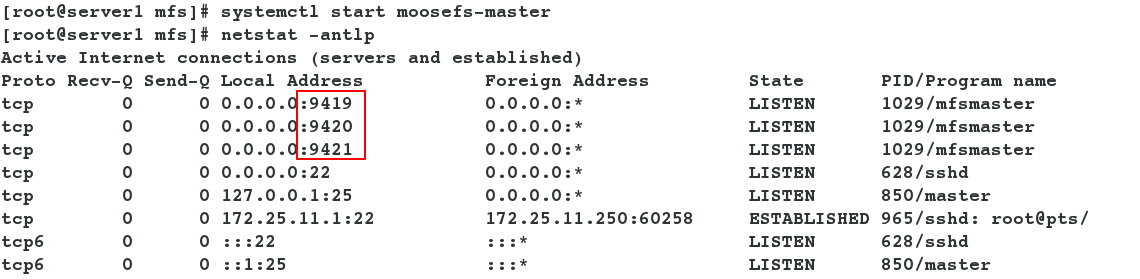

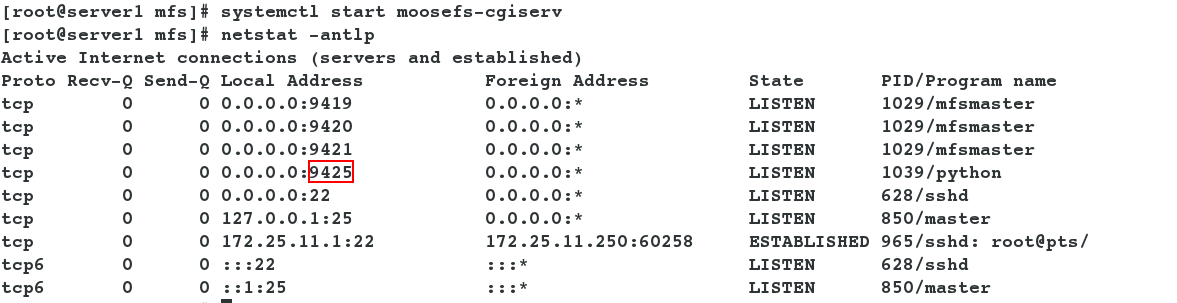
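The hosts entry can also be added without opening an editor, which is convenient when repeating the step on server4. The following is only a minimal sketch: `add_host_entry` is a hypothetical helper name (not part of MooseFS), and it appends the line only when an identical line is not already present.

```shell
# Hypothetical helper: append a line to a hosts file only if it is missing.
add_host_entry() {
    local file="$1" entry="$2"
    # -x matches the whole line, -F treats the entry as a fixed string.
    grep -qxF -- "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
}

# Usage on the real file (run as root):
#   add_host_entry /etc/hosts "172.25.11.1 server1 mfsmaster"
```

Running the helper twice leaves a single entry, so it is safe to include in a setup script that may be re-run.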

- Start the services and check the listening ports.

[root@server1 mfs]# systemctl start moosefs-master
[root@server1 mfs]# netstat -antlp
[root@server1 mfs]# systemctl start moosefs-cgiserv
[root@server1 mfs]# netstat -antlp

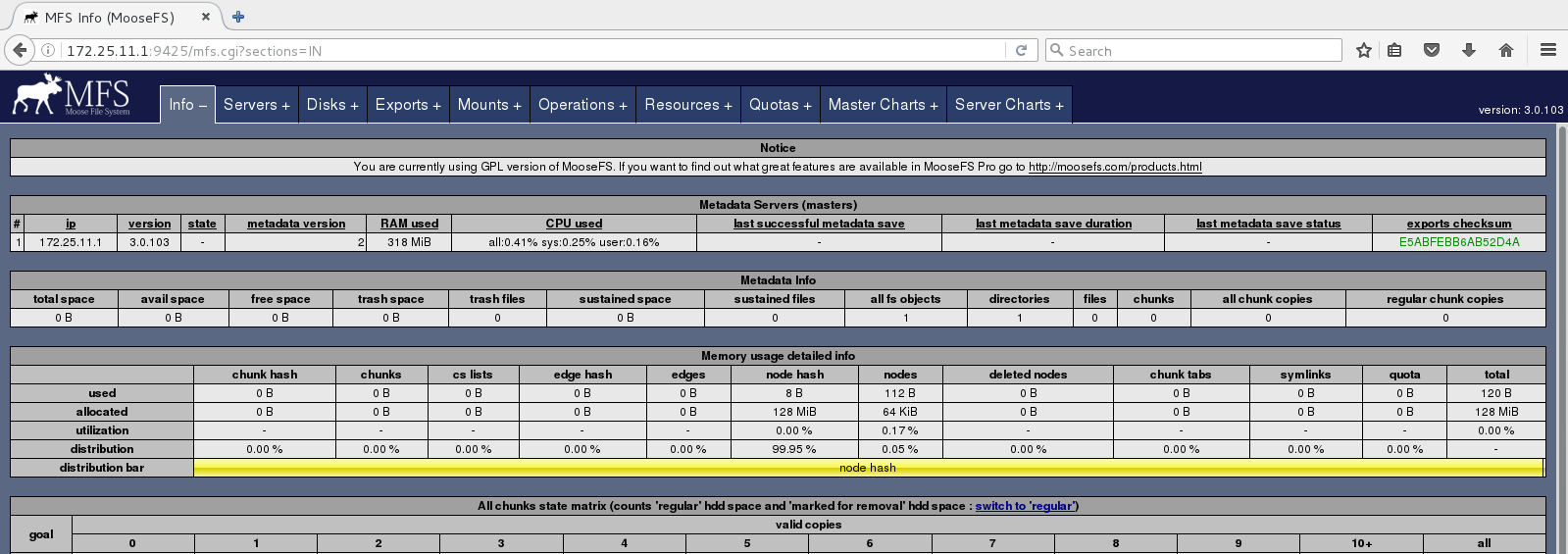

- View the monitoring page in a browser: http://172.25.11.1:9425/mfs.cgi

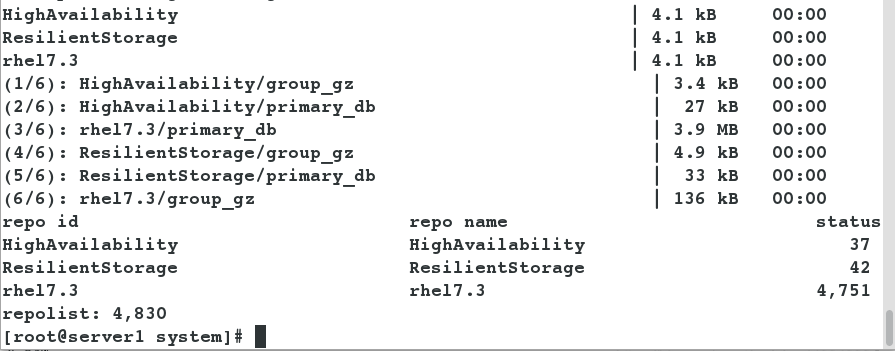

Configure the HighAvailability yum repositories on server1 and server4

- server1 is used as the example; the configuration on server4 is identical.

[root@server1 system]# vim /etc/yum.repos.d/rhel7.repo
[rhel7.3]
name=rhel7.3
gpgcheck=0
baseurl=http://172.25.11.250/rhel7.3
[HighAvailability]
name=HighAvailability
gpgcheck=0
baseurl=http://172.25.11.250/rhel7.3/addons/HighAvailability
[ResilientStorage]
name=ResilientStorage
gpgcheck=0
baseurl=http://172.25.11.250/rhel7.3/addons/ResilientStorage

Install pacemaker+corosync on server1 and server4

- server1:

[root@server1 system]# yum install pacemaker corosync -y

- server4:

[root@server4 ~]# yum install pacemaker corosync -y

Set up passwordless SSH between server1 and server4

[root@server1 system]# ssh-keygen
[root@server1 system]# ssh-copy-id server4
[root@server1 system]# ssh server4
[root@server1 system]# ssh-copy-id server1
[root@server1 ~]# ssh server1
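For a scripted setup, the key generation step can be made non-interactive. This is a minimal sketch, assuming an RSA key with an empty passphrase is acceptable in this lab environment. `ensure_ssh_key` is a hypothetical helper, and `ssh-copy-id` will still prompt once for each host's root password.

```shell
# Hypothetical helper: create a passphrase-less RSA key only if none exists yet.
ensure_ssh_key() {
    local key="${1:-$HOME/.ssh/id_rsa}"
    # Keep an existing key untouched so re-running the script is harmless.
    [ -f "$key" ] && return 0
    mkdir -p -m 700 "$(dirname "$key")"
    ssh-keygen -q -t rsa -N '' -f "$key"
}

# Usage:
#   ensure_ssh_key
#   for h in server1 server4; do ssh-copy-id "root@$h"; done
```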

Install the resource management tool on server1 and server4 and start its service

- The steps are the same on server1 and server4; server1 is used as the example. Set the same hacluster password on both nodes, since it is needed for `pcs cluster auth` in the next step.

[root@server1 ~]# yum install pcs -y
[root@server1 ~]# systemctl start pcsd
[root@server1 ~]# systemctl enable pcsd
[root@server1 ~]# id hacluster
uid=189(hacluster) gid=189(haclient) groups=189(haclient)
[root@server1 ~]# passwd hacluster

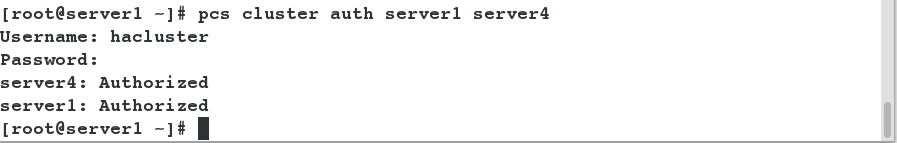

Create and start the MFS cluster on server1

- Authenticate the cluster nodes

[root@server1 ~]# pcs cluster auth server1 server4
Username: hacluster
Password:
server4: Authorized
server1: Authorized

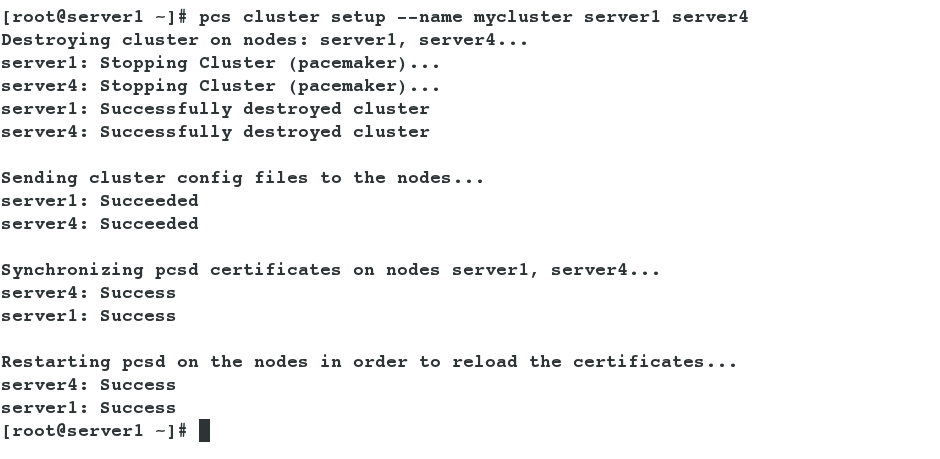

- Set up the cluster and give it a name

[root@server1 ~]# pcs cluster setup --name mycluster server1 server4

- Start the cluster

[root@server1 ~]# pcs cluster start --all
server1: Starting Cluster...
server4: Starting Cluster...

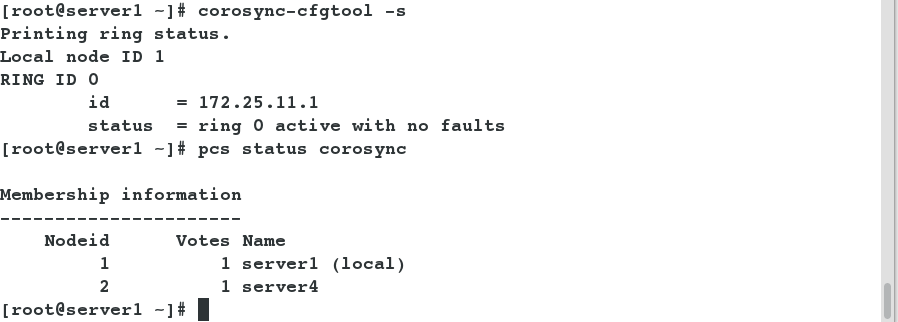

View cluster status

[root@server1 ~]# corosync-cfgtool -s
[root@server1 ~]# pcs status corosync

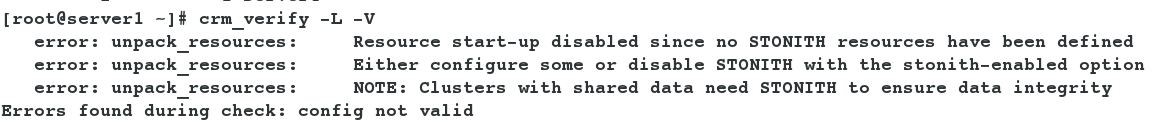
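The ring check can be turned into a pass/fail test for use in scripts. This sketch assumes the corosync 2.x output format, in which a healthy ring is reported as `status = ring 0 active with no faults`; other versions may word this differently. `rings_healthy` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `corosync-cfgtool -s` output on stdin and succeeds
# only if at least one ring status line exists and none reports a fault.
rings_healthy() {
    awk '
        /status[ \t]*=/ {
            seen = 1
            if ($0 !~ /active with no faults/) bad = 1
        }
        END {
            if (seen && !bad) exit 0
            exit 1
        }
    '
}

# Usage:
#   corosync-cfgtool -s | rings_healthy && echo "corosync ring OK"
```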

Check and resolve errors

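- The first check reports errors because STONITH is enabled by default but no fencing device has been defined. This test setup disables STONITH, after which the check passes.

[root@server1 ~]# crm_verify -L -V
[root@server1 ~]# pcs property set stonith-enabled=false
[root@server1 ~]# crm_verify -L -V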

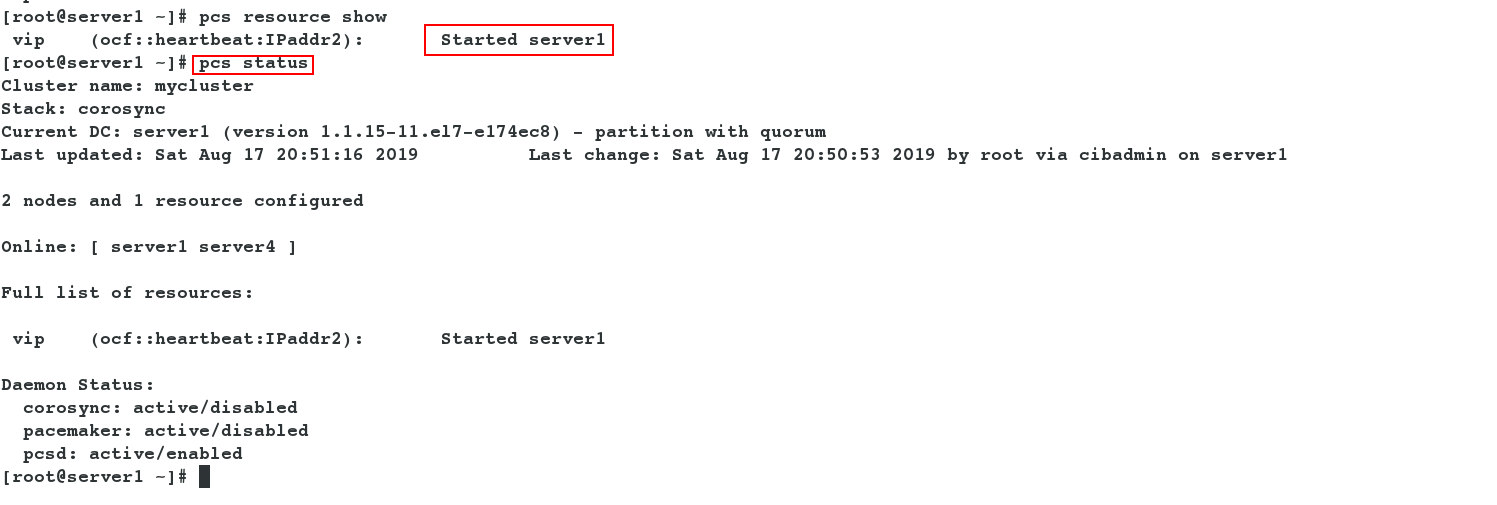

Creating Cluster Resources and Adding VIP

- On server1:

[root@server1 ~]# pcs resource create --help
[root@server1 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.11.100 cidr_netmask=32 op monitor interval=30s
[root@server1 ~]# pcs resource show
[root@server1 ~]# pcs status

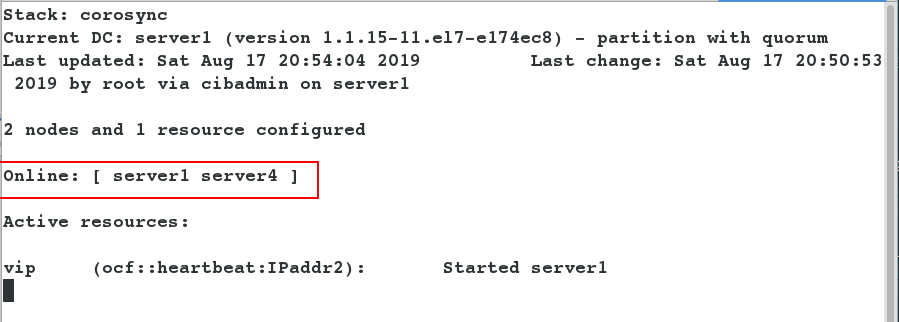

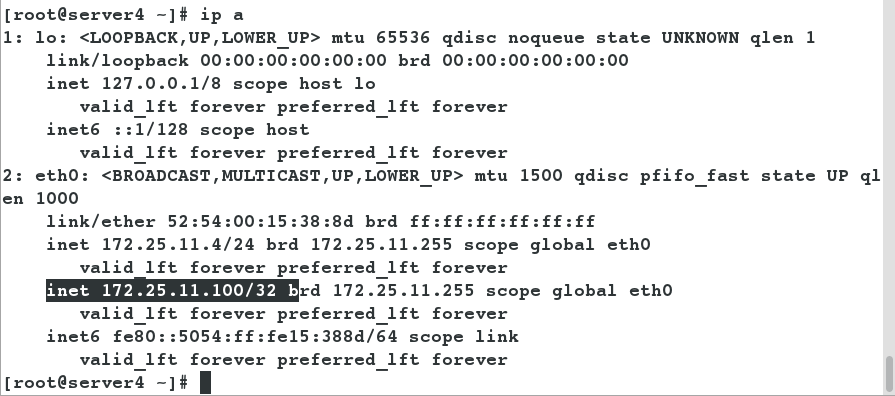

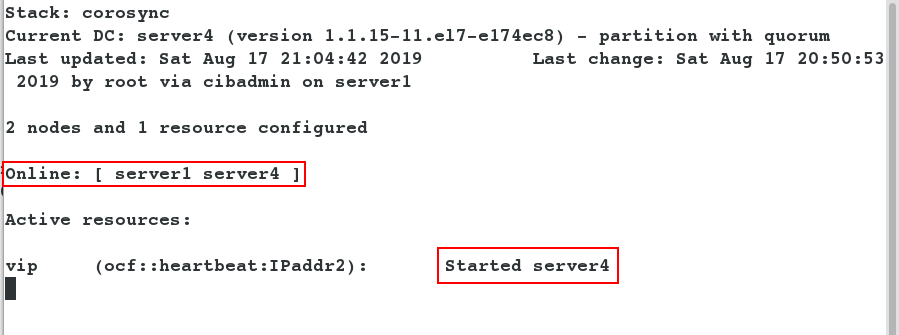

- Check the VIP from server4:

[root@server4 ~]# crm_mon
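Besides `crm_mon`, a node can check directly whether it currently holds the VIP. This is a minimal sketch that filters the output of `ip -o -4 addr show`; `holds_vip` is a hypothetical helper name, and 172.25.11.100 is the VIP created above.

```shell
# Hypothetical helper: reads `ip -o -4 addr show` output on stdin and succeeds
# if the given IPv4 address is configured on any interface.
holds_vip() {
    local vip="$1"
    # The trailing slash keeps 172.25.11.10 from matching 172.25.11.100.
    grep -qF -- "inet ${vip}/"
}

# Usage:
#   ip -o -4 addr show | holds_vip 172.25.11.100 && echo "VIP is on this node"
```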

Test

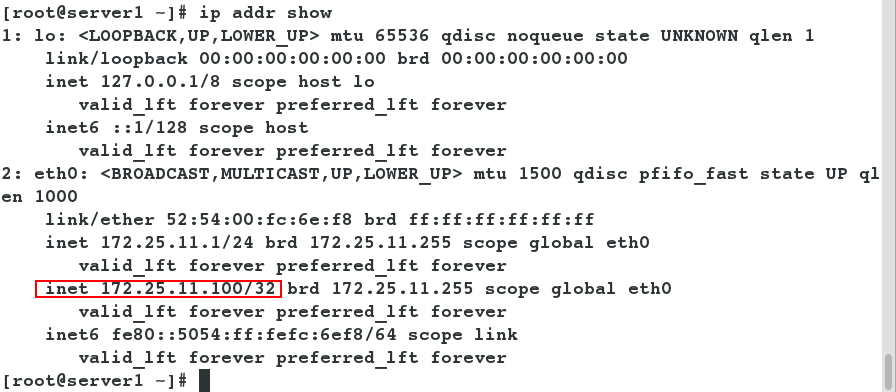

- At this point the VIP is on server1:

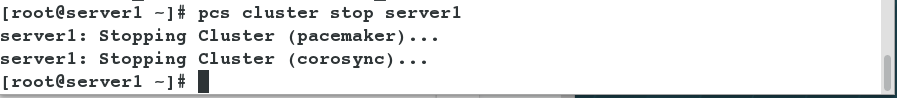

- Stop server1 in the cluster; the VIP moves to server4.

[root@server1 ~]# pcs cluster stop server1

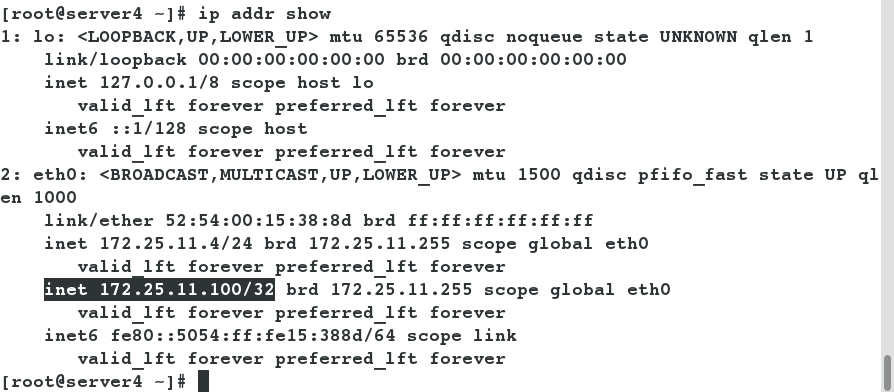

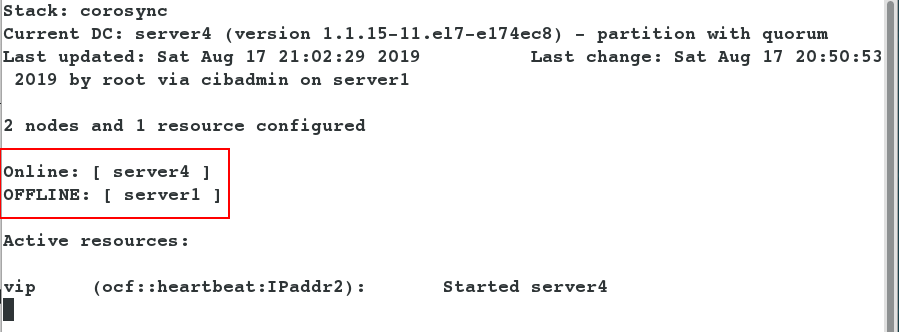

- Viewing the monitoring information shows that only server4 is online at this time:

[root@server4 ~]# crm_mon

- Start server1 in the cluster again. The VIP stays on server4, while the monitoring information shows both server1 and server4 online.

[root@server1 ~]# pcs cluster start server1
server1: Starting Cluster...
[root@server4 ~]# crm_mon
[root@server4 ~]# ip a
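To record where the VIP runs before and after each step of the test, the one-shot output of `crm_mon -1` can be filtered. This sketch assumes the resource line looks like `vip (ocf::heartbeat:IPaddr2): Started server4`, as printed by the pacemaker 1.1 series; newer versions may format it differently. `resource_node` is a hypothetical helper name.

```shell
# Hypothetical helper: reads one-shot cluster status on stdin and prints the
# node on which the named resource is reported as Started (nothing otherwise).
resource_node() {
    awk -v res="$1" '$1 == res && $(NF-1) == "Started" { print $NF }'
}

# Usage:
#   crm_mon -1 | resource_node vip
```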

Overall, the high-availability deployment works: the VIP fails over from server1 to server4.