Contents

1. What is document scanning

Document scanning steps

2. Functions and variables used

Variables

Functions

3. Hands-on implementation

Step 1: Image preprocessing

Step 2: Find the image contours

Step 3: Extract and mark the document edges

Step 4: Reorder the corner points

Step 5: Warp and crop the edges

1. What is document scanning

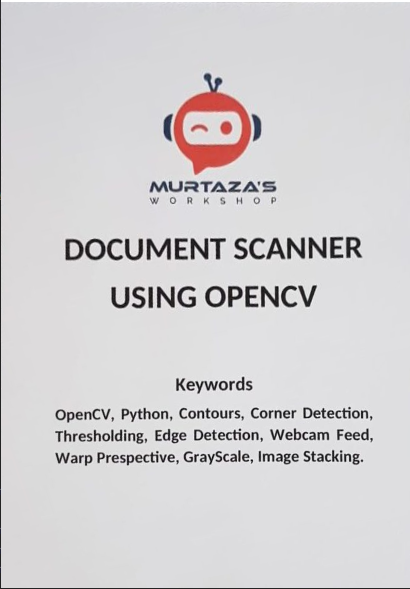

Document scanning takes a photo of a document shot from an arbitrary angle and turns it into a flat, front-facing view of the page, as shown below:

The goal is to extract the A4 sheet from the picture. After scanning, we get the following result:


Document scanning steps

1. Preprocess the input image: convert it to grayscale, blur it to reduce noise, detect edges with Canny, and dilate the edges.

2. Define the contour variables and find the contours of the preprocessed image.

3. Extract the document outline: among the large contours, keep the biggest one whose polygon approximation has exactly four corners.

4. Use a perspective transformation matrix to warp the document into a front view, then crop the edges.

2. Functions and variables used

Variables

About vector:

vector is a sequence container from the C++ standard library. It is a class template, so each vector holds elements of one type chosen when it is declared (for example vector<int> or vector<Point>). It can be thought of as a dynamic array wrapped in a class.

To use vector, add #include <vector>.

vector<Point>

A vector whose elements are 2D points (Point).

vector<vector<Point>>

A vector whose elements are themselves vectors, each inner vector holding 2D points. findContours uses this type to return one point list per contour.
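To make the nesting concrete, here is a minimal sketch using only the standard library. It assumes a hypothetical Pt struct as a stand-in for cv::Point so it compiles without OpenCV; the indexing works the same way as for the real contours variable.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point (integer 2D coordinates).
struct Pt {
    int x;
    int y;
};

// A contour is a list of points; a set of contours is a list of lists,
// the same shape as the `contours` argument of findContours.
using Contour = std::vector<Pt>;
using Contours = std::vector<Contour>;

// Hypothetical helper: count all points over all contours.
// contours[i] is the i-th contour, contours[i][j] its j-th point.
std::size_t totalPoints(const Contours& contours) {
    std::size_t total = 0;
    for (const Contour& contour : contours) {
        total += contour.size();
    }
    return total;
}
```

For example, after pushing a triangle and a square into a Contours variable c, c.size() is 2, c[1][2] is the third corner of the square, and totalPoints(c) is 7.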

vector<Vec4i> and vector<int>

vector<Vec4i> holds elements that each consist of four ints (Vec4i is a 4-element int vector); vector<int> holds plain ints.
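The contour hierarchy is the main use of vector<Vec4i> here. Per the OpenCV documentation, each entry stores, for contour i, the indices [next, previous, first child, parent], with -1 meaning "none". The sketch below uses std::array<int, 4> as a stand-in for cv::Vec4i and walks the top-level contours by following the "next" links; Vec4iLike and topLevelContours are hypothetical names. With RETR_EXTERNAL, as in this project, every contour is top-level, so the hierarchy matters mostly for RETR_CCOMP and RETR_TREE.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical stand-in for cv::Vec4i: {next, previous, first child, parent}, -1 = none.
using Vec4iLike = std::array<int, 4>;

// Hypothetical helper: list the indices of all top-level contours
// (those without a parent) by following the "next" links.
std::vector<int> topLevelContours(const std::vector<Vec4iLike>& hierarchy) {
    std::vector<int> result;
    int start = -1;
    for (int i = 0; i < static_cast<int>(hierarchy.size()); ++i) {
        if (hierarchy[i][3] == -1) {  // no parent: this contour is on the top level
            start = i;
            break;
        }
    }
    if (start == -1) {
        return result;  // empty hierarchy
    }
    while (hierarchy[start][1] != -1) {  // rewind to the first contour on this level
        start = hierarchy[start][1];
    }
    for (int idx = start; idx != -1; idx = hierarchy[idx][0]) {
        result.push_back(idx);
    }
    return result;
}
```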

Point2f: a 2D point with float coordinates (Point is the int version).

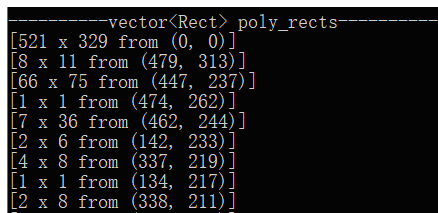

Rect: an axis-aligned rectangle given by its top-left corner (x, y) and its width and height in pixels.

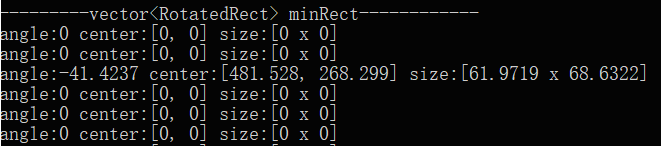

RotatedRect: a rotated rectangle given by its center, its size (width × height) and its rotation angle.

Functions

```cpp
findContours(image, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
```

For a detailed explanation of every parameter, see the findContours documentation. A brief summary follows.

The first parameter, image: the input image. It must be an 8-bit single-channel image; non-zero pixels are treated as 1, so in practice a binary image is used, usually the output of Canny or another edge detector.

The second parameter, contours: declared as vector<vector<Point>>, a vector of vectors. Each element of the outer vector is one contour, so contours[i] is the i-th contour. Each contour is itself a vector of Point, so contours[i][j] is the j-th point of the i-th contour.

The third parameter, hierarchy: declared as vector<Vec4i>, so each element holds four ints. For the i-th contour, hierarchy[i][0] to hierarchy[i][3] hold the indices of the next and previous contours at the same level, the first child (nested) contour, and the parent contour, respectively. A value of -1 means there is no such contour.

The fourth parameter, int mode, sets the contour retrieval mode:

RETR_EXTERNAL (CV_RETR_EXTERNAL in the old C API): detects only the outermost contours; contours nested inside them are ignored. This is the mode used in this project.

RETR_LIST (CV_RETR_LIST): detects all contours, inner and outer, but builds no hierarchy. The contours are independent of each other, with no parent or nested contours, so the 3rd and 4th components of every hierarchy element are set to -1.

RETR_CCOMP (CV_RETR_CCOMP): detects all contours and organizes them into a two-level hierarchy. The top level holds the outer boundaries of the components and the second level holds the boundaries of the holes. If a hole contains another contour, that contour is placed on the top level again.

RETR_TREE (CV_RETR_TREE): detects all contours and builds the full tree of nested contours: an outer contour contains inner contours, which may in turn contain further nested contours.

The fifth parameter, int method, sets the contour approximation method:

CHAIN_APPROX_NONE (CV_CHAIN_APPROX_NONE): stores every contour point along the object boundary.

CHAIN_APPROX_SIMPLE (CV_CHAIN_APPROX_SIMPLE): compresses horizontal, vertical and diagonal segments and keeps only their end points, so an upright rectangular contour is stored as 4 points. This is the method used in this project.

CHAIN_APPROX_TC89_L1 and CHAIN_APPROX_TC89_KCOS: apply one flavor of the Teh-Chin chain approximation algorithm.

The sixth parameter, Point offset: an optional offset by which every contour point is shifted, useful when contours are found in an image ROI but analyzed in the full image. It is not used in this project.
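To illustrate what CHAIN_APPROX_SIMPLE does, the sketch below compresses a closed, 8-connected chain of boundary points by dropping every point whose incoming and outgoing step directions are the same, that is, points in the middle of a horizontal, vertical or diagonal run. It is a simplified stand-in written for illustration, not OpenCV's implementation; Pt, sign and compressChain are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point.
struct Pt {
    int x;
    int y;
};

// Sign of an integer: -1, 0 or 1.
int sign(int v) { return (v > 0) - (v < 0); }

// Hypothetical helper: keep only the points of a closed 8-connected chain
// where the step direction changes; points inside straight runs are dropped.
std::vector<Pt> compressChain(const std::vector<Pt>& chain) {
    const std::size_t n = chain.size();
    if (n < 3) {
        return chain;
    }
    std::vector<Pt> corners;
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& prev = chain[(i + n - 1) % n];  // wraps around: the chain is closed
        const Pt& cur = chain[i];
        const Pt& next = chain[(i + 1) % n];
        int inX = sign(cur.x - prev.x), inY = sign(cur.y - prev.y);
        int outX = sign(next.x - cur.x), outY = sign(next.y - cur.y);
        if (inX != outX || inY != outY) {
            corners.push_back(cur);  // direction changes here: keep the point
        }
    }
    return corners;
}
```

Run on the 10 boundary pixels of a 4×3 rectangle, starting at (0, 0) and going clockwise, it keeps only the four corners (0, 0), (3, 0), (3, 2) and (0, 2).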

```cpp
approxPolyDP(contours[i], conPoly[i], 0.02 * peri, true);

// Prototype: approximates a contour with a polygon that has fewer vertices
void approxPolyDP(InputArray curve, OutputArray approxCurve, double epsilon, bool closed);
```

The first parameter, InputArray curve: the input set of points (here, a contour).

The second parameter, OutputArray approxCurve: the output, i.e. the vertices of the approximating polygon. Its type matches the type of the input curve.

The third parameter, double epsilon: the approximation accuracy, i.e. the maximum allowed distance between the original curve and its approximation. Here it is computed from the perimeter: 2% of the contour length (0.02 * peri).

The fourth parameter, bool closed: if true, the approximated curve is closed (its first and last vertices are connected); if false, it is open.
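approxPolyDP is based on the Douglas-Peucker algorithm: find the point farthest from the line joining the two ends; if it is farther than epsilon, keep it and recurse on both halves, otherwise drop everything in between. The sketch below is a minimal recursive version for an open polyline only (OpenCV's handling of closed curves is more involved and is not reproduced); Pt2, pointLineDistance, rdpRange and rdp are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 2D point with double coordinates.
struct Pt2 {
    double x;
    double y;
};

// Perpendicular distance from p to the line through a and b.
double pointLineDistance(const Pt2& p, const Pt2& a, const Pt2& b) {
    double dx = b.x - a.x, dy = b.y - a.y;
    double len = std::hypot(dx, dy);
    if (len == 0.0) {
        return std::hypot(p.x - a.x, p.y - a.y);  // a and b coincide
    }
    return std::fabs(dx * (p.y - a.y) - dy * (p.x - a.x)) / len;  // |cross| / |ab|
}

// Douglas-Peucker on pts[first..last]; appends kept points except pts[last].
void rdpRange(const std::vector<Pt2>& pts, std::size_t first, std::size_t last,
              double epsilon, std::vector<Pt2>& out) {
    double maxDist = 0.0;
    std::size_t index = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        double d = pointLineDistance(pts[i], pts[first], pts[last]);
        if (d > maxDist) {
            maxDist = d;
            index = i;
        }
    }
    if (maxDist > epsilon) {
        rdpRange(pts, first, index, epsilon, out);  // farthest point is kept:
        rdpRange(pts, index, last, epsilon, out);   // split there and recurse
    } else {
        out.push_back(pts[first]);  // all inner points are within epsilon
    }
}

// Simplify an open polyline; both end points are always kept.
std::vector<Pt2> rdp(const std::vector<Pt2>& pts, double epsilon) {
    if (pts.size() < 3) {
        return pts;
    }
    std::vector<Pt2> out;
    rdpRange(pts, 0, pts.size() - 1, epsilon, out);
    out.push_back(pts.back());
    return out;
}
```

With epsilon = 0.1, the slightly wobbly L-shape (0, 0), (1, 0.05), (2, 0), (2, 1), (2, 2) reduces to its three corners (0, 0), (2, 0), (2, 2).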

```cpp
double arcLength(InputArray curve, bool closed);   // computes a contour perimeter or curve length
```

InputArray curve: the input vector of 2D points (contour vertices), stored in a std::vector or a Mat.

bool closed: a flag indicating whether the curve is closed. For contours it is usually set to true.
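As a rough illustration of what arcLength measures, the sketch below sums the segment lengths of a polyline and, when closed is true, adds the segment from the last point back to the first. Pt2 and polylineLength are hypothetical names; this is a stand-in, not OpenCV's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 2D point with double coordinates.
struct Pt2 {
    double x;
    double y;
};

// Hypothetical helper: total length of a polyline; `closed` plays the
// same role as arcLength's closed flag.
double polylineLength(const std::vector<Pt2>& pts, bool closed) {
    double length = 0.0;
    for (std::size_t i = 1; i < pts.size(); ++i) {
        length += std::hypot(pts[i].x - pts[i - 1].x, pts[i].y - pts[i - 1].y);
    }
    if (closed && pts.size() > 1) {
        // Closing segment: last point back to the first.
        length += std::hypot(pts.front().x - pts.back().x, pts.front().y - pts.back().y);
    }
    return length;
}
```

For a 10×10 square the closed length is 40 and the open length is 30. With the 0.02 factor used in this project, a 40-pixel perimeter would give approxPolyDP an epsilon of 0.8 pixels.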

3. Hands-on implementation

Step 1: Image preprocessing

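```cpp
// Grayscale -> Gaussian blur -> Canny edges -> dilation
Mat preProcessing(Mat img)
{
    Mat imgGray, imgBlur, imgCanny, imgDil;
    cvtColor(img, imgGray, COLOR_BGR2GRAY);             // 3-channel BGR to 1-channel gray
    GaussianBlur(imgGray, imgBlur, Size(3, 3), 3, 0);    // 3x3 kernel, sigmaX = 3 (sigmaY = 0: same as sigmaX)
    Canny(imgBlur, imgCanny, 25, 75);                    // hysteresis thresholds 25 and 75
    Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3));
    dilate(imgCanny, imgDil, kernel);                    // thicken edges and close small gaps
    //erode(imgDil, imgErode, kernel);
    return imgDil;
}
```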
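The effect of the final dilate call can be seen on a tiny binary grid. The sketch below is a plain C++ stand-in for dilate with a 3×3 rectangular kernel, not OpenCV's implementation: each output pixel takes the maximum of its 3×3 neighbourhood (pixels outside the grid are simply skipped), so a single edge pixel grows into a 3×3 block and small gaps between Canny edge fragments close up. Grid and dilate3x3 are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical 8-bit single-channel image stored row by row.
using Grid = std::vector<std::vector<std::uint8_t>>;

// Hypothetical helper: dilation with a 3x3 rectangular kernel.
// Each output pixel is the maximum of the input pixels around it.
Grid dilate3x3(const Grid& in) {
    const int rows = static_cast<int>(in.size());
    const int cols = rows > 0 ? static_cast<int>(in[0].size()) : 0;
    Grid out(rows, std::vector<std::uint8_t>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            std::uint8_t best = 0;
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    int rr = r + dr, cc = c + dc;
                    if (rr >= 0 && rr < rows && cc >= 0 && cc < cols) {
                        best = std::max(best, in[rr][cc]);  // neighbour inside the grid
                    }
                }
            }
            out[r][c] = best;
        }
    }
    return out;
}
```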

Step 2: Find the image contours

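```cpp
vector<vector<Point>> contours;   // one point list per contour
vector<Vec4i> hierarchy;          // [next, previous, first child, parent] for each contour
// image is the dilated edge map returned by preProcessing
findContours(image, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
```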

Step 3: Extract and mark the document edges

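```cpp
// Return the four corners of the largest 4-sided contour (empty if none is found)
vector<Point> getContours(Mat image)
{
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(image, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

    vector<vector<Point>> conPoly(contours.size());
    vector<Point> biggest;
    double maxArea = 0;

    for (size_t i = 0; i < contours.size(); i++)
    {
        double area = contourArea(contours[i]);
        if (area > 1000)   // skip small noise contours
        {
            double peri = arcLength(contours[i], true);
            approxPolyDP(contours[i], conPoly[i], 0.02 * peri, true);
            // keep the largest contour whose approximation is a quadrilateral
            if (area > maxArea && conPoly[i].size() == 4)
            {
                biggest = conPoly[i];
                maxArea = area;
            }
        }
    }
    return biggest;
}
```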
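The selection rule above (area above 1000, exactly four vertices, largest area wins) can be checked without OpenCV. The sketch below computes polygon area with the shoelace formula, the polygon counterpart of what contourArea computes, and then picks the biggest quadrilateral. Pt, polygonArea and biggestQuad are hypothetical names. One simplification to note: this sketch measures the area of the simplified polygon, whereas the code above measures the raw contour; the two should be close when the approximation is good.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point.
struct Pt {
    int x;
    int y;
};

// Hypothetical helper: polygon area by the shoelace formula (absolute value).
double polygonArea(const std::vector<Pt>& poly) {
    double twiceArea = 0.0;
    const std::size_t n = poly.size();
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& a = poly[i];
        const Pt& b = poly[(i + 1) % n];  // wrap around to close the polygon
        twiceArea += static_cast<double>(a.x) * b.y - static_cast<double>(b.x) * a.y;
    }
    return std::fabs(twiceArea) / 2.0;
}

// Hypothetical helper: among simplified polygons, return the largest one with
// exactly four vertices and an area above minArea; empty if none qualifies.
std::vector<Pt> biggestQuad(const std::vector<std::vector<Pt>>& polys, double minArea) {
    std::vector<Pt> biggest;
    double maxArea = 0.0;
    for (const std::vector<Pt>& poly : polys) {
        double area = polygonArea(poly);
        if (area > minArea && poly.size() == 4 && area > maxArea) {
            biggest = poly;
            maxArea = area;
        }
    }
    return biggest;
}
```

Given a 10×10 square (area 100), a 100×50 rectangle (area 5000) and a large triangle (area 20000), biggestQuad with minArea = 1000 returns the rectangle: the square is too small and the triangle has only three vertices.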

Step 4: Reorder the corner points

```cpp
// Order the corners as top-left, top-right, bottom-left, bottom-right
// (needs <algorithm> for min_element / max_element)
vector<Point> reorder(const vector<Point>& points)
{
    vector<Point> newPoints;
    vector<int> sumPoints, subPoints;
    for (int i = 0; i < 4; i++)
    {
        sumPoints.push_back(points[i].x + points[i].y);
        subPoints.push_back(points[i].x - points[i].y);
    }
    newPoints.push_back(points[min_element(sumPoints.begin(), sumPoints.end()) - sumPoints.begin()]); // 0: top-left
    newPoints.push_back(points[max_element(subPoints.begin(), subPoints.end()) - subPoints.begin()]); // 1: top-right
    newPoints.push_back(points[min_element(subPoints.begin(), subPoints.end()) - subPoints.begin()]); // 2: bottom-left
    newPoints.push_back(points[max_element(sumPoints.begin(), sumPoints.end()) - sumPoints.begin()]); // 3: bottom-right
    return newPoints;
}
```

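Here push_back appends an element to the end of a vector, and min_element and max_element return iterators to the smallest and largest elements respectively; subtracting begin() turns the iterator into an index. The top-left corner has the smallest x + y and the bottom-right the largest x + y, while the top-right corner has the largest x - y and the bottom-left the smallest x - y.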
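The sum/difference trick can be tested on its own. The sketch below mirrors reorder() with the standard library only, so a shuffled set of corners can be checked without OpenCV; Pt and reorderCorners are hypothetical names. For the corners (10, 10), (400, 20), (15, 500), (390, 480), the sums are 20, 420, 515, 870 and the differences are 0, 380, -485, -90.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point.
struct Pt {
    int x;
    int y;
};

// Hypothetical helper mirroring reorder(): returns the corners in the order
// top-left, top-right, bottom-left, bottom-right.
std::vector<Pt> reorderCorners(const std::vector<Pt>& points) {
    assert(points.size() == 4);
    std::vector<int> sums, diffs;
    for (const Pt& p : points) {
        sums.push_back(p.x + p.y);   // smallest at top-left, largest at bottom-right
        diffs.push_back(p.x - p.y);  // largest at top-right, smallest at bottom-left
    }
    auto argMin = [](const std::vector<int>& v) {
        return static_cast<std::size_t>(std::min_element(v.begin(), v.end()) - v.begin());
    };
    auto argMax = [](const std::vector<int>& v) {
        return static_cast<std::size_t>(std::max_element(v.begin(), v.end()) - v.begin());
    };
    return {points[argMin(sums)], points[argMax(diffs)], points[argMin(diffs)], points[argMax(sums)]};
}
```

The trick assumes the page is roughly upright. For a page turned about 45°, for example corners (200, 0), (400, 200), (200, 400), (0, 200), two corners share the same sum (200) and the assignment becomes ambiguous.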

Step 5: Warp and crop the edges

First, compute the perspective-warped image:

```cpp
// Map the four ordered corners onto an upright w x h rectangle
Mat getWarp(Mat img, vector<Point> points, float w, float h)
{
    Mat imgWarp;
    Point2f src[4] = { points[0], points[1], points[2], points[3] };  // corners in the photo
    Point2f dst[4] = { {0.0f, 0.0f}, {w, 0.0f}, {0.0f, h}, {w, h} };    // corners in the output
    Mat matrix = getPerspectiveTransform(src, dst);                    // 3x3 perspective matrix
    warpPerspective(img, imgWarp, matrix, Size(static_cast<int>(w), static_cast<int>(h)));  // output is w x h pixels
    return imgWarp;
}
```
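getPerspectiveTransform returns the 3×3 matrix H, with its bottom-right entry fixed at 1, that maps the four source corners onto the four destination corners. Each point pair gives two linear equations in the remaining eight unknowns, so four pairs give an 8×8 system. The sketch below solves that system with plain Gauss-Jordan elimination so it runs without OpenCV; P2, Mat3, perspectiveFrom4 and applyPerspective are hypothetical names, and OpenCV's own solver is implemented differently.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

// Hypothetical stand-ins for Point2f and a row-major 3x3 matrix.
struct P2 {
    double x;
    double y;
};
using Mat3 = std::array<double, 9>;

// Hypothetical helper: find H (H[8] = 1) mapping each src[i] to dst[i].
// For a pair (x, y) -> (u, v):
//   u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1)
//   v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1)
// Multiplying out gives two linear equations per pair, 8 in total.
Mat3 perspectiveFrom4(const std::array<P2, 4>& src, const std::array<P2, 4>& dst) {
    double a[8][9] = {};  // augmented matrix [A | b]
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        const double rowU[9] = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
        const double rowV[9] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
        for (int j = 0; j < 9; ++j) {
            a[2 * i][j] = rowU[j];
            a[2 * i + 1][j] = rowV[j];
        }
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r) {
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) {
                pivot = r;
            }
        }
        assert(std::fabs(a[pivot][col]) > 1e-12 && "degenerate corner configuration");
        for (int j = 0; j < 9; ++j) {
            std::swap(a[col][j], a[pivot][j]);
        }
        for (int r = 0; r < 8; ++r) {
            if (r != col) {
                const double f = a[r][col] / a[col][col];
                for (int j = col; j < 9; ++j) {
                    a[r][j] -= f * a[col][j];
                }
            }
        }
    }
    Mat3 h{};
    for (int i = 0; i < 8; ++i) {
        h[i] = a[i][8] / a[i][i];  // the system is now diagonal
    }
    h[8] = 1.0;
    return h;
}

// Apply H to a point, including the division by the third coordinate.
P2 applyPerspective(const Mat3& h, const P2& p) {
    const double w = h[6] * p.x + h[7] * p.y + h[8];
    return {(h[0] * p.x + h[1] * p.y + h[2]) / w, (h[3] * p.x + h[4] * p.y + h[5]) / w};
}
```

Using the 420 × 596 output size from this project and an example set of skewed corners, applyPerspective sends each source corner onto its destination corner, in the same top-left, top-right, bottom-left, bottom-right order as dst in getWarp.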

The warped image looks like this:

A few defects remain along the border, so a small margin is cropped off each side:


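```cpp
int cropVal = 5;   // pixels to trim from each side
Rect roi(cropVal, cropVal, static_cast<int>(w) - 2 * cropVal, static_cast<int>(h) - 2 * cropVal);
imgCrop = imgWarp(roi);   // a view into imgWarp; no pixels are copied
```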
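The crop rectangle is simple arithmetic, but it must stay inside the warped image. The sketch below computes the inner region for a w × h image and a margin; RectI and innerRoi are hypothetical names, with RectI standing in for cv::Rect (top-left corner plus width and height).

```cpp
#include <cassert>

// Hypothetical stand-in for cv::Rect: top-left corner plus size, in pixels.
struct RectI {
    int x;
    int y;
    int width;
    int height;
};

// Hypothetical helper: shrink a w x h image region by `margin` pixels on every side.
RectI innerRoi(int w, int h, int margin) {
    assert(margin >= 0 && 2 * margin < w && 2 * margin < h);  // ROI must stay non-empty
    return {margin, margin, w - 2 * margin, h - 2 * margin};
}
```

With the values used here (w = 420, h = 596, cropVal = 5) the ROI starts at (5, 5) and is 410 × 586 pixels, so it ends 5 pixels before the right and bottom edges.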

The final result is shown below:

The final code is as follows:


```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <algorithm>
#include <iostream>
#include <string>
#include <vector>

using namespace cv;
using namespace std;

/// Project 2 - Document Scanner ///

Mat imgOriginal, imgGray, imgBlur, imgCanny, imgThre, imgDil, imgErode, imgWarp, imgCrop;
vector<Point> initialPoints, docPoints;
float w = 420, h = 596;   // output size in pixels (roughly the A4 aspect ratio)

// Step 1: grayscale -> Gaussian blur -> Canny edges -> dilation
Mat preProcessing(Mat img)
{
    cvtColor(img, imgGray, COLOR_BGR2GRAY);
    GaussianBlur(imgGray, imgBlur, Size(3, 3), 3, 0);
    Canny(imgBlur, imgCanny, 25, 75);
    Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3));
    dilate(imgCanny, imgDil, kernel);
    //erode(imgDil, imgErode, kernel);
    return imgDil;
}

// Step 2: corners of the largest 4-sided contour (empty if none is found)
vector<Point> getContours(Mat image)
{
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(image, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
    //drawContours(imgOriginal, contours, -1, Scalar(255, 0, 255), 2);

    vector<vector<Point>> conPoly(contours.size());
    vector<Point> biggest;
    double maxArea = 0;

    for (size_t i = 0; i < contours.size(); i++)
    {
        double area = contourArea(contours[i]);
        if (area > 1000)   // skip small noise contours
        {
            double peri = arcLength(contours[i], true);
            approxPolyDP(contours[i], conPoly[i], 0.02 * peri, true);
            if (area > maxArea && conPoly[i].size() == 4)
            {
                //drawContours(imgOriginal, conPoly, (int)i, Scalar(255, 0, 255), 5);
                biggest = conPoly[i];
                maxArea = area;
            }
        }
    }
    return biggest;
}

// Debug helper: draw and number the given points on the original image
void drawPoints(const vector<Point>& points, Scalar color)
{
    for (size_t i = 0; i < points.size(); i++)
    {
        circle(imgOriginal, points[i], 10, color, FILLED);
        putText(imgOriginal, to_string(i), points[i], FONT_HERSHEY_PLAIN, 4, color, 4);
    }
}

// Order the corners as top-left, top-right, bottom-left, bottom-right
vector<Point> reorder(const vector<Point>& points)
{
    vector<Point> newPoints;
    vector<int> sumPoints, subPoints;
    for (int i = 0; i < 4; i++)
    {
        sumPoints.push_back(points[i].x + points[i].y);
        subPoints.push_back(points[i].x - points[i].y);
    }
    newPoints.push_back(points[min_element(sumPoints.begin(), sumPoints.end()) - sumPoints.begin()]); // 0: top-left
    newPoints.push_back(points[max_element(subPoints.begin(), subPoints.end()) - subPoints.begin()]); // 1: top-right
    newPoints.push_back(points[min_element(subPoints.begin(), subPoints.end()) - subPoints.begin()]); // 2: bottom-left
    newPoints.push_back(points[max_element(sumPoints.begin(), sumPoints.end()) - sumPoints.begin()]); // 3: bottom-right
    return newPoints;
}

// Step 3: warp the four corners onto an upright w x h rectangle
Mat getWarp(Mat img, vector<Point> points, float w, float h)
{
    Point2f src[4] = { points[0], points[1], points[2], points[3] };
    Point2f dst[4] = { {0.0f, 0.0f}, {w, 0.0f}, {0.0f, h}, {w, h} };
    Mat matrix = getPerspectiveTransform(src, dst);
    warpPerspective(img, imgWarp, matrix, Size(static_cast<int>(w), static_cast<int>(h)));
    return imgWarp;
}

int main()
{
    string path = "resources/paper.jpg";
    imgOriginal = imread(path);
    if (imgOriginal.empty())
    {
        cerr << "Could not read " << path << endl;
        return 1;
    }
    resize(imgOriginal, imgOriginal, Size(), 0.5, 0.5);

    // Preprocessing - Step 1
    imgThre = preProcessing(imgOriginal);

    // Get Contours - Biggest - Step 2
    initialPoints = getContours(imgThre);
    if (initialPoints.size() != 4)
    {
        cerr << "No document outline found" << endl;
        return 1;
    }
    //drawPoints(initialPoints, Scalar(0, 0, 255));
    docPoints = reorder(initialPoints);
    //drawPoints(docPoints, Scalar(0, 255, 0));

    // Warp - Step 3
    imgWarp = getWarp(imgOriginal, docPoints, w, h);

    // Crop - Step 4
    int cropVal = 5;
    Rect roi(cropVal, cropVal, static_cast<int>(w) - 2 * cropVal, static_cast<int>(h) - 2 * cropVal);
    imgCrop = imgWarp(roi);

    imshow("Image", imgOriginal);
    //imshow("Image Dilation", imgThre);
    imshow("Image Warp", imgWarp);
    imshow("Image Crop", imgCrop);
    waitKey(0);
    return 0;
}
```