Reference link: mainly based on the Chinese version of scikit learn (sklearn) official documents: https://sklearn.apachecn.org/#/

7 text feature extraction methods: http://blog.sina.com.cn/s/blog_b8effd230102zu8f.html

Train of sklearn_ test_ Explanation of the meaning of the parameters of split() function (very complete): https://www.cnblogs.com/Yanjy-OnlyOne/p/11288098.html

1 Introduction

It is mainly about the use of some API s. You can see the details machine learning I sorted out all the contents of this article. They correspond to each other

You can try the case of iris first

Of course, you need to download the library first

In order of use

2 load dataset

Some data sets built into sklearn

3 partition test set training set

from sklearn.model_selection import train_test_split X_train, X_test, y_train, y_test =sklearn.model_selection.train_test_split( train_data, train_target, test_size=0.4, random_state=0, stratify=y_train) train_x, test_x, train_y, test_y = train_test_split(x, y, train_size=0.7, random_state=0)

train_data: Sample feature set to be divided train_target: Sample results to be divided test_size: Sample proportion, if it is an integer, is the number of samples random_state: Is the seed of a random number. Random number seed: in fact, it is the number of this group of random numbers. When it is necessary to repeat the test, it is guaranteed to get a group of the same random numbers. For example, if you fill in 1 every time, the random array you get is the same when other parameters are the same. But filling in 0 or not will be different every time. stratify To keep split Distribution of former classes. For example, there are 100 data and 80 belong to A Class, 20 belong to B Class. If train_test_split(... test_size=0.25, stratify = y_all), that split After that, the data are as follows: training: 75 Data, 60 of which belong to A Class, 15 belong to B Class. testing: 25 Data, of which 20 belong to A Class, 5 of B Class. Yes stratify Parameters, training Set sum testing The proportion of classes in the set is A: B= 4: 1,Equivalent to split The proportion before (80:20). It is usually used when the distribution of this kind is unbalanced stratify. take stratify=X Just according to X Proportional distribution in take stratify=y Just according to y Proportional distribution in To sum up, the settings and types of each parameter are as follows:

4 feature extraction

Module sklearn.feature_extraction can be used to extract features that meet the support of machine learning algorithms, such as text and pictures.

4.1 General

vectorizer.fit() vectorizer.transform() vectorizer.fit_transform(measurements).toarray() >>>array([[ 1., 0., 0., 33.], [ 0., 1., 0., 12.], [ 0., 0., 1., 18.]]) count_vectorizer.fit_transform()The result is a sparse matrix. If you want to get a matrix with dense representation of normal two-dimensional data, you need to use x_ctv.toarray(). Note that sparse matrices cannot be sliced, for example x_ctv[1][2]. Note: use tfidf_vectorizer.fit_transformer()Enter as a numpy.array,Shape is(n_samples, n_features). Because the input settings of the two methods are different, for CountVectorizer and TfidfVectorizer As long as it is iterable(Iterative) is enough. According to the settings, TfidfTransformer Will be CountVectorizer As input. vectorizer.get_feature_names() Return a list,Names of all features

4.2 loading features from dictionary types

from sklearn.feature_extraction import DictVectorizer vec = DictVectorizer()

4.3 text feature extraction

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer count_vectorizer= CountVectorizer()

4.3.1CountVectorizer

CountVectorizer counts the number of words and uses the sparse matrix of the number of words to represent the characteristics of the text. It will count all the words that appear and how many times each word appears. The last sparse matrix column is the number of words (each word is a feature / dimension)

The CountVectorizer here uses the default parameters, mainly:

(1)ngram_range=(min_num_words,max_num_words). Where x and y are numbers, i.e. n-ary syntax. (granularity of words)

(2)stop_words = stop_words. Where, stop_words is the list read from the stop word file, one stop word per line.

(3)max_features = n. Where n is the number of vocabularies. Indicates the number of TOP n words in descending order according to word frequency. (number of selected eigenvalues)

4.3.2TfidfVectorizer

Like CountVectorizer, tfidfvector extracts the TFIDF value corresponding to each effective word in a text. At the same time, each text feature vector will be normalized automatically.

tfidf_vectorizer = TfidfVectorizer(analyzer='word', ngram_range=(1,4), max_features=10000)

The main parameters are similar to CounterVectorizer

5 model training classifier

5.1 general

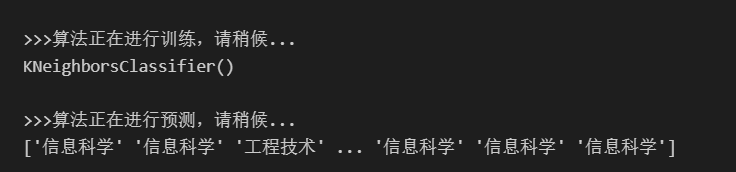

print('\n>>>Please wait while the algorithm is being trained...')

model.fit(X, y) # X is the feature training set and y is the target training set (x should be two-dimensional and y one-dimensional)

print(model)

print('\n>>>Please wait while the algorithm predicts...')

y_pred_model = model.predict(X_test) # X_test is a characteristic test set, and the target predicted value of the test set is predicted according to the characteristics of the test set

print(y_pred_model)

The effect is as follows

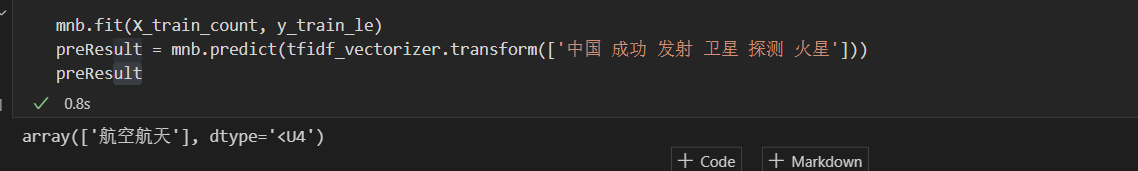

It can also be like this

Pay attention to the processing of eigenvalues

Pay attention to the processing of eigenvalues

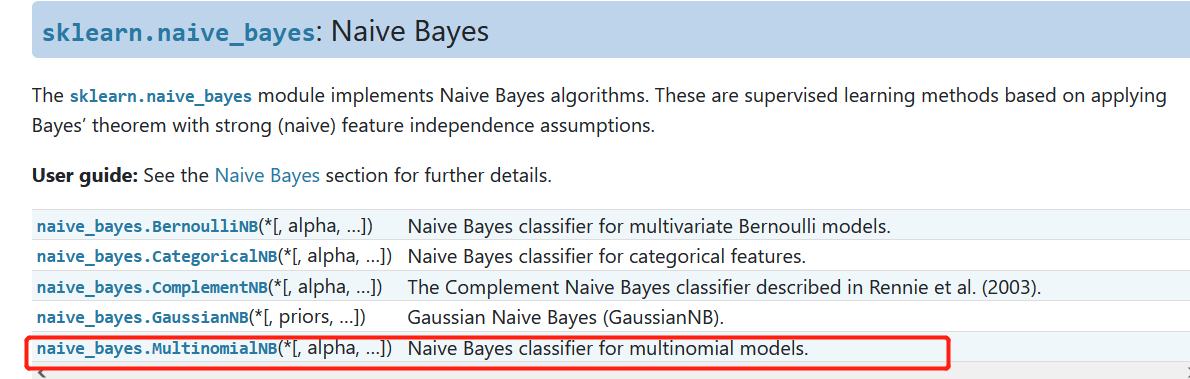

5.2 naive Bayes

from sklearn.naive_bayes import MultinomialNB, BernoulliNB, ... mnb = MultinomialNB(alpha = 1) # alpha Laplace smoothing coefficient mnb.fit(X_train, y_train) mnb.predict(X_test)