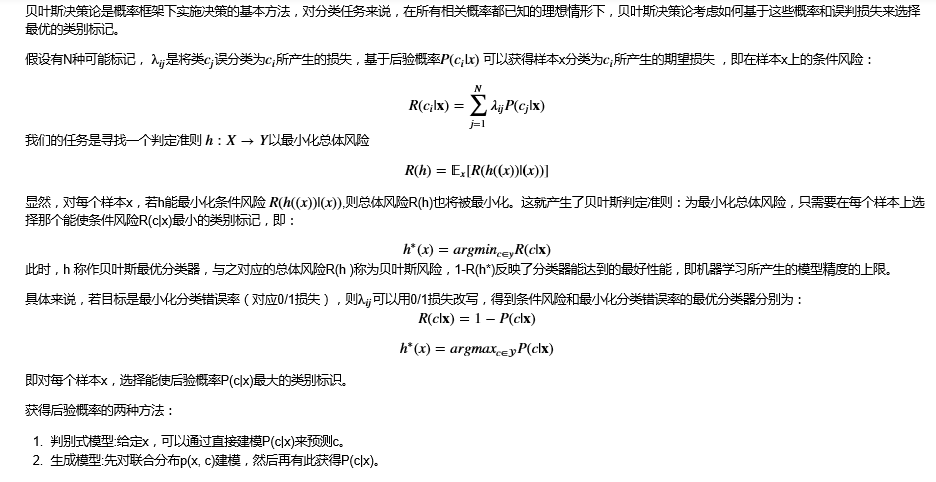

Bayesian Theory:

Bayesian python implementation:

from sklearn.naive_bayes import GaussianNB from sklearn.datasets import load_iris import pandas as pd import numpy as np from sklearn.model_selection import train_test_split iris = load_iris() X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.2) clf = GaussianNB().fit(X_train, y_train) print ("Classifier Score:", clf.score(X_test, y_test))

import math class NaiveBayes: def __init__(self): self.model = None # Mathematical expectation @staticmethod def mean(X): """Calculated mean value Param: X : list or np.ndarray Return: avg : float """ avg = 0.0 # ========= show me your code ================== avg = np.mean(X) # ========= show me your code ================== return avg # Standard deviation (variance) def stdev(self, X): """Calculate standard deviation Param: X : list or np.ndarray Return: res : float """ res = 0.0 # ========= show me your code ================== res = math.sqrt(np.mean(np.square(X-self.mean(X)))) # ========= show me your code ================== return res # probability density function def gaussian_probability(self, x, mean, stdev): """Calculation based on mean value and marked difference x Sign the probability of the Gaussian distribution Parameters: ---------- x : input mean : mean value stdev : standard deviation Return: res : float, x Probability of compliance """ res = 0.0 # ========= show me your code ================== exp = math.exp(-math.pow(x - mean, 2) / 2 * math.pow(stdev, 2)) res = (1 / (math.sqrt(2 * math.pi) * stdev)) * exp # ========= show me your code ================== return res # Process x? Train def summarize(self, train_data): """Calculate the mean value and standard deviation of corresponding data under each category Param: train_data : list Return : [mean, stdev] """ summaries = [0.0, 0.0] # ========= show me your code ================== summaries = [(self.mean(i), self.stdev(i)) for i in zip(*train_data)] # ========= show me your code ================== return summaries # Find mathematical expectation and standard deviation by classification def fit(self, X, y): labels = list(set(y)) data = {label: [] for label in labels} for f, label in zip(X, y): data[label].append(f) self.model = { label: self.summarize(value) for label, value in data.items() } return 'gaussianNB train done!' # Computing probability def calculate_probabilities(self, input_data): """Calculate the probability of data under each Gaussian distribution Paramter: input_data : Input data Return: probabilities : {label : p} """ # summaries:{0.0: [(5.0, 0.37),(3.42, 0.40)], 1.0: [(5.8, 0.449),(2.7, 0.27)]} # input_data:[1.1, 2.2] probabilities = {} # ========= show me your code ================== for label, value in self.model.items(): probabilities[label] = 1 # here for i in range(len(value)): mean, stdev = value[i] probabilities[label] *= self.gaussian_probability(input_data[i], mean, stdev) # ========= show me your code ================== return probabilities # category def predict(self, X_test): # {0.0: 2.9680340789325763e-27, 1.0: 3.5749783019849535e-26} label = sorted(self.calculate_probabilities(X_test).items(), key=lambda x: x[-1])[-1][0] return label # Calculated score def score(self, X_test, y_test): right = 0 for X, y in zip(X_test, y_test): label = self.predict(X) if label == y: right += 1 return right / float(len(X_test))

Advantages and disadvantages

Advantage

- Naive Bayesian model has stable classification efficiency.

- It has good performance for small-scale data, can handle multi classification tasks, and is suitable for incremental training, especially when the amount of data exceeds the memory, it can be a batch of incremental training.

- It is not sensitive to missing data, and its algorithm is simple, which is often used in text classification.

Disadvantages:

- In theory, naive Bayesian model has the smallest error rate compared with other classification methods. But in fact, it is not always the case, because when the naive Bayesian model gives the output category, it assumes that the attributes are independent from each other, which is often not true in practical application. When the number of attributes is relatively large or the correlation between attributes is large, the classification effect is not good. However, when the correlation of attributes is small, naive Bayes has the best performance. For this point, some algorithms such as semi naive Bayes are improved by considering partial relevance.

- It is necessary to know the prior probability, and the prior probability depends on the hypothesis a lot of times. There can be many kinds of hypothetical models, so the prediction effect will be poor due to the reason of the hypothetical prior model at some times.

- Because we determine the posterior probability through prior and data, so we can determine the classification, so there is a certain error rate in the classification decision.

- It is sensitive to the expression of input data.

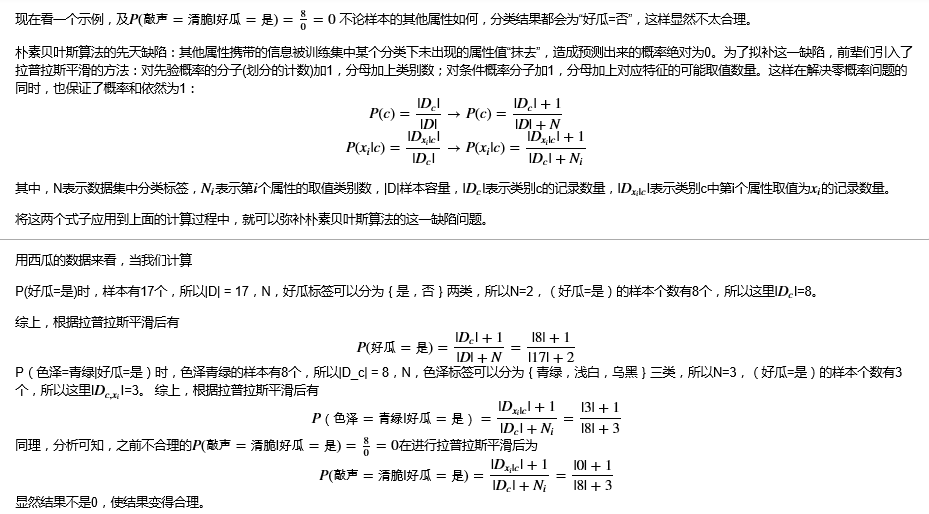

How to solve the problem of zero probability?

The problem of zero probability is that when calculating the probability of an instance, if a certain quantity x does not appear in the observation sample base (training set), the probability result of the whole instance will be 0

In the actual model training process, there may be a problem of zero probability (because the prior probability and the inverse conditional probability are calculated according to the training samples, but the number of training samples is not infinite, so there may be some situations that exist in practice, but not in the training samples, resulting in a probability value of 0, affecting the calculation of the posterior probability). Even if the training can continue to increase Data volume, but for some problems, how to increase data is not enough. At this time, we say that the model is not smooth. One way to make it smooth is to replace the training (learning) method with Bayesian estimation.

reference:

Watermelon book https://samanthachen.github.io/2016/08/05 /% E6% 9C% Ba% E5% 99% A8% E5% ad% A6% E4% B9% a0_5% 91% A8% E5% BF% 97% E5% 8D% 8e_7% AC% 94% E8% AE% B07/

https://www.jianshu.com/p/f1d3906e4a3e

https://zhuanlan.zhihu.com/p/66117273

https://zhuanlan.zhihu.com/p/39780650

https://blog.csdn.net/zrh_CSDN/article/details/81007851