Preface

Concurrent programming is a core topic in Java. How many of the following questions can you answer?

Overview of processes, threads and coroutines

Process: in essence, an independently executing program. The process is the basic unit that the operating system uses for resource allocation and scheduling.

Thread: the smallest unit of execution that the operating system can schedule. A thread lives inside a process and is the unit that actually does the work. A process can run multiple threads concurrently, each performing a different task, and switching between them is controlled by the system.

Coroutine: also known as a micro-thread, it is a lightweight thread in user mode. Unlike threads and processes, a coroutine does not require a context switch in the system kernel. Each coroutine has its own context, and switching is decided by the user program, which is why it is called a lightweight or user-level thread. A thread can host multiple coroutines. Threads and processes are usually described as synchronous mechanisms, while coroutines are asynchronous. Java's native syntax does not provide coroutines; languages such as Python, Lua and Go support them.

Relationship: a process can have multiple threads, and the computer can run two or more programs at the same time. The thread is the smallest execution unit within a process. CPU scheduling switches between processes and threads; as their number grows, scheduling itself consumes a lot of CPU time. What actually runs on a CPU is a thread, and one thread can host multiple coroutines.

Advantages and disadvantages of coroutines compared with multithreading

Advantages:

- Very fast context switching: no kernel context switch is needed, which reduces overhead
- A single thread can achieve high concurrency; a single-core CPU can support tens of thousands of coroutines
- Because there is only one thread, variables are never written at the same time, so shared resources inside the coroutines do not need to be locked

Disadvantages:

- A coroutine cannot use multi-core resources on its own; it is essentially single-threaded
- To run on multiple CPUs, coroutines need to be combined with processes
- At present, Java does not have a mature third-party coroutine library, so adopting one carries risk
- Debugging is difficult, which makes problems harder to track down

The difference between concurrency and parallelism

Concurrency: multiple tasks are handled on one processor over the same period of time. They appear simultaneous, but are actually processed alternately. A program can have two or more threads at once; if the system has only one CPU, it cannot run more than one thread at the same instant, so it divides CPU time into time slices and allocates the slices to the threads in turn.

Parallelism: multiple tasks are processed on multiple CPUs at the same time. While one CPU executes one process, another CPU can execute another process. The two processes do not compete for CPU resources and truly run simultaneously.

In short, concurrency means handling multiple tasks over a period of time when viewed macroscopically, while parallelism means that multiple tasks really run at the same instant.

Several ways to implement multithreading in Java

1. Extend the Thread class

Extend the Thread class, override the run method, create an instance, and call the start method.

Advantages: the code is the simplest to write and the thread can be operated on directly.

Disadvantages: there is no return value, and because Java allows only single inheritance, a class that extends Thread cannot extend any other class, so extensibility is poor.

public class ThreadDemo1 extends Thread {

    @Override
    public void run() {
        System.out.println("Multithreading by extending Thread, name: " + Thread.currentThread().getName());
    }

    public static void main(String[] args) {
        ThreadDemo1 threadDemo1 = new ThreadDemo1();
        threadDemo1.setName("demo1");
        threadDemo1.start();
        System.out.println("Main thread name: " + Thread.currentThread().getName());
    }
}

2. Implement the Runnable interface

A user-defined class implements the Runnable interface and its run method. Create a Thread, pass the Runnable implementation to its constructor, and call the start method.

Advantages: the task class can implement multiple interfaces and can still extend another class.

Disadvantages: there is no return value, and the task cannot be started directly; it has to be started through a Thread instance.

public class ThreadDemo2 implements Runnable {

    @Override
    public void run() {
        System.out.println("Multithreading via Runnable, name: " + Thread.currentThread().getName());
    }

    public static void main(String[] args) {
        ThreadDemo2 threadDemo2 = new ThreadDemo2();
        Thread thread = new Thread(threadDemo2);
        thread.setName("demo2");
        thread.start();
        System.out.println("Main thread name: " + Thread.currentThread().getName());
    }
}

From JDK 8 onward, a lambda expression can be used instead:

public static void main(String[] args) {
    Thread thread = new Thread(() -> {
        System.out.println("Multithreading via Runnable, name: " + Thread.currentThread().getName());
    });
    thread.setName("demo2");
    thread.start();
    System.out.println("Main thread name: " + Thread.currentThread().getName());
}

3. Use Callable and FutureTask

Create a class that implements the Callable interface and its call method, wrap the Callable object in a FutureTask, and pass the FutureTask to a Thread to run it.

Advantages: it has a return value and is highly extensible.

Disadvantages: it is only available from JDK 5 onward, and you have to implement the call method and combine several classes, such as FutureTask and Thread.

import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;

public class MyTask implements Callable<Object> {

    @Override
    public Object call() throws Exception {
        System.out.println("Multithreading via Callable, name: " + Thread.currentThread().getName());
        return "This is the return value";
    }

    public static void main(String[] args) {
        MyTask myTask = new MyTask();
        FutureTask<Object> futureTask = new FutureTask<>(myTask);
        // FutureTask implements Runnable (via RunnableFuture), so it can be passed to a Thread
        Thread thread = new Thread(futureTask);
        thread.setName("demo3");
        thread.start();
        System.out.println("Main thread name: " + Thread.currentThread().getName());
        try {
            // get() blocks until the task has finished
            System.out.println(futureTask.get());
        } catch (InterruptedException e) {
            // Thrown if the waiting thread is interrupted while blocked
            e.printStackTrace();
        } catch (ExecutionException e) {
            // Thrown if the task itself threw an exception
            e.printStackTrace();
        }
    }
}

The same thing with a lambda expression:

public static void main(String[] args) {
    FutureTask<Object> futureTask = new FutureTask<>(() -> {
        System.out.println("Multithreading via Callable, name: " + Thread.currentThread().getName());
        return "This is the return value";
    });
    // FutureTask implements Runnable (via RunnableFuture), so it can be passed to a Thread
    Thread thread = new Thread(futureTask);
    thread.setName("demo3");
    thread.start();
    System.out.println("Main thread name: " + Thread.currentThread().getName());
    try {
        // get() blocks until the task has finished
        System.out.println(futureTask.get());
    } catch (InterruptedException e) {
        // Thrown if the waiting thread is interrupted while blocked
        e.printStackTrace();
    } catch (ExecutionException e) {
        // Thrown if the task itself threw an exception
        e.printStackTrace();
    }
}

4. Create threads through a thread pool

Implement the Runnable interface and its run method, create a thread pool, and pass the object to the pool's execute method.

Advantages: safety, high performance, and thread reuse.

Disadvantages: it is only available from JDK 5 onward and has to be combined with Runnable.

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class ThreadDemo4 implements Runnable {

    @Override
    public void run() {
        System.out.println("Multithreading via thread pool + Runnable, name: " + Thread.currentThread().getName());
    }

    public static void main(String[] args) {
        ExecutorService executorService = Executors.newFixedThreadPool(3);
        for (int i = 0; i < 10; i++) {
            executorService.execute(new ThreadDemo4());
        }
        System.out.println("Main thread name: " + Thread.currentThread().getName());
        // Shut down the pool: already submitted tasks still run, new tasks are rejected
        executorService.shutdown();
    }
}

- In practice, the most commonly used approaches are the second one (Runnable) and the fourth one (thread pool + Runnable): they are simple, easy to extend, and perform well (the idea of pooling)
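Approaches 3 and 4 can also be combined: a thread pool accepts Callable tasks through submit and hands back a Future for each result. The following is a minimal sketch, not part of the original examples; the class name PoolWithCallableSketch and the method sumOfSquares are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical example class: thread pool + Callable with return values.
class PoolWithCallableSketch {

    // Submits n tasks to a fixed pool of 3 threads and sums their results.
    static int sumOfSquares(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(3);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int value = i;
                // The lambda returns a value, so it is treated as a Callable<Integer>
                futures.add(pool.submit(() -> value * value));
            }
            int sum = 0;
            for (Future<Integer> future : futures) {
                sum += future.get(); // blocks until that task has finished
            }
            return sum;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // submitted tasks finish, no new tasks are accepted
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(10));
    }
}
```

Compared with wrapping a FutureTask in a Thread by hand, the pool reuses its three worker threads for all tasks.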

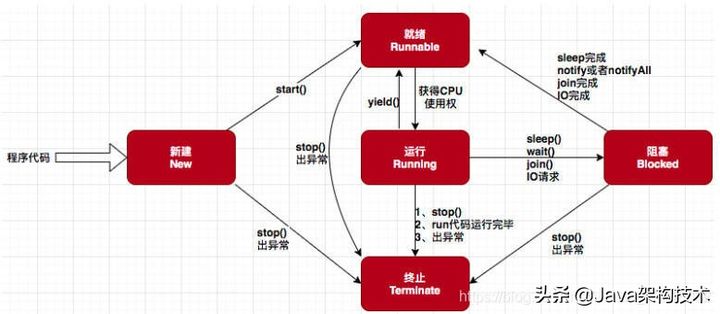

Common basic states of Java threads

There are 6 thread states in the JDK (the Thread.State enum: NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, TERMINATED) and 9 in the JVM.

Five common states

New (NEW): the thread object has been created, but start() has not been called on it.

Ready (Runnable): once start() has been called on the thread object, the thread enters the ready state, but the thread scheduler has not yet made it the current thread, that is, it has not yet been given the CPU. A thread that has been running and comes back from waiting or sleeping also enters the ready state.

Running: the scheduler makes a ready thread the current thread, that is, it is given the CPU. The thread enters the running state and starts executing the logic in run.

Blocked: the thread is temporarily unable to run and is not allocated CPU time. After the blocking ends, the thread re-enters the ready state. There are two cases:

- Waiting blocking: the thread has to wait for another thread to perform a specific action (a notification or an interrupt) and may wait indefinitely until it is woken up. Examples: calling wait or join (the state becomes WAITING), calling sleep (the state becomes TIMED_WAITING), or issuing an I/O request.
- Synchronization blocking: the thread fails to obtain a synchronized lock because the lock is held by another thread.

Note: related material subdivides waiting into two states. WAITING: the thread waits for another thread to perform a specific action (a notification or an interrupt). TIMED_WAITING: unlike WAITING, the thread returns on its own after a specified time.

Terminated: when a thread's run method finishes, the thread dies and cannot enter the ready state again.
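Some of these states can be observed directly through Thread.getState(). The sketch below is an illustration only (the class name ThreadStateSketch and the method observe are hypothetical); it records the state before start(), while the thread is sleeping, and after join().

```java
// Hypothetical example class: observing Thread.State values.
class ThreadStateSketch {

    // Returns the states seen before start(), during sleep, and after termination.
    static Thread.State[] observe() {
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(60_000); // long sleep, cut short by the interrupt below
            } catch (InterruptedException ignored) {
                // interrupted on purpose; fall through and finish
            }
        });
        Thread.State beforeStart = sleeper.getState();

        sleeper.start();
        // Poll until the thread has actually reached its sleep
        while (sleeper.getState() != Thread.State.TIMED_WAITING) {
            Thread.yield();
        }
        Thread.State whileSleeping = sleeper.getState();

        sleeper.interrupt();
        try {
            sleeper.join(); // wait for the thread to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        Thread.State afterJoin = sleeper.getState();

        return new Thread.State[] { beforeStart, whileSleeping, afterJoin };
    }

    public static void main(String[] args) {
        for (Thread.State state : observe()) {
            System.out.println(state);
        }
    }
}
```

Note that Thread.State has no separate "ready" and "running" values; both correspond to RUNNABLE.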

Common methods of multithreading development

sleep

A method of Thread;

Suspends the current thread for the given time before it resumes;

Gives up the CPU but does not release any lock it holds;

The thread enters the TIMED_WAITING state, and becomes ready (Runnable) again when the sleep ends;

yield

A method of Thread;

Pauses the current thread so that other threads can run;

Gives up the CPU without releasing the lock, similar to sleep;

Purpose: lets threads of the same priority take turns, although taking turns is not guaranteed;

Note: the thread does not enter a blocked state; it goes straight back to ready (Runnable) and only needs to be given the CPU again;

join

A method of Thread;

Calling t.join() on the main thread makes the main thread wait, without releasing the object locks it already holds;

The thread on which join is called (t) finishes first, and only then does the calling thread continue;

wait

A method of Object;

When the current thread calls wait on an object (it must hold that object's lock), it releases the lock and enters the object's wait set;

It is woken up by notify or notifyAll, or wakes up automatically when the wait(timeout) time elapses;

notify

A method of Object;

Wakes up a single thread waiting on the object's monitor; which one is chosen is arbitrary;

notifyAll

A method of Object;

Wakes up all threads waiting on the object's monitor;
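As a rough illustration of wait and notifyAll working together, here is a one-slot mailbox. It is a sketch under simple assumptions (one producer, one consumer); the names WaitNotifySketch, put, take and demo are invented for this example.

```java
// Hypothetical example class: a one-slot mailbox built on wait/notifyAll.
class WaitNotifySketch {

    private final Object monitor = new Object();
    private String message; // guarded by monitor

    void put(String newMessage) {
        synchronized (monitor) {
            message = newMessage;
            monitor.notifyAll(); // wake up every thread waiting on monitor
        }
    }

    String take() throws InterruptedException {
        synchronized (monitor) {
            // Re-check the condition in a loop: a wakeup does not prove it holds
            while (message == null) {
                monitor.wait(); // releases the monitor while waiting
            }
            String result = message;
            message = null;
            return result;
        }
    }

    // Starts a producer thread and receives its message on the calling thread.
    static String demo() {
        WaitNotifySketch box = new WaitNotifySketch();
        Thread producer = new Thread(() -> box.put("hello"));
        producer.start();
        try {
            String received = box.take();
            producer.join();
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Both wait and notifyAll are called inside synchronized blocks on the same object; calling them without holding the monitor throws IllegalMonitorStateException.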

State transition diagram of thread

Methods to ensure thread safety in Java

- Locking, such as synchronized / ReentrantLock
- Declaring variables volatile: lightweight synchronization that guarantees visibility but not atomicity
- Using thread-safe classes: the atomic classes AtomicXXX, and concurrent containers such as CopyOnWriteArrayList / ConcurrentHashMap
- ThreadLocal thread-private variables, the Semaphore semaphore, and so on

Parsing volatile keyword

volatile is a lightweight form of synchronization that guarantees the visibility of a shared variable: when the value of a volatile variable changes, the new value is immediately visible to other threads, which avoids dirty reads.

Why do dirty reads occur? The Java Memory Model (JMM) stipulates that all variables live in main memory and that each thread has its own working memory. A thread operates on variables in its working memory and cannot operate on main memory directly.

For a variable declared volatile, every read must fetch the latest value from main memory and every write must be flushed to main memory immediately. A thread therefore always sees the latest value of a volatile variable: if thread 1 modifies variable v, thread 2 sees the change immediately.

volatile: guarantees visibility, but not atomicity.

synchronized: guarantees both visibility and atomicity.
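A typical use of volatile is a stop flag that one thread sets and another thread polls. The sketch below assumes this simple pattern; the class name VolatileFlagSketch and its members are hypothetical.

```java
// Hypothetical example class: stopping a worker through a volatile flag.
class VolatileFlagSketch {

    // volatile makes the write by the main thread visible to the worker
    private volatile boolean running = true;
    private long iterations; // written only by the worker thread

    // Returns true if the worker noticed the flag change and stopped.
    static boolean demo() {
        VolatileFlagSketch state = new VolatileFlagSketch();
        Thread worker = new Thread(() -> {
            while (state.running) {
                state.iterations++;
            }
        });
        worker.start();
        try {
            Thread.sleep(50);       // let the worker spin for a moment
            state.running = false;  // visible to the worker because of volatile
            worker.join(5_000);     // wait up to 5 seconds for it to stop
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + demo());
    }
}
```

Without volatile on running, the worker would be allowed to keep using a stale value and might never leave the loop. The counter iterations++ is safe here only because a single thread writes it; volatile would not make it atomic.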

What is instruction reordering

There are two kinds of instruction reordering: compiler reordering and runtime reordering. The JVM may reorder instructions when it compiles Java code, and the CPU may reorder them when it executes the compiled code. The main purpose is to improve execution efficiency (without changing the program's result).

For example:

int a = 3;         // step 1
int b = 4;         // step 2
int c = 5;         // step 3
int h = a * b * c; // step 4

The code is written in the order 1, 2, 3, 4, but executing it in the order 1, 3, 2, 4 or 2, 1, 3, 4 gives the same result.

What is happens-before and why it is needed

Happens-before: "A happens-before B", written hb(A, B), loosely means that A occurs before B (this wording is not entirely accurate). In the Java memory model, happens-before means that the result of the earlier operation is visible to the later operation. The JVM optimizes code during compilation, which leads to instruction reordering. To keep these optimizations from undermining the safety of concurrent programs, the happens-before rules define situations in which such reordering is prohibited, so that concurrent programs remain correct.

The eight happens-before rules

1. Program order rule: within a single thread, the result of a piece of code is as if it had executed in program order. Instructions may still be reordered, but the result is always the one the written order would produce.
2. Monitor lock rule: for the same lock, in a single-threaded or multi-threaded environment, once one thread has released the lock and another thread acquires it, the second thread can see the results of the first thread's operations. (A monitor is a general synchronization primitive; synchronized is Java's implementation of it.)
3. volatile variable rule: if one thread writes a volatile variable and another thread then reads it, the result of the write is visible to the reading thread.
4. Thread start rule: if main thread A starts child thread B, the modifications A made to shared variables before starting B are visible to B.
5. Thread termination rule: if child thread B terminates while main thread A is running, the modifications B made to shared variables before terminating are visible to A once A has detected the termination. This is also known as the thread join() rule.
6. Thread interruption rule: a call to a thread's interrupt() method happens-before the interrupted thread's code detects the interrupt. Whether an interrupt has occurred can be checked with Thread.interrupted().
7. Transitivity rule: happens-before is transitive, that is, hb(A, B) and hb(B, C) imply hb(A, C).
8. Object finalization rule: the completion of an object's initialization, that is, the end of its constructor, happens-before the start of its finalize() method.
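The thread start rule and the thread termination (join) rule can be seen with plain, non-volatile fields. This is a small sketch for illustration; StartJoinVisibilitySketch and its fields are made-up names.

```java
// Hypothetical example class: visibility through start() and join().
class StartJoinVisibilitySketch {

    // Plain fields, deliberately neither volatile nor guarded by a lock
    private int input;
    private int output;

    static int demo() {
        StartJoinVisibilitySketch shared = new StartJoinVisibilitySketch();

        shared.input = 21; // written before start(): visible to the child (start rule)
        Thread child = new Thread(() -> shared.output = shared.input * 2);
        child.start();

        try {
            child.join(); // the child's writes are visible after join() returns
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shared.output;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

If the main thread read output without calling join(), neither the ordering nor the visibility of the child's write would be guaranteed.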

Three elements of concurrent programming

Atomicity: the word comes from the atom as an indivisible particle. Atomicity means that one or more operations either all execute successfully or all fail; they cannot be interrupted part-way, and no context switch takes place in between. Thread switching is what causes atomicity problems.

int num = 1; // atomic operation
num++;       // not atomic: reads num from main memory into the thread's working memory, adds 1, then writes num back to main memory

One way to make this atomic is to use an atomic variable class from java.util.concurrent.atomic. Another is to use synchronized or a lock (such as ReentrantLock) to "turn" the multi-step operation into an atomic one:

import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class Test {

    private int num = 0;

    // Explicit lock: a thread must acquire it before it can execute the guarded code
    Lock lock = new ReentrantLock();

    public void add1() {
        lock.lock();
        try {
            num++;
        } finally {
            lock.unlock();
        }
    }

    // synchronized achieves the same effect; here the whole method is locked, whereas add1 locks only a code block
    // Note: add1 and add2 use different locks, so use only one of them for a given field
    public synchronized void add2() {
        num++;
    }
}

Core idea of the solution: treat a method or code block as a single, indivisible whole.
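The atomic classes in java.util.concurrent.atomic mentioned above offer a third option that needs no explicit lock. A minimal sketch, with the hypothetical names AtomicCounterSketch and countWith:

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class: a lock-free counter shared by several threads.
class AtomicCounterSketch {

    // Starts the given number of threads; each increments the counter the given number of times.
    static int countWith(int threads, int incrementsPerThread) {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    counter.incrementAndGet(); // atomic read-modify-write
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(countWith(4, 10_000));
    }
}
```

With a plain int and num++ in place of incrementAndGet(), some increments could be lost and the total could come out lower than expected.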

Ordering: the program executes in the order in which the code is written. This needs to be guaranteed explicitly because the processor may reorder instructions.

When the JVM compiles Java code, or when the CPU executes the compiled code, existing instructions may be reordered. The main purpose is to improve execution efficiency (without changing the program's result).

int a = 3;         // step 1
int b = 4;         // step 2
int c = 5;         // step 3
int h = a * b * c; // step 4

In the example above, the execution orders 1, 2, 3, 4 and 2, 1, 3, 4 give the same result. Instruction reordering improves execution efficiency, but with multiple threads it can affect the result. In the following scenario, the normal case is that the statements are processed in order:

// Thread 1
before();     // initialization; run() below may only start after this has completed
flag = true;  // marks the resources as ready; if they are not actually ready, the program may misbehave

// Thread 2
while (flag) {
    run();    // core business code
}

If the instructions are reordered, the order changes and the program misbehaves in a way that is hard to troubleshoot:

// Thread 1
flag = true;  // the resources are marked ready before they actually are

// Thread 2
while (flag) {
    run();    // core business code, now running before initialization has finished
}

// Thread 1 (continued)
before();     // initialization now runs too late

Visibility: a modification that thread A makes to a shared variable can be seen immediately by another thread B.

// Executed by thread A
int num = 0;

// Executed by thread A
num++;

// Executed by thread B
System.out.print("Value of num: " + num);

Thread A executes num++ and then thread B runs. Thread B may print either 0 or 1. Because num++ is performed in thread A's working memory and is not necessarily written back to main memory immediately, thread B may read the stale value from main memory and print 0. It is also possible that thread A has already written the update to main memory, in which case thread B prints 1. Thread visibility therefore has to be ensured explicitly; synchronized, Lock and volatile can all do so.

Common process scheduling algorithms

First come, first served (FCFS): jobs / processes are scheduled in order of arrival, that is, the job that has waited longest in the system goes first. Short processes queued behind long ones wait a long time, so this is unfavorable to short jobs / processes.

Shortest job first (SJF): short processes / jobs (those requiring the least service time) make up a large share of real workloads, so they are executed first. This is unfriendly to long jobs.

Highest response ratio next (HRRN): at each scheduling decision, the priority of every job is calculated first: priority = response ratio = (waiting time + required service time) / required service time. Because waiting time plus service time is the system's response time for the job, priority = response ratio = response time / required service time. The job with the highest priority is selected. Calculating the priorities adds system overhead.

Round-robin (time slice) scheduling: each process is served in turn, so every process gets a response within a bounded interval. Frequent process switching adds overhead, and the urgency of tasks is not taken into account.

Priority scheduling: tasks are scheduled by urgency; high-priority tasks are processed first and low-priority tasks later. If high-priority tasks are numerous and keep arriving, low-priority tasks may be served very late.
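The difference between the first two algorithms, and the response ratio formula, can be worked through with a few numbers. The sketch below assumes, for simplicity, that all jobs arrive at time 0 and run without preemption; the class SchedulingSketch and the service times 24, 3, 3 are invented for the example.

```java
import java.util.Arrays;

// Hypothetical example class: waiting-time arithmetic for simple schedulers.
class SchedulingSketch {

    // Average waiting time when jobs run back to back in the given order.
    static double averageWait(int[] serviceTimes) {
        long elapsed = 0;
        long totalWait = 0;
        for (int serviceTime : serviceTimes) {
            totalWait += elapsed;   // this job waited for everything before it
            elapsed += serviceTime;
        }
        return (double) totalWait / serviceTimes.length;
    }

    // First come, first served: run the jobs in arrival order.
    static double fcfs(int[] arrivalOrder) {
        return averageWait(arrivalOrder);
    }

    // Shortest job first: run the jobs in ascending order of service time.
    static double sjf(int[] arrivalOrder) {
        int[] sorted = arrivalOrder.clone();
        Arrays.sort(sorted);
        return averageWait(sorted);
    }

    // Response ratio = (waiting time + required service time) / required service time.
    static double responseRatio(double waitingTime, double serviceTime) {
        return (waitingTime + serviceTime) / serviceTime;
    }

    public static void main(String[] args) {
        int[] jobs = { 24, 3, 3 };
        System.out.println("FCFS average wait: " + fcfs(jobs));
        System.out.println("SJF average wait: " + sjf(jobs));
        System.out.println("Ratio, short job: " + responseRatio(9, 3));
        System.out.println("Ratio, long job: " + responseRatio(9, 24));
    }
}
```

With these numbers, FCFS makes the two short jobs wait behind the long one, while SJF runs them first. For equal waiting times, the short job has the higher response ratio, and the ratio of any waiting job keeps growing, which is how the response ratio approach avoids starving long jobs.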

Common thread scheduling algorithms

Thread scheduling is the process by which the system assigns the CPU to threads. There are two main approaches:

Cooperative thread scheduling (time-sharing mode): each thread controls its own execution time. When a thread has finished its work, it actively notifies the system to switch to another thread. The biggest advantage is simplicity: the thread itself knows when a switch happens, so there are no thread synchronization problems. The disadvantage is that execution time is uncontrollable: if one thread has a problem, it may block everything indefinitely.

Preemptive thread scheduling: the system allocates execution time to each thread, and switching is not decided by the thread itself (in Java, Thread.yield() can give up execution time, but a thread cannot claim execution time). Execution time is under the system's control, and one thread cannot block the whole process.

Java uses preemptive thread scheduling: threads with higher priority in the runnable pool are given the CPU first, and if threads in the runnable pool have the same priority, one of them is selected at random. So if some threads should get more time and others less, set the thread priority.

Java thread priority is an integer from 1 to 10 (Thread.MIN_PRIORITY to Thread.MAX_PRIORITY). When several threads are runnable, the JVM will generally run the one with the highest priority. When two threads are ready (runnable) at the same time, the one with the higher priority is more likely to be chosen. Priority is not a guarantee, only a greater chance.

Locks commonly used in Java multithreading

Pessimistic lock: when a thread operates on data, it always assumes that other threads will modify the data, so it locks every time it accesses the data, and other threads block when they try to access it. Example: synchronized.

Optimistic lock: every time a thread accesses the data, it assumes that others will not modify it. When it updates, it checks whether anyone else has updated the data in the meantime, typically by comparing a version, and refuses the update if the data has changed. CAS is an optimistic approach; strictly speaking it is not a lock, since it relies on an atomic operation to keep the data consistent. Optimistic locking in databases, for example, is implemented through version control. CAS does not serialize threads; it optimistically assumes that no other thread interferes during the update.

Summary: pessimistic locks suit write-heavy scenarios, optimistic locks suit read-heavy scenarios, where their throughput is higher than that of pessimistic locks.

Fair lock: threads acquire the lock in the order in which they requested it. Put simply, every thread in the group is guaranteed to get the lock eventually. Example: ReentrantLock created in fair mode (implemented underneath with a FIFO, first in first out, synchronization queue).

Unfair lock: the order in which threads acquire the lock is arbitrary, so there is no guarantee that every thread gets it; some threads may starve. Examples: synchronized, and ReentrantLock in its default mode.

Summary: unfair locks perform better than fair locks because they make better use of CPU time.

Reentrant lock: also called a recursive lock. After the lock has been acquired in an outer method, the same thread can acquire it again in an inner method without deadlocking.

Non-reentrant lock: if the current thread has acquired the lock in one method, it will block when it tries to acquire the same lock again inside that method.

Summary: reentrant locks avoid deadlock to a certain extent. synchronized and ReentrantLock are both reentrant.

Spin lock: when a thread tries to acquire a lock that is already held by another thread, it waits in a loop, repeatedly checking whether the lock can be acquired, and only leaves the loop once it has the lock. At most one execution unit can hold the lock at any time.

Summary: a spin lock does not switch the thread's state and stays in user mode, which saves the cost of thread context switches. The disadvantage is that the loop consumes CPU. Common spin locks: TicketLock, CLHLock, MCSLock.

Shared lock: also called S lock / read lock. Data under this lock can be read but not modified or deleted. After locking, other users can read and query the data concurrently, but cannot modify, add or delete it. The lock can be held by multiple threads and is used to share resource data.

Mutex lock: also known as X lock / exclusive lock / write lock. It can be held by only one thread at a time. After locking, any thread that tries to lock again is blocked until the current thread unlocks. Example: if thread A places an exclusive lock on data1, other threads cannot place any type of lock on data1. The thread holding the mutex can both read and modify the data.

Deadlock: a blocking situation in which two or more threads, while executing, wait on each other because they compete for resources or communicate with each other. Without outside intervention, none of them can proceed.

The following three lock types are optimizations made by the JVM to make acquiring and releasing locks more efficient. They form the upgrade path of a synchronized lock; the lock state is recorded in a field of the object header of the monitor object, and the upgrade is irreversible.

Biased lock: if a piece of synchronized code is only ever accessed by one thread, that thread acquires the lock automatically, and the cost of acquiring the lock is lower.

Lightweight lock: when a biased lock is accessed by another thread, it is upgraded to a lightweight lock. Other threads try to obtain the lock by spinning rather than blocking, which keeps performance high.

Heavyweight lock: with a lightweight lock, other threads spin, but not forever. If a thread has spun a certain number of times without obtaining the lock, it blocks and the lock is upgraded to a heavyweight lock. A heavyweight lock makes the other requesting threads block, which reduces performance.

Other lock types: segment lock, row lock and table lock.
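The CAS and spin lock ideas described above can be combined in a few lines. The following is a simplified sketch, not one of the named algorithms (TicketLock, CLHLock, MCSLock); the class SpinLockSketch and its methods are hypothetical, and the lock is neither fair nor reentrant.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical example class: a simple CAS-based spin lock.
class SpinLockSketch {

    // null means "unlocked"; otherwise holds the owning thread
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    void lock() {
        Thread current = Thread.currentThread();
        // CAS: succeeds only if nobody holds the lock; otherwise keep spinning
        while (!owner.compareAndSet(null, current)) {
            Thread.yield(); // busy-wait; the thread is never parked
        }
    }

    void unlock() {
        // Only the owning thread can release the lock
        owner.compareAndSet(Thread.currentThread(), null);
    }

    // Several threads increment a plain int, guarded by the spin lock.
    static int demo(int threads, int incrementsPerThread) {
        SpinLockSketch lock = new SpinLockSketch();
        int[] counter = { 0 };
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    lock.lock();
                    try {
                        counter[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println(demo(4, 5_000));
    }
}
```

Because lock() spins instead of blocking, this only makes sense when the guarded section is very short, as it is here.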

Write an example of multithreaded deadlock

Deadlock: one thread holds lock A and, without releasing it, requests lock B. Meanwhile another thread already holds lock B and needs lock A before it will release lock B. This closes the loop, and both threads wait on each other forever.

public class DeadLockDemo {

    private static String locka = "locka";
    private static String lockb = "lockb";

    public void methodA() {
        synchronized (locka) {
            System.out.println("methodA acquired lock A: " + Thread.currentThread().getName());
            // Give up the CPU without releasing the lock
            try {
                Thread.sleep(2000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            synchronized (lockb) {
                System.out.println("methodA acquired lock B: " + Thread.currentThread().getName());
            }
        }
    }

    public void methodB() {
        synchronized (lockb) {
            System.out.println("methodB acquired lock B: " + Thread.currentThread().getName());
            // Give up the CPU without releasing the lock
            try {
                Thread.sleep(2000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            synchronized (locka) {
                System.out.println("methodB acquired lock A: " + Thread.currentThread().getName());
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("Main thread starts: " + Thread.currentThread().getName());
        DeadLockDemo deadLockDemo = new DeadLockDemo();
        new Thread(() -> {
            deadLockDemo.methodA();
        }).start();
        new Thread(() -> {
            deadLockDemo.methodB();
        }).start();
        System.out.println("Main thread ends: " + Thread.currentThread().getName());
    }
}

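There are two common fixes for the deadlock in the example above:

- Narrow the scope of each lock, so that one lock is released before the next is requested
- Make all threads request the locks in the same order

The following version applies the first fix: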

public class FixDeadLockDemo {

    private static String locka = "locka";
    private static String lockb = "lockb";

    public void methodA() {
        synchronized (locka) {
            System.out.println("methodA acquired lock A: " + Thread.currentThread().getName());
            // Give up the CPU without releasing the lock
            try {
                Thread.sleep(2000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
        // Lock A has been released before lock B is requested
        synchronized (lockb) {
            System.out.println("methodA acquired lock B: " + Thread.currentThread().getName());
        }
    }

    public void methodB() {
        synchronized (lockb) {
            System.out.println("methodB acquired lock B: " + Thread.currentThread().getName());
            // Give up the CPU without releasing the lock
            try {
                Thread.sleep(2000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
        // Lock B has been released before lock A is requested
        synchronized (locka) {
            System.out.println("methodB acquired lock A: " + Thread.currentThread().getName());
        }
    }

    public static void main(String[] args) {
        System.out.println("Main thread starts: " + Thread.currentThread().getName());
        FixDeadLockDemo deadLockDemo = new FixDeadLockDemo();
        for (int i = 0; i < 10; i++) {
            new Thread(() -> {
                deadLockDemo.methodA();
            }).start();
            new Thread(() -> {
                deadLockDemo.methodB();
            }).start();
        }
        System.out.println("Main thread ends: " + Thread.currentThread().getName());
    }
}

Four necessary conditions for deadlock:

- Mutual exclusion: a resource allocated to one process cannot be accessed by other processes. Any other process that wants the resource has to wait until the holder has finished with it and released it
- Hold and wait: a process that already holds some resource requests another resource that may be held by another process. The request blocks, but the process keeps the resources it already holds
- No preemption: a resource a process has obtained cannot be taken away before the process has finished using it; it can only be released by the process itself after use
- Circular wait: once deadlock has occurred, the processes involved form a circular chain, each waiting for a resource held by the next

All four conditions are necessary for deadlock: whenever a system is deadlocked, all of them hold, and as long as any one of them is not satisfied, deadlock cannot occur.
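Requesting locks in a consistent order removes the circular-wait condition. The sketch below illustrates this with two accounts that are always locked in ascending id order; it assumes ids are unique, and the names LockOrderingSketch, Account and transfer are made up for the example.

```java
// Hypothetical example class: avoiding deadlock with a fixed lock order.
class LockOrderingSketch {

    static final class Account {
        final int id;   // assumed unique; used only to order the locks
        int balance;    // guarded by this account's monitor

        Account(int id, int balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Always locks the account with the smaller id first, whatever the direction.
    static void transfer(Account from, Account to, int amount) {
        Account first = from.id < to.id ? from : to;
        Account second = (first == from) ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Two threads transfer in opposite directions; returns the final balances.
    static int[] demo() {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread aToB = new Thread(() -> {
            for (int i = 0; i < 1000; i++) {
                transfer(a, b, 1);
            }
        });
        Thread bToA = new Thread(() -> {
            for (int i = 0; i < 1000; i++) {
                transfer(b, a, 1);
            }
        });
        aToB.start();
        bToA.start();
        try {
            aToB.join();
            bToA.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] { a.balance, b.balance };
    }

    public static void main(String[] args) {
        int[] balances = demo();
        System.out.println(balances[0] + " " + balances[1]);
    }
}
```

If transfer locked from first and to second instead, the two threads could each hold one account and wait for the other, which is exactly the circular wait described above.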

Design a simple example of non reentrant lock

Non-reentrant lock: if the current thread has acquired the lock in one method, it blocks when it tries to acquire the same lock again in another method.

private void methodA() {
    // acquire the lock (TODO)
    methodB();
}

private void methodB() {
    // acquire the same lock again (TODO): with a non-reentrant lock this blocks
    // other operations
}

/**
 * Simple example of a non-reentrant lock.
 *
 * Non-reentrant lock: if the current thread already holds the lock and tries to
 * acquire it again in another method, the second attempt blocks.
 */
public class UnreentrantLock {

    private boolean isLocked = false;

    public synchronized void lock() throws InterruptedException {
        System.out.println("Entering lock(): " + Thread.currentThread().getName());
        // If the lock is already held, the requesting thread waits.
        // The loop re-checks the flag after every wake-up.
        while (isLocked) {
            System.out.println("Entering wait(): " + Thread.currentThread().getName());
            wait();
        }
        // Take the lock
        isLocked = true;
    }

    public synchronized void unlock() {
        System.out.println("Entering unlock(): " + Thread.currentThread().getName());
        isLocked = false;
        // Wake up one thread waiting on this object's monitor
        notify();
    }
}

public class Main {

    private UnreentrantLock unreentrantLock = new UnreentrantLock();

    // Acquire the lock before the try block and release it in finally,
    // so that unlock() runs only when lock() has succeeded
    public void methodA() {
        try {
            unreentrantLock.lock();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        try {
            System.out.println("methodA called");
            methodB();
        } finally {
            unreentrantLock.unlock();
        }
    }

    public void methodB() {
        try {
            unreentrantLock.lock();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        try {
            System.out.println("methodB called");
        } finally {
            unreentrantLock.unlock();
        }
    }

    public static void main(String[] args) {
        // Everything runs on the same (main) thread
        new Main().methodA();
    }
}
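Because the program above never returns, the hang is easier to observe from a second thread. The sketch below is one way to do that, under a few assumptions: SimpleLock is a stripped-down copy of the non-reentrant lock (log output removed), and SelfDeadlockCheck and blocksOnSecondLock are hypothetical names introduced only for this check.

```java
// Hypothetical checker: shows that a second lock() by the same thread never returns.
class SelfDeadlockCheck {

    // Stripped-down copy of the non-reentrant lock (no log output).
    static class SimpleLock {
        private boolean isLocked = false;

        synchronized void lock() throws InterruptedException {
            while (isLocked) {
                wait();
            }
            isLocked = true;
        }

        synchronized void unlock() {
            isLocked = false;
            notify();
        }
    }

    /** Returns true if a thread that calls lock() twice is still blocked after waitMillis. */
    static boolean blocksOnSecondLock(long waitMillis) {
        SimpleLock lock = new SimpleLock();
        Thread worker = new Thread(() -> {
            try {
                lock.lock();
                lock.lock(); // same thread, second acquisition: waits forever
            } catch (InterruptedException e) {
                // interrupted by the checker below; let the thread end
            }
        });
        worker.setDaemon(true); // do not keep the JVM alive if the worker stays stuck
        worker.start();
        try {
            worker.join(waitMillis);           // give the worker time to finish, if it can
            boolean stuck = worker.isAlive();  // still running means it is blocked in wait()
            worker.interrupt();                // release the worker so it can exit
            return stuck;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("blocked on second lock(): " + blocksOnSecondLock(500));
    }
}
```

The worker is marked as a daemon and interrupted at the end so that the check itself always terminates, even though the thread under test would otherwise wait forever.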

//For the same thread, the second lock() call blocks forever and the thread deadlocks on itself, so this lock is non-reentrant

Design a simple example of a reentrant lock

Reentrant lock: also called a recursive lock. A thread that already holds the lock in an outer method can acquire it again in an inner method without deadlocking.
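For comparison, the JDK already ships a reentrant lock, java.util.concurrent.locks.ReentrantLock, and its getHoldCount() method makes the re-entry visible. The short sketch below only illustrates that behaviour; HoldCountDemo and its method names are made up for this example, and the JDK class is not the same as the hand-written ReentrantLock class in this article.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class showing re-entry with the JDK's ReentrantLock.
class HoldCountDemo {

    private final ReentrantLock lock = new ReentrantLock();

    // Outer method: takes the lock, then calls a method that takes it again.
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    // Inner method: re-acquires the same lock and reports the hold count.
    int inner() {
        lock.lock();
        try {
            return lock.getHoldCount(); // number of holds by the current thread
        } finally {
            lock.unlock();
        }
    }

    // Hold count of the calling thread outside any locked region.
    int holdCount() {
        return lock.getHoldCount();
    }

    public static void main(String[] args) {
        HoldCountDemo demo = new HoldCountDemo();
        System.out.println("hold count inside inner(), called via outer(): " + demo.outer());
        System.out.println("hold count after both unlocks: " + demo.holdCount());
    }
}
```

The hold count plays the same role as a per-thread lock counter: the lock becomes available to other threads only when every lock() has been matched by an unlock().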

/**
 * Simple example of a reentrant lock.
 *
 * Reentrant lock: also called a recursive lock. A thread that already holds the lock
 * in an outer method can acquire it again in an inner method without deadlocking.
 */
public class ReentrantLock {

    private boolean isLocked = false;

    // The thread that currently holds the lock; used to detect re-entry
    private Thread lockedOwner = null;

    // Hold count: incremented on every lock(), decremented on every unlock()
    private int lockedCount = 0;

    public synchronized void lock() throws InterruptedException {
        System.out.println("Entering lock(): " + Thread.currentThread().getName());
        Thread thread = Thread.currentThread();
        // Wait only if the lock is held by a different thread (reference comparison)
        while (isLocked && lockedOwner != thread) {
            System.out.println("Entering wait(): " + Thread.currentThread().getName());
            System.out.println("Current lock status isLocked = " + isLocked);
            System.out.println("Current hold count lockedCount = " + lockedCount);
            wait();
        }
        // Take the lock, or re-enter it
        isLocked = true;
        lockedOwner = thread;
        lockedCount++;
    }

    public synchronized void unlock() {
        System.out.println("Entering unlock(): " + Thread.currentThread().getName());
        Thread thread = Thread.currentThread();
        // Only the owning thread may unlock; calls from other threads are ignored
        if (thread == this.lockedOwner) {
            lockedCount--;
            // Release the lock only when every lock() has been matched by an unlock()
            if (lockedCount == 0) {
                isLocked = false;
                lockedOwner = null;
                // Wake up one thread waiting on this object's monitor
                notify();
            }
        }
    }

}

public class Main {

    //private UnreentrantLock unreentrantLock = new UnreentrantLock();

    private ReentrantLock reentrantLock = new ReentrantLock();

    // Acquire the lock before the try block and release it in finally,
    // so that unlock() runs only when lock() has succeeded
    public void methodA() {
        try {
            reentrantLock.lock();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        try {
            System.out.println("methodA called");
            methodB();
        } finally {
            reentrantLock.unlock();
        }
    }

    public void methodB() {
        try {
            reentrantLock.lock();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        try {
            System.out.println("methodB called");
        } finally {
            reentrantLock.unlock();
        }
    }

    public static void main(String[] args) {
        for (int i = 0; i < 10; i++) {
            // Everything runs on the same (main) thread
            new Main().methodA();
        }
    }
}

Additions are welcome!

Finally

Thank you for reading this far. If anything is unclear, feel free to ask in the comments. If you found the article helpful, please give it a like. I share Java-related technical articles and industry news every day, and you are welcome to follow and share!