Importance of logs

Logs play a very important role. They record user operations and program exceptions, and they also provide a basis for data analysis. The main purpose of logging is to record errors that occur while a program runs. This makes maintenance and debugging easier, helps you locate errors quickly, and reduces maintenance costs. Every programmer should understand two things: logging is not done for its own sake, and it should not be done arbitrarily. The goal is to be able to reconstruct the execution of the whole program from the log file alone. You should be able to see transparently what the program did and where each thread and process was executing. Like an aircraft's black box, a log should be able to reconstruct the whole scene, down to the details of any anomaly!

Common logging methods

print()

The most common approach is to use the output function print() as a logger and print various messages directly. This is typical of personal practice projects. Such projects are small, and their authors usually don't bother to configure logging separately. They also don't need stored log information and are not deployed online or run continuously. Printing log messages directly is not recommended for complete projects, and almost no one does so in practice.

Self-written templates

Many small projects use a log template written by the author. Simple log output can usually be achieved by lightly wrapping print() or sys.stdout. sys.stdout is Python's standard output stream, and print() is a higher-level wrapper around it. When you call print(obj), Python effectively calls sys.stdout.write(str(obj) + '\n'): print() converts the object to a string, writes it to the console, and then appends a newline \n.
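The following is a small check of the equivalence described above, using only the standard library. Both calls are captured with contextlib.redirect_stdout and compared. The dictionary is just sample data. Note that str(obj) is required in the manual version: for a non-string object such as a dict, obj + "\n" would raise a TypeError.

```python
import contextlib
import io
import sys


def capture(func):
    """Run func() and return everything it wrote to sys.stdout."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        func()
    return buffer.getvalue()


obj = {"name": "Bob", "age": 20}

# print() converts obj with str() and then writes the end string ("\n" by default)
via_print = capture(lambda: print(obj))
# Manual equivalent: str() is needed, because a dict cannot be concatenated with "\n"
via_write = capture(lambda: sys.stdout.write(str(obj) + "\n"))

print(via_print == via_write)  # True
```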

A self-written template suits small projects. You can shape it to your own preferences, it needs little configuration, and it is quick and convenient. However, this way of logging is not standardized. You may find your own logs easy to read, but others who join your project often have to spend time learning the logic, format, and output mode of your logs. This approach is therefore not recommended for large projects either.

An example of a simple self-written log template:

Log template log.py:

```python
import sys
import traceback
import datetime


def getnowtime():
    # Current local time, e.g. 2021-10-19 09:50:58
    return datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")


def _log(content, level, *args):
    # One line "<time> - <level> - <content>", then each extra argument on its own line
    sys.stdout.write("%s - %s - %s\n" % (getnowtime(), level, content))
    for arg in args:
        sys.stdout.write("%s\n" % arg)


def debug(content, *args):
    _log(content, 'DEBUG', *args)


def info(content, *args):
    _log(content, 'INFO', *args)


def warn(content, *args):
    _log(content, 'WARN', *args)


def error(content, *args):
    _log(content, 'ERROR', *args)


def exception(content):
    # Print the message, then the traceback of the exception currently being handled
    sys.stdout.write("%s - %s\n" % (getnowtime(), content))
    traceback.print_exc(file=sys.stdout)
```

Call the log module:

```python
import log

log.info("This is log info!")
log.warn("This is log warn!")
log.error("This is log error!")
log.debug("This is log debug!")

people_info = {"name": "Bob", "age": 20}
try:
    gender = people_info["gender"]
except Exception as error:
    log.exception(error)
```

Log output:

```
2021-10-19 09:50:58 - INFO - This is log info!
2021-10-19 09:50:58 - WARN - This is log warn!
2021-10-19 09:50:58 - ERROR - This is log error!
2021-10-19 09:50:58 - DEBUG - This is log debug!
2021-10-19 09:50:58 - 'gender'
Traceback (most recent call last):
  File "D:/python3Project/test.py", line 18, in <module>
    gender = people_info["gender"]
KeyError: 'gender'
```

Logging

In a complete project, most developers use a dedicated logging library, and Python's standard library module logging is designed specifically for this. The functions and classes defined in the logging module implement a flexible event logging system for applications and libraries. The key benefit of having the logging API in a standard library module is that every Python module can take part in logging. Your application log can therefore combine your own messages with messages from third-party modules.
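Here is a minimal sketch of how messages from different modules end up in one log. The logger names thirdparty.client and myapp are hypothetical stand-ins for a third-party library and your own code. Neither logger has its own handler, so both propagate their records to the single handler the application attaches to the root logger.

```python
import io
import logging

# Hypothetical names: "thirdparty.client" plays a library, "myapp" plays your own code
library_logger = logging.getLogger("thirdparty.client")
app_logger = logging.getLogger("myapp")

# The application configures one handler on the root logger (in memory here, for inspection)
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(name)s - %(levelname)s - %(message)s"))
root = logging.getLogger()
root.addHandler(handler)
root.setLevel(logging.INFO)

# Records from both loggers propagate up to the root logger's handler
library_logger.info("connection opened")
app_logger.warning("retrying request")

print(stream.getvalue(), end="")
# thirdparty.client - INFO - connection opened
# myapp - WARNING - retrying request
```

Using logging.getLogger(__name__) in each module follows the same pattern: every module gets its own named logger, and configuration happens once at the top level.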

Although the logging module is powerful, configuring it is cumbersome. In large projects you usually need to initialize logging separately, configure the log format, and so on. In daily use, Brother K wraps logging as shown below. Logs are split by day and kept for 15 days, and you can choose whether to output to the console, to files, or to both:

# Split and retain logs by day

import os

import sys

import logging

from logging import handlers

PARENT_DIR = os.path.split(os.path.realpath(__file__))[0] # Parent directory

LOGGING_DIR = os.path.join(PARENT_DIR, "log") # Log directory

LOGGING_NAME = "test" # Log file name

LOGGING_TO_FILE = True # Log output file

LOGGING_TO_CONSOLE = True # Log output to console

LOGGING_WHEN = 'D' # Log file segmentation dimension

LOGGING_INTERVAL = 1 # Automatically rebuild the file after an interval of less than when

LOGGING_BACKUP_COUNT = 15 # Number of logs reserved, 0 keeps all logs

LOGGING_LEVEL = logging.DEBUG # Log level

LOGGING_suffix = "%Y.%m.%d.log" # Old log file name

# Log output format

LOGGING_FORMATTER = "%(levelname)s - %(asctime)s - process:%(process)d - %(filename)s - %(name)s - line:%(lineno)d - %(module)s - %(message)s"

def logging_init():

if not os.path.exists(LOGGING_DIR):

os.makedirs(LOGGING_DIR)

logger = logging.getLogger()

logger.setLevel(LOGGING_LEVEL)

formatter = logging.Formatter(LOGGING_FORMATTER)

if LOGGING_TO_FILE:

file_handler = handlers.TimedRotatingFileHandler(filename=os.path.join(LOGGING_DIR, LOGGING_NAME), when=LOGGING_WHEN, interval=LOGGING_INTERVAL, backupCount=LOGGING_BACKUP_COUNT)

file_handler.suffix = LOGGING_suffix

file_handler.setFormatter(formatter)

logger.addHandler(file_handler)

if LOGGING_TO_CONSOLE:

stream_handler = logging.StreamHandler(sys.stderr)

stream_handler.setFormatter(formatter)

logger.addHandler(stream_handler)

def logging_test():

logging.info("This is log info!")

logging.warning("This is log warn!")

logging.error("This is log error!")

logging.debug("This is log debug!")

people_info = {"name": "Bob", "age": 20}

try:

gender = people_info["gender"]

except Exception as error:

logging.exception(error)

if __name__ == "__main__":

logging_init()

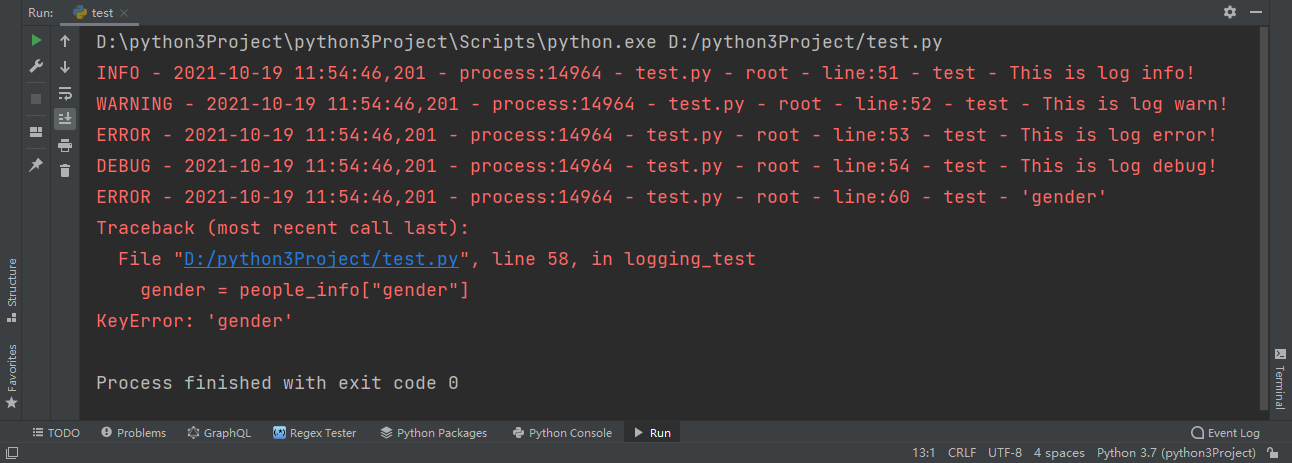

logging_test()Output log:

INFO - 2021-10-19 11:28:10,103 - process:15144 - test.py - root - line:52 - test - This is log info!

WARNING - 2021-10-19 11:28:10,105 - process:15144 - test.py - root - line:53 - test - This is log warn!

ERROR - 2021-10-19 11:28:10,105 - process:15144 - test.py - root - line:54 - test - This is log error!

DEBUG - 2021-10-19 11:28:10,105 - process:15144 - test.py - root - line:55 - test - This is log debug!

ERROR - 2021-10-19 11:28:10,105 - process:15144 - test.py - root - line:61 - test - 'gender'

Traceback (most recent call last):

File "D:/python3Project/test.py", line 59, in logging_test

gender = people_info["gender"]

KeyError: 'gender'It looks like this in the console:

Of course, if you don't need such complex features and prefer to keep things simple, you can output logs only to the console with a minimal configuration:

```python
import logging

logging.basicConfig(level=logging.DEBUG, format='%(asctime)s - %(name)s - %(levelname)s - %(message)s')
logger = logging.getLogger(__name__)
```
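The level argument acts as a threshold: messages below it are discarded. The sketch below captures the output in memory to show this. The stream, format, and messages are illustrative choices. force=True, available since Python 3.8, replaces any handlers already installed on the root logger so that the demo is not affected by earlier configuration.

```python
import io
import logging

stream = io.StringIO()
logging.basicConfig(
    stream=stream,
    level=logging.INFO,  # threshold: DEBUG messages are dropped
    format="%(levelname)s - %(message)s",
    force=True,  # Python 3.8+: discard previously configured root handlers
)

logger = logging.getLogger(__name__)
logger.debug("not shown: DEBUG is below the INFO threshold")
logger.info("shown")
logger.error("also shown")

print(stream.getvalue(), end="")
# INFO - shown
# ERROR - also shown
```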

More elegant solution: Loguru

Even for simple use, the logging module requires you to define your own format. Loguru is a more elegant, efficient, and concise third-party alternative. Its official description reads: "Loguru is a library which aims to bring enjoyable logging in Python." The official project page also offers an animated GIF that quickly demonstrates its features.

Install

Loguru supports only Python 3.5 and above. You can install it with pip:

```shell
pip install loguru
```

Out of the box

Loguru revolves around a single concept: the logger.

from loguru import logger

logger.info("This is log info!")

logger.warning("This is log warn!")

logger.error("This is log error!")

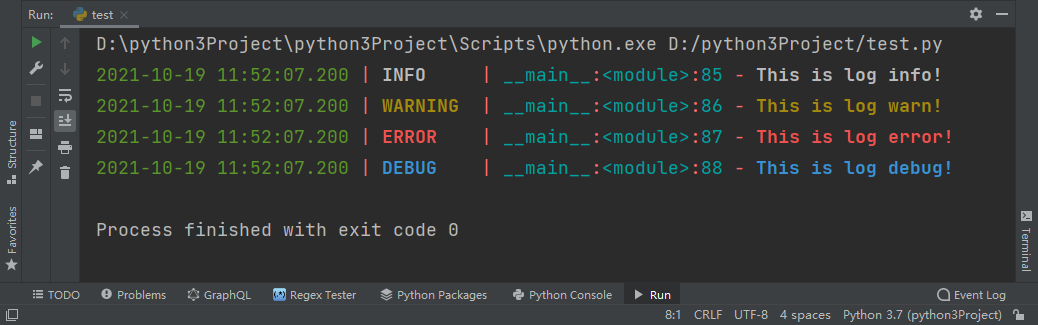

logger.debug("This is log debug!")Console output:

No manual setup is needed. Loguru comes preconfigured and automatically outputs the time, log level, module name, line number, and other details. It also gives each level its own color, which makes the output easy to scan. It truly works out of the box!

add() / remove()

What if you want to customize the log level or format, or save the log to a file? Unlike the logging module, Loguru needs no Handler or Formatter, only a call to add(). For example, to store the log in a file:

```python
from loguru import logger

logger.add('test.log')
logger.debug('this is a debug')
```

Unlike the logging module, there is no need to declare a separate FileHandler; a single add() call is enough. After running this, the debug message printed to the console also appears in the test.log file.

The counterpart of add() is remove(), which deletes a configuration that add() created. add() returns an ID, which you pass to remove():

```python
from loguru import logger

log_file = logger.add('test.log')  # add() returns an ID for this sink
logger.debug('This is log debug!')
logger.remove(log_file)  # remove the file sink by its ID
logger.debug('This is another log debug!')
```

The console now shows both debug messages:

```
2021-10-19 13:53:36.610 | DEBUG | __main__:<module>:86 - This is log debug!
2021-10-19 13:53:36.611 | DEBUG | __main__:<module>:88 - This is another log debug!
```

The test.log file, however, contains only the first debug message, because remove() was called before the second debug statement.
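For comparison, here is a minimal sketch of the same add-then-remove sequence with the standard logging module. There, a FileHandler must be created, attached, and detached explicitly. This is an analogy, not how Loguru works internally. The temporary directory and the logger name add_remove_demo are illustrative assumptions.

```python
import logging
import os
import tempfile

logger = logging.getLogger("add_remove_demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False  # keep the demo independent of root logger handlers

log_path = os.path.join(tempfile.mkdtemp(), "test.log")

# Roughly what logger.add('test.log') does in Loguru
file_handler = logging.FileHandler(log_path, encoding="utf-8")
file_handler.setFormatter(logging.Formatter("%(levelname)s | %(message)s"))
logger.addHandler(file_handler)

logger.debug("This is log debug!")

# Roughly what logger.remove(log_file) does
logger.removeHandler(file_handler)
file_handler.close()

logger.debug("This is another log debug!")  # no file handler attached any more

with open(log_path, encoding="utf-8") as f:
    contents = f.read()
print(contents, end="")  # DEBUG | This is log debug!
```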

Complete parameters

Loguru has very strong support for configuring file output. It can write to multiple files, split output by level, start a new file when the current one grows too large, and automatically delete files that are too old. Let's look at the parameters of add() in detail:

Basic syntax:

```python
add(sink, *, level='DEBUG', format='<green>{time:YYYY-MM-DD HH:mm:ss.SSS}</green> | <level>{level: <8}</level> | <cyan>{name}</cyan>:<cyan>{function}</cyan>:<cyan>{line}</cyan> - <level>{message}</level>', filter=None, colorize=None, serialize=False, backtrace=True, diagnose=True, enqueue=False, catch=True, **kwargs)
```

Basic parameters:

- sink: where log messages are sent. It can be a file object, such as sys.stderr or open('file.log', 'w'). It can be a str or pathlib.Path object, i.e. a file path. It can be a callable, which lets you define your own output. It can be a Handler from the logging module, such as FileHandler or StreamHandler. It can also be a coroutine function, i.e. a function that returns a coroutine object.

- level: the minimum severity level of messages sent to the sink.

- format: the template used to format log messages.

- filter: an optional directive that determines whether each logged message should be sent to the sink.

- colorize: whether color markup in the formatted message should be converted to ANSI codes for terminal coloring, or stripped otherwise. If None, the choice is made automatically based on whether the sink is a tty (teletypewriter).

- serialize: whether the logged message should be converted to a JSON string before being sent to the sink.

- backtrace: whether the formatted exception traceback should extend upward beyond the catching point to show the full stack trace that generated the error.

- diagnose: whether the exception traceback should display variable values to ease debugging. Set it to False in production to avoid leaking sensitive data.

- enqueue: whether messages should first pass through a multiprocess-safe queue before reaching the sink. This is useful when several processes log to the same file, and it also makes logging calls non-blocking.

- catch: whether errors that occur while the sink processes a message should be caught automatically. If True, an exception message is displayed on sys.stderr, but the exception is not propagated to the caller, which prevents the application from crashing.

- **kwargs: additional parameters that are valid only for coroutine or file sinks (see below).

If and only if sink is a coroutine function, the following parameter applies:

- loop: the event loop in which the asynchronous logging task is scheduled and executed. If None, the loop returned by asyncio.get_event_loop() is used.

If and only if sink is a file path, the following parameters apply:

- rotation: a condition indicating when the current log file should be closed and a new one started.

- retention: a directive for filtering old files, which are deleted during rotation or at the end of the program.

- compression: the compression or archive format to which a log file should be converted when it is closed.

- delay: whether the file should be created as soon as the sink is configured, or only when the first message is logged. The default is False.

- mode: the opening mode passed to the built-in open() function. The default is "a" (append mode).

- buffering: the buffering policy passed to the built-in open() function. The default is 1 (line-buffered).

- encoding: the file encoding passed to the built-in open() function. If None, it defaults to locale.getpreferredencoding().

- **kwargs: other parameters passed to the built-in open() function.
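The enqueue option can be compared with the standard library's QueueHandler and QueueListener. The sketch below is only an analogy, not Loguru's implementation. It uses an in-process queue.Queue, whereas Loguru's enqueue=True uses a multiprocess-safe queue. The logger name and the in-memory sink are illustrative assumptions.

```python
import io
import logging
import logging.handlers
import queue

# In-process queue (a simplification; Loguru's enqueue=True is multiprocess-safe)
log_queue = queue.Queue()

# The "real" sink: an in-memory stream so that the output can be inspected
stream = io.StringIO()
sink_handler = logging.StreamHandler(stream)
sink_handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))

# Logging calls only put records on the queue, so they return quickly
logger = logging.getLogger("enqueue_demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(logging.handlers.QueueHandler(log_queue))

# A background thread takes records off the queue and passes them to the sink
listener = logging.handlers.QueueListener(log_queue, sink_handler)
listener.start()

logger.info("queued message")
logger.warning("another queued message")

listener.stop()  # processes the remaining records, then stops the thread
print(stream.getvalue(), end="")
```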

These parameters show how powerful add() is: a single function covers much of what the logging module offers. Next, let's look at some common uses.

Rotation: log file splitting

The rotation parameter of add() starts a new log file when a given condition is met. For example, to create a new log file at midnight every day:

```python
logger.add('runtime_{time}.log', rotation='00:00')
```

Create a new log file once the current one exceeds 500 MB:

```python
logger.add('runtime_{time}.log', rotation="500 MB")
```

Create a new log file every week:

```python
logger.add('runtime_{time}.log', rotation='1 week')
```

Retention: log retention time

The retention parameter of add() sets how long old log files are kept. For example, to keep log files for at most 15 days:

```python
logger.add('runtime_{time}.log', retention='15 days')
```

Keep at most 10 log files:

```python
logger.add('runtime_{time}.log', retention=10)
```

The value can also be a datetime.timedelta object. For example, to keep log files for at most 5 hours:

```python
import datetime

from loguru import logger

logger.add('runtime_{time}.log', retention=datetime.timedelta(hours=5))
```

Compression: log compression format

The compression parameter of add() sets the format in which closed log files are compressed, which saves storage space. For example, to save them as zip files:

```python
logger.add('runtime_{time}.log', compression='zip')
```

Supported formats include gz, bz2, xz, lzma, tar, tar.gz, tar.bz2, tar.xz, and zip.
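For comparison, the standard library can approximate rotation, retention, and compression together. RotatingFileHandler's maxBytes plays the role of a size-based rotation, and backupCount plays the role of a count-based retention. The namer and rotator hooks compress each rotated file, a pattern described in the Python logging cookbook. This is a sketch, not how Loguru implements these options. The 100-byte limit, the file names, and the logger name rotation_demo are arbitrary demo values.

```python
import gzip
import logging
import logging.handlers
import os
import shutil
import tempfile


def gzip_namer(default_name):
    # Rotated files get a ".gz" extension, e.g. app.log.1 -> app.log.1.gz
    return default_name + ".gz"


def gzip_rotator(source, dest):
    # Compress the file being rotated out, then delete the uncompressed original
    with open(source, "rb") as src, gzip.open(dest, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(source)


log_path = os.path.join(tempfile.mkdtemp(), "app.log")

# Tiny size limit so that this demo rotates after every message
handler = logging.handlers.RotatingFileHandler(log_path, maxBytes=100, backupCount=2)
handler.setFormatter(logging.Formatter("%(message)s"))
handler.namer = gzip_namer
handler.rotator = gzip_rotator

logger = logging.getLogger("rotation_demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

for letter in "ABCD":
    logger.info(letter * 50)  # each line is about 51 bytes including the newline
handler.close()

# Only the current file and two compressed backups remain; the oldest ("A") was deleted
print(sorted(os.listdir(os.path.dirname(log_path))))
# ['app.log', 'app.log.1.gz', 'app.log.2.gz']
```

Unlike Loguru's retention='15 days', the retention here is purely count-based: backupCount=2 keeps the two most recent rotated files.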

String formatting

Loguru also supports convenient string formatting when logging, equivalent to str.format():

```python
logger.info('If you are using Python {}, prefer {feature} of course!', 3.6, feature='f-strings')
```

Output:

```
2021-10-19 14:59:06.412 | INFO | __main__:<module>:3 - If you are using Python 3.6, prefer f-strings of course!
```

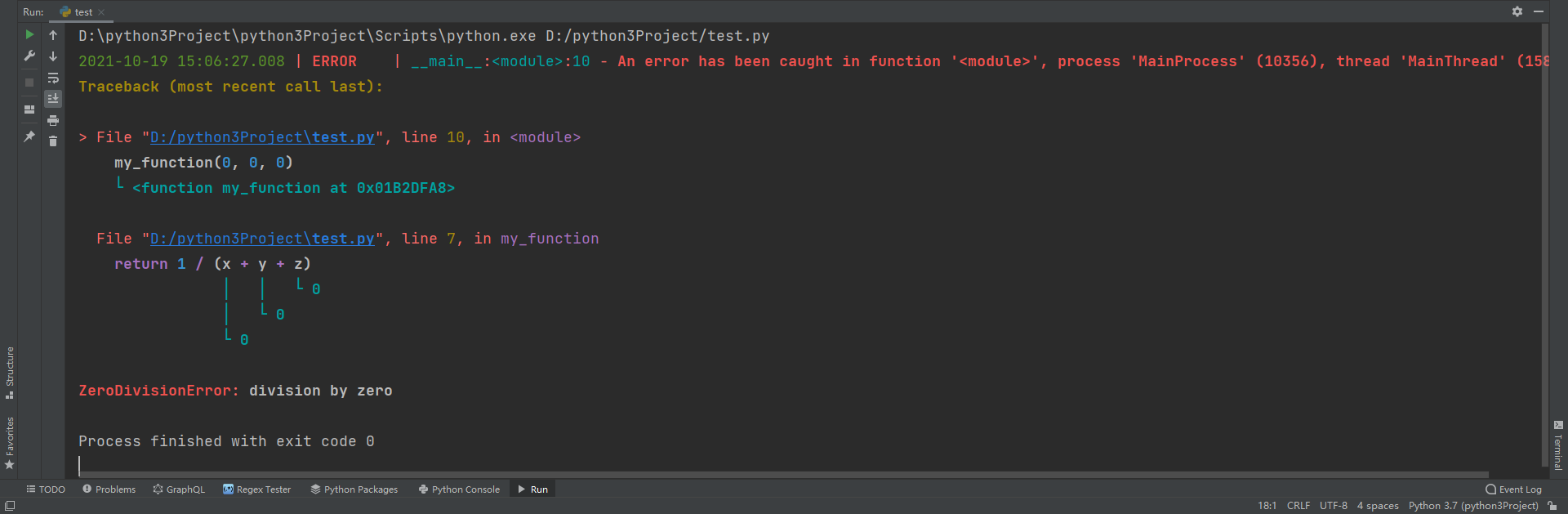
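Because the message is formatted like str.format(), the result can be checked with plain Python. For contrast, the second half of this sketch shows the %-style placeholders used by the standard logging module. There, the message is only formatted if the record is actually emitted. The logger name format_demo is hypothetical.

```python
import io
import logging

template = "If you are using Python {}, prefer {feature} of course!"
# Loguru's logger.info(template, 3.6, feature='f-strings') builds the same text as:
message = template.format(3.6, feature="f-strings")
print(message)  # If you are using Python 3.6, prefer f-strings of course!

# Standard logging uses %-style placeholders; arguments are passed separately
stream = io.StringIO()
std_logger = logging.getLogger("format_demo")  # hypothetical logger name
std_logger.setLevel(logging.INFO)
std_logger.propagate = False
std_logger.addHandler(logging.StreamHandler(stream))  # default format is just the message

std_logger.info("If you are using Python %s, prefer %s of course!", 3.6, "f-strings")
std_logger.debug("Never formatted: %s", "below the INFO level")
print(stream.getvalue(), end="")
```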

Exception tracing

Loguru provides a decorator that catches exceptions directly, and the resulting logs are extremely detailed:

```python
from loguru import logger


@logger.catch
def my_function(x, y, z):
    # An error? It's caught anyway!
    return 1 / (x + y + z)


my_function(0, 0, 0)
```

Log output:

```
2021-10-19 15:04:51.675 | ERROR | __main__:<module>:10 - An error has been caught in function '<module>', process 'MainProcess' (30456), thread 'MainThread' (26268):
Traceback (most recent call last):

> File "D:/python3Project\test.py", line 10, in <module>
    my_function(0, 0, 0)
    └ <function my_function at 0x014CDFA8>

  File "D:/python3Project\test.py", line 7, in my_function
    return 1 / (x + y + z)
                │   │   └ 0
                │   └ 0
                └ 0

ZeroDivisionError: division by zero
```

The output on the console is as follows:

Compared with logging, Loguru is far more convenient in configuration, log output style, and exception tracing. Using Loguru can undoubtedly improve developer efficiency.
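If you need to stay with the standard library, you can roughly approximate the basic behavior of @logger.catch shown above with a decorator. That behavior is to log the exception with its traceback instead of letting it crash the program. The catch() helper and the logger name catch_demo below are hypothetical and not part of logging or Loguru. Unlike Loguru's diagnose option, this sketch does not display variable values.

```python
import functools
import io
import logging


def catch(logger):
    """Hypothetical stdlib stand-in for @logger.catch: log any exception, then swallow it."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except Exception:
                # logger.exception() logs at ERROR level and appends the traceback
                logger.exception("An error has been caught in function '%s'", func.__name__)
                return None
        return wrapper
    return decorator


stream = io.StringIO()
demo_logger = logging.getLogger("catch_demo")  # hypothetical logger name
demo_logger.propagate = False
demo_logger.addHandler(logging.StreamHandler(stream))


@catch(demo_logger)
def my_function(x, y, z):
    return 1 / (x + y + z)


result = my_function(0, 0, 0)  # the ZeroDivisionError is logged, not raised
print(result)  # None
print(stream.getvalue())
```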