Code file address of this article

The preparation section is there out of personal interest. You can skip straight to the Locust section and test against any public endpoint instead, such as https://www.baidu.com

Preparation

Database

Start a database

    # Start the container
    docker run -itd --name test_db -p 3396:3306 -e MYSQL_ROOT_PASSWORD=123456 mariadb

    # Log in
    mysql -h192.168.1.105 -P3396 -uroot -p123456

    # Create the database
    MariaDB [(none)]> create database tdb;

Make some data

    import numpy as np
    import pymysql

    HOST = '192.168.1.105'
    PORT = 3396
    USER = 'root'
    PWD = '123456'
    DB = 'tdb'
    TABLE = 'employee'

    def getEmployee(bit=8):
        # Random name of `bit` ASCII letters, age in [18, 60), sex '0' or '1'
        chars = [chr(i) for i in range(65, 91)] + [chr(i) for i in range(97, 123)]
        name = ''.join(np.random.choice(chars, bit))
        age = int(np.random.randint(18, 60))
        sex = str(np.random.choice(['0', '1']))
        # Plain Python types, so the driver can escape them as query parameters
        return name, age, sex

    def dataGen():
        database = pymysql.connect(user=USER, password=PWD, host=HOST, port=PORT, database=DB, charset='utf8')
        cursor = database.cursor()
        # Create table
        sql_ct = ("CREATE TABLE IF NOT EXISTS {} ( "
                  "eid INT AUTO_INCREMENT, "
                  "ename VARCHAR(20) NOT NULL, "
                  "age INT, "
                  "sex VARCHAR(1), "
                  "PRIMARY KEY(eid))").format(TABLE)
        cursor.execute(sql_ct)
        # eid is omitted so that AUTO_INCREMENT assigns it
        sql_i = "INSERT INTO {} (ename, age, sex) VALUES (%s, %s, %s)".format(TABLE)
        for i in range(100000):
            cursor.execute(sql_i, getEmployee())
            # Commit and report progress every 100 rows
            if (i + 1) % 100 == 0:
                print('\r[{}/100000]'.format(i + 1), end='')
                database.commit()
        database.commit()
        database.close()

    if __name__ == '__main__':
        dataGen()
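The loop above sends one INSERT per row. As a rough sketch of an alternative, rows can be grouped and handed to the DB-API `executemany` call, which PyMySQL cursors also provide. The helper names `chunked` and `insert_batches`, the batch size, and the stand-in `FakeCursor` used for the dry run are assumptions for illustration, not part of the original script.

```python
from itertools import islice


def chunked(rows, size):
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def insert_batches(cursor, table, rows, batch_size=1000):
    """Insert (ename, age, sex) tuples in batches; return the number of batches sent."""
    sql = "INSERT INTO {} (ename, age, sex) VALUES (%s, %s, %s)".format(table)
    sent = 0
    for batch in chunked(rows, batch_size):
        cursor.executemany(sql, batch)
        sent += 1
    return sent


class FakeCursor:
    """Stand-in that records calls, so the sketch runs without a database."""

    def __init__(self):
        self.calls = []

    def executemany(self, sql, seq):
        self.calls.append((sql, list(seq)))


# Illustrative rows only; a real run would pass the generated employees
demo_cursor = FakeCursor()
demo_rows = [("name{}".format(i), 20 + i % 40, str(i % 2)) for i in range(2500)]
n_batches = insert_batches(demo_cursor, "employee", demo_rows)
print(n_batches)
```

With a real connection, `cursor` would be `database.cursor()` and a `database.commit()` would follow each batch or the whole run.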

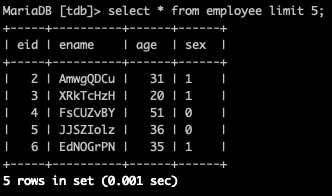

The constructed data are as follows

Start a service

Create an HTTP service, dbaserver.py

    import logging
    from http.server import HTTPServer, SimpleHTTPRequestHandler
    from socketserver import ThreadingMixIn
    from sys import argv

    import pymysql

    # Address of the MariaDB host; it must point at the database started above
    HOST = '192.168.5.217'
    PORT = 3396
    USER = 'root'
    PWD = '123456'
    DB = 'tdb'
    TABLE = 'employee'

    def dataSelect(eid):
        # A new connection is opened for every request
        database = pymysql.connect(user=USER, password=PWD, host=HOST, port=PORT, database=DB, charset='utf8')
        cursor = database.cursor()
        sql_s = "SELECT * FROM {} WHERE eid=%s".format(TABLE)
        cursor.execute(sql_s, (eid,))
        res = cursor.fetchall()  # Fetch the results
        database.close()
        if res:
            ee = res[0]
            return {'name': ee[1], 'age': ee[2], 'sex': 'female' if ee[3] == '0' else 'male'}
        return 'no employee with eid={}'.format(eid)

    class ThreadingServer(ThreadingMixIn, HTTPServer):
        # Handle each request in its own thread
        pass

    class RequestHandler(SimpleHTTPRequestHandler):
        def do_GET(self):
            self.send_response(200)
            self.send_header('Content-type', 'text/plain;charset=utf-8')
            # The path is expected to be /<eid>; anything else maps to -1, which matches no row
            try:
                eid = int(self.path[1:])
            except ValueError:
                eid = -1
            response = dataSelect(eid)
            logging.info('request of {} by eid={}'.format(response, eid))
            self.end_headers()
            self.wfile.write(str(response).encode())

    def run(server_class=ThreadingServer, handler_class=RequestHandler, port=8888):
        server_address = ('0.0.0.0', port)
        httpd = server_class(server_address, handler_class)
        logging.info('server start in http://{}:{}'.format(*server_address))
        try:
            httpd.serve_forever()
        except KeyboardInterrupt:
            pass
        httpd.server_close()

    def main():
        logging.basicConfig(level=logging.INFO, format='%(levelname)-8s %(asctime)s: %(message)s',
                            datefmt='%m-%d %H:%M')
        # An optional first argument overrides the default port
        if len(argv) == 2:
            run(port=int(argv[1]))
        else:
            run()

    if __name__ == '__main__':
        main()

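Run the Python script (for example, python3 dbaserver.py), then access the service at http://<server-ip>:8888/<eid>, where 8888 is the script's default port.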
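The handler turns the request path into an employee id and falls back to -1 for anything that is not an integer. A small sketch of that mapping, pulled out into a hypothetical helper `parse_eid` (not a function in dbaserver.py), makes the behaviour easy to check without starting the server or the database:

```python
def parse_eid(path):
    """Map a request path such as '/42' to an employee id, or -1 if it is not an integer."""
    try:
        return int(path[1:])
    except ValueError:
        return -1


for sample in ['/42', '/', '/abc', '/42?x=1']:
    print(sample, '->', parse_eid(sample))
```

A path with a query string, such as /42?x=1, also maps to -1, because the query is not stripped before the conversion.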

Stress testing with Locust

Install

    pip install locust

Python-based test script

    from locust import HttpUser, between, task
    import numpy as np

    class StressHandler(HttpUser):  # Subclass of Locust's HttpUser
        # Each simulated user waits a random time between the two bounds (in seconds)
        # after every task; between(0, 0) means no wait at all
        wait_time = between(0, 0)

        @task  # Methods decorated with @task are run by the simulated users
        def testApi(self):
            # Random id; randint excludes the upper bound, so this covers 1..100000
            eid = np.random.randint(1, 100001)
            path = '/{}'.format(eid)
            # Only the path is passed here; the host is entered in the web UI
            self.client.get(path)

        # @task
        def testBaidu(self):
            path = '/'
            self.client.get(path)
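NumPy is only used in this script to draw a random integer. As a sketch, the standard library's `random.randint` does the same job and includes both endpoints, which makes the id range easier to get right. The helper name `random_employee_path` and the `max_eid` parameter are assumptions for illustration.

```python
import random


def random_employee_path(max_eid=100000):
    """Return a path like '/12345' with the id drawn uniformly from 1..max_eid (inclusive)."""
    return '/{}'.format(random.randint(1, max_eid))


print(random_employee_path())
```

Inside the task, `self.client.get(random_employee_path())` could then replace the lines that build the path.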

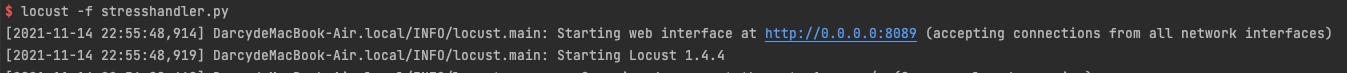

Run Locust

    locust -f stresshandler.py

The output looks like this:

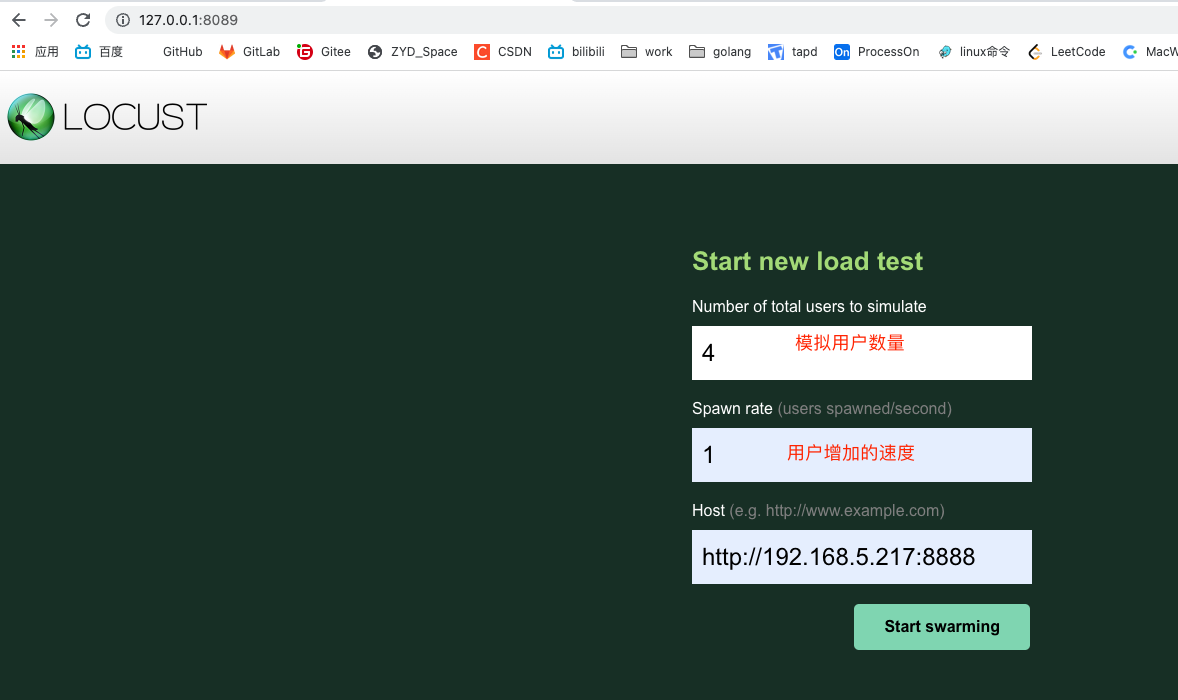

Then open the web UI in a browser. Replace 0.0.0.0 with the IP of the machine running Locust, or with 127.0.0.1. Unless you changed it, the default port is 8089.

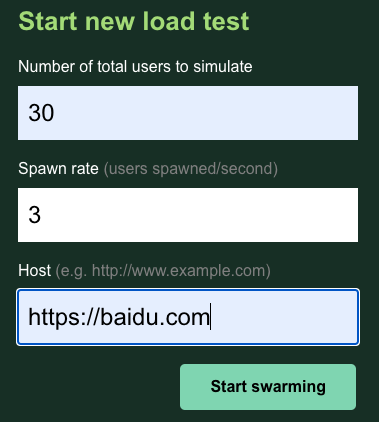

Create a new test. Note that if the host is an IP address rather than a domain name, you need to specify the port.

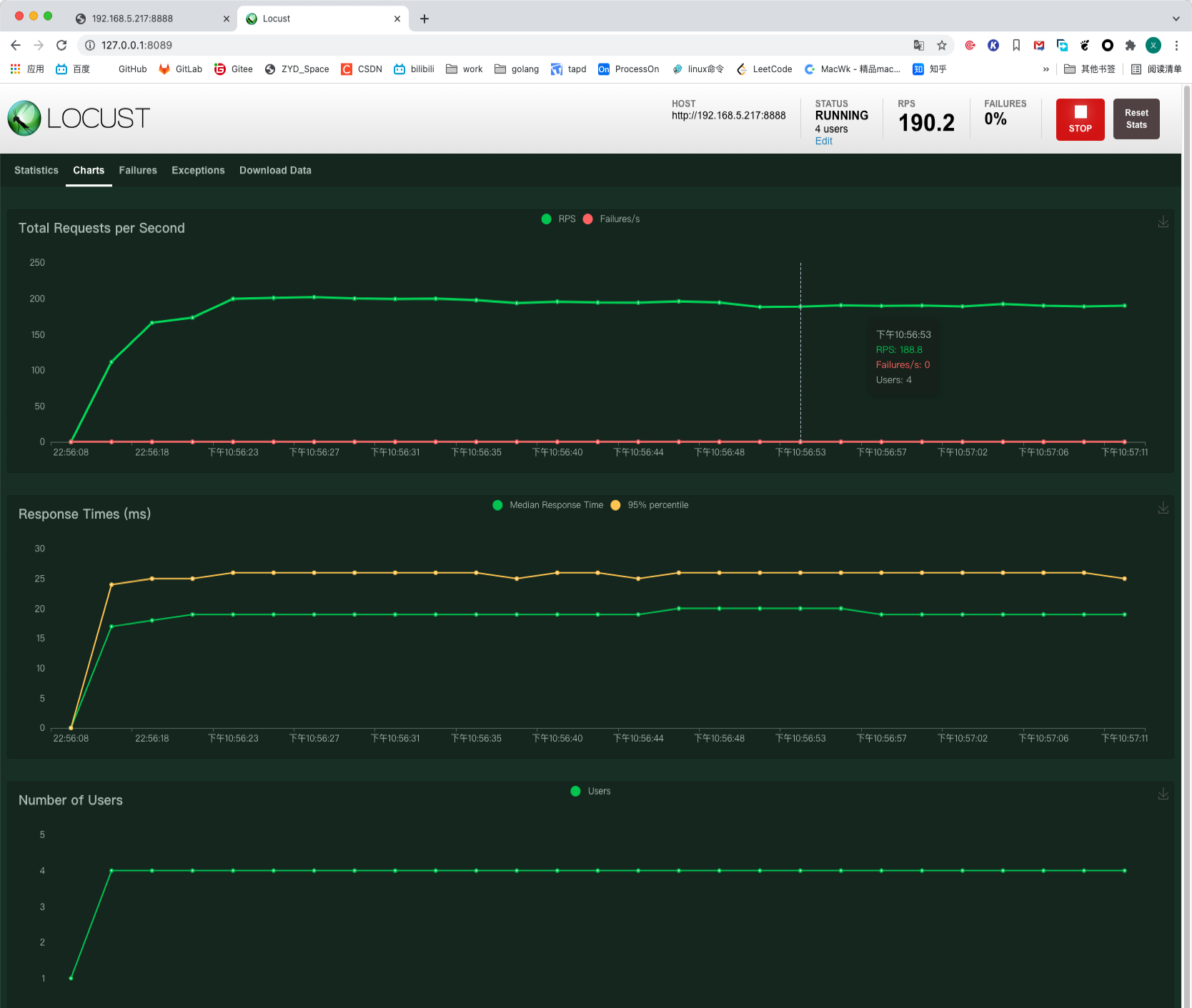

After startup

Go further (multiple processes)

In a real scenario, the load you can generate is limited by the machine running the test. CPU and bandwidth are the usual bottlenecks, and bandwidth is often hard to increase. Making good use of a multi-core CPU raises the load you can generate. Locust supports this, and it can also distribute load generation across multiple machines.

You can use the top command to observe CPU and memory usage

iftop observes bandwidth usage

iostat can observe the disk status (not used here)

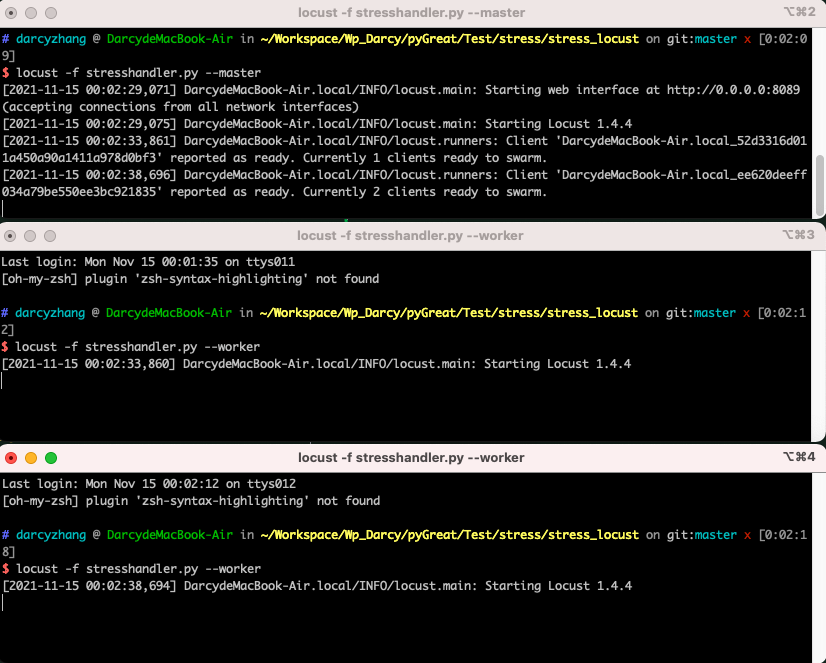

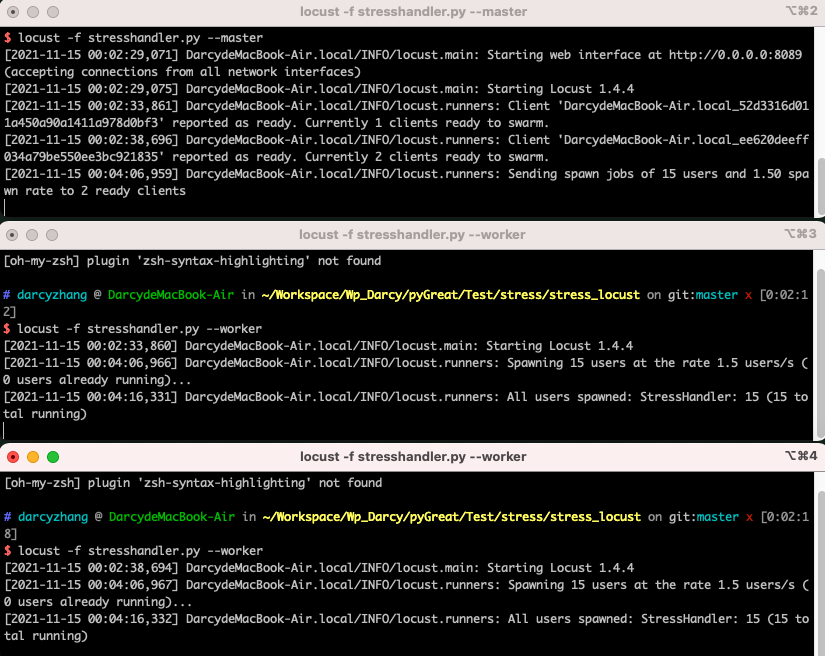

    # Master
    locust -f stresshandler.py --master

    # Worker: start it in another console
    locust -f stresshandler.py --worker

    # Open more consoles to start more workers

There are two workers here. The number of users and the spawn rate are split evenly between them.
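To make the split concrete, here is a simplified sketch of dividing a user count across workers as evenly as possible. It only illustrates the arithmetic and is not Locust's actual dispatch code; `split_evenly` is a hypothetical helper. `os.cpu_count()` is shown as one way to pick a starting number of worker processes, on the assumption that one worker per core is a reasonable default.

```python
import os


def split_evenly(total, n_workers):
    """Split `total` users across `n_workers`, giving the first workers one extra when needed."""
    base, extra = divmod(total, n_workers)
    return [base + 1 if i < extra else base for i in range(n_workers)]


print(split_evenly(100, 2))
print(split_evenly(10, 3))

# Assumption: one worker process per CPU core as a starting point
n_workers = os.cpu_count() or 1
print(n_workers)
```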

Go further (configuration file)

There are many configurable options. They can all be set in one place through a configuration file.

    # master.conf
    # https://docs.locust.io/en/stable/configuration.html
    locustfile = stresshandler.py
    master = true
    web-port = 6789
    print-stats = true
    only-summary = true

    locust --config master.conf

    # worker.conf
    # https://docs.locust.io/en/stable/configuration.html
    locustfile = stresshandler.py
    headless = true
    worker = true
    # master-host = 127.0.0.1
    # master-port = 5557

    # Start the worker in a new command-line window
    locust --config worker.conf

The interaction port between master and worker is 5557. If the ports conflict, please refer to the configuration document to modify the ports.
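Each key in the configuration file corresponds to a command-line flag of the same name. The sketch below illustrates that correspondence by turning `key = value` lines into an argument list. `conf_to_argv` is a hypothetical helper written for this article; Locust does its own parsing internally, so this only shows how the two forms line up.

```python
def conf_to_argv(text):
    """Translate `key = value` lines into long command-line flags."""
    argv = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith('#'):
            continue  # skip blank lines and full-line comments
        key, _, value = line.partition('=')
        key, value = key.strip(), value.strip()
        if value.lower() == 'true':
            argv.append('--' + key)           # boolean switch, no value
        elif value.lower() != 'false':
            argv.extend(['--' + key, value])  # option with a value
    return argv


master_conf = """
# master.conf
locustfile = stresshandler.py
master = true
web-port = 6789
"""
print(conf_to_argv(master_conf))
```

Changing the master-worker port, for example, would mean setting `master-port` in both files, which lines up with passing `--master-port` on the command line.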

Go further (skip the front-end task)

The test parameters can be written directly in the configuration file instead of being entered through the web UI. The test then runs as soon as Locust starts, and the results are available when it finishes.

    # master.conf
    locustfile = stresshandler.py
    master = true
    web-port = 6789
    host = https://www.baidu.com
    users = 9
    spawn-rate = 3
    run-time = 20s
    # Do not start the web UI; the test parameters must then be set as above
    headless = true
    # Generate CSV result files: _stats.csv, _stats_history.csv and _failures.csv
    csv = ./data/csv_prefix
    # Print stats on the console
    print-stats = true
    # HTML report
    html = ./data/web_report.html
    only-summary = true

    # worker.conf
    locustfile = stresshandler.py
    headless = true
    worker = true
    # master-host = 127.0.0.1
    # master-port = 5557

    locust --config worker.conf
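Once a headless run has written its CSV files, they can be read with the standard `csv` module. The sketch below computes a failure ratio per request name. The column names `Name`, `Request Count` and `Failure Count` are assumed from the stats CSV format of recent Locust versions, so check the header of your own file. The sample rows are made up for illustration and are not real measurements, and `failure_ratios` is a hypothetical helper.

```python
import csv
import io


def failure_ratios(csv_file):
    """Return {request name: failures / requests} for each row of a Locust stats CSV."""
    ratios = {}
    for row in csv.DictReader(csv_file):
        requests = int(row['Request Count'])
        failures = int(row['Failure Count'])
        ratios[row['Name']] = failures / requests if requests else 0.0
    return ratios


# Made-up sample rows for illustration only, not real measurements
sample = io.StringIO(
    "Type,Name,Request Count,Failure Count\n"
    "GET,/,200,10\n"
    ",Aggregated,200,10\n"
)
ratios = failure_ratios(sample)
print(ratios)
```

With the configuration above, the real file would be ./data/csv_prefix_stats.csv; pass `open(path, newline='')` in place of the `StringIO` object.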

Improve the script (multiple workers)

The master and the workers can also be started from a Python script, using the subprocess module.

    import os
    import subprocess

    N_WORKER = 3
    WORK_DIR = os.path.abspath(os.path.dirname(__file__))

    def runMaster():
        print('Starting the master')
        # run() blocks until the master exits
        subprocess.run('locust --config ./data/master.conf', shell=True, cwd=WORK_DIR)

    def runWorker():
        print('Starting a worker')
        # Popen() returns immediately, so the worker keeps running in the background
        subprocess.Popen('locust --config ./data/worker.conf', shell=True, cwd=WORK_DIR)

    def clear():
        # Kill any process still using port 5557, the default master-worker communication port
        subprocess.run("kill -9 $(lsof -i:5557 | awk 'NR>1{print $2}')", shell=True)
        print('Cleared leftover processes')

    def main():
        for i in range(N_WORKER):
            runWorker()
        runMaster()

    if __name__ == '__main__':
        clear()
        main()
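One possible refinement, sketched below under assumptions: pass each command as an argument list instead of a shell string, keep the `Popen` handles, and stop the workers when the master exits, so that nothing is left holding port 5557 for the next run. The names `build_commands` and `run_all` are hypothetical, and the launch call is left commented out because it needs Locust and the configuration files in place.

```python
import subprocess


def build_commands(n_workers, conf_dir="./data"):
    """Return (list of worker commands, master command) as argument lists."""
    worker = ["locust", "--config", "{}/worker.conf".format(conf_dir)]
    master = ["locust", "--config", "{}/master.conf".format(conf_dir)]
    return [list(worker) for _ in range(n_workers)], master


def run_all(n_workers, cwd=None):
    """Start the workers, run the master to completion, then stop the workers."""
    worker_cmds, master_cmd = build_commands(n_workers)
    workers = [subprocess.Popen(cmd, cwd=cwd) for cmd in worker_cmds]
    try:
        return subprocess.run(master_cmd, cwd=cwd).returncode
    finally:
        for proc in workers:
            proc.terminate()  # ask each worker to exit
        for proc in workers:
            proc.wait()       # wait until it has actually gone


worker_cmds, master_cmd = build_commands(3)
print(master_cmd)
# run_all(3)  # uncomment to launch; requires locust and the conf files
```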

A complete demo

Finally, how do you use all of this to run a stress test? (Code file address of this article)

Take this URL as an example

    # Searches Baidu for "test"
    https://www.baidu.com/s?wd=test

    Host: https://www.baidu.com
    path: /s
    params: wd=test
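The same breakdown can be reproduced with the standard library's `urllib.parse`, which is a quick way to work out what goes into the host setting and what goes into the task's path. This is a small sketch; the variable names are only illustrative.

```python
from urllib.parse import parse_qs, urlsplit

url = 'https://www.baidu.com/s?wd=test'
parts = urlsplit(url)

host = '{}://{}'.format(parts.scheme, parts.netloc)  # value for the host setting
path = parts.path                                    # path requested by the task
params = parse_qs(parts.query)                       # query string as a dict of lists

print(host)
print(path)
print(params)
```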

- Modify the test script: add a task like this to the StressHandler class in stresshandler.py

      @task
      def testSearch(self):
          path = '/s?wd=test'
          self.client.get(path)

- Modify the configuration files (data/master.conf, data/worker.conf). To run without the web UI, use the configuration files from the "Go further (skip the front-end task)" section.

- Modify the startup file start.py, mainly to set the number of worker processes. The default is 3.

      N_WORKER = 3

- Run start.py with python3 start.py. The report is generated in the data directory.