1.OpenResty

OpenResty is a scalable web platform based on NGINX. It was started by the Chinese developer Zhang Yichun and bundles many high-quality third-party modules.

OpenResty is a powerful web application server. Web developers can use the Lua scripting language to drive the various C and Lua modules that Nginx supports. More importantly, in terms of performance, OpenResty makes it possible to quickly build very high-performance web applications capable of handling more than 10K concurrent connections.

360, UPYUN, Alibaba Cloud, Sina, Tencent, Qunar, Kugou Music, and others are all heavy users of OpenResty.

OpenResty's goal is to let your web service run directly inside the Nginx server, making full use of Nginx's non-blocking I/O model to respond with consistently high performance not only to HTTP client requests but also when talking to remote back ends such as MySQL, PostgreSQL, Memcached, and Redis. For performance-critical services you can therefore access MySQL or Redis directly from OpenResty, without going through another language (PHP, Python, Ruby) to query the database and return the result, which can greatly improve application performance. See the official OpenResty website: http://openresty.org/cn/

OpenResty is essentially the "PLUS" version of nginx: it makes many improvements on top of the nginx source code.

2.OpenResty deployment process

(1) Decompress and compile

    [root@server1 lnmp]# tar zxf openresty-1.13.6.1.tar.gz
    [root@server1 lnmp]# cd openresty-1.13.6.1
    [root@server1 openresty-1.13.6.1]# ./configure --prefix=/usr/local/openresty

(2) Installation

[root@server1 openresty-1.13.6.1]# gmake && gmake install
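The configuration in the next step relies on the memc and srcache modules that ship with OpenResty, so it can be worth confirming they were compiled in. The following is a minimal sketch, assuming the /usr/local/openresty prefix from the configure step; has_module is a hypothetical helper name used only for illustration. Note that nginx -V prints its build information to stderr, hence the redirect.

```shell
#!/bin/sh
# Assumed install prefix, taken from the ./configure step above.
NGINX_BIN=/usr/local/openresty/nginx/sbin/nginx

# has_module NAME (hypothetical helper): reads `nginx -V` text on stdin and
# succeeds if an --add-module argument containing NAME is present.
has_module() {
    tr ' ' '\n' | grep -q -- "--add-module=.*$1"
}

if [ -x "$NGINX_BIN" ]; then
    for mod in memc-nginx-module srcache-nginx-module; do
        if "$NGINX_BIN" -V 2>&1 | has_module "$mod"; then
            echo "$mod: present"
        else
            echo "$mod: missing"
        fi
    done
else
    echo "nginx binary not found at $NGINX_BIN"
fi
```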

(3) Configure openresty

First stop the original nginx:

[root@server1 nginx]# nginx -s stop

[root@server1 conf]# vim nginx.conf

Change line 2 to set the user, add the upstream block at lines 20-23 inside http, and add the two location blocks at lines 68-88 (line numbers refer to the author's file; unchanged lines in between are omitted):

    # line 2
    user nginx nginx;

    # lines 17-23
    http {
        include       mime.types;
        default_type  application/octet-stream;
        upstream memcache {
            server localhost:11211;
            keepalive 512;
        }

    # lines 68-88
        location /memc {
            internal;                      # Internal access only, not reachable by external HTTP requests, which is safer
            memc_connect_timeout 100ms;
            memc_send_timeout 100ms;       # Timeout for sending the request to memcached
            memc_read_timeout 100ms;       # Timeout for reading the response from memcached
            set $memc_key $query_string;
            set $memc_exptime 300;
            memc_pass memcache;
        }


        location ~ \.php$ {
            # An HTTP GET maps to the memcached get command; PUT maps to set
            set $key $uri$args;
            srcache_fetch GET /memc $key;  # On a PHP request, look in memcache first; on a miss the request is handled normally
            srcache_store PUT /memc $key;  # After the request, store the result in memcache so the next request is served from the cache
            root html;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            #fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
            include fastcgi.conf;
        }
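Before starting the server, the edited file can be validated with nginx -t, which parses the configuration without serving traffic. A short sketch under the same prefix assumption; check_conf is a hypothetical wrapper, not an OpenResty command.

```shell
#!/bin/sh
# Assumed install prefix from the ./configure step.
NGINX_BIN=/usr/local/openresty/nginx/sbin/nginx

# check_conf BIN (hypothetical helper): runs `BIN -t` to test the
# configuration; returns 2 if the binary is missing or not executable.
check_conf() {
    if [ ! -x "$1" ]; then
        echo "nginx binary not found: $1" >&2
        return 2
    fi
    "$1" -t
}

if check_conf "$NGINX_BIN"; then
    echo "configuration OK"
else
    echo "configuration check failed or was skipped"
fi
```

A typo in a memc_* or srcache_* directive, or a module that was not compiled in, would typically surface here as an "unknown directive" error rather than at request time.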

(4) Create the test files

You can copy the previously used test files:

    [root@server1 conf]# cp /usr/local/lnmp/nginx/html/index.php /usr/local/openresty/nginx/html/
    [root@server1 conf]# cp /usr/local/lnmp/nginx/html/example.php /usr/local/openresty/nginx/html/
    [root@server1 conf]# cd /usr/local/openresty/nginx/html/
    [root@server1 html]# ls
    50x.html  example.php  index.html  index.php

(5) Start OpenResty

[root@server1 html]# /usr/local/openresty/nginx/sbin/nginx

(6) Testing

Test from the physical host acting as the client:

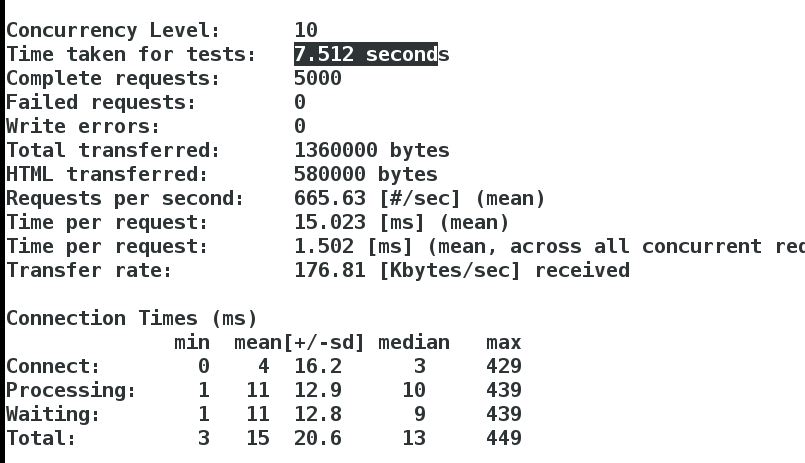

When memcache is not used:

[root@foundation1 ~]# ab -c 10 -n 5000 http://172.25.1.1/index.php

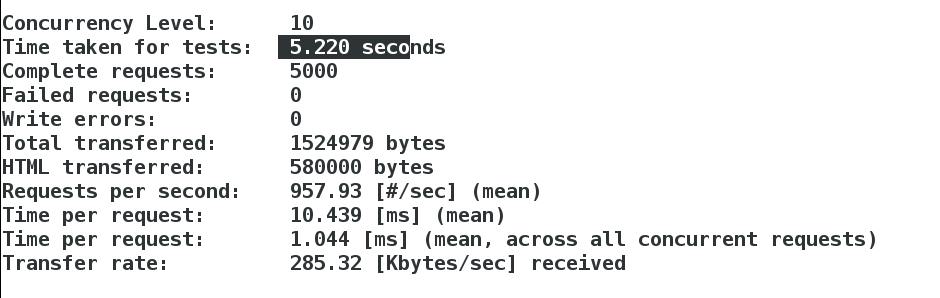
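The two figures that matter most in the ab report are "Requests per second" and the first "Time per request" line (the mean per request). To compare runs without reading screenshots, they can be extracted with a small filter. This is a sketch only; ab_summary is a hypothetical helper name, and it assumes the standard text report that ab prints.

```shell
#!/bin/sh
# ab_summary (hypothetical helper): reads an ab report on stdin and prints
# the requests-per-second figure and the mean time per request in ms.
ab_summary() {
    awk -F: '
        /^Requests per second/          { split($2, a, " "); rps = a[1] }
        /^Time per request/ && !seen    { split($2, a, " "); tpr = a[1]; seen = 1 }
        END { printf "rps=%s ms_per_request=%s\n", rps, tpr }
    '
}

# Example usage, with the URL from the test above:
#   ab -c 10 -n 5000 http://172.25.1.1/index.php | ab_summary
```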

Comparison of request times:

Without the memcache cache, but with the nginx (OpenResty) cache:

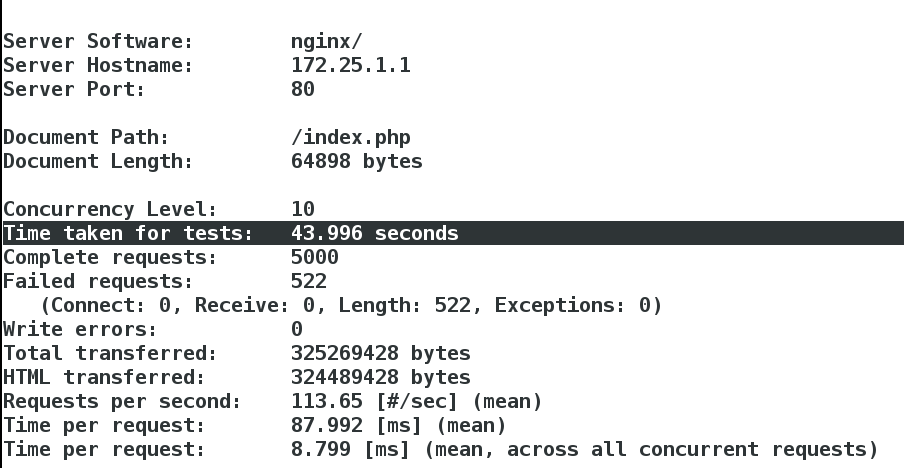

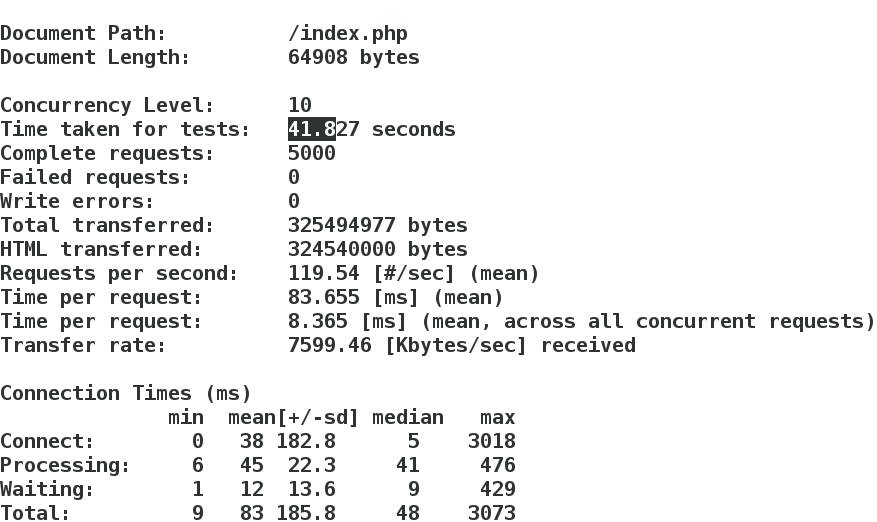

Test with the memcache cache in use:

[root@foundation1 ~]# ab -c 10 -n 5000 http://172.25.1.1/example.php

Comparison of request times:

Requests take longer when memcache is used alone than when the nginx (OpenResty) cache is combined with memcache.
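One way to check that responses really are being served from memcached is to watch its get_hits and get_misses counters before and after a benchmark run. The sketch below assumes nc (netcat) is installed and that memcached listens on localhost:11211 as in the upstream block; memc_stat is a hypothetical helper that only parses the output of the memcached stats command.

```shell
#!/bin/sh
# memc_stat NAME (hypothetical helper): reads memcached `stats` output on
# stdin and prints the value of the counter called NAME.
memc_stat() {
    tr -d '\r' | awk -v name="$1" '$1 == "STAT" && $2 == name { print $3 }'
}

# Example usage, assuming nc is available on the server:
#   printf 'stats\r\nquit\r\n' | nc localhost 11211 | memc_stat get_hits
#   printf 'stats\r\nquit\r\n' | nc localhost 11211 | memc_stat get_misses
```

If get_hits grows by roughly the number of benchmark requests while get_misses stays nearly flat, most responses were probably answered from the cache rather than by PHP.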