A crawler works in three steps:

1. Get the HTML of the web page you want to crawl.

2. Parse the HTML to extract the information you want.

3. Download the text and images locally or store them in a database.
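The three steps plus "follow the next-page link" fit in a small loop. This is a minimal sketch, not the code used below. `fetch`, `parse` and `store` are hypothetical callables that you pass in, for example requests, Beautiful Soup and a database insert. The `'javascript:;'` last-page marker mirrors the Lagou pager handled in index.py.

```python
def crawl(start_url, fetch, parse, store, last_page_marker='javascript:;'):
    """Run fetch -> parse -> store for each page, following next-page links.

    fetch(url) returns the page HTML, parse(html) returns (records, next_url),
    store(records) persists them. All three are supplied by the caller.
    Returns the number of pages processed.
    """
    url = start_url
    pages = 0
    while url:
        html = fetch(url)                # step 1: get the page HTML
        records, next_url = parse(html)  # step 2: extract the wanted fields
        store(records)                   # step 3: save locally or to a database
        pages += 1
        # Stop when there is no next link or it is the last-page placeholder
        if next_url and next_url != last_page_marker:
            url = next_url
        else:
            url = None
    return pages
```

Passing the three steps in as functions keeps the loop testable without network access or a database.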

Environment: MySQL + Python 2.7 (32-bit) + VS Code

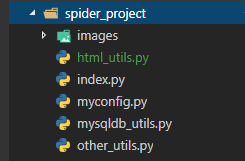

html_utils.py (requesting and parsing)

```python
# -*- coding: utf-8 -*-
import sys

import requests
from bs4 import BeautifulSoup

import other_utils as otherUtil

# Python 2 only: make UTF-8 the default encoding for implicit str/unicode conversions
reload(sys)
sys.setdefaultencoding('UTF-8')


# Get the HTML of the whole page (returns the requests Response object)
def download_page_html(url, headers):
    data = requests.get(url, headers=headers)
    return data


# Parse the HTML and pull out the fields of each job listing
def lagou_parse_page_html(data):
    soup = BeautifulSoup(data.content, "html.parser")
    # All <li> tags that hold job information
    ul = soup.find_all('li', attrs={'class': 'con_list_item default_list'})
    object_list = []
    for li in ul:
        # Job title (the data-positionname attribute of the <li> tag)
        job_name = li.get('data-positionname')
        # Salary range
        salary_range = li.get('data-salary')
        # Company name
        company_name = li.get('data-company')
        # Company address (text of the <em> child of the <li>)
        company_address = li.find('em').string
        # Company type and funding (text of <div class="industry">, whitespace stripped)
        company_type = li.find('div', attrs={'class': 'industry'}).string.strip()
        # Job requirements: keep the last text fragment of <div class="li_b_l">
        requirement = li.find('div', attrs={'class': 'li_b_l'}).stripped_strings
        job_requirement = ''
        for text in requirement:  # 'text' instead of 'str', which shadowed the built-in
            job_requirement = text.strip()
        # Company self-description (drop the first and last characters, presumably quotation marks)
        company_desc = li.find('div', attrs={'class': 'li_b_r'}).string[1:-1]
        # Company logo (the src has no scheme, so prepend "https:")
        company_logo = 'https:' + li.find('img').get('src')
        img_name = li.find('img').get('alt')
        # Download the company logo locally
        otherUtil.download_img(company_logo, img_name)
        job = {
            'jobName': job_name,
            'salaryRange': salary_range,
            'companyName': company_name,
            'companyAddress': company_address,
            'companyType': company_type,
            'jobRequirement': job_requirement,
            'companyDesc': company_desc,
            'companyLogo': company_logo
        }
        object_list.append(job)
    # URL of the next page ('javascript:;' on the last page); the search seems to need to start from a parent node
    pager = soup.find('div', attrs={'id': 's_position_list'}).find('div', attrs={'class': 'pager_container'})
    next_page_a = pager.contents[-2]
    next_page_url = next_page_a.get('href')
    return object_list, next_page_url
```

For an easy-to-follow explanation of the Requests module, see <https://zhuanlan.zhihu.com/p/20410446>. His column also has good crawler tutorials.

Beautiful Soup documentation in Chinese: <https://www.crummy.com/software/BeautifulSoup/bs4/doc/index.zh.html>

That completes requesting and parsing the page. The data can then be saved locally or to a database, depending on your needs.
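If Beautiful Soup is not available, the attribute extraction can also be done with the standard library. This is a minimal Python 3 sketch that swaps in `html.parser.HTMLParser` for Beautiful Soup. It only reads the three `data-*` attributes that `lagou_parse_page_html` uses. The class `JobListParser`, the helper `parse_jobs` and the sample markup in the test are made up for illustration.

```python
from html.parser import HTMLParser


class JobListParser(HTMLParser):
    """Collect data-* attributes from <li class="con_list_item default_list"> tags."""

    WANTED_CLASSES = {'con_list_item', 'default_list'}

    def __init__(self):
        HTMLParser.__init__(self)
        self.jobs = []

    def handle_starttag(self, tag, attrs):
        if tag != 'li':
            return
        attr_map = dict(attrs)
        # An attribute written without a value comes through as None
        classes = set((attr_map.get('class') or '').split())
        # Match when both classes are present, whatever their order
        if self.WANTED_CLASSES <= classes:
            self.jobs.append({
                'jobName': attr_map.get('data-positionname'),
                'salaryRange': attr_map.get('data-salary'),
                'companyName': attr_map.get('data-company'),
            })


def parse_jobs(html):
    """Return one dict per matching <li> tag in html."""
    parser = JobListParser()
    parser.feed(html)
    parser.close()
    return parser.jobs
```

There is one design difference. Beautiful Soup matches a multi-word class string like `'con_list_item default_list'` against the exact attribute value, so the class order must match. This sketch compares the classes as a set instead.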

other_utils.py (downloading)

This file also contains code that scrapes proxy IPs from <http://www.xicidaili.com/wt>, but I did not end up using it. Only the image-download function is used.
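`download_img` below hard-codes a Windows path and re-encodes it to GBK because `img_name` is Chinese. That workaround is specific to Python 2. On Python 3, file paths are Unicode strings, so a Chinese name needs no decode/encode step. This is a hedged Python 3 sketch of the same step. The helper names `build_image_path` and `save_image` are hypothetical. Replacing characters that Windows forbids in file names is my own addition, since a company name could contain one.

```python
import os
import re
import tempfile

# Characters Windows does not allow in file names
_INVALID_CHARS = re.compile(r'[<>:"/\\|?*]')


def build_image_path(directory, img_name, ext='.jpg'):
    """Join directory with a sanitized img_name; Chinese names work as-is on Python 3."""
    safe_name = _INVALID_CHARS.sub('_', img_name).strip() or 'unnamed'
    return os.path.join(directory, safe_name + ext)


def save_image(path, content):
    """Write raw image bytes (e.g. requests' response.content) in binary mode."""
    folder = os.path.dirname(path)
    if folder and not os.path.isdir(folder):
        os.makedirs(folder)
    with open(path, 'wb') as f:
        f.write(content)


if __name__ == '__main__':
    # Demo: write placeholder bytes into a temporary directory
    demo_dir = tempfile.mkdtemp()
    demo_path = build_image_path(demo_dir, u'示例公司')
    save_image(demo_path, b'not really a jpeg')
    print(demo_path)
```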

Some sites, such as Douban's Top 250 movies, need more work to get past their anti-crawling measures. Otherwise your IP can easily be blocked.
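Rotating proxies is one way around IP blocking. `check_proxie` in other_utils.py tests a proxy by opening a Telnet connection with a 200-second timeout. A lighter alternative, offered as a sketch, is a plain TCP connect with a short timeout. Note that `telnetlib` is deprecated since Python 3.11 and removed in 3.13.

`check_proxy` and `make_proxies` are hypothetical names. Using the `http://` scheme for the `"https"` entry is my assumption about typical free HTTP proxies, which tunnel HTTPS traffic. This differs from `get_proxy`, which uses `https://`.

```python
import socket


def check_proxy(ip, port, timeout=5.0):
    """Return True if a TCP connection to ip:port succeeds within timeout seconds.

    This only shows the port is open; it does not prove the proxy forwards traffic.
    """
    try:
        conn = socket.create_connection((ip, int(port)), timeout=timeout)
    except (socket.error, ValueError):
        # socket.error covers refused connections, timeouts and DNS failures;
        # ValueError covers a port string that is not a number
        return False
    conn.close()
    return True


def make_proxies(ip, port):
    """Build the dict passed to requests.get(..., proxies=...).

    Assumption: a plain HTTP proxy, so both entries use the http:// scheme.
    """
    address = 'http://{0}:{1}'.format(ip, port)
    return {'http': address, 'https': address}
```

A usable proxy from this check would then be passed as `requests.get(url, headers=headers, proxies=make_proxies(ip, port))`.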

```python
# -*- coding: utf-8 -*-
import telnetlib

import requests
from bs4 import BeautifulSoup

import myconfig as config


# Scrape proxy IPs from a proxy-list website
def get_ip_list(url, headers):
    proxy_list = []
    data = requests.get(url, headers=headers)
    # Parse with Beautiful Soup
    soup = BeautifulSoup(data.content, "html.parser")
    # Table rows; these selectors depend on that site's markup
    ul_list = soup.find_all('tr', limit=20)
    for i in range(2, len(ul_list)):
        print 'wait for i:' + str(i)
        line = ul_list[i].find_all('td')
        ip = line[1].text
        port = line[2].text
        # Check whether the proxy IP is usable
        flag = check_proxie(ip, port)
        print str(i) + ' is ' + str(flag)
        if flag:
            proxy = get_proxy(ip, port)
            proxy_list.append(proxy)
    return proxy_list


# Verify that a proxy IP accepts connections
def check_proxie(ip, port):
    try:
        conn = telnetlib.Telnet(str(ip), port=int(port), timeout=200)
        conn.close()  # close the test connection instead of leaking it
    except Exception as e:
        # Print the error message (e.message is deprecated)
        print str(e)
        return False
    else:
        return True


# Format the proxy parameters for requests, adding http/https
def get_proxy(ip, port):
    aip = ip + ':' + port
    proxy_ip = str('http://' + aip)
    proxy_ips = str('https://' + aip)
    proxy = {"http": proxy_ip, "https": proxy_ips}
    return proxy


# Download a company logo
def download_img(url, img_name):
    try:
        # Local save path
        temp_path = 'D:\\tarinee_study_word\\Python\\spider_project\\images\\' + str(img_name) + '.jpg'
        # img_name is Chinese: re-encode the path to GBK for the Windows file system (Python 2)
        save_path = temp_path.decode('UTF-8').encode('GBK')
        r = requests.get(url)
        r.raise_for_status()
        # Binary mode; plain open() is enough, codecs.open adds nothing here
        with open(save_path, 'wb') as f:
            f.write(r.content)
    except Exception as e:
        print 'Error Msg:' + str(e)
```

mysqldb_utils.py (saving to the database)

The myconfig module imported in this file is my configuration file. It holds the database connection settings, such as the account name and password.
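The post does not show myconfig.py itself. Below is a hypothetical version, based only on the names the other files read from it: HOST, PORT, USER, PASSWD, DB, CHARSET, HEADERS and DOWNLOAD_URL. Every value is a placeholder to replace with your own, and the URL is not the real listing address.

```python
# myconfig.py: hypothetical example, every value is a placeholder

# Database connection settings read by mysqldb_utils.py
HOST = 'localhost'
PORT = 3306           # MySQLdb expects an integer port
USER = 'spider'
PASSWD = 'change-me'
DB = 'spider_db'
CHARSET = 'utf8'      # MySQL's utf8 covers common Chinese text; utf8mb4 is needed for 4-byte characters such as emoji

# Request settings read by index.py
HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
}
DOWNLOAD_URL = 'https://example.com/jobs/page-1'  # placeholder start URL
```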

```python
# -*- coding: utf-8 -*-
import MySQLdb

import myconfig as config


# Save the job list to the database
def insert_data(object_list):
    # Create the connection
    conn = MySQLdb.Connect(
        host=config.HOST,
        port=config.PORT,
        user=config.USER,
        passwd=config.PASSWD,
        db=config.DB,
        charset=config.CHARSET
    )
    # Get a cursor
    cur = conn.cursor()
    # Build the SQL statement. Values are formatted straight into the SQL,
    # so a value containing a single quote (') will break the statement.
    sql = "insert into t_job(company_logo,company_name,company_address,company_desc,salary_range,job_name,job_requirement,company_type) values('{0}','{1}','{2}','{3}','{4}','{5}','{6}','{7}')"
    #str = 'insert into t_job(company_logo,company_name,company_address,company_desc,salary_range,job_name,job_requirement,company_type) values({companyLogo},{companyName},{companyAddress},{companyDesc},{salaryRange},{jobName},{jobRequirement},{companyType})'
    # Dict keys in the same order as the columns above: dict.values() order
    # is arbitrary in Python 2.7, so values must be taken out by key
    keys = ('companyLogo', 'companyName', 'companyAddress', 'companyDesc',
            'salaryRange', 'jobName', 'jobRequirement', 'companyType')
    for index in object_list:
        d = [index[k] for k in keys]
        #skr = sql.format(d[0].encode('GBK'),d[1].encode('GBK'),d[2].encode('GBK'),d[3].encode('GBK'),d[4].encode('GBK'),d[5].encode('GBK'),d[6].encode('GBK'),d[7].encode('GBK'))
        skr = sql.format(*d)
        #print skr
        cur.execute(skr)  # Execute the SQL statement
    # Commit
    conn.commit()
    print 'insert success......'
    # Close the cursor and the connection
    cur.close()
    conn.close()
```

index.py (entry point)

```python
# -*- coding: utf-8 -*-
import html_utils as htmlUtil
import mysqldb_utils as dbUtil
import myconfig as config


def lagou_main():
    url = config.DOWNLOAD_URL
    while url:
        print url
        # 1. Get the whole HTML page
        html = htmlUtil.download_page_html(url, config.HEADERS)
        # 2. Parse the HTML page to get the data you want
        data, next_page_url = htmlUtil.lagou_parse_page_html(html)
        # 'javascript:;' marks the last page
        if next_page_url != 'javascript:;':
            url = next_page_url
        else:
            url = False
        # 3. Save to the database
        dbUtil.insert_data(data)


if __name__ == '__main__':
    lagou_main()
```

Problems encountered while working with the database:

1. Installing MySQLdb fails with: error: Microsoft Visual C++ 9.0 is required (Unable to find vcvarsall.bat)

Solution:

1. First install the wheel package so that pip can install .whl files:

```
pip install wheel
```

2. Download the MySQL-python wheel that matches your Python version and bitness from <https://www.lfd.uci.edu/~gohlke/pythonlibs/#mysql-python>, then install it. For 32-bit Python 2.7:

```
pip install MySQL_python-1.2.5-cp27-none-win32.whl
```
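To pick the right file from that page, you need your interpreter's version and bitness. This post uses 32-bit Python 2.7, hence `cp27` and `win32`. The small sketch below reports both. `wheel_tags` is a hypothetical helper, and it assumes an x86 or x64 Windows build of CPython (ARM builds use other tags).

```python
import platform
import struct
import sys


def wheel_tags():
    """Return (python tag, Windows platform tag) to match against wheel file names."""
    py_tag = 'cp{0}{1}'.format(sys.version_info[0], sys.version_info[1])
    bits = struct.calcsize('P') * 8  # pointer size in bits: 32 or 64
    # Windows wheel platform tags: win32 for 32-bit, win_amd64 for 64-bit x86
    plat_tag = 'win32' if bits == 32 else 'win_amd64'
    return py_tag, plat_tag


if __name__ == '__main__':
    print(sys.version)
    print(platform.architecture()[0])
    print(wheel_tags())
```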

2. execute(sql[, list]) in Python 2.7: when I print a list object, or pass one in as the parameters, the encoding of the data in the list goes wrong. Taking the values out one at a time works fine. I have not found a solution, so for now I take each value individually and splice it into the SQL statement. If you know the answer, please leave a comment.

MySQLdb can accept a list object directly, or run executemany(sql, list) to insert many rows in one call. Because of this list encoding problem, however, it reports errors.
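A likely explanation, offered as a guess: in Python 2, printing a list shows the repr of each element, so Chinese strings appear as escapes like `u'\u4e2d\u6587'` even when the data itself is fine. Letting the driver bind the parameters, instead of formatting them into the SQL text, also avoids the single-quote problem.

The sketch below uses the standard-library `sqlite3` as a runnable stand-in for MySQLdb. With MySQLdb, the call is the same `cur.executemany(sql, rows)`, but the placeholder is `%s` and the connection needs a charset such as `config.CHARSET`. `insert_jobs`, `COLUMNS` and `KEYS` are hypothetical names.

```python
# -*- coding: utf-8 -*-
import sqlite3

# Columns of t_job and the matching keys of each job dict, in the same order
COLUMNS = ('company_logo', 'company_name', 'company_address', 'company_desc',
           'salary_range', 'job_name', 'job_requirement', 'company_type')
KEYS = ('companyLogo', 'companyName', 'companyAddress', 'companyDesc',
        'salaryRange', 'jobName', 'jobRequirement', 'companyType')


def insert_jobs(conn, jobs, placeholder='?'):
    """Insert job dicts with one parameterized executemany call.

    sqlite3 uses '?' placeholders; MySQLdb uses '%s'.
    Returns the number of rows sent.
    """
    sql = 'INSERT INTO t_job ({0}) VALUES ({1})'.format(
        ', '.join(COLUMNS), ', '.join([placeholder] * len(COLUMNS)))
    # Take values out by key so they always line up with COLUMNS
    rows = [tuple(job[k] for k in KEYS) for job in jobs]
    cur = conn.cursor()
    cur.executemany(sql, rows)  # the driver quotes and encodes each value
    conn.commit()
    cur.close()
    return len(rows)
```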

Summary

Writing the Python code itself posed no problem, but Python's encoding issues still confuse me. I have read other people's explanations, yet I feel I only half understand them. I probably need more hands-on practice while writing code to really get it.
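A rule of thumb clears up many of these problems: decode bytes to text once on the way in, work with Unicode, and encode once on the way out. Each time, use the codec that actually produced the bytes. The small demonstration below runs on Python 3 and 2.7. The helper names `byte_lengths` and `decode_as` are hypothetical.

```python
# -*- coding: utf-8 -*-


def byte_lengths(text):
    """Return how many bytes text takes in UTF-8 and in GBK."""
    return len(text.encode('utf-8')), len(text.encode('gbk'))


def decode_as(data, codec):
    """Decode bytes with the given codec, replacing bytes that cannot be decoded."""
    return data.decode(codec, 'replace')


if __name__ == '__main__':
    word = u'中文'
    print(byte_lengths(word))  # (6, 4): 3 bytes per character in UTF-8, 2 in GBK
    utf8_bytes = word.encode('utf-8')
    # Right codec: the original text comes back
    print(decode_as(utf8_bytes, 'utf-8') == word)
    # Wrong codec: UTF-8 bytes read as GBK give mojibake
    print(decode_as(utf8_bytes, 'gbk') == word)
```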