In day-to-day business development, you occasionally need to remove duplicate data from a List. At this point some readers may ask: why not use a Set or LinkedHashSet directly, so that duplicate data never arises in the first place?

I have to say that readers who ask this question are sharp and see the essence of the problem at a glance.

However, the situations encountered in real business development are often more complex. The List may be a legacy of older code, or it may be the return type imposed by an interface you call, so a List is all you can receive. Or you may only discover the problem halfway through writing the code, when you merge several collections. In short, there are many ways to end up here, so I won't list them one by one.

When you run into this problem, if you can change the original code and replace the List type with a Set type, simply make that change. But if the type can't be changed at all, or changing it would cost too much, the following six deduplication methods will help you solve the problem.

Prerequisite knowledge

Before we begin, let's clarify two pairs of concepts: unordered and ordered collections, and order and disorder. Both pairs come up repeatedly in the method implementations below, so it is worth pinning them down before we start.

Unordered collection

An unordered collection is one in which the order the data is read in may differ from the order it was inserted in. For example, the data is inserted in the order 1, 5, 3, 7 but read back in the order 1, 3, 5, 7.

Ordered collection

An ordered collection is the opposite of an unordered one: the reading order is the same as the insertion order. For example, if the data is inserted in the order 1, 5, 3, 7, it is also read back in the order 1, 5, 3, 7.

Order and disorder

From the definitions of unordered and ordered collections above, we can derive the concepts of order and disorder.

Order means that the data is arranged and read in the sequence we expect. Disorder means that the arrangement and reading sequence do not match what we expect.

PS: Don't worry if the concepts of order and disorder are not yet entirely clear. The examples below should make them concrete.
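To make the difference between insertion order and reading order concrete, here is a minimal sketch. The class name CollectionOrderDemo and the helper readOrder are hypothetical names chosen for illustration; they are not part of the original examples. The helper inserts values into a collection one at a time and returns the order in which they are read back.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.TreeSet;

class CollectionOrderDemo {

    // Hypothetical helper: inserts the values in the given order,
    // then copies the collection into a List in its iteration (reading) order.
    static List<Integer> readOrder(Collection<Integer> target, int... values) {
        for (int value : values) {
            target.add(value);
        }
        return new ArrayList<>(target);
    }

    public static void main(String[] args) {
        // ArrayList and LinkedHashSet read back in insertion order
        System.out.println(readOrder(new ArrayList<>(), 1, 5, 3, 7));     // [1, 5, 3, 7]
        System.out.println(readOrder(new LinkedHashSet<>(), 1, 5, 3, 7)); // [1, 5, 3, 7]
        // TreeSet reads back in sorted (natural) order
        System.out.println(readOrder(new TreeSet<>(), 1, 5, 3, 7));       // [1, 3, 5, 7]
        // HashSet makes no promise about reading order, so no expected output is given
        System.out.println(readOrder(new HashSet<>(), 1, 5, 3, 7));
    }
}
```

Only the HashSet line has no expected output in the comments, because its iteration order is not specified by the API.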

Method 1: contains-based deduplication (ordered)

The first idea that comes to mind for deduplicating data is to create a new collection and loop over the original one. On each iteration we check the current element: if it does not yet exist in the new collection, we insert it, and if it already exists, we discard it. When the loop finishes, we have a collection without duplicate elements. The implementation code is as follows:

public class ListDistinctExample {

public static void main(String[] args) {

List<Integer> list = new ArrayList<Integer>() {{

add(1);

add(3);

add(5);

add(2);

add(1);

add(3);

add(7);

add(2);

}};

System.out.println("Original set:" + list);

method(list);

}

/**

* Custom de duplication

* @param list

*/

public static void method(List<Integer> list) {

// New set

List<Integer> newList = new ArrayList<>(list.size());

list.forEach(i -> {

if (!newList.contains(i)) { // Insert if it does not exist in the new collection

newList.add(i);

}

});

System.out.println("De duplication set:" + newList);

}

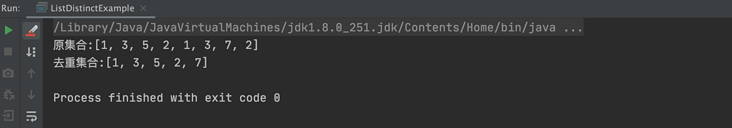

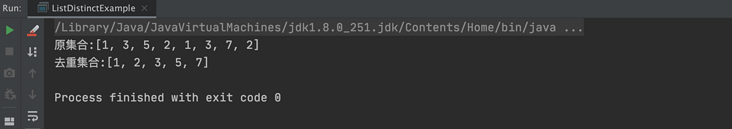

}The results of the above procedures are as follows:

The advantages of this method are that it is easy to understand and the final collection is ordered. "Ordered" here means that the new collection keeps the order of the original collection. The disadvantage is that it takes a bit more code and is not particularly concise or elegant.
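One more point worth noting: newList.contains(i) scans the new list on every iteration, so the work grows roughly quadratically with the size of the list. A common variation, shown here only as a sketch and not as part of the original six methods, tracks already-seen elements in a HashSet while still appending to a List so the original order is kept. The class name SeenSetDistinct and the method distinctInOrder are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class SeenSetDistinct {

    // Hypothetical helper: keeps the first occurrence of each element, in original order.
    static <T> List<T> distinctInOrder(List<T> list) {
        Set<T> seen = new HashSet<>();
        List<T> result = new ArrayList<>(list.size());
        for (T item : list) {
            // Set.add returns false when the element is already present
            if (seen.add(item)) {
                result.add(item);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(1, 3, 5, 2, 1, 3, 7, 2);
        System.out.println(distinctInOrder(list)); // [1, 3, 5, 2, 7]
    }
}
```

The trade-off is a little extra memory for the seen set in exchange for a constant-time membership check on average.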

Method 2: iterator deduplication (unordered)

Besides building a new collection as above, we can also deduplicate a List by looping with an iterator and checking each element. If the collection contains two or more copies of the current element, we delete the current element. When the loop finishes, we again have a collection without duplicate data. The implementation code is as follows:

public class ListDistinctExample {

public static void main(String[] args) {

List<Integer> list = new ArrayList<Integer>() {{

add(1);

add(3);

add(5);

add(2);

add(1);

add(3);

add(7);

add(2);

}};

System.out.println("Original set:" + list);

method_1(list);

}

/**

* Using iterators to remove duplicates

* @param list

*/

public static void method_1(List<Integer> list) {

Iterator<Integer> iterator = list.iterator();

while (iterator.hasNext()) {

// Gets the value of the loop

Integer item = iterator.next();

// If two identical values exist

if (list.indexOf(item) != list.lastIndexOf(item)) {

// Remove the last same value

iterator.remove();

}

}

System.out.println("De duplication set:" + list);

}

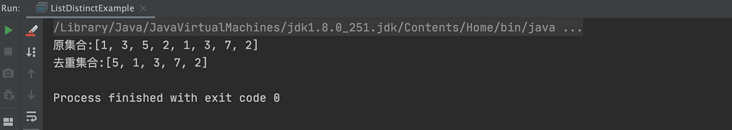

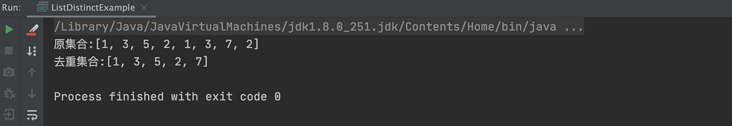

}The results of the above procedures are as follows:

This method takes slightly less code than the previous one and does not need a new collection, but the resulting collection is unordered: its arrangement is inconsistent with the original collection. Because the earlier occurrences are removed and only the last occurrence of each value is kept, the example list becomes [5, 1, 3, 7, 2] rather than [1, 3, 5, 2, 7]. It is therefore not the optimal solution.
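If in-place removal is needed but the first occurrence of each value should be kept, the iterator can be combined with a set of already-seen values. This is a sketch of a variation, not one of the original six methods; the class name IteratorKeepFirst and the method removeLaterDuplicates are hypothetical, and the list passed in is assumed to be mutable.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Iterator;
import java.util.List;
import java.util.Set;

class IteratorKeepFirst {

    // Hypothetical helper: removes every element that has already been seen,
    // so the first occurrence of each value stays where it was.
    static <T> void removeLaterDuplicates(List<T> list) {
        Set<T> seen = new HashSet<>();
        Iterator<T> iterator = list.iterator();
        while (iterator.hasNext()) {
            // Set.add returns false for a value that was seen earlier
            if (!seen.add(iterator.next())) {
                iterator.remove();
            }
        }
    }

    public static void main(String[] args) {
        List<Integer> list = new ArrayList<>(Arrays.asList(1, 3, 5, 2, 1, 3, 7, 2));
        removeLaterDuplicates(list);
        System.out.println(list); // [1, 3, 5, 2, 7]
    }
}
```

Calling this on an immutable list (for example one returned directly by Arrays.asList) would fail on iterator.remove(), which is why the example wraps the data in an ArrayList.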

Method 3: HashSet deduplication (unordered)

We know that HashSet deduplicates by nature, so we only need to convert the List into a HashSet. The implementation code is as follows:

public class ListDistinctExample {

public static void main(String[] args) {

List<Integer> list = new ArrayList<Integer>() {{

add(1);

add(3);

add(5);

add(2);

add(1);

add(3);

add(7);

add(2);

}};

System.out.println("Original set:" + list);

method_2(list);

}

/**

* Using HashSet to remove duplicate

* @param list

*/

public static void method_2(List<Integer> list) {

HashSet<Integer> set = new HashSet<>(list);

System.out.println("De duplication set:" + set);

}

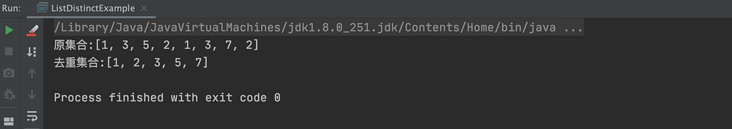

}The results of the above procedures are as follows:

The implementation code of this method is relatively simple, but the disadvantage is that HashSet makes no guarantee about iteration order, so the order of the new collection can differ from the original. With small integers like these the output typically appears sorted, but that is a side effect of how the values are hashed, not a guaranteed sort. If the order of the collection matters, this method cannot meet the requirement.

Method 4: LinkedHashSet deduplication (ordered)

Since HashSet does not preserve insertion order and so cannot meet the requirement, we can use LinkedHashSet, which both removes duplicates and keeps the order of the collection. The implementation code is as follows:

public class ListDistinctExample {

public static void main(String[] args) {

List<Integer> list = new ArrayList<Integer>() {{

add(1);

add(3);

add(5);

add(2);

add(1);

add(3);

add(7);

add(2);

}};

System.out.println("Original set:" + list);

method_3(list);

}

/**

* Use LinkedHashSet to remove duplicate

* @param list

*/

public static void method_3(List<Integer> list) {

LinkedHashSet<Integer> set = new LinkedHashSet<>(list);

System.out.println("De duplication set:" + set);

}

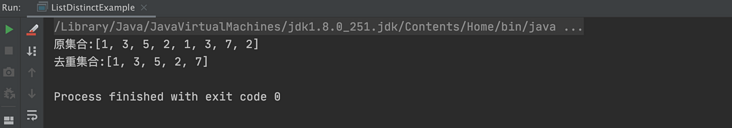

}The results of the above procedures are as follows:

From the code and execution results above, we can see that LinkedHashSet is the simplest implementation so far, and the resulting collection keeps the order of the original collection. It is a deduplication method worth considering.
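Since the surrounding code often still expects a List rather than a Set, the deduplicated Set usually has to be copied back. A minimal sketch of that step, assuming a hypothetical helper named distinctKeepingOrder in a hypothetical class LinkedHashSetToList:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;

class LinkedHashSetToList {

    // Hypothetical helper: deduplicates through a LinkedHashSet,
    // then copies the result back into a new ArrayList.
    static <T> List<T> distinctKeepingOrder(List<T> list) {
        return new ArrayList<>(new LinkedHashSet<>(list));
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(1, 3, 5, 2, 1, 3, 7, 2);
        System.out.println(distinctKeepingOrder(list)); // [1, 3, 5, 2, 7]
    }
}
```

The input list is left untouched; the caller receives a new List with the first occurrence of each element in its original position.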

Method 5: TreeSet deduplication (unordered)

In addition to the Set implementations above, we can also use TreeSet to deduplicate. The implementation code is as follows:

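    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.List;
    import java.util.TreeSet;

    public class ListDistinctExample {

        public static void main(String[] args) {
            List<Integer> list = new ArrayList<>(Arrays.asList(1, 3, 5, 2, 1, 3, 7, 2));
            System.out.println("Original set:" + list);
            method_4(list);
        }

        /**
         * Use TreeSet to remove duplicates (sorted, so the original order is lost)
         * @param list the list to de-duplicate
         */
        public static void method_4(List<Integer> list) {
            // TreeSet drops duplicates and keeps elements in their natural (ascending) order
            TreeSet<Integer> set = new TreeSet<>(list);
            System.out.println("De duplication set:" + set);
        }
    }

The results of the above program are as follows:

    Original set:[1, 3, 5, 2, 1, 3, 7, 2]
    De duplication set:[1, 2, 3, 5, 7]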

Unfortunately, although the TreeSet implementation is also simple, it sorts its elements (by their natural ordering here), so like HashSet it does not keep the order of the original collection and cannot meet our needs.

Method 6: Stream deduplication (ordered)

JDK 8 introduced the very practical Stream API, which can implement many features, including the following deduplication:

    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.List;
    import java.util.stream.Collectors;

    public class ListDistinctExample {

        public static void main(String[] args) {
            List<Integer> list = new ArrayList<>(Arrays.asList(1, 3, 5, 2, 1, 3, 7, 2));
            System.out.println("Original set:" + list);
            method_5(list);
        }

        /**
         * Using Stream to remove duplicates
         * @param list the list to de-duplicate
         */
        public static void method_5(List<Integer> list) {
            // distinct() keeps the first occurrence of each element in encounter order
            list = list.stream().distinct().collect(Collectors.toList());
            System.out.println("De duplication set:" + list);
        }
    }

The results of the above program are as follows:

    Original set:[1, 3, 5, 2, 1, 3, 7, 2]
    De duplication set:[1, 3, 5, 2, 7]

Unlike the other methods, Stream does not require us to create a new collection explicitly: the result of the pipeline can be assigned straight back to the original variable. The implementation code is also very concise, and the deduplicated collection keeps the order of the original. It is our preferred deduplication method.
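The same pipeline works for any element type, because distinct() compares elements with equals(). As a sketch, it can be wrapped in a small generic helper; the class name StreamDistinct and the method name distinct are hypothetical and chosen only for this illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class StreamDistinct {

    // Hypothetical helper: returns a new List with duplicates removed.
    // For an ordered source such as a List, the first occurrence of each element is kept.
    static <T> List<T> distinct(List<T> list) {
        return list.stream().distinct().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(distinct(Arrays.asList(1, 3, 5, 2, 1, 3, 7, 2))); // [1, 3, 5, 2, 7]
        System.out.println(distinct(Arrays.asList("b", "a", "b", "c", "a"))); // [b, a, c]
    }
}
```

For custom classes this only deduplicates correctly if equals() and hashCode() are implemented consistently for the type.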

Summary

In this article we introduced six ways to deduplicate a collection. Only two of them are both concise and keep the order of the original collection after deduplication: LinkedHashSet deduplication and Stream deduplication. Of the two, Stream deduplication needs no explicitly created new collection, so it is the method we give priority to.

