Implementation methods

- Bonding

- Network Teaming

Environment

| OS | SELinux | firewalld | NICs |
|---|---|---|---|
| CentOS7 | Disabled | Disabled | eth0, eth1 |

Bonding

https://www.kernel.org/doc/Documentation/networking/bonding.txt

Brief introduction

The Linux bonding driver aggregates multiple network interfaces into a single logical "bonded" interface. The behavior of the bonded interface depends on the mode: in general, a mode provides either hot standby or load balancing. The driver can also monitor link integrity.

Working modes

There are seven modes (0-6). The default is balance-rr (round-robin).

Mode 0 (balance-rr), round-robin policy: transmits packets in sequential order, from the first available slave through the last. This mode provides load balancing and fault tolerance.

Mode 1 (active-backup), active-backup policy: only one slave in the bond is active. Another slave becomes active if, and only if, the active slave fails. The bond's MAC address is visible externally on only one port (network adapter), which avoids confusing the switch.

Mode 2 (balance-xor), XOR policy: selects the transmitting slave from a hash of the packet's addresses. By default, the source and destination MAC addresses are XORed and the result is taken modulo the number of slaves, so traffic to a given peer always leaves through the same slave. This mode provides load balancing and fault tolerance.

Mode 3 (broadcast), broadcast policy: transmits everything on all slave interfaces. This mode provides fault tolerance.

Mode 4 (802.3ad), IEEE 802.3ad dynamic link aggregation: creates aggregation groups whose members share the same speed and duplex settings, and transmits and receives on all slaves in the active aggregator. The switch must support IEEE 802.3ad.

Mode 5 (balance-tlb), adaptive transmit load balancing: provides fault tolerance and load balancing. Outgoing traffic is distributed according to the current load on each slave. Incoming traffic is received by the current slave. If the receiving slave fails, another slave takes over its MAC address.

Mode 6 (balance-alb), adaptive load balancing: provides fault tolerance and load balancing. It includes balance-tlb plus receive load balancing for IPv4 traffic. Receive load balancing is achieved through ARP negotiation.
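The kernel's `mode=` bonding option accepts either the number or the name of a mode. The helper below maps the numbers above to the mode names used in the kernel bonding documentation. `bond_mode_name` is a hypothetical name introduced here for illustration.

```shell
#!/usr/bin/env bash
# bond_mode_name N  (hypothetical helper)
# Map a bonding mode number (0-6) to its name in the kernel bonding docs.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;
        1) echo "active-backup" ;;
        2) echo "balance-xor" ;;
        3) echo "broadcast" ;;
        4) echo "802.3ad" ;;
        5) echo "balance-tlb" ;;
        6) echo "balance-alb" ;;
        *) echo "unknown bonding mode: $1" >&2; return 1 ;;
    esac
}

# Example: list all seven modes as "number => name".
for m in 0 1 2 3 4 5 6; do
    printf '%s => %s\n' "$m" "$(bond_mode_name "$m")"
done
```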

Configuration

Using the nmcli command

Check the status of the newly added eth0 and eth1 NICs:

[root@CentOS7 ~]# nmcli dev status
DEVICE  TYPE      STATE         CONNECTION
eth0    ethernet  disconnected  --
eth1    ethernet  disconnected  --
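In scripts, `nmcli -t -f DEVICE,TYPE,STATE device status` prints the same information in colon-separated "terse" form, for example `eth0:ethernet:disconnected`. The sketch below assumes that format and lists the Ethernet devices that are not yet attached to a connection. `free_ethernet_devices` is a hypothetical name. It reads the terse output on stdin, so you can test it without NetworkManager.

```shell
#!/usr/bin/env bash
# free_ethernet_devices  (hypothetical helper)
# Read "DEVICE:TYPE:STATE" lines (nmcli terse output) on stdin and print
# the Ethernet devices whose state is "disconnected".
free_ethernet_devices() {
    awk -F: '$2 == "ethernet" && $3 == "disconnected" { print $1 }'
}

# Typical use on a host running NetworkManager:
#   nmcli -t -f DEVICE,TYPE,STATE device status | free_ethernet_devices
```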

1) Add a bond interface in active-backup mode

[root@CentOS7 ~]# nmcli con add type bond con-name bond01 ifname bond0 mode active-backup
Connection 'bond01' (ca0305ce-110c-4411-a48e-5952a2c72716) successfully added.

2) Add the slave interfaces

[root@CentOS7 ~]# nmcli con add type bond-slave con-name bond01-slave0 ifname eth0 master bond0
Connection 'bond01-slave0' (5dd5a90c-9a2f-4f1d-8fcc-c7f4b333e3d2) successfully added.
[root@CentOS7 ~]# nmcli con add type bond-slave con-name bond01-slave1 ifname eth1 master bond0
Connection 'bond01-slave1' (a8989d38-cc0b-4a4e-942d-3a2e1eb8f95b) successfully added.

Note: if no connection name is given for a slave interface, the name is built from the connection type and the interface name.

3) Start the slave interfaces

[root@CentOS7 ~]# nmcli con up bond01-slave0
Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/14)
[root@CentOS7 ~]# nmcli con up bond01-slave1
Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/15)

4) Start the bond interface

[root@CentOS7 ~]# nmcli con up bond01
Connection successfully activated (master waiting for slaves) (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/16)
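Steps 1) to 4) can be combined into one script. The sketch below makes a few assumptions. It uses the same nmcli commands as above and names the slave connections `<connection>-slave0`, `<connection>-slave1`, and so on. `setup_bond`, `run`, and the `DRY_RUN` switch are hypothetical additions. With `DRY_RUN=1`, the script prints the commands (without shell quoting) instead of executing them.

```shell
#!/usr/bin/env bash
# run CMD...  (hypothetical helper)
# Print the command instead of executing it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# setup_bond CON_NAME BOND_IF MODE SLAVE_IF...  (hypothetical helper)
# Mirror steps 1) to 4): create the bond, add one bond-slave connection
# per interface, start the slaves, then start the bond itself.
setup_bond() {
    local con=$1 bond_if=$2 mode=$3
    shift 3
    local i slave
    run nmcli con add type bond con-name "$con" ifname "$bond_if" mode "$mode" || return 1
    i=0
    for slave in "$@"; do
        run nmcli con add type bond-slave con-name "$con-slave$i" ifname "$slave" master "$bond_if" || return 1
        i=$((i + 1))
    done
    for ((i = 0; i < $#; i++)); do
        run nmcli con up "$con-slave$i" || return 1
    done
    run nmcli con up "$con"
}
```

For example, `DRY_RUN=1 setup_bond bond01 bond0 active-backup eth0 eth1` prints the six commands used in this section. Without `DRY_RUN`, run it as root to apply them.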

5) Check the bond status

[root@CentOS7 ~]# cat /proc/net/bonding/bond0
Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)
Bonding Mode: fault-tolerance (active-backup)
Primary Slave: None
Currently Active Slave: eth0
MII Status: up
MII Polling Interval (ms): 100
Up Delay (ms): 0
Down Delay (ms): 0
Slave Interface: eth0
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:0c:29:08:2a:73
Slave queue ID: 0
Slave Interface: eth1
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:0c:29:08:2a:7d
Slave queue ID: 0

The output shows that the currently active slave is eth0.
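To check only the active slave, you can extract the `Currently Active Slave:` line shown above. The sketch below does this. `active_slave` is a hypothetical helper that takes the status file as an argument, so you can also try it on saved output.

```shell
#!/usr/bin/env bash
# active_slave [STATUS_FILE]  (hypothetical helper)
# Print the value of the "Currently Active Slave:" line of a bonding
# status file; defaults to /proc/net/bonding/bond0.
active_slave() {
    local file=${1:-/proc/net/bonding/bond0}
    sed -n 's/^Currently Active Slave: *//p' "$file"
}
```

On the host in this example, `active_slave` prints `eth0` at this point.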

6) Test

From another Linux host, ping the IP address of bond0 on this machine. Then manually disconnect the eth0 NIC and check whether the active slave switches over.

Look up the IP address of the local bond0 interface, then ping it from the other host:

[root@CentOS7 ~]# ip ad show dev bond0|sed -rn '3s#.* (.*)/24.*#\1#p'
192.168.8.129
[root@CentOS6 ~]# ping 192.168.8.129
PING 192.168.8.129 (192.168.8.129) 56(84) bytes of data.
64 bytes from 192.168.8.129: icmp_seq=1 ttl=64 time=0.600 ms
64 bytes from 192.168.8.129: icmp_seq=2 ttl=64 time=0.712 ms
64 bytes from 192.168.8.129: icmp_seq=3 ttl=64 time=2.20 ms
64 bytes from 192.168.8.129: icmp_seq=4 ttl=64 time=0.986 ms
64 bytes from 192.168.8.129: icmp_seq=7 ttl=64 time=0.432 ms
64 bytes from 192.168.8.129: icmp_seq=8 ttl=64 time=0.700 ms
64 bytes from 192.168.8.129: icmp_seq=9 ttl=64 time=0.571 ms
^C
--- 192.168.8.129 ping statistics ---
9 packets transmitted, 7 received, 22% packet loss, time 8679ms
rtt min/avg/max/mdev = 0.432/0.887/2.209/0.562 ms

While the other host was pinging, eth0 was disconnected. Two packets (icmp_seq 5 and 6) were lost during the switchover.

[root@CentOS7 ~]# cat /proc/net/bonding/bond0
Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)
Bonding Mode: fault-tolerance (active-backup)
Primary Slave: None
Currently Active Slave: eth1
MII Status: up
MII Polling Interval (ms): 100
Up Delay (ms): 0
Down Delay (ms): 0
Slave Interface: eth0
MII Status: down
Speed: Unknown
Duplex: Unknown
Link Failure Count: 4
Permanent HW addr: 00:0c:29:08:2a:73
Slave queue ID: 0
Slave Interface: eth1
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 2
Permanent HW addr: 00:0c:29:08:2a:7d
Slave queue ID: 0

The currently active slave is now eth1, which shows that the failover succeeded.
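To see exactly when the switchover happens during the ping test, you can poll the active slave while eth0 is being disconnected. The sketch below reads the same bonding status file at a fixed interval and prints a timestamped line only when the active slave changes. `watch_active_slave` and its arguments are hypothetical.

```shell
#!/usr/bin/env bash
# watch_active_slave [STATUS_FILE] [COUNT] [INTERVAL]  (hypothetical helper)
# Poll the bonding status file COUNT times, INTERVAL seconds apart, and
# print a timestamped line whenever the active slave changes.
watch_active_slave() {
    local file=${1:-/proc/net/bonding/bond0} count=${2:-60} interval=${3:-1}
    local prev="" cur n
    for ((n = 0; n < count; n++)); do
        cur=$(sed -n 's/^Currently Active Slave: *//p' "$file")
        if [ "$cur" != "$prev" ]; then
            printf '%s active slave: %s\n' "$(date +%T)" "${cur:-none}"
            prev=$cur
        fi
        # Skip the sleep after the last poll so the function returns promptly.
        [ $((n + 1)) -lt "$count" ] && sleep "$interval"
    done
    return 0
}
```

Start `watch_active_slave` in one terminal, then disconnect eth0. The output should show a change from eth0 to eth1.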

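MII Polling Interval (ms) is the bonding driver's miimon setting. It specifies, in milliseconds, how often the link state of each slave is checked for failures. MII monitoring is what detects that a NIC has lost its link, so it matters when high availability is required. A value of 0 disables MII monitoring.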
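The sketch below shows one way to set the interval explicitly. It assumes NetworkManager's `bond.options` property, which takes comma-separated key=value pairs. `bond_options` is a hypothetical helper that builds and validates the option string. The value 100 simply matches the output above and is not a recommendation.

```shell
#!/usr/bin/env bash
# bond_options MODE MIIMON  (hypothetical helper)
# Build a bond.options string such as "mode=active-backup,miimon=100".
bond_options() {
    local mode=$1 miimon=$2
    case "$miimon" in
        ''|*[!0-9]*) echo "miimon must be a non-negative integer (ms)" >&2; return 1 ;;
    esac
    printf 'mode=%s,miimon=%s\n' "$mode" "$miimon"
}

# Applying it to the bond01 connection (requires root and NetworkManager):
#   nmcli con mod bond01 bond.options "$(bond_options active-backup 100)"
#   nmcli con up bond01
```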

Configuration files generated automatically by the nmcli commands

[root@CentOS7 ~]# cd /etc/sysconfig/network-scripts/
[root@CentOS7 network-scripts]# ls ifcfg-bond*
ifcfg-bond01  ifcfg-bond-slave-eth0  ifcfg-bond-slave-eth1
[root@CentOS7 network-scripts]# cat ifcfg-bond01
BONDING_OPTS=mode=active-backup
TYPE=Bond
BONDING_MASTER=yes
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=dhcp
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=bond01
UUID=e5369ad8-2b8b-4cc1-aca2-67562282a637
DEVICE=bond0
ONBOOT=yes
[root@CentOS7 network-scripts]# cat ifcfg-bond-slave-eth0
TYPE=Ethernet
NAME=bond01-slave0
UUID=f6ed385e-e1ae-487d-b36a-43b13ac3f84f
DEVICE=eth0
ONBOOT=yes
MASTER_UUID=e5369ad8-2b8b-4cc1-aca2-67562282a637
MASTER=bond0
SLAVE=yes

The configuration file of bond01-slave1 is essentially the same as this one.
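Because these ifcfg files consist of plain KEY=value lines, you can read individual settings back with standard tools. For example, you can confirm that `BONDING_OPTS` carries the expected mode. The sketch below prints a key's value and strips one pair of surrounding double quotes. `ifcfg_get` is a hypothetical helper and only handles simple values.

```shell
#!/usr/bin/env bash
# ifcfg_get FILE KEY  (hypothetical helper)
# Print the value of KEY from an ifcfg file, removing one pair of
# surrounding double quotes if present.
ifcfg_get() {
    local file=$1 key=$2
    sed -n "s/^${key}=//p" "$file" | sed 's/^"\(.*\)"$/\1/'
}

# Example on this host:
#   ifcfg_get /etc/sysconfig/network-scripts/ifcfg-bond01 BONDING_OPTS
```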

Network Teaming

Brief introduction

- Network teaming provides high availability for Linux servers.

- Link aggregation combines two or more network interfaces into one logical unit. Teaming is a newer alternative to the older bonding technology. Like bonding, it offers two main benefits:

Redundancy: improves network availability

Load balancing: improves network throughput

- Teaming is implemented by a kernel driver together with the teamd user-space daemon.

Teaming concepts and terminology

- teamd: the user-space daemon that uses the libteam library to implement the load-balancing and round-robin logic. It listens and communicates over Unix domain sockets.

- teamdctl: a utility that controls a running teamd instance over D-Bus. At run time it can read the configuration and the link-watch status, check and change port states, add and remove ports, and switch ports between active and backup state.

- runners: separate units of code that implement the various load-sharing and backup methods, such as round-robin. The user selects a runner in the JSON-format configuration, and the runner's code is compiled into the team instance when the instance is created.

Team runners (operation modes)

broadcast: data is transmitted over all ports

activebackup: one port or link is used while the others are kept as backups

roundrobin: data is transmitted over all ports in turn

loadbalance: active Tx load balancing with BPF-based Tx port selectors

lacp: implements the 802.3ad Link Aggregation Control Protocol
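The runner is selected through the JSON configuration passed to the team connection. The sketch below checks a runner name against the five runners listed above and prints the minimal JSON, in the same form as the `config` argument given to `nmcli con add type team`. `team_runner_config` is a hypothetical helper. The list of runners it accepts is limited to those named here.

```shell
#!/usr/bin/env bash
# team_runner_config RUNNER  (hypothetical helper)
# Print a minimal teamd JSON configuration that selects RUNNER.
team_runner_config() {
    case "$1" in
        broadcast|activebackup|roundrobin|loadbalance|lacp)
            printf '{"runner":{"name":"%s"}}\n' "$1" ;;
        *)
            echo "unsupported runner: $1" >&2
            return 1 ;;
    esac
}

# Example (requires NetworkManager):
#   nmcli con add type team ifname team0 con-name team0 config "$(team_runner_config activebackup)"
```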

Configuration

Using the nmcli command

1) Check the status of the newly added eth0 and eth1 NICs

[root@CentOS7 ~]# nmcli dev status
DEVICE  TYPE      STATE         CONNECTION
eth0    ethernet  disconnected  --
eth1    ethernet  disconnected  --

2) Add a team interface named team0 in active-backup mode

[root@CentOS7 ~]# nmcli con add type team ifname team0 con-name team0 config '{"runner":{"name":"activebackup"}}'

Connection 'team0' (28b4e208-339f-4eb2-ae0f-6b07621e7685) successfully added.

3) Add the slave (port) interfaces to team0

[root@CentOS7 ~]# nmcli con add type team-slave ifname eth0 con-name team0-slave0 master team0
Connection 'team0-slave0' (3c1b3008-ebeb-4e2d-9790-30111f1e1271) successfully added.
[root@CentOS7 ~]# nmcli con add type team-slave ifname eth1 con-name team0-slave1 master team0

4) Start the team and its ports

[root@CentOS7 ~]# nmcli con up team0
[root@CentOS7 ~]# nmcli con up team0-slave0
[root@CentOS7 ~]# nmcli con up team0-slave1
[root@CentOS7 ~]# nmcli dev status
DEVICE  TYPE      STATE      CONNECTION
team0   team      connected  team0
eth0    ethernet  connected  team0-slave0
eth1    ethernet  connected  team0-slave1
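Steps 2) to 4) can be combined in the same way as for the bond. The sketch below uses the commands shown above. It assumes the connection is named after the team interface and the ports are named `<team>-slave0`, `<team>-slave1`, and so on. `setup_team`, `run`, and `DRY_RUN` are hypothetical. With `DRY_RUN=1`, the script only prints the commands (without shell quoting).

```shell
#!/usr/bin/env bash
# run CMD...  (hypothetical helper)
# Print the command instead of executing it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# setup_team TEAM_IF RUNNER PORT_IF...  (hypothetical helper)
# Create the team with the given runner, add one team-slave connection
# per port, start the team, then start each port connection.
setup_team() {
    local team_if=$1 runner=$2
    shift 2
    local i port
    run nmcli con add type team ifname "$team_if" con-name "$team_if" \
        config "{\"runner\":{\"name\":\"$runner\"}}" || return 1
    i=0
    for port in "$@"; do
        run nmcli con add type team-slave ifname "$port" con-name "$team_if-slave$i" master "$team_if" || return 1
        i=$((i + 1))
    done
    run nmcli con up "$team_if" || return 1
    for ((i = 0; i < $#; i++)); do
        run nmcli con up "$team_if-slave$i" || return 1
    done
}
```

For example, `DRY_RUN=1 setup_team team0 activebackup eth0 eth1` prints the commands. Without `DRY_RUN`, run it as root to apply them.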

5) View the team status

[root@CentOS7 ~]# teamdctl team0 state

setup:

runner: activebackup

ports:

eth0

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

eth1

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

runner:

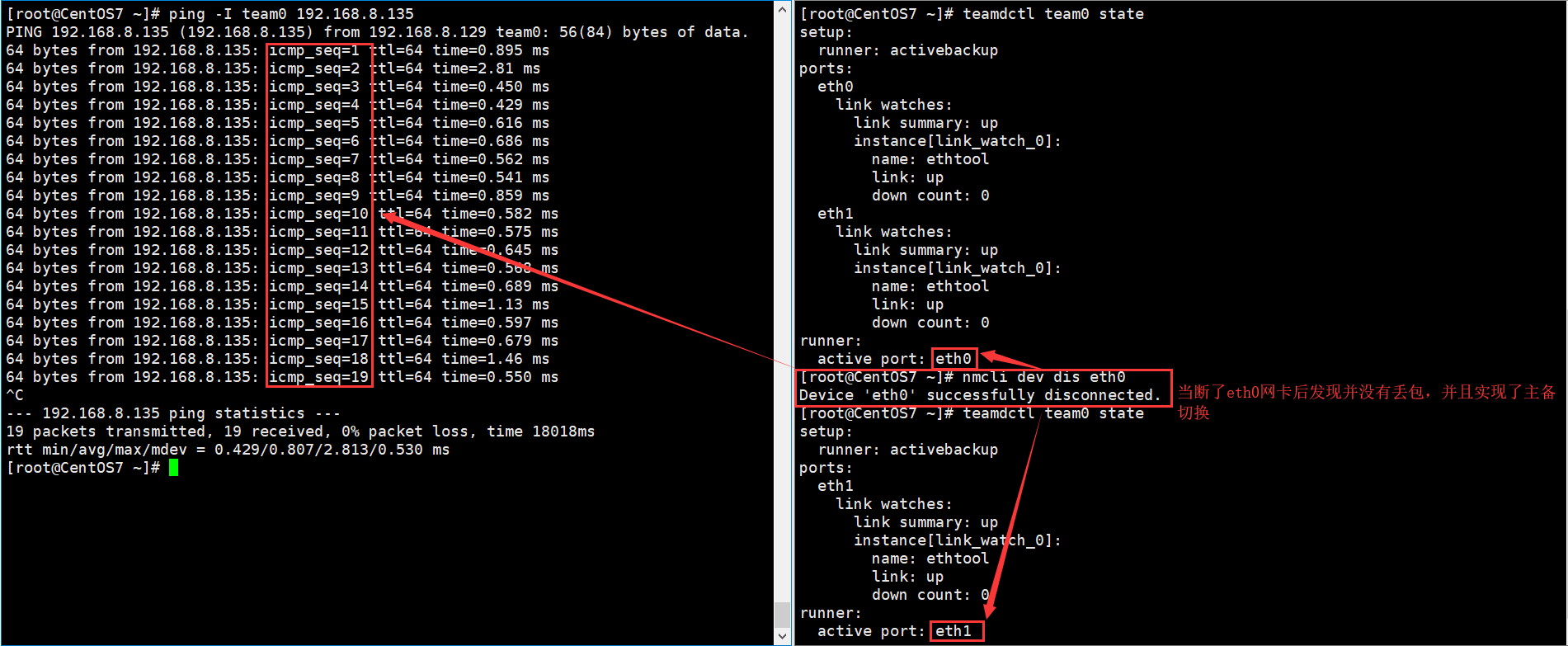

active port: eth06) test
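The failover test can presumably follow the bonding one: ping team0's address from another host, disconnect eth0, and compare the active port before and after. The sketch below prints the active port. It assumes the `teamdctl team0 state` output format shown above. `active_port` is a hypothetical helper that reads that output on stdin, so you can also try it on saved output.

```shell
#!/usr/bin/env bash
# active_port  (hypothetical helper)
# Read "teamdctl <team> state" output on stdin and print the value of
# the "active port:" line from the runner section.
active_port() {
    sed -n 's/^[[:space:]]*active port:[[:space:]]*//p'
}

# On the host, before and after disconnecting eth0:
#   teamdctl team0 state | active_port
```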

Configuration files generated automatically by the nmcli commands

[root@CentOS7 ~]# cd /etc/sysconfig/network-scripts/
[root@CentOS7 network-scripts]# ls ifcfg-team0*
[root@CentOS7 network-scripts]# grep -v "^IPV6" ifcfg-team0
TEAM_CONFIG="{\"runner\":{\"name\":\"activebackup\"}}"
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=dhcp
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
NAME=team0
UUID=28b4e208-339f-4eb2-ae0f-6b07621e7685
DEVICE=team0
ONBOOT=yes
DEVICETYPE=Team
[root@CentOS7 network-scripts]# cat ifcfg-team0-slave0
NAME=team0-slave0
UUID=3c1b3008-ebeb-4e2d-9790-30111f1e1271
DEVICE=eth0
ONBOOT=yes
TEAM_MASTER=team0
DEVICETYPE=TeamPort
[root@CentOS7 network-scripts]# cat ifcfg-team0-slave1
NAME=team0-slave1
DEVICE=eth1
ONBOOT=yes
TEAM_MASTER=team0
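In these files, the port files are linked to the team only through `TEAM_MASTER`. The sketch below is a quick consistency check of the generated files. It scans a directory of ifcfg files and prints the `DEVICE` of every file whose `TEAM_MASTER` matches the given team. `team_ports` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# team_ports TEAM [DIR]  (hypothetical helper)
# Print DEVICE= of every ifcfg-* file in DIR whose TEAM_MASTER is TEAM.
team_ports() {
    local team=$1 dir=${2:-/etc/sysconfig/network-scripts} f master dev
    for f in "$dir"/ifcfg-*; do
        [ -f "$f" ] || continue
        master=$(sed -n 's/^TEAM_MASTER=//p' "$f" | tr -d '"')
        if [ "$master" = "$team" ]; then
            dev=$(sed -n 's/^DEVICE=//p' "$f" | tr -d '"')
            echo "$dev"
        fi
    done
}

# Example on this host:
#   team_ports team0
```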