Why upload large files in slices?

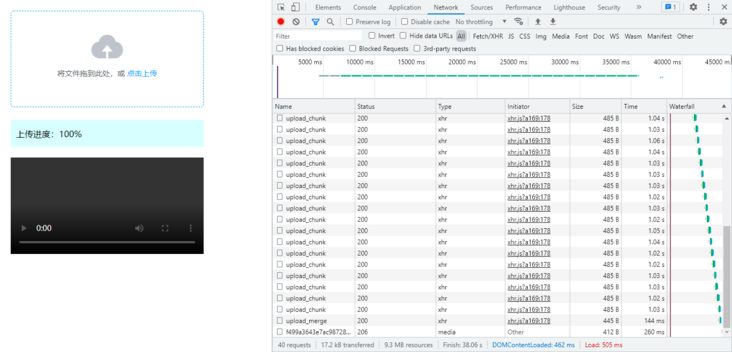

Our project needed to upload large files. Sending a whole large file in a single request takes a long time and can make the request time out. In addition, if a network error occurs during the upload, the next attempt has to start again from scratch. Uploading the file in slices solves both problems, and it also makes it possible to pause and resume an upload.

How do we upload large files in slices?

1. File slicing

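The selected file is read with a FileReader (as an ArrayBuffer or a data URL) and then cut into pieces with the file's `slice` method, which File inherits from Blob. In the code below, each slice is 100 KB. If that would produce more than 30 slices, the slice size is increased so that the file fits into exactly 30.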
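
The slicing rule (100 KB slices, at most 30 of them) can be sketched as a pure function that only computes byte ranges, which makes it easy to test outside the browser. `computeChunkRanges` is a hypothetical helper, not part of the article's code. It rounds the enlarged slice size up to whole bytes so that no trailing bytes are lost.

```javascript
// Compute [start, end) byte ranges for slicing a file of `fileSize` bytes:
// slices of `chunkSize` bytes, but never more than `maxCount` slices.
function computeChunkRanges(fileSize, chunkSize = 100 * 1024, maxCount = 30) {
  let count = Math.ceil(fileSize / chunkSize);
  if (count > maxCount) {
    // Enlarge the slice (rounded up to whole bytes) so the file fits in maxCount slices
    chunkSize = Math.ceil(fileSize / maxCount);
    count = Math.ceil(fileSize / chunkSize);
  }
  const ranges = [];
  for (let i = 0; i < count; i++) {
    const start = i * chunkSize;
    // The last slice may be shorter than chunkSize
    const end = Math.min(start + chunkSize, fileSize);
    ranges.push({ index: i, start, end });
  }
  return ranges;
}
```

In the browser, each range would then become a Blob with something like `computeChunkRanges(file.size).map((r) => file.slice(r.start, r.end))`.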

2. Generating the file name on the front end

The front end derives the file name from the file's content (an MD5 hash computed with SparkMD5) and sends it to the server. Because the name depends only on the content, the server can create one folder per file without inspecting the data, and the same file always maps to the same folder. Each slice is named `<HASH>_<n>.<suffix>`. Whenever a slice arrives, the server extracts the hash from the slice name, finds (or creates) the matching folder, and saves the slice there.
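
The naming scheme can be captured in three small helpers. This is a minimal sketch. It assumes slice names of the form `${HASH}_${index + 1}.${suffix}`, which the front end builds and the server parses with `/^([^_]+)_(\d+)/`. The names `getSuffix`, `chunkName` and `parseChunkName` are hypothetical.

```javascript
// Extract the file extension with the same pattern the front end uses;
// returns "" instead of throwing when the name has no extension.
function getSuffix(fileName) {
  const match = /\.([0-9a-zA-Z]+)$/i.exec(fileName);
  return match ? match[1] : "";
}

// Build a slice name; `index` is zero-based, slice numbers start at 1.
function chunkName(hash, index, suffix) {
  return `${hash}_${index + 1}.${suffix}`;
}

// Recover the hash and slice number with the server's regex.
// Returns null for names that do not follow the scheme.
function parseChunkName(name) {
  const match = /^([^_]+)_(\d+)/.exec(name);
  if (!match) return null;
  return { hash: match[1], number: Number(match[2]) };
}
```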

3. Browser concurrency limits

Splitting the file into N slices means sending N requests. If they are all fired at once, Chrome runs at most six requests to the same host at a time (over HTTP/1.1) and queues the rest. For a very large file, this flood of pending requests can make the page lag. We therefore use a publish-subscribe approach. Each slice upload is wrapped in a function and stored in an event pool, and the pool decides when each request is sent.
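
The article's code sends the slices one at a time. If you want a fixed number of parallel uploads instead, a small promise pool is a common alternative. `runWithLimit` is a hypothetical helper. It assumes each task is a function returning a Promise (like the functions stored in `requestList`) and keeps at most `limit` of them running at once.

```javascript
// Run Promise-returning task functions with at most `limit` running at once.
// Results are returned in the same order as `tasks`.
async function runWithLimit(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0; // index of the next task to start

  // Each worker keeps pulling the next unstarted task until none are left.
  // JavaScript is single-threaded, so `next++` cannot race between workers.
  async function worker() {
    while (next < tasks.length) {
      const i = next++;
      results[i] = await tasks[i]();
    }
  }

  const workers = [];
  for (let w = 0; w < Math.min(limit, tasks.length); w++) {
    workers.push(worker());
  }
  // If a task throws, this rejects; the other workers are not cancelled.
  await Promise.all(workers);
  return results;
}
```

A limit of 3 to 6 stays within Chrome's per-host connection limit. Pausing would still need a flag checked inside `worker`, as the article's `abort` flag does.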

4. Resumable upload

If a network problem interrupts the upload, the next attempt needs to know how far the previous one got, so that it can continue from there. The front end could record the uploaded slices in localStorage, but that record would be lost if the user switched to another browser. We therefore keep this logic on the server. Before uploading, the front end asks the server which slices it already has (`/upload_already`). It then skips every slice whose name appears in that list and uploads only the rest, which is what makes resuming possible.
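
The skip check can be written as a small function. `missingChunkIndexes` is hypothetical. It assumes `alreadyUploaded` is the `fileList` array returned by `/upload_already` and uses a `Set`, so each lookup takes constant time.

```javascript
// Return the zero-based indexes of slices the server does not have yet.
// `alreadyUploaded` is the list of slice names reported by the server.
function missingChunkIndexes(hash, suffix, count, alreadyUploaded) {
  const done = new Set(alreadyUploaded);
  const missing = [];
  for (let i = 0; i < count; i++) {
    // Slice names on the server are numbered from 1
    if (!done.has(`${hash}_${i + 1}.${suffix}`)) missing.push(i);
  }
  return missing;
}
```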

5. Upload progress and pause

The front end has a pause button. Because of the publish-subscribe approach described above, each slice upload is wrapped in a function, and all of these functions are put into a queue (the event pool). A variable records how many of them have been sent. When the user clicks pause, a flag stops the queue before the next slice is sent. When the user clicks resume, that variable tells us where we stopped in the event pool, and the remaining functions are called one after another. Upload progress is the number of slices on the server divided by the total number of slices.
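
The pause and resume logic can be pulled out into a standalone class. This is a minimal sketch that assumes the tasks are Promise-returning functions like those in `requestList`. `PausableQueue` is hypothetical. Pausing lets the in-flight slice finish and stops before the next one. The `running` flag goes beyond the article's code: it prevents a second upload loop from starting if the user clicks resume before the current slice has finished.

```javascript
class PausableQueue {
  constructor(tasks, onDone) {
    this.tasks = tasks;   // functions that each return a Promise
    this.onDone = onDone; // called once, after the last task finishes
    this.index = 0;       // number of tasks that have completed
    this.paused = false;
    this.running = false; // true while a run() loop is active
    this.done = false;
  }

  async run() {
    // A loop is already active (e.g. resume clicked mid-request)
    if (this.running) return;
    this.running = true;
    try {
      while (!this.paused && this.index < this.tasks.length) {
        await this.tasks[this.index]();
        this.index++; // only advance after the slice succeeded
      }
      if (this.index >= this.tasks.length && !this.done) {
        this.done = true;
        this.onDone();
      }
    } finally {
      this.running = false;
    }
  }

  pause() {
    // The task in flight still completes; the next one is not started
    this.paused = true;
  }

  resume() {
    this.paused = false;
    return this.run();
  }

  // Percentage of tasks completed
  get progress() {
    if (this.tasks.length === 0) return 100;
    return Math.round((this.index / this.tasks.length) * 100);
  }
}
```

If a task rejects, `run()` rejects without advancing `index`, so the failed slice is retried on the next resume.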

6. Merging the slices

Once every slice has been uploaded, the front end calls the server's merge endpoint (`/upload_merge`) and sends the file hash and the number of slices. The server concatenates the slices in order and returns the URL of the merged file, which the front end then plays as a video.
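
A server-side merge can also be written with the asynchronous `fs.promises` API, so that merging does not block other requests the way `readFileSync`/`appendFileSync` do. This is a sketch that assumes slice files named `<hash>_<n>.<ext>` in one directory. `mergeChunks` and `sortChunkNames` are hypothetical names, not part of the article's server.

```javascript
const fs = require("fs");
const path = require("path");

// Sort slice names such as "<hash>_<n>.<ext>" by their numeric part,
// so that "_10" comes after "_9" rather than after "_1".
function sortChunkNames(names) {
  const num = (name) => Number(/_(\d+)/.exec(name)[1]);
  return [...names].sort((a, b) => num(a) - num(b));
}

// Concatenate every slice in chunkDir, in order, into targetFile,
// then delete the slice directory.
async function mergeChunks(chunkDir, targetFile, expectedCount) {
  const names = sortChunkNames(await fs.promises.readdir(chunkDir));
  if (names.length < expectedCount) {
    throw new Error(`only ${names.length} of ${expectedCount} slices uploaded`);
  }
  // Start from an empty file so a retried merge does not append twice
  await fs.promises.writeFile(targetFile, "");
  for (const name of names) {
    const data = await fs.promises.readFile(path.join(chunkDir, name));
    await fs.promises.appendFile(targetFile, data);
  }
  // Remove the slices only after the merged file is complete
  await fs.promises.rm(chunkDir, { recursive: true, force: true });
  return targetFile;
}
```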

Front-end source code

```html
<template>
  <div id="app">
    <el-upload
      drag
      action
      :auto-upload="false"
      :show-file-list="false"
      :on-change="changeFile"
    >
      <i class="el-icon-upload"></i>
      <div class="el-upload__text">
        Drag the file here, or
        <em>click to upload</em>
      </div>
    </el-upload>

    <!-- PROGRESS -->
    <div class="progress">
      <span>Upload progress: {{ totalNum }}%</span>
      <el-link v-if="total > 0 && total < count" type="primary" @click="handleBtn">{{ btn | btnText }}</el-link>
    </div>

    <!-- VIDEO -->
    <div class="uploadImg" v-if="video">
      <video :src="video" controls />
    </div>
  </div>
</template>

<script>
import SparkMD5 from "spark-md5";

export default {
  name: "App",
  data() {
    return {
      total: 0, // number of slices already on the server
      video: null,
      btn: false, // true while paused
      abort: false, // stops the upload loop when true
      HASH: "",
      chunks: [],
      already: [],
      requestList: [],
      count: 0, // total number of slices
      alreadyUploadIndex: 0,
    };
  },
  filters: {
    btnText(btn) {
      return btn ? "Resume" : "Pause";
    },
  },
  computed: {
    totalNum() {
      if (!this.count) return 0;
      return ((this.total / this.count) * 100).toFixed(0);
    },
  },
  methods: {
    async changeFile(file) {
      if (!file) return;
      file = file.raw;
      // Reset state left over from a previously selected file
      this.chunks = [];
      this.requestList = [];
      this.alreadyUploadIndex = 0;
      this.abort = false;
      this.btn = false;
      this.video = null;
      // Read the file into an ArrayBuffer so it can be hashed
      let buffer = await this.fileParse(file);
      let suffix = this.createName(buffer, file);
      // Ask the server which slices it already has
      // (assumes an axios interceptor that returns response.data)
      let data = await this.axios.get("/upload_already", {
        params: { HASH: this.HASH },
      });
      this.already = data.fileList;
      this.total = data.fileList.length;
      // Split the file into slices
      this.splitBuffer(file, suffix);
      // Store one upload function per missing slice in the event pool
      this.saveEventPool();
      // Send the slices; once all are on the server, call the merge endpoint
      this.sendRequest();
    },
    // Read the file as an ArrayBuffer
    fileParse(file) {
      return new Promise((resolve, reject) => {
        let fileRead = new FileReader();
        fileRead.onload = (ev) => resolve(ev.target.result);
        fileRead.onerror = () => reject(fileRead.error);
        fileRead.readAsArrayBuffer(file);
      });
    },
    // Use the MD5 of the content as the file name; return the extension
    createName(buffer, file) {
      let spark = new SparkMD5.ArrayBuffer();
      spark.append(buffer);
      this.HASH = spark.end();
      let match = /\.([0-9a-zA-Z]+)$/i.exec(file.name);
      return match ? match[1] : "";
    },
    // 100 KB slices, but never more than 30 of them
    splitBuffer(file, suffix) {
      let max = 1024 * 100,
        index = 0;
      this.count = Math.ceil(file.size / max);
      if (this.count > 30) {
        max = Math.ceil(file.size / 30);
        this.count = 30;
      }
      while (index < this.count) {
        this.chunks.push({
          file: file.slice(index * max, (index + 1) * max),
          filename: `${this.HASH}_${index + 1}.${suffix}`,
        });
        index++;
      }
    },
    // Publish-subscribe: store one upload function per slice in the event pool
    saveEventPool() {
      this.chunks.forEach((chunk) => {
        // Skip slices the server already has
        if (this.already.includes(chunk.filename)) return;
        let fn = () => {
          let fm = new FormData();
          fm.append("file", chunk.file);
          fm.append("filename", chunk.filename);
          return this.axios.post("/upload_chunk", fm).then(() => {
            this.total++;
          });
        };
        this.requestList.push(fn);
      });
    },
    async complete() {
      // Merge only once every slice is on the server
      if (this.total < this.count) return;
      // URLSearchParams is sent as application/x-www-form-urlencoded,
      // which is what bodyParser.urlencoded on the server parses
      let res = await this.axios.post(
        "/upload_merge",
        new URLSearchParams({ HASH: this.HASH, count: this.count })
      );
      this.video = res.servicePath;
      alert("success");
    },
    sendRequest() {
      let send = async () => {
        // Stop if the upload has been paused
        if (this.abort) return;
        if (this.alreadyUploadIndex >= this.requestList.length) {
          // All slices have been sent
          this.complete();
          return;
        }
        await this.requestList[this.alreadyUploadIndex]();
        this.alreadyUploadIndex++;
        send();
      };
      send();
    },
    // Pause / resume
    handleBtn() {
      if (this.btn) {
        // Resume from the first slice that has not been sent yet
        this.abort = false;
        this.btn = false;
        this.sendRequest();
        return;
      }
      // Pause the upload
      this.btn = true;
      this.abort = true;
    },
  },
};
</script>
```

Server

```javascript
const express = require('express'),
  fs = require('fs'),
  bodyParser = require('body-parser'),
  multiparty = require('multiparty');

/*-CREATE SERVER-*/
const app = express(),
  PORT = 8888,
  HOST = 'http://127.0.0.1',
  HOSTNAME = `${HOST}:${PORT}`;

app.listen(PORT, () => {
  console.log(`THE WEB SERVICE IS CREATED SUCCESSFULLY AND IS LISTENING TO THE PORT: ${PORT}, YOU CAN VISIT: ${HOSTNAME}`);
});

// Folder for slices and merged files. Not defined in the original listing;
// assumed here to be ./upload next to this script.
const uploadDir = `${__dirname}/upload`;
if (!fs.existsSync(uploadDir)) fs.mkdirSync(uploadDir);

/*-Middleware-*/
app.use((req, res, next) => {
  res.header("Access-Control-Allow-Origin", "*");
  req.method === 'OPTIONS' ? res.send('CURRENT SERVICES SUPPORT CROSS DOMAIN REQUESTS!') : next();
});

// Parses the urlencoded body sent to /upload_merge
app.use(bodyParser.urlencoded({
  extended: false,
  limit: '1024mb'
}));

/*-API-*/
// Parse a multipart/form-data request with multiparty. Also missing from
// the original listing; this is a minimal reconstruction (assumption).
const multiparty_upload = function multiparty_upload(req) {
  return new Promise((resolve, reject) => {
    new multiparty.Form().parse(req, (err, fields, files) => {
      if (err) {
        reject(err);
        return;
      }
      resolve({ fields, files });
    });
  });
};

// Detect whether a file or directory exists
const exists = function exists(path) {
  return new Promise(resolve => {
    fs.access(path, fs.constants.F_OK, err => resolve(!err));
  });
};

// Write an uploaded file to `path`. With stream = true, `file` is a multiparty
// file object: its temporary file is copied to `path` and then deleted.
const writeFile = function writeFile(path, file, stream) {
  return new Promise((resolve, reject) => {
    if (stream) {
      const readStream = fs.createReadStream(file.path),
        writeStream = fs.createWriteStream(path);
      readStream.on('error', reject);
      writeStream.on('error', reject);
      // 'finish' fires once all data has been flushed to `path`
      writeStream.on('finish', () => {
        fs.unlink(file.path, () => resolve());
      });
      readStream.pipe(writeStream);
      return;
    }
    fs.writeFile(path, file, err => (err ? reject(err) : resolve()));
  });
};

// Merge all slices of HASH, in slice-number order, into uploadDir/HASH.suffix
const merge = async function merge(HASH, count) {
  const path = `${uploadDir}/${HASH}`;
  let suffix;
  if (!(await exists(path))) {
    throw new Error('HASH path is not found!');
  }
  const fileList = fs.readdirSync(path);
  if (fileList.length < Number(count)) {
    throw new Error('the slice has not been uploaded!');
  }
  fileList.sort((a, b) => {
    const reg = /_(\d+)/;
    return reg.exec(a)[1] - reg.exec(b)[1];
  }).forEach(item => {
    if (!suffix) suffix = /\.([0-9a-zA-Z]+)$/.exec(item)[1];
    fs.appendFileSync(`${uploadDir}/${HASH}.${suffix}`, fs.readFileSync(`${path}/${item}`));
    fs.unlinkSync(`${path}/${item}`);
  });
  fs.rmdirSync(path);
  return {
    path: `${uploadDir}/${HASH}.${suffix}`,
    filename: `${HASH}.${suffix}`
  };
};

// Receive one slice and store it in uploadDir/<HASH>/
app.post('/upload_chunk', async (req, res) => {
  try {
    const { fields, files } = await multiparty_upload(req);
    const file = (files.file && files.file[0]) || {},
      filename = (fields.filename && fields.filename[0]) || "";
    // Slice names look like <HASH>_<n>.<suffix>
    const match = /^([^_]+)_(\d+)/.exec(filename);
    if (!match) throw new Error('invalid slice filename');
    // Create a temporary directory for this file's slices
    const dir = `${uploadDir}/${match[1]}`;
    if (!fs.existsSync(dir)) fs.mkdirSync(dir);
    // Store the slice in the temporary directory
    const path = `${dir}/${filename}`;
    const result = {
      code: 0,
      codeText: 'upload success',
      originalFilename: filename,
      servicePath: path.replace(__dirname, HOSTNAME)
    };
    if (await exists(path)) {
      // Uploaded earlier: discard the new temporary copy
      if (file.path) fs.unlink(file.path, () => {});
      res.send({ ...result, codeText: 'file already exists' });
      return;
    }
    await writeFile(path, file, true);
    res.send(result);
  } catch (err) {
    res.send({
      code: 1,
      codeText: String(err)
    });
  }
});

// Merge the slices once the front end reports that all have been sent
app.post('/upload_merge', async (req, res) => {
  const { HASH, count } = req.body;
  try {
    const { filename, path } = await merge(HASH, count);
    res.send({
      code: 0,
      codeText: 'merge success',
      originalFilename: filename,
      servicePath: path.replace(__dirname, HOSTNAME)
    });
  } catch (err) {
    res.send({
      code: 1,
      codeText: String(err)
    });
  }
});

// List the slices already stored for HASH (empty if none yet)
app.get('/upload_already', async (req, res) => {
  const { HASH } = req.query;
  const path = `${uploadDir}/${HASH}`;
  let fileList = [];
  try {
    fileList = fs.readdirSync(path).sort((a, b) => {
      const reg = /_(\d+)/;
      return reg.exec(a)[1] - reg.exec(b)[1];
    });
  } catch (err) {
    // The directory does not exist yet: nothing has been uploaded
  }
  res.send({
    code: 0,
    codeText: '',
    fileList
  });
});

// Serve uploaded files under /upload so the servicePath URLs resolve
app.use('/upload', express.static(uploadDir));

app.use((req, res) => {
  res.status(404);
  res.send('NOT FOUND!');
});
```

Full version

The complete Node and front-end code is on GitHub: https://github.com/mengyuhang...