Access to Services in k8s usually relies on kube-proxy for load balancing. Today we explore the core design of kube-proxy's production userspace TCP proxy, including the implementation of its random port allocator, TCP stream copying, and related techniques.

1. Foundation

Today we focus on userspace forwarding; kernel-based forwarding is left for another time.

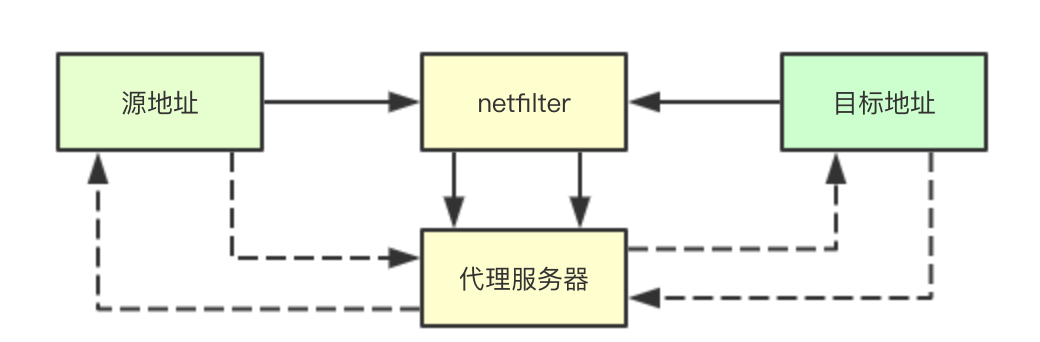

1.1 Traffic Redirection

Traffic redirection usually means intercepting packets with the kernel's netfilter and steering them to a port we specify. This hijacks the traffic so that extra processing can be applied to some of its packets, all completely transparently to the application.

1.1.1 Destination Address Redirection

Destination address redirection means redirecting outgoing traffic to a different IP or port for processing. In kube-proxy it is mainly achieved with the REDIRECT target.

1.1.2 Target Address Translation

Target address translation mainly concerns the return traffic of connections that were handled by REDIRECT. That traffic also needs to be redirected: it comes back to the local proxy service, which then forwards it to the real application.

1.2 TCP Proxy Implementation

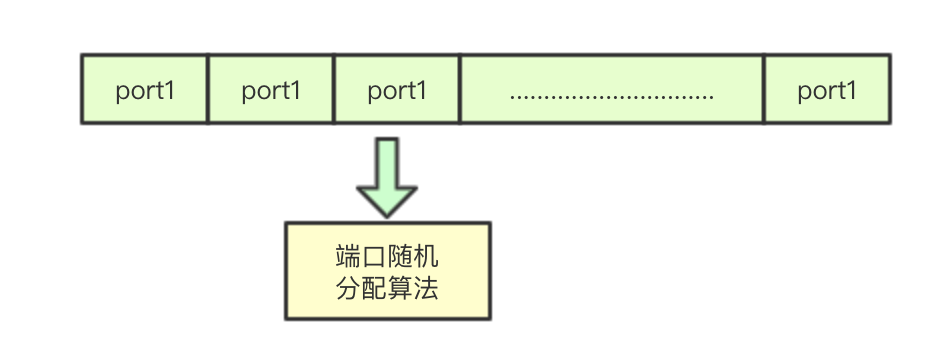

1.2.1 Random Port

A random port is needed when we set up a temporary proxy server for a Service: the proxy randomly selects a local port to listen on.

1.2.2 Stream Replication

The proxy server needs to copy the data sent by the local service to the target service, receive the data returned by the target service, and copy it back to the local service.

2. Core Design Implementation

2.1 Random Port Allocator

2.1.1 Core Data Structure

The allocator's core data structure uses rand, a random number generator, to pick ports at random, and records port usage in a bitmap (used). Ports that have been picked are placed in the ports channel, from which callers take them.

    type rangeAllocator struct {
        net.PortRange
        ports chan int   // Buffer of currently available random ports
        used  big.Int    // Bitmap recording which ports are in use
        lock  sync.Mutex // Held while reading or updating used and rand
        rand  *rand.Rand // Random number generator used to pick ports
    }
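To make the bitmap idea concrete, here is a minimal sketch of how a `big.Int` can track port usage, assuming bit `i` stands for port `base+i`. The type `portBitmap`, its methods `markUsed` and `release`, and the base port 30000 are hypothetical names chosen for illustration; they are not part of kube-proxy.

```go
package main

import (
	"fmt"
	"math/big"
)

// portBitmap is a hypothetical helper: bit i set means port base+i is in use.
type portBitmap struct {
	base int
	used big.Int
}

// markUsed sets the bit for port and reports whether the port was free before.
func (b *portBitmap) markUsed(port int) bool {
	i := port - b.base
	if b.used.Bit(i) == 1 {
		return false
	}
	b.used.SetBit(&b.used, i, 1)
	return true
}

// release clears the bit for port so that it can be handed out again.
func (b *portBitmap) release(port int) {
	b.used.SetBit(&b.used, port-b.base, 0)
}

func main() {
	b := &portBitmap{base: 30000}
	fmt.Println(b.markUsed(30005)) // first claim of the port
	fmt.Println(b.markUsed(30005)) // second claim of the same port
	b.release(30005)
	fmt.Println(b.markUsed(30005)) // claim after release
}
```

A bitmap keeps the bookkeeping to one bit per port, so even a range of tens of thousands of ports costs only a few kilobytes.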

2.1.2 Building a Random Allocator

Building the allocator mainly means creating the rand random number generator, seeded with the current time, and creating the ports buffer, whose size (portsBufSize) is currently 16.

    ra := &rangeAllocator{
        PortRange: r,
        ports:     make(chan int, portsBufSize),
        rand:      rand.New(rand.NewSource(time.Now().UnixNano())),
    }

2.1.3 Random Number Generator

The filler loop generates random ports by calling nextFreePort and puts them into the ports channel. It stops once nextFreePort reports that no free port is left.

    func (r *rangeAllocator) fillPorts() {
        for {
            // Keep fetching random ports until none are left
            if !r.fillPortsOnce() {
                return
            }
        }
    }

    func (r *rangeAllocator) fillPortsOnce() bool {
        port := r.nextFreePort() // Get the next free random port
        if port == -1 {
            return false
        }
        r.ports <- port // Put the acquired port into the buffer
        return true
    }
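The producer side of this pattern can be sketched in isolation. The example below is only an illustration under assumptions: `fill` and `sequence` are hypothetical names, the generator hands out sequential rather than random ports so the result is predictable, and the channel is closed on exhaustion so the consumer loop can end (the code above simply returns).

```go
package main

import "fmt"

// fill pushes values from next into ch until next returns -1, then closes ch.
// Closing is an assumption of this sketch so that range loops terminate.
func fill(ch chan<- int, next func() int) {
	for {
		p := next()
		if p == -1 {
			close(ch)
			return
		}
		ch <- p // blocks while the buffer is full
	}
}

// sequence returns a hypothetical generator that yields base, base+1, ...
// for n values and -1 afterwards.
func sequence(base, n int) func() int {
	i := 0
	return func() int {
		if i >= n {
			return -1
		}
		i++
		return base + i - 1
	}
}

func main() {
	ch := make(chan int, 2) // small buffer: the producer runs at most 2 ahead
	go fill(ch, sequence(50000, 5))
	for p := range ch {
		fmt.Println(p)
	}
}
```

Because the channel is buffered, the producer only works ahead by the buffer size; it blocks on the send until a consumer takes a port.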

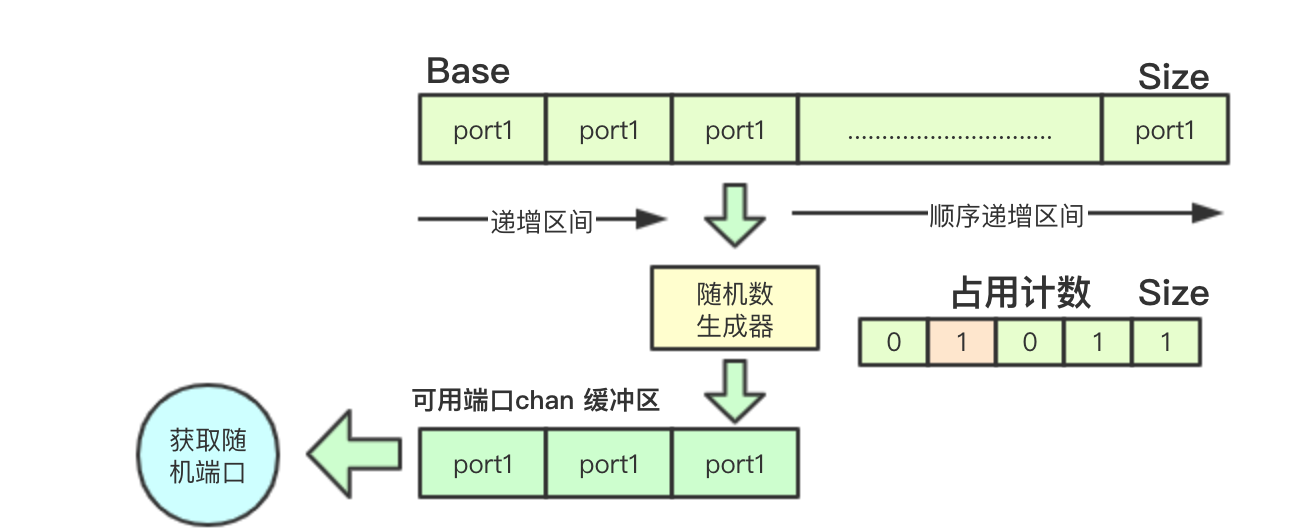

2.1.4 PortRange

PortRange is the range from which random ports are drawn. It supports three notations: a single port, a min-max interval, and a min+offset interval. Parsing produces the Base and Size parameters used by the allocation algorithm.

    switch notation {
    case SinglePortNotation:
        var port int
        port, err = strconv.Atoi(value)
        if err != nil {
            return err
        }
        low = port
        high = port
    case HyphenNotation:
        low, err = strconv.Atoi(value[:hyphenIndex])
        if err != nil {
            return err
        }
        high, err = strconv.Atoi(value[hyphenIndex+1:])
        if err != nil {
            return err
        }
    case PlusNotation:
        var offset int
        low, err = strconv.Atoi(value[:plusIndex])
        if err != nil {
            return err
        }
        offset, err = strconv.Atoi(value[plusIndex+1:])
        if err != nil {
            return err
        }
        high = low + offset
    default:
        return fmt.Errorf("unable to parse port range: %s", value)
    }
    pr.Base = low
    pr.Size = 1 + high - low
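As a worked example of the three notations, the following stand-alone sketch parses a range string into a base and a size. The function `parseRange`, the way it detects the notation (looking for `-` or `+`), and the bounds check are assumptions made for illustration; the excerpt above does not show how the real code chooses the notation or validates the result.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseRange is a hypothetical stand-alone parser returning (base, size).
func parseRange(value string) (base, size int, err error) {
	var low, high int
	if i := strings.Index(value, "-"); i >= 0 {
		// min-max notation, e.g. "100-200"
		low, err = strconv.Atoi(value[:i])
		if err == nil {
			high, err = strconv.Atoi(value[i+1:])
		}
	} else if j := strings.Index(value, "+"); j >= 0 {
		// min+offset notation, e.g. "100+50"
		var offset int
		low, err = strconv.Atoi(value[:j])
		if err == nil {
			offset, err = strconv.Atoi(value[j+1:])
		}
		high = low + offset
	} else {
		// single port notation, e.g. "8080"
		low, err = strconv.Atoi(value)
		high = low
	}
	if err != nil {
		return 0, 0, err
	}
	// Assumed sanity check, not taken from the excerpt above.
	if low < 0 || high > 65535 || high < low {
		return 0, 0, fmt.Errorf("invalid port range %q", value)
	}
	return low, 1 + high - low, nil
}

func main() {
	for _, v := range []string{"8080", "100-200", "100+50"} {
		base, size, err := parseRange(v)
		fmt.Println(v, base, size, err)
	}
}
```

Both interval forms include their endpoints, so "100-200" yields a size of 101 and "100+50" a size of 51.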

2.1.5 Random Port Generation Algorithm

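A random port is generated by first drawing a random number within the range. If that port is already in use, the allocator searches sequentially, first upward from the random offset to the top of the range and then from the bottom of the range up to the offset, until it finds an unoccupied port. If every port is taken, it returns -1.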

    func (r *rangeAllocator) nextFreePort() int {
        r.lock.Lock()
        defer r.lock.Unlock()

        // Randomly select a port
        j := r.rand.Intn(r.Size)
        if b := r.used.Bit(j); b == 0 {
            r.used.SetBit(&r.used, j, 1)
            return j + r.Base
        }

        // Search sequentially:
        // if the random port is occupied, scan upward from the random offset,
        // and set the corresponding bit to 1 when a free port is found
        for i := j + 1; i < r.Size; i++ {
            if b := r.used.Bit(i); b == 0 {
                r.used.SetBit(&r.used, i, 1)
                return i + r.Base
            }
        }

        // If all higher ports are occupied, scan from the bottom of the range
        for i := 0; i < j; i++ {
            if b := r.used.Bit(i); b == 0 {
                r.used.SetBit(&r.used, i, 1)
                return i + r.Base
            }
        }
        return -1
    }
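The same search order can be expressed as a single wrap-around loop. The sketch below is a simplified illustration, not the kube-proxy code: `miniAllocator`, `newMiniAllocator`, and `next` are hypothetical names, the seed is fixed instead of time-based, and locking is left out because the example runs in one goroutine.

```go
package main

import (
	"fmt"
	"math/big"
	"math/rand"
)

// miniAllocator is a hypothetical, single-goroutine version of the allocator.
type miniAllocator struct {
	base, size int
	used       big.Int
	rnd        *rand.Rand
}

func newMiniAllocator(base, size int, seed int64) *miniAllocator {
	return &miniAllocator{base: base, size: size, rnd: rand.New(rand.NewSource(seed))}
}

// next starts at a random offset j and probes j, j+1, ..., size-1, 0, ..., j-1.
// It returns -1 once every port in the range is taken.
func (a *miniAllocator) next() int {
	j := a.rnd.Intn(a.size)
	for k := 0; k < a.size; k++ {
		i := (j + k) % a.size // wrap around to the bottom of the range
		if a.used.Bit(i) == 0 {
			a.used.SetBit(&a.used, i, 1)
			return a.base + i
		}
	}
	return -1
}

func main() {
	a := newMiniAllocator(40000, 3, 1)
	for n := 0; n < 4; n++ {
		// The first three calls return distinct ports; the fourth returns -1.
		fmt.Println(a.next())
	}
}
```

Starting from a random offset spreads allocations over the range, while the sequential fallback guarantees that a free port is found whenever one exists.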

2.2 TCP Proxy Implementation

2.2.1 Core Data Structure

The TCP proxy embeds a net.Listener as its TCP listener; port is the local port it listens on.

    type tcpProxySocket struct {
        net.Listener
        port int
    }

2.2.2 Receive Local Redirected Traffic to Build Forwarders

When the TCP proxy receives a connection request, it accepts the connection, selects a backend pod according to the Service and the load-balancing algorithm, connects to it, and then starts asynchronous traffic copying so that data flows in both directions.

    func (tcp *tcpProxySocket) ProxyLoop(service proxy.ServicePortName, myInfo *ServiceInfo, loadBalancer LoadBalancer) {
        for {
            if !myInfo.IsAlive() {
                // The service port was closed or replaced.
                return
            }
            // Wait for an incoming connection
            inConn, err := tcp.Accept()
            if err != nil {
                if isTooManyFDsError(err) {
                    panic("Accept failed: " + err.Error())
                }

                if isClosedError(err) {
                    return
                }
                if !myInfo.IsAlive() {
                    // Then the service port was just closed so the accept failure is to be expected.
                    return
                }
                klog.Errorf("Accept failed: %v", err)
                continue
            }
            klog.V(3).Infof("Accepted TCP connection from %v to %v", inConn.RemoteAddr(), inConn.LocalAddr())
            // Pick a backend via the load balancer (keyed by the client's source address) and connect to it
            outConn, err := TryConnectEndpoints(service, inConn.(*net.TCPConn).RemoteAddr(), "tcp", loadBalancer)
            if err != nil {
                klog.Errorf("Failed to connect to balancer: %v", err)
                inConn.Close()
                continue
            }
            // Start asynchronous traffic copying
            go ProxyTCP(inConn.(*net.TCPConn), outConn.(*net.TCPConn))
        }
    }

2.2.3 Two-Way TCP Traffic Replication

Two-way replication copies traffic in both directions, from the input stream to the output stream and back, using io.Copy underneath.

    func ProxyTCP(in, out *net.TCPConn) {
        var wg sync.WaitGroup
        wg.Add(2)
        klog.V(4).Infof("Creating proxy between %v <-> %v <-> %v <-> %v",
            in.RemoteAddr(), in.LocalAddr(), out.LocalAddr(), out.RemoteAddr())
        // Copy traffic in both directions
        go copyBytes("from backend", in, out, &wg)
        go copyBytes("to backend", out, in, &wg)
        wg.Wait()
    }

    func copyBytes(direction string, dest, src *net.TCPConn, wg *sync.WaitGroup) {
        defer wg.Done()
        klog.V(4).Infof("Copying %s: %s -> %s", direction, src.RemoteAddr(), dest.RemoteAddr())
        // Copy the underlying byte stream from src to dest
        n, err := io.Copy(dest, src)
        if err != nil {
            if !isClosedError(err) {
                klog.Errorf("I/O error: %v", err)
            }
        }
        klog.V(4).Infof("Copied %d bytes %s: %s -> %s", n, direction, src.RemoteAddr(), dest.RemoteAddr())
        // Closing both ends also unblocks the copy running in the other direction
        dest.Close()
        src.Close()
    }
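The two-goroutine copy pattern can be tried without a cluster. The sketch below is an illustration under assumptions: it uses in-memory `net.Pipe` connections in place of real `*net.TCPConn` values, and `relay`, `roundTrip`, and the upper-casing backend are hypothetical names invented for the demo.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"strings"
	"sync"
)

// relay copies in both directions between a and b and returns when both
// copies have finished. Closing both ends after one direction ends
// unblocks the copy running in the other direction.
func relay(a, b net.Conn) {
	var wg sync.WaitGroup
	wg.Add(2)
	copyOneWay := func(dst, src net.Conn) {
		defer wg.Done()
		io.Copy(dst, src)
		dst.Close()
		src.Close()
	}
	go copyOneWay(a, b)
	go copyOneWay(b, a)
	wg.Wait()
}

// roundTrip sends msg through a relay to a hypothetical backend that
// upper-cases one message and then closes its connection.
func roundTrip(msg string) (string, error) {
	client, proxyIn := net.Pipe()   // stands in for the client <-> proxy connection
	proxyOut, backend := net.Pipe() // stands in for the proxy <-> backend connection

	go func() {
		defer backend.Close()
		buf := make([]byte, 1024)
		n, err := backend.Read(buf)
		if err != nil {
			return
		}
		backend.Write([]byte(strings.ToUpper(string(buf[:n]))))
	}()
	go relay(proxyIn, proxyOut)

	defer client.Close()
	if _, err := client.Write([]byte(msg)); err != nil {
		return "", err
	}
	reply, err := io.ReadAll(client) // returns once the relay closes its side
	return string(reply), err
}

func main() {
	fmt.Println(roundTrip("ping"))
}
```

Each direction needs its own goroutine because `io.Copy` blocks until its source reaches EOF or the connection is closed.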

2.2.4 Connection Establishment Through Load Balancing and Retry Mechanisms

There are two core designs for establishing connections: the sessionAffinity mechanism and the timeout retry mechanism.

Affinity mechanism: if the previously established affinity is still valid, it is reused; if a connection attempt fails, the affinity must be reset and an endpoint selected again.

Timeout retry mechanism: each attempt uses the next, longer dial timeout from EndpointDialTimeouts. A backoff mechanism could also be used here to implement the timeout retry.

    func TryConnectEndpoints(service proxy.ServicePortName, srcAddr net.Addr, protocol string, loadBalancer LoadBalancer) (out net.Conn, err error) {
        // By default affinity is not reset, i.e. a previously established affinity is still considered valid
        sessionAffinityReset := false
        // EndpointDialTimeouts = []time.Duration{250 * time.Millisecond, 500 * time.Millisecond, 1 * time.Second, 2 * time.Second}
        // Each retry waits longer than the last, which reduces transient failures caused by network instability
        for _, dialTimeout := range EndpointDialTimeouts {
            // Select an endpoint using the load-balancing algorithm and session affinity
            endpoint, err := loadBalancer.NextEndpoint(service, srcAddr, sessionAffinityReset)
            if err != nil {
                klog.Errorf("Couldn't find an endpoint for %s: %v", service, err)
                return nil, err
            }
            klog.V(3).Infof("Mapped service %q to endpoint %s", service, endpoint)
            // Establish the underlying connection
            outConn, err := net.DialTimeout(protocol, endpoint, dialTimeout)
            if err != nil {
                if isTooManyFDsError(err) {
                    panic("Dial failed: " + err.Error())
                }
                klog.Errorf("Dial failed: %v", err)
                // If the dial fails, the previous affinity may be stale, so an endpoint must be re-selected
                sessionAffinityReset = true
                continue
            }
            return outConn, nil
        }
        return nil, fmt.Errorf("failed to connect to an endpoint.")
    }
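To see how the affinity reset and the escalating timeouts interact, the control flow can be isolated from the network. In the sketch below, `connect`, `demo`, and the injected `pick` and `dial` functions are hypothetical stand-ins for the load balancer and `net.DialTimeout`, and the endpoint addresses are made up; only the timeout values come from the comment in the code above.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// dialTimeouts mirrors the escalating values quoted in the source comment.
var dialTimeouts = []time.Duration{
	250 * time.Millisecond, 500 * time.Millisecond, 1 * time.Second, 2 * time.Second,
}

// connect makes one attempt per timeout step. It returns the chosen endpoint
// and the number of attempts made.
func connect(
	pick func(resetAffinity bool) (string, error),
	dial func(endpoint string, timeout time.Duration) error,
) (string, int, error) {
	resetAffinity := false
	for attempt, timeout := range dialTimeouts {
		endpoint, err := pick(resetAffinity)
		if err != nil {
			return "", attempt, err // no endpoint available: give up immediately
		}
		if err := dial(endpoint, timeout); err != nil {
			resetAffinity = true // the sticky endpoint may be dead; pick afresh
			continue
		}
		return endpoint, attempt + 1, nil
	}
	return "", len(dialTimeouts), errors.New("failed to connect to an endpoint")
}

// demo wires connect to a fake balancer whose sticky endpoint is down.
func demo() (string, int, error) {
	endpoints := []string{"10.0.0.1:80", "10.0.0.2:80"} // made-up addresses
	current := 0
	pick := func(reset bool) (string, error) {
		if reset {
			current = (current + 1) % len(endpoints) // abandon the sticky endpoint
		}
		return endpoints[current], nil
	}
	dial := func(endpoint string, _ time.Duration) error {
		if endpoint == "10.0.0.1:80" {
			return errors.New("connection refused")
		}
		return nil
	}
	return connect(pick, dial)
}

func main() {
	fmt.Println(demo())
}
```

In this run the first attempt fails against the sticky endpoint, the reset flag makes the fake balancer move on, and the second attempt succeeds.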

That's all for today. This article introduced an implementation of a random port selection algorithm and then analyzed the underlying mechanism of the TCP proxy, including session affinity when establishing connections, timeout retry, and TCP traffic copying. If you found it useful, please help share it; that is what keeps the author writing. Thank you, everybody.

>WeChat: baxiaoshi2020
>Follow the official account to read more source analysis articles
>More articles: www.sreguide.com
>This article was published via OpenWrite, a multi-post blog platform
