This chapter covers rolling updates and rollbacks: how to switch a Deployment to an arbitrary version and how to roll it back to a previous version. The next chapter covers Pod scaling, including automatically scaling applications up and down according to machine resources.

This article is part of the author's Kubernetes e-book series. The e-book is open source; feel free to follow it. It can be read at:

https://k8s.whuanle.cn (recommended for readers in mainland China)

https://ek8s.whuanle.cn (GitBook)

Rolling update and rollback

Deploy application

First, let's deploy nginx, using the nginx image for this exercise.

Open https://hub.docker.com/_/nginx to look up the available nginx image versions. The author picked three: 1.19.0, 1.20.0 and latest. We will switch between these versions when updating and rolling back later.

Note:

Pick three distinct nginx versions before continuing. The update and rollback exercises below switch back and forth between them:

1.19.0 -> 1.20.0 -> latest

First, we create an nginx Deployment with 3 replicas. The first deployment works the same way as in earlier chapters and needs no special commands. We start with the oldest of the three versions; the author uses 1.19.0.

kubectl create deployment nginx --image=nginx:1.19.0 --replicas=3
# or
# kubectl create deployment nginx --image=nginx:1.19.0 --replicas=3 --record

Note: we can also add the --record flag, which writes the executed command into the resource annotation kubernetes.io/change-cause. This is useful for later inspection, for example to see which command produced each Deployment revision. We will explain this flag in more detail later. (Newer kubectl releases mark --record as deprecated.)

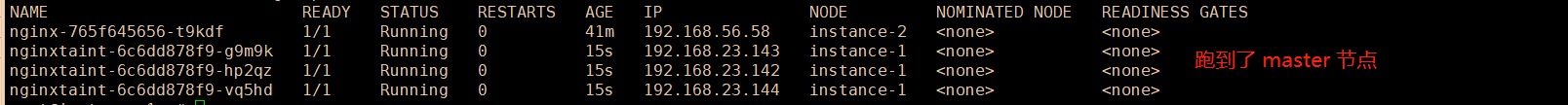

Run kubectl get pods and kubectl describe pods. You can see that there are three Pods and that each Pod uses nginx image version 1.19.0.

NAME                     READY   STATUS    RESTARTS   AGE
nginx-85b45874d9-7jlrv   1/1     Running   0          5s
nginx-85b45874d9-h22xv   1/1     Running   0          5s
nginx-85b45874d9-vthfb   1/1     Running   0          5s

Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  119s  default-scheduler  Successfully assigned default/nginx-85b45874d9-vthfb to instance-2
  Normal  Pulled     117s  kubelet            Container image "nginx:1.19.0" already present on machine
  Normal  Created    117s  kubelet            Created container nginx
  Normal  Started    117s  kubelet            Started container nginx
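To check the image of every Pod without reading the full kubectl describe output, a small helper along these lines may be convenient. It is only a sketch: the function name pod_images is made up for illustration, and it assumes the Pods carry the label app=nginx, which kubectl create deployment nginx sets by default.

```shell
# Print "<pod name><TAB><image of the first container>" for each matching Pod.
# Assumption: the Pods are labelled app=nginx (the default label added by
# `kubectl create deployment nginx`); pass another selector as $1 if needed.
pod_images() {
  selector="${1:-app=nginx}"
  kubectl get pods -l "$selector" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[0].image}{"\n"}{end}'
}
```

Calling pod_images after the Deployment is created should list three Pods, each with nginx:1.19.0.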

Update the version

Updating Pods is actually very simple. We don't need to control the update of each Pod or worry about disrupting the business; Kubernetes handles this automatically. We only need to trigger an image version update, and Kubernetes updates all the Pods for us.

The kubectl set image command updates the container image of existing resource objects, including Pod, Deployment, DaemonSet, Job and ReplicaSet. When updating versions, a single-container Pod behaves much like a multi-container Pod, so we can practice with single-container Pods.

Update the image version in the Deployment to trigger the Pod update:

kubectl set image deployment nginx nginx=nginx:1.20.0

The format is:

kubectl set image deployment {deployment name} {container name}={image name}:{version}

This command can change the image version of any container in the Pod. Whenever the image version of any container changes, the whole Pod is redeployed. If the image version does not change, running kubectl set image has no effect.
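The format can be wrapped in a small function so that the container name and the image are not mixed up. This is an illustrative sketch; set_deployment_image is a hypothetical helper name, not part of kubectl.

```shell
# Run "kubectl set image" from its four parts.
# Arguments: <deployment> <container> <image> <tag>
set_deployment_image() {
  if [ "$#" -ne 4 ]; then
    echo "usage: set_deployment_image <deployment> <container> <image> <tag>" >&2
    return 1
  fi
  # The left side of "=" is the container name, the right side is image:tag.
  kubectl set image deployment "$1" "$2=$3:$4"
}
```

For the Deployment in this chapter, set_deployment_image nginx nginx nginx 1.20.0 runs the same command as shown above.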

We can view the details of Pod:

kubectl describe pods

In the Events section you will find:

... ...
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  66s   default-scheduler  Successfully assigned default/nginx-7b87485749-rlmcx to instance-2
  Normal  Pulled     66s   kubelet            Container image "nginx:1.20.0" already present on machine
  Normal  Created    66s   kubelet            Created container nginx
  Normal  Started    65s   kubelet            Started container nginx

You can see that the created Pod instance is version 1.20.0.

During the update, Pods of the new version are created and the old Pods are gradually removed.

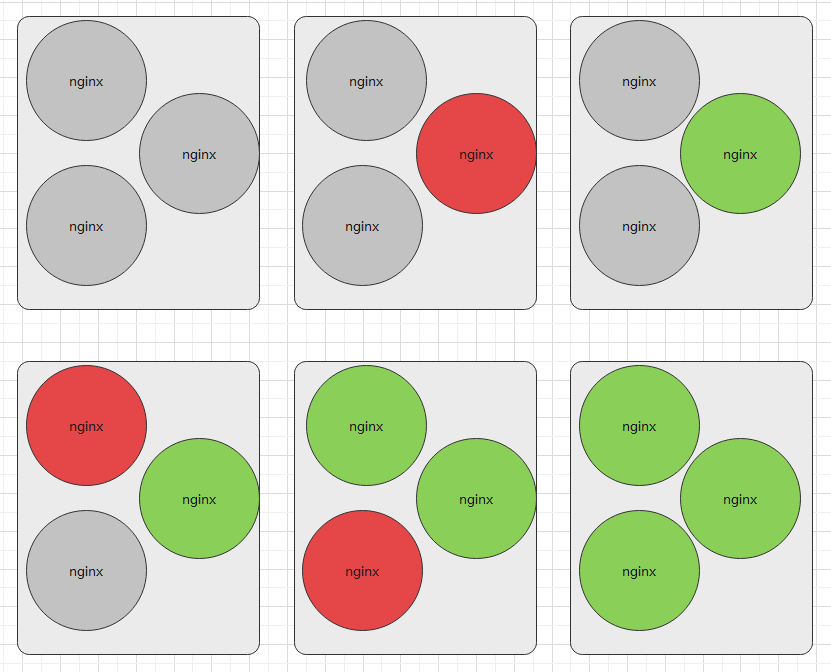

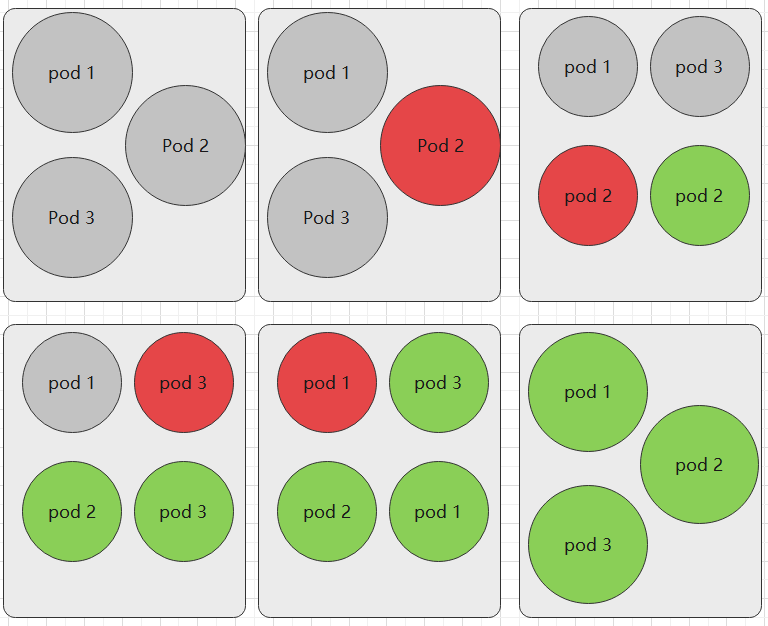

When we created the Deployment, three Pods were generated. When we trigger an image version update, the Pods are not all updated at once; instead, only some Pods are redeployed at a time according to certain rules. The Pod replacement process is similar to the following figure (in practice, the number of Pods may temporarily be greater than 3):

In addition, we can also update the Pods by editing the YAML with kubectl edit.

Run:

kubectl edit deployment nginx

An editor opens with the Deployment's YAML. Change .spec.template.spec.containers[0].image from nginx:1.19.0 to nginx:1.20.0, then save.

To record version update information, append --record to the kubectl create deployment and kubectl set image commands.

Don't forget that the kubectl scale command can also change the number of replicas.

Rollout

A Deployment rollout is triggered only when the Deployment's Pod template (i.e. .spec.template) changes, for example when the template's labels or container image are updated.

Other updates, such as scaling the Deployment up or down, do not trigger a rollout. A rollout is what updates the version of the Pods (the container version in the Pods) for us.

"Rollout" here refers to a change to the Pod template, typically the container version, whereas "update" in general refers to any modification of the YAML, which does not necessarily affect the containers.

When we update the Pod version, Kubernetes does not delete all the Pods and then recreate them with the new version. It replaces the Pod replicas gradually and keeps the service load balanced throughout, which is why this is called a rolling update.

We can use the kubectl rollout status command to view the rollout status of the Pods, that is, the replacement status of the Pod replica set:

kubectl rollout status deployment nginx

There are generally two possible outputs:

# When completed:
deployment "nginx" successfully rolled out
# While still updating:
Waiting for rollout to finish: 2 out of 3 new replicas have been updated...
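In a script it is often more practical to wait for the rollout with an upper time limit than to poll by hand. The sketch below relies on the --timeout flag of kubectl rollout status; the function name wait_for_rollout and the default of 120s are arbitrary choices for illustration.

```shell
# Wait until the rollout of a Deployment finishes or the timeout expires.
# Arguments: <deployment> [timeout, default 120s]
# Returns 0 when the rollout completed, 1 otherwise.
wait_for_rollout() {
  name="$1"
  timeout="${2:-120s}"
  if kubectl rollout status deployment "$name" --timeout="$timeout"; then
    echo "rollout of $name finished"
  else
    echo "rollout of $name did not finish within $timeout" >&2
    return 1
  fi
}
```

For example, wait_for_rollout nginx 60s blocks until all replicas are updated or one minute has passed, so a calling script can react to the return code.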

We can also see the number of updated Pods when getting the Deployment information:

kubectl get deployment

NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   3/3     3            3           18m

The UP-TO-DATE field shows the number of Pods that have been updated successfully.

You can also view the ReplicaSet and Pod:

kubectl get replicaset
kubectl get pods

Output:

NAME               DESIRED   CURRENT   READY   AGE
nginx-7b87485749   0         0         0       20m
nginx-85b45874d9   3         3         3       21m

NAME                     READY   STATUS    RESTARTS   AGE
nginx-85b45874d9-nrbg8   1/1     Running   0          12m
nginx-85b45874d9-qc7f2   1/1     Running   0          12m
nginx-85b45874d9-t48vw   1/1     Running   0          12m

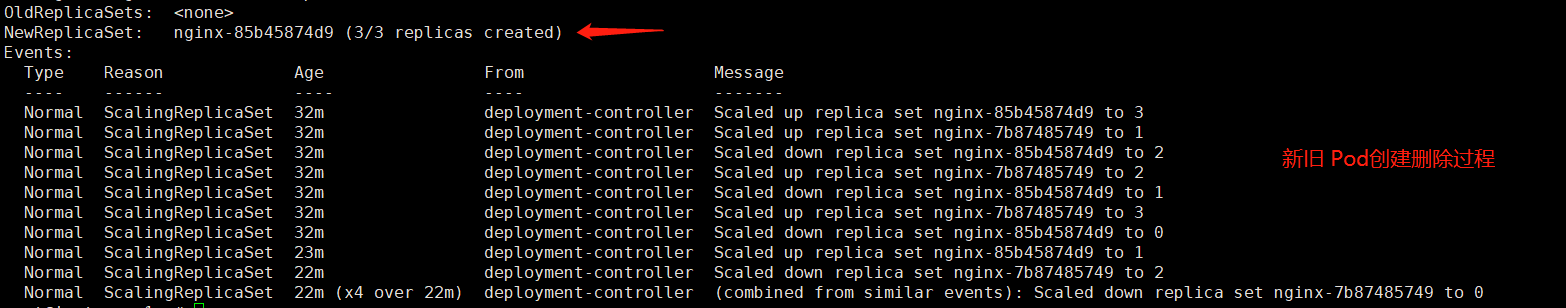

You can see that there are two ReplicaSets, one per version of the Pod template. The ReplicaSet of the version that is no longer in use has been scaled down to 0 (nginx-7b87485749 in this output), while the ReplicaSet of the version currently in use has 3 replicas. We can also see that every Pod name starts with the name of the active ReplicaSet, nginx-85b45874d9. Save a screenshot of this key information so that you can compare it again later.

How rolling updates work

When we update the image version, the Pods of the old version are replaced, but the ReplicaSet of the old version is not deleted. A rolling update really just adjusts replica counts: the ReplicaSet for 1.19.0 originally had 3 replicas and now has 0, while the ReplicaSet for 1.20.0 now has 3.

Suppose our project is live and we update the software version. If all containers or Pods were updated at once, the software would be unavailable until every Pod had been updated.

A Deployment ensures that only a limited number of Pods are down during an update. By default, it keeps at least 75% of the desired Pods running, that is, no more than 25% of the Pods are unavailable at a time. For a Deployment with only two or three Pods the percentage does not map onto whole Pods, so it is rounded: the unavailable limit is rounded down and the surge limit is rounded up. In other words, while a Deployment is in a rolling update it always keeps Pods available to serve requests.

If the number of Pods is large enough, or if we print the rollout status quickly while the Deployment is updating, we can see that the total number of old and new Pods is not necessarily 3. The Deployment does not kill old Pods until enough new Pods have come up, and does not create new Pods until enough old Pods have been killed. With 3 replicas and the default settings, it ensures that at least 3 Pods are available and that at most 4 Pods exist in total (across versions).

The rolling update process is shown in the following figure:

A Deployment also ensures that the number of Pods created is only slightly higher than the desired number. By default, at most 25% more Pods than desired can be started (a maximum surge of 25%), so during an automatic update the observed number of Pods may be 4. This limit comes from the Deployment's rolling update configuration (explained in the next chapter). In addition, when updating a Deployment you can change not only the image version but also the number of replicas.
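The limits described above can be worked out with a little arithmetic. The following sketch assumes the rounding rules from the Kubernetes documentation (a percentage is rounded up for maxSurge and rounded down for maxUnavailable); the function names resolve and rolling_bounds are made up for this example, and no cluster is needed to run it.

```shell
# Turn a maxSurge / maxUnavailable value into a whole number of Pods.
# "25%" is a percentage of the replica count; a plain number is used as is.
# usage: resolve <value> <replicas> <up|down>
resolve() {
  value="$1"; replicas="$2"; mode="$3"
  case "$value" in
    *%)
      pct="${value%\%}"
      if [ "$mode" = "up" ]; then
        echo $(( ($replicas * $pct + 99) / 100 ))   # round up (maxSurge)
      else
        echo $(( $replicas * $pct / 100 ))          # round down (maxUnavailable)
      fi
      ;;
    *) echo "$value" ;;
  esac
}

# Print the most Pods that may exist and the fewest that stay available.
# usage: rolling_bounds <replicas> [maxSurge] [maxUnavailable]  (defaults: 25% each)
rolling_bounds() {
  replicas="$1"
  surge="$(resolve "${2:-25%}" "$replicas" up)"
  unavailable="$(resolve "${3:-25%}" "$replicas" down)"
  echo "replicas=$replicas max_total=$(( $replicas + $surge )) min_available=$(( $replicas - $unavailable ))"
}

rolling_bounds 4    # prints: replicas=4 max_total=5 min_available=3
rolling_bounds 10   # prints: replicas=10 max_total=13 min_available=8
```

Explicit values can be passed as well, for example rolling_bounds 10 3 2 for maxSurge: 3 and maxUnavailable: 2.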

Run kubectl describe deployment nginx to view the Deployment details. The Events field also shows how old Pods are replaced by new ones.

However, we don't need to memorize these principles or dig deeper for now. A general understanding is enough, and we can consult the documentation when needed. The rules of ReplicaSet are covered in detail in later chapters.

View rollout history

By default, a Deployment's rollout history is kept in the system so that we can roll back at any time. These are the ReplicaSet records we saw earlier in the output of kubectl get replicasets.

Let's view the rollout history of the Deployment:

kubectl rollout history deployment nginx

REVISION  CHANGE-CAUSE
1         <none>
2         <none>

# With --record, the output is
REVISION  CHANGE-CAUSE
2         kubectl set image deployment nginx nginx=nginx:1.20.0 --record=true

You can see that there are two revisions, but CHANGE-CAUSE is <none>. Why? Because the author did not use the --record flag to record the command. Without --record, this history alone does not tell us which version each revision corresponds to.
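Since --record simply fills in the kubernetes.io/change-cause annotation, the same annotation can also be written by hand with kubectl annotate, which allows a free-form message. A minimal sketch, with record_change_cause as a hypothetical helper name:

```shell
# Set the CHANGE-CAUSE text of the Deployment's current revision by writing
# the kubernetes.io/change-cause annotation directly.
# Arguments: <deployment> <message>
record_change_cause() {
  kubectl annotate deployment "$1" "kubernetes.io/change-cause=$2" --overwrite
}
```

For example, record_change_cause nginx "update image to nginx:1.20.0" run right after kubectl set image should make that text appear in the CHANGE-CAUSE column of kubectl rollout history.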

Now let's look at the details of revision 2:

kubectl rollout history deployment nginx --revision=2

deployment.apps/nginx with revision #2
Pod Template:
  Labels:       app=nginx
                pod-template-hash=85b45874d9
  Containers:
   nginx:
    Image:        nginx:1.20.0
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>

Rollback

When a newly deployed version turns out to have serious bugs that affect the stability of the platform, you may need to switch the project back to the previous version. So far we have introduced several commands for viewing a Deployment's rollout history. Next, we look at how to return the Pods to an old version.

Command to roll back to the previous version:

root@master:~# kubectl rollout undo deployment nginx
deployment.apps/nginx rolled back

For example, if the current revision is 2, the Deployment is rolled back to revision 1.

Run kubectl rollout history deployment nginx again and you will find that the revision has become 3.

root@master:~# kubectl rollout history deployment nginx
deployment.apps/nginx
REVISION  CHANGE-CAUSE
2         <none>
3         <none>

The revision number identifies a rollout record and is independent of the Pod's image version. Each time the version is updated or rolled back, the revision increments by 1. This is also why revision 1 no longer appears above: the rollback re-recorded it as revision 3.

If there are many revisions, we can also specify which revision to roll back to.

kubectl rollout undo deployment nginx --to-revision=2
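Before rolling back to a specific revision it can help to print what that revision contains, so that a mistyped number is caught early. The sketch below combines the two commands from this chapter; rollback_to is a hypothetical helper name.

```shell
# Show the Pod template of a revision, then roll back to it.
# Arguments: <deployment> <revision number>
rollback_to() {
  name="$1"; revision="$2"
  case "$revision" in
    ''|*[!0-9]*) echo "revision must be a number" >&2; return 1 ;;
  esac
  # Fails (and stops here) if the revision does not exist in the history.
  kubectl rollout history deployment "$name" --revision="$revision" || return 1
  kubectl rollout undo deployment "$name" --to-revision="$revision"
}
```

rollback_to nginx 2 prints the details of revision 2 and then performs the same rollback as the command above.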

A word about --record here. We did not use this flag when creating and updating the Deployment earlier, but it is very useful. From now on, we will add it every time we perform a rolling update.

Update the image to a specified version:

kubectl set image deployment nginx nginx=nginx:1.19.0 --record

kubectl rollout history deployment nginx

Output:

REVISION  CHANGE-CAUSE
5         <none>
6         kubectl set image deployment nginx nginx=nginx:1.19.0 --record=true

Note that only two records are listed. We have triggered many rollouts and the revision number keeps increasing, yet the history shows just two entries. That is because we have only used versions 1.19.0 and 1.20.0 so far, so there are rollout records for only these two versions. With more versions, the output looks like this:

REVISION  CHANGE-CAUSE
7         kubectl set image deployment nginx nginx=nginx:1.19.0 --record=true
8         kubectl set image deployment nginx nginx=nginx:1.20.0 --record=true
9         kubectl set image deployment nginx nginx=nginx:latest --record=true

The REVISION number keeps increasing. Whenever we trigger a rollout (by changing the Pod template's labels, the container version and so on), a new rollout record is generated.

Pause a rollout

This section requires knowledge of horizontal scaling and proportional scaling. Please read chapter 3.6 about scaling first.

If you find during a rollout that there are not enough machines, or that some configuration needs adjusting, you can pause the rollout.

The kubectl rollout pause command lets us pause a rolling update while the Deployment's Pods are being replaced.

Command:

kubectl rollout pause deployment nginx

During a rolling update, a few behaviors deserve our attention.

First, create a Deployment with 10 replicas, or scale the existing Deployment to 10 replicas.

kubectl create deployment nginx --image=nginx:1.19.0 --replicas=10

Then run kubectl edit deployment nginx and modify the rolling update strategy:

strategy:
  rollingUpdate:
    maxSurge: 3
    maxUnavailable: 2
  type: RollingUpdate

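With maxSurge and maxUnavailable set, the Deployment replaces Pods in bounded batches, at most 3 Pods above the desired count and at most 2 unavailable Pods at a time, which keeps the replacement gradual.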
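The same strategy can also be applied without opening an editor by using kubectl patch with a merge patch. This is a sketch under the assumption that both limits are given as whole numbers (a percentage such as 25% would have to be quoted as a JSON string); set_rolling_strategy is a hypothetical helper name.

```shell
# Set the rolling update limits of a Deployment with a JSON merge patch.
# Arguments: <deployment> <maxSurge> <maxUnavailable>  (whole numbers only)
set_rolling_strategy() {
  for n in "$2" "$3"; do
    case "$n" in
      ''|*[!0-9]*) echo "maxSurge and maxUnavailable must be whole numbers" >&2; return 1 ;;
    esac
  done
  kubectl patch deployment "$1" --type=merge -p \
    "{\"spec\":{\"strategy\":{\"type\":\"RollingUpdate\",\"rollingUpdate\":{\"maxSurge\":$2,\"maxUnavailable\":$3}}}}"
}
```

set_rolling_strategy nginx 3 2 should result in the same strategy section as the YAML shown above. Changing the strategy does not touch .spec.template, so it does not start a rollout by itself.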

So far we have used versions 1.19.0 and 1.20.0 for demonstration; here we use latest for practice.

Copy the following two commands and run them in quick succession so that the rollout is paused right after it starts. Once the rollout is paused, we can check some status information.

kubectl set image deployment nginx nginx=nginx:latest
kubectl rollout pause deployment nginx

Run kubectl get replicaset to view the number of Pods for each version.

NAME               DESIRED   CURRENT   READY   AGE
nginx-7b87485749   8         8         8       109m
nginx-85b45874d9   0         0         0       109m
nginx-bb957bbb5    5         5         5       52m

We can see that the total number of Pods is greater than 10: old Pods are removed 2 at a time, and new Pods are created 3 at a time. After pausing the rollout, run kubectl get replicaset several times and you will find that the replica counts do not change.
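The total can be checked by summing the CURRENT column of the ReplicaSet table. The sketch below feeds the sample output from above to a small awk filter; total_current is a name made up for this example, and in a live cluster the output of kubectl get replicaset would be piped into it instead.

```shell
# Sum the CURRENT column (third field) of `kubectl get replicaset` output
# read from stdin, skipping the header line.
total_current() {
  awk 'NR > 1 { sum += $3 } END { print sum + 0 }'
}

# Sample copied from the paused rollout shown in this section.
total_current <<'EOF'
NAME               DESIRED   CURRENT   READY   AGE
nginx-7b87485749   8         8         8       109m
nginx-85b45874d9   0         0         0       109m
nginx-bb957bbb5    5         5         5       52m
EOF
```

This prints 13, which is the 10 desired replicas plus the maxSurge of 3, while the 8 Pods left in the old ReplicaSet correspond to 10 minus the maxUnavailable of 2.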

The rollout is now paused. Suppose we run another update command and switch to a different version:

kubectl set image deployment nginx nginx=nginx:1.19.0

Although the command reports that the image was updated, nothing actually changes. Run kubectl rollout history deployment nginx and you will not find the 1.19.0 change we just submitted. This is because changes made to a controller object whose rollout is paused do not start a new rollout while it remains paused.

While paused, we can update some configuration, for example limiting the CPU and memory used by the nginx container in the Pod:

kubectl set resources deployment nginx -c=nginx --limits=cpu=200m,memory=512Mi

Resume the Deployment rollout:

kubectl rollout resume deployment nginx
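Pause and resume are typically used as a pair around several changes, so that the changes made while paused are rolled out together once the Deployment is resumed (this is the behavior described in the Kubernetes documentation). A rough sketch using only the commands from this section; paused_update is a hypothetical helper name.

```shell
# Apply an image change and resource limits between pause and resume.
# Arguments: <deployment> <container> <image:tag> <cpu limit> <memory limit>
paused_update() {
  kubectl rollout pause deployment "$1" || return 1
  status=0
  kubectl set image deployment "$1" "$2=$3" || status=1
  kubectl set resources deployment "$1" -c="$2" --limits="cpu=$4,memory=$5" || status=1
  # Always resume, even if a change failed, so the Deployment is not left paused.
  kubectl rollout resume deployment "$1" || status=1
  return "$status"
}
```

For example, paused_update nginx nginx nginx:latest 200m 512Mi runs the pause, both changes and the resume in order.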