Key points:

1. Experimental environment

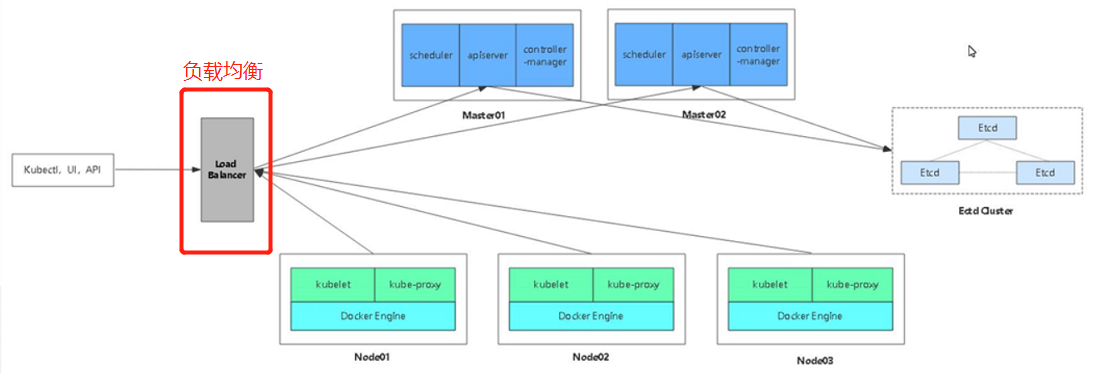

2. Load balancing scheduler deployment

1, Experimental environment:

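Building on the multi-master cluster deployed earlier, we add two scheduler (load balancer) servers running nginx. keepalived gives them a shared virtual IP, so the pair load-balances traffic to the apiservers and stays available if one scheduler fails: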

Kubernetes binary cluster deployment 1: deploying the etcd storage component and the flannel network component:

https://blog.51cto.com/14475876/2470049

Kubernetes binary cluster deployment I: single-master cluster deployment + multi-master cluster deployment:

https://blog.51cto.com/14475876/2470063

Server information:

| Role | IP address |
| --- | --- |
| master01 | 192.168.109.138 |
| master02 | 192.168.109.230 |
| Scheduler 1 (nginx01) | 192.168.109.131 |
| Scheduler 2 (nginx02) | 192.168.109.132 |
| node01 | 192.168.109.133 |
| node02 | 192.168.109.137 |
| Virtual IP (VIP) | 192.168.109.100 |

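Two files are required. They are shown here as generic templates with placeholder addresses and interface names, and are adapted during the deployment steps: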

First: keepalived.conf

! Configuration File for keepalived

global_defs {

#Notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

#Sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/usr/local/nginx/sbin/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51 #VRRP virtual router ID; unique per instance, identical on MASTER and BACKUP

priority 100 #Priority; set 90 on the backup server

advert_int 1 #VRRP advertisement (heartbeat) interval in seconds, default 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.188/24

}

track_script {

check_nginx

}

}

mkdir /usr/local/nginx/sbin/ -p

vim /usr/local/nginx/sbin/check_nginx.sh

#!/bin/bash

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

/etc/init.d/keepalived stop

fi

chmod +x /usr/local/nginx/sbin/check_nginx.sh
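The `ps -ef | grep nginx | egrep -cv "grep|$$"` count has to filter out the grep processes and the check script itself, because the script's path contains "nginx". The `$$` pattern also drops any unrelated line that happens to contain those digits. The following is a stricter sketch. It assumes the nginx processes are named exactly `nginx` and compares each process's command name in /proc, which is the same test `pgrep -x nginx` performs. The function names are hypothetical and are not part of keepalived or nginx:

```shell
#!/bin/bash
# Hypothetical stricter health check for check_nginx.sh (a sketch).
# It compares /proc/<pid>/comm with an exact name, as `pgrep -x` does, so
# neither grep nor this script (whose path contains "nginx") is ever counted.

# proc_running NAME: succeed if any running process is named exactly NAME.
proc_running() {
    local name=$1 comm_file comm
    for comm_file in /proc/[0-9]*/comm; do
        # A process may exit between the glob and the read; just skip it.
        { read -r comm < "$comm_file"; } 2>/dev/null || continue
        if [ "$comm" = "$name" ]; then
            return 0
        fi
    done
    return 1
}

# check_and_stop NAME CMD...: run CMD (e.g. systemctl stop keepalived)
# only when no process named NAME exists, so the VIP moves to the backup.
check_and_stop() {
    local name=$1
    shift
    if ! proc_running "$name"; then
        "$@"
    fi
}
```

On the schedulers, the script could then end with `check_and_stop nginx systemctl stop keepalived`. On CentOS 7, `pgrep -x nginx >/dev/null || systemctl stop keepalived` is the equivalent one-liner.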

//Second: nginx.sh (the nginx yum repo definition and the stream configuration)

cat > /etc/yum.repos.d/nginx.repo << 'EOF'

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

EOF

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 10.0.0.3:6443;

server 10.0.0.8:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

2, Load balancing scheduler deployment

//First stop the firewall and switch SELinux to permissive mode:

[root@localhost ~]# systemctl stop firewalld.service

[root@localhost ~]# setenforce 0

//Put the two files in root's home directory:

[root@localhost ~]# ls

anaconda-ks.cfg initial-setup-ks.cfg keepalived.conf nginx.sh Public Templates Videos Pictures Documents Downloads Music Desktop

//Create the nginx.org yum repository file:

[root@localhost ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

[root@localhost ~]# yum list

[root@localhost ~]# yum install nginx -y    //install nginx

//Next, configure layer-4 (TCP) forwarding to the apiservers:

[root@localhost ~]# vim /etc/nginx/nginx.conf

//Add the following stream block at the top level of the file (outside the http block):

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.109.138:6443; #IP address of master01

server 192.168.109.230:6443; #IP address of master02

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}
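Only the `server` lines inside `upstream` change when masters are added or removed. With no balancing directive in the upstream block, nginx distributes new connections across them round-robin. The following is a minimal sketch that prints this stream block for any port and list of master IPs. The function name `gen_apiserver_stream` is hypothetical:

```shell
#!/bin/bash
# gen_apiserver_stream PORT MASTER_IP...   (hypothetical helper)
# Prints an nginx stream block that proxies TCP PORT to the same port on
# every listed master. With no balancing directive in the upstream block,
# nginx uses round robin.
gen_apiserver_stream() {
    local port=$1 ip
    shift
    # Quoted delimiter: nginx variables such as $remote_addr stay literal.
    cat <<'EOF'
stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
EOF
    for ip in "$@"; do
        printf '        server %s:%s;\n' "$ip" "$port"
    done
    # Unquoted delimiter: $port is expanded by the shell here.
    cat <<EOF
    }

    server {
        listen $port;
        proxy_pass k8s-apiserver;
    }
}
EOF
}
```

For example, `gen_apiserver_stream 6443 192.168.109.138 192.168.109.230` prints the block above. Paste the output into /etc/nginx/nginx.conf outside the http block, and run `nginx -t` before starting nginx.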

[root@localhost ~]# systemctl start nginx    //start the service

//Next, deploy keepalived:

[root@localhost ~]# yum install keepalived -y

//Modify the configuration file (nginx01 is the master):

[root@localhost ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite '/etc/keepalived/keepalived.conf'? yes

[root@localhost ~]# vim /etc/keepalived/keepalived.conf

//Replace its contents with the following (nginx01, MASTER):

! Configuration File for keepalived

global_defs {

#Notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

#Sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" ##path of the health-check script, created below

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100 ##priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.109.100/24 ##Virtual IP address

}

track_script {

check_nginx

}

}

//nginx02 (backup) is configured as follows:

! Configuration File for keepalived

global_defs {

#Notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

#Sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" ##path of the health-check script, created below

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90 ##Priority lower than master

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.109.100/24 ##Virtual IP address

}

track_script {

check_nginx

}

}
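Comparing the two files shows that only `state` and `priority` differ between nginx01 and nginx02. `virtual_router_id`, the authentication block and the VIP must match on both. The following sketch renders either file from one template. The helper name is hypothetical and the notification mail settings are omitted. Deriving `router_id` from the role is an assumption; the article uses NGINX_MASTER on both machines:

```shell
#!/bin/bash
# render_keepalived ROLE IFACE VIP   (hypothetical helper)
# Prints a keepalived.conf for one scheduler. Only state and priority differ
# between MASTER and BACKUP; virtual_router_id, auth and VIP are shared.
render_keepalived() {
    local role=$1 iface=$2 vip=$3 priority
    case $role in
        MASTER) priority=100 ;;
        BACKUP) priority=90 ;;
        *) echo "role must be MASTER or BACKUP" >&2; return 1 ;;
    esac
    # router_id is derived from ROLE here (an assumption, see lead-in).
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id NGINX_${role}
}

vrrp_script check_nginx {
    script "/etc/nginx/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${role}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

For example, on nginx02 you would run `render_keepalived BACKUP ens33 192.168.109.100/24 > /etc/keepalived/keepalived.conf`.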

//Create the health-check script (on both nginx01 and nginx02):

[root@localhost ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

[root@localhost ~]# chmod +x /etc/nginx/check_nginx.sh    //make it executable

[root@localhost ~]# systemctl start keepalived.service    //start the service

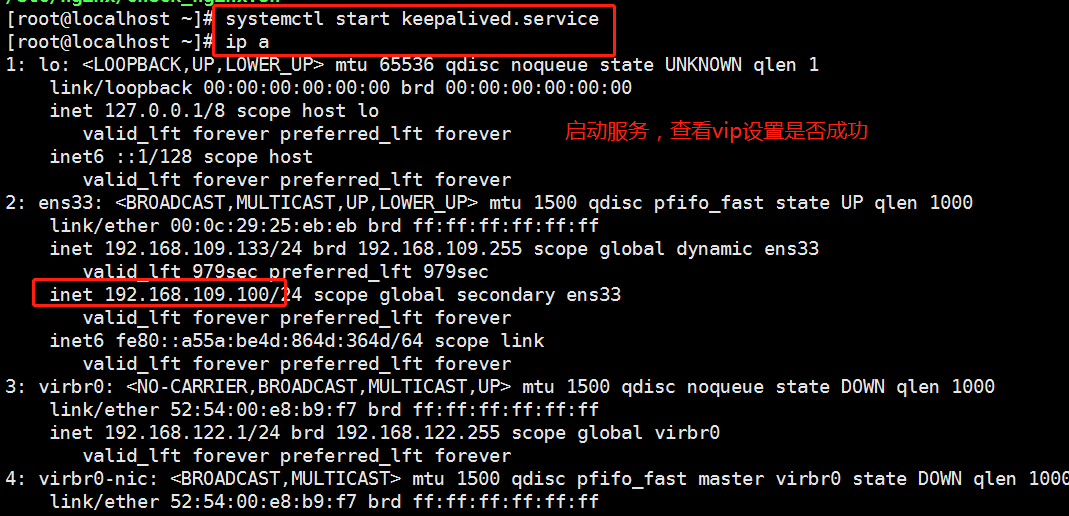

[root@localhost ~]# ip a    //view the IP addresses

2. Verification of experimental results

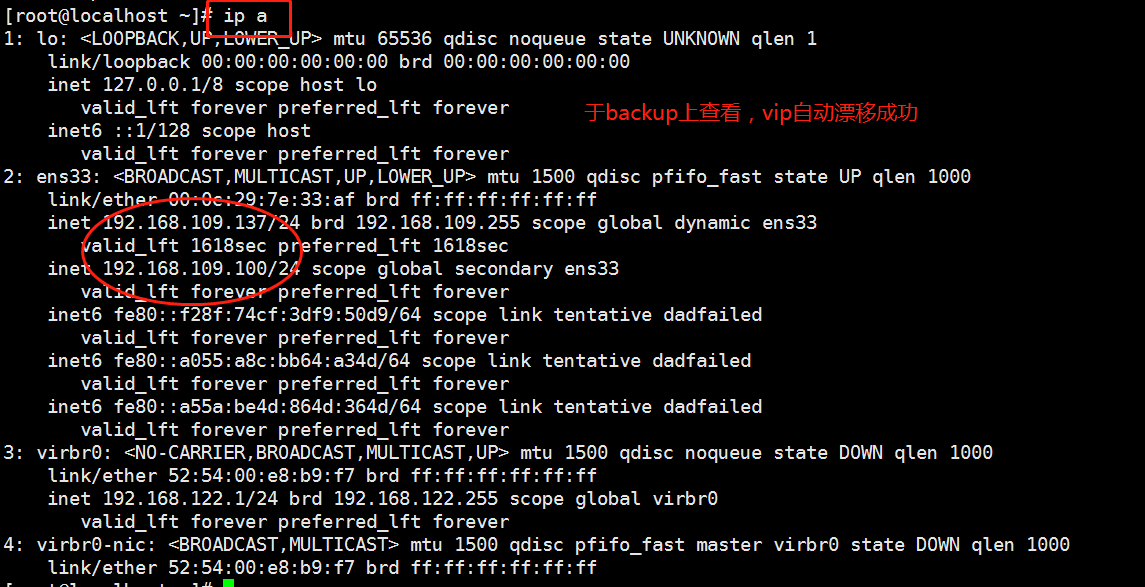

Verification 1: does the VIP fail over (is high availability working)?

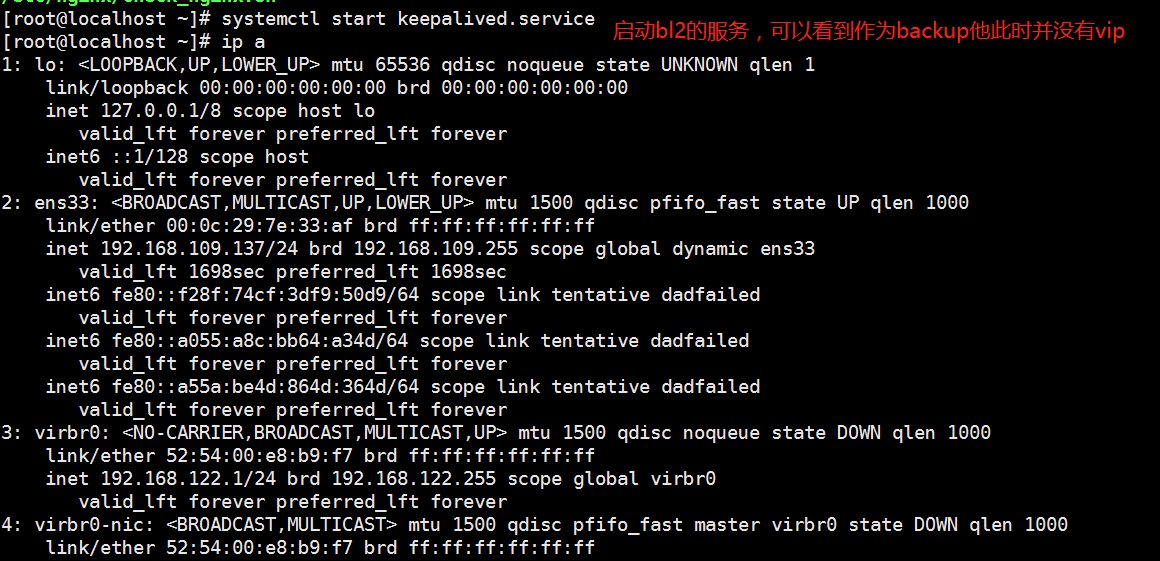

1. The VIP is currently on nginx01. To test failover, run pkill nginx on nginx01 (lb01) to stop nginx, then run ip a on nginx02 (lb02) to check whether the VIP has moved there.

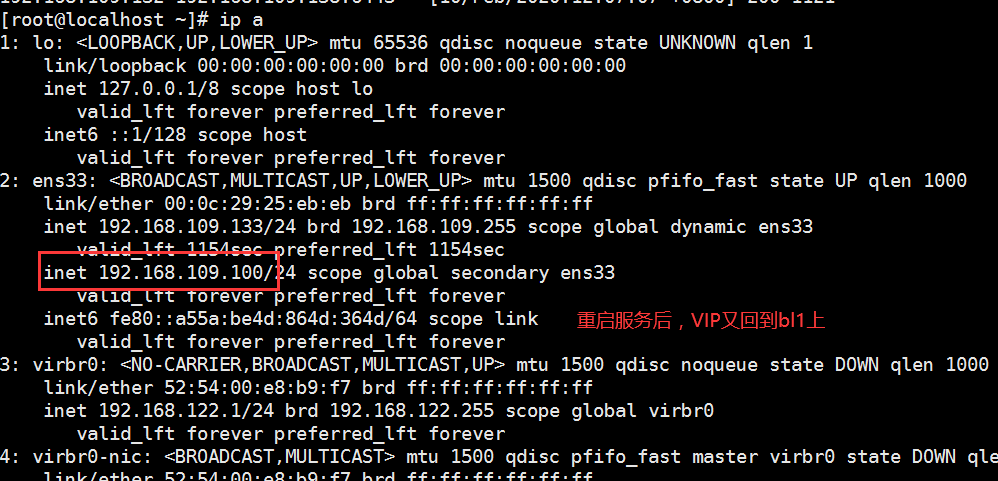

2. Recover: on nginx01, start the nginx service first, then keepalived, and check again with ip a. The VIP moves back to nginx01 because it has the higher priority, and nginx02 no longer holds it.
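To check failover without reading the full `ip a` output, you can look up the VIP in `ip -o -4 addr show`, which prints one line per address. The following small sketch reads that output on stdin. The `vip_present` helper is hypothetical:

```shell
#!/bin/bash
# vip_present VIP   (hypothetical helper)
# Reads `ip -o -4 addr show` output on stdin (one address per line, the
# 4th field being ADDRESS/PREFIX) and succeeds if VIP is among them.
vip_present() {
    awk -v vip="$1" '
        $3 == "inet" {
            split($4, addr, "/")    # "192.168.109.100/24" -> addr[1]
            if (addr[1] == vip) found = 1
        }
        END { exit !found }
    '
}
```

Run `ip -o -4 addr show | vip_present 192.168.109.100 && echo "VIP is here"` on each scheduler. It should report the VIP on exactly one of them.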

Verification 2: verify that load balancing works (the VIP is on lb02 at this point)

1. Modify the homepage content of nginx01 (master):

[root@localhost ~]# vim /usr/share/nginx/html/index.html

<h1>Welcome to master nginx!</h1>

2. Modify the homepage content of nginx02 (backup):

[root@localhost ~]# vim /usr/share/nginx/html/index.html

<h1>Welcome to backup nginx!</h1>

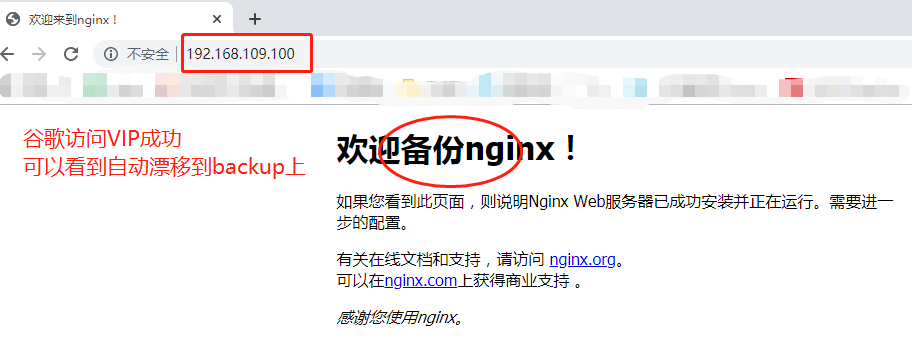

3. Open http://192.168.109.100/ in a browser.

At this point, both load balancing and high availability are in place.

3. Node configuration:

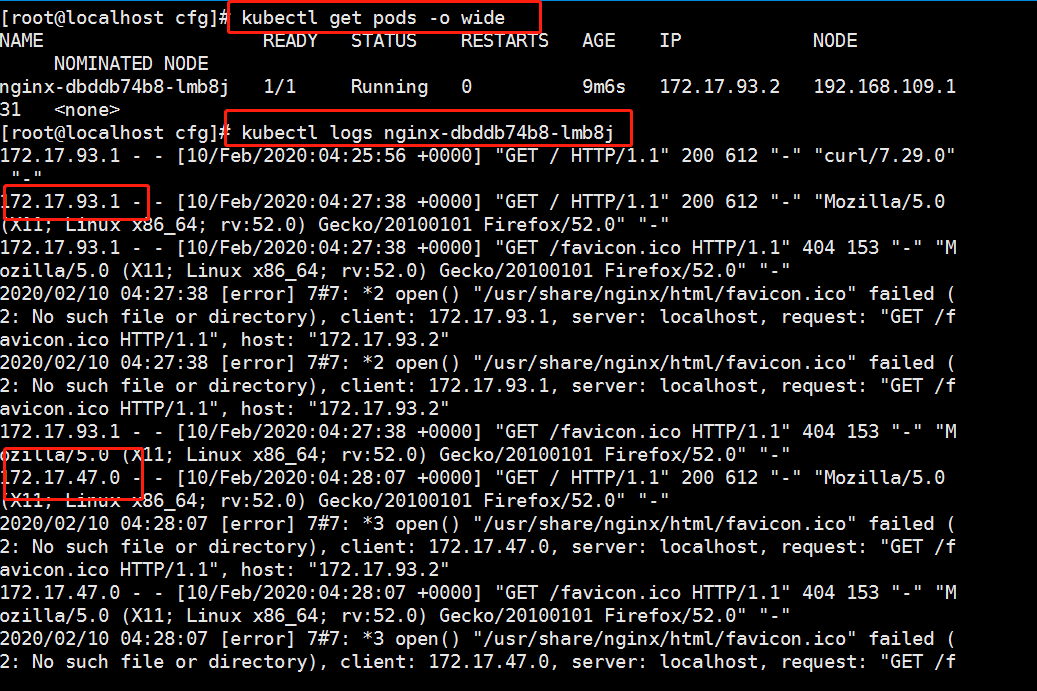

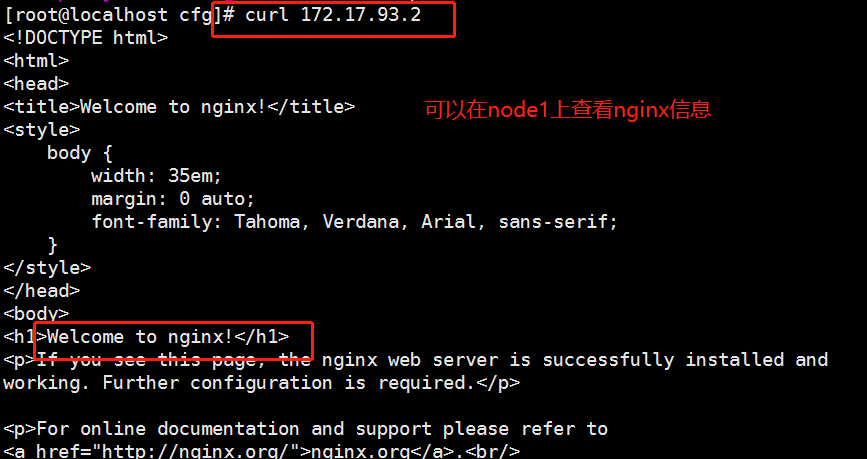

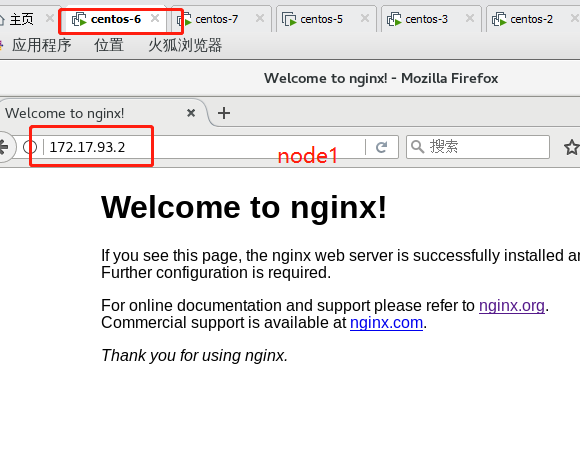

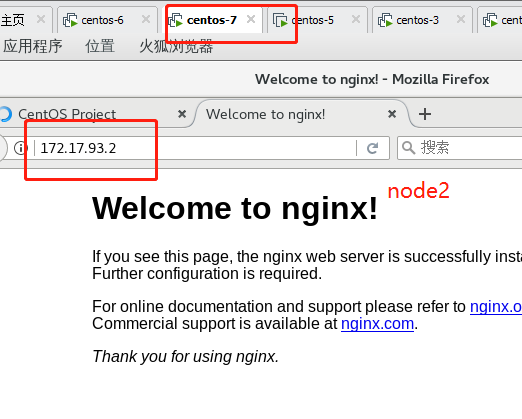
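In the next step, each node kubeconfig is repointed at the VIP by hand in vim. The following sketch makes the same edit with GNU sed, which also avoids typos in the address. It assumes each file contains a `server: https://<host>:6443` line. The function name is hypothetical:

```shell
#!/bin/bash
# point_kubeconfig_at_vip VIP FILE...   (hypothetical helper)
# Rewrites each "server: https://HOST:6443" line in the given kubeconfig
# files so HOST becomes VIP. Assumes port 6443 and GNU sed (-i, -E).
point_kubeconfig_at_vip() {
    local vip=$1
    shift
    sed -i -E "s#^([[:space:]]*server:[[:space:]]*https://)[^:/]+:6443#\1${vip}:6443#" "$@"
}
```

On each node, run `cd /opt/kubernetes/cfg && point_kubeconfig_at_vip 192.168.109.100 bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig`, then restart kubelet and kube-proxy.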

//Point the node configuration files (bootstrap.kubeconfig, kubelet.kubeconfig, kube-proxy.kubeconfig) at the VIP. In each file, change the server line to: server: https://192.168.109.100:6443

[root@localhost cfg]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

[root@localhost cfg]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

[root@localhost cfg]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

//Restart the services:

[root@localhost cfg]# systemctl restart kubelet.service

[root@localhost cfg]# systemctl restart kube-proxy.service

//Check the change:

[root@localhost cfg]# grep 100 *

bootstrap.kubeconfig: server: https://192.168.109.100:6443

kubelet.kubeconfig: server: https://192.168.109.100:6443

kube-proxy.kubeconfig: server: https://192.168.109.100:6443

//Next, view nginx's k8s access log on scheduler 1:

[root@localhost ~]# tail /var/log/nginx/k8s-access.log

192.168.109.131 192.168.109.138:6443 - [09/Feb/2020:13:14:45 +0800] 200 1122

192.168.109.131 192.168.109.230:6443 - [09/Feb/2020:13:14:45 +0800] 200 1121

192.168.109.132 192.168.109.138:6443 - [09/Feb/2020:13:18:14 +0800] 200 1120

192.168.109.132 192.168.109.230:6443 - [09/Feb/2020:13:18:14 +0800] 200 1121

The log shows nginx's default round-robin algorithm distributing requests across both masters.

Next, create a test Pod on master01:

[root@localhost kubeconfig]# kubectl run nginx --image=nginx

//Check its status:

[root@localhost kubeconfig]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-zbhhr 1/1 Running 0 47s

The Pod has been created and is running.

Note on viewing logs:

[root@localhost kubeconfig]# kubectl logs nginx-dbddb74b8-zbhhr

Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-zbhhr)

Viewing the log fails with a permission error. The workaround used here is to grant permissions to the anonymous user:

[root@localhost kubeconfig]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

This binds cluster-admin to system:anonymous, giving unauthenticated requests full control of the cluster, so it is only suitable for a test environment. Checking the log again now succeeds without the error.

//View the Pod network:

[root@localhost kubeconfig]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-zbhhr 1/1 Running 0 7m11s 172.17.93.2 192.168.109.131 <none>

The Pod created on master01 has been scheduled to node01, so it can be reached directly from a node on the Pod network. On node01:

[root@localhost cfg]# curl 172.17.93.2
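You can check the round-robin distribution by counting k8s-access.log entries per upstream. In the `main` log_format defined earlier, the second field is `$upstream_addr`. The following short awk sketch therefore counts connections per master. The helper name is hypothetical:

```shell
#!/bin/bash
# count_upstreams [LOGFILE]   (hypothetical helper)
# With the "main" log_format, field 2 of each k8s-access.log line is
# $upstream_addr; this prints one "UPSTREAM COUNT" line per master, sorted.
count_upstreams() {
    awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' \
        "${1:-/var/log/nginx/k8s-access.log}" | sort
}
```

For the four log lines shown earlier, it prints `192.168.109.138:6443 2` and `192.168.109.230:6443 2`.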

Because flannel provides an overlay network across the nodes, the Pod address 172.17.93.2 can also be opened in a browser on node01 and node02.

Because we just accessed the web page, the access is also recorded in the Pod's log, which we can view from master01: