pod overview

A Pod is the basic execution unit of a Kubernetes application: it is the smallest and simplest unit in the Kubernetes object model that you create or deploy. A Pod represents processes running on the cluster.

A Pod encapsulates an application container (or, in some cases, multiple containers), storage resources, a unique network IP, and options that govern how the containers should run.

Pods in a Kubernetes cluster are used in two main ways:

Pods that run a single container. The "one container per Pod" model is the most common Kubernetes use case; in this case, you can think of a Pod as a wrapper around a single container, and Kubernetes manages the Pod rather than the container directly.

Pods that run multiple containers that need to work together. A Pod can encapsulate an application made up of several co-located, tightly coupled containers that need to share resources. These co-located containers form a single cohesive unit of service: for example, one container serves files from a shared volume to the public, while a separate "sidecar" container refreshes or updates those files. The Pod wraps these containers and their storage resources into a single manageable entity.

A Pod provides two kinds of shared resources for its containers: networking and storage.

network

Each Pod is assigned a unique IP address. All containers in the Pod share its network namespace, including the IP address and network ports. Containers inside a Pod can communicate with each other using localhost. When containers in a Pod communicate with entities outside the Pod, they must coordinate how they use the shared network resources (such as ports).
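Because every container in a Pod shares one network namespace, two containers that both listen on the same port will collide. The sketch below is a hypothetical pre-flight check: the `find_port_conflicts` helper and the plain-dict Pod spec are assumptions for illustration, not a Kubernetes API. It flags any `containerPort` declared by more than one container. Declared ports are informational in Kubernetes, so a check like this only catches conflicts that the manifest actually declares; the real clash happens when the second process tries to bind the port.

```python
from __future__ import annotations

from collections import defaultdict


def find_port_conflicts(pod_spec: dict) -> dict[tuple[int, str], list[str]]:
    """Return {(port, protocol): [container names]} for ports declared by more than one container.

    pod_spec is a plain dict shaped like a PodSpec (hypothetical input format).
    """
    owners: dict[tuple[int, str], list[str]] = defaultdict(list)
    for container in pod_spec.get("containers", []):
        for port in container.get("ports", []):
            # Kubernetes defaults the protocol to TCP; TCP 53 and UDP 53 do not clash.
            key = (port["containerPort"], port.get("protocol", "TCP"))
            owners[key].append(container["name"])
    return {key: names for key, names in owners.items() if len(names) > 1}


if __name__ == "__main__":
    pod = {
        "containers": [
            {"name": "web", "ports": [{"containerPort": 80}]},
            {"name": "sidecar", "ports": [{"containerPort": 80}]},
            {"name": "metrics", "ports": [{"containerPort": 9090}]},
        ]
    }
    print(find_port_conflicts(pod))  # {(80, 'TCP'): ['web', 'sidecar']}
```

Keying on the (port, protocol) pair rather than the port alone avoids false alarms for services such as DNS that legitimately use the same number over TCP and UDP.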

storage

A Pod can specify a set of shared storage volumes. All containers in the Pod can access the shared volumes, which lets them share data. Volumes also allow persistent data in a Pod to survive if one of its containers needs to be restarted.
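A small sketch of how volume sharing is expressed: volumes are declared once at the Pod level, and each container that needs one mounts it by name, possibly at a different path. The `volume_sharing` helper and the plain-dict Pod spec below are hypothetical; they simply report which containers share each volume and reject a mount of an undeclared volume, which the Kubernetes API server also rejects. An `emptyDir` volume lives as long as the Pod, which is why its data survives a container restart.

```python
from __future__ import annotations


def volume_sharing(pod_spec: dict) -> dict[str, list[str]]:
    """Map each volume declared in a Pod spec to the containers that mount it.

    pod_spec is a plain dict shaped like a PodSpec (hypothetical input format).
    Raises ValueError if a container mounts a volume the Pod does not declare.
    """
    sharing: dict[str, list[str]] = {v["name"]: [] for v in pod_spec.get("volumes", [])}
    for container in pod_spec.get("containers", []):
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in sharing:
                raise ValueError(
                    f"container {container['name']!r} mounts undeclared volume {mount['name']!r}"
                )
            sharing[mount["name"]].append(container["name"])
    return sharing


if __name__ == "__main__":
    # The web server / sidecar pattern from the overview: both containers mount
    # the same emptyDir volume, each at its own path.
    pod = {
        "volumes": [{"name": "html", "emptyDir": {}}],
        "containers": [
            {"name": "web", "volumeMounts": [{"name": "html", "mountPath": "/usr/share/nginx/html"}]},
            {"name": "sidecar", "volumeMounts": [{"name": "html", "mountPath": "/data"}]},
        ],
    }
    print(volume_sharing(pod))  # {'html': ['web', 'sidecar']}
```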

- Restart policy

- PodSpec has a restartPolicy field whose possible values are Always, OnFailure, and Never. The default is Always.

- Pod lifetime

- In general, a Pod does not disappear until it is destroyed, either by a person or by a controller.

- It is recommended that you create Pods through an appropriate controller rather than creating them yourself, because a standalone Pod cannot recover automatically if its machine fails, but a controller can.

- Three kinds of controllers are available:

- Use a Job for Pods that are expected to terminate, such as batch computations. A Job is only appropriate for Pods whose restartPolicy is OnFailure or Never.

- Use a ReplicationController, ReplicaSet, or Deployment for Pods that are not expected to terminate, such as web servers. A ReplicationController is only appropriate for Pods whose restartPolicy is Always.

- Use a DaemonSet for Pods that must run one per machine because they provide a machine-specific system service.

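The restart rules above can be sketched as two small checks: whether a container that exited is restarted under each restartPolicy, and which restartPolicy values each controller accepts in its Pod template. This is a simplified model under stated assumptions: the function names are hypothetical, the kubelet's back-off delay between restarts is ignored, and the Always-only entries for ReplicaSet, Deployment, and DaemonSet reflect the validation of the apps/v1 API.

```python
from __future__ import annotations

# restartPolicy values each controller accepts in its Pod template.
ALLOWED_RESTART_POLICIES = {
    "Job": {"OnFailure", "Never"},
    "ReplicationController": {"Always"},
    "ReplicaSet": {"Always"},
    "Deployment": {"Always"},
    "DaemonSet": {"Always"},
}


def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Return True if a container that exited with exit_code is restarted in place."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy!r}")


def check_template(kind: str, restart_policy: str = "Always") -> None:
    """Raise ValueError if a controller of this kind rejects the template's restartPolicy.

    The default argument mirrors the PodSpec default of Always.
    """
    allowed = ALLOWED_RESTART_POLICIES[kind]
    if restart_policy not in allowed:
        raise ValueError(f"{kind} requires restartPolicy in {sorted(allowed)}, got {restart_policy!r}")


if __name__ == "__main__":
    print(should_restart("OnFailure", 0))    # False: clean exit, nothing to do
    print(should_restart("OnFailure", 137))  # True: killed or failed, so restart
    check_template("Job", "Never")           # accepted
    try:
        check_template("Job")                # the default Always is rejected for a Job
    except ValueError as err:
        print(err)
```

The last call shows why a Job manifest must set restartPolicy explicitly: leaving it out falls back to Always, which a Job does not accept.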

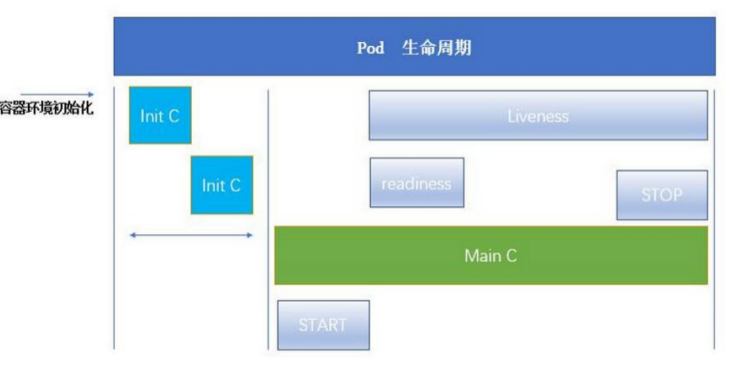

pod life cycle

Every Kubernetes node has the following image:

[root@server4 ~]# docker images
REPOSITORY                        TAG   IMAGE ID       CREATED        SIZE
reg.caoaoyuan.org/library/pause   3.2   80d28bedfe5d   4 months ago   683kB

This is the Kubernetes pause image. Every Pod is started from a pause container, which sets up the Pod's shared environment (such as the network namespace) that the Pod's other containers join.

Before a Pod runs its application containers, it first runs one or more Init containers. Each Init container runs once, and the application containers start only after all Init containers have completed.

- Init containers are very similar to regular containers, except for the following two points:

- They always run to completion.

- Init containers do not support readinessProbe, because they must run to completion before the Pod can be ready.

- Each Init container must complete successfully before the next one starts.

- If a Pod's Init container fails, the kubelet keeps restarting the failed Init container until it succeeds. However, if the Pod's restartPolicy is Never, it is not restarted and the Pod is treated as failed.
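The ordering rules above can be modeled as a loop over the Init containers. In this sketch each Init container is a hypothetical `(name, run)` pair whose `run()` returns an exit code; the real kubelet retries a failing Init container indefinitely with back-off, so `max_attempts` is an assumption added only to make the sketch terminate. The returned strings are Pod phases.

```python
from __future__ import annotations

from typing import Callable


def run_init_containers(
    init_containers: list[tuple[str, Callable[[], int]]],
    restart_policy: str = "Always",
    max_attempts: int = 5,
) -> str:
    """Run Init containers strictly in order and return the resulting Pod phase.

    Each entry is (name, run), where run() returns the container's exit code.
    max_attempts is a hypothetical cap; the kubelet itself never gives up.
    """
    for _name, run in init_containers:
        for _ in range(max_attempts):
            if run() == 0:
                break  # this Init container succeeded; move on to the next one
            if restart_policy == "Never":
                return "Failed"  # no retries: the whole Pod is treated as failed
        else:
            return "Pending"  # still retrying; application containers never start
    return "Running"  # every Init container succeeded; application containers may start
```

Both Always and OnFailure lead to retries here, because either policy restarts a failed Init container; only Never turns the first failure into a failed Pod, and later Init containers are never run.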

Once the Init containers have finished, the regular (application) containers start. Their health is checked by probes: a liveness probe checks whether the container is still running properly, and a readiness probe checks whether the container's service is ready to accept traffic. If a probe is not specified, its result defaults to Success.

[root@server2 manifest]# kubectl get pod -n kube-system
NAME                       READY   STATUS    RESTARTS   AGE
coredns-5fd54d7f56-k5jb9   1/1     Running   3          7d8h
coredns-5fd54d7f56-xb5m4   1/1     Running   4          7d19h
etcd-server2               1/1     Running   7          7d23h

A container that keeps failing its liveness probe is restarted, and a Pod is marked Ready (the READY column above) only after its readiness probe passes. Only then can users reach it and traffic flow to it.

init container

For more details, please refer to: https://kubernetes.io/zh/docs/concepts/workloads/pods/init-containers/

- What can the Init container do?

- Init containers can contain utilities or custom setup code that are not present in the application image.

- Init containers can run these utilities securely, so the utilities do not make the application image less secure.

- The creators and deployers of the application image can work independently, without needing to build a single combined application image.

- Init containers can run with a different view of the filesystem than the application containers in the same Pod. As a result, Init containers can be given access to Secrets that the application containers cannot access.

- Because Init containers must finish before the application containers start, they provide a mechanism to block or delay the start of the application containers until a set of preconditions are met. Once the preconditions are met, all application containers in the Pod can start in parallel.


[root@server2 manifest]# vim init.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  initContainers:
  - name: init-myservice
    image: busybox:latest
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  - name: init-mydb
    image: busybox:latest
    command: ['sh', '-c', "until nslookup mydb.default.svc.cluster.local; do echo waiting for mydb; sleep 2; done"]
  containers:
  - name: myapp-container
    image: busybox:latest

"until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"

This loop runs nslookup on myservice.default.svc.cluster.local. If the name resolves, the loop ends and the Init container exits successfully; otherwise it prints "waiting for myservice", sleeps for 2 seconds and tries again, so the Pod stays in the initializing state.
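For readers who prefer to see the retry loop outside of shell, here is a Python sketch of the same "until the name resolves, wait and retry" logic. It is an illustration, not what the busybox image runs: `wait_for_dns` is a hypothetical helper, `socket.gethostbyname` goes through the system resolver rather than querying a DNS server directly as nslookup does, and the injectable `resolve` parameter exists only so the loop can be tested without a cluster.

```python
from __future__ import annotations

import socket
import time
from typing import Callable


def wait_for_dns(
    hostname: str,
    interval: float = 2.0,
    max_attempts: int | None = None,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """Block until hostname resolves, like the Init container's "until nslookup ..." loop.

    max_attempts=None loops forever, as the shell loop does.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            resolve(hostname)
            return True  # name resolved: the Init container would exit 0
        except OSError:  # socket.gaierror is a subclass of OSError
            if max_attempts is not None and attempt >= max_attempts:
                return False
            print(f"waiting for {hostname}")
            time.sleep(interval)
```

Called as `wait_for_dns("myservice.default.svc.cluster.local")` inside the cluster, it would block until the Service exists, just as the Pod stays in Init while the shell loop keeps printing "waiting for myservice".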

[root@server2 manifest]# kubectl get pod -w
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/2     Init:0/1   0          5s      //initialization is in progress but stuck here; the Pod stays in Init
Init Containers:
  init-myservice:
    Container ID:  docker://3b83c7896675f49dab5b8c103e52b5f5e4b62bf6ce6d3659267267ebddc0978e
    Image:         busyboxplus
    Image ID:      docker-pullable://busyboxplus@sha256:9d1c242c1fd588a1b8ec4461d33a9ba08071f0cc5bb2d50d4ca49e430014ab06
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done
    State:          Running      //keeps running because the loop never ends
      Started:      Fri, 26 Jun 2020 18:56:21 +0800
    Ready:          False

The Init container cannot finish because the Service myservice has not been created yet, so its name cannot be resolved.

Make sure the host has no Internet access while testing; otherwise the name may be resolved through the host's DNS.

Create the two Services that the Init containers are waiting for:

[root@server2 manifest]# vim service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
---
kind: Service
apiVersion: v1
metadata:
  name: mydb
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9377
[root@server2 manifest]# kubectl apply -f service.yml
service/myservice created
service/mydb created
[root@server2 manifest]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   8d
mydb         ClusterIP   10.100.111.80   <none>        80/TCP    7s
myservice    ClusterIP   10.107.87.62    <none>        80/TCP    7s
[root@server2 manifest]# kubectl get pod -w      # watch again
NAME        READY   STATUS            RESTARTS   AGE
myapp-pod   0/1     Init:1/2          0          9s
myapp-pod   0/1     PodInitializing   0          13s     //Init containers done, Pod initializing
myapp-pod   1/1     Running           0          52s     //running

If you delete the Services at this point, the running container is not affected: the Init containers run only before the application containers start, and they have already completed, so they no longer affect the containers that follow.

Once the application container is running, you can configure probes to check its service and running state.

probe

- Probes are periodic diagnostics performed by the kubelet on a container. The kubelet runs one of three handlers:

- ExecAction: executes a specified command inside the container. The diagnostic is considered successful if the command exits with status code 0.

- TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic is considered successful if the port is open.

- HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400.


- Each probe produces one of three results:

- Success: the container passed the diagnostic.

- Failure: the container failed the diagnostic.

- Unknown: the diagnostic itself failed, so no action is taken.
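The three handlers and three results can be sketched in Python to make the success criteria concrete. This is an approximation of what the kubelet does, not its implementation: the function names are hypothetical, the mapping of "could not run the command at all" to Unknown is an assumption of this sketch, and `http_probe` does not follow redirects (it simply accepts any status from 200 up to, but not including, 400).

```python
from __future__ import annotations

import enum
import http.client
import socket
import subprocess


class ProbeResult(enum.Enum):
    SUCCESS = "Success"
    FAILURE = "Failure"
    UNKNOWN = "Unknown"


def exec_probe(argv: list[str], timeout: float = 1.0) -> ProbeResult:
    """ExecAction: success if the command exits with status 0."""
    try:
        done = subprocess.run(argv, capture_output=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return ProbeResult.FAILURE
    except OSError:
        # Assumption: treat "could not run the check at all" as Unknown.
        return ProbeResult.UNKNOWN
    return ProbeResult.SUCCESS if done.returncode == 0 else ProbeResult.FAILURE


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> ProbeResult:
    """TCPSocketAction: success if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return ProbeResult.SUCCESS
    except OSError:
        return ProbeResult.FAILURE


def http_probe(host: str, port: int, path: str = "/", timeout: float = 1.0) -> ProbeResult:
    """HTTPGetAction: success if GET host:port/path returns 200 <= status < 400."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        status = conn.getresponse().status
    except (OSError, http.client.HTTPException):
        return ProbeResult.FAILURE
    finally:
        conn.close()
    return ProbeResult.SUCCESS if 200 <= status < 400 else ProbeResult.FAILURE
```

For example, `tcp_probe("127.0.0.1", 80)` corresponds to the tcpSocket liveness probe used in the next section, and `http_probe("127.0.0.1", 80, "/hostname.html")` to the httpGet readiness probe.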

- The kubelet can optionally run and react to three kinds of probes on running containers:

- livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is subject to its restart policy. If a container does not provide a liveness probe, the default state is Success.

- readinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The readiness state before the initial delay defaults to Failure. If a container does not provide a readiness probe, the default state is Success.

- startupProbe: indicates whether the application in the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is restarted according to its restart policy. If a container does not provide a startup probe, the default state is Success.


Liveness and readiness probes are disabled until a startupProbe succeeds, so the examples below use only liveness and readiness probes.
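The reactions described in the list above can be summarized as a lookup from (probe type, result) to the kubelet's response. This is a hypothetical summary function with illustrative return strings, and it assumes that "Failure" means the probe's failure threshold has already been reached. A killed container exits with a non-zero code, so both Always and OnFailure restart it; only Never leaves it stopped.

```python
from __future__ import annotations


def kubelet_reaction(
    probe: str,
    result: str,
    restart_policy: str = "Always",
    startup_succeeded: bool = True,
) -> str:
    """Describe how the kubelet reacts once a probe's threshold has been reached.

    probe is "liveness", "readiness" or "startup"; result is "Success",
    "Failure" or "Unknown". The returned strings are illustrative only.
    """
    if probe not in ("liveness", "readiness", "startup"):
        raise ValueError(f"unknown probe type: {probe!r}")
    if probe != "startup" and not startup_succeeded:
        return "not run: waiting for startupProbe to succeed"
    if result == "Unknown":
        return "no action"
    if result == "Success":
        return {
            "liveness": "no action",
            "readiness": "Pod is Ready: add its IP to matching Service endpoints",
            "startup": "enable liveness and readiness probes",
        }[probe]
    if result != "Failure":
        raise ValueError(f"unknown result: {result!r}")
    if probe == "readiness":
        return "Pod not Ready: remove its IP from matching Service endpoints"
    # liveness or startup failure: the container is killed, then restartPolicy decides.
    if restart_policy == "Never":
        return "kill container; do not restart"
    return "kill container; restart it"
```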

liveness

[root@server2 manifest]# vim init.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  initContainers:
  - name: init-myservice
    image: busyboxplus
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  containers:
  - name: myapp-container
    image: myapp:v1
    imagePullPolicy: IfNotPresent
    livenessProbe:              # liveness probe
      tcpSocket:
        port: 80                # healthy while port 80 accepts connections
      initialDelaySeconds: 1    # wait 1 second after the container starts
      periodSeconds: 2          # probe every 2 seconds
      timeoutSeconds: 1         # each probe times out after 1 second

[root@server2 manifest]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   8d
myservice    ClusterIP   10.99.245.243   <none>        80/TCP    15h    //myservice already exists, so the Init container can finish
[root@server2 manifest]# kubectl apply -f init.yml
pod/myapp-pod created
[root@server2 manifest]# kubectl get pod -w
NAME        READY   STATUS            RESTARTS   AGE
myapp-pod   0/1     PodInitializing   0          4s
myapp-pod   1/1     Running           0          4s
[root@server2 manifest]# kubectl exec -it myapp-pod -- sh
/ # nginx -s stop      # inside the container, stop the nginx service
2020/06/27 02:51:21 [notice] 12#12: signal process started
/ # command terminated with exit code 137
[root@server2 manifest]# kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   1          3m42s    //RESTARTS is now 1: the container was restarted automatically
[root@server2 manifest]# kubectl describe pod myapp-pod
    Liveness:  tcp-socket :80 delay=1s timeout=1s period=2s #success=1 #failure=3    //one success marks it healthy; three consecutive failures mark it unhealthy

The liveness probe keeps running for the whole life of the container. When the kubelet detects that the port is closed, it kills and restarts the container, as long as the restartPolicy is not Never. Note that in this demo, stopping nginx also ends the container's main process, so the restart policy alone would restart it; a liveness probe matters most when the process stays alive but stops serving.
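The "#success=1 #failure=3" part of the describe output refers to successThreshold and failureThreshold: the verdict only flips after that many consecutive results of the opposite kind. The `ThresholdTracker` class below is a hypothetical model of that counting, not kubelet code. With periodSeconds=2 and failureThreshold=3 as above, a closed port is declared unhealthy after three consecutive failed checks spaced 2 seconds apart, so on the order of 3 × 2 = 6 seconds.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ThresholdTracker:
    """Turn individual probe results into a healthy/unhealthy verdict.

    success_threshold / failure_threshold mirror #success / #failure in
    `kubectl describe`; only consecutive results count toward a threshold.
    """

    success_threshold: int = 1
    failure_threshold: int = 3
    healthy: bool = True  # a liveness probe starts out as Success
    consecutive_successes: int = 0
    consecutive_failures: int = 0

    def record(self, passed: bool) -> bool:
        """Record one probe result and return the current verdict."""
        if passed:
            self.consecutive_successes += 1
            self.consecutive_failures = 0
            if self.consecutive_successes >= self.success_threshold:
                self.healthy = True
        else:
            self.consecutive_failures += 1
            self.consecutive_successes = 0
            if self.consecutive_failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy


if __name__ == "__main__":
    tracker = ThresholdTracker()
    print([tracker.record(ok) for ok in (False, False, True, False, False, False)])
    # [True, True, True, True, True, False]
```

The single success in the middle resets the failure count, which is why the first two failures never trip the threshold.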

At this point, the name myservice resolves, but the Service does not know the Pod's IP address because it has no selector. Add a selector:

kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376    # note: nginx in the myapp container listens on port 80
  selector:             # select Pods by label and add them to the Service
    app: myapp          # matches the label on myapp-pod

[root@server2 manifest]# kubectl apply -f service.yml
service/myservice created
[root@server2 manifest]# kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.100.34.244
Port:              <unset>  80/TCP
TargetPort:        9376/TCP
Endpoints:         10.244.1.60:9376    //the Pod is now an endpoint; with no readiness probe it counts as ready
Session Affinity:  None
Events:            <none>
[root@server2 manifest]# kubectl get pod -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
myapp-pod   1/1     Running   1          22m   10.244.1.60   server3   <none>           <none>

readiness

So far only a liveness probe has been added. Without a readiness probe, readiness defaults to Success, which is why the Pod's IP was added to the Service and could be reached. Next, add a readiness probe.
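The relationship between labels, readiness, and Service endpoints can be sketched as a filter: a Pod appears in a Service's endpoints only if its labels match the selector and it is Ready. The `service_endpoints` helper and the simplified Pod dicts are hypothetical; in a real cluster the endpoints controller does this, and not-ready Pods are tracked separately rather than simply dropped.

```python
from __future__ import annotations


def service_endpoints(selector: dict[str, str], pods: list[dict], target_port: int) -> list[str]:
    """Return "ip:port" for each Pod that matches the Service selector and is Ready.

    Each pod is a simplified, hypothetical dict: {"ip": ..., "labels": {...}, "ready": bool}.
    A Pod without a readiness probe counts as ready once its containers are running.
    """
    if not selector:
        # A Service without a selector gets no automatically managed endpoints,
        # which is why myservice had none before the selector was added.
        return []
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod.get("ready", False)
        and all(pod.get("labels", {}).get(key) == value for key, value in selector.items())
    ]
```

Flipping a Pod's `ready` flag to False removes it from the result, which is what the readiness experiment below shows with `kubectl describe svc`.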

[root@server2 manifest]# vim init.yml
...
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 2
      timeoutSeconds: 1
    readinessProbe:             # add a readiness probe
      httpGet:
        path: /hostname.html    # request this page
        port: 80                # on port 80
      initialDelaySeconds: 1
      periodSeconds: 2
      timeoutSeconds: 1

[root@server2 manifest]# kubectl apply -f init.yml
pod/myapp-pod created
[root@server2 manifest]# kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   0          2m9s

The Pod is Ready (1/1) because both probes pass.

[root@server2 manifest]# kubectl exec -it myapp-pod -- sh      # make a change inside the container
/ # vi /etc/nginx/conf.d/default.conf      # comment out the /hostname.html location block:
#location = /hostname.html {
#    alias /etc/hostname;
#}
/ # nginx -s reload
2020/06/27 03:23:53 [notice] 23#23: signal process started
/ # ps ax
PID   USER     TIME  COMMAND
    1 root      0:00 nginx: master process nginx -g daemon off;
   14 root      0:00 sh
   24 nginx     0:00 nginx: worker process
   25 root      0:00 ps ax
/ #
[root@server2 manifest]# kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   0/1     Running   0          6m50s    //running, but not ready

READY dropped to 0/1 because the readiness probe is failing: nginx can no longer serve the path /hostname.html.

[root@server2 manifest]# kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.100.34.244
Port:              <unset>  80/TCP
TargetPort:        9376/TCP
Endpoints:                             //empty: the unready Pod has been removed from the Service

[root@server2 manifest]# kubectl exec -it myapp-pod -- sh
/ # vi /etc/nginx/conf.d/default.conf      # uncomment the location block again
/ # nginx -s reload
2020/06/27 04:03:16 [notice] 32#32: signal process started
/ #
[root@server2 manifest]# kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.100.34.244
Port:              <unset>  80/TCP
TargetPort:        9376/TCP
Endpoints:         10.244.1.61:9376    //the Pod is back in the endpoints
Session Affinity:  None
Events:            <none>

[root@server2 manifest]# kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   0          46m
[root@server2 manifest]# kubectl run demo --image=busyboxplus -it --restart=Never
If you don't see a command prompt, try pressing enter.
/ # curl 10.102.77.37
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

The application can now be reached through the Service's cluster IP; the Service finds the Pod through its label selector.

Next, add more Pods with the same label through a Deployment:

[root@server2 manifest]# vim pod2.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-example
spec:
  replicas: 2                 # two replicas
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp            # same label as myapp-pod
    spec:
      containers:
      - name: nginx
        image: myapp:v2       # use the v2 image
[root@server2 manifest]# kubectl apply -f pod2.yml
deployment.apps/deployment-example created
[root@server2 manifest]# kubectl get pod
NAME                                  READY   STATUS      RESTARTS   AGE
demo                                  0/1     Completed   0          10m
deployment-example-5c9fb4c54c-4f9dj   1/1     Running     0          5s
deployment-example-5c9fb4c54c-pnfzb   1/1     Running     0          5s
myapp-pod                             1/1     Running     0          64m
[root@server2 manifest]# kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.102.77.37
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.61:80,10.244.2.36:80,10.244.2.37:80    //the two new Deployment Pods were added
Session Affinity:  None
Events:            <none>

The Service now load-balances requests across all three endpoints:

[root@server2 manifest]# kubectl run demo --image=busyboxplus -it --restart=Never
If you don't see a command prompt, try pressing enter.
/ # curl 10.102.77.37
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
/ # curl 10.102.77.37
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
/ # curl 10.102.77.37
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
/ # curl 10.102.77.37
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
/ # curl 10.102.77.37
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
/ # curl 10.102.77.37
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
/ # curl 10.102.77.37
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
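Why the responses alternate irregularly rather than in strict rotation: in its default iptables mode, kube-proxy picks a backend for each new connection at random (IPVS mode defaults to round robin instead). The sketch below simulates uniform random selection over the three endpoints from the describe output above. The mapping of IPs to versions is inferred from that output (myapp-pod at 10.244.1.61 runs v1, the two Deployment Pods run v2), and the function names are hypothetical.

```python
from __future__ import annotations

import random
from collections import Counter

# Endpoints from the `kubectl describe svc myservice` output above, mapped to
# the image version each Pod runs (myapp-pod is v1, the Deployment Pods are v2).
ENDPOINTS = {
    "10.244.1.61:80": "v1",
    "10.244.2.36:80": "v2",
    "10.244.2.37:80": "v2",
}


def pick_backend(backends: list[str], rng: random.Random) -> str:
    """Pick one backend uniformly at random for a new connection."""
    return rng.choice(backends)


def simulate(requests: int, seed: int = 0) -> Counter[str]:
    """Count which version answers each of `requests` simulated connections."""
    rng = random.Random(seed)
    backends = list(ENDPOINTS)
    return Counter(ENDPOINTS[pick_backend(backends, rng)] for _ in range(requests))


if __name__ == "__main__":
    print(simulate(3000))
```

Since two of the three endpoints run v2, about two thirds of responses should come from v2 over many requests; a short run like the seven curl calls above can deviate noticeably from that ratio.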