Kubernetes 1.11.0 cluster deployment: node setup (3)

Node configuration

Each node runs docker, calico, kubelet and kube-proxy. The nodes reach the masters through an nginx load balancer in front of the API servers, which gives master HA:

An nginx instance runs on each node and reverse-proxies all API servers.

kubelet and kube-proxy on the node connect to the port of the local nginx proxy.

When nginx cannot connect to a backend, it temporarily takes that API server out of rotation and sends new connections to the remaining ones, which provides HA for the API server.
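As a rough illustration of the failover behaviour, the sketch below prints an nginx upstream block for a list of API server addresses. `render_upstream` is a hypothetical helper name, and `max_fails=1 fail_timeout=10s` simply spells out the nginx defaults: after one failed connection attempt a server is skipped for ten seconds.

```shell
#!/bin/sh
# render_upstream is a hypothetical helper, not part of nginx or Kubernetes.
# It prints an nginx "stream" upstream block for the given API server hosts.
render_upstream() {
    printf 'upstream kube_apiserver {\n'
    printf '    least_conn;\n'
    for host in "$@"; do
        # max_fails and fail_timeout are the nginx defaults, written out
        # explicitly: one failed attempt marks the server unavailable for 10s.
        printf '    server %s:6443 max_fails=1 fail_timeout=10s;\n' "$host"
    done
    printf '}\n'
}

# The three API server addresses used in this guide.
render_upstream 10.39.10.154 10.39.10.156 10.39.10.159
```

Raising `max_fails` or `fail_timeout` makes nginx slower to drop a failing API server and slower to retry it; the defaults are usually a reasonable starting point.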

Create the kube-proxy certificate

vim kube-proxy-csr.json

{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

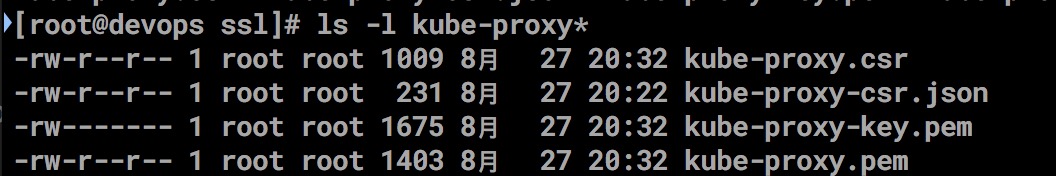

Generate the kube-proxy certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Copy the generated files to the node:

scp kube-proxy* root@10.39.10.160:/etc/kubernetes/ssl/

Create the kube-proxy kubeconfig file

#Configure cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://127.0.0.1:6443 --kubeconfig=kube-proxy.kubeconfig

#Configure client authentication

kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

#Configure the context

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

#Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

#Copy to other machines

scp kube-proxy.kubeconfig root@10.39.10.156:/etc/kubernetes/

scp kube-proxy.kubeconfig root@10.39.10.159:/etc/kubernetes/

#Create the kube-proxy.service file

//ipvs mode requires ipset, ipvsadm and conntrack to be installed

yum install ipset ipvsadm conntrack-tools.x86_64 -y

cd /etc/kubernetes/

vim kube-proxy.config.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.39.10.160
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.254.64.0/18
healthzBindAddress: 10.39.10.160:10256
hostnameOverride: kubernetes-master-154
kind: KubeProxyConfiguration
metricsBindAddress: 10.39.10.160:10249
mode: "ipvs"

vi /etc/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.config.yaml \
  --logtostderr=true \
  --v=1
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

//The working directory /var/lib/kube-proxy must exist. If startup fails because it is missing, create it manually
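A minimal sketch of creating the working directory ahead of time, so the service does not fail on its first start. `ensure_workdir` is a hypothetical helper name; the paths in the usage comment are the `WorkingDirectory` values from the unit files in this guide.

```shell
#!/bin/sh
# ensure_workdir is a hypothetical helper: create a service's working
# directory if it does not exist yet, then confirm that it is a directory.
ensure_workdir() {
    dir="$1"
    mkdir -p "$dir" && [ -d "$dir" ]
}

# Example (needs root on a real node):
#   ensure_workdir /var/lib/kube-proxy
#   ensure_workdir /var/lib/kubelet
```

`mkdir -p` succeeds when the directory is already there, so the helper is safe to run on every deployment.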

Start kube-proxy

systemctl daemon-reload

systemctl enable kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy

#Distribute the certificates to the node

scp ca.pem kube-proxy.pem kube-proxy-key.pem root@10.39.10.160:/etc/kubernetes/ssl/

//Create the nginx proxy

yum install epel-release -y

yum install nginx -y

#Write /etc/nginx/nginx.conf (this replaces the default file installed by the package)

cat << EOF > /etc/nginx/nginx.conf
error_log stderr notice;
worker_processes auto;

events {
    multi_accept on;
    use epoll;
    worker_connections 1024;
}

stream {
    upstream kube_apiserver {
        least_conn;
        server 10.39.10.154:6443;
        server 10.39.10.156:6443;
        server 10.39.10.159:6443;
    }

    server {
        listen 0.0.0.0:6443;
        proxy_pass kube_apiserver;
        proxy_timeout 10m;
        proxy_connect_timeout 1s;
    }
}
EOF

#Run nginx as a docker container and let systemd manage it

vim /etc/systemd/system/nginx-proxy.service

[Unit]
Description=kubernetes apiserver docker wrapper
Wants=docker.socket
After=docker.service

[Service]
User=root
PermissionsStartOnly=true
ExecStart=/usr/bin/docker run -p 127.0.0.1:6443:6443 \
  -v /etc/nginx:/etc/nginx \
  --name nginx-proxy \
  --net=host \
  --restart=on-failure:5 \
  --memory=512M \
  nginx:1.13.7-alpine
ExecStartPre=-/usr/bin/docker rm -f nginx-proxy
ExecStop=/usr/bin/docker stop nginx-proxy
Restart=always
RestartSec=15s
TimeoutStartSec=30s

[Install]
WantedBy=multi-user.target

# start nginx

systemctl daemon-reload

systemctl start nginx-proxy

systemctl enable nginx-proxy

systemctl status nginx-proxy

Configure the kubelet.service file

vi /etc/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --hostname-override=kubernetes-64 \
  --pod-infra-container-image=harbor.enncloud.cn/enncloud/pause-amd64:3.1 \
  --bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.config.json \
  --cert-dir=/etc/kubernetes/ssl \
  --logtostderr=true \
  --v=2

[Install]
WantedBy=multi-user.target

#Create the kubelet configuration file /etc/kubernetes/kubelet.config.json (the path passed to --config above)

{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "172.16.1.66",
  "port": 10250,
  "readOnlyPort": 0,
  "cgroupDriver": "cgroupfs",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "featureGates": {
    "RotateKubeletClientCertificate": true,
    "RotateKubeletServerCertificate": true
  },
  "maxPods": 512,
  "failSwapOn": false,
  "containerLogMaxSize": "10Mi",
  "containerLogMaxFiles": 5,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.254.0.2"]
}

#Create a bootstrap token for the node (run on a master)

kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:kubernetes-node-160 --kubeconfig ~/.kube/config

#Configure cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://127.0.0.1:6443 --kubeconfig=kubernetes-node-160-bootstrap.kubeconfig

#Configure client authentication

kubectl config set-credentials kubelet-bootstrap --token=ap4lcp.3yai1to1f98sfray --kubeconfig=kubernetes-node-160-bootstrap.kubeconfig

#Configure the context

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubernetes-node-160-bootstrap.kubeconfig

#Set the default context

kubectl config use-context default --kubeconfig=kubernetes-node-160-bootstrap.kubeconfig

//Copy the bootstrap kubeconfig to the node

scp kubernetes-node-160-bootstrap.kubeconfig root@10.39.10.160:/etc/kubernetes/bootstrap.kubeconfig

systemctl restart kubelet
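Before a bootstrap token is pasted into the `set-credentials` command, it can help to check that it was copied completely. The sketch below checks only the shape of the token (six characters, a dot, sixteen characters, all lowercase letters or digits). It does not check that the token exists in the cluster. `is_bootstrap_token` is a hypothetical helper name.

```shell
#!/bin/sh
# is_bootstrap_token is a hypothetical helper. It checks that a string has the
# bootstrap token shape: 6 characters, a dot, then 16 characters, all [a-z0-9].
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The token value used in this guide.
if is_bootstrap_token "ap4lcp.3yai1to1f98sfray"; then
    echo "token format ok"
else
    echo "token format invalid" >&2
fi
```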

##If the node fails to register with the master because of a configuration error or for another reason, delete the files that kubelet generated:

Delete the kubelet certificates and keys under /etc/kubernetes/ssl and the kubelet.kubeconfig file under /etc/kubernetes, then restart kubelet. The restart regenerates these files.
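The cleanup can be sketched as a small function. `reset_kubelet_credentials` is a hypothetical helper name, and the `kubelet*` pattern is an assumption: it treats every file with that prefix in the ssl directory as generated by kubelet, so adjust it if other files there share the prefix.

```shell
#!/bin/sh
# reset_kubelet_credentials is a hypothetical helper. It removes the files
# kubelet generated during bootstrap so the next start requests new ones.
# Assumption: everything named kubelet* in the ssl directory was created by
# kubelet itself. ca.pem, kube-proxy*.pem and bootstrap.kubeconfig are kept.
reset_kubelet_credentials() {
    base="${1:-/etc/kubernetes}"
    rm -f "$base"/ssl/kubelet* "$base"/kubelet.kubeconfig
}

# Example (on the affected node, as root):
#   reset_kubelet_credentials /etc/kubernetes
#   systemctl restart kubelet
```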

#Configure kube-proxy on the node

vim /etc/kubernetes/kube-proxy.config.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.39.10.160
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.254.64.0/18
healthzBindAddress: 10.39.10.160:10256
hostnameOverride: kubernetes-node-160
kind: KubeProxyConfiguration
metricsBindAddress: 10.39.10.160:10249
mode: "ipvs"

#Create the kube-proxy.service file (/etc/systemd/system/kube-proxy.service) and make sure the /var/lib/kube-proxy directory exists

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.config.yaml \
  --logtostderr=true \
  --v=1
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

#Start kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy

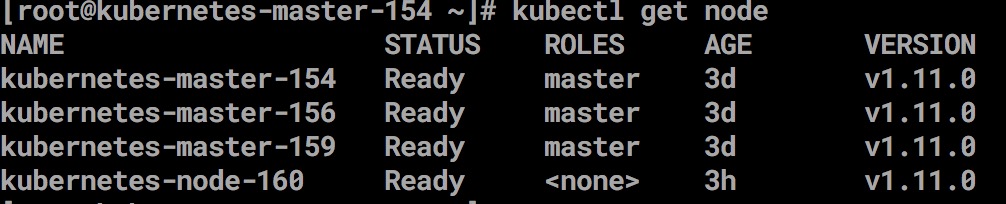

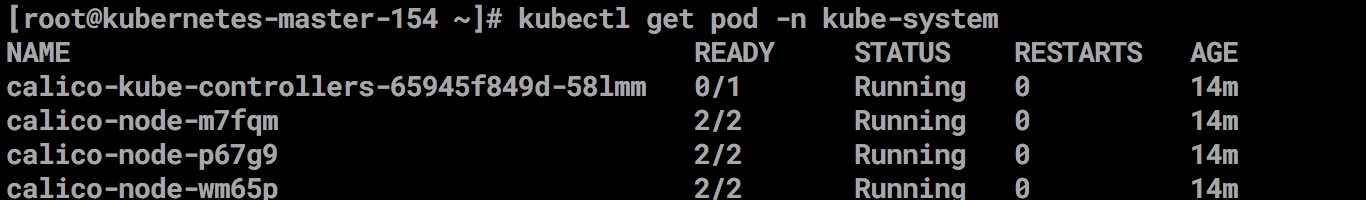

Verify nodes

kubectl get nodes

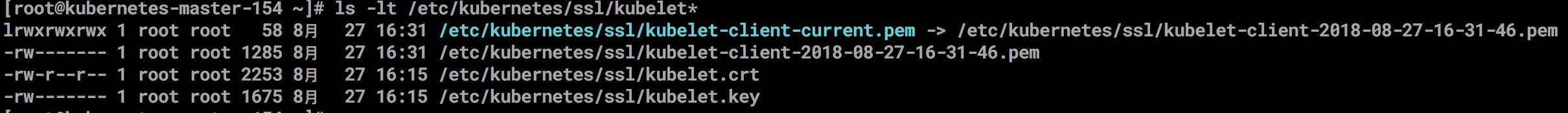

View the files generated by kubelet

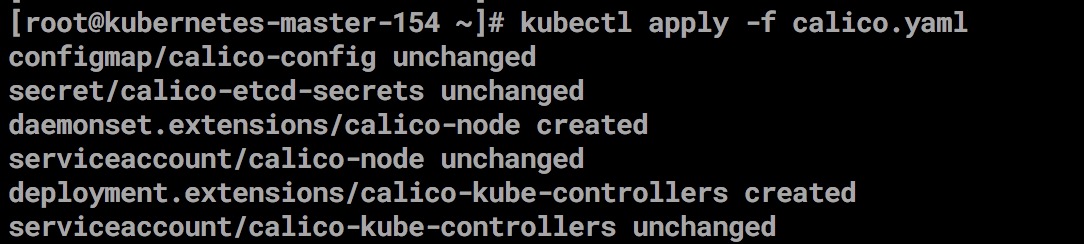

#Configure calico network

https://docs.projectcalico.org/v3.2/getting-started/kubernetes/

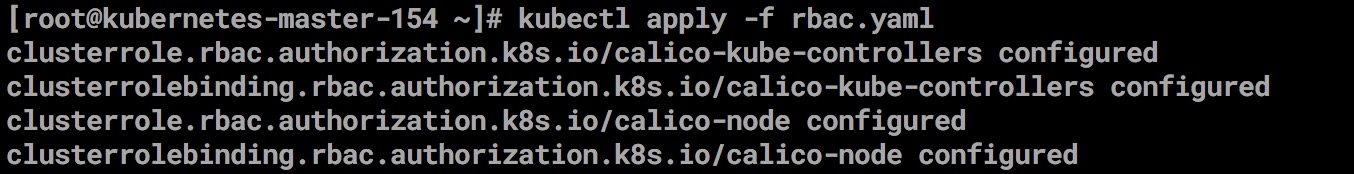

1. Install the calico RBAC roles

kubectl apply -f https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/rbac.yaml

2. Install calico (the configuration in calico.yaml needs to be modified first)

kubectl apply -f https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/calico.yaml

//Modify calico.yaml

etcd_endpoints: (fill in the etcd cluster endpoints here)
etcd_ca: "/calico-secrets/etcd-ca"
etcd_cert: "/calico-secrets/etcd-cert"
etcd_key: "/calico-secrets/etcd-key"

data:
  # Populate the following files with etcd TLS configuration if desired, but leave blank if
  # not using TLS for etcd.
  # This self-hosted install expects three files with the following names. The value
  # should be base64 encoded strings of the entire contents of each file.

//Base64-encode the contents of each certificate file and fill in the values here
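One way to produce the base64 values is sketched below. `b64_file` is a hypothetical helper name. The paths in the usage comment are the etcd certificate paths used for calicoctl in this guide and are an assumption about where your etcd TLS files live.

```shell
#!/bin/sh
# b64_file is a hypothetical helper: print the base64 encoding of a whole file
# on a single line. Newlines are stripped with tr so the result does not depend
# on the line-wrapping behaviour of the local base64 implementation.
b64_file() {
    base64 < "$1" | tr -d '\n'
}

# Example (paths are assumptions, adjust to your etcd TLS files):
#   b64_file /etc/kubernetes/ssl/etcd-key.pem
#   b64_file /etc/kubernetes/ssl/etcd.pem
#   b64_file /etc/kubernetes/ssl/ca.pem
```

Each value must be a single unbroken string, which is why the line breaks are removed.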

Common calico commands

Install calicoctl:

curl -O -L https://github.com/projectcalico/calicoctl/releases/download/v3.2.1/calicoctl
chmod +x calicoctl
mv calicoctl /usr/local/bin/

https://docs.projectcalico.org/v3.1/usage/calicoctl/configure/etcd

Create the calicoctl.cfg file

vim /etc/calico/calicoctl.cfg

apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  etcdEndpoints: https://10.39.10.154:2379,https://10.39.10.156:2379,https://10.39.10.160:2379
  etcdKeyFile: /etc/kubernetes/ssl/etcd-key.pem
  etcdCertFile: /etc/kubernetes/ssl/etcd.pem
  etcdCACertFile: /etc/kubernetes/ssl/ca.pem

//You can now run calicoctl node status