Contents

- 1, Kubeadm deployment
- 1.1 Environment preparation
- 1.2 Install Docker on all nodes
- 1.3 Install kubeadm, kubelet and kubectl on all nodes
- 1.4 Deploy the K8S cluster
- 1.4.1 On the master node, upload the kubeadm-basic.images.tar.gz package to the /opt directory
- 1.4.2 Copy the images to the worker nodes
- 1.4.3 Initialize the cluster with kubeadm
- Method 2
- 1.4.4 Set up kubectl
- 1.4.5 Deploy the flannel network plug-in on all nodes
- 1.4.5 Test pod creation
- 1.4.6 Expose the service through a port
- 1.4.7 Scale out to 3 replicas
- 2, Install the Dashboard
- 3, Install the Harbor private registry
- 4, Kernel parameter optimization

1, Kubeadm deployment

1.1 Environment preparation

| Host | IP | Components |
|---|---|---|
| master (2C/4G; kubeadm requires at least 2 CPU cores) | 192.168.80.11 | docker, kubeadm, kubelet, kubectl, flannel |
| node01 (2C/2G) | 192.168.80.12 | docker, kubeadm, kubelet, kubectl, flannel |
| node02 (2C/2G) | 192.168.80.13 | docker, kubeadm, kubelet, kubectl, flannel |
| Harbor node (hub.lk.com) | 192.168.80.14 | docker, docker-compose, harbor-offline-v1.2.2 |

1. Install Docker and kubeadm on all nodes
2. Deploy the Kubernetes master
3. Deploy the container network plug-in
4. Deploy the Kubernetes nodes and join them to the cluster
5. Deploy the Dashboard web UI to view Kubernetes resources visually
6. Deploy the Harbor private registry to store images

#On all nodes: stop the firewall and flush its rules, put SELinux into permissive mode, and turn off swap
systemctl stop firewalld
systemctl disable firewalld
setenforce 0
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
#Swap must be turned off
swapoff -a
#Turn swap off permanently; in the sed replacement, & stands for the whole matched line
sed -ri 's/.*swap.*/#&/' /etc/fstab
#Load the ip_vs modules
for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done
#Set the host name (run the matching command on each node)
hostnamectl set-hostname master01
hostnamectl set-hostname node01
hostnamectl set-hostname node02
#Edit the hosts file on all nodes
vim /etc/hosts
192.168.80.11 master01
192.168.80.12 node01
192.168.80.13 node02
#Adjust kernel parameters; the net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
cat > /etc/sysctl.d/kubernetes.conf << EOF
#Pass bridged traffic to the iptables chains
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
#Disable IPv6
net.ipv6.conf.all.disable_ipv6=1
net.ipv4.ip_forward=1
EOF
#Apply the parameters
sysctl --system
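The sed command edits /etc/fstab in place. Previewing its effect first can be worthwhile. The sketch below runs the same expression on a scratch file so you can confirm that only lines mentioning "swap" get a leading `#` (the `&` re-inserts the whole matched line after it). The function name `comment_out_swap` and the sample fstab content are made up for illustration.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE: prefixes '#' to every line of FILE that mentions "swap",
# using the same sed expression as the permanent swap-off step.
comment_out_swap() {
  sed -ri 's/.*swap.*/#&/' "$1"
}

# Hypothetical sample fstab, written to a temporary file for a dry run.
sample=$(mktemp)
cat > "$sample" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
UUID=1234-abcd          /boot xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

comment_out_swap "$sample"
cat "$sample"
```

Note that the expression matches any line containing "swap". This includes lines that are already commented, which simply gain a second `#`.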

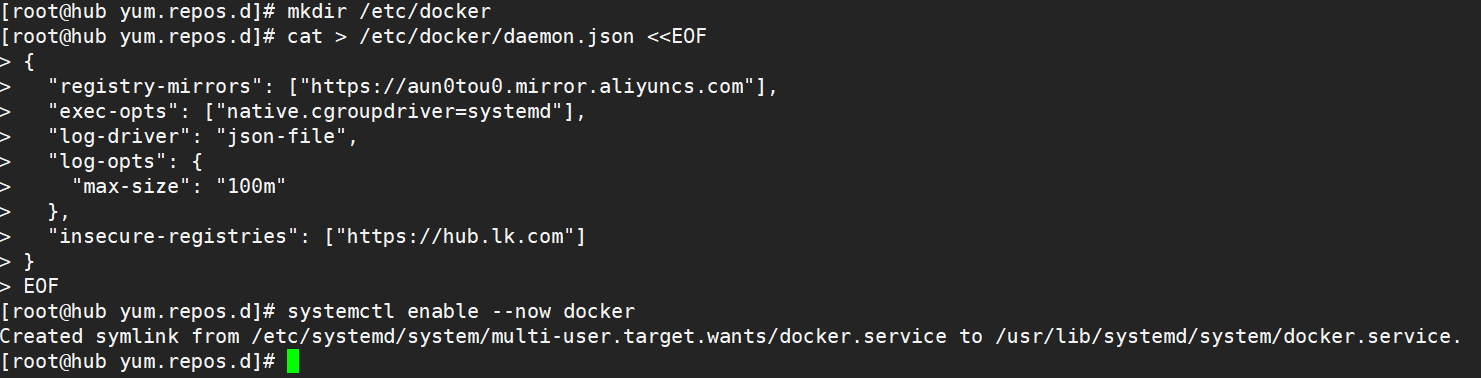

1.2. Install Docker on all nodes

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

mkdir /etc/docker

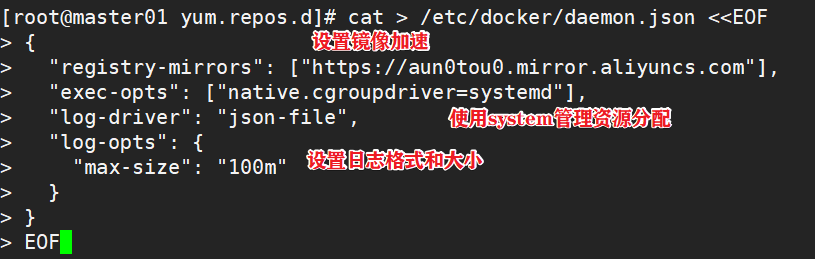

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://aun0tou0.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF

#Use the systemd cgroup driver for resource control: compared with cgroupfs, systemd limits CPU, memory and other resources more simply and is more mature and stable.

#Store container logs in json-file format, capping each log file at 100 MB; Kubernetes links them into the /var/log/containers directory, which makes them easy for ELK and other log systems to collect.

systemctl daemon-reload

systemctl enable --now docker.service

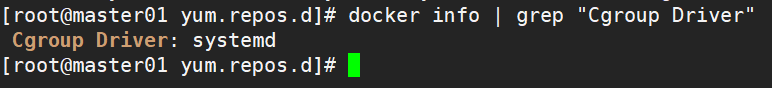

docker info | grep "Cgroup Driver"
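A scripted version of this check can help when several nodes need verifying. The sketch below pulls the driver name out of the `docker info` text so it can be compared with `systemd`. The function name `cgroup_driver_of` is hypothetical. The sketch assumes the output contains a line of the form `Cgroup Driver: <name>`, as the grep above expects.

```shell
#!/usr/bin/env bash
# cgroup_driver_of: reads `docker info` output on stdin and prints the value of
# the "Cgroup Driver:" line (for example systemd or cgroupfs).
cgroup_driver_of() {
  awk -F': *' '/^[ \t]*Cgroup Driver:/ { print $2; exit }'
}

# Usage on each node after restarting Docker:
# driver=$(docker info 2>/dev/null | cgroup_driver_of)
# [ "$driver" = "systemd" ] || echo "cgroup driver is '$driver', expected systemd" >&2
```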

1.3 Install kubeadm, kubelet and kubectl on all nodes

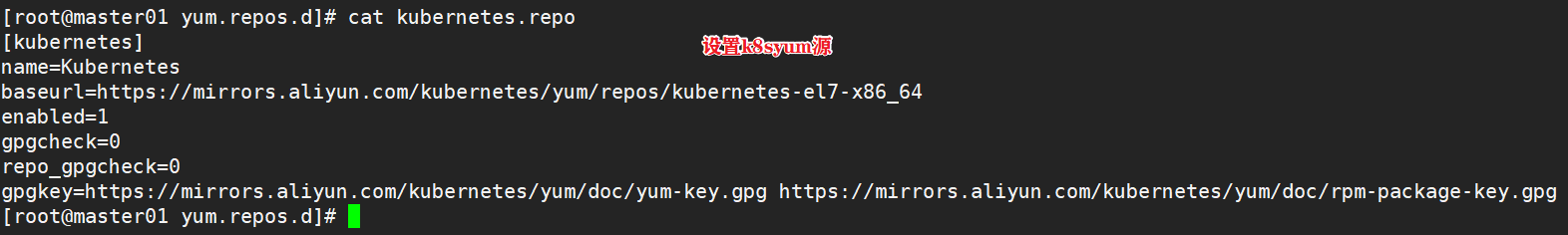

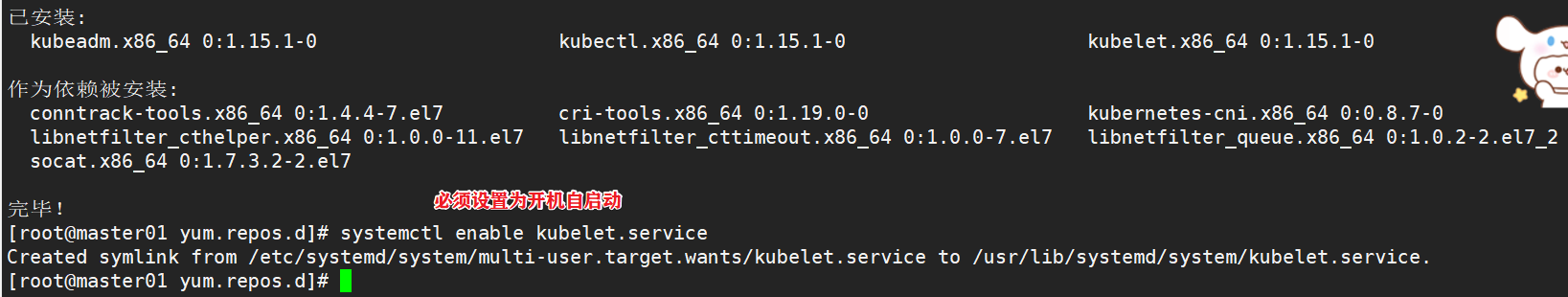

#Define the Kubernetes repository
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubelet-1.15.1 kubeadm-1.15.1 kubectl-1.15.1
#Enable kubelet to start at boot
systemctl enable kubelet.service
#A K8S cluster installed with kubeadm runs its components as Pods (that is, in containers), so kubelet must be set to start automatically
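The three packages are pinned to 1.15.1 on purpose. It may therefore be worth confirming that every node really ended up with that version before running kubeadm. A minimal sketch follows, assuming `rpm -q` prints names in the usual `name-version-release.arch` form (for example `kubelet-1.15.1-0.x86_64`). The function name `all_at_version` is hypothetical.

```shell
#!/usr/bin/env bash
# all_at_version VERSION: reads `rpm -q kubelet kubeadm kubectl` output on stdin
# and succeeds only if every line names one of the three packages at VERSION.
all_at_version() {
  local want="$1" line count=0 bad=0
  while IFS= read -r line; do
    [ -n "$line" ] || continue
    count=$((count + 1))
    case "$line" in
      kubelet-"$want"-*|kubeadm-"$want"-*|kubectl-"$want"-*) ;;
      *) echo "unexpected: $line" >&2; bad=1 ;;
    esac
  done
  [ "$count" -gt 0 ] && [ "$bad" -eq 0 ]
}

# Usage on each node:
# rpm -q kubelet kubeadm kubectl | all_at_version 1.15.1 && echo "all three pinned to 1.15.1"
```

A line such as `package kubelet is not installed` also counts as a mismatch, so a missing package is caught as well.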

1.4. Deploy the K8S cluster

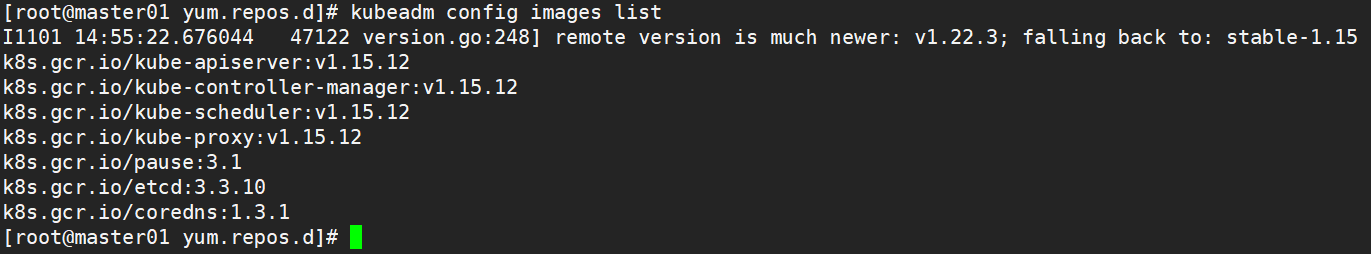

#View the images required for initialization
kubeadm config images list

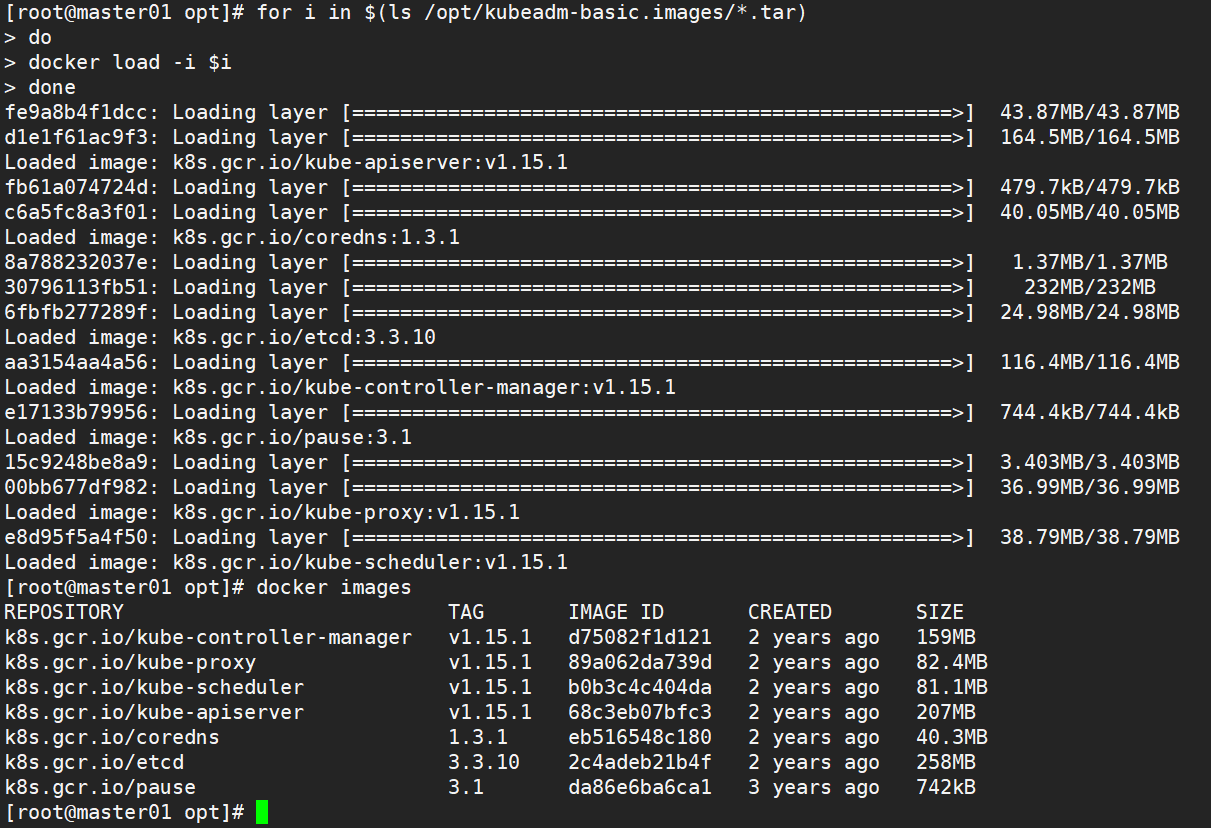

1.4.1. On the master node, upload the kubeadm-basic.images.tar.gz package to the /opt directory

cd /opt
tar zxvf kubeadm-basic.images.tar.gz
for i in $(ls /opt/kubeadm-basic.images/*.tar); do docker load -i $i; done
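The `for i in $(ls ...)` loop works for these file names. However, it splits on whitespace and silently does nothing if the directory holds no `.tar` files. Below is a slightly more defensive sketch of the same loop. The function name `load_images` and the `DOCKER` override (used here only to allow a dry run with `DOCKER=echo`) are assumptions, not part of the original steps.

```shell
#!/usr/bin/env bash
# load_images DIR: runs `docker load -i` for every *.tar file in DIR and fails
# if there are none. DOCKER defaults to docker; DOCKER=echo gives a dry run.
load_images() {
  local dir="$1" docker_cmd="${DOCKER:-docker}" found=0 tarball
  for tarball in "$dir"/*.tar; do
    [ -e "$tarball" ] || continue   # the glob matched nothing
    found=1
    $docker_cmd load -i "$tarball" || return 1
  done
  if [ "$found" -eq 0 ]; then
    echo "no .tar images found in $dir" >&2
    return 1
  fi
}

# Usage on the master node:
# load_images /opt/kubeadm-basic.images
```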

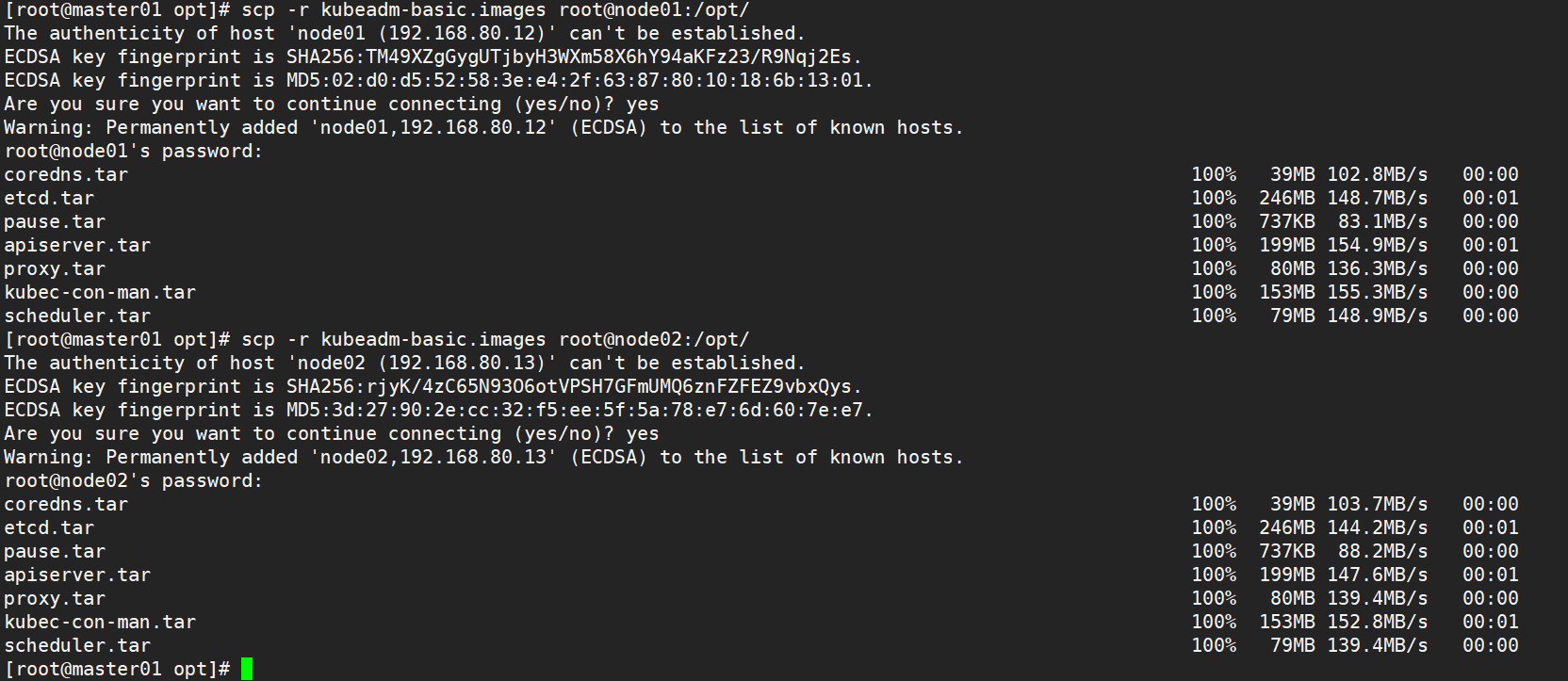

1.4.2 Copy the images to the worker nodes

scp -r kubeadm-basic.images root@node01:/opt
scp -r kubeadm-basic.images root@node02:/opt
#Then load the images on each worker node as well, with the same docker load loop as in 1.4.1
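Copying and then loading on each node can be folded into one loop run from the master. The sketch below assumes root SSH access to the nodes (as the scp commands already do) and the /opt/kubeadm-basic.images path from 1.4.1. The function name `distribute_images` and the `SCP`/`SSH` overrides (for a dry run) are hypothetical.

```shell
#!/usr/bin/env bash
# distribute_images NODE...: copies the unpacked image directory to each worker
# node and runs docker load there. SCP and SSH default to scp and ssh; setting
# them to "echo scp" / "echo ssh" prints the commands instead of running them.
distribute_images() {
  local scp_cmd="${SCP:-scp}" ssh_cmd="${SSH:-ssh}" src=/opt/kubeadm-basic.images node
  for node in "$@"; do
    $scp_cmd -r "$src" "root@${node}:/opt" || return 1
    $ssh_cmd "root@${node}" \
      'for i in /opt/kubeadm-basic.images/*.tar; do docker load -i "$i"; done' || return 1
  done
}

# Usage on the master node:
# distribute_images node01 node02
```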

1.4.3 Initialize the cluster with kubeadm

Method 1

#Run on the master node only

kubeadm config print init-defaults > /opt/kubeadm-config.yaml

cd /opt/

vim kubeadm-config.yaml

......
localAPIEndpoint:
  advertiseAddress: 192.168.80.11    #Specify the IP address of the master node
  bindPort: 6443
......
kubernetesVersion: v1.15.1           #Specify the Kubernetes version
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"         #Pod network segment; 10.244.0.0/16 matches the flannel default
  serviceSubnet: 10.96.0.0/16        #Service network segment
scheduler: {}
#Add the following at the end of the file
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs                           #Change the default kube-proxy mode to ipvs
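The vim edits above can also be applied non-interactively. This is handy when the config is regenerated often. The sketch below assumes the layout printed by `kubeadm config print init-defaults`: one `advertiseAddress`, `kubernetesVersion` and `serviceSubnet` line each, and no `podSubnet` line yet. The function name `patch_kubeadm_config` is hypothetical, and the result should still be reviewed before running kubeadm init.

```shell
#!/usr/bin/env bash
# patch_kubeadm_config FILE MASTER_IP K8S_VERSION POD_CIDR SVC_CIDR
# Rewrites the fields edited by hand in Method 1, inserts podSubnet after
# dnsDomain with the same indentation, and appends the kube-proxy ipvs section.
patch_kubeadm_config() {
  local file="$1" ip="$2" ver="$3" pod="$4" svc="$5" tmp
  tmp=$(mktemp) || return 1
  awk -v ip="$ip" -v ver="$ver" -v pod="$pod" -v svc="$svc" '
    /^[ \t]*advertiseAddress:/ { sub(/advertiseAddress:.*/, "advertiseAddress: " ip) }
    /^kubernetesVersion:/       { $0 = "kubernetesVersion: " ver }
    /^[ \t]*serviceSubnet:/     { sub(/serviceSubnet:.*/, "serviceSubnet: " svc) }
    { print }
    /^[ \t]*dnsDomain:/ {
      match($0, /^[ \t]*/)      # reuse the dnsDomain indentation
      print substr($0, 1, RLENGTH) "podSubnet: \"" pod "\""
    }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat >> "$tmp" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
  mv "$tmp" "$file"
}

# Usage on the master node:
# kubeadm config print init-defaults > /opt/kubeadm-config.yaml
# patch_kubeadm_config /opt/kubeadm-config.yaml 192.168.80.11 v1.15.1 10.244.0.0/16 10.96.0.0/16
```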

kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

#--experimental-upload-certs uploads the control-plane certificates to the cluster so they are distributed automatically when additional control-plane nodes join later. From Kubernetes v1.16 on, it is replaced by --upload-certs
#tee kubeadm-init.log saves a copy of the output to kubeadm-init.log
#View the kubeadm init log
less kubeadm-init.log
#Kubernetes configuration file directory
ls /etc/kubernetes/
#Directory holding the CA and other certificates and keys
ls /etc/kubernetes/pki
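Because the init output is saved to kubeadm-init.log, the worker join command can be pulled out of it rather than copied by hand. The sketch below joins backslash-continued lines into one command. It skips any join command that mentions `control-plane`, since kubeadm may print a separate control-plane join when certificates are uploaded. The function name `join_command_from_log` is hypothetical.

```shell
#!/usr/bin/env bash
# join_command_from_log LOG: prints the first worker `kubeadm join ...` command
# found in a saved kubeadm init log, folding backslash-continued lines together.
join_command_from_log() {
  awk '
    /^[ \t]*kubeadm join / { grab = 1; cmd = "" }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)                  # drop leading indentation
      cont = sub(/[ \t]*\\[ \t]*$/, "", line)   # strip a trailing backslash
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) {
        grab = 0
        if (cmd !~ /control-plane/) { print cmd; exit }
      }
    }
  ' "$1"
}

# Usage on the master node:
# join_command_from_log /opt/kubeadm-init.log
```

The token in that command expires (after 24 hours by default). If it has expired, `kubeadm token create --print-join-command` prints a fresh join command.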

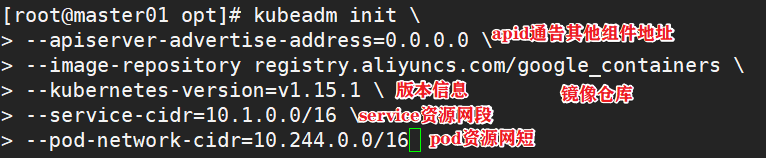

Method 2

#Run on the master node only

kubeadm init \
--apiserver-advertise-address=0.0.0.0 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version=v1.15.1 \
--service-cidr=10.1.0.0/16 \
--pod-network-cidr=10.244.0.0/16

--------------------------------------------------------------------------------------------

The kubeadm init command can initialize a cluster either from command-line flags or from a configuration file.

Optional parameters:

--apiserver-advertise-address: the IP address the API server advertises to the other components, normally the master node's IP used for communication inside the cluster. With 0.0.0.0, kubeadm uses the address of the node's default network interface

--apiserver-bind-port: the port the API server listens on, 6443 by default

--cert-dir: the directory for the SSL certificates used for communication, /etc/kubernetes/pki by default

--control-plane-endpoint: a shared endpoint for the control plane, either a load-balancer IP address or a DNS name; needed for highly available clusters

--image-repository: the registry to pull the control-plane images from, k8s.gcr.io by default

--kubernetes-version: the Kubernetes version to deploy

--pod-network-cidr: the Pod network range; it must match the setting of the Pod network plug-in. Flannel defaults to 10.244.0.0/16 and Calico to 192.168.0.0/16

--service-cidr: the Service network range

--service-dns-domain: the suffix of Service domain names, cluster.local by default

---------------------------------------------------------------------------------------------

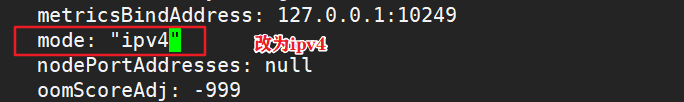

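With Method 2, edit the kube-proxy ConfigMap after initialization to turn on ipvs:

kubectl edit cm kube-proxy -n=kube-system

Change mode: "" to mode: ipvs. The running kube-proxy pods only pick up the change once they are recreated, for example by deleting them so that their DaemonSet starts new ones.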
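If you prefer not to open an editor, the same change can be piped through a small filter. The sketch below assumes the ConfigMap contains the default `mode: ""` line. It also assumes the kube-proxy pods carry the `k8s-app=kube-proxy` label that kubeadm normally applies. The function name `set_ipvs_mode` is hypothetical.

```shell
#!/usr/bin/env bash
# set_ipvs_mode: reads the kube-proxy ConfigMap YAML on stdin and rewrites the
# empty proxy mode (mode: "") to "ipvs"; every other line passes through unchanged.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*mode:)[[:space:]]*""[[:space:]]*$/\1 "ipvs"/'
}

# Usage on the master node: replace the ConfigMap, then recreate the kube-proxy
# pods so the DaemonSet starts new ones with the ipvs mode.
# kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
# kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```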

Output of kubeadm init (excerpt):

......

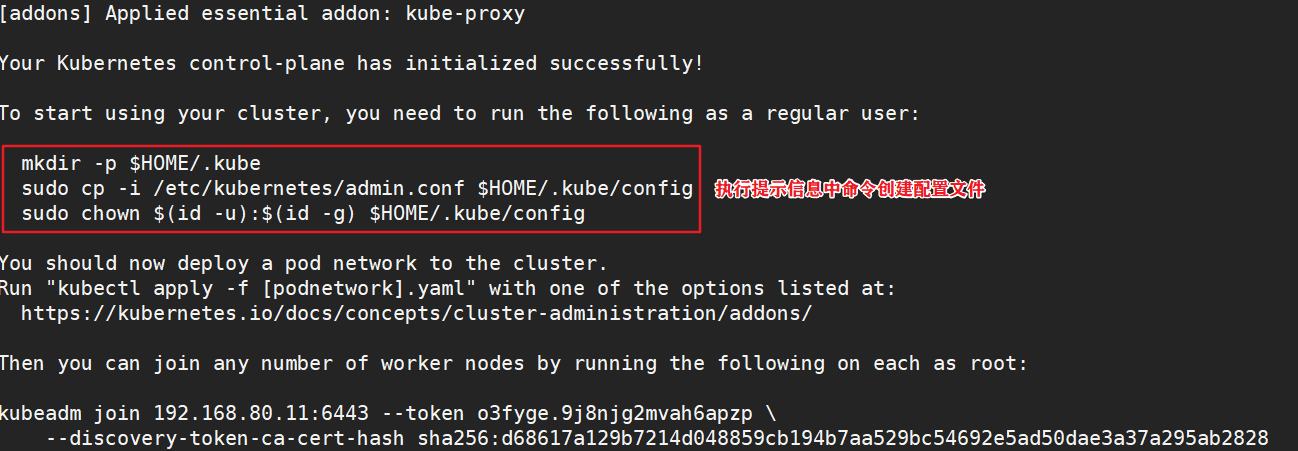

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

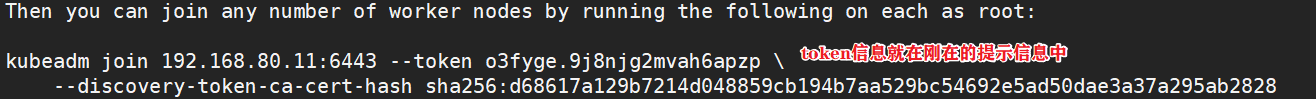

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.80.10:6443 --token rc0kfs.a1sfe3gl4dvopck5 \
    --discovery-token-ca-cert-hash sha256:864fe553c812df2af262b406b707db68b0fd450dc08b34efb73dd5a4771d37a2

1.4.4 Set up kubectl

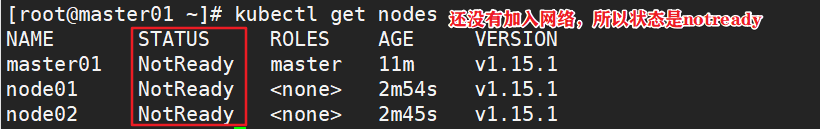

kubectl can only perform management operations after passing API server authentication and authorization. A cluster deployed with kubeadm generates an administrator-level authentication config file for it, /etc/kubernetes/admin.conf, which kubectl can load from its default path $HOME/.kube/config.

#Configure on master01
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
#Run the kubeadm join command on each worker node to join the cluster
kubeadm join 192.168.80.11:6443 --token o3fyge.9j8njg2mvah6apzp \
    --discovery-token-ca-cert-hash sha256:d68617a129b7214d048859cb194b7aa529bc54692e5ad50dae3a37a295ab2828

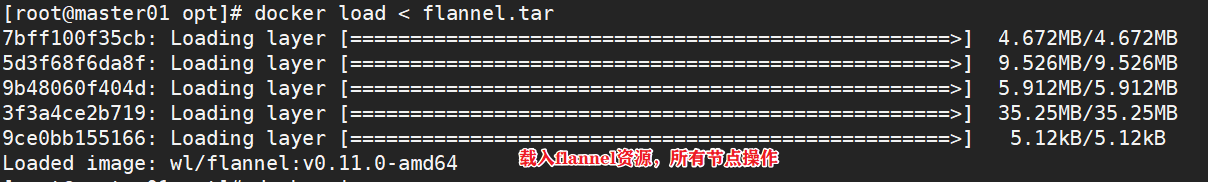

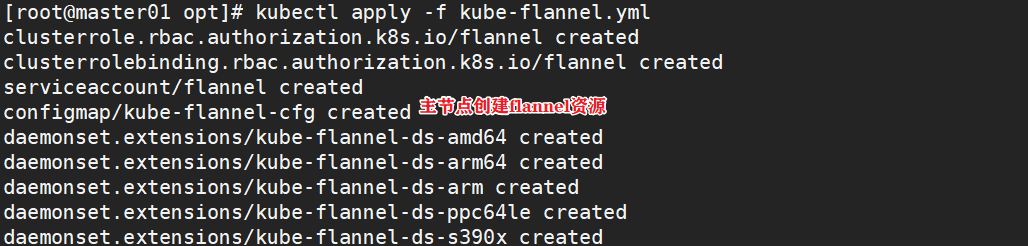

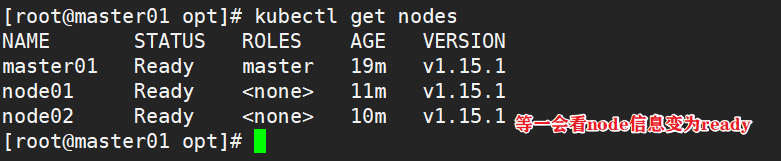

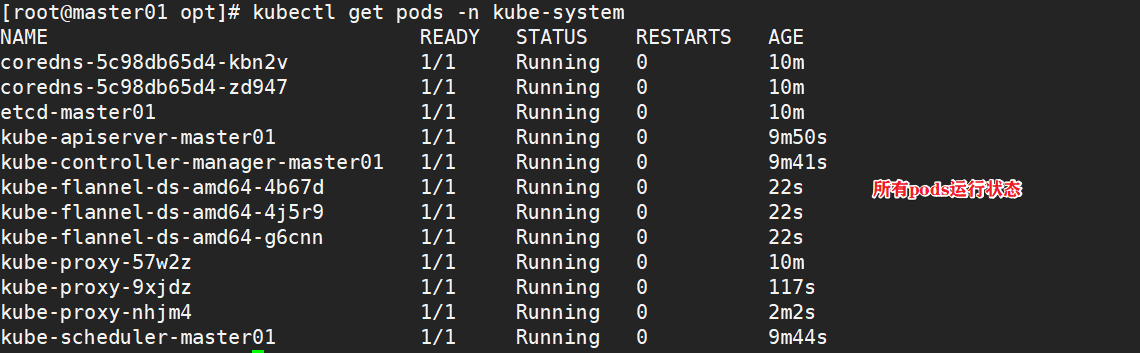

1.4.5 Deploy the flannel network plug-in on all nodes

Method 1:

#On all nodes, upload the flannel image flannel.tar to /opt; on the master node, also upload kube-flannel.yml
cd /opt
docker load < flannel.tar
#Create the flannel resources on the master node
kubectl apply -f kube-flannel.yml

Method 2:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
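Nodes usually stay NotReady until the network plug-in is running on them. Polling until every node reports Ready is therefore a convenient way to know when flannel is up. The sketch below assumes the default `kubectl get nodes --no-headers` columns, with STATUS in the second column. The function name `all_nodes_ready` is hypothetical.

```shell
#!/usr/bin/env bash
# all_nodes_ready: reads `kubectl get nodes --no-headers` output on stdin and
# succeeds only if there is at least one node and every STATUS column is Ready.
all_nodes_ready() {
  awk '
    NF { total++; if ($2 != "Ready") { print "not ready: " $1 " (" $2 ")" > "/dev/stderr"; bad++ } }
    END { if (total > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Usage on the master node, polling every 10 seconds:
# until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done
```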

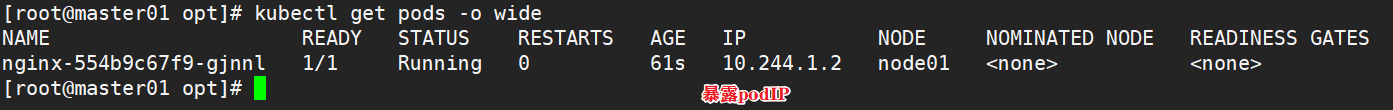

1.4.5 Test pod creation

kubectl create deployment nginx --image=nginx

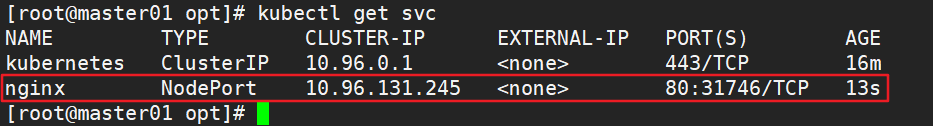

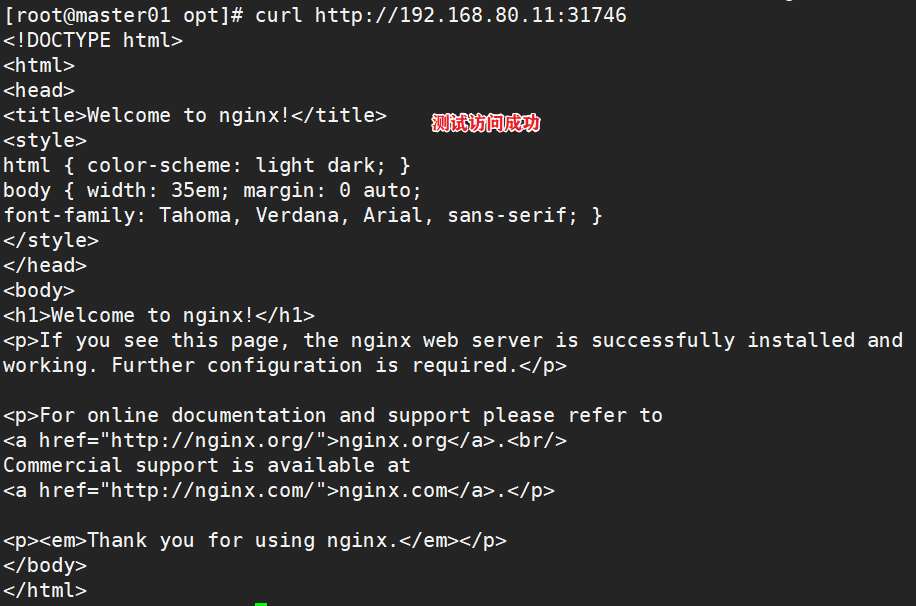

1.4.6 Expose the service through a port

kubectl expose deployment nginx --port=80 --type=NodePort
#Test access; the NodePort (32698 here) is assigned randomly, so look it up with kubectl get svc first
curl http://192.168.80.12:32698
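Because the NodePort changes from one cluster to the next, reading it from the Service avoids hard-coding 32698. The sketch below parses the PORT(S) column of `kubectl get svc` (for example `80:32698/TCP`). It then suggests a loop that checks every node. The function name `nodeport_of` is hypothetical. `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` is an alternative that avoids text parsing.

```shell
#!/usr/bin/env bash
# nodeport_of: reads a `kubectl get svc <name> --no-headers` line on stdin and
# prints the NodePort from the first PORT(S) entry (80:32698/TCP -> 32698).
nodeport_of() {
  awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^[0-9]+:[0-9]+\//) { split($i, p, /[:\/]/); print p[2]; exit } }'
}

# Usage on the master node: look up the port, then probe every node.
# port=$(kubectl get svc nginx --no-headers | nodeport_of)
# for ip in 192.168.80.11 192.168.80.12 192.168.80.13; do
#   curl -s -o /dev/null -w "$ip %{http_code}\n" "http://$ip:$port"
# done
```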

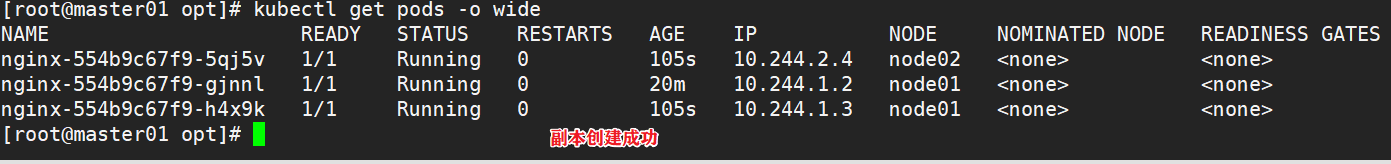

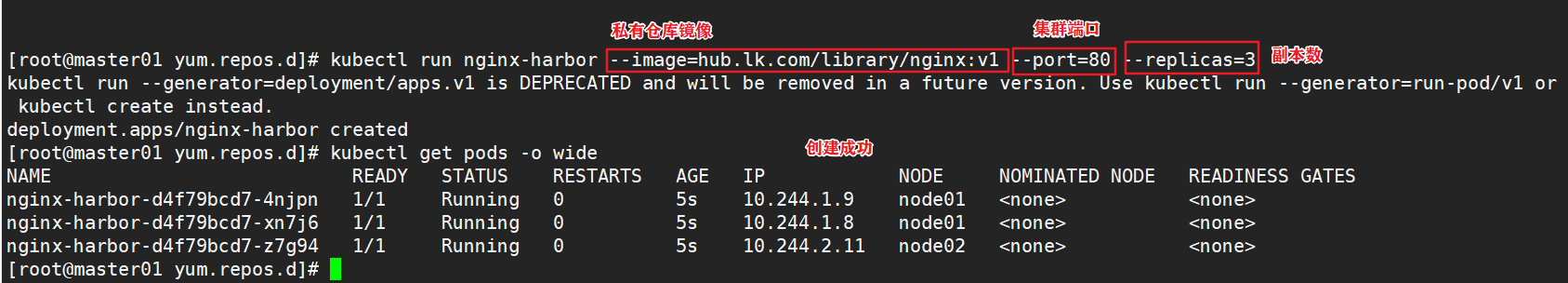

1.4.7 Scale out to 3 replicas

kubectl scale deployment nginx --replicas=3

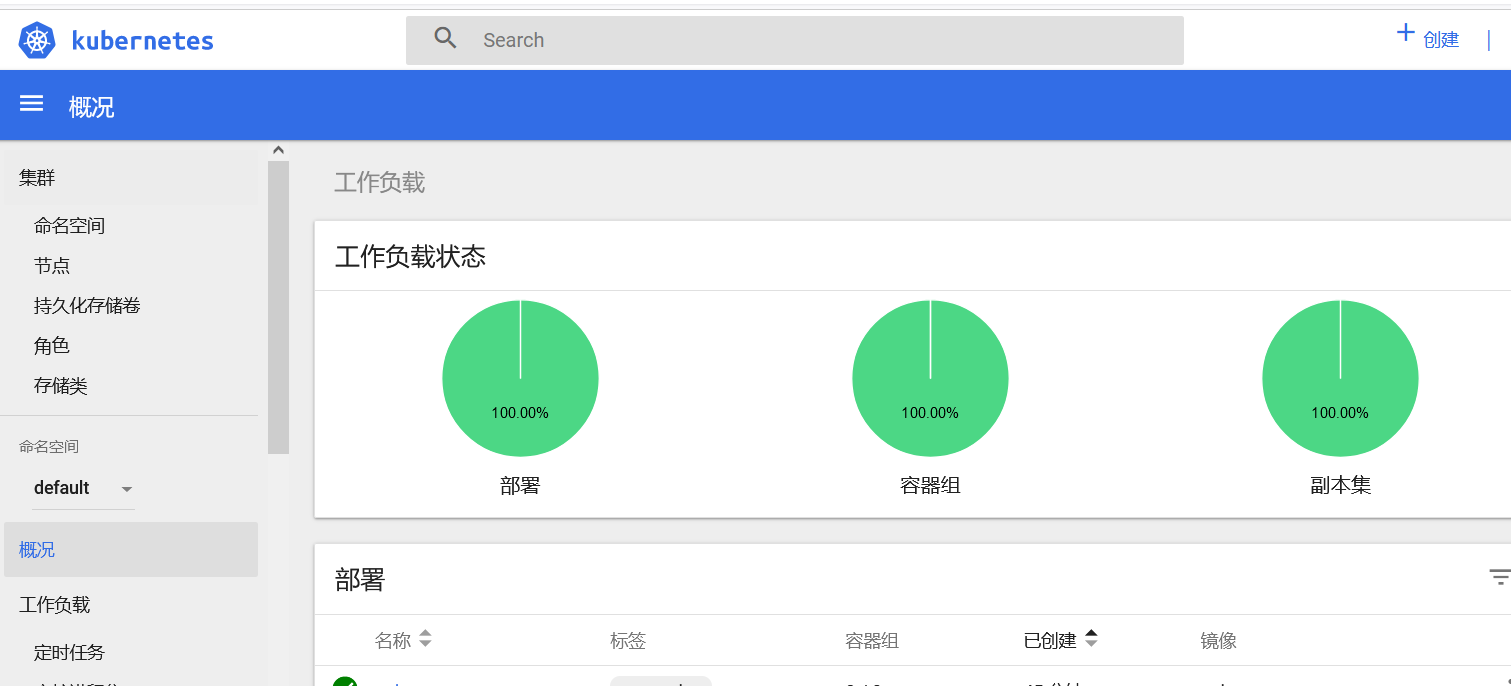

2, Install the Dashboard

2.1. Install the Dashboard on all nodes

Method 1

#On all nodes, upload the dashboard image dashboard.tar to /opt; on the master node, also upload kubernetes-dashboard.yaml
cd /opt/
docker load < dashboard.tar
#Create the Dashboard resources on the master node
kubectl apply -f kubernetes-dashboard.yaml

Method 2:

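kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

Note: the v2.0.0 manifest deploys the Dashboard into the kubernetes-dashboard namespace behind a ClusterIP Service. Its pods therefore show up in kubernetes-dashboard rather than kube-system, and it is not reachable on port 30001 until that Service is changed to type NodePort.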

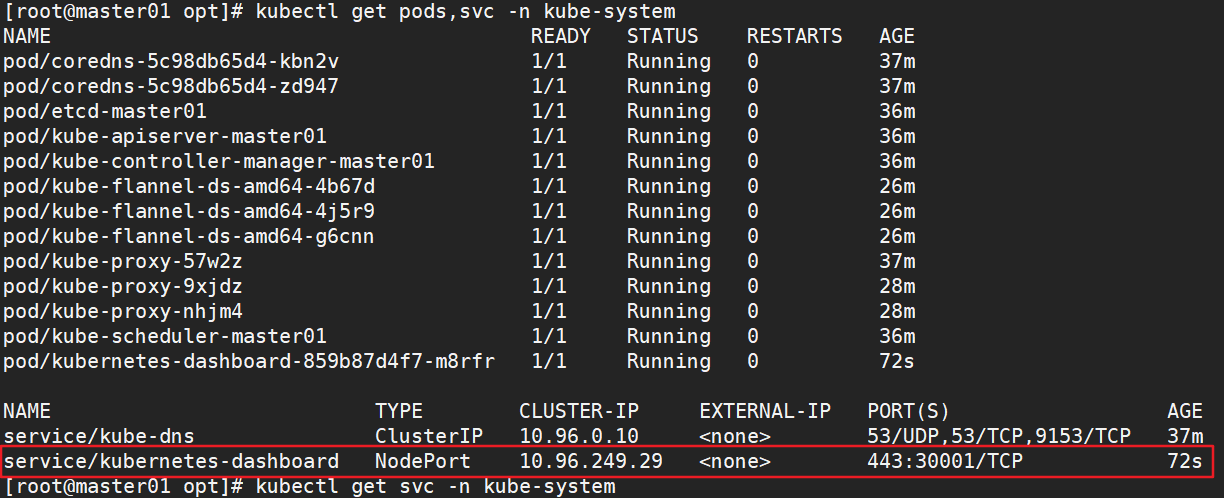

2.1.1. Check the status of all pods and services

kubectl get pods,svc -n kube-system -o wide

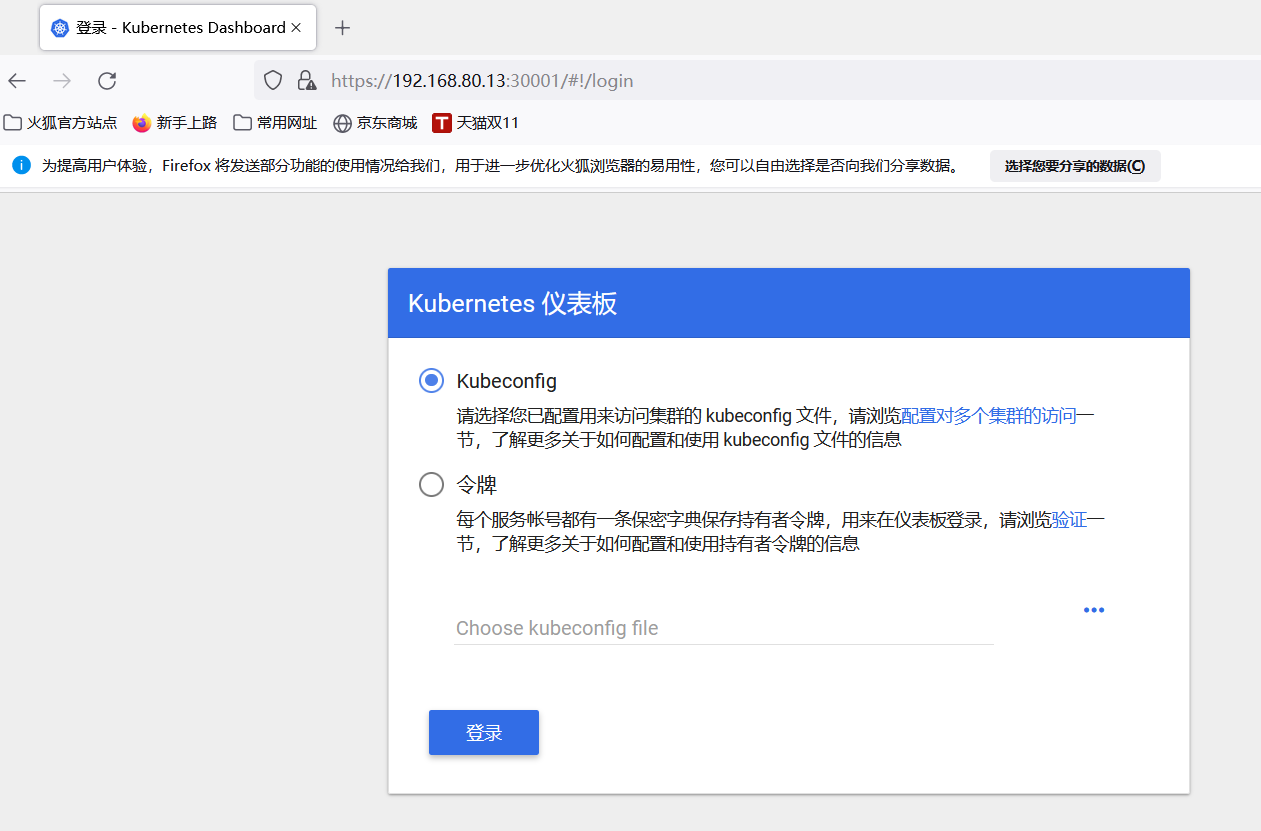

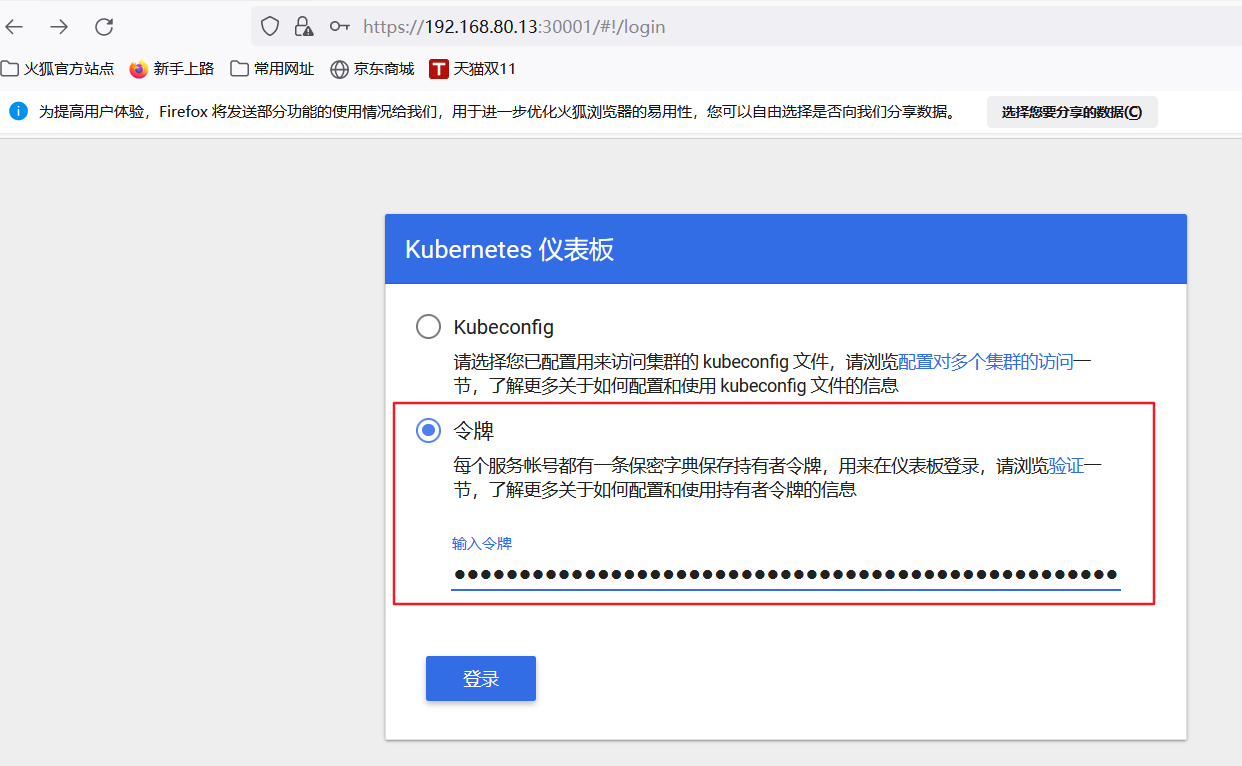

2.2. Access the Dashboard with Firefox or 360 Browser

https://192.168.80.13:30001

2.3. Create a service account and bind it to the default cluster-admin cluster role

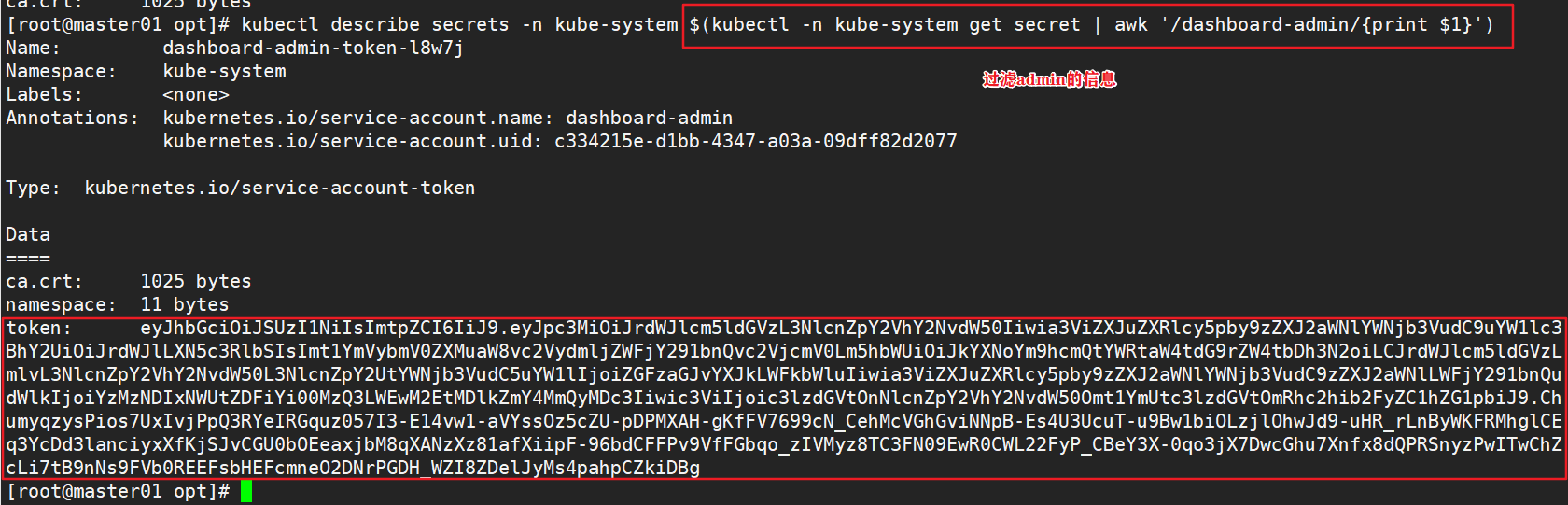

kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

2.4. Get the login token

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

#Copy the token and use it to log in to the Dashboard
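In Kubernetes 1.15, a token Secret is created automatically for every service account, which is what the describe command above prints. To avoid picking the token out of the surrounding text by eye, a small filter can print just the `token:` value. The function name `token_from_describe` is hypothetical.

```shell
#!/usr/bin/env bash
# token_from_describe: reads `kubectl describe secret ...` output on stdin and
# prints only the value of the token: field.
token_from_describe() {
  awk '$1 == "token:" { print $2; exit }'
}

# Usage on the master node:
# kubectl describe secrets -n kube-system \
#   $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | token_from_describe
```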

3, Install the Harbor private registry

3.1. Prepare the Harbor node

#Set the host name

hostnamectl set-hostname hub.lk.com

#Add the host name mapping on all nodes

echo '192.168.80.14 hub.lk.com' >> /etc/hosts
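Appending with `echo ... >> /etc/hosts` adds another copy of the line every time the step is re-run. The sketch below only appends when no uncommented line already maps the name. The function name `add_host_entry` and its optional file argument (used so it can be tried on a scratch file) are hypothetical.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]: appends "IP NAME" to FILE (default /etc/hosts)
# unless an uncommented line already lists NAME.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on every node:
# add_host_entry 192.168.80.14 hub.lk.com
```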

#Install docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

mkdir /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://aun0tou0.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"insecure-registries": ["hub.lk.com"]

}

EOF

systemctl start docker

systemctl enable docker

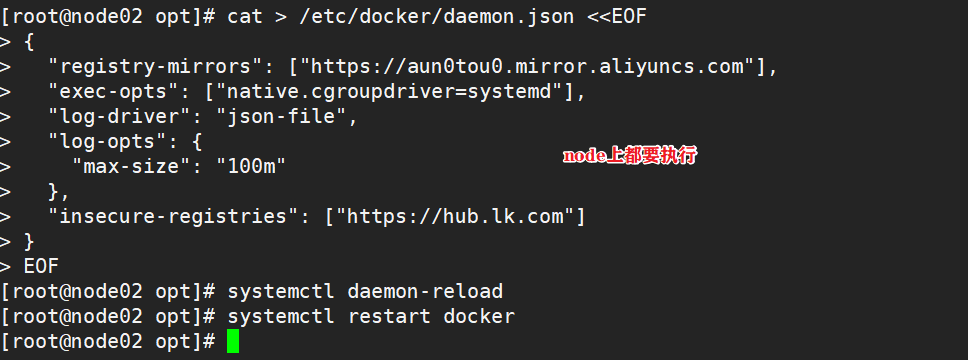

3.2. On all Kubernetes nodes, modify the Docker configuration file to add the private registry

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://aun0tou0.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"insecure-registries": ["hub.lk.com"]

}

EOF

systemctl daemon-reload

systemctl restart docker
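After the restart, `docker info` lists the registries Docker treats as insecure. Checking that hub.lk.com appears there confirms daemon.json was picked up. The sketch below assumes the usual layout, where registries are listed one per line under an `Insecure Registries:` header until the next `Key:` line. The function name `registry_is_insecure` is hypothetical.

```shell
#!/usr/bin/env bash
# registry_is_insecure NAME: reads `docker info` output on stdin and succeeds if
# NAME is listed under "Insecure Registries:".
registry_is_insecure() {
  awk -v want="$1" '
    /^[ \t]*Insecure Registries:/ { inlist = 1; next }
    inlist && (/:[ \t]*$/ || /:[ \t]/) { inlist = 0 }   # next "Key:" line ends the list
    inlist { gsub(/[ \t]/, ""); if ($0 == want) found = 1 }
    END { exit !found }
  '
}

# Usage on each node:
# docker info 2>/dev/null | registry_is_insecure hub.lk.com && echo "hub.lk.com is configured"
```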

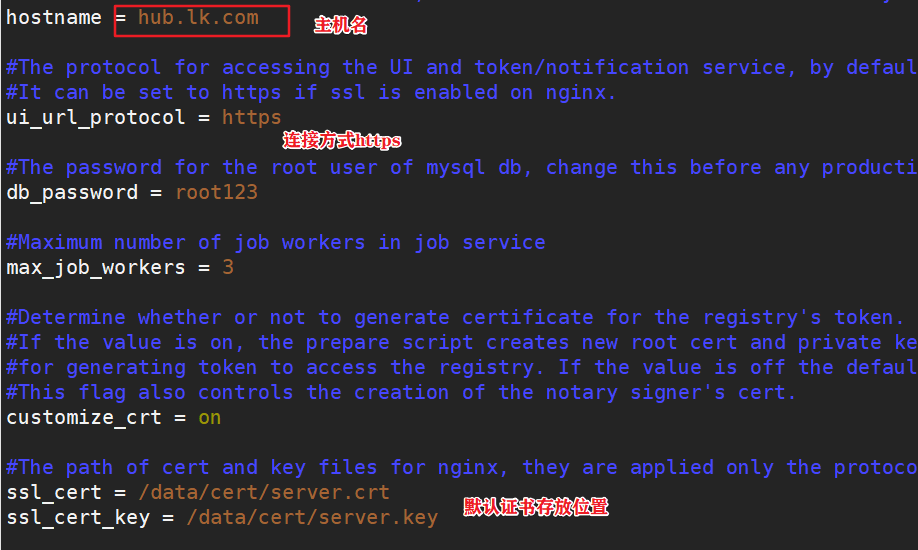

3.3. Install Harbor

3.3.1. Upload harbor-offline-installer-v1.2.2.tgz and the docker-compose binary to the /opt directory

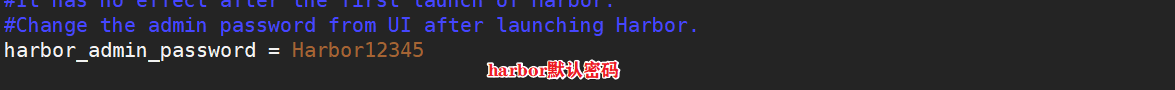

cd /opt
cp docker-compose /usr/local/bin/
chmod +x /usr/local/bin/docker-compose
tar zxvf harbor-offline-installer-v1.2.2.tgz
cd harbor/
vim harbor.cfg
#Set the following values in harbor.cfg
hostname = hub.lk.com
ui_url_protocol = https
ssl_cert = /data/cert/server.crt
ssl_cert_key = /data/cert/server.key
harbor_admin_password = Harbor12345
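The five harbor.cfg values can also be set without vim. The sketch below rewrites a `key = value` line in place and fails if the key is missing, so a typo does not go unnoticed. The function name `set_cfg` is hypothetical, and it assumes harbor.cfg uses the `key = value` layout shown above.

```shell
#!/usr/bin/env bash
# set_cfg FILE KEY VALUE: rewrites the uncommented "KEY = ..." line in an
# INI-style file such as harbor.cfg and leaves every other line unchanged.
set_cfg() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    { split($0, kv, "="); name = kv[1]; gsub(/^[ \t]+|[ \t]+$/, "", name) }
    name == k && $0 !~ /^[ \t]*#/ { print k " = " v; hit = 1; next }
    { print }
    END { exit !hit }
  ' "$file" > "$tmp" && mv "$tmp" "$file" || { rm -f "$tmp"; echo "key not found: $key" >&2; return 1; }
}

# Usage in /opt/harbor:
# set_cfg harbor.cfg hostname hub.lk.com
# set_cfg harbor.cfg ui_url_protocol https
```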

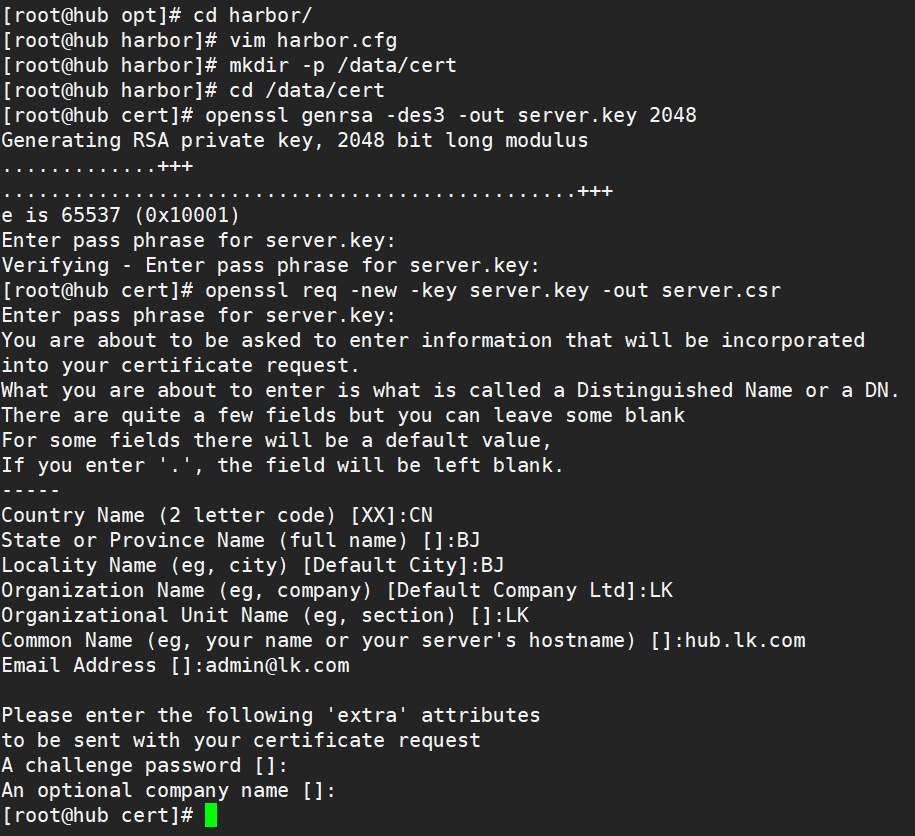

3.3.2. Generate the private key and the certificate signing request

#Certificate directory
mkdir -p /data/cert
cd /data/cert
#Private key
openssl genrsa -des3 -out server.key 2048
Enter the password twice: 123456
#Certificate signing request
openssl req -new -key server.key -out server.csr
Enter the private key password: 123456
Country Name: CN
State or Province Name: BJ
Locality (city) Name: BJ
Organization Name: LK
Organizational Unit Name: LK
Common Name (domain name): hub.lk.com
Email Address: admin@lk.com
Press Enter to accept the defaults for all other prompts

3.3.3. Back up the private key, remove its passphrase, and self-sign the certificate

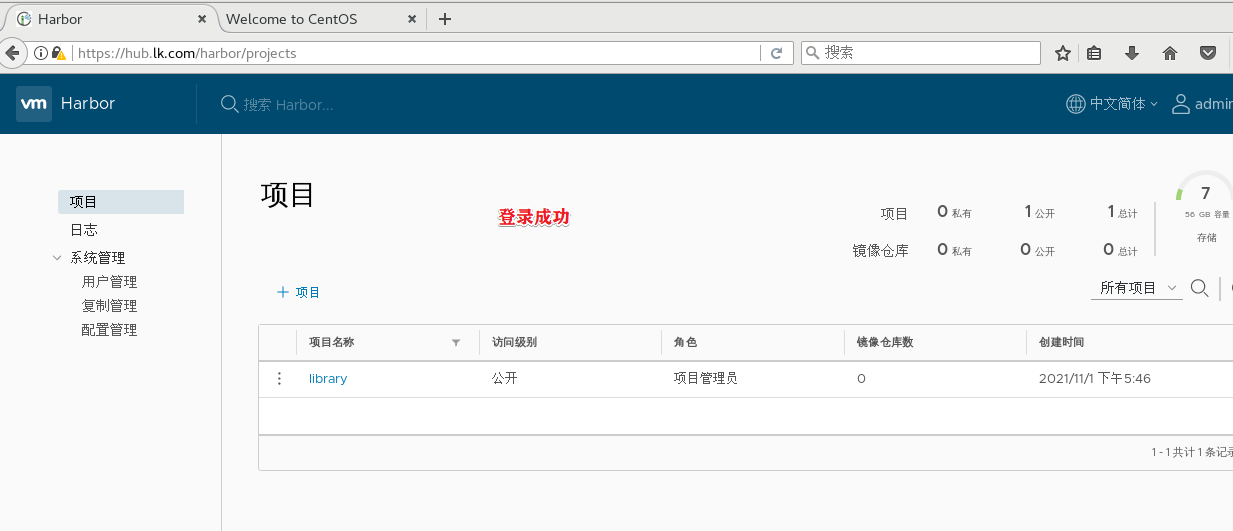

#Back up the private key
cp server.key server.key.org
#Remove the private key passphrase
openssl rsa -in server.key.org -out server.key
Enter the private key password: 123456
#Sign the certificate
openssl x509 -req -days 1000 -in server.csr -signkey server.key -out server.crt
chmod +x /data/cert/*
cd /opt/harbor/
./install.sh
#Browser access: https://hub.lk.com
#User name: admin
#Password: Harbor12345
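Steps 3.3.2 and 3.3.3 can be done without answering prompts. In the sketch below, the subject fields from 3.3.2 are passed with `-subj`, and the `-des3` passphrase is skipped because 3.3.3 removes it again anyway. The sketch then checks that the certificate and key belong together by comparing their RSA moduli. The function name `make_harbor_cert` is hypothetical.

```shell
#!/usr/bin/env bash
# make_harbor_cert DIR CN: creates an unencrypted RSA key, a CSR with the subject
# used in 3.3.2, and a self-signed certificate valid for 1000 days, then checks
# that certificate and key share the same RSA modulus.
make_harbor_cert() {
  local dir="$1" cn="$2"
  mkdir -p "$dir" || return 1
  openssl genrsa -out "$dir/server.key" 2048 2>/dev/null || return 1
  openssl req -new -key "$dir/server.key" -out "$dir/server.csr" \
    -subj "/C=CN/ST=BJ/L=BJ/O=LK/OU=LK/CN=${cn}/emailAddress=admin@lk.com" || return 1
  openssl x509 -req -days 1000 -in "$dir/server.csr" \
    -signkey "$dir/server.key" -out "$dir/server.crt" 2>/dev/null || return 1
  # A certificate and key match when their moduli are identical.
  [ "$(openssl x509 -noout -modulus -in "$dir/server.crt")" = \
    "$(openssl rsa -noout -modulus -in "$dir/server.key" 2>/dev/null)" ]
}

# Usage on the Harbor node:
# make_harbor_cert /data/cert hub.lk.com
```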

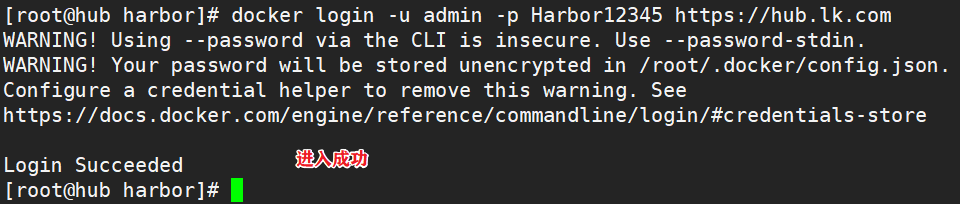

3.4. Test logging in to Harbor from a node

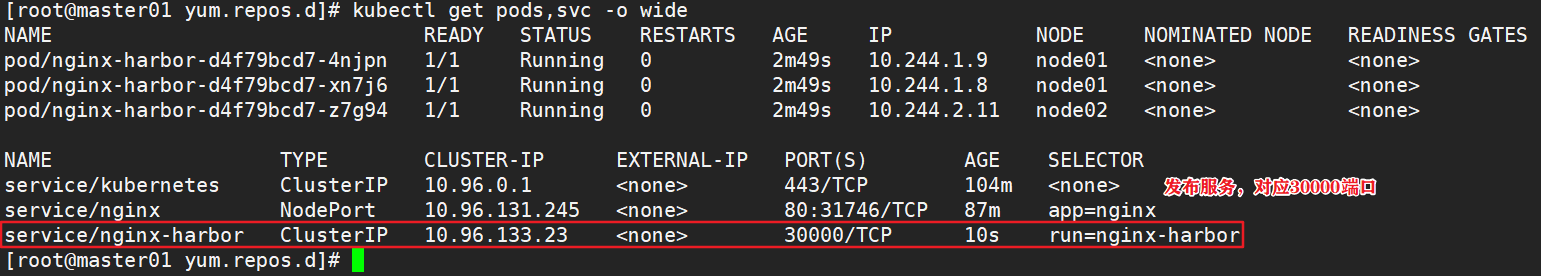

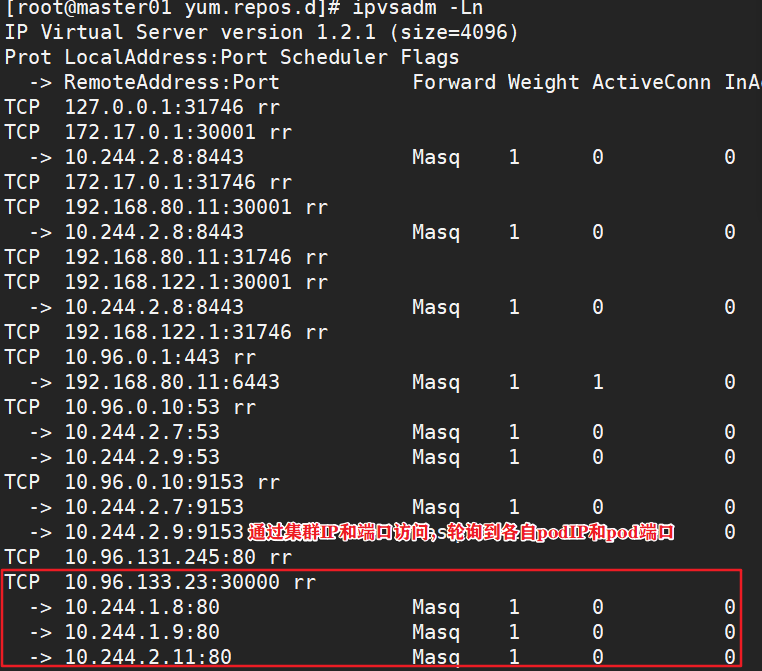

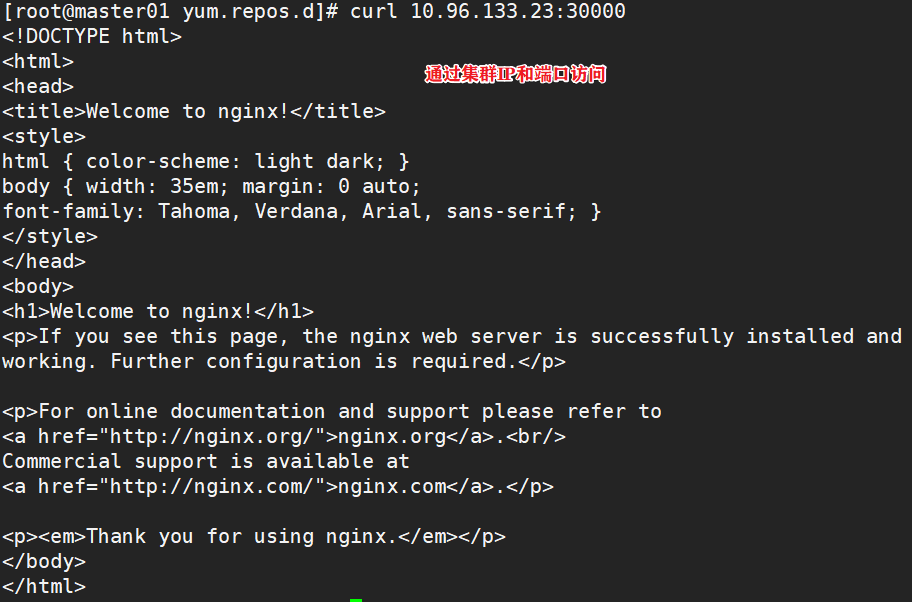

docker login -u admin -p Harbor12345 https://hub.lk.com
#Upload an image
docker tag nginx:latest hub.lk.com/library/nginx:v1
docker push hub.lk.com/library/nginx:v1
#On the master node, delete the nginx deployment created earlier
kubectl delete deployment nginx
kubectl run nginx-deployment --image=hub.lk.com/library/nginx:v1 --port=80 --replicas=3
#Publish the service
kubectl expose deployment nginx-deployment --port=30000 --target-port=80

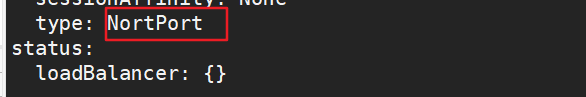

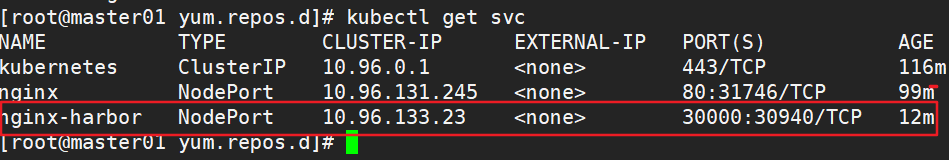

3.5 Change the Service type to NodePort

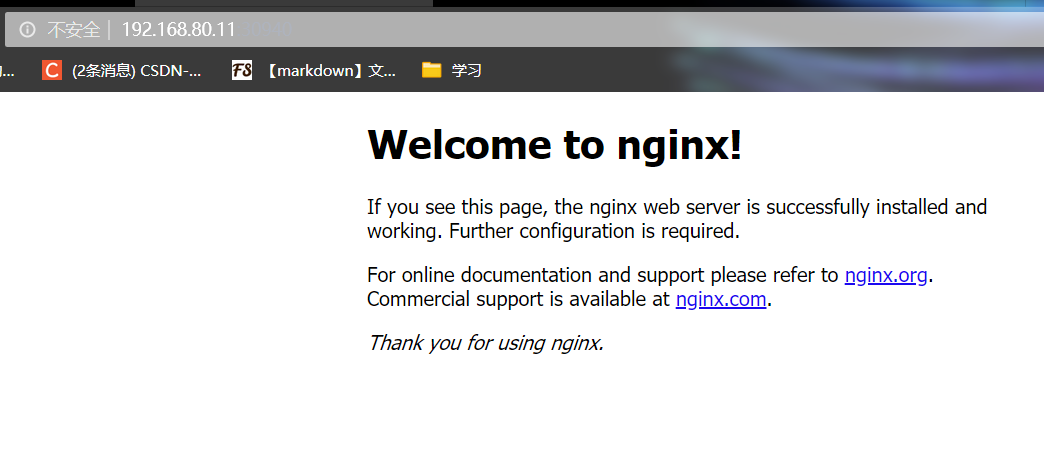

kubectl edit svc nginx-deployment
#Change type: ClusterIP to the following
type: NodePort
kubectl get svc
#Browser access (30940 is the NodePort assigned in this example; use the one kubectl get svc shows)
192.168.80.11:30940
192.168.80.12:30940
192.168.80.13:30940
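`kubectl patch` can make the same change without an editor, which is easier to script. Kubernetes then assigns the NodePort just as it does after the manual edit. The function name `make_nodeport` and the `KUBECTL` override (for a dry run with `KUBECTL="echo kubectl"`) are hypothetical.

```shell
#!/usr/bin/env bash
# make_nodeport SERVICE [NAMESPACE]: switches a Service to type NodePort with a
# patch instead of `kubectl edit`. KUBECTL defaults to kubectl.
make_nodeport() {
  local svc="$1" ns="${2:-default}" kubectl_cmd="${KUBECTL:-kubectl}"
  $kubectl_cmd patch svc "$svc" -n "$ns" -p '{"spec":{"type":"NodePort"}}'
}

# Usage on the master node, then read back the assigned port:
# make_nodeport nginx-deployment
# kubectl get svc nginx-deployment -o jsonpath='{.spec.ports[0].nodePort}'
```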

4, Kernel parameter optimization

cat > /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
#tcp_tw_recycle only exists on kernels older than 4.12, such as the CentOS 7 kernel
net.ipv4.tcp_tw_recycle=0
#Avoid swap; use it only when the system is about to run out of memory (OOM)
vm.swappiness=0
#Do not check whether physical memory is sufficient
vm.overcommit_memory=1
#Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
#Maximum number of file handles system-wide
fs.file-max=52706963
#Maximum number of file descriptors a single process can open
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
#Apply the parameters
sysctl --system
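sysctl only ignores lines whose first non-whitespace character is `#` or `;`. A comment written after a value on the same line is therefore passed along as part of that value. The sketch below flags such lines before the file is applied. The function name `find_inline_comments` is hypothetical.

```shell
#!/usr/bin/env bash
# find_inline_comments FILE: prints "line N: ..." for every setting line that
# also carries a "#" after the key=value part; exits 1 if any were found.
find_inline_comments() {
  awk '
    /^[ \t]*[#;]/ { next }        # whole-line comments are fine
    /=/ && /#/    { printf "line %d: %s\n", NR, $0; bad = 1 }
    END { exit bad }
  ' "$1"
}

# Usage before applying the parameters:
# find_inline_comments /etc/sysctl.d/kubernetes.conf && sysctl --system
```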