Reprinted from: https://blog.csdn.net/dustpg/article/details/38202371

SDK used: Kinect for Windows SDK v2.0 public preview

Continuing from the previous section, initializing the Kinect this time is very simple.

The rest is initializing the speech recognition engine. Because it is pure boilerplate, we recommend that you copy it directly:

- //Initialize Kinect

- HRESULT ThisApp::init_kinect(){

- IAudioSource* pAudioSource = nullptr;

- IAudioBeamList* pAudioBeamList = nullptr;

- //Find the current default Kinect

- HRESULT hr = ::GetDefaultKinectSensor(&m_pKinect);

- //Gently open Kinect

- if (SUCCEEDED(hr)){

- hr = m_pKinect->Open();

- }

- //Get the audio source

- if (SUCCEEDED(hr)){

- hr = m_pKinect->get_AudioSource(&pAudioSource);

- }

- //Get the list of audio beams

- if (SUCCEEDED(hr)){

- hr = pAudioSource->get_AudioBeams(&pAudioBeamList);

- }

- //Open the first audio beam

- if (SUCCEEDED(hr)){

- hr = pAudioBeamList->OpenAudioBeam(0, &m_pAudioBeam);

- }

- //Get input audio stream

- if (SUCCEEDED(hr)){

- IStream* pStream = nullptr;

- hr = m_pAudioBeam->OpenInputStream(&pStream);

- //Wrap the raw stream in our proxy (wrapper) object

- m_p16BitPCMAudioStream = new KinectAudioStreamWrapper(pStream);

- SafeRelease(pStream);

- }

- SafeRelease(pAudioBeamList);

- SafeRelease(pAudioSource);

- return hr;

- }

- //Initialize speech recognition

- HRESULT ThisApp::init_speech_recognizer(){

- HRESULT hr = S_OK;

- //Create a voice input stream

- if (SUCCEEDED(hr)){

- hr = CoCreateInstance(CLSID_SpStream, nullptr, CLSCTX_INPROC_SERVER, __uuidof(ISpStream), (void**)&m_pSpeechStream);

- }

- //Connect to our Kinect voice input

- if (SUCCEEDED(hr)){

- WAVEFORMATEX wft = {

- WAVE_FORMAT_PCM, //PCM encoding

- 1, //Mono channel

- 16000, //Sampling rate: 16 kHz

- 32000, //Average bytes per second = sampling rate * block alignment

- 2, //Block alignment: channels * bytes per sample = 1 * 2 = 2 bytes

- 16, //Sample depth: 16 bits

- 0 //Additional data

- };

- //Set the base stream and its format

- hr = m_pSpeechStream->SetBaseStream(m_p16BitPCMAudioStream, SPDFID_WaveFormatEx, &wft);

- }

- //Creating Speech Recognition Objects

- if (SUCCEEDED(hr)){

- ISpObjectToken *pEngineToken = nullptr;

- //Create the speech recognizer

- hr = CoCreateInstance(CLSID_SpInprocRecognizer, nullptr, CLSCTX_INPROC_SERVER, __uuidof(ISpRecognizer), (void**)&m_pSpeechRecognizer);

- if (SUCCEEDED(hr)) {

- //Connect the voice input stream object we created

- m_pSpeechRecognizer->SetInput(m_pSpeechStream, TRUE);

- //Select the language to recognize. Mainland Chinese (zh-cn) is chosen here

- //At present there is no Kinect Chinese speech recognition pack. If there were one, you could pass "Language=804;Kinect=True"

- hr = SpFindBestToken(SPCAT_RECOGNIZERS, L"Language=804", nullptr, &pEngineToken);

- if (SUCCEEDED(hr)) {

- //Setting up the language to be recognized

- m_pSpeechRecognizer->SetRecognizer(pEngineToken);

- //Creating Speech Recognition Context

- hr = m_pSpeechRecognizer->CreateRecoContext(&m_pSpeechContext);

- //Turn adaptation OFF (AdaptationOn = 0) so that recognition accuracy does not degrade over a long-running session

- if (SUCCEEDED(hr)) {

- hr = m_pSpeechRecognizer->SetPropertyNum(L"AdaptationOn", 0);

- }

- }

- }

- SafeRelease(pEngineToken);

- }

- //Create grammar

- if (SUCCEEDED(hr)){

- hr = m_pSpeechContext->CreateGrammar(1, &m_pSpeechGrammar);

- }

- //Load static SRGS grammar files

- if (SUCCEEDED(hr)){

- hr = m_pSpeechGrammar->LoadCmdFromFile(s_GrammarFileName, SPLO_STATIC);

- }

- //Activate the grammar rules

- if (SUCCEEDED(hr)){

- hr = m_pSpeechGrammar->SetRuleState(nullptr, nullptr, SPRS_ACTIVE);

- }

- //Set the recognizer to keep reading data all the time

- if (SUCCEEDED(hr)){

- hr = m_pSpeechRecognizer->SetRecoState(SPRST_ACTIVE_ALWAYS);

- }

- //Register interest in recognition events

- if (SUCCEEDED(hr)){

- hr = m_pSpeechContext->SetInterest(SPFEI(SPEI_RECOGNITION), SPFEI(SPEI_RECOGNITION));

- }

- //Ensuring that speech recognition is activated

- if (SUCCEEDED(hr)){

- hr = m_pSpeechContext->Resume(0);

- }

- //Get the handle of the recognition event

- if (SUCCEEDED(hr)){

- m_p16BitPCMAudioStream->SetSpeechState(TRUE);

- m_hSpeechEvent = m_pSpeechContext->GetNotifyEventHandle();

- }

- #ifdef _DEBUG

- else

- printf_s("init_speech_recognizer failed\n");

- #endif

- return hr;

- }

Attention should be paid to the following points:

1. When calling SetBaseStream, fill in the WAVEFORMATEX with the PCM data format.

2. SpFindBestToken selects the region by a hexadecimal language ID; Mainland Chinese is 0x0804, written as Language=804. If Kinect provides an additional voice pack for that language, also set Kinect=True, separated by a semicolon.

3. LoadCmdFromFile loads the SRGS grammar file and specifies whether it is loaded statically or dynamically.

4. GetNotifyEventHandle returns the speech recognition event handle. This handle is not owned by the programmer, so do not call CloseHandle on it.
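Points 1 and 2 come down to a little arithmetic and string building, which can be sketched without any Windows headers. This is only an illustration under stated assumptions: `PcmFormat` is a hypothetical stand-in that mirrors the numeric fields of WAVEFORMATEX, and `make_pcm_format` and `recognizer_attributes` are helper names made up here, not SAPI functions.

```cpp
#include <cstdint>
#include <ios>
#include <sstream>
#include <string>

// Hypothetical stand-in mirroring the numeric fields of WAVEFORMATEX.
struct PcmFormat {
    std::uint16_t channels;        // nChannels
    std::uint32_t samplesPerSec;   // nSamplesPerSec
    std::uint32_t avgBytesPerSec;  // nAvgBytesPerSec
    std::uint16_t blockAlign;      // nBlockAlign
    std::uint16_t bitsPerSample;   // wBitsPerSample
};

// Derive the dependent PCM fields instead of hard-coding them.
inline PcmFormat make_pcm_format(std::uint16_t channels,
                                 std::uint32_t samplesPerSec,
                                 std::uint16_t bitsPerSample) {
    PcmFormat f{};
    f.channels = channels;
    f.samplesPerSec = samplesPerSec;
    f.bitsPerSample = bitsPerSample;
    // Bytes occupied by one sample across all channels.
    f.blockAlign = static_cast<std::uint16_t>(channels * bitsPerSample / 8);
    // Bytes consumed per second of audio.
    f.avgBytesPerSec = samplesPerSec * f.blockAlign;
    return f;
}

// Build the attribute string for SpFindBestToken:
// the language ID is written in hexadecimal without the 0x prefix.
inline std::wstring recognizer_attributes(unsigned lcid, bool kinectPack) {
    std::wostringstream out;
    out << L"Language=" << std::hex << std::uppercase << lcid;
    if (kinectPack) {
        out << L";Kinect=True";
    }
    return out.str();
}
```

With mono, 16000 Hz and 16 bits, `make_pcm_format` yields the same 2 and 32000 that are hard-coded in the listing, and `recognizer_attributes(0x0804, false)` yields the `Language=804` string used there.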

Waiting for the SR event to trigger

- //Audio processing

- void ThisApp::speech_process() {

- //Confidence threshold

- const float ConfidenceThreshold = 0.3f;

- SPEVENT curEvent = { SPEI_UNDEFINED, SPET_LPARAM_IS_UNDEFINED, 0, 0, 0, 0 };

- ULONG fetched = 0;

- HRESULT hr = S_OK;

- //Acquisition of events

- m_pSpeechContext->GetEvents(1, &curEvent, &fetched);

- while (fetched > 0)

- {

- //Identification of events

- switch (curEvent.eEventId)

- {

- case SPEI_RECOGNITION:

- //Make sure lParam holds an object

- if (SPET_LPARAM_IS_OBJECT == curEvent.elParamType) {

- ISpRecoResult* result = reinterpret_cast<ISpRecoResult*>(curEvent.lParam);

- SPPHRASE* pPhrase = nullptr;

- //Acquiring recognition phrases

- hr = result->GetPhrase(&pPhrase);

- if (SUCCEEDED(hr)) {

- // XXXXXXXXXXXXXXXXXXX (use pPhrase here; everything around it is still boilerplate)

- ::CoTaskMemFree(pPhrase);

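- }

- //Release the ISpRecoResult carried by the event; a raw SPEVENT does not do it for us

- result->Release();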

- }

- break;

- }

- m_pSpeechContext->GetEvents(1, &curEvent, &fetched);

- }

- return;

- }

Once GetPhrase succeeds, you can get the full recognized string:

- WCHAR* pwszFirstWord;

- result->GetText(SP_GETWHOLEPHRASE, SP_GETWHOLEPHRASE, TRUE, &pwszFirstWord, nullptr);

- // XXX

- ::CoTaskMemFree(pwszFirstWord);

The SPPHRASE structure is quite complex. The important member is pProperties, a SPPHRASEPROPERTY* that points to a tree; each node carries an SREngineConfidence value giving its confidence.

The confidence of a parent node represents the overall confidence of its branch, while the confidence of a child node covers only its own part of the phrase. Generally, the confidence of the parent node is used.

To judge whether an SR event was recognized accurately, set a confidence threshold: a recognition counts as accurate only when its confidence is greater than the threshold. The threshold can be an empirical value such as 0.3 or 0.4, or it can be dynamic, chosen according to the environment or simply set by the player.
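A threshold that can be changed at run time could be sketched as follows. `ConfidenceGate` is a hypothetical helper introduced only for illustration; the default of 0.3 is the empirical value mentioned above, and clamping to the range 0 to 1 is an assumption about what counts as a sensible threshold.

```cpp
#include <algorithm>

// Hypothetical helper: stores a threshold in [0, 1] and decides acceptance.
class ConfidenceGate {
public:
    explicit ConfidenceGate(float threshold = 0.3f) { set_threshold(threshold); }

    // Let the environment or the player adjust the threshold at run time.
    void set_threshold(float t) {
        threshold_ = std::min(1.0f, std::max(0.0f, t));
    }

    float threshold() const { return threshold_; }

    // A result is accepted only when its confidence is strictly greater.
    bool accepts(float confidence) const { return confidence > threshold_; }

private:
    float threshold_ = 0.3f;
};
```

In the event loop, the value passed to `accepts` would be the SREngineConfidence of the parent node.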

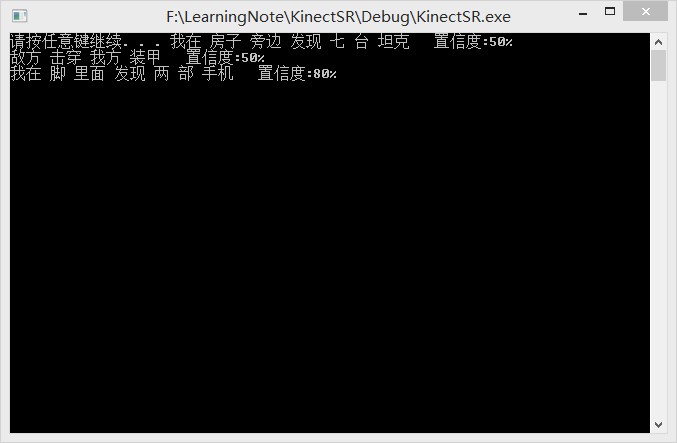

Here, suppose we say "We destroyed enemy toilets," following the SRGS grammar provided in the previous section.

pPhrase->pProperties is then roughly:

"_value" -> "War situation" -> Subject | Predicate | Object | Object (the last four nodes are siblings)

The pszValue member of each node gives the value as a string; for example, if the grammar sets out = 0, then pszValue is "0".

vValue holds the recognized value as typed data; for example, vValue.lVal gives it as a long. You can inspect the other members yourself; after all, it is all just data.
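Looking a node up by name rather than by position can be sketched like this. `PropertyNode` is a hypothetical stand-in that copies only the SPPHRASEPROPERTY fields discussed here (with `lVal` standing in for `vValue.lVal`), and `find_child` is an illustrative helper, not part of SAPI.

```cpp
#include <cwchar>

// Hypothetical stand-in for the SPPHRASEPROPERTY fields used in this article.
struct PropertyNode {
    const wchar_t* pszName;
    const wchar_t* pszValue;
    long lVal;                         // stands in for vValue.lVal
    float SREngineConfidence;
    const PropertyNode* pFirstChild;
    const PropertyNode* pNextSibling;
};

// Return the first direct child of `parent` whose name matches, or nullptr.
inline const PropertyNode* find_child(const PropertyNode* parent,
                                      const wchar_t* name) {
    if (!parent || !name) {
        return nullptr;
    }
    // Children form a singly linked list through pNextSibling.
    for (const PropertyNode* n = parent->pFirstChild; n; n = n->pNextSibling) {
        if (n->pszName && std::wcscmp(n->pszName, name) == 0) {
            return n;
        }
    }
    return nullptr;
}
```

A lookup by name keeps working if the grammar later reorders its tags, which a purely positional read does not.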

What you do with the result is entirely up to you, so feel free to experiment. Here is a half-finished example:

- //Audio processing

- void ThisApp::speech_process() {

- //Confidence threshold

- const float ConfidenceThreshold = 0.3f;

- SPEVENT curEvent = { SPEI_UNDEFINED, SPET_LPARAM_IS_UNDEFINED, 0, 0, 0, 0 };

- ULONG fetched = 0;

- HRESULT hr = S_OK;

- //Acquisition of events

- m_pSpeechContext->GetEvents(1, &curEvent, &fetched);

- while (fetched > 0)

- {

- //Identification of events

- switch (curEvent.eEventId)

- {

- case SPEI_RECOGNITION:

- //Make sure lParam holds an object

- if (SPET_LPARAM_IS_OBJECT == curEvent.elParamType) {

- ISpRecoResult* result = reinterpret_cast<ISpRecoResult*>(curEvent.lParam);

- SPPHRASE* pPhrase = nullptr;

- //Acquiring recognition phrases

- hr = result->GetPhrase(&pPhrase);

- if (SUCCEEDED(hr)) {

- #ifdef _DEBUG

- //Display recognition string when DEBUG

- WCHAR* pwszFirstWord = nullptr;

- result->GetText(SP_GETWHOLEPHRASE, SP_GETWHOLEPHRASE, TRUE, &pwszFirstWord, nullptr);

- //Pass the text as an argument, never as the format string

- if (pwszFirstWord) _cwprintf(L"%s", pwszFirstWord);

- ::CoTaskMemFree(pwszFirstWord);

- #endif

- pPhrase->pProperties;

- const SPPHRASEELEMENT* pointer = pPhrase->pElements + 1;

- if ((pPhrase->pProperties != nullptr) && (pPhrase->pProperties->pFirstChild != nullptr)) {

- const SPPHRASEPROPERTY* pSemanticTag = pPhrase->pProperties->pFirstChild;

- #ifdef _DEBUG

- _cwprintf(L" Confidence level:%d%%\n", (int)(pSemanticTag->SREngineConfidence*100.f));

- #endif

- if (pSemanticTag->SREngineConfidence > ConfidenceThreshold) {

- speech_behavior(pSemanticTag);

- }

- }

- ::CoTaskMemFree(pPhrase);

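- }

- //Release the ISpRecoResult carried by the event; a raw SPEVENT does not do it for us

- result->Release();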

- }

- break;

- }

- m_pSpeechContext->GetEvents(1, &curEvent, &fetched);

- }

- return;

- }

- //Voice behavior

- void ThisApp::speech_behavior(const SPPHRASEPROPERTY* tag){

- if (!tag) return;

- if (!wcscmp(tag->pszName, L"War situation")){

- enum class Subject{

- US = 0,

- Enemy

- } ;

- enum class Predicate{

- Destroy = 0,

- Defeat,

- Breakdown

- };

- //Analysis of the war situation

- union Situation{

- struct{

- //Subject

- Subject subject;

- //Predicate

- Predicate predicate;

- //Object

- int object2;

- //Object

- int object;

- };

- UINT32 data[4];

- };

- Situation situation = {};

- auto obj = tag->pFirstChild;

- auto pointer = situation.data;

- //Fill in the data, never writing past the 4 slots

- while (obj && pointer < situation.data + 4) {

- *pointer = obj->vValue.lVal;

- ++pointer;

- obj = obj->pNextSibling;

- }

- // XXX

- }

- else if (!wcscmp(tag->pszName, L"Find things")){

- //Discovering

- }

- }
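A positional fill like the one in speech_behavior is only safe when the number of writes is bounded by the size of the destination. A minimal sketch of that idea, independent of SAPI: `ValueNode` is a hypothetical stand-in holding only the two fields needed (`lVal` stands in for `vValue.lVal`), and `collect_values` is an illustrative name.

```cpp
#include <array>
#include <cstddef>

// Hypothetical stand-in for the two SPPHRASEPROPERTY fields needed here.
struct ValueNode {
    long lVal;                        // stands in for vValue.lVal
    const ValueNode* pNextSibling;
};

// Copy at most N sibling values into `out`; returns how many were written.
template <std::size_t N>
std::size_t collect_values(const ValueNode* first, std::array<long, N>& out) {
    std::size_t count = 0;
    // Stop at the end of the sibling list or when the array is full.
    for (const ValueNode* n = first; n && count < N; n = n->pNextSibling) {
        out[count++] = n->lVal;
    }
    return count;
}
```

The returned count also tells the caller whether the grammar produced fewer values than expected, so missing slots are not mistaken for real data.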

Well, that's the end of speech recognition.

This is still a console program. Please do not close it with the X button; press any key to exit instead.


On face recognition and visual gestures:

If you look through the SDK, I believe you will notice that it also contains these headers:

Kinect.Face.h

Kinect.VisualGestureBuilder.h

Of these, the lib and dll for "Kinect.VisualGestureBuilder.h" come only in an x64 version. I don't know whether Microsoft was being lazy or whether some feature really requires x64.

Hopefully it is just laziness. After all, I have hardly ever developed a 64-bit program; 8-byte pointers feel too wasteful.

For both of them, however, I have found no official documentation or C++ samples, so I guess they currently target C#. When used now, some functions/methods return the error:

path not found

For example, in gesture recognition:

IVisualGestureBuilderFrameSource::AddGestures

And in face recognition:

CreateHighDefinitionFaceFrameSource

We can only wait for an SDK update or an answer from an expert, so "Kinect for Windows SDK v2.0 Development Notes" ends here for now.

Thank you for your support. See you again when the SDK is updated.
