1, Introduction to Kerberos

With Kerberos, authentication keys are placed on trusted nodes before the cluster is deployed. While the cluster is running, its nodes authenticate with these keys, and only nodes that pass authentication can provide services. A node that tries to impersonate a cluster member cannot communicate with the cluster because it lacks the key information distributed in advance. This prevents malicious use of, or tampering with, the Hadoop cluster and keeps the cluster reliable and secure.

Terminology

AS (Authentication Server): the server that authenticates clients

KDC (Key Distribution Center): the service that issues tickets; it comprises the AS and the TGS

TGT (Ticket Granting Ticket): a ticket that is used to request other tickets

TGS (Ticket Granting Server): the server that grants service tickets

SS (Service Server): the server that provides a specific service

Principal: an identity (user or service) that can be authenticated

Ticket: a credential the client uses to prove its identity. It includes the user name, IP address, timestamp, validity period, and session key.
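To make the ticket fields listed above more concrete, here is a minimal sketch that models a ticket as a plain Java class with a validity check. It is an illustrative model only, not the Kerberos wire format; the class name `TicketSketch`, its field names, and the method `isValidAt` are assumptions made for this example.

```java
import java.time.Duration;
import java.time.Instant;

// Illustrative model of the ticket fields listed above (NOT the real Kerberos encoding).
final class TicketSketch {
    final String userName;
    final String clientIp;
    final Instant issuedAt;    // the ticket's timestamp
    final Duration lifetime;   // the validity period
    final byte[] sessionKey;

    TicketSketch(String userName, String clientIp, Instant issuedAt,
                 Duration lifetime, byte[] sessionKey) {
        this.userName = userName;
        this.clientIp = clientIp;
        this.issuedAt = issuedAt;
        this.lifetime = lifetime;
        this.sessionKey = sessionKey.clone();
    }

    // Valid from issuedAt (inclusive) until issuedAt + lifetime (exclusive).
    boolean isValidAt(Instant now) {
        return !now.isBefore(issuedAt) && now.isBefore(issuedAt.plus(lifetime));
    }
}
```

A ticket issued with a 24-hour validity period would pass `isValidAt` one hour after issue and fail it 25 hours after issue, which is why long-running clients need to renew or re-acquire tickets.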

2, Install ZooKeeper (using CentOS 8 as an example)

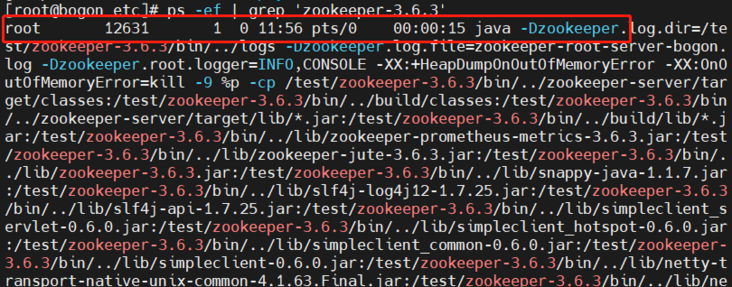

#Download the binary package

wget https://archive.apache.org/dist/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz

#Decompress

tar -zxvf apache-zookeeper-3.6.3-bin.tar.gz

#Enter the ZooKeeper root directory, then rename conf/zoo_sample.cfg to zoo.cfg

cd apache-zookeeper-3.6.3-bin

mv conf/zoo_sample.cfg conf/zoo.cfg

#Start ZooKeeper (run from the directory above bin)

./bin/zkServer.sh start

#Check whether the startup succeeded

ps -aux | grep 'zookeeper-3.6.3'

#or

ps -ef | grep 'zookeeper-3.6.3'

3, Install and start Kafka

#Download

wget http://mirrors.hust.edu.cn/apache/kafka/2.8.0/kafka_2.12-2.8.0.tgz

#Decompress

tar -zxvf kafka_2.12-2.8.0.tgz

#Rename

mv kafka_2.12-2.8.0 kafka-2.8.0

#Edit config/server.properties in the Kafka root directory and add the following configuration

port=9092

#Intranet (the local IP)

host.name=10.206.0.17

#External network (public IP of the cloud server; remove this item if external access is not needed)

advertised.host.name=119.45.174.249

#Start Kafka (-daemon starts it in the background; run from the directory above bin)

./bin/kafka-server-start.sh -daemon config/server.properties

#Stop Kafka

./bin/kafka-server-stop.sh

#Create a topic

./bin/kafka-topics.sh --create --replication-factor 1 --partitions 1 --topic test --zookeeper localhost:2181/kafka

#Start a console producer

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test

#Start a console consumer

./bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --from-beginning

#To accept connections from outside, the firewall must be turned off

#View the firewall status

systemctl status firewalld.service

#Turn off the firewall

systemctl stop firewalld.service

#Start the firewall

systemctl start firewalld.service

#Enable the firewall at boot

systemctl enable firewalld.service
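When checking whether the firewall or the listener settings above block external access, a quick TCP connection test from the client machine can help. The following is a minimal sketch using only the Java standard library; the class name `PortCheck` and the 2000 ms timeout are assumptions chosen for illustration. A successful connection only shows that the port is open, not that Kafka itself is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortCheck {
    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unresolvable host names.
            return false;
        }
    }

    public static void main(String[] args) {
        // 9092 is the broker port configured above; replace localhost with the broker address.
        System.out.println("Kafka port reachable: " + isReachable("localhost", 9092, 2000));
    }
}
```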

4, Install and configure Kerberos

1. Install Kerberos related software

yum install krb5-server krb5-libs krb5-workstation -y

2. Configure kdc.conf

vim /var/kerberos/krb5kdc/kdc.conf

The contents are as follows:

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

HADOOP.COM = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

max_renewable_life = 7d

supported_enctypes = aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

HADOOP.COM: the realm being configured. The name is arbitrary. Kerberos supports multiple realms, and realm names are generally written in uppercase.

master_key_type, supported_enctypes: aes256-cts is used by default. Because aes256-cts authentication in Java requires installing additional JAR packages, it is not used here.

acl_file: defines the permissions of admin users.

admin_keytab: the keytab the KDC uses for verification.

supported_enctypes: the supported encryption types. Remember to remove aes256-cts.
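The "additional JAR packages" mentioned above presumably refer to the JCE unlimited-strength policy files that older JDKs needed before they would allow 256-bit AES keys. As a hedged sketch, the standard `javax.crypto.Cipher.getMaxAllowedKeyLength` call reports what the running JVM's crypto policy permits, which can help decide whether aes256-cts really has to be excluded. The class name `AesKeyLengthCheck` is an assumption made for this example.

```java
import java.security.NoSuchAlgorithmException;
import javax.crypto.Cipher;

final class AesKeyLengthCheck {
    // Largest AES key size (in bits) that the JVM's crypto policy permits.
    static int maxAesKeyBits() {
        try {
            return Cipher.getMaxAllowedKeyLength("AES");
        } catch (NoSuchAlgorithmException e) {
            return 0; // AES is not available at all on this JVM
        }
    }

    // True when the policy permits 256-bit AES keys.
    static boolean permitsAes256() {
        return maxAesKeyBits() >= 256;
    }

    public static void main(String[] args) {
        System.out.println("Max AES key length (bits): " + maxAesKeyBits());
        System.out.println("256-bit AES permitted: " + permitsAes256());
    }
}
```

If this reports that 256-bit AES is not permitted, keeping aes256-cts out of supported_enctypes, as done above, avoids issuing keys that Java clients cannot use.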

3. Configure krb5.conf

vim /etc/krb5.conf

The contents are as follows:

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = HADOOP.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

clockskew = 120

udp_preference_limit = 1

[realms]

HADOOP.COM = {

#Change es1 to the server hostname or IP address

kdc = es1

admin_server = es1

}

[domain_realm]

.hadoop.com = HADOOP.COM

hadoop.com = HADOOP.COM

4. Initialize the Kerberos database

kdb5_util create -s -r HADOOP.COM

5. Modify ACL permissions of database administrator

vim /var/kerberos/krb5kdc/kadm5.acl

#Amend as follows (the trailing * grants all permissions to every */admin principal)

*/admin@HADOOP.COM *

6. Start the Kerberos daemons

#Start

systemctl start kadmin krb5kdc

#Enable automatic startup at boot

systemctl enable kadmin krb5kdc

7. Basic Kerberos operations

#1. Enter kadmin as administrator

kadmin.local

#2. List the principals

listprincs

#3. Create the principal kafka/es1

addprinc kafka/es1

#4. Exit kadmin.local

exit
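A principal name such as kafka/es1@HADOOP.COM has the general form primary/instance@REALM: here kafka is the service name, es1 is the host, and HADOOP.COM is the realm. When the realm is left out, as in `addprinc kafka/es1`, kadmin fills in the default realm. The following is a minimal parsing sketch; the class name `PrincipalName` is hypothetical, and the code deliberately ignores escaped characters, which real Kerberos libraries handle.

```java
// Splits "primary/instance@REALM" into its parts (simplified: no escape handling).
final class PrincipalName {
    final String primary;
    final String instance; // null when the name has no "/instance" part
    final String realm;    // null when the name has no "@REALM" part

    private PrincipalName(String primary, String instance, String realm) {
        this.primary = primary;
        this.instance = instance;
        this.realm = realm;
    }

    static PrincipalName parse(String text) {
        String name = text;
        String realm = null;
        int at = text.lastIndexOf('@');
        if (at >= 0) {
            name = text.substring(0, at);
            realm = text.substring(at + 1);
        }
        String primary = name;
        String instance = null;
        int slash = name.indexOf('/');
        if (slash >= 0) {
            primary = name.substring(0, slash);
            instance = name.substring(slash + 1);
        }
        return new PrincipalName(primary, instance, realm);
    }

    public static void main(String[] args) {
        PrincipalName p = parse("kafka/es1@HADOOP.COM");
        System.out.println(p.primary + " | " + p.instance + " | " + p.realm);
    }
}
```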

8. Integrate Kafka with Kerberos

1. Generate the user keytab (this file is needed later for the Kafka configuration and for client authentication)

kadmin.local -q "xst -k /var/kerberos/krb5kdc/kadm5.keytab kafka/es1@HADOOP.COM"

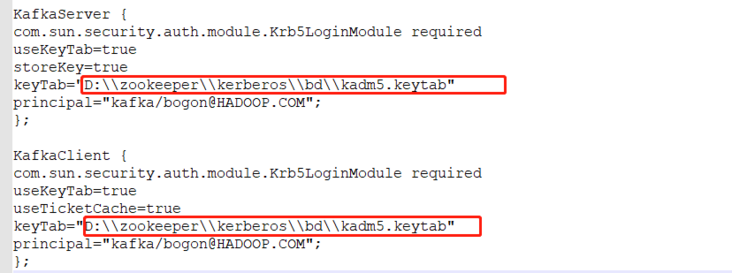
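Before pointing Kafka at the keytab, it can be worth confirming that the file exists, is readable by the Kafka user, and looks like a keytab. In the MIT keytab format the first byte is 5 and the second byte is the format version (1 or 2). The following sketch checks only that header; the class name `KeytabCheck` is an assumption, and passing this check does not prove that the keys inside are correct.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

final class KeytabCheck {
    // True if the file is readable and starts with the keytab header bytes 0x05 0x01 or 0x05 0x02.
    static boolean looksLikeKeytab(Path path) throws IOException {
        if (!Files.isReadable(path)) {
            return false;
        }
        try (InputStream in = Files.newInputStream(path)) {
            int first = in.read();
            int second = in.read();
            return first == 0x05 && (second == 0x01 || second == 0x02);
        }
    }

    public static void main(String[] args) throws IOException {
        // Path taken from the xst command above.
        Path keytab = Paths.get("/var/kerberos/krb5kdc/kadm5.keytab");
        System.out.println("Looks like a keytab: " + looksLikeKeytab(keytab));
    }
}
```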

2. Create kafka-jaas.conf under config in Kafka installation directory

#The contents are as follows

KafkaServer {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/var/kerberos/krb5kdc/kadm5.keytab"

principal="kafka/es1@HADOOP.COM";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

useTicketCache=true

keyTab="/var/kerberos/krb5kdc/kadm5.keytab"

principal="kafka/es1@HADOOP.COM";

};

3. Add the following configuration to config/server.properties

#Use the corresponding hostname in both listener settings

advertised.listeners=SASL_PLAINTEXT://es1:9092

listeners=SASL_PLAINTEXT://es1:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=GSSAPI

sasl.enabled.mechanisms=GSSAPI

sasl.kerberos.service.name=kafka

4. Add the Kafka JVM parameters in the bin/kafka-run-class.sh script (the parameters to add are marked with "<" and ">" below; remove these two markers when editing. If a parameter already present in the original is the same as one to be added, keep only one copy)

#JVM performance options

if [ -z "$KAFKA_JVM_PERFORMANCE_OPTS" ]; then

  KAFKA_JVM_PERFORMANCE_OPTS="-server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent < -Djava.awt.headless=true -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/home/xingtongping/kafka_2.12-2.2.0/config/kafka-jaas.conf >"

fi
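The two -D flags above reach the JVM as the system properties java.security.krb5.conf and java.security.auth.login.config. A typo in either path usually shows up only later as a login failure, so a small pre-flight check can save time. The sketch below reports which of the two properties are unset or do not point to a readable file; the class name `KerberosJvmCheck` and the method `missingProperties` are hypothetical.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

final class KerberosJvmCheck {
    static final String[] REQUIRED = {
        "java.security.krb5.conf",
        "java.security.auth.login.config"
    };

    // Returns the required properties that are unset, empty, or not readable files.
    static List<String> missingProperties() {
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED) {
            String value = System.getProperty(key);
            if (value == null || value.isEmpty() || !Files.isReadable(Paths.get(value))) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        List<String> missing = missingProperties();
        System.out.println(missing.isEmpty()
                ? "Kerberos JVM properties look fine"
                : "Missing or unreadable: " + missing);
    }
}
```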

5. Add the Kerberos configuration for the Kafka producer to config/producer.properties

security.protocol = SASL_PLAINTEXT

sasl.mechanism = GSSAPI

sasl.kerberos.service.name = kafka

6. Add the Kerberos configuration for the Kafka consumer to config/consumer.properties

security.protocol = SASL_PLAINTEXT

sasl.mechanism = GSSAPI

sasl.kerberos.service.name = kafka
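Because the producer and consumer files must carry the same three settings, a small check can catch mistakes before starting a client. The sketch below loads properties text with `java.util.Properties` and reports every setting that is missing or different from the values used in this article. The class name `KerberosClientConfigCheck` and the method `problems` are assumptions for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

final class KerberosClientConfigCheck {
    // The three client settings used in this article.
    static final Map<String, String> EXPECTED = new LinkedHashMap<>();
    static {
        EXPECTED.put("security.protocol", "SASL_PLAINTEXT");
        EXPECTED.put("sasl.mechanism", "GSSAPI");
        EXPECTED.put("sasl.kerberos.service.name", "kafka");
    }

    // Returns "key=actualValue" for every expected setting that is missing or different.
    static List<String> problems(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, String> expected : EXPECTED.entrySet()) {
            String actual = props.getProperty(expected.getKey());
            if (actual == null || !actual.trim().equals(expected.getValue())) {
                problems.add(expected.getKey() + "=" + actual);
            }
        }
        return problems;
    }
}
```

Spaces around "=" are tolerated by the properties format, but a trailing "# comment" on the same line is not: it becomes part of the value, so comments belong on their own lines.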

5, Linux test commands

1. Start the Kafka service

./bin/kafka-server-start.sh config/server.properties

2. Start a producer

./bin/kafka-console-producer.sh --broker-list es1:9092 --topic test --producer.config config/producer.properties

3. Start a consumer

./bin/kafka-console-consumer.sh --bootstrap-server es1:9092 --topic test --consumer.config config/consumer.properties

6, Java client consumer test code in a local environment

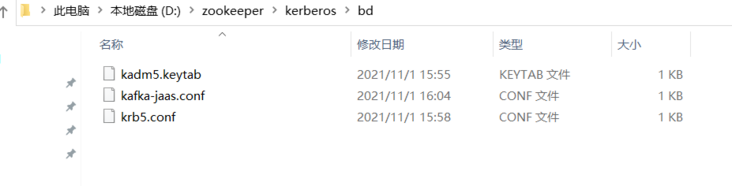

1. Download krb5.conf and kafka-jaas.conf to the local machine, and change the keytab path in kafka-jaas.conf to the local keytab path

2. When using a hostname, remember to edit the hosts file and add the line: 172.16.110.173 es1

3. Add the following test code

import java.time.Duration;

import java.util.Arrays;

import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

public class Consumer {

    public static void main(String[] args) {

        //Authentication code: backslashes in Windows paths must be escaped in Java string literals

        System.setProperty("java.security.krb5.conf", "D:\\zookeeper\\kerberos\\bd\\krb5.conf");

        System.setProperty("java.security.auth.login.config", "D:\\zookeeper\\kerberos\\bd\\kafka-jaas.conf");

        Properties props = new Properties();

        //Cluster address. Multiple addresses are separated by ","

        props.put("bootstrap.servers", "es1:9092"); //Hostname or IP

        props.put("sasl.kerberos.service.name", "kafka"); //Authentication code

        props.put("sasl.mechanism", "GSSAPI"); //Authentication code

        props.put("security.protocol", "SASL_PLAINTEXT"); //Authentication code

        props.put("group.id", "1");

        props.put("enable.auto.commit", "true");

        props.put("auto.commit.interval.ms", "1000");

        props.put("session.timeout.ms", "30000");

        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        //Create the consumer

        KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props);

        //Multiple topics can be subscribed to, separated by commas. Here the topic "test" is subscribed to

        consumer.subscribe(Arrays.asList("test"));

        //Poll continuously

        while (true) {

            //Wait up to 100 ms for new records on each poll

            ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofMillis(100));

            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {

                System.out.println("Read from test: " + consumerRecord.value());

            }

        }

    }

}
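As an alternative to a separate kafka-jaas.conf file on the client, Kafka clients also accept the JAAS login entry inline through the `sasl.jaas.config` property. The sketch below builds that value from a keytab path and a principal and adds it to the same client settings used above. The class name `InlineJaasConfig`, its method names, and the keytab path shown in `main` are assumptions made for illustration. With this approach the java.security.auth.login.config system property is no longer needed on the client, while java.security.krb5.conf still is.

```java
import java.util.Properties;

final class InlineJaasConfig {
    // Builds the value for the Kafka client property "sasl.jaas.config".
    static String krb5LoginModule(String keytabPath, String principal) {
        return "com.sun.security.auth.module.Krb5LoginModule required"
                + " useKeyTab=true storeKey=true"
                + " keyTab=\"" + keytabPath + "\""
                + " principal=\"" + principal + "\";";
    }

    // Client settings from this article plus the inline JAAS entry.
    static Properties kerberosClientProperties(String bootstrapServers,
                                               String keytabPath, String principal) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("security.protocol", "SASL_PLAINTEXT");
        props.setProperty("sasl.mechanism", "GSSAPI");
        props.setProperty("sasl.kerberos.service.name", "kafka");
        props.setProperty("sasl.jaas.config", krb5LoginModule(keytabPath, principal));
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical local keytab path; forward slashes avoid escaping issues on Windows.
        Properties props = kerberosClientProperties(
                "es1:9092", "D:/zookeeper/kerberos/bd/kadm5.keytab", "kafka/es1@HADOOP.COM");
        System.out.println(props.getProperty("sasl.jaas.config"));
    }
}
```

The returned `Properties` can be extended with the deserializer and group settings from the consumer above and passed to `KafkaConsumer` in the same way.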