New features

-

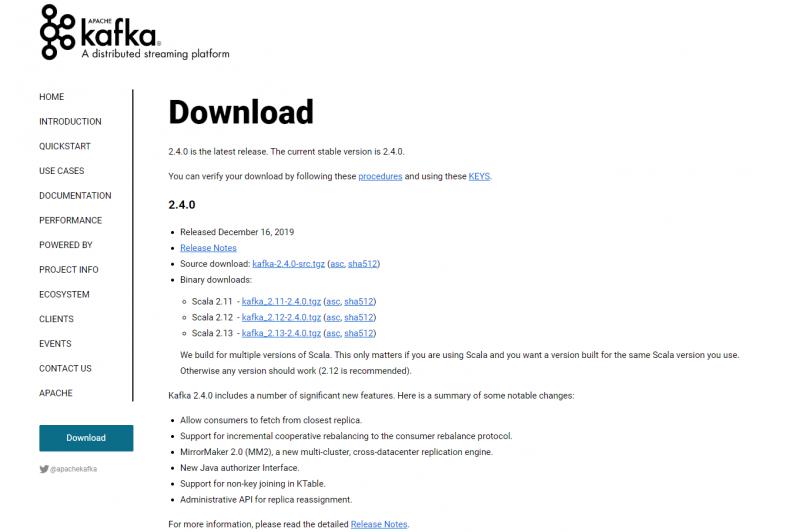

Allow consumers to get from the most recent copy

-

Increase support for incremental cooperative rebalancing for consumer rebalancing protocol

-

New MirrorMaker 2.0 (MM2), new multi cluster cross data center replication engine

-

Introducing a new Java authorized program interface

-

Supports non key connections in KTable

-

Administrative API for reassigning replicas

-

Protect the REST endpoint of the internal connection

-

Add and delete the API of consumer offset exposed through AdminClient
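The first two features are switched on through consumer configuration. Below is a minimal sketch under stated assumptions: `ConsumerFeatureConfig` is a hypothetical helper of our own, not part of kafka-clients, and it sets the config keys as plain strings so it compiles without the Kafka jar. Fetching from the closest replica also assumes the brokers already set `broker.rack` and `replica.selector.class=org.apache.kafka.common.replica.RackAwareReplicaSelector`; without that broker-side setup, `client.rack` has no effect on where fetches go.

```java
import java.util.Properties;

/**
 * Hypothetical helper (not part of kafka-clients): builds consumer settings
 * that opt in to two Kafka 2.4 features, using plain string keys.
 */
final class ConsumerFeatureConfig {

    // Cooperative assignor added with the incremental rebalancing work (KIP-429)
    static final String COOPERATIVE_ASSIGNOR =
            "org.apache.kafka.clients.consumer.CooperativeStickyAssignor";

    private ConsumerFeatureConfig() {}

    static Properties build(String bootstrapServers, String groupId, String rack) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // Fetch from the closest replica: tell rack-aware brokers which rack
        // this consumer runs in, so they can serve fetches from a nearby follower
        props.put("client.rack", rack);
        // Incremental cooperative rebalancing: only partitions that actually
        // move are revoked, instead of every partition on each rebalance
        props.put("partition.assignment.strategy", COOPERATIVE_ASSIGNOR);
        return props;
    }

    public static void main(String[] args) {
        // Example values only; the rack name is an assumption
        Properties props = build("kafka01:9092,kafka02:9092", "test", "rack-a");
        props.forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

Passing the returned `Properties` to `new KafkaConsumer<>(props)` should apply both settings. Moving an existing group to the cooperative assignor is, as far as we know, not a one-step config change; check the Kafka upgrade notes for the rolling-restart procedure before doing it on a live group.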

Improvements

- [KAFKA-5609] - Connect log4j logs to a file by default
- [KAFKA-6263] - Expose a metric for group metadata loading duration
- [KAFKA-6883] - KafkaShortnamer can convert a Kerberos principal name to an upper-case user name
- [KAFKA-6958] - Allow defining custom processor names with the Kafka Streams DSL
- [KAFKA-7018] - Persist memberId across consumer restarts
- [KAFKA-7149] - Reduce assignment data size to improve Kafka Streams scalability
- [KAFKA-7190] - Under low-traffic conditions, purging repartition topics causes WARN statements about UNKNOWN_PRODUCER_ID
- [KAFKA-7197] - Upgrade to Scala 2.13.0

Kafka 2.4 Java API demo

The officially recommended Java client, kafka-clients, is convenient and flexible.

Add the Maven dependency:

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>2.4.0</version>
</dependency>
```

Producer example:

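```java
import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class SimpleProvider {
    public static void main(String[] args) {
        Properties properties = new Properties();
        properties.put("bootstrap.servers", "kafka01:9092,kafka02:9092");
        // Wait until all in-sync replicas have acknowledged each write
        properties.put("acks", "all");
        // Do not retry failed sends
        properties.put("retries", 0);
        // Batch up to 16 KB per partition, waiting at most 1 ms for more records
        properties.put("batch.size", 16384);
        properties.put("linger.ms", 1);
        // 32 MB of memory for records that have not been sent yet
        properties.put("buffer.memory", 33554432);
        properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);
        for (int i = 1; i <= 600; i++) {
            // send() is asynchronous: it buffers the record and returns a Future
            kafkaProducer.send(new ProducerRecord<>("topic", "message" + i));
            System.out.println("message" + i);
        }
        // close() flushes any buffered records before shutting down
        kafkaProducer.close();
    }
}
```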

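Consumer example. It subscribes to the topics `foo` and `bar`; to read the messages written by the producer above, subscribe to `topic` instead: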

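```java
import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class SingleApplication {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "kafka01:9092,kafka02:9092");
        props.put("group.id", "test");
        // Commit offsets automatically every second
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // With no committed offset for this group, start from the earliest record
        props.put("auto.offset.reset", "earliest");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Arrays.asList("foo", "bar"));
        try {
            while (true) {
                // poll(Duration) replaces poll(long), deprecated since Kafka 2.0
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("offset = %d, key = %s, value = %s%n",
                            record.offset(), record.key(), record.value());
                }
            }
        } finally {
            consumer.close();
        }
    }
}
```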

For more examples, such as multithreaded consumers, see the GitHub repository:

https://github.com/tree1123/Kafka-Demo-2.4
