1. emptyDir storage volume

    apiVersion: v1
    kind: Pod
    metadata:
      name: cunchujuan
    spec:
      containers:
      - name: myapp                 # First container: serves the contents of index.html
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        volumeMounts:               # Mount the storage volume
        - name: html                # Name of the storage volume defined under "volumes" below
          mountPath: /usr/share/nginx/html/   # Mount path inside the container
      - name: busybox               # Second container: generates the index.html content
        image: busybox:latest
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: html
          mountPath: /data/
        # This loop never stops: every 2 seconds it appends the current time to index.html in the storage volume
        command: ['/bin/sh','-c','while true;do echo $(date) >> /data/index.html;sleep 2;done']
      volumes:                      # Define the storage volumes
      - name: html                  # Storage volume name
        emptyDir: {}                # Storage volume type

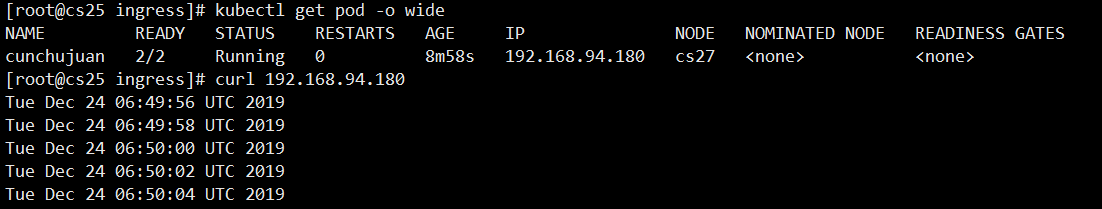

Above, we defined two containers. The second one keeps appending the current date to index.html in the storage volume. Because both containers mount the same volume, the index.html served by the first container is the same file the second one writes, so repeated curl requests show index.html growing.

2. hostPath storage volume

    apiVersion: v1
    kind: Pod
    metadata:
      name: cs-hostpath
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: html
        hostPath:                  # Storage volume type is hostPath
          path: /data/hostpath     # Path on the node itself
          type: DirectoryOrCreate

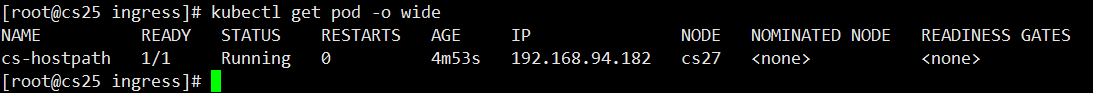

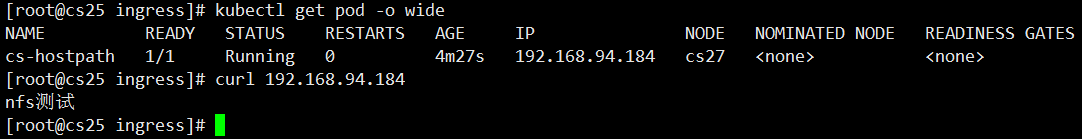

First, check which node the Pod was scheduled to; here it is the cs27 node.

    mkdir -pv /data/hostpath/    # Create the hostPath storage volume directory on the cs27 node
    echo "hostpath storage volume test" > /data/hostpath/index.html    # Generate a home page file in the storage volume

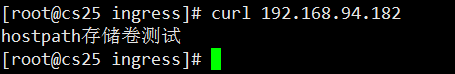

Run curl against the Pod and you can see that the file content is what we generated above.

Note that when the container modifies this data, the change is written through to the hostPath directory on the node, just as with an ordinary mount.

3. NFS shared storage volume

Use another host as the NFS server.

    mkdir /data                           # Create the directory to share over NFS
    echo "nfs test" > /data/index.html    # Create a test HTML file
    yum install -y nfs-utils              # Install the NFS software
    vim /etc/exports                      # Edit the NFS exports file

    /data/ 192.168.0.0/24(rw,no_root_squash)
    # Exported path, followed by the network segment allowed to mount it

Every Kubernetes node must also have nfs-utils installed (yum install -y nfs-utils); otherwise the node cannot mount the NFS volume.

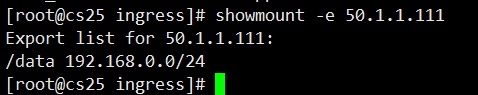

Run showmount -e 50.1.1.111 on each node to check that it has permission to mount the export.

    kubectl apply -f nfs.yaml    # Create the Pod

Look up the Pod's IP and curl it to check that it serves the test HTML file created earlier on the NFS server.
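The text applies nfs.yaml without showing it. A minimal sketch of what such a manifest could look like follows; it reuses the image and mount path from the earlier sections, assumes the NFS server is the 50.1.1.111 address used in the showmount check, and uses a hypothetical Pod name (cs-nfs). Treat it as an illustration, not the author's actual file.

```yaml
# nfs.yaml: a sketch only; the Pod name "cs-nfs" is hypothetical
apiVersion: v1
kind: Pod
metadata:
  name: cs-nfs
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html/
  volumes:
  - name: html
    nfs:                    # Storage volume type is nfs
      path: /data/          # Exported path from /etc/exports
      server: 50.1.1.111    # Assumed NFS server address (taken from the showmount check)
```

Unlike emptyDir, the data lives on the NFS server, so it survives deletion of the Pod and is visible from whichever node the Pod is scheduled to.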

4. Using NFS with PV and PVC

4.1 Introduction to PV definition

    [root@k8s-master ~]# kubectl explain pv    # View how a PV is defined
    FIELDS:
        apiVersion
        kind
        metadata
        spec

    [root@k8s-master ~]# kubectl explain pv.spec    # View the fields of the PV spec
    spec:
      nfs (defines the storage type)
        path (the exported path to mount)
        server (the NFS server name or address)
      accessModes (the access modes; the three below are used here, given as a list, so several can be defined at once)
        ReadWriteOnce (RWO): read-write by a single node
        ReadOnlyMany (ROX): read-only by multiple nodes
        ReadWriteMany (RWX): read-write by multiple nodes
      capacity (the size of the PV)
        storage (the size value)

    [root@k8s-master volumes]# kubectl explain pvc    # View how a PVC is defined
    KIND:     PersistentVolumeClaim
    VERSION:  v1
    FIELDS:
        apiVersion <string>
        kind <string>
        metadata <Object>
        spec <Object>

    [root@k8s-master volumes]# kubectl explain pvc.spec
    spec:
      accessModes (the requested access modes; must be a subset of the access modes of the PV)
      resources (the amount of storage requested)
        requests:
          storage:

4.2 Configure nfs storage

    mkdir -p /data/v{1,2,3}    # Create one export directory per PV
    vim /etc/exports

    /data/v1 192.168.0.0/24(rw,no_root_squash)
    /data/v2 192.168.0.0/24(rw,no_root_squash)
    /data/v3 192.168.0.0/24(rw,no_root_squash)

4.3 Define three PVs

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv001
      labels:
        name: pv001
    spec:
      nfs:
        path: /data/v1
        server: 50.1.1.111
      accessModes: ["ReadWriteMany", "ReadWriteOnce"]
      capacity:
        storage: 1Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv002
      labels:
        name: pv002
    spec:
      nfs:
        path: /data/v2
        server: 50.1.1.111
      accessModes: ["ReadWriteMany", "ReadWriteOnce"]
      capacity:
        storage: 2Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv003
      labels:
        name: pv003
    spec:
      nfs:
        path: /data/v3
        server: 50.1.1.111
      accessModes: ["ReadWriteMany", "ReadWriteOnce"]
      capacity:
        storage: 5Gi

4.4 Create a PVC

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mypvc                       # Name the Pod uses to reference this claim
    spec:
      accessModes: ["ReadWriteMany"]    # Requested access mode; only a PV whose access modes include it can match
      resources:
        requests:
          storage: 3Gi                  # Request 3Gi; only a PV of 3Gi or more can match (of the three PVs above, pv003)
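The PVs in section 4.3 each carry a `name` label, but the claim above never uses it. As a hedged variation, a claim can add a label selector so that it only binds to a PV with matching labels; the requested capacity and access mode still have to be satisfied by that PV. The claim name below (mypvc-pv003) is hypothetical and is not part of the original walkthrough.

```yaml
# A sketch only: the claim name "mypvc-pv003" is hypothetical
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc-pv003
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 3Gi
  selector:
    matchLabels:
      name: pv003    # Label set on pv003 in section 4.3
```

Without a selector, Kubernetes picks any available PV that satisfies the request, which is sufficient for the example in this section.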

    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: cs-hostpath
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: html
        persistentVolumeClaim:    # Storage volume type is a PVC
          claimName: mypvc        # Name of the PVC to use ("mypvc")

Configuring container applications: Secret and ConfigMap

Secret: Used to pass sensitive information to a Pod, such as passwords, private keys, and certificate files. Such information is easily leaked if it is defined directly in the container, so the Secret resource lets users store it in the cluster and mount it into a Pod, decoupling sensitive data from the system that uses it.

ConfigMap: Mainly used to inject non-sensitive data into a Pod. The user stores the data directly in a ConfigMap object, and the Pod then references it through a ConfigMap volume, so that the container's configuration files are defined and managed centrally.

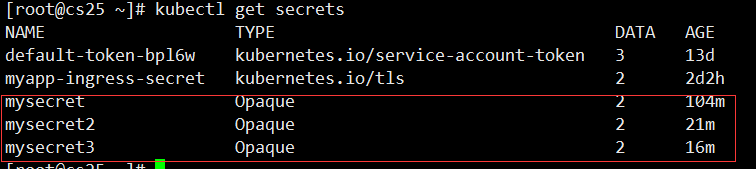

Create with --from-literal

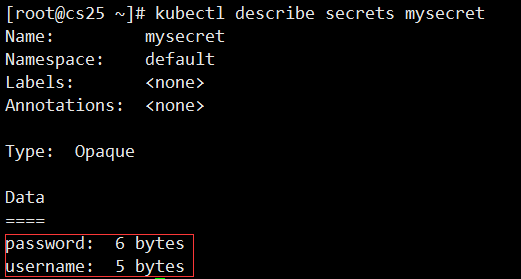

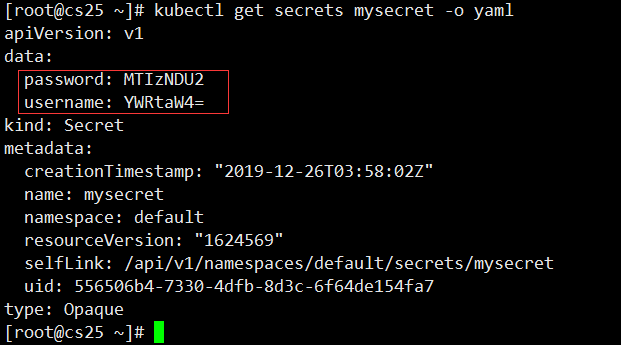

    kubectl create secret generic mysecret --from-literal=username=admin --from-literal=password=123456    # Create a Secret named mysecret

Create with --from-file

Each file's content corresponds to one entry.

    [root@cs25 ~]# echo "admin" > username
    [root@cs25 ~]# echo "123456" > password
    kubectl create secret generic mysecret2 --from-file=username --from-file=password

Create with --from-env-file

Each Key=Value line in the file env.txt corresponds to one entry.

    [root@cs25 ~]# cat << EOF > env.txt
    > username=admin
    > password=12345
    > EOF
    kubectl create secret generic mysecret3 --from-env-file=env.txt

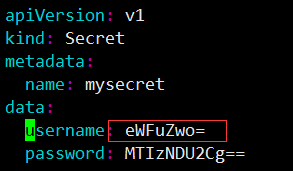

Through the YAML configuration file:

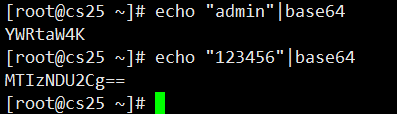

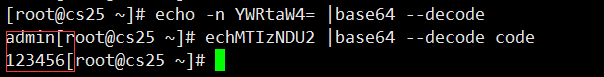

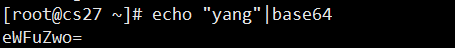

First encode the Secret data, such as the password, with the base64 command.

    vim secret.yaml

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysecretyaml
    data:                      # key: base64-encoded value
      username: YWRtaW4K
      password: MTIzNDU2Cg==

View the Secret and decode its values:

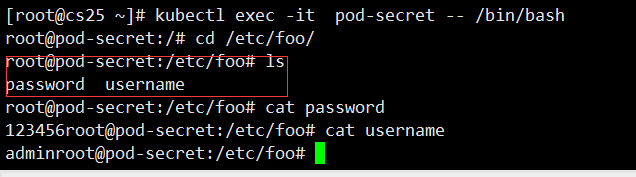
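One detail worth noting: the two encoded values above decode to admin and 123456 each followed by a newline character, which is what typically happens when a value is piped into base64 from echo without -n. A hedged alternative that avoids manual encoding is the `stringData` field of the Secret object, which accepts plain text and is encoded by the API server. The Secret name below (mysecret-plain) is hypothetical.

```yaml
# A sketch only: the Secret name "mysecret-plain" is hypothetical
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-plain
type: Opaque
stringData:              # Plain-text values; the API server stores them base64-encoded under "data"
  username: admin
  password: "123456"     # Quoted so that YAML treats the value as a string, not a number
```

If base64 is used by hand instead, encoding with `echo -n` or `printf` keeps the trailing newline out of the stored value.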

Use secret

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-secret
    spec:
      containers:
      - name: pod-secret
        image: nginx
        volumeMounts:
        - name: foo                # Which storage volume to mount
          mountPath: "/etc/foo"    # Mount path inside the container
      volumes:
      - name: foo                  # Storage volume name, referenced by the container above
        secret:                    # Storage volume type
          secretName: mysecret     # Which Secret to use

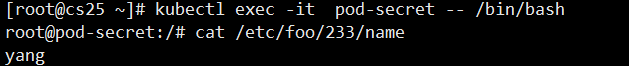

Use kubectl exec to enter the container and cd to /etc/foo to see the keys and values of the mysecret Secret we created.

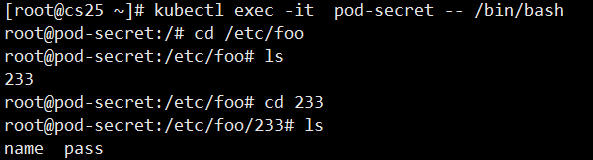

Redirect data

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-secret
    spec:
      containers:
      - name: pod-secret
        image: nginx
        volumeMounts:
        - name: foo
          mountPath: "/etc/foo"
      volumes:
      - name: foo
        secret:
          secretName: mysecret
          items:                   # Customize the storage path and file name
          - key: username
            path: 233/name         # Path and file name for this key; note that path must be relative
          - key: password
            path: 233/pass

Now the data is redirected to the folder '/etc/foo/233'.

A Secret mounted as a volume in this way also supports dynamic updates: after the Secret is updated, the data in the container is updated as well.

vim secret.yaml

kubectl apply -f secret.yaml

Log in to the container and see that the value of name has changed.

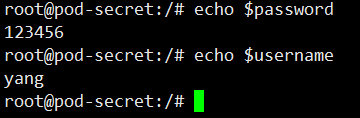

Passing keys through environment variables

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-secret
    spec:
      containers:
      - name: pod-secret
        image: nginx
        env:
        - name: username           # Environment variable passed into the container
          valueFrom:
            secretKeyRef:
              name: mysecret       # Which Secret to use
              key: username        # Which key to use
        - name: password
          valueFrom:
            secretKeyRef:
              name: mysecret
              key: password

Use kubectl exec to log in to the container and echo the environment variables.

The Secret's data is read successfully through the environment variables username and password defined in the manifest above.

Note that reading a Secret through environment variables is convenient, but it does not support dynamic Secret updates.

Secret provides a Pod with sensitive data such as passwords, tokens, and private keys; ConfigMap is for non-sensitive data such as the application's configuration.

ConfigMap supports all of the creation and usage methods shown above for Secret, so they are not repeated with separate examples.
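Since the ConfigMap variants are not shown, here is a minimal sketch of one of them: consuming a ConfigMap key as an environment variable, which mirrors the secretKeyRef example with configMapKeyRef. All names in it (app-config, log_level, pod-configmap-env, LOG_LEVEL) are hypothetical and chosen only for illustration.

```yaml
# A sketch only: every name in this manifest is hypothetical
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  log_level: info
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-configmap-env
spec:
  containers:
  - name: pod-configmap-env
    image: nginx
    env:
    - name: LOG_LEVEL              # Environment variable passed into the container
      valueFrom:
        configMapKeyRef:           # ConfigMap counterpart of secretKeyRef
          name: app-config         # Which ConfigMap to use
          key: log_level           # Which key to use
```

As with Secrets, values injected through environment variables are fixed when the container starts and do not follow later changes to the ConfigMap.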

A ConfigMap example

    vim www.conf    # Create the nginx configuration file

    server {
        server_name www.233.com;
        listen 8860;
        root /data/web/html;
    }

    kubectl create configmap configmap-cs --from-file=www.conf
    # Creates a ConfigMap resource named "configmap-cs" whose content is the www.conf file created above

    vim nginx-configmap.yaml    # Create the Pod manifest

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-secret
    spec:
      containers:
      - name: pod-secret
        image: nginx
        volumeMounts:
        - name: nginxconf              # Mount the storage volume named "nginxconf"
          mountPath: /etc/nginx/conf.d/
      volumes:
      - name: nginxconf                # Define the storage volume
        configMap:                     # Storage volume type is configMap
          name: configmap-cs           # Use the ConfigMap resource "configmap-cs"

    kubectl apply -f nginx-configmap.yaml    # Create and start the Pod

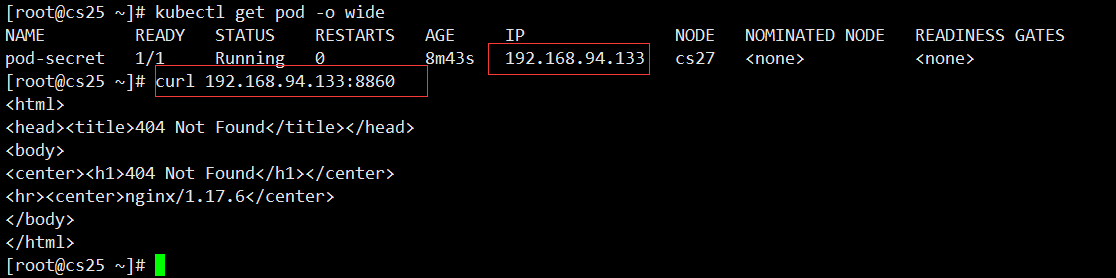

Look up the Pod's IP and access the port just defined (8860); if the request is answered normally, the configuration file is in effect.

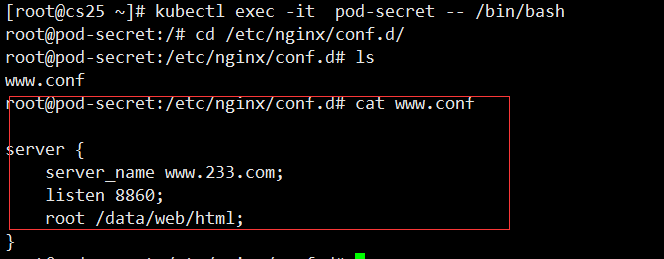

Log in to view the file

    kubectl edit configmaps configmap-cs
    # This command edits the resource in place; the editor is used the same way as vim

Pod mount process

The Pod first mounts the storage volume named "nginxconf". The contents of the "nginxconf" volume are generated from the ConfigMap resource "configmap-cs". That resource, in turn, was generated by reading the www.conf file.
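To make the last step of that chain concrete, the following is a sketch of the ConfigMap object that the earlier `kubectl create configmap configmap-cs --from-file=www.conf` command is expected to produce: the file name becomes the key and the file content becomes the value. The output of `kubectl get configmap configmap-cs -o yaml` on a real cluster would additionally contain server-generated metadata.

```yaml
# A sketch of the resulting object, written as a declarative manifest
apiVersion: v1
kind: ConfigMap
metadata:
  name: configmap-cs
data:
  # Key is the file name; value is the content of www.conf
  www.conf: |
    server {
        server_name www.233.com;
        listen 8860;
        root /data/web/html;
    }
```

When this ConfigMap is mounted at /etc/nginx/conf.d/, each key appears there as a file, which is why nginx picks up www.conf.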