All of the following operations are carried out on the master node.

Server role assignment

| Role | IP address | Installed components |
|---|---|---|
| master | 192.168.142.220 | kube-apiserver kube-controller-manager kube-scheduler etcd |
| node1 | 192.168.142.136 | kubelet kube-proxy docker flannel etcd |
| node2 | 192.168.142.132 | kubelet kube-proxy docker flannel etcd |
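The addresses in this table recur throughout the configuration that follows. As a small sketch, they can be kept in one place and the etcd endpoint list derived from them. The names `MASTER_IP`, `NODE_IPS` and `etcd_endpoints` are illustrative and not part of the original setup; port 2379 is taken from the `--etcd-servers` flag used in the apiserver options in this walkthrough.

```shell
# Hypothetical helper: addresses copied from the role table.
MASTER_IP="192.168.142.220"
NODE_IPS="192.168.142.136 192.168.142.132"

# Print a comma-separated list of https://IP:2379 endpoints.
etcd_endpoints() {
  out=""
  for ip in "$@"; do
    out="${out:+$out,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

# NODE_IPS is intentionally unquoted so it splits into two arguments.
etcd_endpoints "$MASTER_IP" $NODE_IPS
```

The printed string can be pasted into the `--etcd-servers` flag instead of typing the three URLs by hand.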

1, API server service deployment

Create the apiserver working directory

[root@master k8s]# pwd

/k8s

[root@master k8s]# mkdir apiserver

[root@master k8s]# cd apiserver/

Create the CA certificate (mind the path: run the following commands inside /k8s/apiserver)

//Define the CA signing policy in the ca-config.json configuration file

[root@master apiserver]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF
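Both expiry values in ca-config.json are given in hours. A quick arithmetic check, assuming 365-day years, shows how long the issued certificates stay valid:

```shell
# 87600h is the expiry used in ca-config.json.
hours=87600
echo "$(( hours / 24 )) days"
echo "$(( hours / 24 / 365 )) years"
```

This works out to 3650 days, or ten years.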

//Create the CA certificate signing request (CSR) file

[root@master apiserver]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

//Sign the certificate (generates ca.pem and ca-key.pem)

[root@master apiserver]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Establish the apiserver communication certificate

//Define the apiserver certificate signing request. JSON does not allow comments, so the host entries are explained here rather than inside the file; change the addresses to match your environment

//192.168.142.220 is master1, 192.168.142.120 is master2 (for the later two-master setup), 192.168.142.20 is the VIP, 192.168.142.130 is the nginx load balancer (master), 192.168.142.140 is the load balancer (backup)

[root@master apiserver]# cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.142.220",

"192.168.142.120",

"192.168.142.20",

"192.168.142.130",

"192.168.142.140",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF
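JSON has no comment syntax, so any `//` annotation left inside a CSR file makes it invalid JSON, and cfssl should be expected to reject it. A minimal sketch of a pre-flight check using only grep; the function name `find_json_comments` is illustrative, and the demo runs against a throwaway file rather than the real server-csr.json.

```shell
# Illustrative helper: print lines that carry a //-style comment.
# It matches // only at line start or after whitespace or a comma,
# so URLs such as https://host are not flagged.
find_json_comments() {
  grep -nE '(^|[[:space:],])//' "$1"
}

# Demo on a temporary file.
tmp=$(mktemp)
printf '%s\n' '{' '"hosts": [' '"10.0.0.1", //example note' '"127.0.0.1"' ']' '}' > "$tmp"
if find_json_comments "$tmp"; then
  echo "remove the comments listed above before running cfssl"
fi
rm -f "$tmp"
```

Against the real file the call would be `find_json_comments server-csr.json`; no output and a non-zero exit status mean no comments were found.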

//Certificate signature (generate server.pem server-key.pem)

[root@master apiserver]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes server-csr.json | cfssljson -bare server

Establish the admin certificate

[root@master apiserver]# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

//Sign the certificate (generates admin.pem and admin-key.pem)

[root@master apiserver]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

Establish the kube-proxy certificate

[root@master apiserver]# cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

//Certificate signature (generate kube-proxy.pem kube-proxy-key.pem)

[root@master apiserver]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

A total of 8 certificate files should now exist

[root@master apiserver]# ls *.pem

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem
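Rather than comparing the listing by eye, the eight files can be checked in a loop. This is a sketch only; the function name `check_certs` is illustrative, and the file names are the ones produced by the four `cfssljson -bare` commands in this section.

```shell
# Illustrative check: report any expected PEM file that is missing or empty.
check_certs() {
  dir=$1
  missing=0
  for name in ca server admin kube-proxy; do
    for f in "$name.pem" "$name-key.pem"; do
      if [ ! -s "$dir/$f" ]; then
        echo "missing or empty: $dir/$f"
        missing=1
      fi
    done
  done
  return "$missing"
}

# Example call: check_certs /k8s/apiserver
```

The function returns 0 only when all eight files are present and non-empty.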

Copy the certificates and binaries

//Create the storage directories

[root@master apiserver]# mkdir -p /opt/kubernetes/{bin,ssl,cfg}

[root@master apiserver]# cp -p *.pem /opt/kubernetes/ssl/

//Unpack the server package and copy the binaries

[root@master k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# cd kubernetes/server/bin/

[root@master bin]# cp -p kube-apiserver kubectl /opt/kubernetes/bin/

Create the token file

[root@master bin]# cd /opt/kubernetes/cfg

//Generate random token

[root@master cfg]# export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
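The pipeline above reads 16 random bytes and prints them as hexadecimal, so the resulting token should be 32 hex characters long. A small sanity check that can be run before the token is written to token.csv; the variable name `token` is illustrative and the command is the same one used for `BOOTSTRAP_TOKEN`.

```shell
# Same pipeline as above, stored in a scratch variable.
token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

# Reject anything that is not lowercase hex, then report the length.
case "$token" in
  *[!0-9a-f]*) echo "unexpected characters in token" ;;
  *) echo "token length: ${#token}" ;;
esac
```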

[root@master cfg]# cat > token.csv << EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

Create the apiserver startup script (systemd unit)

[root@master cfg]# vim /usr/lib/systemd/system/kube-apiserver.service

//Write the following content manually

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

//Set the executable bit so the file is easy to identify (optional; systemd does not require it)

[root@master cfg]# chmod +x /usr/lib/systemd/system/kube-apiserver.service

Create the apiserver configuration file

[root@master ssl]# vim /opt/kubernetes/cfg/kube-apiserver

//Write the following content manually; change the IP addresses to match your environment

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.142.220:2379,https://192.168.142.136:2379,https://192.168.142.132:2379 \

--bind-address=192.168.142.220 \

--secure-port=6443 \

--advertise-address=192.168.142.220 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

[root@master ssl]# mkdir -p /var/log/kubernetes/apiserver
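Several of these flags point at certificate, key and token files, and the apiserver cannot start if one of them is missing. A rough sketch that extracts every `=/absolute/path` value from an options file and reports the ones that do not exist; the function name `check_option_paths` is illustrative, and the pattern assumes that paths contain no spaces.

```shell
# Illustrative helper: list option values that look like absolute paths
# but do not exist on disk. URLs such as https://... are not matched
# because the pattern requires the value to start with a slash.
check_option_paths() {
  status=0
  for p in $(grep -oE '=/[^ "\\]+' "$1" | cut -c2-); do
    if [ ! -e "$p" ]; then
      echo "not found: $p"
      status=1
    fi
  done
  return "$status"
}

# Example call: check_option_paths /opt/kubernetes/cfg/kube-apiserver
```

A zero exit status means every referenced path exists; it does not verify that the files contain valid certificates.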

Start the apiserver service

[root@master cfg]# systemctl daemon-reload

[root@master cfg]# systemctl start kube-apiserver

[root@master cfg]# systemctl status kube-apiserver

[root@master cfg]# systemctl enable kube-apiserver

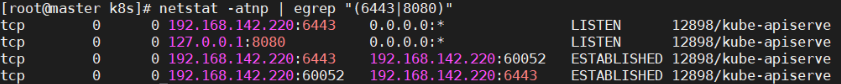

Check that the service has started

//6443 is the secure HTTPS port; 8080 is the insecure HTTP port, bound to localhost only

[root@master bin]# netstat -atnp | egrep "(6443|8080)"

tcp 0 0 192.168.142.220:6443 0.0.0.0:* LISTEN 12898/kube-apiserve

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 12898/kube-apiserve

tcp 0 0 192.168.142.220:6443 192.168.142.220:60052 ESTABLISHED 12898/kube-apiserve

tcp 0 0 192.168.142.220:60052 192.168.142.220:6443 ESTABLISHED 12898/kube-apiserve
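To pick out only the listening sockets from output like the above, the LISTEN rows can be filtered with awk. This sketch reads netstat-style text on standard input; the function name `listening_addrs` is illustrative, and the column positions assume the `netstat -atnp` layout shown here.

```shell
listening_addrs() {
  # Column 4 is the local address, column 6 the socket state.
  awk '$6 == "LISTEN" { print $4 }'
}

# Sample rows copied from the output above.
listening_addrs <<'EOF'
tcp 0 0 192.168.142.220:6443 0.0.0.0:* LISTEN 12898/kube-apiserve
tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 12898/kube-apiserve
tcp 0 0 192.168.142.220:6443 192.168.142.220:60052 ESTABLISHED 12898/kube-apiserve
EOF
```

On the master itself the same filter can be applied directly: `netstat -atnp | listening_addrs`.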

2, Controller manager service deployment

Copy the controller-manager binary

[root@master bin]# pwd

/k8s/kubernetes/server/bin

//Copy the binary

[root@master bin]# cp -p kube-controller-manager /opt/kubernetes/bin/

Write the kube-controller-manager configuration file

[root@master bin]# cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem"

EOF
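Because the heredoc delimiter is unquoted, the shell treats each backslash followed by a newline as a line continuation and removes it, so the options end up on a single line in the written file. This is harmless here, but it explains why the file looks different from the command that created it. A small demonstration on a temporary file; `DEMO_OPTS` is a made-up name.

```shell
tmp=$(mktemp)

# Unquoted delimiter: backslash-newline pairs are consumed by the shell.
cat <<EOF >"$tmp"
DEMO_OPTS="--logtostderr=true \
--v=4 \
--leader-elect=true"
EOF

cat "$tmp"
echo "lines written: $(wc -l < "$tmp" | tr -d ' ')"
```

Quoting the delimiter (`<<'EOF'`) would keep the backslashes and line breaks as typed.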

Write the kube-controller-manager startup script (systemd unit)

[root@master bin]# cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF
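The backslash before `$KUBE_CONTROLLER_MANAGER_OPTS` matters: inside an unquoted heredoc the shell expands variables, so without the escape an empty string would be written in place of the variable reference that systemd is meant to resolve from the EnvironmentFile. A demonstration with a throwaway variable; `DEMO_OPTS` and `/bin/demo` are made-up names.

```shell
# Empty in the current shell, as the real OPTS variable would be.
DEMO_OPTS=""
tmp=$(mktemp)

cat <<EOF >"$tmp"
escaped:   ExecStart=/bin/demo \$DEMO_OPTS
unescaped: ExecStart=/bin/demo $DEMO_OPTS
EOF

cat "$tmp"
```

Only the escaped line keeps the literal `$DEMO_OPTS` text in the file.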

Start the service

//Reload systemd, start the service and enable it at boot

[root@master cfg]# chmod +x /usr/lib/systemd/system/kube-controller-manager.service

[root@master cfg]# systemctl daemon-reload

[root@master cfg]# systemctl start kube-controller-manager

[root@master cfg]# systemctl status kube-controller-manager

[root@master cfg]# systemctl enable kube-controller-manager

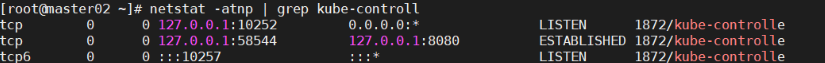

Check that the service has started

[root@master bin]# netstat -atnp | grep kube-controll

tcp 0 0 127.0.0.1:10252 0.0.0.0:* LISTEN 12964/kube-controll

tcp6 0 0 :::10257 :::* LISTEN 12964/kube-controll

3, Scheduler service deployment

Copy the scheduler binary

[root@master bin]# pwd

/k8s/kubernetes/server/bin

//Copy the binary

[root@master bin]# cp -p kube-scheduler /opt/kubernetes/bin/

Write the configuration file

[root@master bin]# cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect"

EOF

Write the startup script (systemd unit)

[root@master bin]# cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

Start the service

[root@master bin]# chmod +x /usr/lib/systemd/system/kube-scheduler.service

[root@master bin]# systemctl daemon-reload

[root@master bin]# systemctl start kube-scheduler

[root@master bin]# systemctl status kube-scheduler

[root@master bin]# systemctl enable kube-scheduler

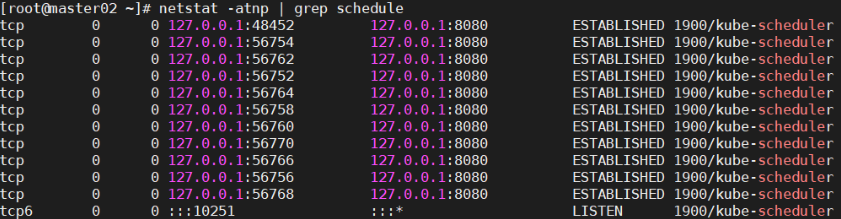

Check that the service has started

[root@master bin]# netstat -atnp | grep schedule

tcp6 0 0 :::10251 :::* LISTEN

This completes the deployment of all services that run on the master node.

//Check the status of the master components

[root@master bin]# /opt/kubernetes/bin/kubectl get cs

//If the deployment succeeded, every component reports Healthy

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}
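For scripted checks, the STATUS column of this output can be tested instead of read by eye. A sketch that reads the component status table on standard input and fails if any row is not Healthy; the function name `all_healthy` is illustrative, and the column layout is assumed to match the output shown above.

```shell
all_healthy() {
  # Skip the header row and blank lines; flag any STATUS other than Healthy.
  awk 'NR > 1 && NF > 0 && $2 != "Healthy" { bad = 1; print "unhealthy: " $1 }
       END { exit bad }'
}

# Sample rows copied from the output above.
all_healthy <<'EOF' && echo "all components healthy"
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-0 Healthy {"health":"true"}
EOF
```

On the master the same check would be `/opt/kubernetes/bin/kubectl get cs | all_healthy`.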