The previous article talked about service. This article is about ingress.

What is ingress?

The last article introduced Service and its three exposure types: ClusterIP, NodePort and LoadBalancer. All three work at the level of an individual service. A service does two things. Inside the cluster, it tracks pod changes, keeps the pod addresses in its endpoints object up to date, and gives clients a service discovery mechanism whose IP does not change when pods do. It also behaves much like a load balancer, so pods can be reached from both inside and outside the cluster. In a real production environment, however, exposing workloads through service alone is not a good fit:

ClusterIP can only be accessed within the cluster.

NodePort works well in a test environment, but once hundreds of services are running in the cluster, managing NodePort ports becomes a disaster.

LoadBalancer is limited to cloud platforms, and provisioning a load balancer such as an ELB on a cloud platform usually costs extra.

Fortunately, k8s also provides a way to expose services at the cluster level: ingress. Ingress can be loosely understood as a service for services. A separate ingress object defines the forwarding rules and routes requests to one or more services. This decouples services from request rules and lets you plan service exposure from the business perspective as a whole, instead of service by service.

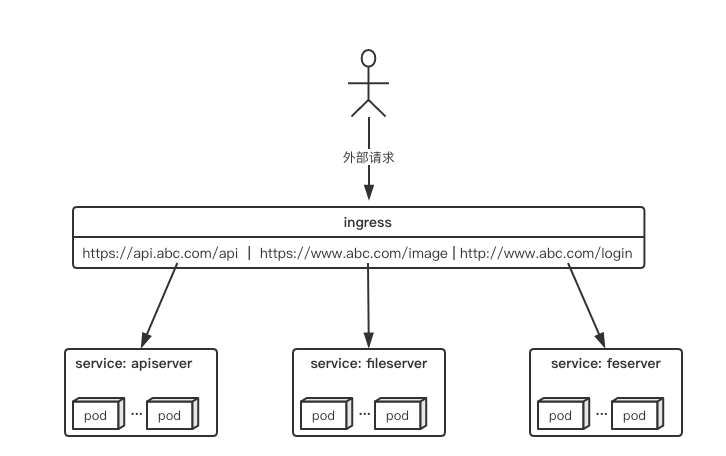

For example, suppose the cluster has three services: api, file storage and front end. The request forwarding shown in the diagram can be implemented with a single ingress object.

Ingress rules are very flexible. They can forward requests to different services according to domain name and path, and they support both HTTP and HTTPS.

Ingress and ingress-controller

To understand ingress, two concepts need to be distinguished, the ingress object and the ingress-controller:

- ingress object:

An API object in k8s, usually written in YAML. It defines the rules for how requests are forwarded to services and can be thought of as a configuration template.

- ingress-controller:

The program that actually performs reverse proxying and load balancing. It parses the rules defined by ingress objects and forwards requests according to them.

Simply put, the ingress-controller is the component that does the actual forwarding. It is exposed at the cluster entrance in one of several ways, and external traffic to the cluster reaches the ingress-controller first. The ingress object tells the ingress-controller how to forward requests: which domain name and which path go to which service, and so on.

ingress-controller

The ingress-controller is not a built-in component of k8s. In fact, ingress-controller is only a general term, and users can choose among different implementations. At the time of writing, only the GCE controller for Google Cloud and ingress-nginx are maintained by the k8s project; many others are maintained by third parties, as listed in the official documentation. Whatever the implementation, the mechanism is much the same and only the specific configuration differs. Generally, an ingress-controller is a pod running a daemon and a reverse proxy. The daemon continuously watches the cluster for changes, generates configuration from the ingress objects and applies it to the reverse proxy. ingress-nginx, for example, dynamically generates the nginx configuration, dynamically updates upstreams, and reloads nginx when a new configuration requires it. For convenience, the examples below all use ingress-nginx, the controller maintained by the k8s community.

ingress

Ingress is an API object that, like other objects, is configured through a YAML file. It exposes services inside the cluster over HTTP or HTTPS, giving them externally reachable URLs, load balancing, SSL/TLS termination and name-based virtual hosting. Ingress relies on an ingress-controller to deliver these functions. Expressed as an ingress, the diagram in the previous section looks roughly like this:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: abc-ingress
      annotations:
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/use-regex: "true"
    spec:
      tls:
      - hosts:
        - api.abc.com
        secretName: abc-tls
      rules:
      - host: api.abc.com
        http:
          paths:
          - backend:
              serviceName: apiserver
              servicePort: 80
      - host: www.abc.com
        http:
          paths:
          - path: /image/*
            backend:
              serviceName: fileserver
              servicePort: 80
      - host: www.abc.com
        http:
          paths:
          - backend:
              serviceName: feserver
              servicePort: 8080

Like other k8s objects, the ingress configuration contains the key fields apiVersion, kind, metadata and spec. Two parts of spec deserve attention: tls defines the certificate and key used for HTTPS, and rules specifies the request routing rules. The metadata.annotations field is also worth noting, because annotations matter a great deal in ingress configuration. As mentioned earlier, there are many ingress-controller implementations. Each controller uses the kubernetes.io/ingress.class annotation to decide which ingress objects it should handle, and each also defines its own annotations for customizing parameters. For example, nginx.ingress.kubernetes.io/use-regex: "true" in the configuration above ends up in the generated nginx configuration, where it enables regular-expression matching of paths.
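The manifest above uses the extensions/v1beta1 API, which matches the cluster version used in this article. That API version of Ingress was removed in Kubernetes 1.22, so on a newer cluster the same rules would have to be written against networking.k8s.io/v1. The following is a minimal sketch of that translation, not a tested configuration. It assumes an IngressClass named nginx exists in the cluster, it folds the two www.abc.com rules into one, and it maps the original /image/* path to a Prefix match on /image, which is an interpretation of the original intent.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: abc-ingress
spec:
  # Replaces the kubernetes.io/ingress.class annotation.
  # Assumption: an IngressClass called "nginx" is installed.
  ingressClassName: nginx
  tls:
  - hosts:
    - api.abc.com
    secretName: abc-tls
  rules:
  - host: api.abc.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: apiserver
            port:
              number: 80
  - host: www.abc.com
    http:
      paths:
      # Assumed equivalent of the original /image/* rule.
      - path: /image
        pathType: Prefix
        backend:
          service:
            name: fileserver
            port:
              number: 80
      # Everything else on this host goes to the front end.
      - path: /
        pathType: Prefix
        backend:
          service:
            name: feserver
            port:
              number: 8080
```

The main differences are that pathType is mandatory in the v1 API and that the backend is written as service.name and service.port instead of serviceName and servicePort.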

Deployment of ingress

There are two aspects to consider in the deployment of ingress:

- The ingress-controller runs as a pod, so which deployment method suits it best?

- Ingress solves how requests are routed inside the cluster, so how should the ingress-controller itself best be exposed to the outside?

Here are some common deployment and exposure modes, and which one to use depends on the actual needs.

Deployment + LoadBalancer Service

If you want to deploy ingress in a public cloud, this is a good choice. Deploy the ingress-controller with a Deployment and create a service of type LoadBalancer that selects those pods. Most public clouds automatically create a load balancer for a LoadBalancer service, usually with a public address bound to it. Once the domain name resolves to that address, the cluster's services are exposed externally.
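As a rough illustration of this mode, the service in front of the controller pods could look like the sketch below. The selector labels and namespace are taken from the ingress-nginx manifests shown later in this article; the service name ingress-nginx is an assumption based on the controller's --publish-service argument. Cloud-specific annotations are omitted because they differ per provider.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx            # assumed name, see --publish-service
  namespace: ingress-nginx
spec:
  # The cloud provider provisions an external load balancer for this type.
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  ports:
  - name: http
    port: 80
    targetPort: 80
  - name: https
    port: 443
    targetPort: 443
```

Once the provider has assigned an address, it appears in the EXTERNAL-IP column of kubectl get svc -n ingress-nginx, and that is the address the domain name should point to.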

Deployment + NodePort Service

Here too the ingress-controller is deployed with a Deployment and a corresponding service is created, but its type is NodePort. The ingress is then exposed on a specific port of every cluster node's IP. Because NodePort assigns ports from a high range (30000-32767 by default, chosen at random unless specified) rather than 80/443, a load balancer is usually placed in front to forward requests. This approach is generally used where the hosts are relatively fixed and their IP addresses do not change.

Although NodePort is a simple and convenient way to expose ingress, it adds a layer of NAT, which may affect performance when request volume is high.
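A NodePort service for the controller might be sketched as follows. Pinning nodePort to fixed values keeps the configuration of the front load balancer stable; the values 30080 and 30443 are arbitrary examples within the default range, and the service name is an assumption as before.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx            # assumed name
  namespace: ingress-nginx
spec:
  type: NodePort
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080              # example value; omit to get a random port
  - name: https
    port: 443
    targetPort: 443
    nodePort: 30443              # example value; omit to get a random port
```

The external load balancer would then forward ports 80 and 443 to these node ports on each node.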

DaemonSet+HostNetwork+nodeSelector

Use a DaemonSet together with a nodeSelector to deploy the ingress-controller onto specific nodes, then use hostNetwork to attach the pod directly to the host node's network, so the service can be reached on ports 80/443 of the host. The node running the ingress-controller then closely resembles an edge node in a traditional architecture, such as the nginx server at the entrance of a data center. This mode has the shortest request path and performs better than NodePort mode. The drawback is that, because the pod uses the host node's network and ports directly, each node can run only one ingress-controller pod. This mode suits high-concurrency production environments.

ingress test

Let's actually deploy ingress and run a simple test. Two services, gowebhost and gowebip, have been deployed in the test cluster; they respond to each request with the container's hostname and IP. The test creates an ingress that reaches these two services through different paths of one domain name.

The test uses ingress-nginx from the k8s community, deployed in DaemonSet + hostNetwork mode.

Deploy ingress-controller

Deployment of ingress-controller pod and related resources

According to the official documentation, deployment only requires applying one YAML file:

https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

mandatory.yaml creates many resources, including the namespace, ConfigMaps, roles and ServiceAccount: everything needed to deploy the ingress-controller. It is too long to reproduce in full, so let's focus on the Deployment section:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          serviceAccountName: nginx-ingress-serviceaccount
          containers:
            - name: nginx-ingress-controller
              image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.25.0
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 33
                runAsUser: 33
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
                - name: http
                  containerPort: 80
                - name: https
                  containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10

You can see that the pod runs the image "quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.25.0" with a number of startup arguments. It opens two ports, 80 and 443, and its health checks run against port 10254.

To deploy with a DaemonSet onto a specific node, some of this configuration has to change. First, label the node that will run ingress-nginx; in this test that node is "node-1".

$ kubectl label node node-1 isIngress="true"

Then modify the Deployment section of mandatory.yaml as follows:

    # Change the API version and kind
    # apiVersion: apps/v1
    # kind: Deployment
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      # Remove replicas
      # replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          serviceAccountName: nginx-ingress-serviceaccount
          # Select the nodes carrying the label
          nodeSelector:
            isIngress: "true"
          # Expose the service through the host network
          hostNetwork: true
          containers:
            - name: nginx-ingress-controller
              image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.25.0
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 33
                runAsUser: 33
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
                - name: http
                  containerPort: 80
                - name: https
                  containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
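One detail worth checking with hostNetwork: a pod on the host network uses the node's DNS settings by default, so it may be unable to resolve cluster-internal names. Kubernetes provides the dnsPolicy value ClusterFirstWithHostNet for this case. The fragment below is only a sketch of where that field would sit in the pod template. The manifest in this article does not set it, and whether it is needed depends on the cluster and on whether the controller has to resolve in-cluster names.

```yaml
# Fragment of the DaemonSet pod template, not a complete manifest.
spec:
  template:
    spec:
      nodeSelector:
        isIngress: "true"
      hostNetwork: true
      # Assumption: cluster DNS should be used inside the host-network pod.
      dnsPolicy: ClusterFirstWithHostNet
```

On Kubernetes 1.16 and later the DaemonSet would also need apiVersion: apps/v1, because extensions/v1beta1 no longer serves DaemonSet.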

Apply the modified file and check the result:

    $ kubectl apply -f mandatory.yaml
    namespace/ingress-nginx created
    configmap/nginx-configuration created
    configmap/tcp-services created
    configmap/udp-services created
    serviceaccount/nginx-ingress-serviceaccount created
    clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
    role.rbac.authorization.k8s.io/nginx-ingress-role created
    rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
    daemonset.extensions/nginx-ingress-controller created

    # Check deployment
    $ kubectl get daemonset -n ingress-nginx
    NAME                       DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR    AGE
    nginx-ingress-controller   1         1         1       1            1           isIngress=true   101s

    $ kubectl get po -n ingress-nginx -o wide
    NAME                             READY   STATUS    RESTARTS   AGE    IP               NODE     NOMINATED NODE   READINESS GATES
    nginx-ingress-controller-fxx68   1/1     Running   0          117s   172.16.201.108   node-1   <none>           <none>

As you can see, the nginx-ingress-controller pod has been deployed on node-1.

Exposing the nginx controller

Check the listening ports on node-1:

    [root@node-1 ~]# netstat -lntup | grep nginx
    tcp        0      0 0.0.0.0:80       0.0.0.0:*    LISTEN    2654/nginx: master
    tcp        0      0 0.0.0.0:8181     0.0.0.0:*    LISTEN    2654/nginx: master
    tcp        0      0 0.0.0.0:443      0.0.0.0:*    LISTEN    2654/nginx: master
    tcp6       0      0 :::10254         :::*         LISTEN    2632/nginx-ingress-
    tcp6       0      0 :::80            :::*         LISTEN    2654/nginx: master
    tcp6       0      0 :::8181          :::*         LISTEN    2654/nginx: master
    tcp6       0      0 :::443           :::*         LISTEN    2654/nginx: master

Because hostNetwork is configured, nginx is listening on ports 80, 443 and 8181 directly on the node host. Port 8181 is the default backend that the nginx controller configures out of the box. As long as the node host has a public IP, pointing a domain name at it is enough to expose the services to the Internet. If nginx needs to be highly available, deploy it on multiple nodes and put an LVS + keepalived layer in front for load balancing.

Another advantage of hostNetwork is that LVS in DR mode does not support port mapping. Exposing non-standard ports through NodePort would be troublesome to manage in that setup, whereas with hostNetwork nginx listens on 80/443 directly.

Configuring ingress resources

After the ingress-controller is deployed, the ingress resource is created according to the requirements of the test.

    # ingresstest.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: ingress-test
      annotations:
        kubernetes.io/ingress.class: "nginx"
        # Turn on use-regex to enable regular-expression matching of paths
        nginx.ingress.kubernetes.io/use-regex: "true"
    spec:
      rules:
      # Define the domain name
      - host: test.ingress.com
        http:
          paths:
          # Forward different paths to different services
          - path: /ip
            backend:
              serviceName: gowebip-svc
              servicePort: 80
          - path: /host
            backend:
              serviceName: gowebhost-svc
              servicePort: 80
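The backends named in this manifest, gowebip-svc and gowebhost-svc, have to exist as services in the same namespace as the ingress. The article does not show them, so the following is only a guess at their shape. The pod labels (app: gowebip, app: gowebhost) and the container port are hypothetical; only the service names and port 80 come from the ingress above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gowebip-svc
spec:
  selector:
    app: gowebip          # hypothetical pod label
  ports:
  - port: 80              # matches servicePort in the ingress
    targetPort: 80        # assumed container port
---
apiVersion: v1
kind: Service
metadata:
  name: gowebhost-svc
spec:
  selector:
    app: gowebhost        # hypothetical pod label
  ports:
  - port: 80              # matches servicePort in the ingress
    targetPort: 80        # assumed container port
```

Plain ClusterIP services are enough here, because only the ingress-controller needs to reach them.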

Deploy the resource:

$ kubectl apply -f ingresstest.yaml

Test access

Once deployed, add an entry to the local hosts file so that test.ingress.com resolves to the node's IP address, then test access.

Requests to the different paths are routed to the different services, as required.

Since no custom default backend is configured, requests to any other path get a 404.

On ingress-nginx

A few more words about ingress-nginx. The example tested above is very simple. ingress-nginx has a great many configuration options, enough to fill several separate articles, but since this article is mainly about ingress itself it does not go into them. For details, refer to the official ingress-nginx documentation. There is also a lot to consider when running ingress-nginx in production; the articles listed in the references at the end summarize many best practices and are worth consulting.

Summary

- Ingress is the request entry point of a k8s cluster and can be understood as a further abstraction over multiple services.

- Generally speaking, ingress consists of two parts: the ingress resource object and the ingress-controller.

- There are many ingress-controller implementations. The one from the k8s community is ingress-nginx; choose according to your specific needs.

- There are several ways for ingress to expose itself. Choose the one that fits your infrastructure and business type.

References

Kubernetes Document

NGINX Ingress Controller Document

Introduction to the Use of Kubernetes Ingress Controller and High Availability Landing

Popular Understanding of the Functions and Relations of Service, Ingress and Ingress Controller in Kubernetes