hostNetwork: true

Test yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-hostnetwork
    spec:
      hostNetwork: true
      containers:
      - name: nginx-hostnetwork
        image: nginx:1.7.9

    # Create the pod and test it
    $ kubectl create -f nginx-hostnetwork.yaml
    $ kubectl get pod --all-namespaces -o=wide | grep nginx-hostnetwork
    default   nginx-hostnetwork   1/1   Running   0   15m   172.30.3.222   k8s-n3   <none>   <none>
    # Test
    $ curl http://k8s-n3
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    ...

HostPort

Test yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-hostport
    spec:
      containers:
      - name: nginx-hostport
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          hostPort: 8088

    # Create the pod and test it
    $ kubectl create -f nginx-hostport.yaml
    $ kubectl get pod --all-namespaces -o=wide | grep nginx-hostport
    default   nginx-hostport   1/1   Running   0   13m   10.244.4.9   k8s-n3   <none>   <none>
    # Test
    $ curl http://k8s-n3:8088
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    ...

Port Forward

Port forwarding relies on socat, a versatile relay tool that is well worth knowing.

    # The local machine must have kubectl configured for the target cluster
    # Forward local port 8099 to port 80 of the nginx-hostnetwork pod
    $ kubectl port-forward -n default nginx-hostnetwork 8099:80
    # Test by accessing localhost:8099 locally
    $ curl http://localhost:8099
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    ...
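
The `8099:80` argument above follows kubectl's `[LOCAL:]REMOTE` form. As a minimal sketch of how such an argument splits into its two sides (the function name `parse_port_spec` is hypothetical and is not part of kubectl):

```python
from typing import Optional, Tuple


def parse_port_spec(spec: str) -> Tuple[Optional[int], int]:
    """Split a port-forward argument of the form [LOCAL:]REMOTE.

    Returns (local, remote). local is None for ":80", the form in which
    kubectl chooses a free local port by itself.
    """
    local, sep, remote = spec.partition(":")
    if not sep:
        # A bare "80" uses the same port number on both sides.
        port = int(local)
        return port, port
    return (int(local) if local else None), int(remote)


print(parse_port_spec("8099:80"))
```

For the command in this section the result is local port 8099 and pod port 80.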

Service

The methods above expose a pod's application directly. That is not advisable in a real production environment; the standard approach is to use a Service.

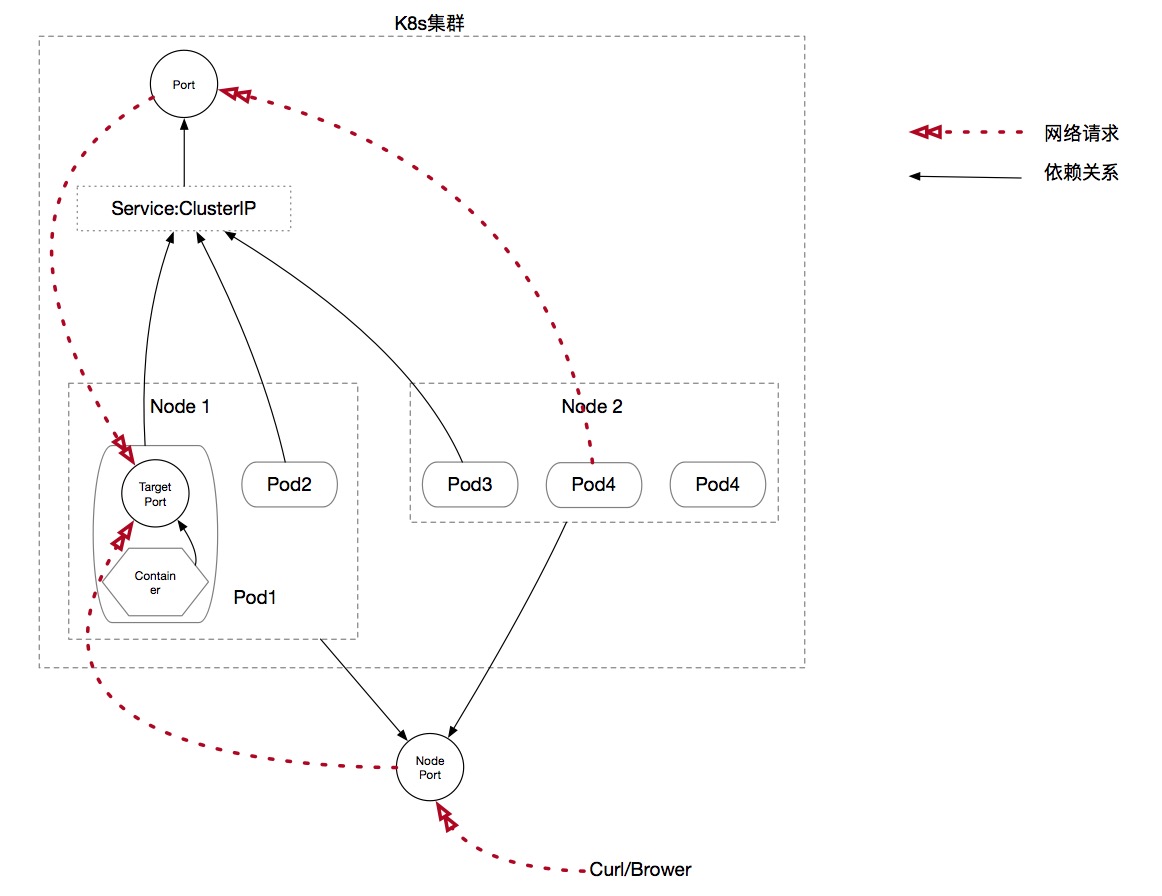

The three Service types covered here are ClusterIP, NodePort, and LoadBalancer.

First, let's look at the concept of ports in Service.

port / nodePort / targetPort

port - The port that the Service exposes on its ClusterIP, that is, the port bound to the virtual IP. Clients inside the cluster use it to reach the Service.

nodePort - The port opened on the cluster's nodes through which clients outside the cluster reach the Service.

targetPort - The port the application listens on inside the pod's container. Traffic arriving on port or nodePort is ultimately forwarded by kube-proxy to this port on a back-end pod. If targetPort is not declared explicitly, it defaults to the same value as port.
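
The defaulting rule for targetPort can be illustrated with a small sketch. It models one entry of a Service's `spec.ports` as a plain dictionary; the helper name `resolve_service_port` is hypothetical and only mirrors the rule described above, not the actual API server code.

```python
from typing import Dict, Optional


def resolve_service_port(entry: Dict[str, int]) -> Dict[str, Optional[int]]:
    """Return the effective port settings for one spec.ports entry."""
    port = entry["port"]
    return {
        "port": port,
        # targetPort falls back to the value of port when it is omitted.
        "targetPort": entry.get("targetPort", port),
        # nodePort only exists for NodePort/LoadBalancer Services.
        "nodePort": entry.get("nodePort"),
    }


print(resolve_service_port({"port": 80}))
print(resolve_service_port({"port": 443, "targetPort": 8443, "nodePort": 30026}))
```

The first call shows the fallback (targetPort becomes 80); the second keeps the explicit 8443.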

There are four ways to expose internal services: ClusterIP, NodePort, LoadBalancer, and Ingress.

ClusterIP

ClusterIP is the default Service type. The dashboard add-on deployed earlier uses it, as the following command shows:

    $ kubectl -n kube-system get svc kubernetes-dashboard -o=yaml
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kube-system"},"spec":{"ports":[{"port":80,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
      creationTimestamp: 2019-04-11T09:21:23Z
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
      resourceVersion: "314064"
      selfLink: /api/v1/namespaces/kube-system/services/kubernetes-dashboard
      uid: 2cde72a3-5c3b-11e9-892c-000c2968fc47
    spec:
      clusterIP: 10.101.229.15
      ports:
      - port: 443
        protocol: TCP
        targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      # The Service type is specified here
      type: ClusterIP
    status:
      loadBalancer: {}

A Service of this type is therefore not exposed outside the cluster by itself; from outside it can be reached only through the K8s Proxy API. The Proxy API is a special API: kube-apiserver merely proxies the HTTP requests made to it, forwarding them to the port on which the kubelet process on a node listens, and the REST API on that port answers them.

Access from outside the cluster goes through kubectl, so the external machine must have kubectl configured with credentials for the cluster (see the kubectl configuration section):

    $ kubectl proxy --port=8080
    # Access the Service through its selfLink path; note the https: prefix before the service name
    $ curl http://localhost:8080/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/
    <!doctype html>
    <html ng-app="kubernetesDashboard">
    <head>
      <meta charset="utf-8">
      <title ng-controller="kdTitle as $ctrl" ng-bind="$ctrl.title()"></title>
      <link rel="icon" type="image/png" href="assets/images/kubernetes-logo.png">
      <meta name="viewport" content="width=device-width">
      <link rel="stylesheet" href="static/vendor.93db0a0d.css">
      <link rel="stylesheet" href="static/app.ddd3b5ec.css">
    </head>
    <body ng-controller="kdMain as $ctrl">
      <!--[if lt IE 10]>
        <p class="browsehappy">You are using an <strong>outdated</strong> browser. Please <a href="http://browsehappy.com/">upgrade your browser</a> to improve your experience.</p>
      <![endif]-->
      <kd-login layout="column" layout-fill="" ng-if="$ctrl.isLoginState()"> </kd-login>
      <kd-chrome layout="column" layout-fill="" ng-if="!$ctrl.isLoginState()"> </kd-chrome>
      <script src="static/vendor.bd425c26.js"></script>
      <script src="api/appConfig.json"></script>
      <script src="static/app.91a96542.js"></script>
    </body>
    </html>
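
The proxy path used in the curl command has a regular shape: namespace, then `scheme:service:port`, then `/proxy/`. The following sketch assembles it; `service_proxy_path` is a hypothetical helper written for illustration, and the handling of an empty port (trailing colon kept) is taken from the URL shown in this section.

```python
def service_proxy_path(namespace: str, service: str,
                       scheme: str = "", port: str = "") -> str:
    """Build the API server proxy path for a Service."""
    if scheme:
        # With a scheme the form is scheme:name:port; an empty port keeps
        # the trailing colon, as in "https:kubernetes-dashboard:".
        target = f"{scheme}:{service}:{port}"
    elif port:
        target = f"{service}:{port}"
    else:
        target = service
    return f"/api/v1/namespaces/{namespace}/services/{target}/proxy/"


# Assumes kubectl proxy is listening on localhost:8080 as started above.
print("http://localhost:8080"
      + service_proxy_path("kube-system", "kubernetes-dashboard", scheme="https"))
```

The printed URL matches the one passed to curl in this section.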

Because this approach requires an authenticated kubectl on the accessing machine, it is suitable only for debugging.

NodePort

NodePort builds on ClusterIP to expose a service, but does not require kubectl on the client. The same port is opened on every node, and traffic to that port is directed to the Service's ClusterIP; from there on the path is the same as for ClusterIP. For example:

    # Expose k8s-dashboard through NodePort
    $ kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kube-system
    # Alternatively, create a separate NodePort Service, which makes it easy to choose the exposed port
    $ vi nodeport-k8s-dashboard-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-nodeport
      namespace: kube-system
    spec:
      ports:
      - port: 443
        protocol: TCP
        targetPort: 8443
        nodePort: 30026
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      type: NodePort
    status:
      loadBalancer: {}
    $ kubectl create -f nodeport-k8s-dashboard-svc.yaml
    service/kubernetes-dashboard-nodeport created
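
When choosing a nodePort by hand, the value has to fall inside the range the API server allows. The sketch below assumes the cluster keeps the kube-apiserver default `--service-node-port-range` of 30000-32767; the source does not state the range of this cluster, and `is_valid_node_port` is a hypothetical helper.

```python
# Assumption: default kube-apiserver --service-node-port-range (30000-32767).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def is_valid_node_port(node_port: int, allowed: range = DEFAULT_NODE_PORT_RANGE) -> bool:
    """Report whether node_port lies inside the allowed NodePort range."""
    return node_port in allowed


print(is_valid_node_port(30026))  # the nodePort used in the manifest above
print(is_valid_node_port(8088))   # a typical hostPort value, outside the range
```

Under that assumption, 30026 is accepted, while a value such as 8088 would be rejected when the Service is created.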

When accessing nodeIP:nodePort, requests to the node hosting the pod succeed, but requests to the other nodes are refused because of the Docker version (see "Kubernetes NodePort is not accessible"). The fix is to change the default policy of the FORWARD chain on the other nodes:

$ iptables -P FORWARD ACCEPT

The Service can then be reached:

    $ kubectl get svc --all-namespaces -o=wide | grep kubernetes-dashboard
    kube-system   kubernetes-dashboard            ClusterIP   10.96.8.148    <none>   443/TCP         8s      k8s-app=kubernetes-dashboard
    kube-system   kubernetes-dashboard-nodeport   NodePort    10.107.134.0   <none>   443:30026/TCP   4m46s   k8s-app=kubernetes-dashboard
    # Access the dashboard service from outside the cluster
    $ curl https://k8s-n1:30026
    curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none
    More details here: http://curl.haxx.se/docs/sslcerts.html
    ...

LoadBalancer

LoadBalancer can only be defined on a Service. It relies on a load balancer provided by a specific cloud provider, such as AWS, Azure, OpenStack, or GCE (Google Compute Engine), so it is skipped here.

Ingress

Unlike the previous ways of exposing cluster services, Ingress is not actually a Service. It sits in front of multiple Services and acts as a smart router or entry point into the cluster, much like the reverse proxy provided by Nginx. The officially recommended implementation is based on Nginx, so Nginx-Ingress is used here.

Ingress needs two components: Nginx, which performs the layer-7 routing, and the Ingress controller, which watches for changes to Ingress rules and updates the Nginx configuration in real time. To use Ingress in a k8s cluster you therefore need to deploy an Ingress controller pod, for which the official manifest can be used:

    # YAML manifest recommended by the official site
    $ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml
    # This manifest defines a number of resources, including Role, ConfigMap, Svc, Deployment, Namespace, and so on.
    # Note: to expose services in a different namespace, adjust the manifest to the corresponding namespace.
    # At this point the pod is running, but its Service has not been created yet
    # (no separate default-backend is needed; it is built into the nginx-ingress-controller pod)
    $ kubectl get pod -n ingress-nginx | grep ingress
    nginx-ingress-controller-5694ccb578-5j7q6   1/1   Running   0   5h
    # Create a NodePort Service to expose nginx-ingress-controller
    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx-controller-svc
      namespace: ingress-nginx
    spec:
      ports:
      - name: http
        nodePort: 32229
        port: 80
        protocol: TCP
        targetPort: 80
      - name: https
        nodePort: 31373
        port: 443
        protocol: TCP
        targetPort: 443
      selector:
        app.kubernetes.io/name: ingress-nginx
      sessionAffinity: None
      type: NodePort

The cluster-side Ingress setup is now complete. Next, expose two internal Nginx services through Ingress as a test:

    # Start two internal workloads hosting nginx 1.7 and nginx 1.8
    $ vi nginx1.7.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx1-7
      namespace: ingress-nginx
    spec:
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx1-7
    ---
    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: nginx1-7-deployment
      namespace: ingress-nginx
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx1-7
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80

    $ vi nginx1.8.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx1-8
      namespace: ingress-nginx
    spec:
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx1-8
    ---
    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: nginx1-8-deployment
      namespace: ingress-nginx
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            app: nginx1-8
        spec:
          containers:
          - name: nginx
            image: nginx:1.8
            ports:
            - containerPort: 80

    # Start them; note that the namespace must be the same as that of the nginx-ingress-controller
    $ kubectl create -f nginx1.7.yaml -f nginx1.8.yaml
    # Verify startup
    $ kubectl get pod --all-namespaces | grep nginx
    ingress-nginx   nginx1-7-deployment-6fd8995bbc-fsf9n   1/1   Running   0   5h28m
    ingress-nginx   nginx1-8-deployment-854c54cb5b-hdbk4   1/1   Running   0   5h28m
    $ kubectl get svc --all-namespaces | grep nginx
    ingress-nginx   nginx1-7   ClusterIP   10.97.67.139     <none>   80/TCP   5h36m
    ingress-nginx   nginx1-8   ClusterIP   10.103.212.106   <none>   80/TCP   5h29m

Then, configure the Ingress rules for these two services:

    $ vi test-ingress.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test
      namespace: ingress-nginx
    spec:
      rules:
      - host: n17.my.com
        http:
          paths:
          - backend:
              serviceName: nginx1-7
              servicePort: 80
      - host: n18.my.com
        http:
          paths:
          - backend:
              serviceName: nginx1-8
              servicePort: 80
    $ kubectl create -f test-ingress.yaml
    $ kubectl get ing --all-namespaces | grep nginx
    ingress-nginx   test   n17.my.com,n18.my.com   80   5h37m
    # The Ingress rules are configured; test them
    $ curl http://n17.my.com:32229
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    ...
    $ curl http://n18.my.com:32229
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    ...
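
Both curl requests hit the same NodePort (32229); what separates them is the Host header. The sketch below is a simplified model of that host-based lookup, using the two rules from test-ingress.yaml. It is not how the Nginx Ingress controller is implemented, and `pick_backend` is a hypothetical name.

```python
from typing import Dict, Optional, Tuple

# Host -> (serviceName, servicePort), copied from the rules in test-ingress.yaml.
RULES: Dict[str, Tuple[str, int]] = {
    "n17.my.com": ("nginx1-7", 80),
    "n18.my.com": ("nginx1-8", 80),
}


def pick_backend(host_header: str,
                 rules: Dict[str, Tuple[str, int]] = RULES) -> Optional[Tuple[str, int]]:
    """Return the backend for a request's Host header, or None if no rule matches."""
    # Drop an optional ":port" suffix such as ":32229" before matching.
    host = host_header.split(":", 1)[0].lower()
    # A real controller would hand unmatched hosts to its default backend;
    # this sketch simply returns None.
    return rules.get(host)


print(pick_backend("n17.my.com:32229"))
print(pick_backend("n18.my.com:32229"))
```

The two calls resolve to the nginx1-7 and nginx1-8 Services respectively, mirroring the two curl tests above.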