In the second chapter, the three master components were deployed on the master. This part deploys the node (worker) components.

Components deployed on each node: kubelet and kube-proxy.

I. Environment preparation (the following commands are run on the master)

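1. Create the directories and copy the two binaries

```bash
# Create the directory layout (it must also exist on each node before copying)
mkdir -p /home/yx/kubernetes/{bin,cfg,ssl}

# Copy both binaries to both nodes
scp /home/yx/src/kubernetes/server/bin/kubelet yx@192.168.18.104:/home/yx/kubernetes/bin/
scp /home/yx/src/kubernetes/server/bin/kube-proxy yx@192.168.18.104:/home/yx/kubernetes/bin/
scp /home/yx/src/kubernetes/server/bin/kubelet yx@192.168.18.105:/home/yx/kubernetes/bin/
scp /home/yx/src/kubernetes/server/bin/kube-proxy yx@192.168.18.105:/home/yx/kubernetes/bin/
```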
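
Because both binaries go to both nodes, the copy can also be written as a loop. This is a minimal sketch that assumes password-less SSH from the master as user yx. `copy_node_binaries` is a hypothetical helper name, and it also creates the directories on each node over SSH:

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the directory layout on each node, then copy the
# kubelet and kube-proxy binaries from the master. Assumes password-less SSH as
# user yx. Override SSH/SCP (e.g. SSH=echo SCP=echo) to print the commands.
SRC_BIN=/home/yx/src/kubernetes/server/bin
NODE_HOME=/home/yx/kubernetes

copy_node_binaries() {
  local node bin
  for node in "$@"; do
    # The target directories must exist before scp writes into them.
    ${SSH:-ssh} "yx@${node}" "mkdir -p ${NODE_HOME}/bin ${NODE_HOME}/cfg ${NODE_HOME}/ssl"
    for bin in kubelet kube-proxy; do
      ${SCP:-scp} "${SRC_BIN}/${bin}" "yx@${node}:${NODE_HOME}/bin/"
    done
  done
}

# Usage on the master:
#   copy_node_binaries 192.168.18.104 192.168.18.105
```

Running it once as `SSH=echo SCP=echo copy_node_binaries 192.168.18.104 192.168.18.105` prints the six commands for review instead of executing them.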

2. Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role

```bash
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```
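
The same binding can also be kept as a manifest, which makes the step repeatable with `kubectl apply`. The sketch below prints the ClusterRoleBinding that the command above is expected to create. The function name `bootstrap_binding_manifest` is only illustrative:

```bash
#!/usr/bin/env bash
# Prints a ClusterRoleBinding manifest equivalent to
# `kubectl create clusterrolebinding kubelet-bootstrap ...` (illustrative helper).
bootstrap_binding_manifest() {
  cat <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kubelet-bootstrap
EOF
}

# Usage on the master:
#   bootstrap_binding_manifest | kubectl apply -f -
```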

3. Generate the two kubeconfig files, bootstrap.kubeconfig and kube-proxy.kubeconfig, with the kubeconfig.sh script shown below.

Run it as `bash kubeconfig.sh <master-ip> <ssl-dir>`. The first argument is the IP address of the master (kube-apiserver). The second is the directory that holds the SSL certificates (ca.pem, kube-proxy.pem and kube-proxy-key.pem). The script writes the two kubeconfig files, plus token.csv, to the current directory. The two kubeconfig files are then copied to both nodes.
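
The kubeconfig.sh script hard-codes BOOTSTRAP_TOKEN and keeps the random-token pipeline only as a comment. To use a fresh token instead, here is a small sketch. The helper names `new_bootstrap_token` and `token_csv_line` are hypothetical. It reuses that same pipeline and formats the token.csv line as `token,user,uid,"group"`:

```bash
#!/usr/bin/env bash
# Random 16-byte token as 32 hex characters (same pipeline as the commented-out
# line in kubeconfig.sh; the newline is stripped as well as the spaces).
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# token.csv entry; user, uid and group are the values used in kubeconfig.sh
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Usage:
#   BOOTSTRAP_TOKEN=$(new_bootstrap_token)
#   token_csv_line "$BOOTSTRAP_TOKEN" > token.csv
```

Whichever token you choose must also appear in the token file that kube-apiserver loads. That file is read when kube-apiserver starts, so kube-apiserver most likely needs a restart after the file changes.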

```bash
#!/bin/bash
# kubeconfig.sh - usage: bash kubeconfig.sh <master-ip> <ssl-dir>
if [ $# -ne 2 ]; then
  echo "Usage: $0 <master-ip> <ssl-dir>" >&2
  exit 1
fi

# Create the TLS bootstrapping token
#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
BOOTSTRAP_TOKEN=71b6d986c47254bb0e63b2a20cfaf560

# token.csv line format: token,user,uid,"group". The token must match the
# token file that kube-apiserver loads (its --token-auth-file).
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

#----------------------

APISERVER=$1
SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://${APISERVER}:6443"

# Set cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority="${SSL_DIR}/ca.pem" \
  --embed-certs=true \
  --server="${KUBE_APISERVER}" \
  --kubeconfig=bootstrap.kubeconfig

# Set client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token="${BOOTSTRAP_TOKEN}" \
  --kubeconfig=bootstrap.kubeconfig

# Set context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority="${SSL_DIR}/ca.pem" \
  --embed-certs=true \
  --server="${KUBE_APISERVER}" \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate="${SSL_DIR}/kube-proxy.pem" \
  --client-key="${SSL_DIR}/kube-proxy-key.pem" \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

4. Copy the generated bootstrap.kubeconfig and kube-proxy.kubeconfig to both nodes

```bash
scp bootstrap.kubeconfig kube-proxy.kubeconfig yx@192.168.18.105:/home/yx/kubernetes/cfg/
scp bootstrap.kubeconfig kube-proxy.kubeconfig yx@192.168.18.104:/home/yx/kubernetes/cfg/
```
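
If the token in token.csv differs from the one embedded in bootstrap.kubeconfig, the kubelet's bootstrap request will typically be rejected as unauthorized later on. The following is a quick sketch of a consistency check. `check_bootstrap_token` is a hypothetical name, and the sketch assumes the kubectl-generated layout, in which the token sits on a `token:` line:

```bash
#!/usr/bin/env bash
# Compares the first field of token.csv with the token that
# `kubectl config set-credentials --token=...` embedded in the kubeconfig.
check_bootstrap_token() {
  local csv="$1" kubeconfig="$2" csv_token cfg_token
  # First field of the first non-empty line of token.csv
  csv_token=$(awk -F, 'NF { print $1; exit }' "$csv")
  # kubectl stores the credential as "token: <value>" under users[].user
  cfg_token=$(awk '$1 == "token:" { print $2; exit }' "$kubeconfig")
  if [ -n "$csv_token" ] && [ "$csv_token" = "$cfg_token" ]; then
    echo "OK: tokens match"
  else
    echo "MISMATCH: token.csv='$csv_token' kubeconfig='$cfg_token'" >&2
    return 1
  fi
}

# Usage, in the directory where kubeconfig.sh was run:
#   check_bootstrap_token token.csv bootstrap.kubeconfig
```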

II. Node installation

1. Deploy the kubelet component

Create the kubelet options file:

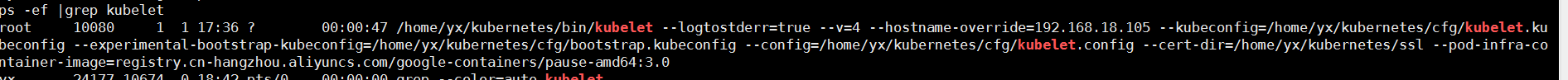

```
$ cat /home/yx/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.18.105 \
--kubeconfig=/home/yx/kubernetes/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/home/yx/kubernetes/cfg/bootstrap.kubeconfig \
--config=/home/yx/kubernetes/cfg/kubelet.config \
--cert-dir=/home/yx/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
```

Parameter description:

- `--hostname-override`: the node name displayed in the cluster (this node's IP)
- `--kubeconfig`: location of the kubelet's kubeconfig file; it does not exist yet and is generated automatically once the node's certificate request is approved
- `--experimental-bootstrap-kubeconfig`: the bootstrap.kubeconfig file generated earlier (newer kubelet versions call this flag `--bootstrap-kubeconfig`)
- `--config`: the kubelet.config file created in the next step
- `--cert-dir`: directory where the issued certificates are stored
- `--pod-infra-container-image`: image of the pod infrastructure ("pause") container, which holds each pod's network namespace
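
At startup, kubelet needs the bootstrap kubeconfig and kubelet.config, whereas the `--kubeconfig` file is only created after approval. The sketch below is a hedged pre-flight check; `check_kubelet_files` is a hypothetical name. It extracts those two paths from the options file and confirms they exist. It assumes the layout shown above, where each flag is a separate space-separated `--flag=value` token:

```bash
#!/usr/bin/env bash
# Pre-flight check before starting kubelet: the files named by these flags must
# already exist. --kubeconfig is skipped because kubelet writes it after approval.
check_kubelet_files() {
  local opts_file="$1" rc=0 flag path
  for flag in --experimental-bootstrap-kubeconfig --config; do
    # Split the options file on spaces, keep the value after "flag=",
    # and drop any quote or line-continuation backslash.
    path=$(tr ' ' '\n' < "$opts_file" | sed -n "s|^${flag}=||p" | tr -d '"\\' | head -n 1)
    if [ -z "$path" ]; then
      echo "not set: $flag" >&2; rc=1
    elif [ ! -f "$path" ]; then
      echo "missing: $flag=$path" >&2; rc=1
    fi
  done
  return "$rc"
}

# Usage on a node:
#   check_kubelet_files /home/yx/kubernetes/cfg/kubelet && systemctl restart kubelet
```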

Create kubelet.config:

```
$ cat /home/yx/kubernetes/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.18.105
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local.
failSwapOn: false
```
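
The `cgroupDriver` value must match the cgroup driver that Docker uses, otherwise kubelet fails to start. The sketch below compares the two. It assumes Docker is the container runtime, as the unit file's docker.service dependency suggests, and reads Docker's setting with `docker info --format '{{.CgroupDriver}}'`. `check_cgroup_driver` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Compares cgroupDriver in kubelet.config with the driver Docker reports.
check_cgroup_driver() {
  local config="$1" kubelet_driver docker_driver
  kubelet_driver=$(awk '$1 == "cgroupDriver:" { print $2 }' "$config")
  docker_driver=$(docker info --format '{{.CgroupDriver}}')
  if [ "$kubelet_driver" = "$docker_driver" ]; then
    echo "cgroup driver: $kubelet_driver"
  else
    echo "kubelet uses '$kubelet_driver' but docker uses '$docker_driver'" >&2
    return 1
  fi
}

# Usage on a node:
#   check_cgroup_driver /home/yx/kubernetes/cfg/kubelet.config
```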

systemd unit file for kubelet:

```
$ cat /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/home/yx/kubernetes/cfg/kubelet
ExecStart=/home/yx/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
```

Start kubelet:

```bash
systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
```

Check that kubelet has started, for example with `systemctl status kubelet` (logs: `journalctl -u kubelet`).
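
kubelet can exit a few seconds after `systemctl restart` returns, so a single status check made immediately may be misleading. The following small polling sketch is built on `systemctl is-active --quiet`. `wait_active` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Polls systemd once per second until the unit is active, up to a timeout.
wait_active() {
  local unit="$1" timeout="${2:-30}" i=0
  while [ "$i" -lt "$timeout" ]; do
    if systemctl is-active --quiet "$unit"; then
      echo "$unit is active"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "$unit not active after ${timeout}s; check: journalctl -u $unit" >&2
  return 1
}

# Usage on a node:
#   wait_active kubelet 30
```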

2. Deploy the kube-proxy component

Create the kube-proxy options file:

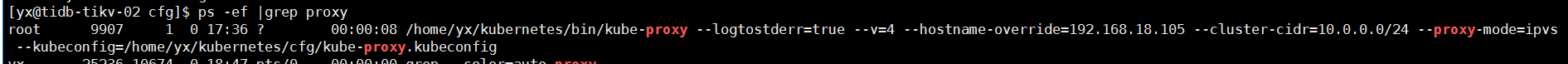

```
$ cat /home/yx/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.18.105 \
--cluster-cidr=10.0.0.0/24 \
--proxy-mode=ipvs \
--kubeconfig=/home/yx/kubernetes/cfg/kube-proxy.kubeconfig"
```
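
With `--proxy-mode=ipvs`, kube-proxy needs the IPVS kernel modules. If they are not available, it typically falls back to iptables mode. The sketch below lists any required modules that are not yet loaded, reading /proc/modules by default. The module list is an assumption: kernels 4.19 and later provide `nf_conntrack` instead of `nf_conntrack_ipv4`. `missing_ipvs_modules` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Modules usually needed for IPVS mode (adjust nf_conntrack_ipv4 on newer kernels).
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Prints each module from IPVS_MODULES that is not listed as loaded.
missing_ipvs_modules() {
  local modules_file="${1:-/proc/modules}" mod
  # Unquoted on purpose: split the list into words.
  for mod in $IPVS_MODULES; do
    # The first column of /proc/modules is the module name.
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file" || echo "$mod"
  done
}

# Usage on a node:
#   for m in $(missing_ipvs_modules); do sudo modprobe -- "$m"; done
```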

systemd unit file for kube-proxy:

```
[yx@tidb-tikv-02 cfg]$ cat /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/home/yx/kubernetes/cfg/kube-proxy
ExecStart=/home/yx/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Start kube-proxy:

```bash
systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
```

Verify that kube-proxy is running, for example with `systemctl status kube-proxy`.

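Repeat the steps above on the other node. Replace this node's IP address (192.168.18.105) with that node's own IP in `--hostname-override` (in both the kubelet and kube-proxy options) and in the `address` field of kubelet.config.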
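
Only the node IP differs between the two nodes, so the second node's files can be derived from the first node's. This sketch assumes the source IP appears in the three files only where the node's own address belongs. Because it does plain substring replacement, it would also rewrite any longer IP that happens to contain the source IP. `render_node_configs` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Copies kubelet, kubelet.config and kube-proxy from src to dest,
# replacing every occurrence of from_ip with to_ip.
render_node_configs() {
  local from_ip="$1" to_ip="$2" src="$3" dest="$4" pattern f
  # Bracket each dot so sed matches the IP literally (192[.]168[.]18[.]105).
  pattern=$(printf '%s\n' "$from_ip" | sed 's/\./[.]/g')
  mkdir -p "$dest"
  for f in kubelet kubelet.config kube-proxy; do
    sed "s/${pattern}/${to_ip}/g" "$src/$f" > "$dest/$f"
  done
}

# Usage, then copy the result to 192.168.18.104:/home/yx/kubernetes/cfg/:
#   render_node_configs 192.168.18.105 192.168.18.104 /home/yx/kubernetes/cfg /tmp/cfg-104
```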

3. On the master, approve the nodes' requests to join the cluster

List the certificate signing requests (CSRs):

```
[yx@tidb-tidb-03 cfg]$ kubectl get csr
NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-jn-F4xSn1LAwJhom9l7hlW0XuhDQzo-RQrnkz1j4q6Y   16m     kubelet-bootstrap   Pending
node-csr-kB2CFmTqkCA2Ix5qYGSXoAP3-ctes-cHcjs7D84Wb38   5h55m   kubelet-bootstrap   Approved,Issued
node-csr-wWa0cKQ6Ap9Bcqap3m9d9ZBqBclwkLB84W8bpB3g_m0   22s     kubelet-bootstrap   Pending
```

Approve a pending request:

```
$ kubectl certificate approve node-csr-wWa0cKQ6Ap9Bcqap3m9d9ZBqBclwkLB84W8bpB3g_m0
certificatesigningrequest.certificates.k8s.io/node-csr-wWa0cKQ6Ap9Bcqap3m9d9ZBqBclwkLB84W8bpB3g_m0 approved
```

Once approved, the request's CONDITION changes from Pending to Approved,Issued.
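
When several requests are pending, as in the listing above, their names can be collected from the CONDITION column. The sketch below does this; `pending_csrs` is a hypothetical name. Approve only requests you expect, because approval issues the requester a node client certificate:

```bash
#!/usr/bin/env bash
# Reads `kubectl get csr` output on stdin and prints the names of requests whose
# CONDITION (last column) is Pending. The header line is skipped.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master (xargs -r is a GNU extension; review the list first):
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```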

4. View the cluster status (on the master)

```
[yx@tidb-tidb-03 cfg]$ kubectl get node
NAME             STATUS   ROLES    AGE   VERSION
192.168.18.104   Ready    <none>   41s   v1.12.1
192.168.18.105   Ready    <none>   52s   v1.12.1
```
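
For scripted checks, the STATUS column can be tested directly. The sketch below prints any node that does not report exactly `Ready`. `not_ready_nodes` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Reads `kubectl get node` output on stdin, prints nodes whose STATUS is not
# exactly "Ready", and returns non-zero if there are any.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Usage on the master:
#   kubectl get node | not_ready_nodes && echo "all nodes Ready"
```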

```
[yx@tidb-tidb-03 cfg]$ kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health": "true"}
etcd-2               Healthy   {"health": "true"}
etcd-0               Healthy   {"health": "true"}
```

This completes the binary installation of Kubernetes. The next part moves on to hands-on operations with the cluster.