catalogue

① The traditional synchronized lock keyword.

① List (ArrayList) is not safe

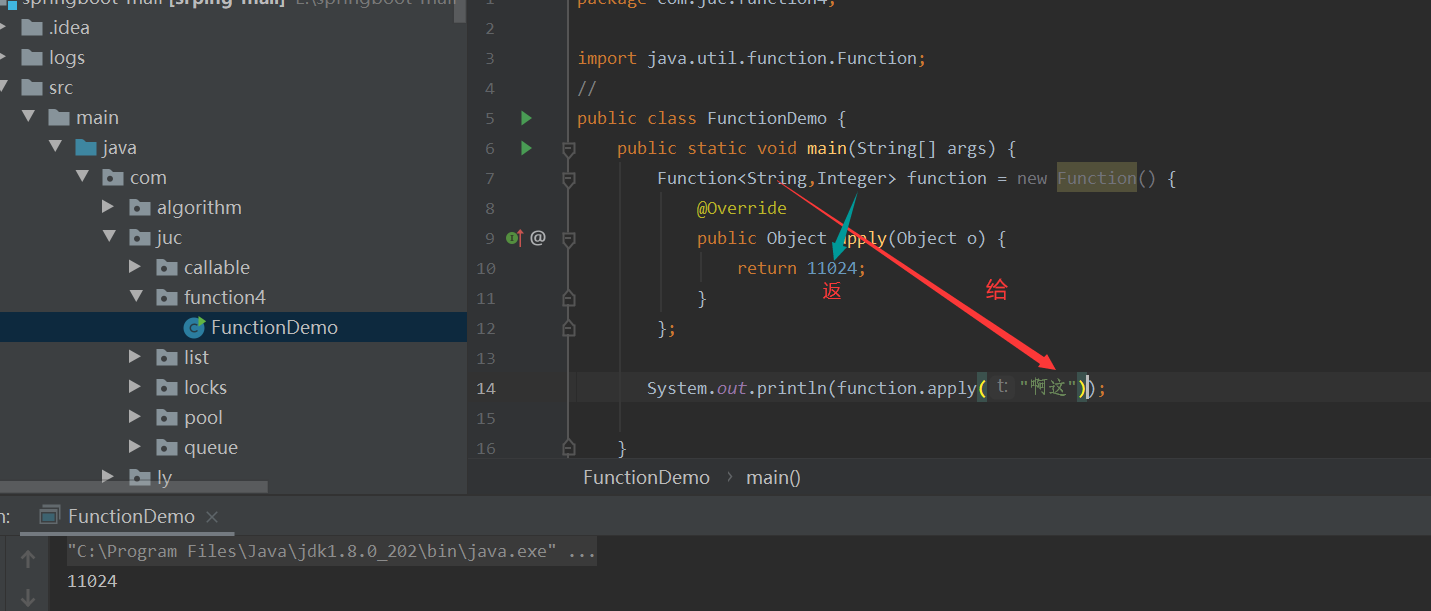

① Function interface: function type (meaning to return y to x)

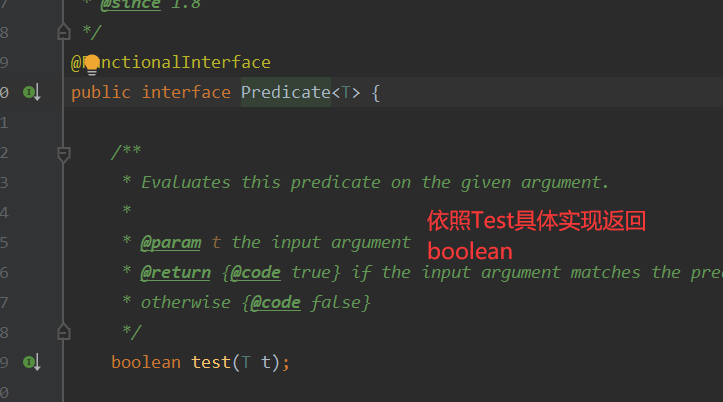

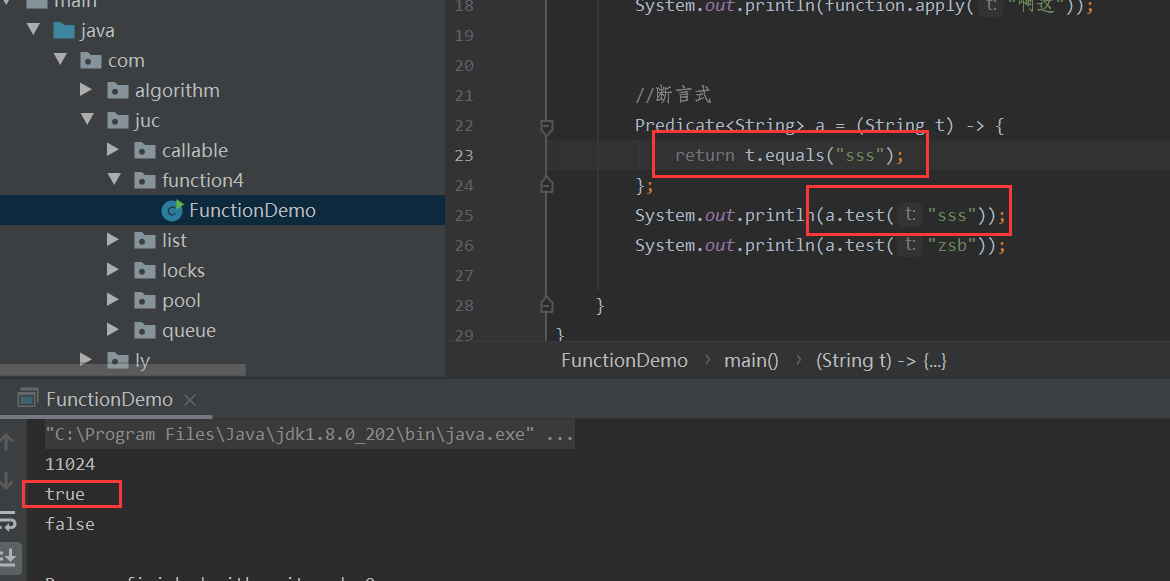

② . predicate assertive interface (judge whether it is correct)

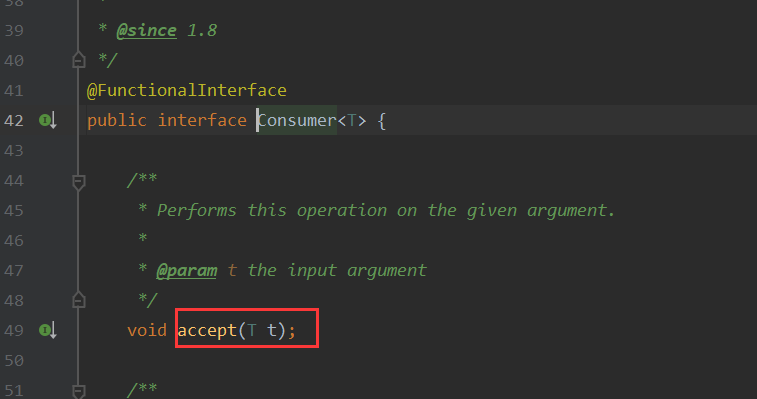

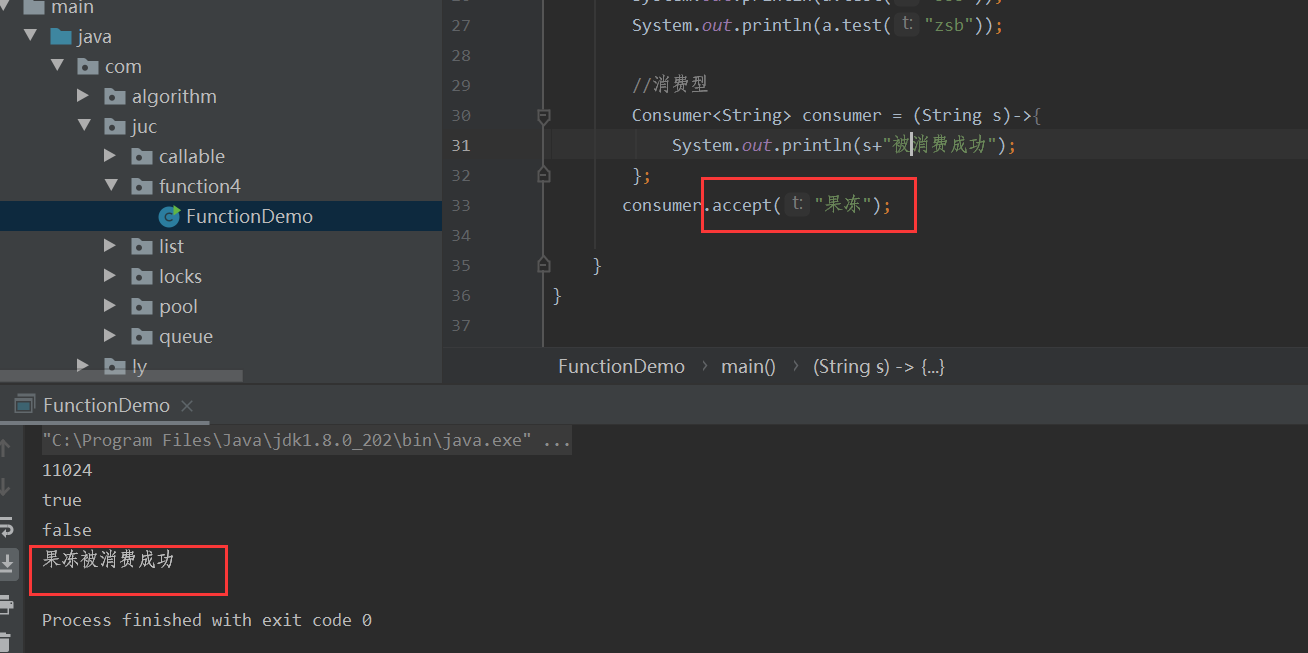

③ Consumer consumption function (input only, no return)

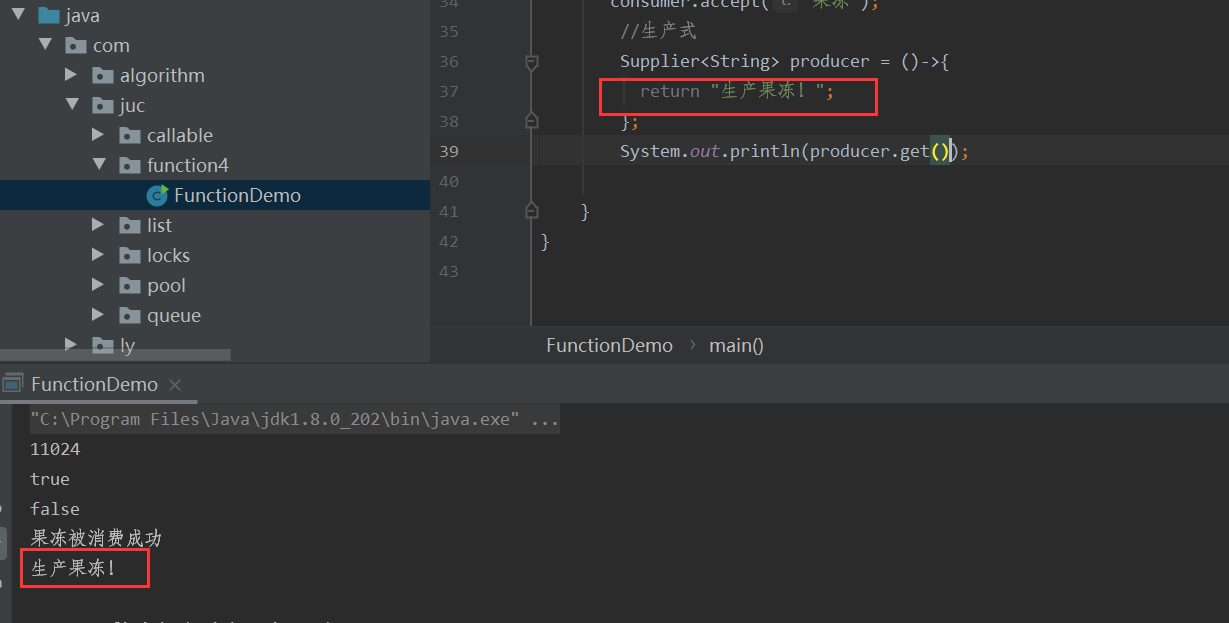

④ supplier production function interface (not given, only returned)

13, Flow calculation (using chain programming)

18, Atomic reference (Solving ABA problem of CAS)

20, AQS (cas based lock synchronization framework)

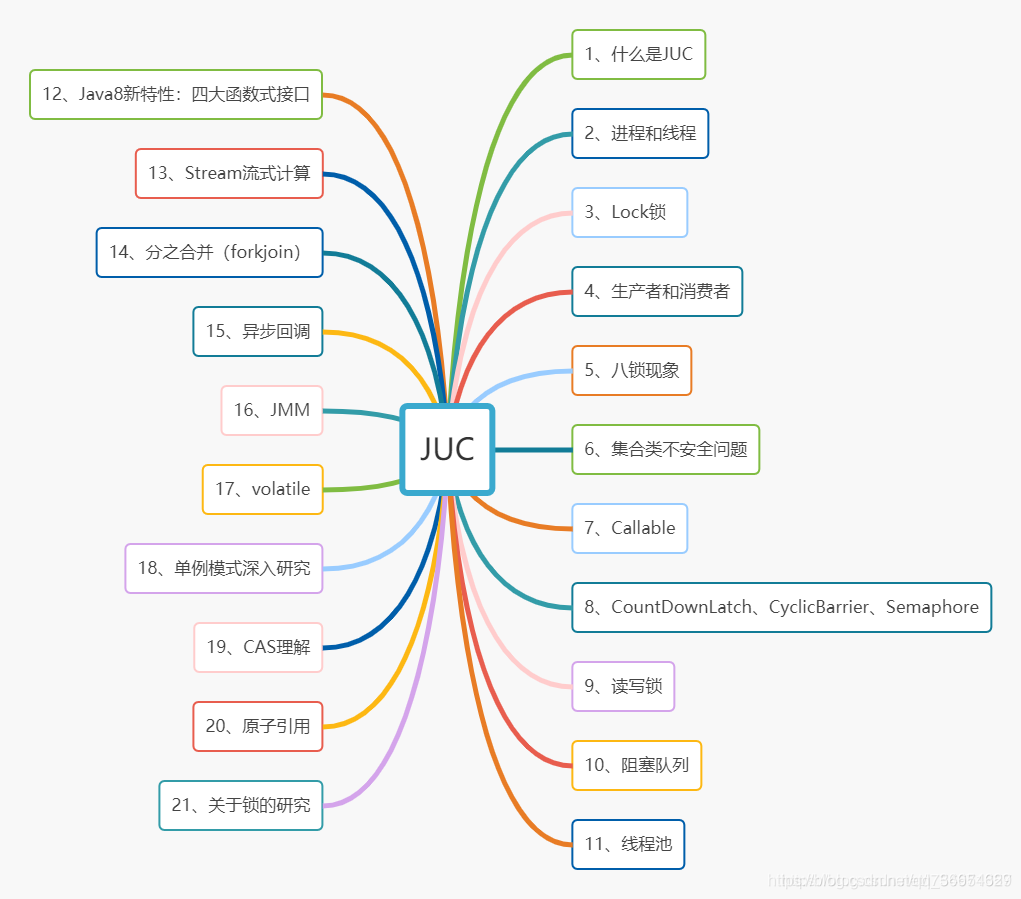

1, What is JUC?

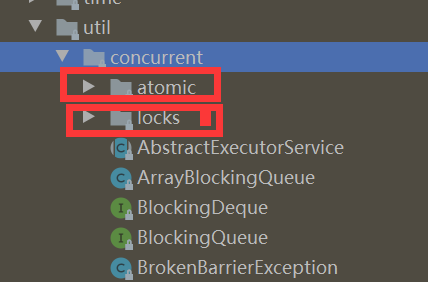

JUC is short for java.util.concurrent, the JDK's API for concurrent programming. Three packages belong to it:

java.util.concurrent (thread pools, concurrent collections, and many auxiliary classes), java.util.concurrent.atomic, and java.util.concurrent.locks.

Background:

High concurrency is the situation in which a large number of requests hit an interface at the same time. Each request triggers work such as database operations, resource requests, and hardware use, so the interface has to be optimized to cope.

Multithreading is one means of dealing with high concurrency.

Multithreading is a form of asynchronous processing that lets a program make fuller use of the machine's resources.

2, Processes and threads?

- Generally speaking, a running program is a process, such as qq.exe; you can see many processes in the task manager. A thread is a unit of work inside a process, such as the task of sending messages in QQ. In early operating systems, the process was the basic unit of both resource allocation and task execution.

- A process consists of one or more threads. The process is the unit of resource allocation, and its threads share its resources; the thread is the smallest unit of scheduling and execution. Because a thread owns almost no resources of its own, creating and destroying one is cheap.

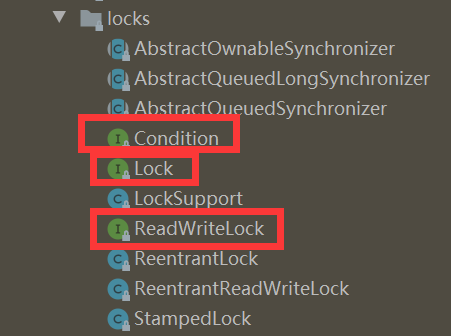

3, Locks package

① The traditional synchronized lock keyword.

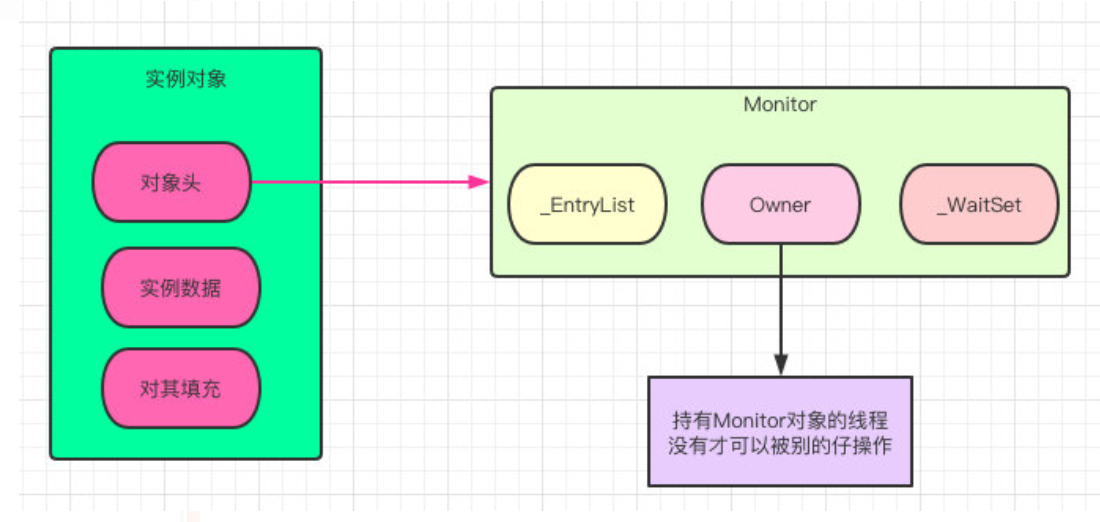

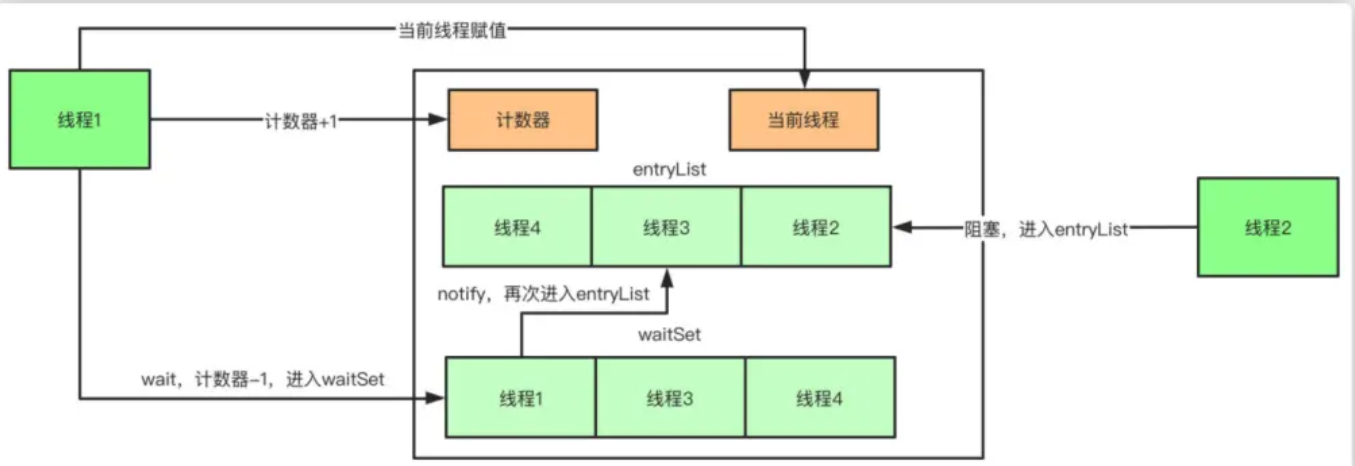

synchronized is a Java keyword. It uses the built-in lock (monitor) that Java provides for every object: each object can be associated with an ObjectMonitor. This built-in lock, which is invisible to the user, is also called a monitor lock.

synchronized is reentrant, and because locking and unlocking happen automatically there is no need to worry about forgetting to release the lock: 1. When the thread holding the lock finishes the synchronized code, the lock is released. 2. If the thread leaves the synchronized code with an exception, the JVM also releases the lock.

The underlying mechanism of synchronized is also relatively simple.

First, we need to know how an object is laid out in memory. It consists of an object header, the instance data, and padding (HotSpot requires the size of an object to be a multiple of 8 bytes; the padding is not always present and exists only for alignment). An empty object still occupies its header: 8 bytes on a 32-bit JVM, and typically 16 bytes on a 64-bit HotSpot JVM with compressed class pointers (a 12-byte header plus 4 bytes of padding).

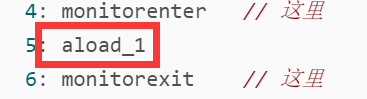

Sync code block:

The object header points to a monitor object, which HotSpot implements in C++. A thread that acquires the monitor becomes its owner: executing monitorenter increments the monitor's entry count by one, and each monitorexit decrements it by one. Only when the count returns to 0 can another thread acquire the monitor.

Synchronization method:

A synchronized method carries a flag called ACC_SYNCHRONIZED.

When the JVM sees this flag, it implicitly performs the monitor enter and exit around the method call, which amounts to competing for ownership of the monitor object.

synchronized can be used in three ways:

① On a code block (a synchronized block). The lock object can be specified.

② On a static method. The lock object is the Class object of the class.

③ On an instance method. The lock object is the instance on which the method is called.

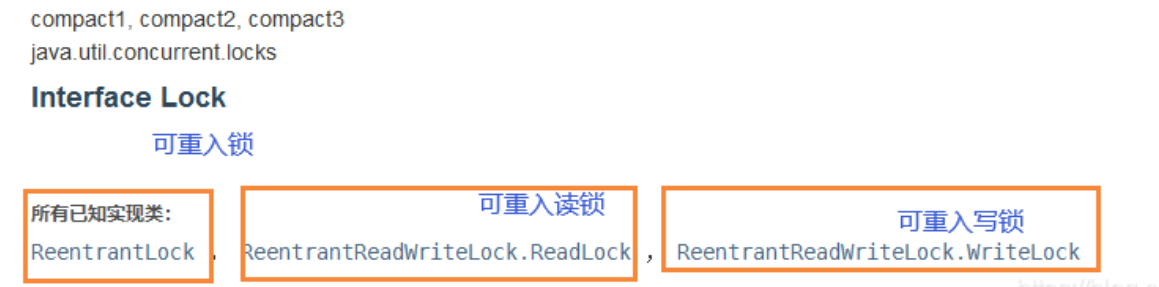
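The three forms listed above can be put side by side in a minimal sketch. The class name SyncForms, its fields, and the run helper are hypothetical names chosen for illustration; only the synchronized keyword and the Thread API come from the JDK.

```java
public class SyncForms {
    private static int staticCount = 0;
    private int instanceCount = 0;
    private int guardedCount = 0;
    private final Object guard = new Object();

    // (1) Synchronized block: the lock object (guard) is chosen explicitly.
    public void incrementGuarded() {
        synchronized (guard) {
            guardedCount++;
        }
    }

    // (2) Static synchronized method: the lock is SyncForms.class.
    public static synchronized void incrementStatic() {
        staticCount++;
    }

    // (3) Instance synchronized method: the lock is `this`.
    public synchronized void incrementInstance() {
        instanceCount++;
    }

    // Runs all three increments from several threads and returns the sum of the counters.
    public static int run(int threads, int perThread) throws InterruptedException {
        SyncForms forms = new SyncForms();
        staticCount = 0;
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    forms.incrementGuarded();
                    incrementStatic();
                    forms.incrementInstance();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join(); // join makes the workers' writes visible to this thread
        }
        return forms.guardedCount + forms.instanceCount + staticCount;
    }

    public static void main(String[] args) throws InterruptedException {
        // 3 counters x 4 threads x 1000 increments, none lost
        System.out.println(run(4, 1000));
    }
}
```

Each counter is protected by a different lock, so the three increments never block one another, yet no update is lost.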

② Lock lock

There are three lock interface implementation classes

Lock Interface: supports different lock rules (reentrant, fair, etc.): 1. Fair lock 2. Non fair lock 3. Reentrant lock

Implement lock interface lock

① Fair lock: the thread is queued to obtain it and cannot jump in the queue.

② Unfair lock: it can jump the queue, and the thread task of 3s and 3H can not wait for 3s and 3h, which improves the efficiency.

③ Reentrant lock: when a lock is obtained, the same lock is obtained again without deadlock.

④ Barrier free lock: stampedlock (enhanced version of read-write lock). Read lock and write lock are completely mutually exclusive. In other words, the read operation needs to wait while data is input. Wait for the write lock to be released before proceeding with the corresponding processing of the read lock.

⑤ Pessimistic lock: always assume the worst case. Every time you get the data, you think others will modify it, so you lock it every time you get the data, so that others will block the data until they get the lock (shared resources are only used by one thread at a time, other threads are blocked, and then transfer the resources to other threads after they are used up). Exclusive locks such as synchronized and ReentrantLock in Java are the implementation of pessimistic lock.

⑥ Optimistic lock: always assume the best situation. Every time I go to get the data, I think others will not modify it, so I won't lock it. However, when updating, I will judge whether others have updated the data during this period. Optimistic locking is suitable for multi read applications, which can improve throughput. In Java, the atomic variable class under the java.util.concurrent.atomic package is the optimistic locking idea realized by using the version number mechanism and CAS algorithm.

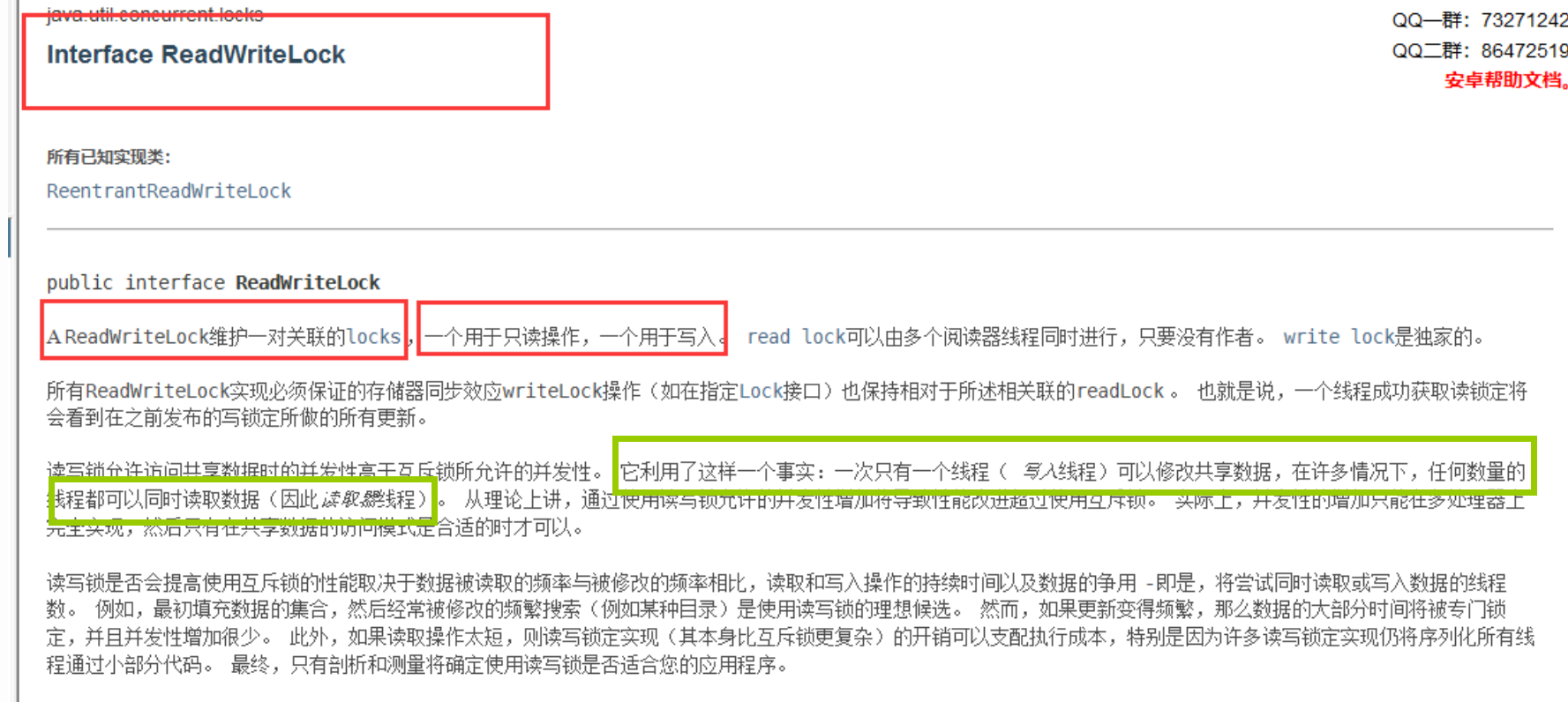

⑦ Read write lock: it is also an exclusive lock. ReadWriteLock manages a group of locks, one is a read-only lock and the other is a write lock. Read locks can be held by multiple threads at the same time when there is no write lock. Write locks are exclusive. Write lock can re-enter read lock.

The implementation of all read-write locks must ensure that a thread that has obtained the read lock must be able to see the updated content of the previously released write lock.
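Two of the properties above, reentrancy and a write-lock holder taking the read lock, can be checked in a small sketch. LockKindsSketch and its method names are hypothetical; the calls on ReentrantLock and ReentrantReadWriteLock are standard JDK methods.

```java
import java.util.concurrent.locks.ReentrantLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class LockKindsSketch {
    // Acquires the same fair lock twice on one thread and reports the hold count.
    public static int nestedHoldCount() {
        ReentrantLock lock = new ReentrantLock(true); // true requests a fair lock
        lock.lock();
        try {
            lock.lock(); // reentrant: the owner can lock again without deadlock
            try {
                return lock.getHoldCount();
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
    }

    // Takes the write lock, then the read lock, then releases the write lock
    // (often called lock downgrading). Returns true if only the read lock remains held.
    public static boolean downgrade() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.writeLock().lock();
        try {
            rw.readLock().lock(); // allowed because this thread owns the write lock
        } finally {
            rw.writeLock().unlock();
        }
        try {
            return !rw.isWriteLocked() && rw.getReadLockCount() == 1;
        } finally {
            rw.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println(nestedHoldCount()); // 2
        System.out.println(downgrade());       // true
    }
}
```

Every lock() is paired with an unlock() in a finally block; unlike synchronized, a Lock is not released automatically when an exception is thrown.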

4, The producer-consumer problem

There are three ways to solve this problem:

1, Use synchronized in the resource class, together with wait and notify.

package com.thread.pcpattren;

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.TimeUnit;

/**

* Producer-consumer model

* There are three implementations: 1. wait and notify of Object, guarded by the synchronized keyword

* 2. Lock with Condition await and signal

* 3. A blocking queue: ArrayBlockingQueue (ABQ) or LinkedBlockingQueue (LBQ)

*/

public class SynchronizedDemo {

private final List<String> list = new ArrayList<>(6);

//Because the consumer and producer simulate the implementation of list operation, they must use the same lock

//consumer

public void consumer() throws InterruptedException {

//judge

synchronized (list) {

while (list.size() == 0) {

list.wait();

}

//operation

try {

System.out.println(Thread.currentThread().getName() + "Start spending!");

int index = (int) (Math.random()*(list.size()));

// String productName = list.get(index);

String productName = list.remove(index);

TimeUnit.SECONDS.sleep(2);

System.out.println(Thread.currentThread().getName() + productName +"---Consumption success!" + "Item remaining:" +

list.size());

} catch (Exception e) {

e.printStackTrace();

} finally {

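//notifyAll rather than notify: producers and consumers wait on the same monitor, so notify could wake a thread of the wrong kind

list.notifyAll();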

}

}

}

//producer

public void producer() throws InterruptedException {

//judge

synchronized (list) {

while (list.size() == 6) {

list.wait();

}

//operation

try {

System.out.println(Thread.currentThread().getName() + "Start production!");

list.add("product:" + (list.size() + 1));

TimeUnit.SECONDS.sleep(2);

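//The item was already added, so list.size() is its number (list.size() + 1 here would concatenate the digits as text)

System.out.println(Thread.currentThread().getName() + "product:" + list.size() +

"---Successful production!" + "Space left:" + (6 - list.size()));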

} catch (Exception e) {

e.printStackTrace();

} finally {

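//notifyAll rather than notify: producers and consumers wait on the same monitor, so notify could wake a thread of the wrong kind

list.notifyAll();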

}

}

}

}

/**

*The production line starts production!

Production line product:1 --- production succeeded! Space left: 5

The production line starts production!

Product line: 2 --- production succeeded! Space left: 4

The production line starts production!

Product line: 3 --- production succeeded! Space left: 3

The production line starts production!

Product line: 4 --- production succeeded! Space left: 2

The production line starts production!

Product line: 5 --- production succeeded! Space left: 1

The production line starts production!

Product line: 6 --- production succeeded! Space left: 0

Consumers start spending!

Consumer product:4 --- consumption succeeded! Goods left: 5

Consumers start spending!

Consumer product:3 --- consumption succeeded! Goods left: 4

Consumers start spending!

Consumer product:1 --- consumption succeeded! Goods left: 3

Consumers start spending!

Consumer product:2 --- consumption succeeded! Goods left: 2

Consumers start spending!

Consumer product:6 --- consumption succeeded! Goods left: 1

Consumers start spending!

Consumer product:5 --- consumption succeeded! Item left: 0

**/

2, Lock with Condition

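import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

public class LockDemo {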

private final List<String> list = new ArrayList<>(6);

private Lock lockList = new ReentrantLock();

private Condition condition = lockList.newCondition();

//Because the consumer and producer simulate the implementation of list operation, they must use the same lock

//consumer

public void consumer() throws InterruptedException {

lockList.lock();

//judge

//operation

try {

//Use while rather than if: after await returns, the loop condition is checked again,

//so the thread continues only when the list really is non-empty (this also guards against spurious wakeups).

while (list.size() == 0) {

condition.await();

}

System.out.println(Thread.currentThread().getName() + "Start spending!");

int index = (int) (Math.random() * (list.size()));

// String productName = list.get(index);

String productName = list.remove(index);

TimeUnit.SECONDS.sleep(2);

System.out.println(Thread.currentThread().getName() + productName + "---Consumption success!" + "Item remaining:" +

list.size());

condition.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lockList.unlock();

}

}

//producer

public void producer() throws InterruptedException {

lockList.lock();

//judge

try {

while (list.size() == 6) {

condition.await();

}

//operation

System.out.println(Thread.currentThread().getName() + "Start production!");

list.add("product:" + (list.size() + 1));

TimeUnit.SECONDS.sleep(2);

System.out.println(Thread.currentThread().getName() + "product:" + list.size() +

"---Successful production!" + "Space left:" + (6 - list.size()));

condition.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lockList.unlock();

}

}

}

3, Blocking queue: covered later, in section 10.

5, The 8-lock phenomenon

The point is to understand what is being locked, that is, which object the lock belongs to.

1. Two synchronized methods called on one object share the same lock, this. Whichever thread gets the lock first runs first; the other thread is blocked.

2. Two synchronized methods called on two objects lock two different instances, so they do not affect each other.

3. Two static synchronized methods lock the Class object, no matter how many instances there are, so they share one lock. Whichever thread gets the lock first runs first; the other blocks.

4. A synchronized method and an ordinary method are not mutually exclusive, so each runs as soon as it is called. If the synchronized method sleeps (that is, runs for a long time), the ordinary method does not wait for it.

5. Two ordinary methods likewise run in the normal order.

6. A static synchronized method and an instance synchronized method use different locks (the Class object and this), so they are not mutually exclusive and both run normally.

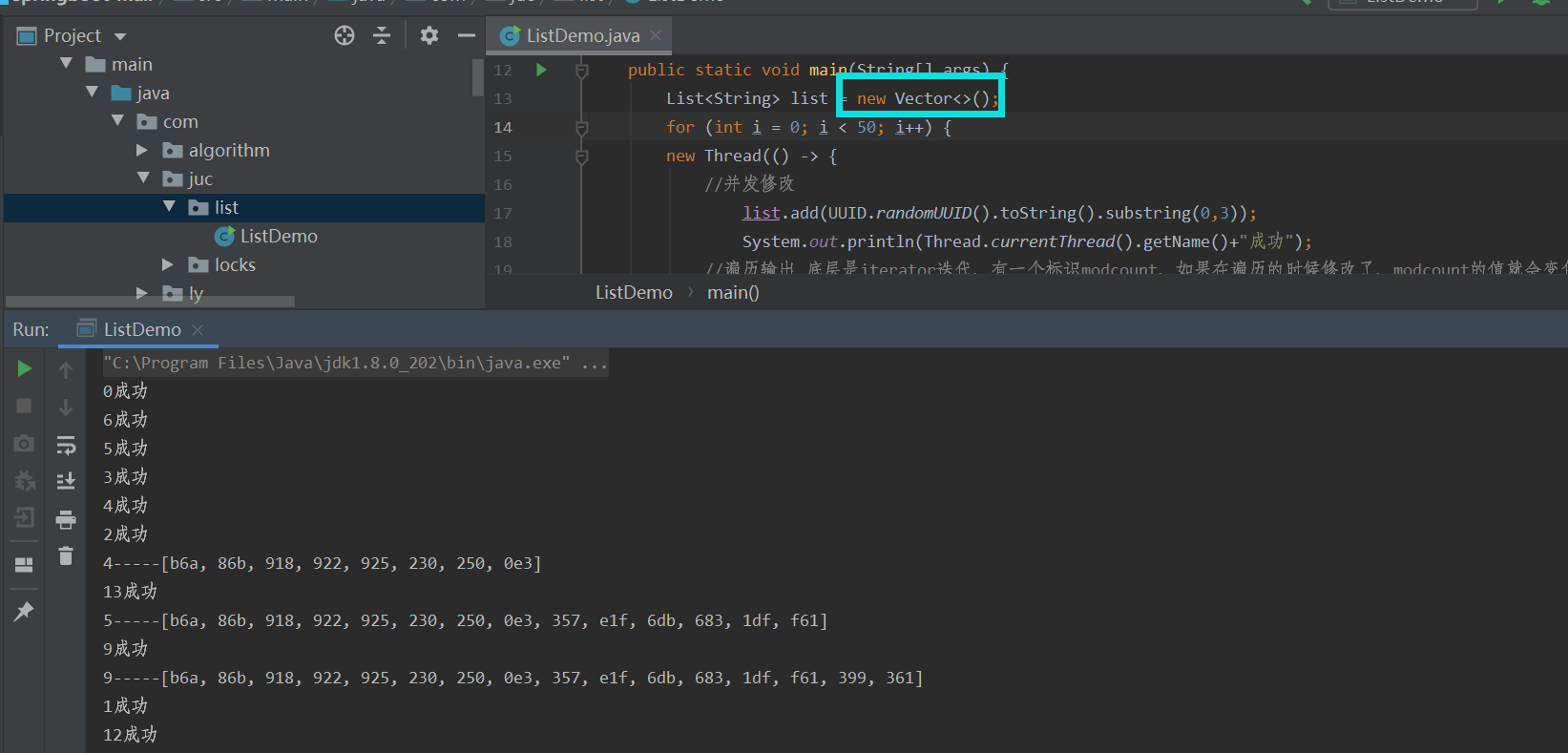

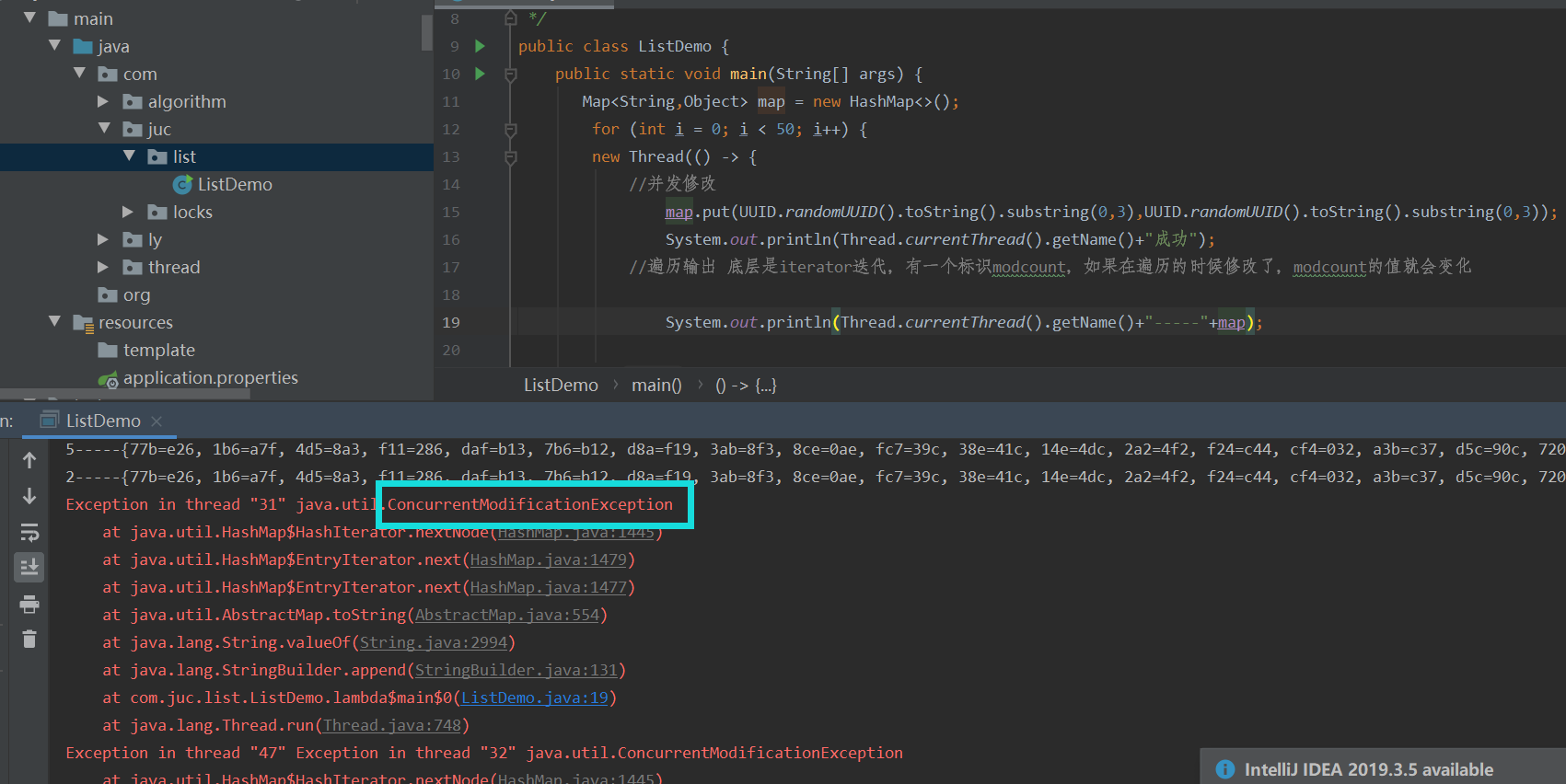

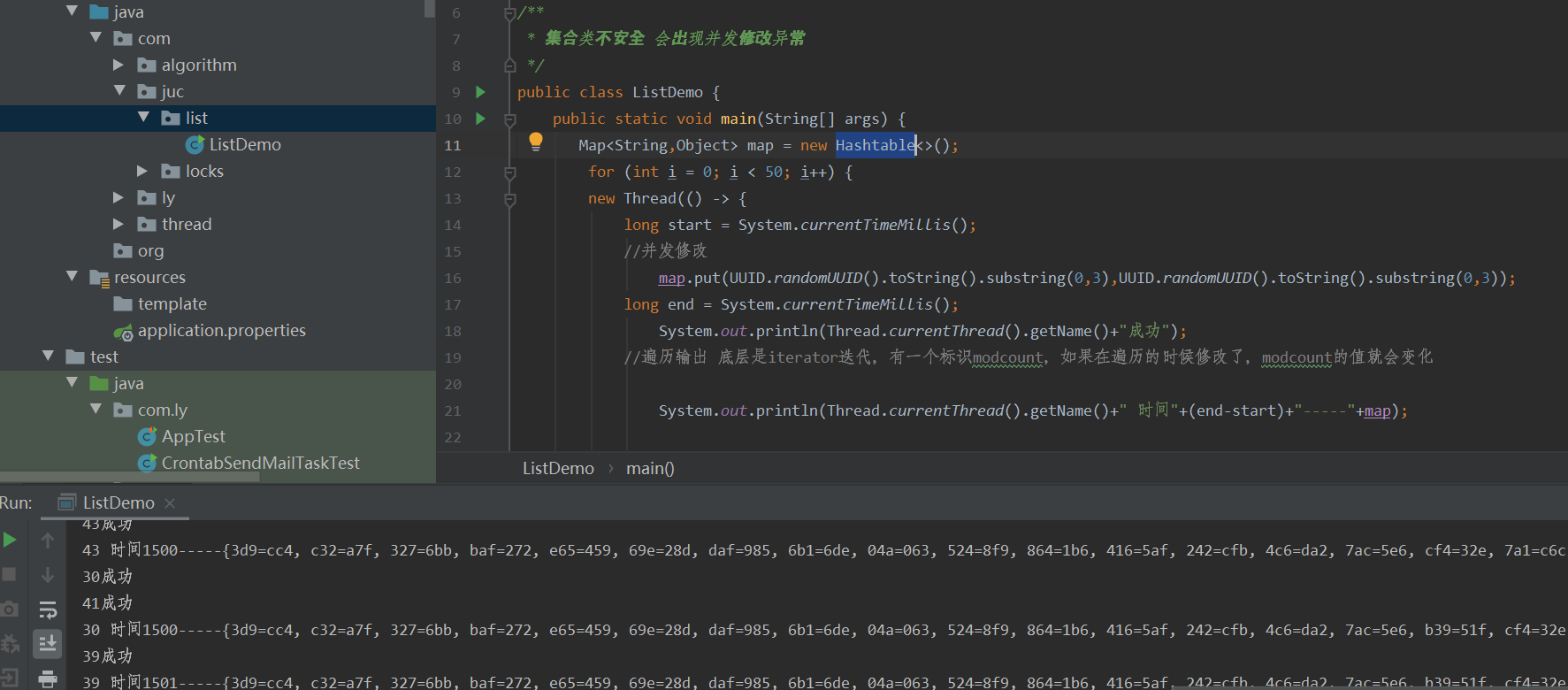
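Which object each kind of synchronized method locks (cases 1, 3, and 6 above) can be observed directly with Thread.holdsLock. This is a small sketch; LockTargets and its method names are hypothetical.

```java
public class LockTargets {
    // Instance synchronized method: reports whether the current thread
    // holds the lock on `this` and on the Class object.
    public synchronized boolean[] instanceMethod() {
        return new boolean[] {
            Thread.holdsLock(this),
            Thread.holdsLock(LockTargets.class)
        };
    }

    // Static synchronized method: same report, for a given instance and the Class object.
    public static synchronized boolean[] staticMethod(LockTargets instance) {
        return new boolean[] {
            Thread.holdsLock(instance),
            Thread.holdsLock(LockTargets.class)
        };
    }

    public static void main(String[] args) {
        LockTargets target = new LockTargets();
        boolean[] a = target.instanceMethod();
        boolean[] b = staticMethod(target);
        System.out.println("instance method: this=" + a[0] + ", class=" + a[1]);
        System.out.println("static method:   this=" + b[0] + ", class=" + b[1]);
    }
}
```

The instance method holds only the lock on this and the static method holds only the lock on the Class object, which is why the two do not exclude each other.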

6, Collection classes are not thread-safe

① List (ArrayList) is not thread-safe

The source code shows that add takes no lock. With many threads, one thread can be in the middle of a write when another modifies the list, which leaves the data inconsistent and can raise ConcurrentModificationException.

The exception is likely when there are many concurrent modifications, but it is not guaranteed: some runs finish without an error, and the number of elements printed varies from run to run. In other words, the behavior under multithreading is unstable.

import java.util.ArrayList;

import java.util.List;

import java.util.UUID;

public class ListDemo {

public static void main(String[] args) {

List<String> list = new ArrayList<>();

for (int i = 0; i < 50; i++) {

new Thread(() -> {

//Concurrent modification

list.add(UUID.randomUUID().toString().substring(0,3));

System.out.println(Thread.currentThread().getName()+"success");

//Printing the list iterates over it. The iterator tracks modCount; if another thread modifies the list during the traversal, modCount changes and ConcurrentModificationException is thrown

//So the failure here comes from reading (iterating) and writing at the same time

System.out.println(Thread.currentThread().getName()+"-----"+list);

}, i+"").start();

}

}

}

Solutions: 1. Use the thread-safe counterpart of ArrayList: Vector

Note: the Java source shows that Vector has existed since JDK 1.0, while ArrayList arrived in JDK 1.2.

If Vector were a perfect replacement, ArrayList would have no reason to exist.

In fact every Vector method is synchronized. That keeps the data consistent, but concurrency drops sharply.

So locking every method is not a perfect solution.

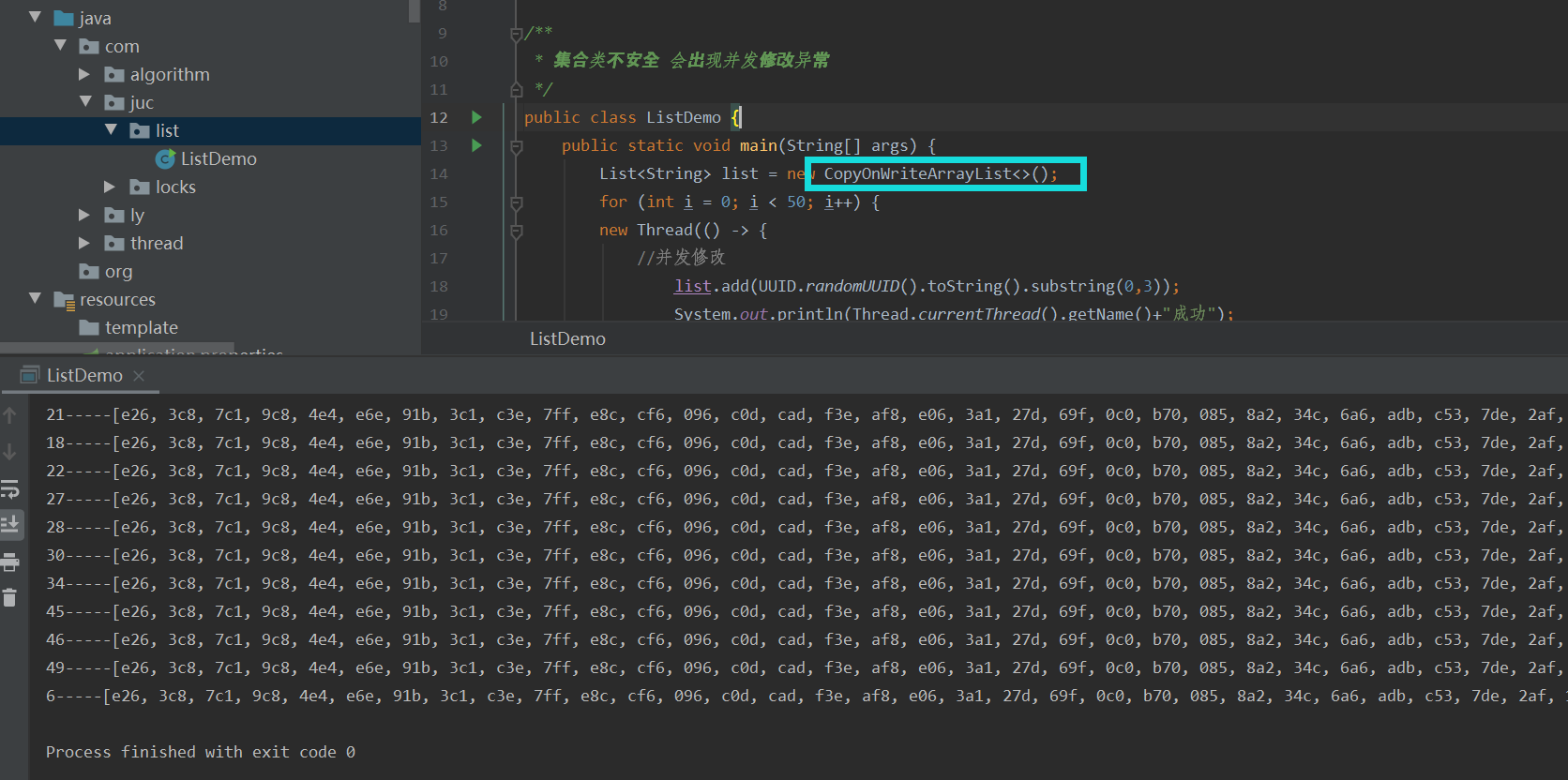

2. Use the copy-on-write list, CopyOnWriteArrayList

A write (add, set, or remove) takes a lock so that only one writer runs at a time, copies the underlying array, modifies the copy, and then swaps the copy in as the new array. Reads take no lock and always see a complete snapshot of the array, so iterating while another thread writes never throws ConcurrentModificationException.

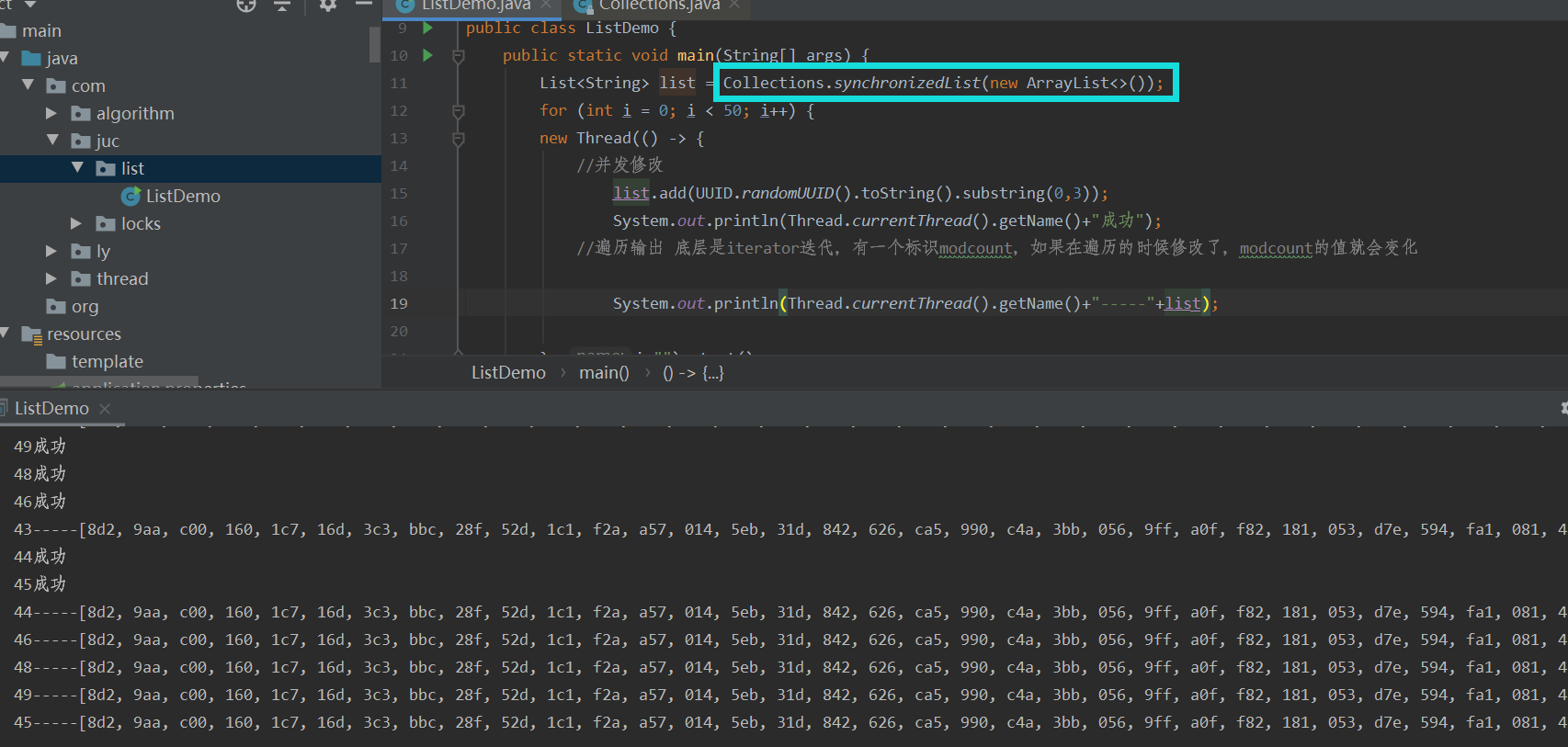

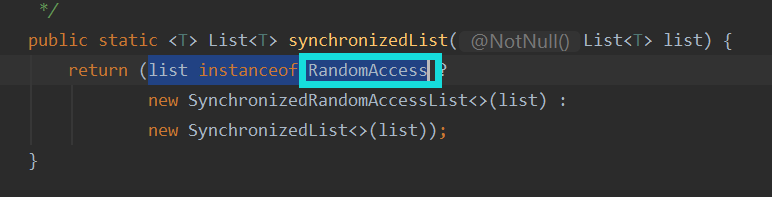

3. Use the Collections utility class to wrap the list in a thread-safe view: Collections.synchronizedList(list);
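The three options can be compared with the same workload. The sketch below is an illustration only: SafeListSketch and fill are hypothetical names, and the thread counts are arbitrary.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.UUID;
import java.util.Vector;
import java.util.concurrent.CopyOnWriteArrayList;

public class SafeListSketch {
    // Starts `threads` writers that each add `perThread` items,
    // waits for all of them, and returns the final size of the list.
    static int fill(List<String> list, int threads, int perThread) throws InterruptedException {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    list.add(UUID.randomUUID().toString().substring(0, 3));
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return list.size();
    }

    public static void main(String[] args) throws InterruptedException {
        // Each thread-safe list ends up with exactly 50 x 20 elements.
        System.out.println(fill(new Vector<>(), 50, 20));
        System.out.println(fill(new CopyOnWriteArrayList<>(), 50, 20));
        System.out.println(fill(Collections.synchronizedList(new ArrayList<>()), 50, 20));
    }
}
```

Passing a plain new ArrayList<>() to fill instead may lose elements or throw, which is the instability described earlier. Note that iterating over a Collections.synchronizedList still requires the caller to synchronize on the list manually.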

Tip: for an ArrayList, an indexed for loop is faster than an iterator; for a LinkedList, an iterator is faster than an indexed for loop. In general, traverse a List that implements the RandomAccess interface with an indexed for loop, and use an iterator otherwise.

② Set (HashSet) is not thread-safe

The situation is basically the same as for List: add takes no lock, so concurrent modification can raise ConcurrentModificationException.

1. Use CopyOnWriteArraySet

2. Use the thread-safe wrapper from the Collections class: Collections.synchronizedSet(set)

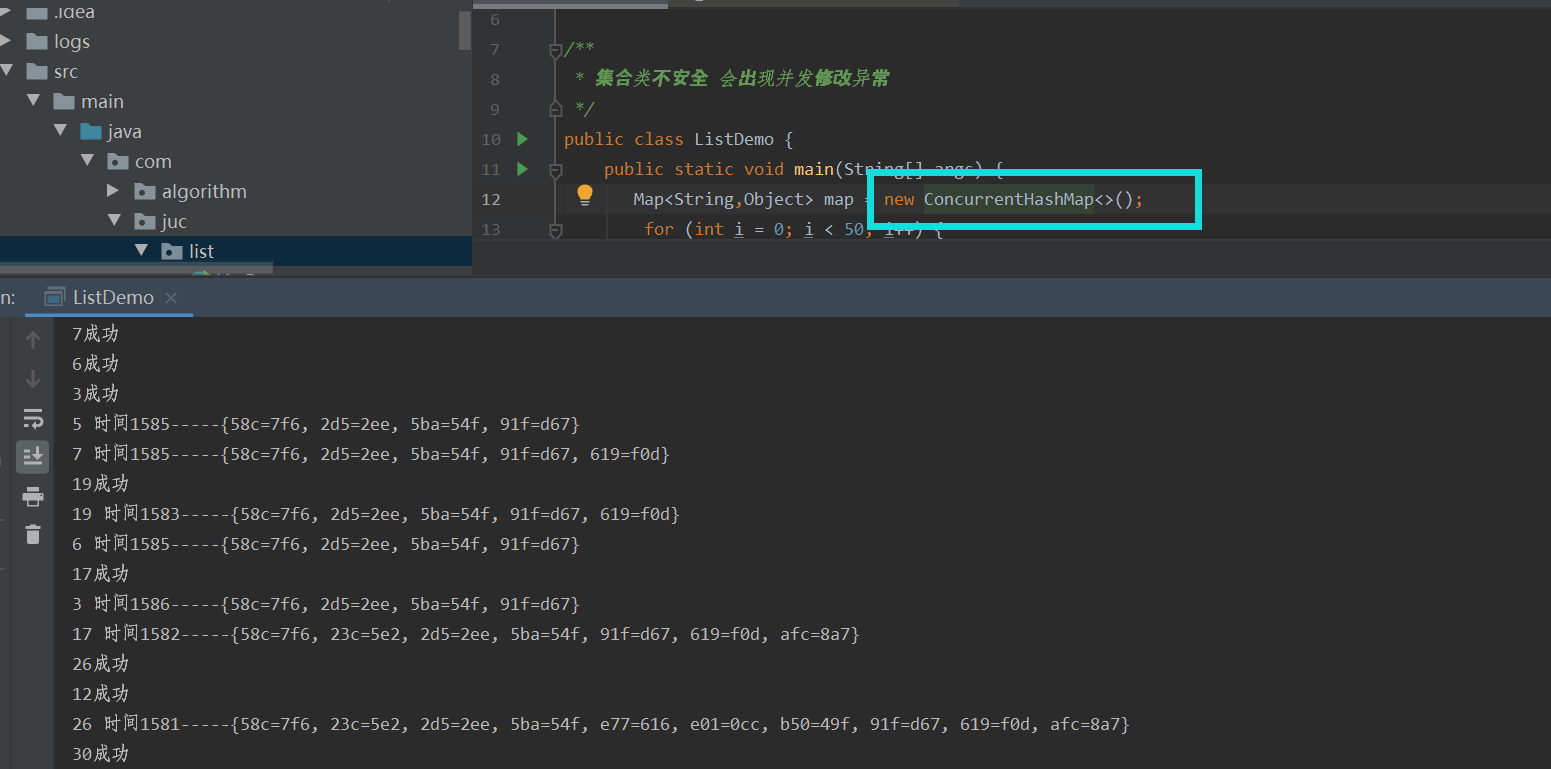

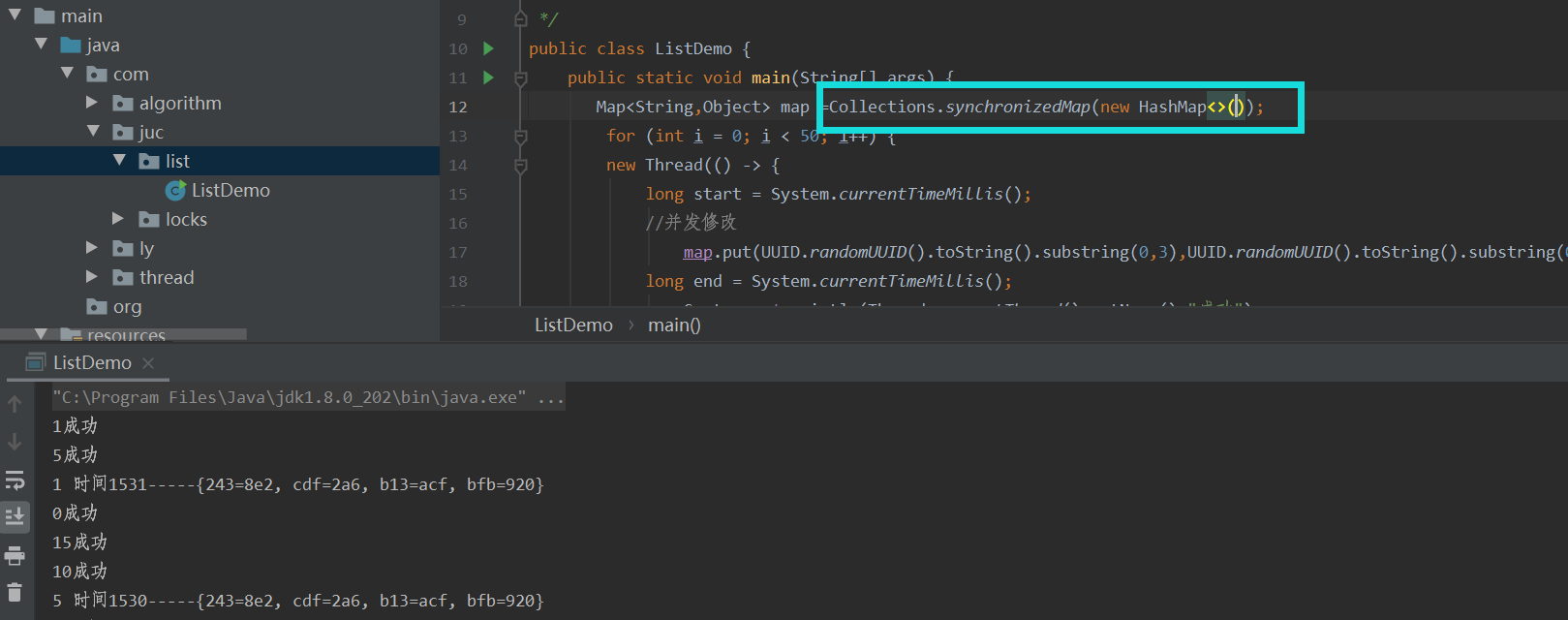

③ Map (HashMap) is not thread-safe

1. Use ConcurrentHashMap

2. Use the thread-safe wrapper from the Collections class: Collections.synchronizedMap(map)

3. Use Hashtable, the older thread-safe counterpart of HashMap

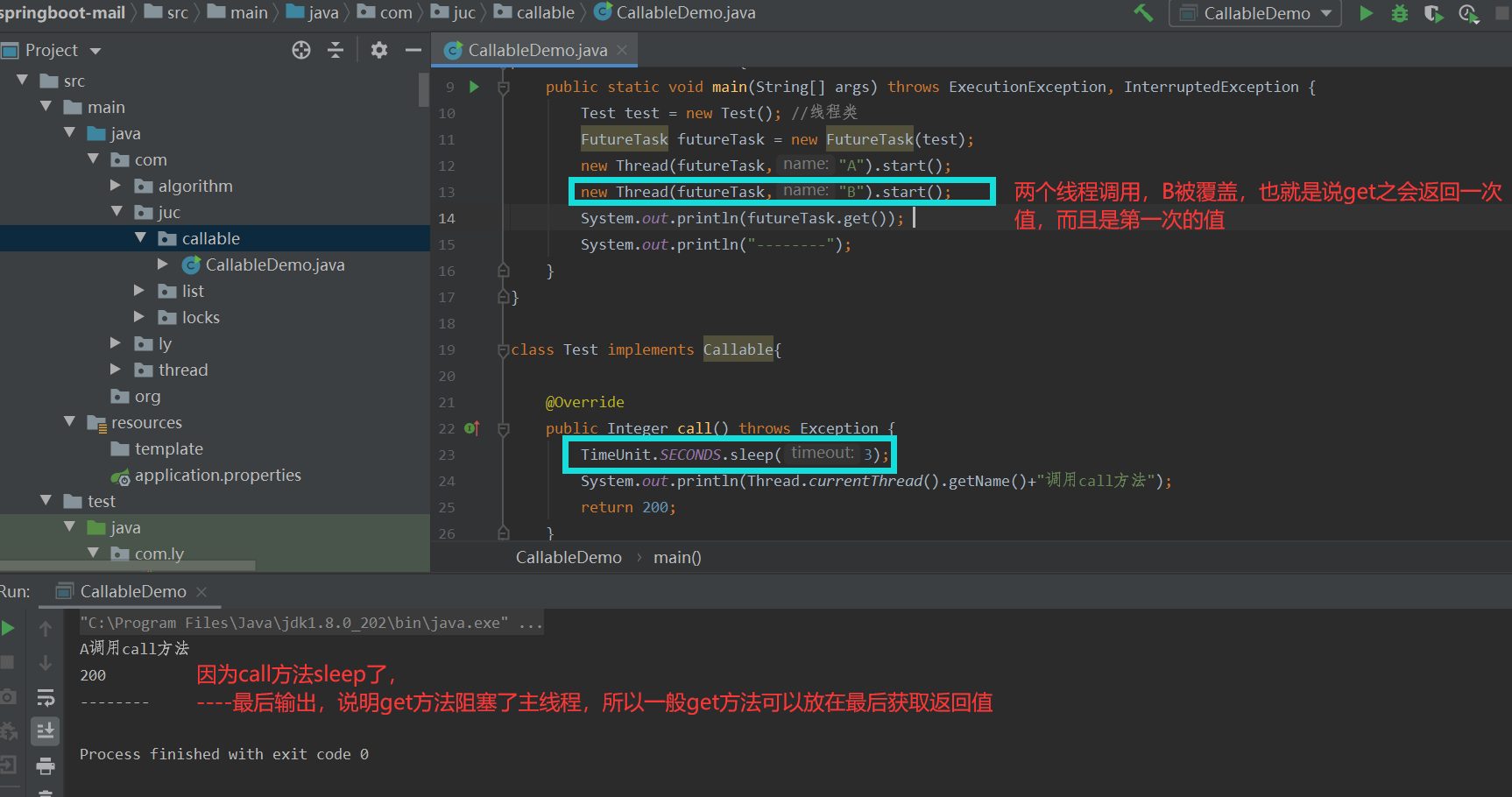
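As a minimal sketch of the first two options, the code below counts hits from several threads with merge, which both maps perform atomically. SafeMapSketch, count, and the key "hits" are hypothetical names.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class SafeMapSketch {
    // Every thread increments the same key `perThread` times; returns the final count.
    static int count(Map<String, Integer> map, int threads, int perThread) throws InterruptedException {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    // merge inserts 1 if the key is absent, otherwise adds 1 to the old value
                    map.merge("hits", 1, Integer::sum);
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return map.get("hits");
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(count(new ConcurrentHashMap<>(), 20, 500));
        System.out.println(count(Collections.synchronizedMap(new HashMap<>()), 20, 500));
    }
}
```

A separate get followed by put would not be safe even on these maps, because another thread can update the key between the two calls; merge does the read and the write as one atomic step.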

7, Callable

What is the difference between Callable and Runnable?

1. Callable declares the call method; Runnable declares the run method.

2. call returns a value; run does not.

3. call can throw checked exceptions; run cannot. Combined with Future, call also allows finer-grained control over a task, such as cancelling it or waiting for its result.

Note: we usually create a thread with new Thread(...), passing a lambda expression that implements the Runnable interface. The Thread constructor accepts only a Runnable, but the FutureTask class implements Runnable and wraps a Callable, so the following approach can be used.

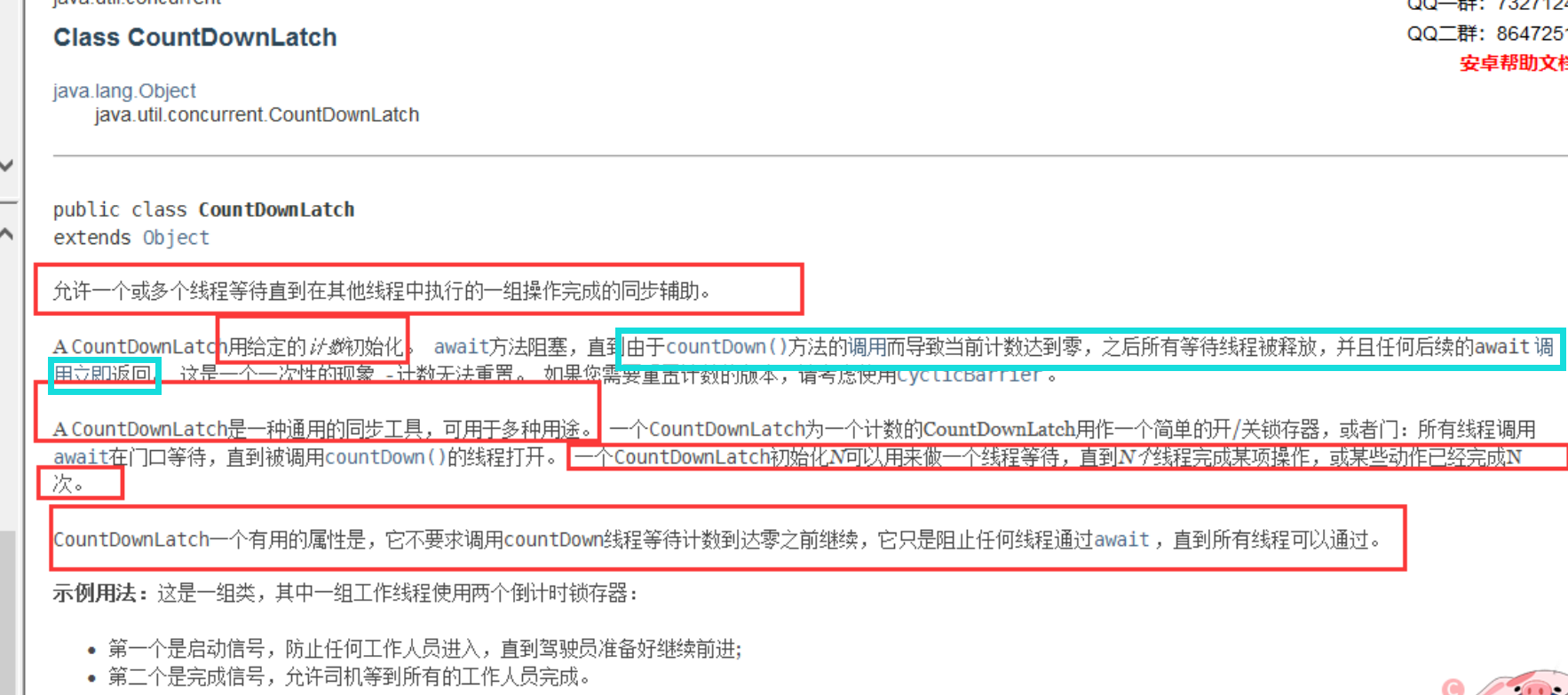

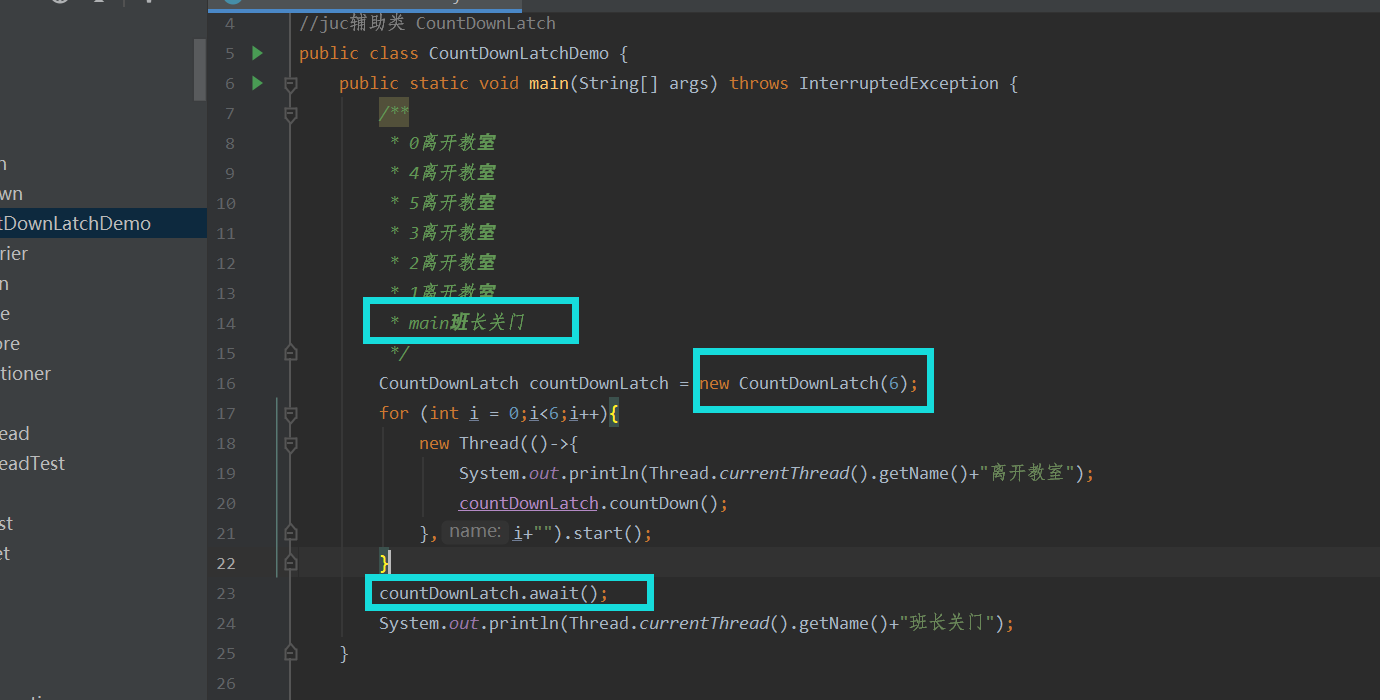
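The FutureTask approach described in the note can be sketched as follows. CallableSketch and runOnThread are hypothetical names; FutureTask, Callable, and Thread are the standard JDK classes.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.FutureTask;

public class CallableSketch {
    // Wraps a Callable in a FutureTask (which is a Runnable), runs it on a
    // new thread, and blocks until the result is available.
    static int runOnThread(Callable<Integer> job) throws Exception {
        FutureTask<Integer> task = new FutureTask<>(job);
        new Thread(task, "worker").start();
        return task.get(); // waits for call() to finish and returns its value
    }

    public static void main(String[] args) throws Exception {
        System.out.println(runOnThread(() -> 1 + 2)); // 3
    }
}
```

If call() throws, get() rethrows the failure wrapped in an ExecutionException, which is how the exception reaches the thread that asked for the result.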

8, Common auxiliary classes

1. CountDownLatch (counter)

This auxiliary class works as a countdown counter. It has two commonly used methods: countDown decrements the count by one, and await blocks the calling thread until the count reaches zero. Example: the class monitor locks the door, but only after every student has left the classroom.
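The classroom example can be sketched like this. LatchSketch and closeDoorAfter are hypothetical names; the calling thread plays the monitor.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

public class LatchSketch {
    // Starts one thread per student; the caller waits until all of them
    // have left, then returns how many students left.
    static int closeDoorAfter(int students) throws InterruptedException {
        CountDownLatch latch = new CountDownLatch(students);
        AtomicInteger left = new AtomicInteger();
        for (int i = 0; i < students; i++) {
            new Thread(() -> {
                left.incrementAndGet(); // the student leaves
                latch.countDown();      // count minus one
            }, "student-" + i).start();
        }
        latch.await(); // blocks until the count reaches zero
        return left.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(closeDoorAfter(6) + " students left, closing the door");
    }
}
```

A CountDownLatch cannot be reset; once the count reaches zero, every later await returns immediately.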

2. CyclicBarrier

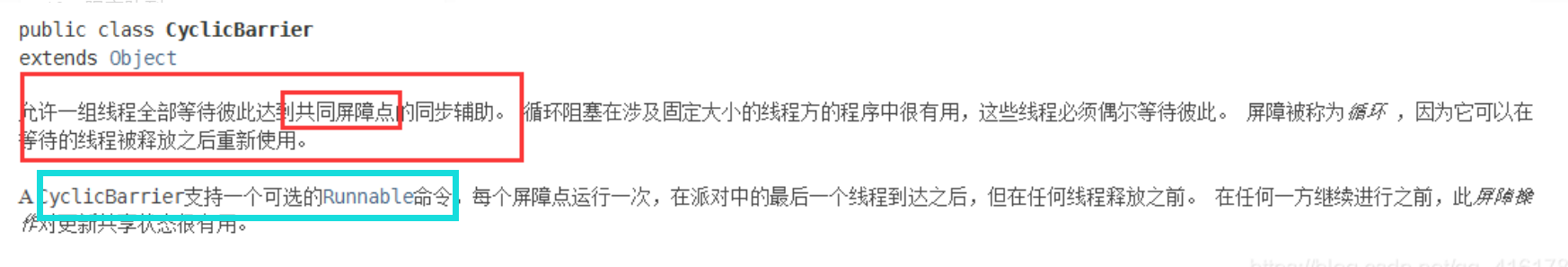

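A cyclic barrier does not block threads that never call await (the main thread in the demo below keeps running). It blocks each thread that calls await until the required number of threads has arrived, then runs the optional Runnable and releases them all. In short, a group of threads waits for one another and then proceeds together.

//Each thread that calls await is blocked until the number of waiting threads reaches the barrier count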

/**

* 1 Enter the conference room!

* main Eating!

* 3 Enter the conference room!

* 2 Enter the conference room!

* 6 Enter the conference room!

* 0 Enter the conference room!

* 4 Enter the conference room!

* 5 Enter the conference room!

* Everybody's here, meeting!

*/

import java.util.concurrent.BrokenBarrierException;

import java.util.concurrent.CyclicBarrier;

public class CyclicBarrierDemo {

public static void main(String[] args) throws BrokenBarrierException, InterruptedException {

CyclicBarrier cyclicBarrier = new CyclicBarrier(7,

new Runnable() {

@Override

public void run() {

System.out.println("Everybody's here, meeting!");

}

});

for(int i = 0; i < 7 ;i++){

new Thread(()->{

try {

System.out.println(Thread.currentThread().getName() + "Enter the conference room!");

cyclicBarrier.await();

} catch (InterruptedException e) {

e.printStackTrace();

} catch (BrokenBarrierException e) {

e.printStackTrace();

}

},i+"").start();

}

System.out.println(Thread.currentThread().getName() + "Eating!");

// cyclicBarrier.await();

}

}

3.Semaphore

A semaphore has two uses: giving threads mutually exclusive access to a limited pool of resources, and limiting the number of threads that run concurrently.

//Article 20

/**

* 0 Get resources!

* 2 Get resources!

* 1 Get resources!

* 0 Free resources!

* 2 Free resources!

* 3 Get resources!

* 1 Free resources!

* 4 Get resources!

* 5 Get resources!

* 3 Free resources!

* 5 Free resources!

* 4 Free resources!

* 8 Get resources!

* 7 Get resources!

* 6 Get resources!

* 8 Free resources!

* 6 Free resources!

* 7 Free resources!

* 9 Get resources!

* 9 Free resources!

* There are only three permits, so at most three threads hold a resource at a time; the others wait until a permit is released.

*

*/

import java.util.concurrent.Semaphore;

import java.util.concurrent.TimeUnit;

public class SemaphoreDemo {

public static void main(String[] args) {

Semaphore semaphore = new Semaphore(3); //How many resources can be accessed concurrently

for(int i = 0;i<10;i++){

new Thread(()->{

try{

semaphore.acquire();//-1

} catch (InterruptedException e) {

e.printStackTrace();

return; //No permit was acquired, so there is nothing to release

}

try {

System.out.println(Thread.currentThread().getName() +"Get resources!");

TimeUnit.SECONDS.sleep(1);

System.out.println(Thread.currentThread().getName() +"Free resources!");

} catch (InterruptedException e) {

e.printStackTrace();

}finally {

semaphore.release(); //+1

}

},i+"").start();

}

}

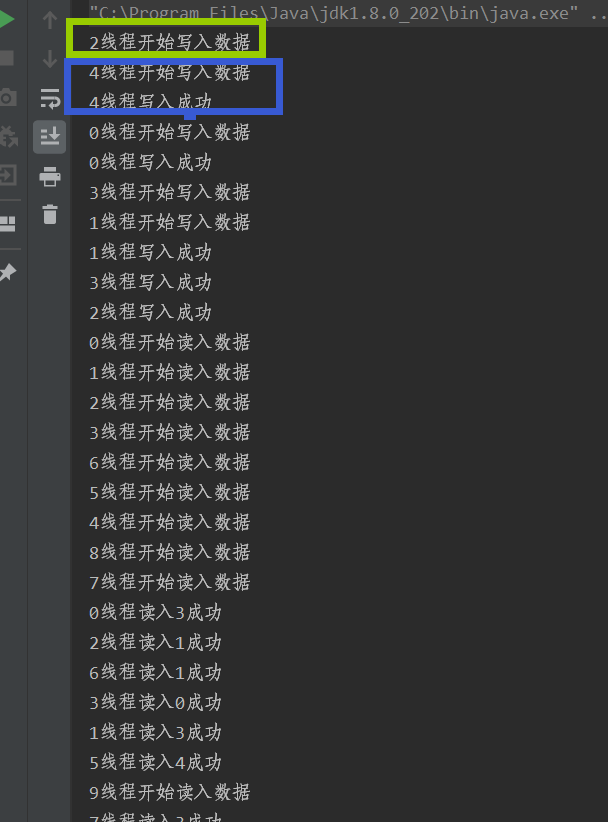

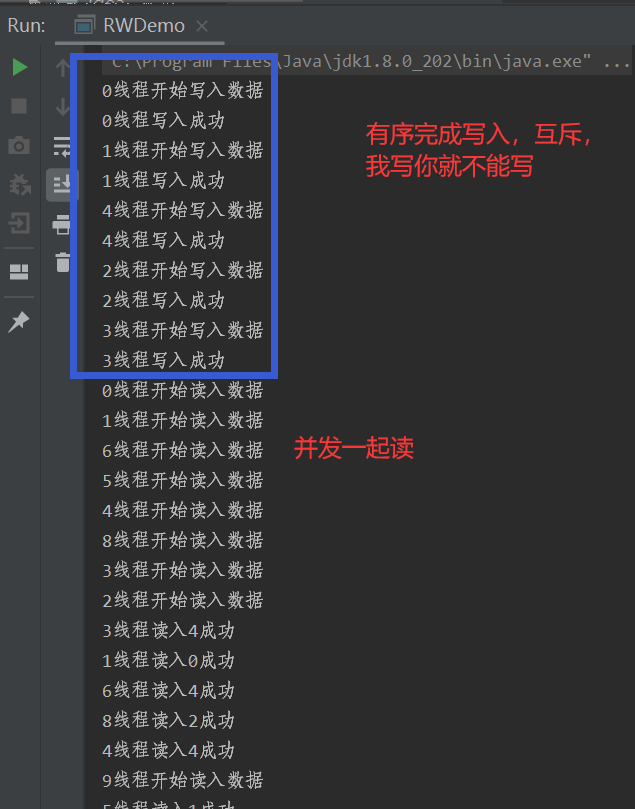

}9, Read write lock

ReentrantReadWriteLock: a reentrant read-write lock

Reads and writes are separated: the write lock is exclusive and the read lock is shared.

package com.juc.locks;

import java.util.ArrayList;

import java.util.List;

import java.util.UUID;

import java.util.concurrent.locks.ReadWriteLock;

import java.util.concurrent.locks.ReentrantReadWriteLock;

public class RWDemo {

public static void main(String[] args) {

RW rw = new RW();

for (int i = 0; i < 5; i++) {

int finalI = i;

new Thread(() -> {

rw.write(finalI);

}, i+"thread ").start();

}

for (int i = 0; i < 10; i++) {

new Thread(() -> {

rw.read();

}, i+"thread ").start();

}

}

}

class RW {

private final List<Integer> list = new ArrayList<>();

private final ReadWriteLock readWriteLock = new ReentrantReadWriteLock();

//Ordinary lock

// private Lock lock=new ReentrantLock();

public void write(int i) {

//Exclusive: only one thread writes at a time, and no thread reads meanwhile

readWriteLock.writeLock().lock();

try {

System.out.println(Thread.currentThread().getName() + "Start writing data");

list.add(i);

System.out.println(Thread.currentThread().getName() +"Write successful");

} finally {

readWriteLock.writeLock().unlock();

}

}

public void read() {

//Shared: several threads may read at the same time

readWriteLock.readLock().lock();

try {

if (!list.isEmpty()) {

System.out.println(Thread.currentThread().getName() + "Start reading data");

Integer value = list.get((int) (Math.random() * list.size())); //index in [0,size-1]

System.out.println(Thread.currentThread().getName() + "Read in" + value + "success");

}

} finally {

readWriteLock.readLock().unlock();

}

}

}

10, Blocking queue

Commonly used blocking queues: ArrayBlockingQueue (ABQ), LinkedBlockingQueue (LBQ), and SynchronousQueue. Thread pools use blocking queues as their work queues.

See my detailed description of the collection.

Summarize some problems of the collection framework for yourself_ L_eraser's blog - CSDN blog

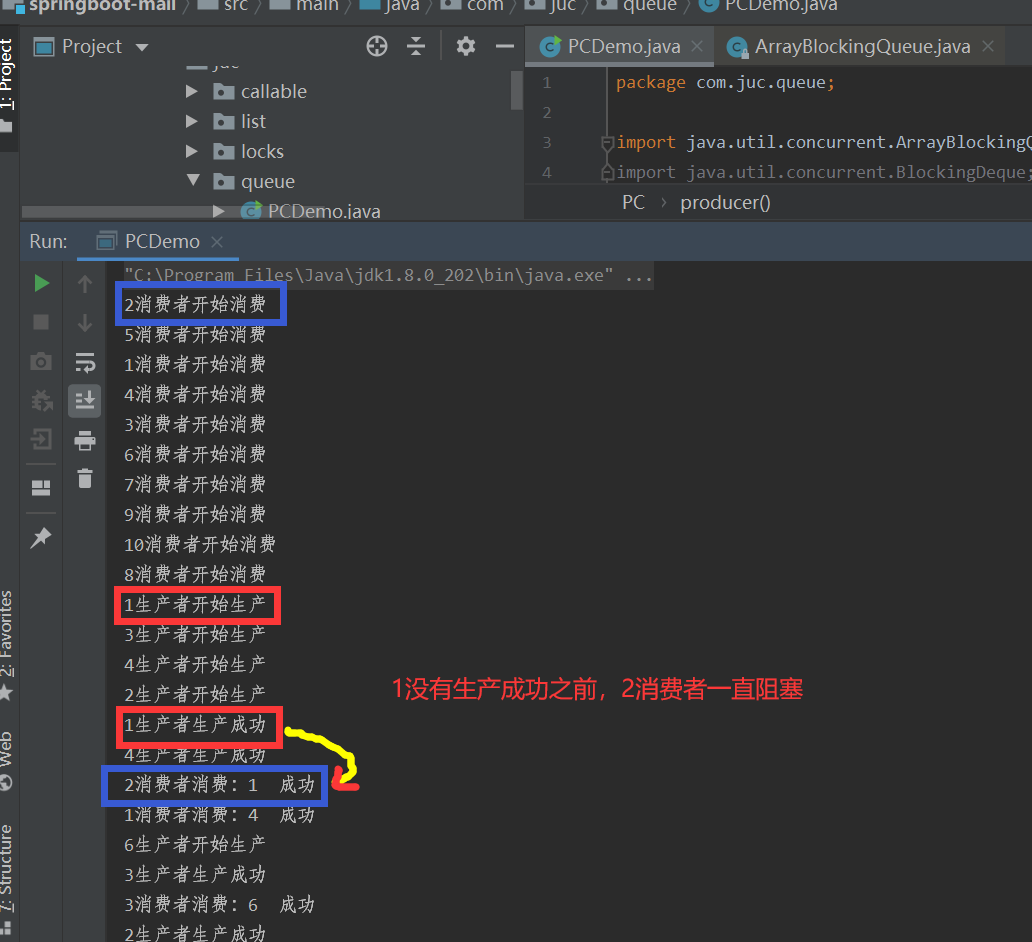

Blocking queues are a common way to solve the producer-consumer problem.

package com.juc.queue;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.BlockingDeque;

public class PCDemo {

public static void main(String[] args) {

PC pc = new PC();

for (int i = 1; i <= 10; i++) {

int finalI = i;

new Thread(() -> {

try {

pc.consumer();

} catch (InterruptedException e) {

e.printStackTrace();

}

}, i + "consumer").start();

}

for (int i = 1; i <= 10; i++) {

int finalI = i;

new Thread(() -> {

try {

pc.producer(finalI);

} catch (InterruptedException e) {

e.printStackTrace();

}

}, i + "producer").start();

}

}

}

class PC {

//Holds Integer products, matching what producer() puts in

private final ArrayBlockingQueue<Integer> blockingDeque = new ArrayBlockingQueue<>(10);

//consumption

public void consumer() throws InterruptedException {

System.out.println(Thread.currentThread().getName() + "Start consumption");

Integer a = blockingDeque.take(); //Blocks while the queue is empty, so "success" is not printed until a producer has put an item

System.out.println(Thread.currentThread().getName() + "Consumption:" + a + " success");

}

//production

public void producer(Integer i) throws InterruptedException {

System.out.println(Thread.currentThread().getName() + "Start production");

blockingDeque.put(i);//put takes the queue's internal lock and blocks while the queue is full

System.out.println(Thread.currentThread().getName() + "Production success");

}

}

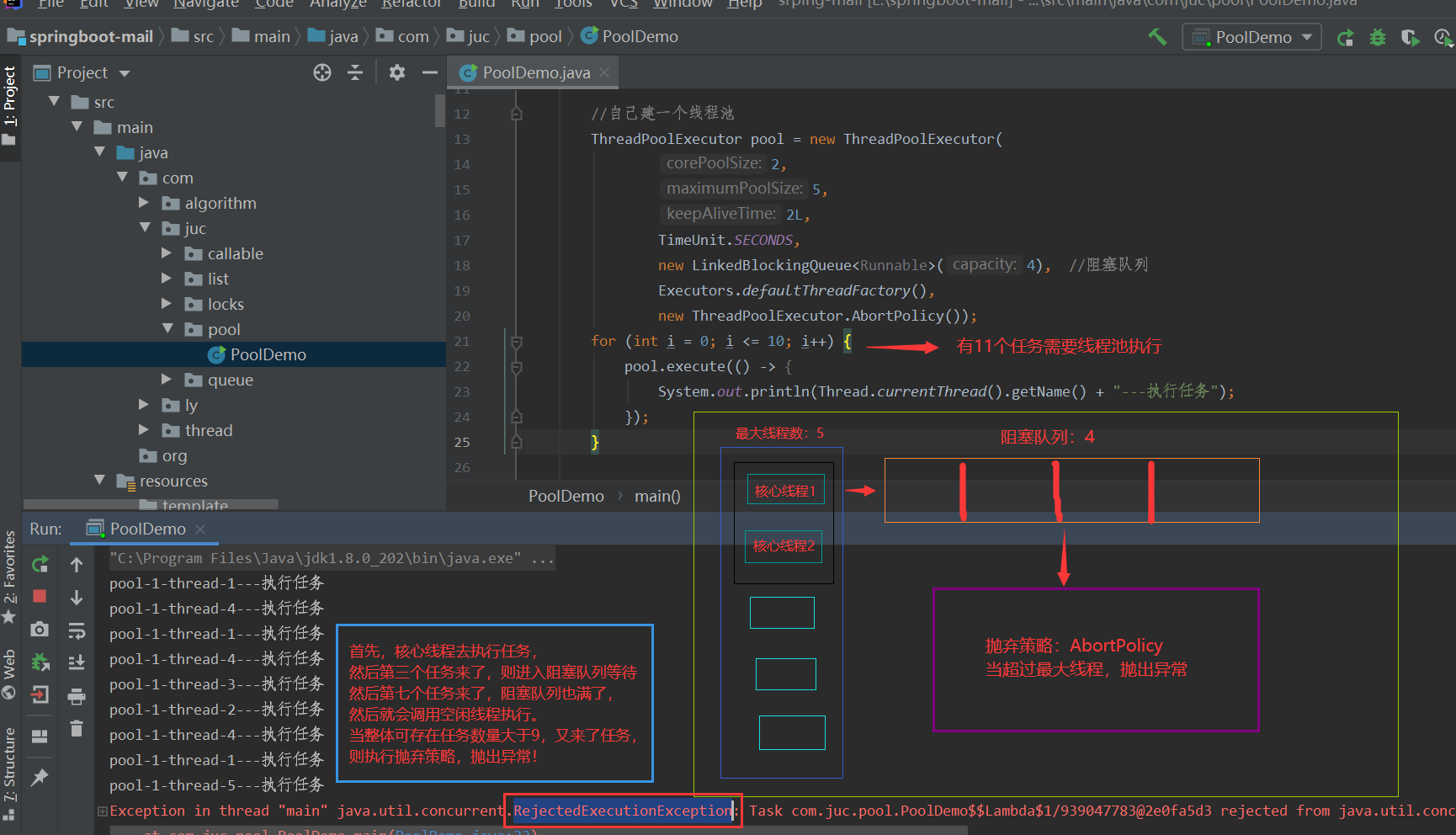

11, Thread pool

Three methods, seven parameters, and four rejection policies

Advantages of thread pools:

1. A pool keeps idle threads ready to run tasks, which saves the time of creating and destroying threads.

2. A pool manages multiple threads in one place.

3. As a result of 1, a pool improves the efficiency of task execution.

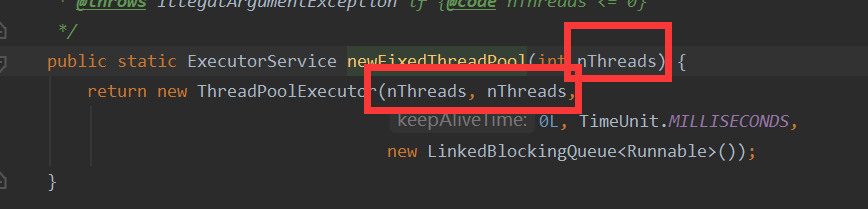

Three methods: ExecutorService pool = Executors.newFixedThreadPool(int i); Fixed-size thread pool

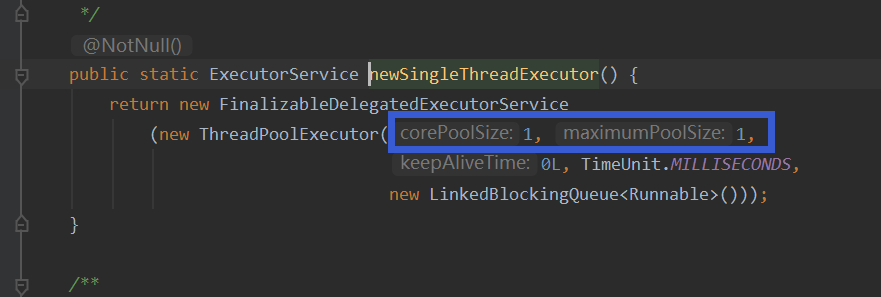

ExecutorService pool = Executors.newSingleThreadExecutor(); Single thread pool

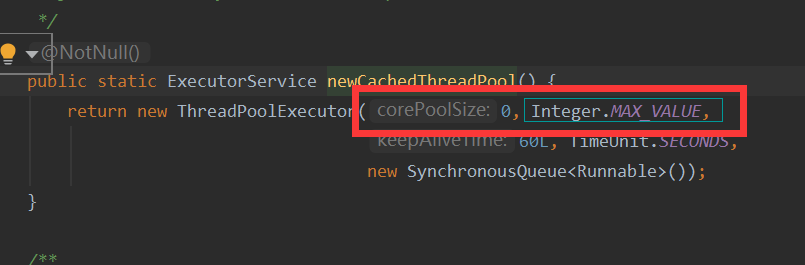

ExecutorService pool = Executors.newCachedThreadPool(); Variable-size thread pool; idle threads are kept for up to 60s and then destroyed.

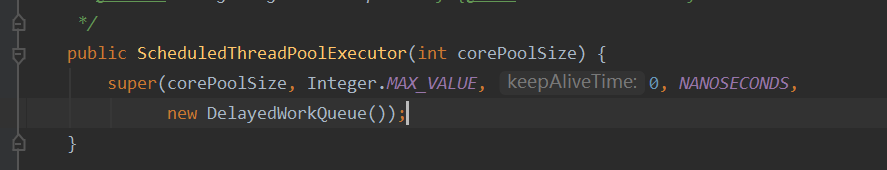

ExecutorService pool = Executors.newScheduledThreadPool(int i); Scheduled thread pool for delayed and periodic tasks; it uses a delayed work queue.

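Seven parameters (the arguments of the ThreadPoolExecutor constructor): corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, handler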

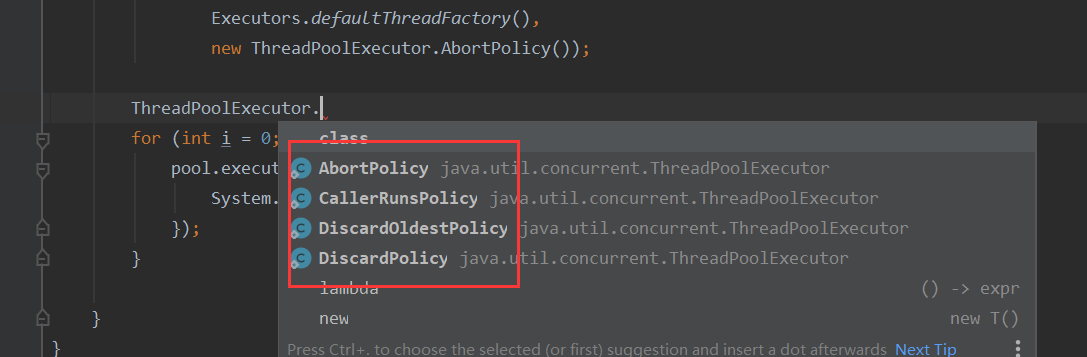

Four rejection policies

1. AbortPolicy (the default): reject the task and throw RejectedExecutionException.

2. CallerRunsPolicy: the task goes back where it came from and runs on the thread that submitted it, for example the main thread.

3. DiscardOldestPolicy: discard the oldest task waiting in the blocking queue, then retry the new one.

4. DiscardPolicy: silently drop the new task without running it.

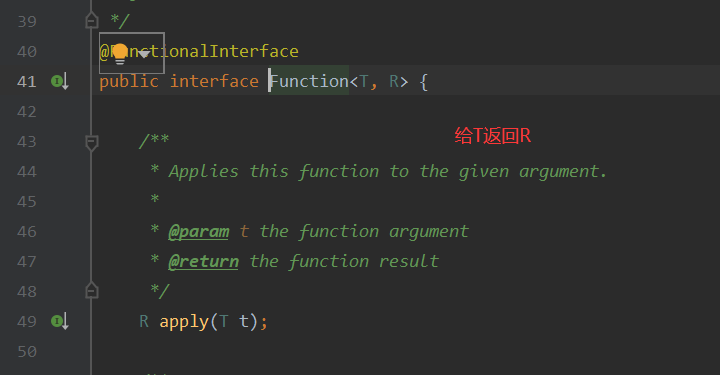
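To see the seven parameters and a rejection policy working together, here is a minimal sketch with deliberately tiny limits (one thread, one queue slot). PoolSketch and thirdTaskRejected are hypothetical names, and the sizes are chosen only to make the rejection easy to trigger.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PoolSketch {
    // Submits three blocking tasks to a pool that can run one and queue one.
    // Returns true if the third submission was rejected.
    static boolean thirdTaskRejected() throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1,                                     // corePoolSize
                1,                                     // maximumPoolSize
                60L,                                   // keepAliveTime
                TimeUnit.SECONDS,                      // unit
                new ArrayBlockingQueue<>(1),           // workQueue
                Executors.defaultThreadFactory(),      // threadFactory
                new ThreadPoolExecutor.AbortPolicy()); // handler
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keeps the worker busy until released
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        boolean rejected = false;
        try {
            pool.execute(blocker); // runs on the single worker thread
            pool.execute(blocker); // waits in the queue
            pool.execute(blocker); // no free thread, no queue slot: rejected
        } catch (RejectedExecutionException e) {
            rejected = true;
        } finally {
            release.countDown();
            pool.shutdown();
        }
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return rejected;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(thirdTaskRejected()); // true
    }
}
```

Swapping AbortPolicy for CallerRunsPolicy would make the third task run on the submitting thread instead of throwing.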

12, Four functional interfaces

Modern Java programmers need to master lambda expressions, chained calls, the four functional interfaces, and stream computation.

① Function: takes an argument of type T and returns a result of type R (override apply)

② Predicate: takes an argument and returns a boolean (override test)

③ Consumer: takes an argument and returns nothing (override accept)

④ Supplier: takes no argument and returns a result (override get)
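A minimal sketch of the four interfaces in use, written with lambdas and method references. FunctionalSketch, demo, and the variable names are hypothetical; the interfaces come from java.util.function.

```java
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;
import java.util.function.Supplier;

public class FunctionalSketch {
    static String demo() {
        Function<String, Integer> length = String::length; // apply: T in, R out
        Predicate<String> isEmpty = String::isEmpty;        // test: T in, boolean out
        StringBuilder sink = new StringBuilder();
        Consumer<String> append = sink::append;             // accept: T in, nothing out
        Supplier<String> source = () -> "juc";              // get: nothing in, T out

        String value = source.get();
        append.accept(value);
        return sink + ":" + length.apply(value) + ":" + isEmpty.test(value);
    }

    public static void main(String[] args) {
        System.out.println(demo()); // juc:3:false
    }
}
```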

13, Stream computation (using chained calls)

A Stream turns a collection into a sequence of elements on which a series of pipeline operations, serial or parallel, can be performed declaratively.

In other words, you tell the stream what you want, it processes the elements accordingly behind the scenes, and you simply collect the result.

package com.juc.stream;

import java.util.*;

//list.stream() turns a collection into a stream

public class StreamDemo {

public static void main(String[] args) {

List<User> list = new ArrayList<>();

list.add(new User("ali",20));

list.add(new User("fli",45));

list.add(new User("sol",16));

list.add(new User("uoo",8));

list.add(new User("qds",51));

//Keep users whose age is even and greater than 10, map them to upper-case names, sort in descending order, print the first

list.stream().

//Stream<T> filter(Predicate<? super T> predicate);

//Returns a stream consisting of the elements of this stream that match the given predicate.

filter((user)->{ return user.getAge() % 2 == 0;}).

filter((user -> {return user.getAge() > 10;})).

//<R> Stream<R> map(Function<? super T, ? extends R> mapper);

//Returns a stream consisting of the results of applying the given function to the elements of this stream.

map(((user) -> {return user.getName().toUpperCase();})).

// Stream<T> sorted(Comparator<? super T> comparator);

// Returns a stream consisting of the elements of this stream, sorted according to the provided Comparator.

// After map the elements are Strings, so name1 and name2 are names; comparing name2 to name1 sorts in descending order.

sorted((name1,name2)->{ return name2.compareTo(name1);}).

limit(1).// Returns a stream consisting of the elements of this stream, truncated to be no longer than the given size

// If this stream contains fewer than n elements, all of them are kept.

forEach(System.out::println);

//void forEach(Consumer<? super T> action);

//PrintStream out = System.out; a method reference of the form "instance::methodName" can be assigned to a functional interface variable

//println is a non-static method of the PrintStream class, so it is referenced through the instance System.out

/**

* Consumer<String> fun = System.out::println;

**/

}

}

class User{

private String name;

private int age;

public User(String name, int age) {

this.name = name;

this.age = age;

}

public User() {

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

}

-------------Output: SOL

14, ForkJoin (split and merge)

A large task is split into several subtasks that run in parallel (fork); the subtask results are then combined into the final result (join).

package com.juc.fork;

import java.util.concurrent.RecursiveTask;

public class ForkJoinDemo extends RecursiveTask<Long> {

private long star;

private long end;

//critical value

private long temp=1000000L;

public ForkJoinDemo(long star, long end) {

this.star = star;

this.end = end;

}

/**

* computing method

* @return Long

*/

@Override

protected Long compute() {

if((end-star)<temp){

//Small enough: sum directly, using primitive long to avoid boxing on every iteration

long sum = 0L;

for (long i = star; i < end; i++) {

sum+=i;

}

return sum;

}else {

//Divide and conquer using ForkJoin

//Split the range [star, end) at its midpoint

long middle = (star+ end)/2;

ForkJoinDemo forkJoinDemoTask1 = new ForkJoinDemo(star, middle);

forkJoinDemoTask1.fork(); //Split the task and push the subtask onto the work queue

ForkJoinDemo forkJoinDemoTask2 = new ForkJoinDemo(middle, end);

forkJoinDemoTask2.fork(); //Split the task and push the subtask onto the work queue

//join waits for each subtask and returns its result

long taskSum = forkJoinDemoTask1.join() + forkJoinDemoTask2.join();

return taskSum;

}

}

}

package com.juc.fork;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.ForkJoinPool;

import java.util.concurrent.ForkJoinTask;

import java.util.stream.LongStream;

public class Test {

public static void main(String[] args) throws ExecutionException, InterruptedException {

test1();

test2();

test3();

}

/**

* General calculation

*/

public static void test1(){

long star = System.currentTimeMillis();

long sum = 0L;

for (long i = 1; i < 20_0000_0000; i++) {

sum+=i;

}

long end = System.currentTimeMillis();

System.out.println("for Cycle: sum="+"Time:"+(end-star));

System.out.println(sum);

}

/**

* Using ForkJoin

*/

public static void test2() throws ExecutionException, InterruptedException {

long star = System.currentTimeMillis();

//Pool technology

ForkJoinPool forkJoinPool = new ForkJoinPool();

//New task: new ForkJoinDemo(0, 20_0000_0000)

ForkJoinTask<Long> task = new ForkJoinDemo(0L, 20_0000_0000L);

//Submit task to pool

ForkJoinTask<Long> submit = forkJoinPool.submit(task);

//Get the return value of submit

long aLong = submit.get();

System.out.println(aLong);

long end = System.currentTimeMillis();

System.out.println("ForkJoin: sum="+"Time:"+(end-star));

}

/**

* Parallel streams using Stream

*/

public static void test3(){

long star = System.currentTimeMillis();

//Parallel stream

long sum = LongStream.range(0L, 20_0000_0000L).// the interval [0, 2000000000)

// range excludes its end value and rangeClosed includes it; the interval of range is [startInclusive, endExclusive)

parallel().//Run the stream in parallel

reduce(0, Long::sum); // Long.sum is declared in public final class Long

// public static long sum(long a, long b); the 0 is the identity, that is, the initial result

/**

* There are three kinds of method references. Method references are represented by a pair of double colons::. Method references are another writing method of functional interfaces

*

* Static method reference, through class name:: static method name, such as Integer::parseInt

*

* Instance method reference, through instance object:: instance method, such as str::substring

*

* Construct method reference through class name:: new, such as User::new

*/

System.out.println(sum);

long end = System.currentTimeMillis();

System.out.println("Flow calculation: sum="+"Time:"+(end-star));

}

}

---------result:

for Cycle: sum=Time: 805

1999999999000000000

1999999999000000000

ForkJoin: sum=Time: 589

1999999999000000000

Flow calculation: sum=Time: 350

Sorting is a common problem in everyday work, and many ready-made algorithms address it, including several insertion sorts and several exchange sorts. Among them, merge sort has a good average time complexity, O(n log n), and is stable. Its core idea is to decompose a large problem into many small ones and merge their results.

Merge sort takes only 2-3 milliseconds for 10,000 random numbers, about 20 milliseconds for 100,000, and about 160 milliseconds on average for 1 million (depending on how disordered the randomly generated array is). So the algorithm itself performs well. Monitoring the application with JMX tools and the operating system's CPU monitor, however, shows that the whole algorithm runs on a single thread, with only one CPU core doing the work at any time.

The ForkJoin framework applies the same divide-and-conquer idea across multiple cores: the subtasks of an originally single-core program run on several cores at once without interfering with one another. This does not change the time complexity of the algorithm.

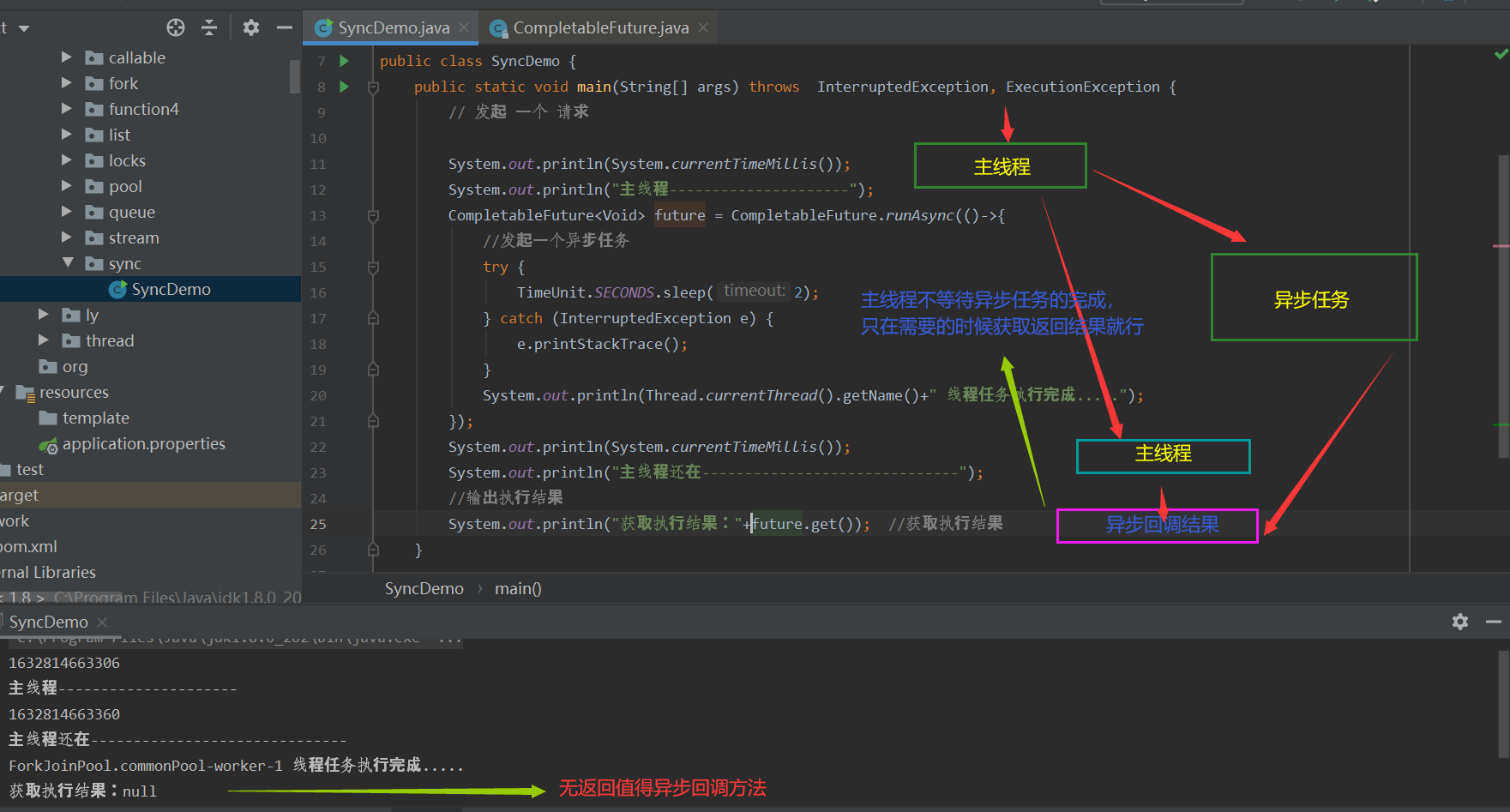
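As a rough sketch of the idea above, merge sort can be written as a RecursiveAction so that the two halves are sorted on different cores. The class name ParallelMergeSort and the THRESHOLD cutoff are assumptions made for illustration, not something taken from the original measurements.

```java
import java.util.Arrays;
import java.util.Random;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveAction;

// Hypothetical illustration: merge sort split across cores with ForkJoin.
class ParallelMergeSort extends RecursiveAction {
    private static final int THRESHOLD = 1 << 13; // assumed cutoff: smaller ranges are sorted sequentially

    private final int[] data;
    private final int from; // inclusive
    private final int to;   // exclusive

    ParallelMergeSort(int[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected void compute() {
        if (to - from <= THRESHOLD) {
            Arrays.sort(data, from, to); // small range: no further splitting
            return;
        }
        int mid = (from + to) >>> 1;
        // The two halves cover disjoint ranges, so the subtasks never interfere.
        invokeAll(new ParallelMergeSort(data, from, mid),
                  new ParallelMergeSort(data, mid, to));
        merge(mid);
    }

    // Merges the sorted ranges [from, mid) and [mid, to) back into data.
    private void merge(int mid) {
        int[] left = Arrays.copyOfRange(data, from, mid);
        int i = 0;
        int j = mid;
        int k = from;
        while (i < left.length && j < to) {
            data[k++] = (left[i] <= data[j]) ? left[i++] : data[j++];
        }
        while (i < left.length) {
            data[k++] = left[i++];
        }
        // Any remaining right-half elements are already in their final place.
    }

    static void sort(int[] data) {
        ForkJoinPool.commonPool().invoke(new ParallelMergeSort(data, 0, data.length));
    }

    public static void main(String[] args) {
        int[] numbers = new Random(42).ints(1_000_000).toArray();
        int[] expected = numbers.clone();
        Arrays.sort(expected);
        sort(numbers);
        System.out.println("same result as Arrays.sort: " + Arrays.equals(numbers, expected));
    }
}
```

The amount of comparison work is the same as in the sequential version; only the wall-clock time can drop, and how much depends on the number of cores and the chosen cutoff.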

15, Asynchronous callback

What is asynchronous?

Asynchronous: a group of students want to ask the teacher questions, but the teacher is busy explaining something to someone else. The students write their questions on a note and go off to do their own things. The next day, when the teacher has the answers, the teacher goes to the students and tells them.

Synchronous: the same students write their questions on a note but wait on the spot. Only when the teacher has finished with the others do they get their answers.

The implementation of asynchronous callbacks depends on multiple threads or multiple processes.

Software modules always interact through some interface. In terms of how the call is made, there are three categories: synchronous calls, callbacks, and asynchronous calls.

A synchronous call is a blocking, one-way call: the caller waits for the callee to finish executing before it continues.

A callback is a two-way calling mode: when its interface is called, the callee in turn calls an interface of the caller.

An asynchronous call is a mechanism similar to messages or events: when the service receives a message or an event occurs, it actively notifies the client (that is, it calls the client's interface). Callbacks and asynchronous calls are closely related: usually a callback is used to register for an asynchronous message, and an asynchronous call is used to deliver the notification.

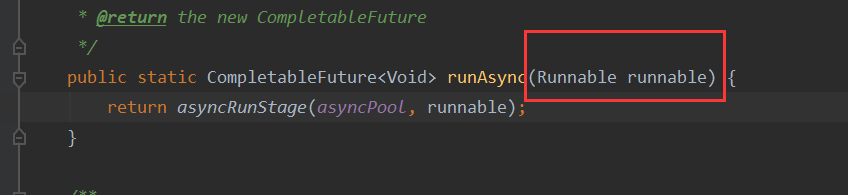

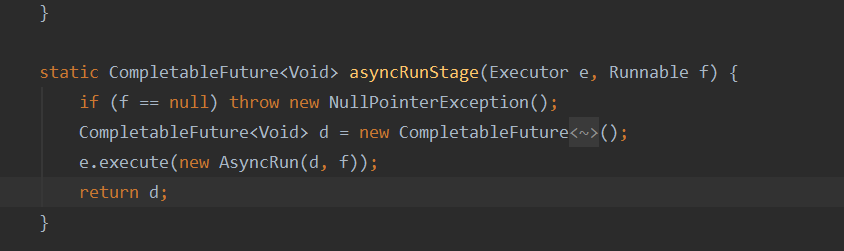

① Creating an asynchronous task with no return value

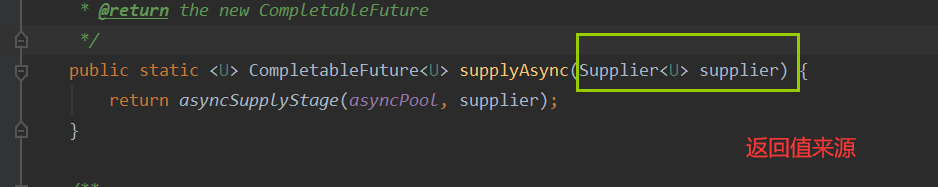
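For readers who cannot see the screenshot, here is a minimal sketch of a task with no return value. It assumes CompletableFuture.runAsync is the creation method meant here; the class name RunAsyncDemo and the helper runTask are made up for the example.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical illustration of an asynchronous task that returns nothing.
class RunAsyncDemo {

    // Starts the task, waits for it, and returns the name of the thread that ran it.
    static String runTask() {
        AtomicReference<String> worker = new AtomicReference<>();
        // runAsync takes a Runnable, so the future carries no value (CompletableFuture<Void>).
        CompletableFuture<Void> future = CompletableFuture.runAsync(
                () -> worker.set(Thread.currentThread().getName()));
        future.join(); // blocks until the task has finished; the result is always null
        return worker.get();
    }

    public static void main(String[] args) {
        System.out.println("main thread: " + Thread.currentThread().getName());
        System.out.println("task ran on: " + runTask());
    }
}
```

The caller is free to do other work between creating the future and calling join; join (or get) is only needed when the caller has to know that the task is done.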

② Asynchronous callback method with return value

package com.juc.sync;

import java.util.concurrent.CompletableFuture;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.TimeUnit;

public class SyncDemo {

public static void main(String[] args) throws ExecutionException, InterruptedException {

// Asynchronous task with a return value: the lambda implements the Supplier functional interface

CompletableFuture<Integer> completableFuture = CompletableFuture.supplyAsync(() -> {

System.out.println(Thread.currentThread().getName()+"Execute asynchronous method:");

try {

TimeUnit.SECONDS.sleep(2);

int i = 1 / 1; // change to 1 / 0 to throw ArithmeticException and trigger the error callback

} catch (InterruptedException e) {

e.printStackTrace();

}

return 1024; //Return value of supplier functional interface

});

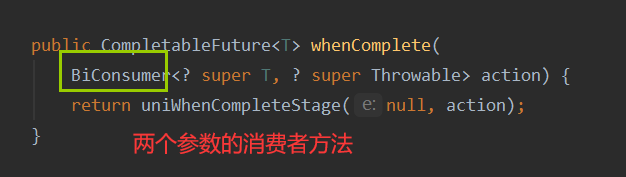

// return uniWhenCompleteStage(null, action);

// public CompletableFuture<T> whenComplete(BiConsumer<? super T, ? super Throwable> action)

//Override BiConsumer method

//Chain programming!!

System.out.println(completableFuture.whenComplete((t, u) -> {

//success callback

System.out.println("t=>" + t); //Normal return result

System.out.println("u=>" + u); //Error message for throwing exception

}).exceptionally((e) -> {

//error callback

System.out.println("Error callback:"+e.getMessage());

return 404;

}).get()); //If it is correct, you can get the original result; If an exception occurs, get can get the value returned by exceptionally

}

}

-------Asynchronous task calculation 1/0: The result is an error!

ForkJoinPool.commonPool-worker-1 Execute asynchronous method:

t=>null

u=>java.util.concurrent.CompletionException: java.lang.ArithmeticException: / by zero

Error callback: java.lang.ArithmeticException: / by zero

404

-------Perform correct calculations

ForkJoinPool.commonPool-worker-1 Execute asynchronous method:

t=>1024

u=>null

1024 --> result obtained by get()

16, JMM+Volatile

①JMM

The Java Memory Model (JMM) is not a physical thing; it is a set of specifications. To make reads and writes of shared variables by multiple threads safe, the JMM defines a set of happens-before rules that guarantee visibility and ordering between two operations in a multithreaded environment.

1. Program order rule. Within a single thread, each operation happens-before the operations that follow it in program order. That is, a single thread appears to execute from top to bottom.

2. Lock rule. An unlock of a lock happens-before every subsequent lock of that same lock. That is, a lock must be released before it can be acquired again (lock and unlock come in pairs).

3. volatile rule. A write to a volatile variable happens-before every subsequent read of that variable. That is, a volatile read always sees the latest value: once a thread writes to a volatile variable, the value is flushed to main memory immediately so that it is visible to all threads.

4. Thread start rule. A call to a thread's start method happens-before every operation in the started thread. That is, if thread A modifies a variable before calling thread B's start method, the modification is visible to B once B has started.

5. Thread join rule. Every operation in a thread happens-before another thread's successful return from join on it. That is, if thread A calls B.join(), A waits for B to finish, and any modification B made to a variable before it ended is visible to A after join returns.

6. Interruption rule. A call to a thread's interrupt method happens-before the interrupted thread's code detecting the interrupt, for example through Thread.interrupted().

7. Finalizer rule. The end of an object's constructor happens-before the start of its finalize method. That is, an object can be reclaimed only after it has been initialized.

8. Transitivity. If A happens-before B and B happens-before C, then A happens-before C.
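Rules 4 and 5 are easy to try out. The sketch below uses a plain static field with no volatile and no lock; the names HappensBeforeDemo and shared are invented for the example.

```java
// Hypothetical illustration of the thread start rule and the thread join rule.
class HappensBeforeDemo {
    private static int shared = 0; // deliberately neither volatile nor guarded by a lock

    // Returns {value the child thread saw, value the caller saw after join}.
    static int[] run() {
        int[] seenByChild = new int[1];
        shared = 1; // written before start()
        Thread child = new Thread(() -> {
            seenByChild[0] = shared; // start rule: the child is guaranteed to read 1
            shared = 2;              // written before the child terminates
        });
        child.start();
        try {
            child.join(); // join rule: the child's writes are visible after join returns
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] {seenByChild[0], shared};
    }

    public static void main(String[] args) {
        int[] result = run();
        System.out.println("child saw " + result[0] + ", caller saw " + result[1]);
    }
}
```

Without start and join establishing the ordering (for example, if the caller simply polled shared in a loop), neither read would be guaranteed.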

When multithreaded code is not covered by these rules, you can rely on a synchronization lock to guarantee atomicity, visibility and ordering. For visibility and ordering you can also rely on the volatile keyword.

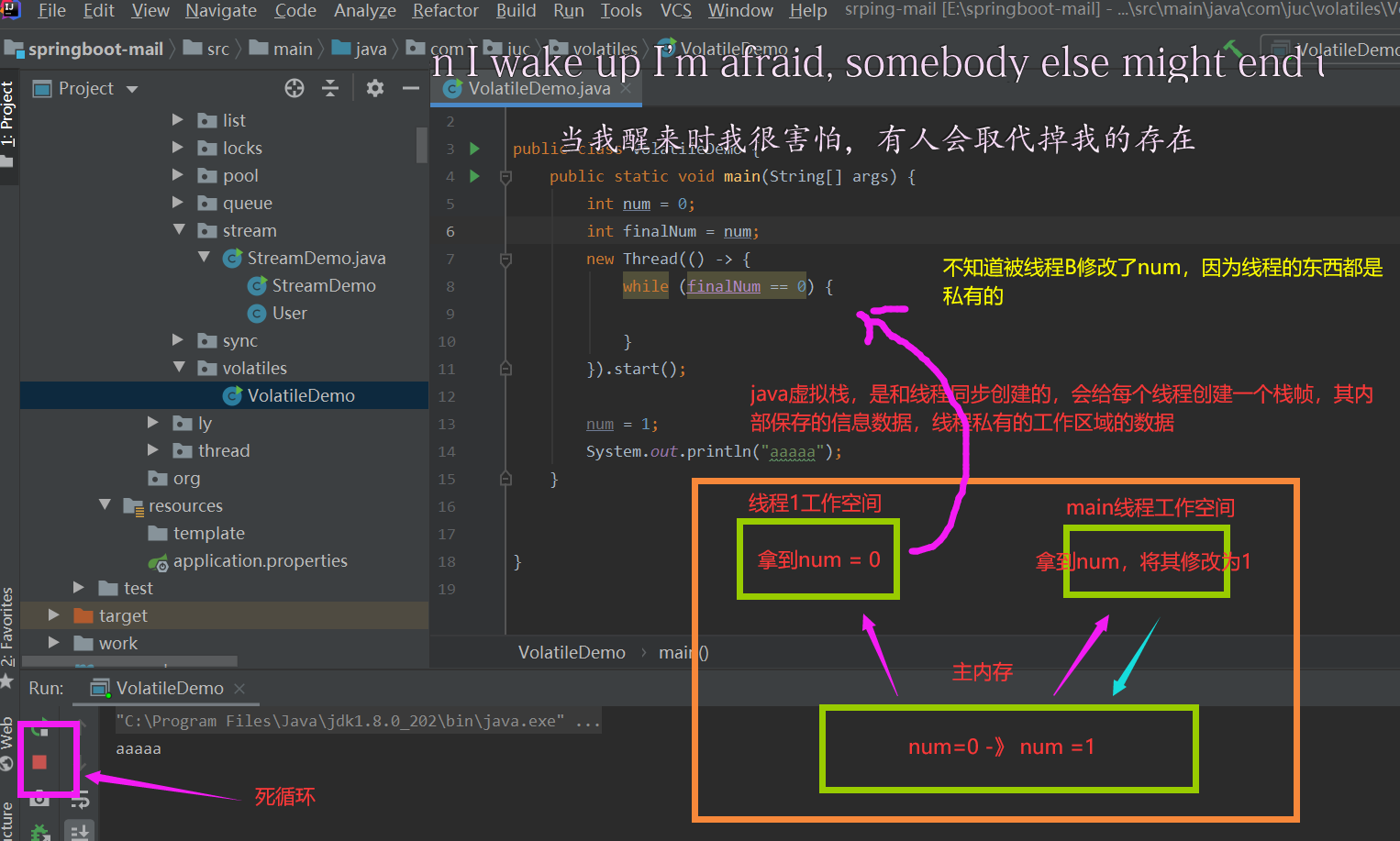

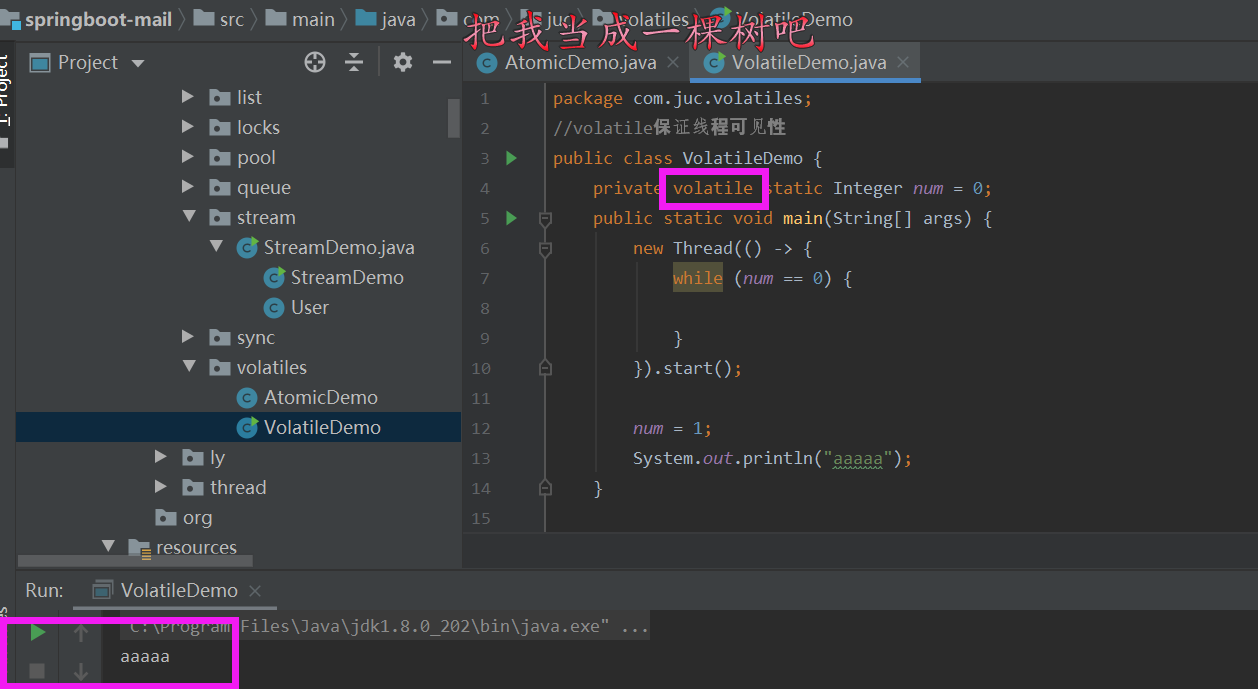

②volatile

volatile is a lightweight synchronization mechanism provided by the JVM. It modifies variables and has three properties:

1. It guarantees that the variable is visible across threads.

This can end an otherwise infinite loop: if a thread loops on num and num is not declared volatile, an update made by another thread may not be refreshed into the looping thread's working memory in time, so the thread keeps looping.
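The loop described here can be sketched as follows. The class name VolatileVisibilityDemo and the timings (100 ms before the write, up to 2 s waiting for the worker) are assumptions chosen for the example.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical illustration: a worker spins until it sees num change.
class VolatileVisibilityDemo {
    // Without volatile, the worker may keep reading a stale 0 and never stop.
    private static volatile int num = 0;

    // Returns true if the worker noticed the update and left its loop.
    static boolean workerStops() {
        num = 0;
        Thread worker = new Thread(() -> {
            while (num == 0) {
                // busy loop: waits until the new value becomes visible
            }
        });
        worker.setDaemon(true); // do not keep the JVM alive if the loop never ends
        worker.start();
        try {
            TimeUnit.MILLISECONDS.sleep(100);
            num = 1;           // volatile write: visible to the worker
            worker.join(2000); // give the worker up to 2 seconds to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerStops());
    }
}
```

Removing the volatile modifier does not make the loop hang on every JVM, but it removes the guarantee that the loop ends.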

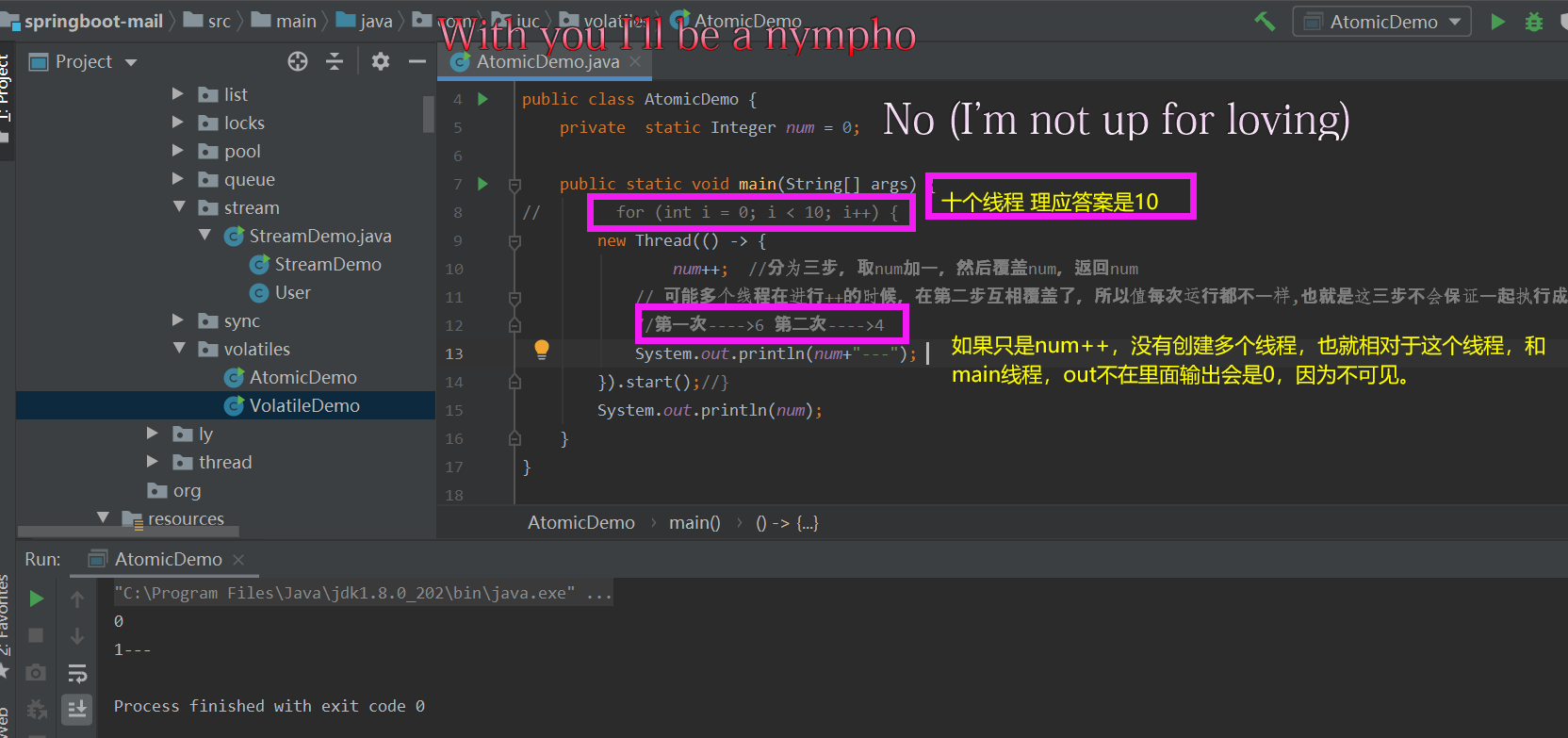

2. It does not guarantee atomicity.

To make an operation such as num++ atomic, you can create the num variable with the AtomicInteger type from the atomic package of JUC. private static volatile AtomicInteger number = new AtomicInteger();

Then use its increment method, number.incrementAndGet(); // Atomicity is guaranteed by CAS under the hood

volatile by itself only guarantees visibility; it does not make a compound operation such as ++ atomic.
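A small sketch of the difference, assuming 20 threads that each increment 1000 times (both numbers, and the class name AtomicCounterDemo, are arbitrary choices for the example):

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration: volatile int versus AtomicInteger under concurrent ++.
class AtomicCounterDemo {
    private static volatile int plain;
    private static final AtomicInteger atomic = new AtomicInteger();

    // Returns {final value of the volatile int, final value of the AtomicInteger}.
    static int[] count(int threads, int perThread) {
        plain = 0;
        atomic.set(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    plain++;                  // read, add, write: three steps, updates can be lost
                    atomic.incrementAndGet(); // one atomic step based on CAS
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new int[] {plain, atomic.get()};
    }

    public static void main(String[] args) {
        int[] result = count(20, 1000);
        System.out.println("volatile int:  " + result[0] + " (may be less than 20000)");
        System.out.println("AtomicInteger: " + result[1]);
    }
}
```

The AtomicInteger total is always threads × perThread; the volatile int can fall short because two threads may read the same old value before either writes back.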

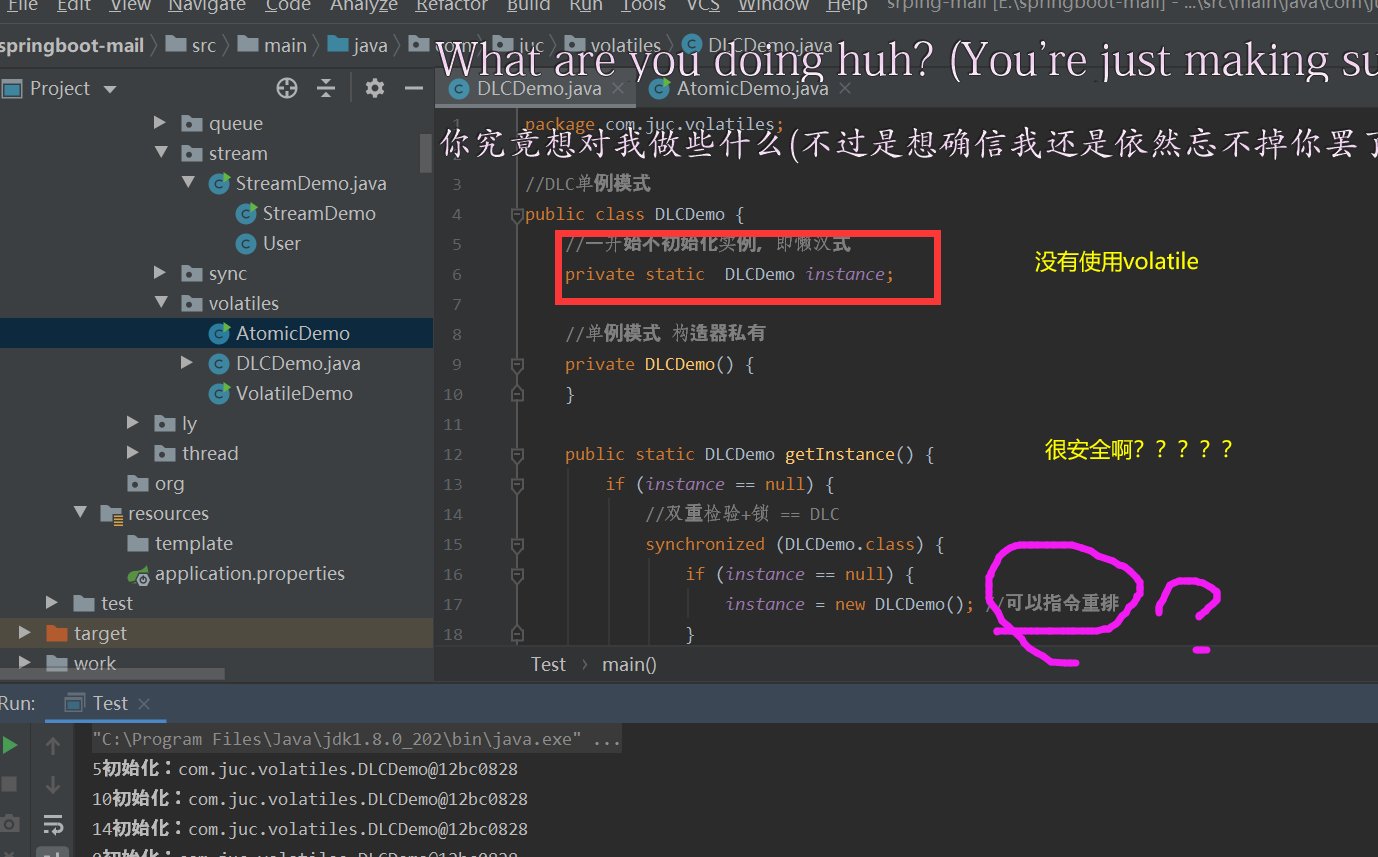

3. It prohibits instruction reordering.

Understanding instruction reordering

When a computer executes a program, the compiler and the processor often reorder instructions to improve performance. Reordering generally falls into three types:

① Compiler-optimization reordering: the compiler may rearrange the execution order of statements as long as the semantics of the single-threaded program do not change.

In other words, reordering statements causes no problem within a single thread, but under multithreaded execution it can, because variables shared between threads carry semantic dependencies that the compiler does not see.

② Instruction-level-parallelism reordering: modern processors use instruction-level parallelism to overlap the execution of multiple instructions. If there is no data dependency (that is, a later statement does not rely on the result of an earlier one), the processor may change the execution order of the corresponding machine instructions.

③ Memory-system reordering: because the processor uses caches and read/write buffers, load and store operations may appear to execute out of order. With multiple levels of cache (such as the L3 cache), there is a time lag in synchronizing data between memory and cache.

Of these, compiler-optimization reordering happens at compile time, while instruction-level-parallelism reordering and memory-system reordering are processor reordering. In a multithreaded environment these optimizations can lead to memory visibility problems.

Memory barriers are inserted around reads and writes of a volatile variable (a memory barrier, also called a memory fence, is a CPU instruction).

LoadLoad barrier: for the sequence Load1; LoadLoad; Load2, it ensures that the data to be read by Load1 has been read before Load2 and all subsequent reads access their data.

StoreStore barrier: for the sequence Store1; StoreStore; Store2, it ensures that the write of Store1 is visible to other processors before Store2 and all subsequent writes execute.

LoadStore barrier: for the sequence Load1; LoadStore; Store2, it ensures that the data to be read by Load1 has been read before Store2 and all subsequent writes are flushed.

StoreLoad barrier: for the sequence Store1; StoreLoad; Load2, it ensures that the write of Store1 is visible to all processors before Load2 and all subsequent reads execute.

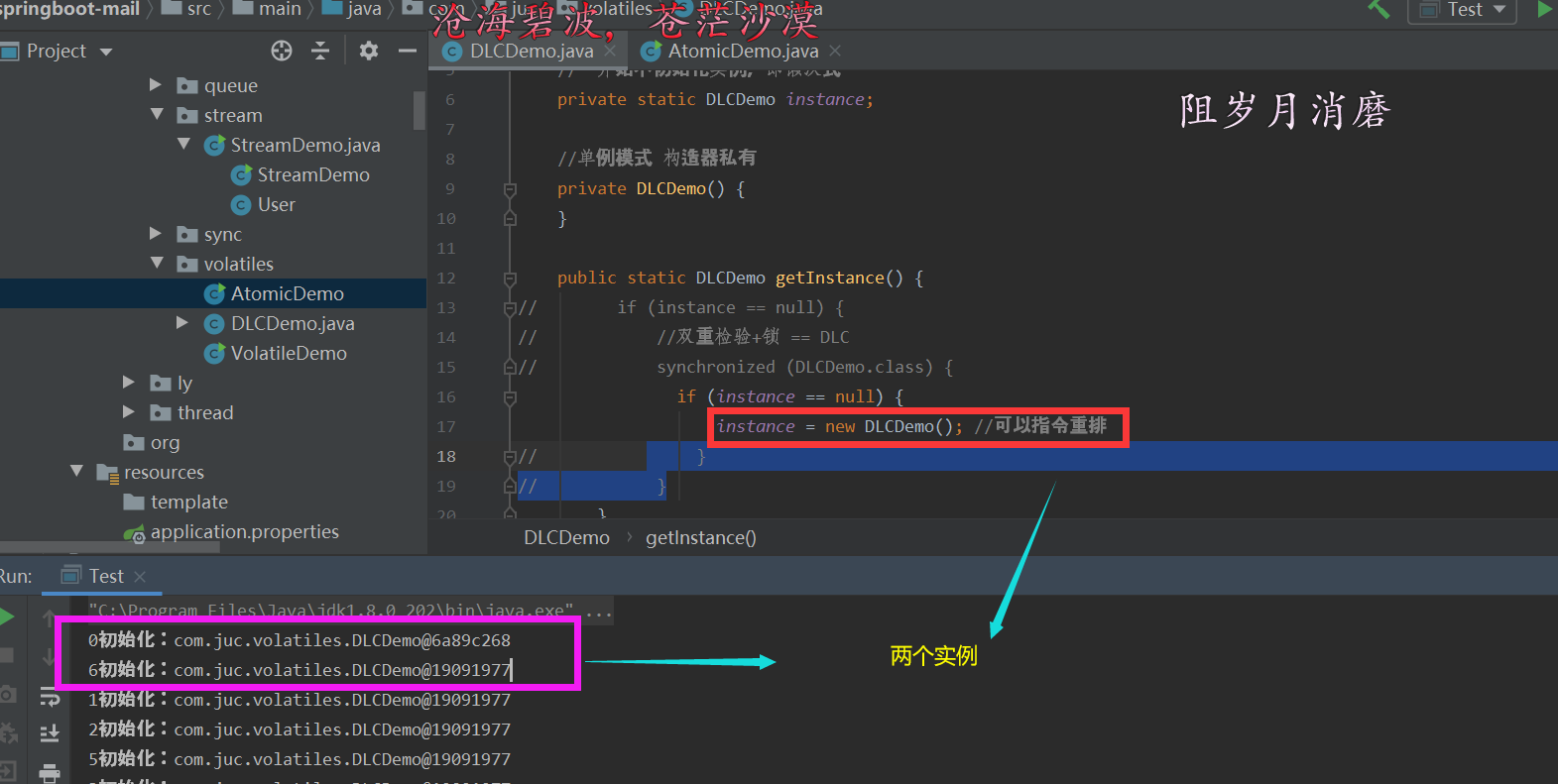

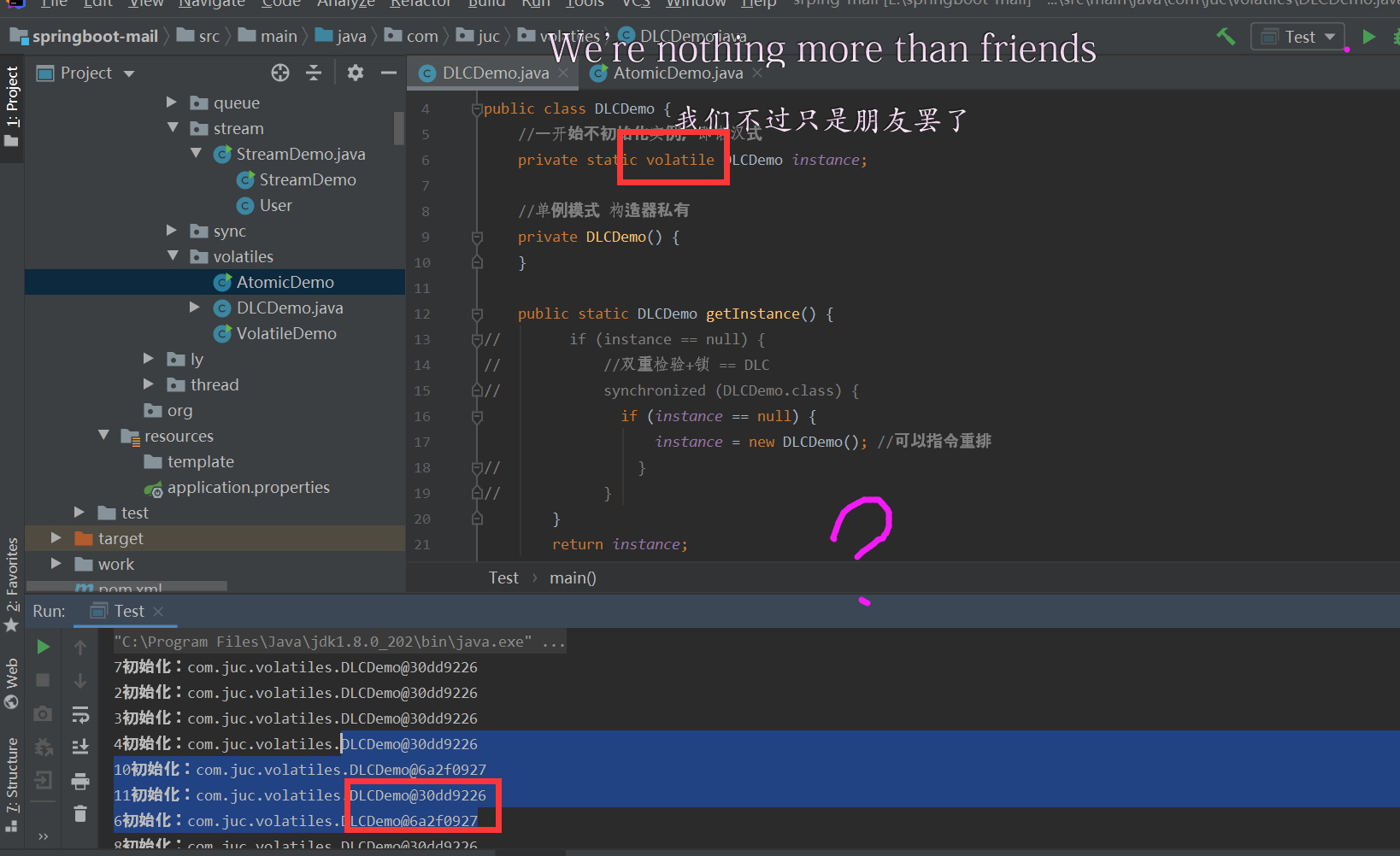

A volatile variable implements its memory semantics, that is, visibility and the prohibition of reordering, through memory barriers. A very typical example of prohibiting reordering is one implementation of the singleton pattern: the DCL (double-checked locking) lazy mode.

In the normal lazy singleton mode, the statement that creates the instance with new is not atomic. It breaks down into three steps:

// 1. Allocate memory for the object

// 2. Initialize the object

// 3. Point instance at the memory just allocated. From this moment, instance != null

Steps 2 and 3 may be reordered, so another thread may see instance != null while the object has not yet been initialized.
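A minimal sketch of the DCL lazy mode, with the field declared volatile so that steps 2 and 3 cannot be reordered. The class name LazyMan and the helper distinctInstances are hypothetical and exist only to try the pattern out.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical illustration of double-checked locking with a volatile field.
class LazyMan {
    // volatile forbids publishing the reference before the object is initialized
    private static volatile LazyMan instance;

    private LazyMan() {
    }

    static LazyMan getInstance() {
        if (instance == null) {                 // first check, without the lock
            synchronized (LazyMan.class) {
                if (instance == null) {         // second check, with the lock held
                    instance = new LazyMan();
                }
            }
        }
        return instance;
    }

    // Calls getInstance from several threads and counts the distinct objects returned.
    static int distinctInstances(int threads) {
        Set<LazyMan> seen = ConcurrentHashMap.newKeySet();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> seen.add(getInstance()));
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println("distinct instances: " + distinctInstances(16));
    }
}
```

The first check keeps the common path lock-free once the instance exists; the second check stops two threads that both passed the first check from each creating an object.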

17, Singleton mode

① The singleton mode is not absolutely safe. Although the constructor is private, it can be broken by reflection.

That is, multiple instances can be created.

package com.juc.volatiles;

import java.lang.reflect.Constructor;

import java.lang.reflect.Field;

import java.lang.reflect.InvocationTargetException;

//Use reflection break singleton mode

public class ReflectDLC {

//Lazy singleton mode

private static boolean key = false;

private ReflectDLC() {

// A flag guarded by a lock looks safe, but reflection can reset the flag (see main)

synchronized (ReflectDLC.class) {

if (key == false) {

key = true;

} else {

throw new RuntimeException("Do not attempt to use reflection to break the singleton");

}

}

System.out.println(Thread.currentThread().getName() + " ok");

}

private volatile static ReflectDLC lazyMan;

// Double-checked locking, DCL lazy mode for short

public static ReflectDLC getInstance() {

//Need to lock

if (lazyMan == null) {

synchronized (ReflectDLC.class) {

if (lazyMan == null) {

lazyMan = new ReflectDLC();

}

}

}

return lazyMan;

}

//Single thread is ok

//But if it's concurrent

public static void main(String[] args) throws NoSuchMethodException,

IllegalAccessException, InvocationTargetException, InstantiationException, NoSuchFieldException {

//In normal mode, it is an object instance

// ReflectDLC instance1 = ReflectDLC.getInstance();

// ReflectDLC instance2 = ReflectDLC.getInstance();

/**

* Using reflection

*/

Field key = ReflectDLC.class.getDeclaredField("key");

key.setAccessible(true);

Constructor<ReflectDLC> declaredConstructor = ReflectDLC.class.getDeclaredConstructor();

declaredConstructor.setAccessible(true); // Bypass the private access check on the constructor

ReflectDLC instance2 = declaredConstructor.newInstance();

key.set(instance2, false); // key is true once the first instance exists; resetting it to false lets the constructor run again

// Equivalent to ReflectDLC.key = false, because key is a static field

ReflectDLC instance1 = declaredConstructor.newInstance();

System.out.println(instance1);

System.out.println(instance2);

System.out.println(instance1 == instance2);

}

}

-----------Two objects were successfully created with reflection

main ok

main ok

com.juc.volatiles.ReflectDLC@1fb3ebeb

com.juc.volatiles.ReflectDLC@548c4f57

false

② Using static inner classes to implement lazy singleton mode

//Static inner class

public class Holder {

private Holder(){

}

public static Holder getInstance(){

return InnerClass.holder;

}

public static class InnerClass{

private static final Holder holder = new Holder();

}

}

----Why this works:

1. When outside code obtains the Holder instance through the getInstance method, the method accesses a static field of the static inner class. This satisfies the conditions for class initialization, so the inner class is initialized and its static field is assigned new Holder().

2. Class initialization runs only once and the field is final, so there will never be a second object.

Conditions for class initialization:

1. When an instance of a class is created (new Object), the class must first be loaded into memory and initialized.

2. The class containing the main method must first be loaded into memory and initialized.

3. A static inner class, like a non-static inner class, is loaded and initialized only when it is used (when one of its static methods or static fields is accessed).

4. When a static inner class is reached through the outer class, the outer class is loaded first and then the static inner class (but loading a static inner class does not itself depend on the outer class: Inner.INNER)
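Condition 3 is what makes the static inner class singleton lazy, and it can be observed by recording when each piece of code runs. The sketch below is an assumption-laden illustration: the class name LazyHolderDemo, the events list and the method touchOuterOnly are all invented for the example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical illustration: the inner class is initialized only on first use.
class LazyHolderDemo {
    static final List<String> events = new ArrayList<>();

    private LazyHolderDemo() {
        events.add("constructor");
    }

    private static class InnerClass {
        static {
            events.add("InnerClass initialized");
        }
        // Assigned exactly once, during the initialization of InnerClass.
        static final LazyHolderDemo INSTANCE = new LazyHolderDemo();
    }

    static LazyHolderDemo getInstance() {
        events.add("getInstance called");
        return InnerClass.INSTANCE; // first access triggers the initialization of InnerClass
    }

    // Uses the outer class without touching InnerClass.
    static void touchOuterOnly() {
        events.add("outer class used");
    }

    public static void main(String[] args) {
        touchOuterOnly();
        LazyHolderDemo first = getInstance();
        LazyHolderDemo second = getInstance();
        System.out.println(events);
        System.out.println("same object: " + (first == second));
        System.out.println("constructor calls: " + Collections.frequency(events, "constructor"));
    }
}
```

Using the outer class alone does not create the instance; the entry "InnerClass initialized" appears only after the first "getInstance called".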

Initialization gives the static variables of the class their correct initial values.

The preparation phase (allocating memory for the class's static variables and setting default initial values) and the initialization phase may seem to contradict each other, but they do not.

Suppose the class contains the statement private static int a = 10. It is processed as follows: after the bytecode file is loaded into memory, the verification step of linking runs first. Once verification passes, the preparation phase allocates memory for a. Because a is static, at this point a equals the default initial value of int, that is, a = 0. Resolution follows (covered later).

Only in the initialization step is the real value 10 assigned to a, so that a = 10.

③ Hungry-style (eager) singleton

/**

* Hungry-style (eager) singleton

*/

public class Hungry {

/**

* May waste space: these arrays are allocated as soon as the class is initialized, even if the instance is never used

*/

private byte[] data1=new byte[1024*1024];

private byte[] data2=new byte[1024*1024];

private byte[] data3=new byte[1024*1024];

private byte[] data4=new byte[1024*1024];

private Hungry(){

}

private final static Hungry hungry = new Hungry(); // Created once when the class is initialized; final prevents the reference from being reassigned

public static Hungry getInstance(){

return hungry; //Direct return

}

}

18, Atomic reference (Solving ABA problem of CAS)

An atomic reference with a stamp (AtomicStampedReference) uses a version-number mechanism to implement the idea of optimistic locking.

ABA problem: in multithreaded computing, the ABA problem occurs during synchronization when a location is read twice, both reads return the same value, and "same value" is taken to mean "nothing has changed".

However, between the two reads another thread can run, change the value, do other work, and then change the value back, tricking the first thread into believing that nothing has changed. Even if the second thread's work is itself correct, the first thread's assumption has been violated.

- Concurrency 1 (upper half): reads the data, whose initial value is A. It then plans to apply a CAS optimistic lock, expecting the modification to succeed only if the data is still A

- Concurrency 2: modifies the data to B

- Concurrency 3: modifies the data back to A

- Concurrency 1 (lower half): the CAS optimistic lock checks that the value is still A and modifies the data

CAS checks a condition and then modifies the resource. Even though someone else got in first and modified the resource, the check in concurrency 1 (lower half) still succeeds, although the actual situation is no longer what it was.

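// The reference is compared with ==, so use Integer values inside the cache (-128 to 127) or reuse the same object

AtomicStampedReference<Integer> atomicStampedRef = new AtomicStampedReference<>(100, 1);

atomicStampedRef.compareAndSet(100, 101, atomicStampedRef.getStamp(), atomicStampedRef.getStamp() + 1);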

AtomicStampedReference internally maintains not only the object value but also a stamp (I call it a timestamp here; in fact it can be any integer used to represent the state or version).

When the value held by an AtomicStampedReference is modified, the stamp must be updated along with the data itself.

When AtomicStampedReference sets the object value, both the object value and the stamp must match the expected values for the write to succeed. Therefore, even if the object value has been changed and then written back to its original value, an improper write is prevented as long as the stamp has changed.

A typical stamp is an auto-incrementing version number.

The source code of AtomicStampedReference uses a copy-on-write strategy to ensure thread safety: it holds a reference to an immutable Pair (value plus stamp), and every change creates a new Pair.
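The A, B, A sequence from this section can be replayed in a single thread to see the stamp at work. This is only a sketch: the class name AbaDemo is made up, and the values 100, 101 and 102 are chosen inside the Integer cache because compareAndSet compares references with ==.

```java
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical illustration: a stale stamp makes the CAS fail even though the value is A again.
class AbaDemo {

    // Returns {A->B succeeded, B->A succeeded, stale CAS succeeded}.
    static boolean[] run() {
        AtomicStampedReference<Integer> ref = new AtomicStampedReference<>(100, 1);

        // Concurrency 1 (upper half): remembers value 100 and stamp 1.
        int staleStamp = ref.getStamp();

        // Concurrency 2 and 3: A -> B -> A, bumping the stamp each time.
        boolean toB = ref.compareAndSet(100, 101, ref.getStamp(), ref.getStamp() + 1);
        boolean backToA = ref.compareAndSet(101, 100, ref.getStamp(), ref.getStamp() + 1);

        // Concurrency 1 (lower half): the value is 100 again, but the stamp is now 3.
        boolean staleCas = ref.compareAndSet(100, 102, staleStamp, staleStamp + 1);

        return new boolean[] {toB, backToA, staleCas};
    }

    public static void main(String[] args) {
        boolean[] result = run();
        System.out.println("A -> B: " + result[0]);
        System.out.println("B -> A: " + result[1]);
        System.out.println("CAS with the old stamp: " + result[2]);
    }
}
```

With a plain AtomicInteger the last compareAndSet would succeed, which is exactly the ABA problem.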

19, CAS

CAS, namely compare and swap, is a well-known lock-free algorithm. Lock-free programming synchronizes variables between multiple threads without using locks, that is, without blocking threads, so it is also called non-blocking synchronization. The CAS algorithm involves three operands:

- The memory value V to be read and written

- The expected value A to compare against

- The new value B to be written

If and only if the value of V equals A, CAS atomically updates V to the new value B; otherwise it does nothing (the compare and the replace together form one atomic operation). It is generally used in a spin, that is, retried constantly until it succeeds.

CAS is an implementation of the optimistic locking idea: it always assumes that it can complete the operation successfully. When multiple threads use CAS on the same variable at the same time, only one wins and updates it; the rest fail. A failed thread is not suspended. It is merely told that it failed and may try again, or it may give up. With CAS, a thread can detect interference from other threads and handle it appropriately even without a lock.
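The read, compute, compare-and-set, retry cycle can be written out by hand on top of AtomicInteger.compareAndSet. The class name CasLoopDemo and the methods addWithCas and runConcurrently are hypothetical; in real code AtomicInteger.addAndGet already does this.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration of a CAS retry (spin) loop.
class CasLoopDemo {

    // Adds delta to value and returns the new value, retrying until the CAS wins.
    static int addWithCas(AtomicInteger value, int delta) {
        while (true) {
            int expected = value.get();     // A: the value we believe is in memory
            int updated = expected + delta; // B: the value we want to write
            if (value.compareAndSet(expected, updated)) {
                return updated;             // V was still A, so it is now B
            }
            // Another thread changed V in between: read again and retry.
        }
    }

    // Lets several threads add 1 concurrently and returns the final total.
    static int runConcurrently(int threads, int perThread) {
        AtomicInteger value = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    addWithCas(value, 1);
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return value.get();
    }

    public static void main(String[] args) {
        System.out.println("total: " + runConcurrently(8, 10_000));
    }
}
```

No thread is ever blocked here; a thread that loses the race simply goes around the loop again, which is also why heavy contention makes the loop expensive.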

Most classes in the java.util.concurrent.atomic package are implemented using CAS operations.

CAS disadvantages:

- The spin loop costs time when retries are frequent;

- A single CAS can guarantee the atomicity of only one shared variable;

- ABA problem

import java.util.concurrent.atomic.AtomicInteger;

public class casDemo {

// CAS: compareAndSet, compare and swap

public static void main(String[] args) {

AtomicInteger atomicInteger = new AtomicInteger(2020); //actual value

//boolean compareAndSet(int expect, int update)

//Expected value, updated value

//If the actual value is the same as my expectation, update it

//If the actual value is different from my expectation, it will not be updated

System.out.println(atomicInteger.compareAndSet(2020, 2021));

System.out.println(atomicInteger.get());

// CAS is a concurrency primitive of the CPU

atomicInteger.getAndIncrement(); // ++ operation: the actual value goes from 2021 to 2022

// The expected value is 2020 but the actual value is now 2022, so the modification fails

System.out.println(atomicInteger.compareAndSet(2020, 2021));

System.out.println(atomicInteger.get());

}

}

If you look inside the AtomicInteger class, you will find that it relies on another class: Unsafe.

sun.misc.Unsafe is a utility class used internally by the JDK. It exposes some functions that are "unsafe" in the Java sense to Java-level code, so that the JDK can implement in Java more of the functionality that is platform-dependent and could otherwise only be written in a native language (such as C or C++). This class should not be used outside the JDK core class library.

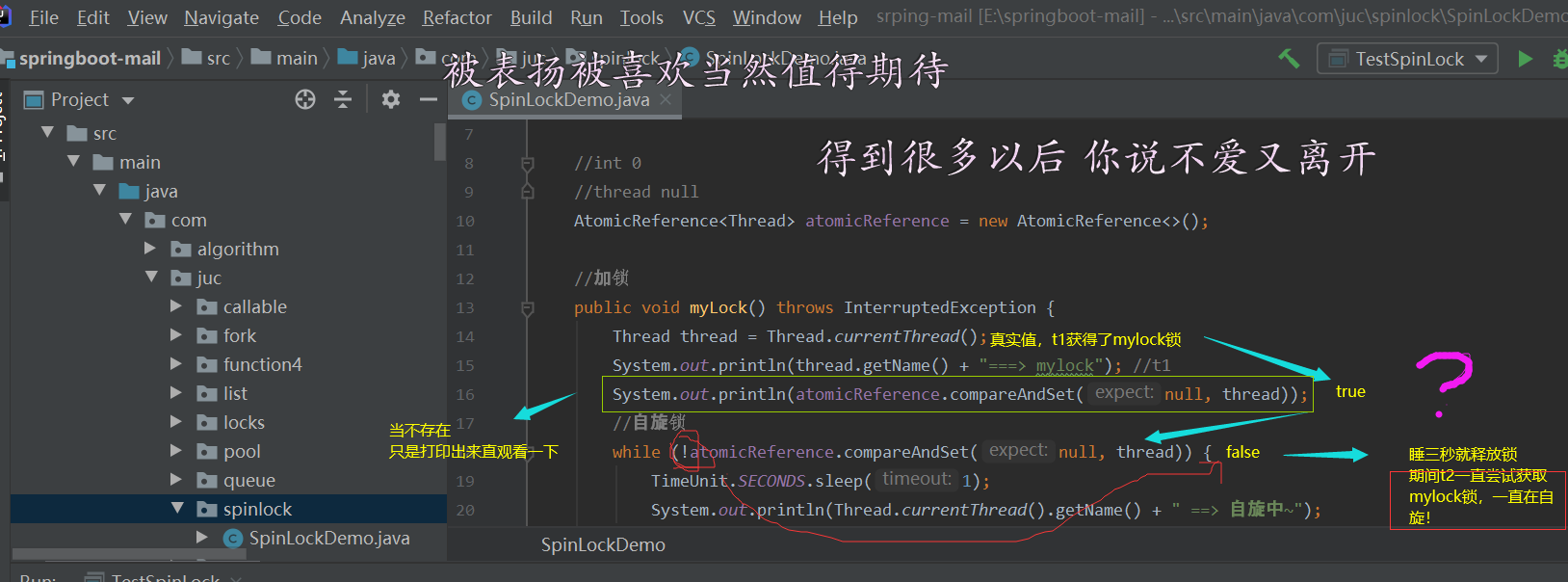

--What is a spin lock?

Spin lock: when a thread tries to acquire a lock that another thread already holds, it waits in a loop, repeatedly checking whether the lock can be acquired, and does not leave the loop until it gets the lock.

The thread waiting for the lock stays active the whole time but performs no useful work. Using this kind of lock causes busy-waiting.

A spin lock is a locking mechanism proposed to protect shared resources. Spin locks are in fact similar to mutexes: both are used to enforce mutually exclusive use of a resource.

Whether it is a mutex or a spin lock, there can be at most one holder at any time, that is, at most one execution unit can hold the lock at any time. However, they differ slightly in scheduling.

With a mutex, if the resource is already occupied, the applicant can only go to sleep (enter the blocked state).

A spin lock, by contrast, does not put the caller to sleep. If the spin lock is already held by another execution unit, the caller keeps looping to check whether the holder has released it. This is where the name "spin" comes from.

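import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicReference;

public class SpinlockDemo {

// Default values: int is 0, a Thread reference is null (null here means the lock is free)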

AtomicReference<Thread> atomicReference=new AtomicReference<>();

//Lock

public void myLock(){

Thread thread = Thread.currentThread();

System.out.println(thread.getName()+"===> mylock"); //t1

//Spin lock

while (!atomicReference.compareAndSet(null,thread)){

System.out.println(Thread.currentThread().getName()+" ==> In spin~");

}

}

//Unlock

public void myunlock(){

Thread thread=Thread.currentThread();

System.out.println(thread.getName()+"===> myUnlock"); //t1

atomicReference.compareAndSet(thread,null);

}

}

class TestSpinLock {

public static void main(String[] args) throws InterruptedException {

//test

SpinlockDemo spinlockDemo=new SpinlockDemo();

new Thread(()->{

spinlockDemo.myLock();

try {

TimeUnit.SECONDS.sleep(3);

} catch (Exception e) {

e.printStackTrace();

} finally {

spinlockDemo.myunlock();

}

},"t1").start();

TimeUnit.SECONDS.sleep(1);

new Thread(()->{

spinlockDemo.myLock();

try {

TimeUnit.SECONDS.sleep(3);

} catch (Exception e) {

e.printStackTrace();

} finally {

spinlockDemo.myunlock();

}

},"t2").start();

}

}

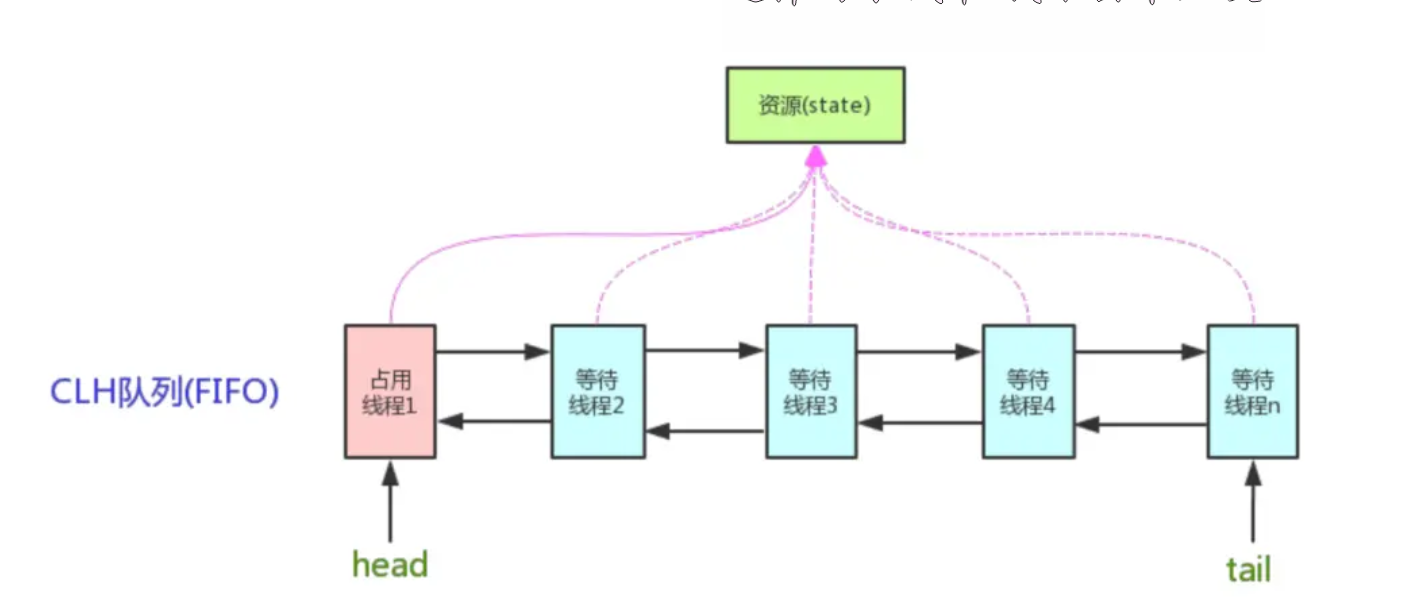

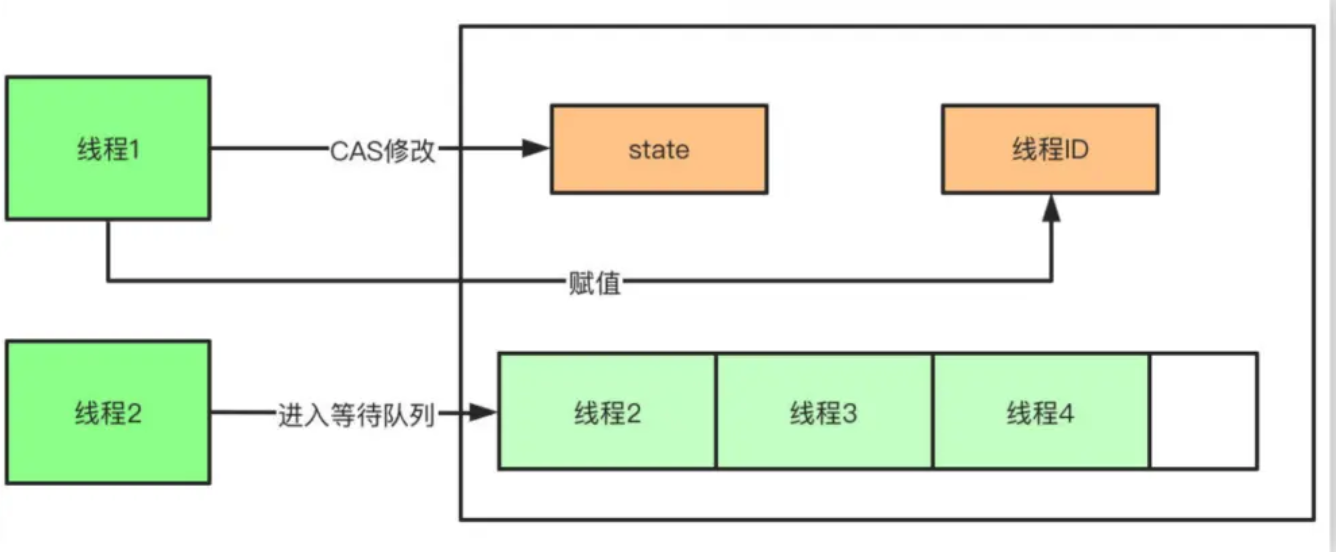

20, AQS (cas based lock synchronization framework)

AbstractQueuedSynchronizer, abstract queue synchronizer. AQS defines a synchronizer framework for multi-threaded access to shared resources, and many synchronization class implementations rely on it, such as ReentrantLock/Semaphore/CountDownLatch.

The core idea of AQS is:

If the requested shared resource is idle, the thread requesting it becomes the active worker thread and the shared resource is set to the locked state. (To lock, the thread tries to modify the state value through CAS (CompareAndSwap). If the state is successfully set to 1 and the current thread is recorded as the owner, locking has succeeded. Once the lock is taken, other threads are blocked and placed in the waiting queue. When the lock is released, the state is reset to 0, the owner thread is cleared, and a thread in the queue is woken up.)
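The state handling just described (0 means free, 1 means locked, plus an owner thread) can be sketched as a tiny non-reentrant lock built on AbstractQueuedSynchronizer. The class name SimpleMutex is hypothetical; the sketch follows the general shape of the mutex example in the AQS documentation and is not how ReentrantLock itself is written.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical illustration: a non-reentrant mutex on top of AQS.
class SimpleMutex {

    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int ignored) {
            // CAS the state from 0 (free) to 1 (locked) and record the owner.
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false; // AQS will queue and park the calling thread
        }

        @Override
        protected boolean tryRelease(int ignored) {
            if (getExclusiveOwnerThread() != Thread.currentThread()) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null); // clear the owner
            setState(0);                   // back to free; AQS then wakes a queued thread
            return true;
        }

        boolean isLocked() {
            return getState() == 1;
        }
    }

    private final Sync sync = new Sync();

    void lock() {
        sync.acquire(1); // blocks in the AQS queue while tryAcquire fails
    }

    boolean tryLock() {
        return sync.tryAcquire(1);
    }

    void unlock() {
        sync.release(1);
    }

    boolean isLocked() {
        return sync.isLocked();
    }

    public static void main(String[] args) {
        SimpleMutex mutex = new SimpleMutex();
        mutex.lock();
        System.out.println("locked: " + mutex.isLocked());
        System.out.println("second tryLock while held: " + mutex.tryLock());
        mutex.unlock();
        System.out.println("locked after unlock: " + mutex.isLocked());
    }
}
```

Only tryAcquire and tryRelease are written by hand; the queueing, blocking and waking of waiting threads comes from AQS.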

If the requested shared resource is occupied, a mechanism is needed for blocking and queuing threads and for allocating the lock when they are woken. AQS implements this mechanism with a CLH queue lock, that is, a thread that cannot obtain the lock for now is added to the queue. AQS schematic diagram: