Java source code reading notes - stream

Why learn stream

In Java programming, ArrayList is probably one of the most commonly used classes; in everyday code it serves as a resizable array. Working with such a list involves traversal, filtering, modification and similar operations. The simplest way to do these is a for loop, but it is not very elegant.

A plain for-each loop looks like this:

ArrayList<String> arr = new ArrayList<>();

for (String str : arr) {

System.out.println(str);

}

Is there a simpler way? If you have learned JavaScript, you will know that arrays are usually processed with chained, stream-style operations, as shown below:

let arr = [1, 2, 3];

arr.map(x => console.log(x)); // Print out each element

arr.filter(x => x > 2).map(x => console.log(x)); // Print out elements greater than 2

So, is there a similar operation in Java? Of course: a language as mature as Java has one, and it is stream.

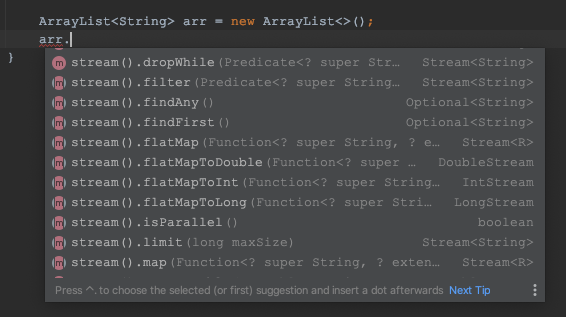

In IDEA, instantiate an ArrayList object. When you call a method on it, IDEA suggests a series of methods such as stream().map(), stream().filter() and stream().forEach(). These may be what we are looking for, so let's check.
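As a rough Java counterpart to the JavaScript snippet above, the following sketch prints every element and then only the elements greater than 2. The class name `StreamBasics` and the helper `greaterThan` are made up for illustration and do not come from the JDK.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class StreamBasics {
    // Hypothetical helper: keeps only the elements greater than the given limit.
    static List<Integer> greaterThan(List<Integer> source, int limit) {
        return source.stream()
                .filter(x -> x > limit)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Integer> arr = new ArrayList<>(Arrays.asList(1, 2, 3));

        // Print out each element
        arr.stream().forEach(System.out::println);

        // Print out elements greater than 2
        arr.stream().filter(x -> x > 2).forEach(System.out::println);

        // The same filter, collected into a new list instead of printed
        System.out.println(greaterThan(arr, 2));
    }
}
```

Note that forEach is used for printing here; map is reserved for transforming elements.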

Read the source code

First, the list object calls the stream() method. Press Command + B (the macOS shortcut) on the method to jump to its implementation:

public interface Collection<E> extends Iterable<E> {

default Stream<E> stream() {

return StreamSupport.stream(spliterator(), false);

}

}

This is a default method of the Collection interface (if you don't know default methods, look them up first). Why does the IDE jump straight here? Because ArrayList implements the List interface, List extends Collection, and neither List nor ArrayList overrides this method, so the code above is what runs.
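For readers who have not met default methods yet, here is a minimal sketch of the mechanism. `Greeter`, `Plain` and `Custom` are hypothetical names chosen for this example; `Plain` plays the role of ArrayList with respect to stream() (it inherits the default body), while `Custom` plays the role of ArrayList with respect to a method it overrides.

```java
class DefaultMethodDemo {
    // Hypothetical interface standing in for Collection.
    interface Greeter {
        String name();

        // Default method: implementing classes inherit this body unless they override it.
        default String greet() {
            return "Hello, " + name();
        }
    }

    // Does not override greet(), so the interface's default body runs.
    static class Plain implements Greeter {
        public String name() { return "plain"; }
    }

    // Overrides greet(), so the default body is ignored for this class.
    static class Custom implements Greeter {
        public String name() { return "custom"; }

        @Override
        public String greet() { return "Hi from " + name(); }
    }

    public static void main(String[] args) {
        System.out.println(new Plain().greet());
        System.out.println(new Custom().greet());
    }
}
```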

The stream() method returns an object that implements the Stream interface. Let's see what the Stream interface looks like first.

Stream interface

// A sequence of elements supporting sequential and parallel aggregate operations

public interface Stream<T> extends BaseStream<T, Stream<T>> {

// Returns a stream consisting of the elements of this stream that match the given predicate.

Stream<T> filter(Predicate<? super T> predicate);

// Returns a stream consisting of the results of applying the given function to the elements of this stream.

<R> Stream<R> map(Function<? super T, ? extends R> mapper);

// Performs an action for each element of this stream.

void forEach(Consumer<? super T> action);

}

According to the comments, these are the methods we are looking for. Because they are abstract, we need to find the class that implements this interface to see what they actually do.

spliterator()

Back in the stream() method, there is only one line. It first calls spliterator() and then StreamSupport.stream().

OK, look at spliterator() first. Command + B again jumps here.

public interface Collection<E> extends Iterable<E> {

@Override

default Spliterator<E> spliterator() {

return Spliterators.spliterator(this, 0);

}

}

Note that spliterator() is a default method here, but ArrayList overrides it, so the code that actually runs is in ArrayList. The following is the real method.

public class ArrayList<E> extends AbstractList<E> implements List<E>, RandomAccess, Cloneable, java.io.Serializable {

@Override

public Spliterator<E> spliterator() {

return new ArrayListSpliterator(0, -1, 0);

}

final class ArrayListSpliterator implements Spliterator<E> {

private int index; // current index, modified on advance/split

private int fence; // -1 until used; then one past last index

private int expectedModCount; // initialized when fence set

/** Creates new spliterator covering the given range. */

ArrayListSpliterator(int origin, int fence, int expectedModCount) {

this.index = origin;

this.fence = fence;

this.expectedModCount = expectedModCount;

}

public void forEachRemaining(Consumer<? super E> action) {

int i, hi, mc; // hoist accesses and checks from loop

Object[] a;

if (action == null)

throw new NullPointerException();

if ((a = elementData) != null) { // The elementData here is the element array of ArrayList

if ((hi = fence) < 0) {

mc = modCount;

hi = size;

}

else

mc = expectedModCount;

// The following is a traversal array

if ((i = index) >= 0 && (index = hi) <= a.length) {

for (; i < hi; ++i) {

@SuppressWarnings("unchecked") E e = (E) a[i];

action.accept(e); // Key code!!!

}

if (modCount == mc)

return;

}

}

throw new ConcurrentModificationException();

}

}

}

Above, spliterator() creates an ArrayListSpliterator object. The rest of ArrayListSpliterator is omitted; only forEachRemaining(), the method relevant to this article, is kept. This method is the entry point that kicks off the whole chain of stream processing, namely action.accept(e).
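The spliterator can also be used directly, without any stream. The sketch below (class and method names are invented for this example) calls forEachRemaining on an ArrayList's spliterator, and also shows the modCount check at the end of the method: if the list is structurally modified during the traversal, a ConcurrentModificationException is thrown once the loop has finished.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.ConcurrentModificationException;
import java.util.List;
import java.util.Spliterator;

class SpliteratorDemo {
    // Collects every element that the spliterator passes to the action.
    static List<String> traverse(List<String> source) {
        List<String> seen = new ArrayList<>();
        Spliterator<String> sp = source.spliterator();
        sp.forEachRemaining(seen::add); // each element ends up in action.accept(e)
        return seen;
    }

    // Returns true if modifying the list during traversal is detected.
    static boolean modificationDetected() {
        List<String> list = new ArrayList<>(Arrays.asList("a", "b", "c"));
        try {
            list.spliterator().forEachRemaining(e -> {
                if (e.equals("a")) {
                    list.add("d"); // changes modCount while the loop is running
                }
            });
            return false;
        } catch (ConcurrentModificationException ex) {
            return true; // modCount no longer matches the value captured at the start
        }
    }

    public static void main(String[] args) {
        System.out.println(traverse(new ArrayList<>(Arrays.asList("a", "b", "c"))));
        System.out.println(modificationDetected());
    }
}
```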

ReferencePipeline.Head

Go back to the stream() method and look at StreamSupport.stream(). It creates a new ReferencePipeline.Head object, passing in the ArrayListSpliterator object from above.

public final class StreamSupport {

// Creates a new sequential or parallel Stream from a Spliterator.

public static <T> Stream<T> stream(Spliterator<T> spliterator, boolean parallel) {

Objects.requireNonNull(spliterator);

return new ReferencePipeline.Head<>(spliterator,

StreamOpFlag.fromCharacteristics(spliterator),

parallel);

}

}
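StreamSupport is a public class, so the same call can be made by hand. The sketch below (the names `StreamSupportDemo` and `manualStream` are illustrative) builds a sequential stream from a list's spliterator, which should behave like calling stream() on the list. The printed class name is an implementation detail and may differ between JDK versions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.stream.StreamSupport;

class StreamSupportDemo {
    // Builds a sequential stream by hand, mirroring the body of Collection.stream().
    static <T> Stream<T> manualStream(List<T> source) {
        return StreamSupport.stream(source.spliterator(), false);
    }

    public static void main(String[] args) {
        List<String> list = new ArrayList<>(Arrays.asList("x", "y", "z"));
        Stream<String> s = manualStream(list);

        // Implementation detail: the concrete class behind the Stream interface
        System.out.println(s.getClass().getName());

        System.out.println(s.collect(Collectors.toList()));
    }
}
```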

Then look at the ReferencePipeline.Head class (Head is a static nested class of ReferencePipeline and also extends ReferencePipeline).

abstract class ReferencePipeline<P_IN, P_OUT>

extends AbstractPipeline<P_IN, P_OUT, Stream<P_OUT>>

implements Stream<P_OUT> {

static class Head<E_IN, E_OUT> extends ReferencePipeline<E_IN, E_OUT> {

// Constructor for the source stage of a Stream.

Head(Spliterator<?> source,

int sourceFlags, boolean parallel) {

super(source, sourceFlags, parallel);

}

@Override

final Sink<E_IN> opWrapSink(int flags, Sink<E_OUT> sink) {

throw new UnsupportedOperationException();

}

// Optimized sequential terminal operations for the head of the pipeline

@Override

public void forEach(Consumer<? super E_OUT> action) {

if (!isParallel()) {

sourceStageSpliterator().forEachRemaining(action);

}

else {

super.forEach(action);

}

}

}

}

Why is this nested class called Head? Because it is the head of the pipeline. A stream passes data down stage by stage, so the head and the tail differ from the stages in the middle. For example, Head overrides the opWrapSink method to throw an exception, which forbids calling it.

If you only traverse the list without any other operation, you can call stream().forEach() directly, and the stream ends there. What runs is the forEach method overridden by Head:

// sourceStageSpliterator() returns the ArrayListSpliterator object mentioned above. Here the forEachRemaining method appears! (It will appear again later.)

sourceStageSpliterator().forEachRemaining(action);

ReferencePipeline

The constructor of the above Head class calls the constructor of the parent class super(source, sourceFlags, parallel), that is, the constructor of ReferencePipeline. ReferencePipeline inherits AbstractPipeline, so it finally calls the construction method of AbstractPipeline.

abstract class ReferencePipeline<P_IN, P_OUT>

extends AbstractPipeline<P_IN, P_OUT, Stream<P_OUT>>

implements Stream<P_OUT> {

ReferencePipeline(Spliterator<?> source, int sourceFlags, boolean parallel) {

super(source, sourceFlags, parallel);

}

}

abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>

extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {

AbstractPipeline(Spliterator<?> source, int sourceFlags, boolean parallel) {

this.previousStage = null;

this.sourceSpliterator = source;

this.sourceStage = this;

this.sourceOrOpFlags = sourceFlags & StreamOpFlag.STREAM_MASK;

// The following is an optimization of:

// StreamOpFlag.combineOpFlags(sourceOrOpFlags, StreamOpFlag.INITIAL_OPS_VALUE);

this.combinedFlags = (~(sourceOrOpFlags << 1)) & StreamOpFlag.INITIAL_OPS_VALUE;

this.depth = 0;

this.parallel = parallel;

}

}

Well, the execution of the stream() method is over. To sum up:

- Step 1: the ArrayList is wrapped in an ArrayListSpliterator. This class has a forEachRemaining method that traverses the elements.

- Step 2: a ReferencePipeline.Head object is created. Since Head extends ReferencePipeline and ReferencePipeline implements the Stream interface, it has all the Stream methods.

The AbstractPipeline constructor records the ArrayListSpliterator object as sourceSpliterator. depth = 0 marks the first stage of the pipeline.

Then, let's take a look at the filter method of Stream to see how it works.

Stream.filter

ReferencePipeline implements the interface Stream, so the filter method must be implemented in the ReferencePipeline class or its subclasses. The following is the filter source code.

abstract class ReferencePipeline<P_IN, P_OUT>

extends AbstractPipeline<P_IN, P_OUT, Stream<P_OUT>>

implements Stream<P_OUT> {

@Override

public final Stream<P_OUT> filter(Predicate<? super P_OUT> predicate) {

Objects.requireNonNull(predicate);

return new StatelessOp<P_OUT, P_OUT>(this, StreamShape.REFERENCE,

StreamOpFlag.NOT_SIZED) {

@Override

Sink<P_OUT> opWrapSink(int flags, Sink<P_OUT> sink) {

return new Sink.ChainedReference<P_OUT, P_OUT>(sink) {

@Override

public void begin(long size) {

downstream.begin(-1);

}

@Override

public void accept(P_OUT u) {

if (predicate.test(u))

downstream.accept(u);

}

};

}

};

}

}

The filter method looks complex, but it is actually simple. It instantiates an anonymous subclass of the abstract class StatelessOp and implements the abstract method opWrapSink. opWrapSink does the same kind of thing: it instantiates an anonymous subclass of the abstract class Sink.ChainedReference.

Now look at the map method, and you'll find a striking similarity.

abstract class ReferencePipeline<P_IN, P_OUT>

extends AbstractPipeline<P_IN, P_OUT, Stream<P_OUT>>

implements Stream<P_OUT> {

@Override

public final <R> Stream<R> map(Function<? super P_OUT, ? extends R> mapper) {

Objects.requireNonNull(mapper);

return new StatelessOp<P_OUT, R>(this, StreamShape.REFERENCE,

StreamOpFlag.NOT_SORTED | StreamOpFlag.NOT_DISTINCT) {

@Override

Sink<P_OUT> opWrapSink(int flags, Sink<R> sink) {

return new Sink.ChainedReference<P_OUT, R>(sink) {

@Override

public void accept(P_OUT u) {

downstream.accept(mapper.apply(u));

}

};

}

};

}

}

Comparing the source code of filter and map, both return StatelessOp objects, and in both cases opWrapSink returns a Sink.ChainedReference object. filter overrides begin and accept; map only overrides accept, and the bodies of the two accept methods differ. Other intermediate operations likewise differ mainly in how they implement accept. So this accept method must be the key to the whole pipeline. We will see later how it gets called.
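To make the role of accept and downstream concrete, here is a simplified model that wires the sinks by hand. It uses plain java.util.function.Consumer instead of the JDK's internal Sink interface, and `filterSink`, `mapSink` and `run` are hypothetical helpers, so treat it as a sketch of the idea rather than the real implementation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;

class SinkChainDemo {
    // Stand-in for filter's sink: forwards only the elements that pass the test.
    static <T> Consumer<T> filterSink(Predicate<T> predicate, Consumer<T> downstream) {
        return u -> {
            if (predicate.test(u)) {
                downstream.accept(u);
            }
        };
    }

    // Stand-in for map's sink: transforms the element, then forwards the result.
    static <T, R> Consumer<T> mapSink(Function<T, R> mapper, Consumer<R> downstream) {
        return u -> downstream.accept(mapper.apply(u));
    }

    // Models filter(x > 1) followed by map(x * 10), with a collecting terminal sink.
    static List<Integer> run(List<Integer> source) {
        List<Integer> out = new ArrayList<>();
        Consumer<Integer> terminal = out::add;
        Consumer<Integer> map = mapSink(x -> x * 10, terminal);
        Consumer<Integer> first = filterSink(x -> x > 1, map);
        for (Integer e : source) {
            first.accept(e); // one call pushes the element through the whole chain
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList(1, 2, 3)));
    }
}
```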

All right, go back to StatelessOp and see what it is.

abstract static class StatelessOp<E_IN, E_OUT> extends ReferencePipeline<E_IN, E_OUT> {

//Construct a new Stream by appending a stateless intermediate operation to an existing stream.

StatelessOp(AbstractPipeline<?, E_IN, ?> upstream,

StreamShape inputShape,

int opFlags) {

super(upstream, opFlags);

assert upstream.getOutputShape() == inputShape;

}

@Override

final boolean opIsStateful() {

return false;

}

}

StatelessOp is an abstract class that extends ReferencePipeline. Its only purpose is to make opIsStateful always return false.

Note that stream() returns an object of the ReferencePipeline.Head class, while filter() returns an object of an anonymous StatelessOp subclass.

Now look at the constructor of StatelessOp. It calls the constructor of its parent class ReferencePipeline, which in turn calls the constructor of AbstractPipeline.

abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>

extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {

AbstractPipeline(AbstractPipeline<?, E_IN, ?> previousStage, int opFlags) {

if (previousStage.linkedOrConsumed)

throw new IllegalStateException(MSG_STREAM_LINKED);

previousStage.linkedOrConsumed = true;

previousStage.nextStage = this;

this.previousStage = previousStage;

this.sourceOrOpFlags = opFlags & StreamOpFlag.OP_MASK;

this.combinedFlags = StreamOpFlag.combineOpFlags(opFlags, previousStage.combinedFlags);

this.sourceStage = previousStage.sourceStage;

if (opIsStateful())

sourceStage.sourceAnyStateful = true;

this.depth = previousStage.depth + 1;

}

}

Here, previousStage is either the Head object returned by stream() or an object of a StatelessOp subclass. The previous stage's nextStage points to this object, sourceStage is copied from the previous stage, and depth increases by 1.

This process is a bit like building a doubly linked list. The expression stream().filter().map() creates a Head object (A), a StatelessOp (B) containing the filter logic and a StatelessOp (C) containing the map logic, linked as A <-> B <-> C through previousStage and nextStage.
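The linking performed by the two AbstractPipeline constructors can be modelled with a small sketch. `Stage` below is a hypothetical, heavily simplified stand-in that keeps only the fields discussed here (previousStage, nextStage, sourceStage, depth); flags and the consumed check are left out.

```java
class StageLinkDemo {
    // Hypothetical, simplified stand-in for AbstractPipeline.
    static class Stage {
        final String name;
        final Stage previousStage;
        final Stage sourceStage;
        final int depth;
        Stage nextStage;

        // Source stage: plays the role of Head.
        Stage(String name) {
            this.name = name;
            this.previousStage = null;
            this.sourceStage = this;
            this.depth = 0;
        }

        // Intermediate stage appended to an upstream stage.
        Stage(String name, Stage previousStage) {
            this.name = name;
            previousStage.nextStage = this;
            this.previousStage = previousStage;
            this.sourceStage = previousStage.sourceStage;
            this.depth = previousStage.depth + 1;
        }
    }

    // Walks from the source along nextStage and renders the chain with depths.
    static String describe(Stage source) {
        StringBuilder sb = new StringBuilder();
        for (Stage s = source; s != null; s = s.nextStage) {
            if (sb.length() > 0) {
                sb.append(" <-> ");
            }
            sb.append(s.name).append('(').append(s.depth).append(')');
        }
        return sb.toString();
    }

    // Models stream().filter().map().
    static String demo() {
        Stage a = new Stage("Head");
        Stage b = new Stage("filter", a);
        Stage c = new Stage("map", b);
        return describe(c.sourceStage);
    }

    public static void main(String[] args) {
        System.out.println(demo()); // Head(0) <-> filter(1) <-> map(2)
    }
}
```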

No matter how many filter and map calls follow, they are just wrapped and appended to the chain. The question is: when are they actually executed?

As mentioned earlier, stream() returns the first stage of the pipeline, and the Head object gets special treatment. Where there is a head there is a tail, and the tail is probably where execution starts.

Stream.forEach

forEach is one such tail. collect, reduce, count and others can also act as the tail: they terminate the stream and run the series of operations wrapped up above. First look at the source code of forEach.
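That nothing runs until a tail operation is called can be observed from the outside. In this sketch (class and method names are illustrative), the filter predicate counts its own invocations; the counter is read once after the pipeline is built and once after forEach.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Stream;

class LazyDemo {
    // Returns {predicate calls before the terminal operation, predicate calls after it}.
    static int[] countCalls(List<Integer> source) {
        AtomicInteger calls = new AtomicInteger();
        Stream<Integer> pipeline = source.stream()
                .filter(x -> { calls.incrementAndGet(); return x > 1; })
                .map(x -> x * 10);

        int before = calls.get();      // the stages are only linked so far
        pipeline.forEach(x -> { });    // the terminal operation triggers the traversal
        int after = calls.get();

        return new int[] { before, after };
    }

    public static void main(String[] args) {
        int[] r = countCalls(Arrays.asList(1, 2, 3));
        System.out.println(r[0] + " -> " + r[1]); // 0 -> 3
    }
}
```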

abstract class ReferencePipeline<P_IN, P_OUT> extends AbstractPipeline<P_IN, P_OUT, Stream<P_OUT>> implements Stream<P_OUT> {

@Override

public void forEach(Consumer<? super P_OUT> action) {

evaluate(ForEachOps.makeRef(action, false));

}

}

Note that StatelessOp does not override forEach, whereas Head does, so which code runs depends on where forEach is called. Calling forEach directly after stream() runs Head's forEach (analyzed earlier). In every other case, ReferencePipeline's forEach runs.

This forEach method looks very simple, just one line of code, but a lot is hidden in it. Let's go through it step by step.

First look at ForEachOps.makeRef(action, false). The action is what forEach has to do for each element.

final class ForEachOps {

public static <T> TerminalOp<T, Void> makeRef(Consumer<? super T> action, boolean ordered) {

Objects.requireNonNull(action);

return new ForEachOp.OfRef<>(action, ordered);

}

abstract static class ForEachOp<T> implements TerminalOp<T, Void>, TerminalSink<T, Void> {

@Override

public <S> Void evaluateSequential(PipelineHelper<T> helper, Spliterator<S> spliterator) {

return helper.wrapAndCopyInto(this, spliterator).get();

}

static final class OfRef<T> extends ForEachOp<T> {

final Consumer<? super T> consumer;

OfRef(Consumer<? super T> consumer, boolean ordered) {

super(ordered);

this.consumer = consumer;

}

@Override

public void accept(T t) {

consumer.accept(t);

}

}

}

}

ForEachOps.makeRef(action, false) returns a ForEachOp.OfRef object. OfRef extends ForEachOp, which implements the TerminalOp and TerminalSink interfaces (as the name suggests, a TerminalOp is a terminal operation).

OK, next the evaluate() method runs:

abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>

extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {

final <R> R evaluate(TerminalOp<E_OUT, R> terminalOp) {

assert getOutputShape() == terminalOp.inputShape();

if (linkedOrConsumed)

throw new IllegalStateException(MSG_STREAM_LINKED);

linkedOrConsumed = true;

return isParallel()

? terminalOp.evaluateParallel(this, sourceSpliterator(terminalOp.getOpFlags()))

: terminalOp.evaluateSequential(this, sourceSpliterator(terminalOp.getOpFlags()));

}

}

Parallel execution is not covered in this article, so what runs here is terminalOp.evaluateSequential(this, sourceSpliterator(terminalOp.getOpFlags())), that is, the evaluateSequential method of ForEachOp. `this` here is the last intermediate stage of the pipeline.
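The linkedOrConsumed flag checked in evaluate(), and earlier in the AbstractPipeline constructor, is what prevents a stream from being used twice. A small sketch of both cases follows; the class and method names are invented for this example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Stream;

class ConsumedDemo {
    // True if a second terminal operation on the same stage is rejected.
    static boolean secondTerminalOpFails(List<Integer> source) {
        Stream<Integer> s = source.stream().filter(x -> x > 1);
        s.forEach(x -> { });          // first evaluate(): marks the stage as consumed
        try {
            s.forEach(x -> { });      // second evaluate(): the flag is already set
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    // True if appending a second operation to the same stage is rejected.
    static boolean secondLinkFails(List<Integer> source) {
        Stream<Integer> s = source.stream();
        s.filter(x -> x > 1);         // links a new stage and marks s as linked
        try {
            s.map(x -> x * 10);       // s already has a next stage
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(1, 2, 3);
        System.out.println(secondTerminalOpFails(list));
        System.out.println(secondLinkFails(list));
    }
}
```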

Here, sourceSpliterator(terminalOp.getOpFlags()) runs first. Its source code follows (judging from the name, I guess it returns the spliterator of the original data, that is, the ArrayListSpliterator from the beginning).

abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>

extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {

private Spliterator<?> sourceSpliterator(int terminalFlags) {

// Get the source spliterator of the pipeline

Spliterator<?> spliterator = null;

if (sourceStage.sourceSpliterator != null) {

spliterator = sourceStage.sourceSpliterator;

sourceStage.sourceSpliterator = null;

}

else if (sourceStage.sourceSupplier != null) {

spliterator = (Spliterator<?>) sourceStage.sourceSupplier.get();

sourceStage.sourceSupplier = null;

}

else {

throw new IllegalStateException(MSG_CONSUMED);

}

if (isParallel() && sourceStage.sourceAnyStateful) {

// Adapt the source spliterator, evaluating each stateful op

// in the pipeline up to and including this pipeline stage.

// The depth and flags of each pipeline stage are adjusted accordingly.

int depth = 1;

for (@SuppressWarnings("rawtypes") AbstractPipeline u = sourceStage, p = sourceStage.nextStage, e = this;

u != e;

u = p, p = p.nextStage) {

int thisOpFlags = p.sourceOrOpFlags;

if (p.opIsStateful()) {

depth = 0;

if (StreamOpFlag.SHORT_CIRCUIT.isKnown(thisOpFlags)) {

// Clear the short circuit flag for next pipeline stage

// This stage encapsulates short-circuiting, the next

// stage may not have any short-circuit operations, and

// if so spliterator.forEachRemaining should be used

// for traversal

thisOpFlags = thisOpFlags & ~StreamOpFlag.IS_SHORT_CIRCUIT;

}

spliterator = p.opEvaluateParallelLazy(u, spliterator);

// Inject or clear SIZED on the source pipeline stage

// based on the stage's spliterator

thisOpFlags = spliterator.hasCharacteristics(Spliterator.SIZED)

? (thisOpFlags & ~StreamOpFlag.NOT_SIZED) | StreamOpFlag.IS_SIZED

: (thisOpFlags & ~StreamOpFlag.IS_SIZED) | StreamOpFlag.NOT_SIZED;

}

p.depth = depth++;

p.combinedFlags = StreamOpFlag.combineOpFlags(thisOpFlags, u.combinedFlags);

}

}

if (terminalFlags != 0) {

// Apply flags from the terminal operation to last pipeline stage

combinedFlags = StreamOpFlag.combineOpFlags(terminalFlags, combinedFlags);

}

return spliterator;

}

}

Sure enough, it returns the spliterator, which comes from sourceStage.sourceSpliterator, that is, the ArrayListSpliterator passed in when the Head was created. The long block in the middle only runs for parallel streams with stateful operations and has nothing to do with the current analysis, so skip it for now.

The evaluateSequential method also has only one line:

public <S> Void evaluateSequential(PipelineHelper<T> helper, Spliterator<S> spliterator) {

return helper.wrapAndCopyInto(this, spliterator).get();

}

The helper here actually points to an object of a StatelessOp subclass, so wrapAndCopyInto must be implemented in AbstractPipeline or ReferencePipeline. In fact, it is implemented in AbstractPipeline.

abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>

extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {

@Override

final <P_IN, S extends Sink<E_OUT>> S wrapAndCopyInto(S sink, Spliterator<P_IN> spliterator) {

copyInto(wrapSink(Objects.requireNonNull(sink)), spliterator);

return sink;

}

@Override

final <P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator) {

Objects.requireNonNull(wrappedSink);

if (!StreamOpFlag.SHORT_CIRCUIT.isKnown(getStreamAndOpFlags())) {

wrappedSink.begin(spliterator.getExactSizeIfKnown());

spliterator.forEachRemaining(wrappedSink);

wrappedSink.end();

}

else {

copyIntoWithCancel(wrappedSink, spliterator);

}

}

@Override

final <P_IN> Sink<P_IN> wrapSink(Sink<E_OUT> sink) {

for (AbstractPipeline p=AbstractPipeline.this; p.depth > 0; p=p.previousStage) {

sink = p.opWrapSink(p.previousStage.combinedFlags, sink);

}

return (Sink<P_IN>) sink;

}

}

Here comes the key part!

In the wrapAndCopyInto method, wrapSink(Objects.requireNonNull(sink)) runs first. Objects.requireNonNull is just a null check and can be ignored.

What is the sink here? Go back to evaluateSequential: it is the argument this, that is, the OfRef object. OfRef indirectly implements TerminalSink, which extends Sink, and the type parameter S here is also bounded by Sink, so the types match.

Then comes the wrapSink method. Starting from AbstractPipeline.this, the current (last) stage, the loop walks backwards through all stages, that is, all the wrapped operations. Which opWrapSink does it call on each of them?

Recall that every operation is wrapped in a StatelessOp or a Head, and each one overrides opWrapSink, so these are the implementations supplied by filter, map and the other operations.

So this loop calls the opWrapSink method of every stage. As seen earlier, opWrapSink returns a Sink.ChainedReference object.

Then look at the constructor of Sink.ChainedReference

interface Sink<T> extends Consumer<T> {

abstract static class ChainedReference<T, E_OUT> implements Sink<T> {

protected final Sink<? super E_OUT> downstream;

public ChainedReference(Sink<? super E_OUT> downstream) {

this.downstream = Objects.requireNonNull(downstream);

}

}

}

Back in the wrapSink method, the for loop walks the stages in reverse order, so each newly created Sink.ChainedReference has its downstream pointing to the sink created in the previous iteration, which belongs to the next operation in the pipeline.

The expression stream().filter().map().forEach() creates a Head object (A), a StatelessOp (B) containing the filter logic, a StatelessOp (C) containing the map logic, and an OfRef (D) containing the forEach logic. After wrapSink, the sinks are chained as B -> C -> D, where each arrow is a downstream reference.

Where is A? A is the Head, whose opWrapSink throws an exception (see the Head source above). The loop in wrapSink only runs while depth > 0, and Head has depth 0, so A is never included.
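The reverse walk in wrapSink can be modelled as follows. `Stage` is again a hypothetical simplification: it stores its predecessor, its depth and a function that wraps a downstream sink, with Consumer standing in for the JDK's Sink. The loop has the same shape as the one in AbstractPipeline.wrapSink.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.UnaryOperator;

class WrapSinkDemo {
    // Hypothetical stage: knows its predecessor, its depth and how to wrap a downstream sink.
    static class Stage {
        final Stage previousStage;
        final int depth;
        final UnaryOperator<Consumer<Integer>> opWrapSink;

        Stage(Stage previousStage, UnaryOperator<Consumer<Integer>> opWrapSink) {
            this.previousStage = previousStage;
            this.depth = previousStage == null ? 0 : previousStage.depth + 1;
            this.opWrapSink = opWrapSink;
        }

        // Walks backwards from this stage and stops before the depth-0 head.
        Consumer<Integer> wrapSink(Consumer<Integer> sink) {
            for (Stage p = this; p.depth > 0; p = p.previousStage) {
                sink = p.opWrapSink.apply(sink);
            }
            return sink;
        }
    }

    // Models stream().filter(x > 1).map(x * 10) with a collecting terminal sink.
    static List<Integer> run(List<Integer> source) {
        Stage head = new Stage(null, null); // depth 0, never asked to wrap
        Stage filter = new Stage(head, down -> u -> { if (u > 1) down.accept(u); });
        Stage map = new Stage(filter, down -> u -> down.accept(u * 10));

        List<Integer> out = new ArrayList<>();
        Consumer<Integer> wrapped = map.wrapSink(out::add); // filter sink -> map sink -> out::add
        source.forEach(wrapped);
        return out;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList(1, 2, 3)));
    }
}
```

Because the map stage wraps the terminal sink first and the filter stage wraps the result, the outermost sink returned is the filter's, which is the first one to see each element.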

OK, next the copyInto method runs.

The first step checks, via StreamOpFlag.SHORT_CIRCUIT.isKnown(getStreamAndOpFlags()), whether the pipeline contains a short-circuit operation. The first branch runs only when it does not. None of the operations in our example sets this flag, so the first branch is taken. We'll see what the flag is for later.

A short-circuit operation stops as soon as its condition is met. For example, anyMatch(), allMatch(), noneMatch(), findFirst() and findAny() do not traverse the remaining data once the result is determined.
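The effect of short-circuiting is easy to observe on a sequential stream. In this sketch (names are illustrative), the filter predicate counts how many elements it is asked to test, once with findFirst() as the terminal operation and once with forEach().

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class ShortCircuitDemo {
    // Number of elements tested before findFirst() is satisfied.
    static int testedByFindFirst(List<Integer> source) {
        AtomicInteger tested = new AtomicInteger();
        source.stream()
              .filter(x -> { tested.incrementAndGet(); return x > 2; })
              .findFirst();
        return tested.get();
    }

    // Number of elements tested when the terminal operation does not short-circuit.
    static int testedByForEach(List<Integer> source) {
        AtomicInteger tested = new AtomicInteger();
        source.stream()
              .filter(x -> { tested.incrementAndGet(); return x > 2; })
              .forEach(x -> { });
        return tested.get();
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(1, 2, 3, 4, 5);
        System.out.println(testedByFindFirst(list)); // 3: stops at the first match
        System.out.println(testedByForEach(list));   // 5: visits every element
    }
}
```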

Then there are three lines of code:

wrappedSink.begin(spliterator.getExactSizeIfKnown());

spliterator.forEachRemaining(wrappedSink);

wrappedSink.end();

In the first line, spliterator.getExactSizeIfKnown() returns the size of the ArrayList. The begin call seems useless here? 😓

In the third line, wrappedSink.end() doesn't seem to do anything useful either 😓

In the second line, the spliterator is the ArrayListSpliterator created in stream(), and forEachRemaining is called on it. Its source code was shown above: it traverses the elements of the ArrayList and runs "action.accept(e);" for each element.

The action here is wrappedSink. Remember the accept method overridden in the filter function? This is where it is called!

Look at the filter source again. wrappedSink points to the Sink.ChainedReference object instantiated here, so its own accept method is called. If predicate.test(u) returns true, the accept method of downstream is executed. What is downstream? It points to the sink of the next operation. If the predicate returns false, the subsequent operations are skipped, "action.accept(e);" returns, and the loop moves on to the next element.

public final Stream<P_OUT> filter(Predicate<? super P_OUT> predicate) {

Objects.requireNonNull(predicate);

return new StatelessOp<P_OUT, P_OUT>(this, StreamShape.REFERENCE,

StreamOpFlag.NOT_SIZED) {

@Override

Sink<P_OUT> opWrapSink(int flags, Sink<P_OUT> sink) {

return new Sink.ChainedReference<P_OUT, P_OUT>(sink) {

@Override

public void begin(long size) {

downstream.begin(-1);

}

@Override

public void accept(P_OUT u) {

if (predicate.test(u))

downstream.accept(u);

}

};

}

};

}

Now the map function. Look directly at the key part:

public void accept(P_OUT u) {

downstream.accept(mapper.apply(u));

}

It first applies the function passed to map, then immediately calls the next operation with the result.

The last operation, forEach, is wrapped in an OfRef object, which overrides the accept method:

static final class OfRef<T> extends ForEachOp<T> {

final Consumer<? super T> consumer;

OfRef(Consumer<? super T> consumer, boolean ordered) {

super(ordered);

this.consumer = consumer;

}

@Override

public void accept(T t) {

consumer.accept(t);

}

}
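One consequence of this chain of accept calls is that each element travels through the whole pipeline before the next element is touched. The sketch below (names are illustrative) records the order of calls for filter, map and forEach on a sequential stream.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class OrderDemo {
    // Records which stage handled which element, in call order.
    static List<String> trace(List<Integer> source) {
        List<String> log = new ArrayList<>();
        source.stream()
              .filter(x -> { log.add("filter " + x); return x > 1; })
              .map(x -> { log.add("map " + x); return x * 10; })
              .forEach(x -> log.add("forEach " + x));
        return log;
    }

    public static void main(String[] args) {
        // [filter 1, filter 2, map 2, forEach 20, filter 3, map 3, forEach 30]
        System.out.println(trace(Arrays.asList(1, 2, 3)));
    }
}
```

Element 1 is rejected by the filter, so neither map nor forEach sees it; elements 2 and 3 each pass through all three stages in turn.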

Finally finished!

forEach is the simplest terminal operation. Now let's look at a more complex one, collect.

Stream.collect

The collect function has two overloads:

public final <R, A> R collect(Collector<? super P_OUT, A, R> collector)

public final <R> R collect(Supplier<R> supplier, BiConsumer<R, ? super P_OUT> accumulator, BiConsumer<R, R> combiner)

If you look at the definition of the Collector interface, you will find that it essentially wraps the three parameters of the second overload, so it is enough to look at the second one.

@Override

public final <R> R collect(Supplier<R> supplier, BiConsumer<R, ? super P_OUT> accumulator, BiConsumer<R, R> combiner) {

return evaluate(ReduceOps.makeRef(supplier, accumulator, combiner));

}

The difference between collect and forEach is that collect calls ReduceOps.makeRef, while forEach calls ForEachOps.makeRef.
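As a usage sketch of the three-argument overload, the example below collects into an ArrayList with a supplier, an accumulator and a combiner, and compares the result with the Collector-based overload. The class and method names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class CollectDemo {
    // supplier creates the container, accumulator adds one element, combiner merges two containers.
    static List<Integer> viaThreeArgs(List<Integer> source) {
        return source.stream()
                .filter(x -> x > 1)
                .collect(ArrayList::new, ArrayList::add, ArrayList::addAll);
    }

    // The same result through the Collector-based overload.
    static List<Integer> viaCollector(List<Integer> source) {
        return source.stream()
                .filter(x -> x > 1)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(1, 2, 3);
        System.out.println(viaThreeArgs(list));
        System.out.println(viaCollector(list));
    }
}
```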

Since the analysis above was very detailed, this part is kept brief. The process is essentially the same.

- The makeRef method creates a new ReduceOp object and overrides the makeSink method. This method will create a new ReducingSink object, which encapsulates the three parameters passed in from collect.

public static <T, R> TerminalOp<T, R>

makeRef(Supplier<R> seedFactory, BiConsumer<R, ? super T> accumulator, BiConsumer<R,R> reducer) {

class ReducingSink extends Box<R> implements AccumulatingSink<T, R, ReducingSink> {

@Override

public void begin(long size) {

state = seedFactory.get();

}

@Override

public void accept(T t) {

accumulator.accept(state, t);

}

@Override

public void combine(ReducingSink other) {

reducer.accept(state, other.state);

}

}

return new ReduceOp<T, R, ReducingSink>(StreamShape.REFERENCE) {

@Override

public ReducingSink makeSink() {

return new ReducingSink();

}

};

}

ReduceOp also overrides the evaluateSequential method (both ReduceOp and ForEachOp implement the TerminalOp interface). It calls makeSink, which returns a ReducingSink object, the counterpart of the OfRef object above.

@Override

public <P_IN> R evaluateSequential(PipelineHelper<T> helper, Spliterator<P_IN> spliterator) {

return helper.wrapAndCopyInto(makeSink(), spliterator).get();

}

The remaining steps are the same as for forEach, up to the copyInto method. The begin method, previously considered useless, matters here: the begin method of ReducingSink calls get on the seedFactory parameter to create the result container.

Then forEachRemaining calls accept for each element, and the traversal ends. combine is only used in parallel execution. I don't know how it is used yet; I'll study it when I have time.
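The roles of begin and combine can be checked from the outside by counting calls. In this sketch (names are illustrative), a sequential collect invokes the supplier once and never invokes the combiner. The second method runs the same collect on a parallel stream, where the combiner may be used to merge partial containers; how often that happens depends on how the work is split, so it is not counted here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class CollectCallsDemo {
    // Returns {supplier calls, combiner calls} for a sequential collect.
    static int[] sequentialCalls(List<Integer> source) {
        AtomicInteger supplierCalls = new AtomicInteger();
        AtomicInteger combinerCalls = new AtomicInteger();
        source.stream().collect(
                () -> { supplierCalls.incrementAndGet(); return new ArrayList<Integer>(); },
                ArrayList::add,
                (left, right) -> { combinerCalls.incrementAndGet(); left.addAll(right); });
        return new int[] { supplierCalls.get(), combinerCalls.get() };
    }

    // The same collect on a parallel stream; the final list keeps the encounter order.
    static List<Integer> parallelCollect(List<Integer> source) {
        return source.parallelStream()
                .collect(ArrayList::new, ArrayList::add, ArrayList::addAll);
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(1, 2, 3, 4, 5);
        int[] calls = sequentialCalls(list);
        System.out.println("supplier=" + calls[0] + ", combiner=" + calls[1]);
        System.out.println(parallelCollect(list));
    }
}
```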