Prerequisites

- Make sure the HDFS cluster has already been built. If it has not, see Construction of Distributed HDFS Cluster.

- Configure the Hadoop environment variables (such as HADOOP_HOME) on the local machine and, if necessary, add each cluster node's host name and IP address to the local hosts file.

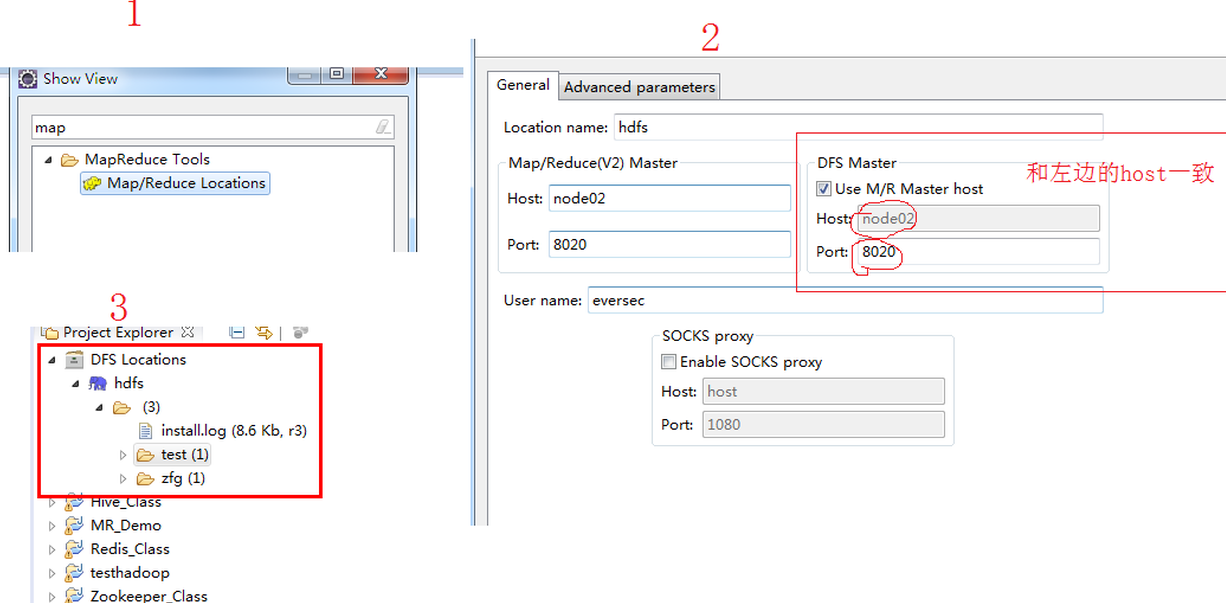

- Install and configure the Hadoop plug-in for Eclipse.

- Add the dependent jar packages, which ship with the Hadoop installation package (under share/hadoop), to the project's build path.
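After editing the hosts file, it is worth confirming from the development machine that the cluster host names actually resolve, since the Hadoop client connects using the names found in its configuration. Below is a minimal sketch using only the JDK; the class name HostCheck and the host name node01 in the usage note are hypothetical.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical helper: checks whether a host name used in the Hadoop
// configuration resolves from this machine (hosts file or DNS).
class HostCheck {
    // Returns true and prints the address if the name resolves, false otherwise.
    static boolean resolves(String host) {
        try {
            InetAddress addr = InetAddress.getByName(host);
            System.out.println(host + " -> " + addr.getHostAddress());
            return true;
        } catch (UnknownHostException e) {
            System.out.println(host + " does not resolve; add it to the hosts file");
            return false;
        }
    }
}
```

For example, call HostCheck.resolves("node01") for each NameNode and DataNode host name listed in your configuration.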

API operations

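First, create a Configuration object and obtain a FileSystem handle from it. Unless noted otherwise, the classes used below come from org.apache.hadoop.conf and org.apache.hadoop.fs.

```java
// true: load the default resources (core-default.xml, then core-site.xml from the classpath)
Configuration conf = new Configuration(true);
FileSystem fs = FileSystem.get(conf); // the file system named by fs.defaultFS
```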
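If core-site.xml is not on the project's classpath, new Configuration(true) only sees the built-in default fs.defaultFS value of file:///, and FileSystem.get(conf) silently returns the local file system instead of HDFS. Here is a small JDK-only sketch of a sanity check on that value; the class name DefaultFsCheck and the address hdfs://node01:9000 are hypothetical.

```java
import java.net.URI;

// Hypothetical helper: checks that an fs.defaultFS value points at HDFS.
class DefaultFsCheck {
    // True if the URI uses the hdfs scheme and names a NameNode host.
    static boolean isHdfs(String defaultFs) {
        URI uri = URI.create(defaultFs);
        return "hdfs".equals(uri.getScheme()) && uri.getHost() != null;
    }
}
```

In the program itself you could pass it conf.get("fs.defaultFS") before calling FileSystem.get(conf).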

List files

```java
// Prints and returns the paths of the direct children of a directory (not recursive).
private static List<Path> listFileSystem(FileSystem fs, String path) throws IOException {
    List<Path> paths = new ArrayList<>(); // java.util.List / java.util.ArrayList
    for (FileStatus fileStatus : fs.listStatus(new Path(path))) {
        System.out.println(fileStatus.getPath());
        paths.add(fileStatus.getPath());
    }
    return paths;
}
```
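listStatus returns only the direct children of the directory, and each FileStatus.getPath() is fully qualified, so it prints as something like hdfs://node01:9000/user/root/a.txt. When only the path part is wanted, the URI's path component can be taken. A JDK-only sketch; the class name PathDisplay and the sample address are hypothetical.

```java
import java.net.URI;

// Hypothetical helper: strips scheme and authority from a fully qualified path string.
class PathDisplay {
    static String pathOnly(String qualified) {
        return URI.create(qualified).getPath();
    }
}
```

With a Hadoop Path object, fileStatus.getPath().toUri().getPath() gives the same result without re-parsing a string.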

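Create a directory

```java
// Recreates a directory: if the path already exists it is first deleted
// recursively, together with everything under it.
private static void createDir(FileSystem fs, String string) throws IllegalArgumentException, IOException {
    Path path = new Path(string);
    if (fs.exists(path)) {
        fs.delete(path, true);
    }
    fs.mkdirs(path); // also creates missing parent directories, like mkdir -p
}
```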

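Upload a file

```java
// Copies a local file into HDFS and keeps the local copy.
private static void uploadFileToHDFS(FileSystem fs, String src, String dest) throws IOException {
    Path srcPath = new Path(src);
    Path destPath = new Path(dest);
    fs.copyFromLocalFile(srcPath, destPath);
    // To delete the local source after uploading (a move), use instead:
    // fs.copyFromLocalFile(true, srcPath, destPath);
}
```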

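Download a file

```java
// Copies a file from HDFS to the local file system and keeps the HDFS copy.
private static void downLoadFileFromHDFS(FileSystem fs, String src, String dest) throws IOException {
    Path srcPath = new Path(src);
    Path destPath = new Path(dest);
    // delSrc = false; passing true would delete the file from HDFS after downloading it
    fs.copyToLocalFile(false, srcPath, destPath);
}
```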
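Because copyToLocalFile writes through Hadoop's checksummed local file system, a hidden checksum file named .<file name>.crc usually appears next to the downloaded file. Passing true as the fourth argument of copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem) writes through the raw local file system and skips it. If you keep the default, the JDK-only sketch below computes the sidecar's path, for example to clean it up; the class name CrcSidecar is hypothetical.

```java
import java.nio.file.Path;

// Hypothetical helper: locates the ".<name>.crc" checksum file that sits
// in the same directory as a file downloaded through a checksummed file system.
class CrcSidecar {
    static Path sidecarFor(Path file) {
        return file.resolveSibling("." + file.getFileName() + ".crc");
    }
}
```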

Delete files

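```java
// recursive = true is required to delete a non-empty directory;
// returns true if the path was deleted
fs.delete(path, true);
```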

Copy and move (cut) within HDFS

```java
// Copies src to dest; with move == true the source is deleted after the copy,
// which turns it into a move ("cut"). FileUtil.copy reads and rewrites the data
// through this client, whereas fs.rename moves within HDFS without copying data.
private static void innerCopyAndMoveFile(FileSystem fs, Configuration conf, String src, String dest, boolean move) throws IOException {
    Path srcPath = new Path(src);
    Path destPath = new Path(dest);
    FileUtil.copy(srcPath.getFileSystem(conf), srcPath, destPath.getFileSystem(conf), destPath, move, conf);
}
```

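Rename

```java
private static void renameFile(FileSystem fs, String src, String dest) throws IOException {
    Path srcPath = new Path(src);
    Path destPath = new Path(dest);
    // HDFS reports many failures (e.g. a missing source) by returning false instead of throwing
    if (!fs.rename(srcPath, destPath)) {
        throw new IOException("Rename failed: " + srcPath + " -> " + destPath);
    }
}
```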

Create an empty file

```java
// fs.createNewFile returns false, and changes nothing, if the path already exists
private static void createNewFile(FileSystem fs, String string) throws IllegalArgumentException, IOException {
    fs.createNewFile(new Path(string));
}
```

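Write to a file

```java
// Writes content as UTF-8. fs.create(path) overwrites an existing file;
// try-with-resources closes (and thereby flushes) the stream even on error.
private static void writeToHDFSFile(FileSystem fs, String filePath, String content) throws IllegalArgumentException, IOException {
    try (FSDataOutputStream outputStream = fs.create(new Path(filePath))) {
        outputStream.write(content.getBytes(StandardCharsets.UTF_8)); // java.nio.charset.StandardCharsets
    }
}
```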

Read a file

```java
// Streams the file to standard output in 4 KB chunks. IOUtils here is
// org.apache.hadoop.io.IOUtils; false means copyBytes leaves both streams open.
private static void readFromHDFSFile(FileSystem fs, String string) throws IllegalArgumentException, IOException {
    try (FSDataInputStream inputStream = fs.open(new Path(string))) {
        IOUtils.copyBytes(inputStream, System.out, 4096, false);
    }
}
```
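Whatever the source stream, InputStream.read(byte[]) may fill only part of the buffer, so a correct read loop must use the returned byte count and stop at -1. Decoding once at the end also avoids splitting a multi-byte UTF-8 character across two chunks. A JDK-only sketch that works for any InputStream, including the FSDataInputStream returned by fs.open; the class name StreamText is hypothetical, and because the whole file is held in memory it suits small files only.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: reads a whole stream into a UTF-8 string.
// The caller remains responsible for closing the stream.
class StreamText {
    static String readUtf8(InputStream in) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        int n;
        // read() may return fewer bytes than chunk.length; copy only those n bytes
        while ((n = in.read(chunk)) != -1) {
            buf.write(chunk, 0, n);
        }
        // decode once, so multi-byte characters split across chunks stay intact
        return new String(buf.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

For example: try (FSDataInputStream in = fs.open(new Path(p))) { System.out.println(StreamText.readUtf8(in)); }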

Append to a file

```java
// Appends UTF-8 content to the end of an existing file (fs.append fails if the file does not exist).
private static void appendToHDFSFile(FileSystem fs, String filePath, String content) throws IllegalArgumentException, IOException {
    try (FSDataOutputStream append = fs.append(new Path(filePath))) {
        append.write(content.getBytes(StandardCharsets.UTF_8));
    }
}
```

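Get block locations

```java
// Prints the offset, length, hosts and block ID of every block of the file.
private static void getFileLocation(FileSystem fs, String string) throws IOException {
    FileStatus fileStatus = fs.getFileStatus(new Path(string));
    BlockLocation[] fileBlockLocations = fs.getFileBlockLocations(fileStatus, 0, fileStatus.getLen());
    for (BlockLocation location : fileBlockLocations) { // may be empty, e.g. for a zero-length file
        System.out.println("offset=" + location.getOffset() + " length=" + location.getLength());
        for (String host : location.getHosts()) {
            System.out.println("  host: " + host);
        }
        // HdfsBlockLocation (org.apache.hadoop.fs) is only returned when fs is HDFS
        if (location instanceof HdfsBlockLocation) {
            long blockId = ((HdfsBlockLocation) location).getLocatedBlock().getBlock().getBlockId();
            System.out.println("  block ID: " + blockId);
        }
    }
}
```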
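getFileBlockLocations(fileStatus, start, len) returns one BlockLocation for each block that overlaps the byte range [start, start + len), and each carries getOffset(), getLength() and getHosts(). To see what to expect, the JDK-only sketch below computes the ranges a file of a given length is split into; the class name BlockRanges is hypothetical, and the real block size of a file is available from fileStatus.getBlockSize().

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits a file length into {offset, length} block ranges.
class BlockRanges {
    static List<long[]> split(long fileLen, long blockSize) {
        List<long[]> ranges = new ArrayList<>();
        for (long off = 0; off < fileLen; off += blockSize) {
            // every block is full-sized except possibly the last one
            ranges.add(new long[] {off, Math.min(blockSize, fileLen - off)});
        }
        return ranges;
    }
}
```

For example, with the default dfs.blocksize of 128 MB, a 300 MB file yields three blocks, the last one 44 MB long.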