Java Multithreading: Blocking Queues

Introduction

In concurrent programming, thread-safe queues are sometimes required. There are two ways to achieve a thread-safe queue: using a blocking algorithm and using a non-blocking algorithm.

Queues that use a blocking algorithm can be implemented with one lock (the same lock for both enqueuing and dequeuing) or with two locks (separate locks for enqueuing and dequeuing). Non-blocking queues can be implemented with a CAS (compare-and-swap) retry loop.
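A minimal sketch of what a CAS retry loop looks like. To keep it short it is shown on a lock-free stack rather than a full queue; the class name `CasStack` and its methods are made up for this illustration and are not part of the JDK.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative lock-free stack (hypothetical class, not a JDK type).
class CasStack<E> {
    private static final class Node<E> {
        final E item;
        final Node<E> next;

        Node(E item, Node<E> next) {
            this.item = item;
            this.next = next;
        }
    }

    private final AtomicReference<Node<E>> top = new AtomicReference<>();

    // Retry until the compare-and-set succeeds; no thread ever waits on a lock.
    void push(E item) {
        Node<E> oldTop;
        Node<E> newTop;
        do {
            oldTop = top.get();
            newTop = new Node<>(item, oldTop);
        } while (!top.compareAndSet(oldTop, newTop));
    }

    // Returns null when the stack is empty instead of waiting.
    E pop() {
        Node<E> oldTop;
        do {
            oldTop = top.get();
            if (oldTop == null) {
                return null;
            }
        } while (!top.compareAndSet(oldTop, oldTop.next));
        return oldTop.item;
    }

    public static void main(String[] args) {
        CasStack<Integer> stack = new CasStack<>();
        stack.push(1);
        stack.push(2);
        System.out.println(stack.pop()); // 2
        System.out.println(stack.pop()); // 1
        System.out.println(stack.pop()); // null
    }
}
```

If another thread changes `top` between the `get()` and the `compareAndSet()`, the CAS fails and the loop simply tries again with the fresh value.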

Blocking queue:

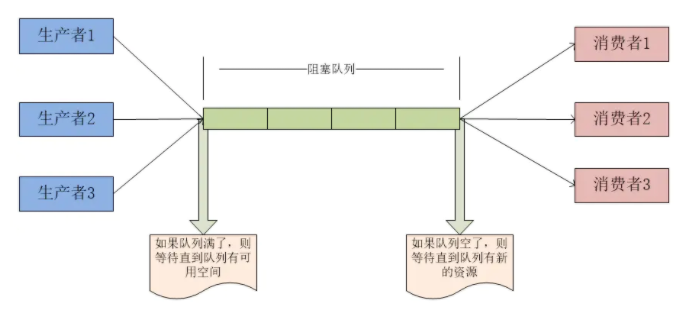

A blocking queue is a queue that supports two additional operations: blocking insertion and blocking removal.

1) Blocking insertion: when the queue is full, a thread that inserts an element is blocked until the queue is no longer full.

2) Blocking removal: when the queue is empty, a thread that retrieves an element waits until the queue becomes non-empty.

Blocking queues are often used in producer-consumer scenarios, where producers are threads that add elements to the queue and consumers are threads that take elements from it. The blocking queue is the container in which producers store elements and from which consumers get them. One application is long-connection chat on the Web.
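A minimal sketch of blocking removal in a producer-consumer pair, assuming an ArrayBlockingQueue with capacity 1. The class name `BlockingTakeDemo` and the method `handOff` are hypothetical names chosen for this example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class BlockingTakeDemo {
    // Starts a consumer that waits in take() until the caller (the producer) puts a value.
    static String handOff(String value) throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        String[] received = new String[1];

        Thread consumer = new Thread(() -> {
            try {
                received[0] = queue.take(); // waits while the queue is empty
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        queue.put(value); // would wait here if the queue were full
        consumer.join();  // join() makes the consumer's write visible to this thread
        return received[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(handOff("hello")); // hello
    }
}
```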

Enqueue

offer(E e): If the queue is not full, inserts the element and returns true immediately; if the queue is full, returns false immediately. Non-blocking.

put(E e): If the queue is full, waits until the queue is no longer full or the thread is interrupted. Blocking.

offer(E e, long timeout, TimeUnit unit): Inserts an element at the tail of the queue. If the queue is full, waits until one of three things happens. Blocking.

- The thread is woken up because space has become available

- The wait times out

- The current thread is interrupted

Dequeue

poll(): If the queue is empty, returns null immediately; otherwise removes and returns the head element.

take(): If the queue is empty, waits until the queue is non-empty or the thread is interrupted. Blocking.

poll(long timeout, TimeUnit unit): If the queue is not empty, removes and returns the head element. If the queue is empty, waits until one of three things happens, and returns null if the timeout expires first:

- The thread is woken up because an element has become available

- The wait times out

- The current thread is interrupted
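The timed variants described above can be tried out with a short sketch. It assumes an ArrayBlockingQueue of capacity 1 and a 100 ms timeout; the class name `TimedQueueOps` is hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class TimedQueueOps {
    static String run() throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);

        // The queue has room: returns true without waiting.
        boolean first = queue.offer("a", 100, TimeUnit.MILLISECONDS);
        // The queue is full and nobody takes: gives up after the timeout and returns false.
        boolean second = queue.offer("b", 100, TimeUnit.MILLISECONDS);

        // The queue is non-empty: returns the head at once.
        String head = queue.poll(100, TimeUnit.MILLISECONDS);
        // The queue is empty and nobody puts: returns null after the timeout.
        String none = queue.poll(100, TimeUnit.MILLISECONDS);

        return first + " " + second + " " + head + " " + none;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // true false a null
    }
}
```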

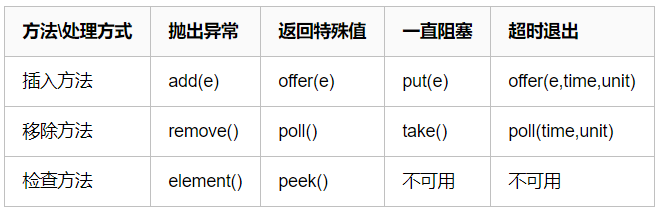

The common blocking queue methods handle a full or an empty queue in four different ways:

- Throws an exception: when the queue is full, inserting an element with add(e) throws IllegalStateException("Queue full"). When the queue is empty, retrieving an element with remove() throws NoSuchElementException.

- Returns a special value: the insert method offer(e) returns true if it succeeds and false if the queue is full. The remove method poll() takes an element out of the queue and returns null if the queue is empty.

- Blocks indefinitely: when the queue is full and a producer thread calls put(e), the queue blocks the producer until space becomes available or the thread is interrupted. When the queue is empty and a consumer thread calls take(), the queue blocks the consumer until an element becomes available.

- Times out: when the queue is full, the queue blocks the producer thread for a limited time; once that time has elapsed, the producer gives up and returns. The timed poll behaves the same way on an empty queue.

Note that a thread blocked in put() or take() does not terminate; it simply waits.

By contrast, calling remove() on an empty queue throws an exception, and an uncaught exception ends the thread. The same applies to add() on a full queue.

remove(), poll(), and take() all remove an element from the head of the queue.

add(e), offer(e), and put(e) all insert the specified element at the tail of the queue.
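The difference between the exception-throwing and the special-value methods can be seen in a small sketch, assuming an ArrayBlockingQueue of capacity 1. The class name `QueueFullEmptyDemo` and the short markers "ISE" and "NSEE" are made up for this example.

```java
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class QueueFullEmptyDemo {
    static String describe() {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(1);
        StringBuilder out = new StringBuilder();

        out.append(queue.add(1));               // true: there was room
        out.append(' ').append(queue.offer(2)); // false: full, but no exception
        try {
            queue.add(3);                       // full: throws IllegalStateException
        } catch (IllegalStateException e) {
            out.append(" ISE");
        }

        out.append(' ').append(queue.remove()); // 1: removes the head
        out.append(' ').append(queue.poll());   // null: empty, but no exception
        try {
            queue.remove();                     // empty: throws NoSuchElementException
        } catch (NoSuchElementException e) {
            out.append(" NSEE");
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(describe()); // true false ISE 1 null NSEE
    }
}
```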

JDK7 provides seven blocking queues.

- ArrayBlockingQueue: A bounded blocking queue consisting of an array structure.

- LinkedBlockingQueue: An optionally bounded blocking queue backed by a linked list. The default capacity is Integer.MAX_VALUE (2147483647), so it can be treated as effectively unbounded.

- PriorityBlockingQueue: An unbounded blocking queue that supports priority ordering.

- DelayQueue: An unbounded blocking queue implemented using a priority queue.

- SynchronousQueue: A blocking queue that does not store elements.

- LinkedTransferQueue: An unbounded blocking queue backed by a linked list.

- LinkedBlockingDeque: A double-ended blocking queue (deque) backed by a linked list.
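As a quick illustration of two of the less common entries in this list, the sketch below shows priority ordering in PriorityBlockingQueue and access to both ends of a LinkedBlockingDeque. The class and method names (`QueueFlavorsDemo`, `priorityOrder`, `bothEnds`) are hypothetical.

```java
import java.util.concurrent.BlockingDeque;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.PriorityBlockingQueue;

class QueueFlavorsDemo {
    // PriorityBlockingQueue hands elements out in priority order (natural order here).
    static String priorityOrder() throws InterruptedException {
        BlockingQueue<Integer> queue = new PriorityBlockingQueue<>();
        queue.put(3); // never waits: the queue is unbounded
        queue.put(1);
        queue.put(2);
        StringBuilder out = new StringBuilder();
        while (!queue.isEmpty()) {
            out.append(queue.take());
        }
        return out.toString();
    }

    // LinkedBlockingDeque allows insertion and removal at both ends.
    static String bothEnds() throws InterruptedException {
        BlockingDeque<String> deque = new LinkedBlockingDeque<>();
        deque.putLast("b");
        deque.putFirst("a");
        deque.putLast("c");
        return deque.takeFirst() + deque.takeLast();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(priorityOrder()); // 123
        System.out.println(bothEnds());      // ac
    }
}
```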

Here is a brief description of the three most commonly used queues.

The other queues are used in much the same basic way; which one to choose depends on the needs of your project.

SynchronousQueue

SynchronousQueue is a wait queue with no buffer. An inserting thread must wait for another thread to remove the element it is adding. You can loosely think of SynchronousQueue as a blocking queue with a buffer of 1, but it never actually holds an element: isEmpty() always returns true, remainingCapacity() always returns 0, remove() and removeAll() always return false, iterator() always returns an empty iterator, and peek() always returns null.

A SynchronousQueue can be created in fair or non-fair mode, and the two modes behave differently.

In fair mode, SynchronousQueue serves waiting producers and consumers in FIFO order, which gives an overall fairness policy.

In non-fair mode (the default), waiting producers and consumers are typically served in LIFO order. In this mode, if producers and consumers run at different speeds, starvation can easily occur: some producers or consumers may never get their data processed.
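A sketch of how the fair mode can be observed, assuming three producers that are parked in put() one after another before any consumer arrives. The class name `FairHandoffDemo` and the method `takeOrder` are hypothetical, and the check on the thread state is only a simple way to make sure each producer is already waiting before the next one starts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.SynchronousQueue;

class FairHandoffDemo {
    // Parks three producers in arrival order 1, 2, 3, then takes three times.
    static List<Integer> takeOrder(boolean fair) throws InterruptedException {
        SynchronousQueue<Integer> queue = new SynchronousQueue<>(fair);
        List<Thread> producers = new ArrayList<>();

        for (int i = 1; i <= 3; i++) {
            final int value = i;
            Thread producer = new Thread(() -> {
                try {
                    queue.put(value); // waits until a consumer takes the value
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            producer.start();
            // Wait until this producer is parked inside put() before starting the next one.
            while (producer.getState() != Thread.State.WAITING) {
                Thread.sleep(1);
            }
            producers.add(producer);
        }

        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < 3; i++) {
            order.add(queue.take());
        }
        for (Thread producer : producers) {
            producer.join();
        }
        return order;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(takeOrder(true));  // fair mode: [1, 2, 3]
        System.out.println(takeOrder(false)); // non-fair mode: order is not guaranteed
    }
}
```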

SynchronousQueue is a blocking queue in which each put must wait for a take, and vice versa. A synchronous queue has no internal capacity, not even a capacity of one.

You cannot peek at a synchronous queue, because an element is present only when you try to remove it;

You cannot insert an element (by any method) unless another thread is trying to remove it;

You cannot iterate over the queue either, because there is nothing to iterate. The head of the queue is the element that the first queued inserting thread is trying to add; if there is no such queued thread, no element is available for removal and poll() returns null.

Note 1: It is a blocking queue where each put must wait for a take and vice versa.

A synchronous queue has no internal capacity, not even one.

Note 2: It is thread-safe and blocking.

Note 3: null elements are not allowed.

Note 4: The fairness policy governs the order among threads calling put, or among threads calling take.

For how a fairness policy works, compare the fairness policy of ArrayBlockingQueue.

Note that the following methods for SynchronousQueue are interesting:

- iterator() always returns an empty iterator, because the queue holds nothing.

- peek() always returns null.

- put() hands an element to the queue and then waits until another thread comes to take it.

- offer() returns immediately. If another thread happens to be waiting to take the element, the hand-off succeeds and offer() returns true; otherwise it returns false.

- offer(e, 2000, TimeUnit.SECONDS) hands an element to the queue and waits up to the specified time for another thread to take it; the return value follows the same logic as offer().

- take() removes and returns the element in the queue (think of it as being in the queue). If nothing is available, it waits indefinitely.

- poll() removes and returns the element in the queue (again, think of it as being in the queue). It gets something only if another thread happens to be offering or putting an element at that moment; otherwise it returns null immediately.

- poll(2000, TimeUnit.SECONDS) waits up to the specified time for another thread to offer or put an element, then removes and returns it.

- isEmpty() is always true.

- remainingCapacity() is always 0.

- remove() and removeAll() are always false.
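These fixed return values can be checked on a SynchronousQueue that no other thread is using. The class name `SynchronousQueueFacts` and the method `probe` are hypothetical names for this sketch.

```java
import java.util.concurrent.SynchronousQueue;

class SynchronousQueueFacts {
    static String probe() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        return queue.isEmpty()                    // true
                + " " + queue.remainingCapacity() // 0
                + " " + queue.peek()              // null
                + " " + queue.iterator().hasNext()// false: the iterator is empty
                + " " + queue.offer("x")          // false: no thread is waiting in take()
                + " " + queue.poll()              // null: no thread is waiting in put()
                + " " + queue.remove("x");        // false
    }

    public static void main(String[] args) {
        System.out.println(probe()); // true 0 null false false null false
    }
}
```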

Demo:

public static void main(String[] args) throws InterruptedException {

SynchronousQueue<Integer> queue = new SynchronousQueue<Integer>();

new Customer(queue).start();

new Product(queue).start();

}

//Producer

static class Product extends Thread{

SynchronousQueue<Integer> queue;

public Product(SynchronousQueue<Integer> queue){

this.queue = queue;

}

@Override

public void run(){

while(true){

int rand = new Random().nextInt(1000);

System.out.println("A product has been produced:"+rand);

System.out.println("Wait three seconds for delivery...");

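try {

TimeUnit.SECONDS.sleep(3);

queue.put(rand); // Add the element; blocks until the consumer takes it

} catch (InterruptedException e) {

Thread.currentThread().interrupt(); // Restore the interrupt flag and stop producing

return;

}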

System.out.println("Product generation complete:"+rand);

}

}

}

//Consumer

static class Customer extends Thread{

SynchronousQueue<Integer> queue;

public Customer(SynchronousQueue<Integer> queue){

this.queue = queue;

}

@Override

public void run(){

while(true){

try {

System.out.println("Consumed a product:"+queue.take()); //Get Elements

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println("------------------------------------------");

}

}

}

------------------------------------------

A product has been produced:559

Wait three seconds for delivery...

Product generation complete:559

Consumed a product:559

------------------------------------------

A product has been produced:69

Wait three seconds for delivery...

Product generation complete:69

Consumed a product:69

------------------------------------------

A product has been produced:908

Wait three seconds for delivery...

Product generation complete:908

Consumed a product:908

------------------------------------------

A product has been produced:769

Wait three seconds for delivery...

Product generation complete:769

Consumed a product:769

The output shows that once a product has been produced but not yet consumed, the producer is stuck until it is consumed, and only then produces the next one. Production and consumption are in a one-to-one relationship.

LinkedBlockingQueue

The default capacity of LinkedBlockingQueue is Integer.MAX_VALUE (2147483647), which is effectively unbounded. You can also set a capacity through the constructor, in which case it acts as a bounded, buffered wait queue.

As a blocking queue based on a linked list, it maintains an internal data buffer (made up of linked nodes). When a producer puts an element into the queue, the queue stores it internally and the producer returns immediately. Only when the buffer reaches its maximum capacity (which LinkedBlockingQueue lets you specify through the constructor) is the producer blocked; once a consumer takes an element from the queue, the producer thread is woken up. The consumer side works on the same principle in reverse.

LinkedBlockingQueue handles concurrent data efficiently because it uses separate locks on the producer side and the consumer side to control synchronization. Under high concurrency, producers and consumers can therefore operate on the queue in parallel, which improves the concurrency of the queue as a whole.
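The two capacity modes can be compared in a short sketch. The class name `LinkedCapacityDemo` and the capacity of 2 are assumptions chosen for illustration.

```java
import java.util.concurrent.LinkedBlockingQueue;

class LinkedCapacityDemo {
    static String probe() {
        // No-argument constructor: capacity is Integer.MAX_VALUE.
        LinkedBlockingQueue<Integer> unbounded = new LinkedBlockingQueue<>();
        // Capacity passed to the constructor: the queue is bounded.
        LinkedBlockingQueue<Integer> bounded = new LinkedBlockingQueue<>(2);

        boolean first = bounded.offer(1);  // true
        boolean second = bounded.offer(2); // true
        boolean third = bounded.offer(3);  // false: the bounded queue is full

        return (unbounded.remainingCapacity() == Integer.MAX_VALUE)
                + " " + first + " " + second + " " + third
                + " " + bounded.remainingCapacity();
    }

    public static void main(String[] args) {
        System.out.println(probe()); // true true true false 0
    }
}
```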

import java.io.IOException;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.LinkedBlockingQueue;

/**

* Created by guo on 17/2/2018.

* Stock Trading Server Based on Blocking Queue

* Requirements:

* 1,Allow traders to add sales orders to the queue or to obtain pending orders

* 2,At any given time, if the queue is full, the trader has to wait for a location to become empty

* 3,Buyers must wait until a sales order is available in the queue.

* 4,To simplify the situation, it is assumed that the buyer must always purchase a full quantity of sellable shares, not a partial purchase.

*/

public class StockExchange {

public static void main(String[] args) {

System.out.printf("Hit Enter to terminate %n%n");

//1. Create an instance of LinkedBlockingQueue because it has unlimited capacity, so a trader can queue any number of orders.

// If you use ArrayBlockingQueue, you will limit the number of transactions that each stock has.

BlockingQueue<Integer> orderQueue = new LinkedBlockingQueue<>();

//2. Create a Seller Seller instance, which is the implementation class of Runnable.

Seller seller = new Seller(orderQueue);

//3. Create 100 trader instances and queue orders for your own sale. Each sales order will have a random volume of transactions.

Thread[] sellerThread = new Thread[100];

for (int i = 0; i < 100; i++) {

sellerThread[i] = new Thread(seller);

sellerThread[i].start();

}

//4. Create 100 buyer instances and select orders for sale

Buyer buyer = new Buyer(orderQueue);

Thread[] buyserThread = new Thread[100];

for (int i = 0; i < 100; i++) {

buyserThread[i] = new Thread(buyer);

buyserThread[i].start();

}

//The following code is how to close all threads manually

try {

//5. Once the producer and consumer threads are created, they keep running, placing orders in the queue and taking orders from it,

// blocking from time to time depending on the load. The application runs until the user presses the Enter key.

while (System.in.read() != '\n');

} catch (IOException e) {

e.printStackTrace();

}

//6. The main function interrupts all running producer and consumer threads, asking them to stop and exit

System.out.println("Terminating");

for (Thread t : sellerThread) {

t.interrupt();

}

for (Thread t : buyserThread) {

t.interrupt();

}

}

/**

* seller

* The Seller class implements the Runnable interface and provides a constructor that takes the order queue as a parameter

*/

static class Seller implements Runnable {

private final BlockingQueue<Integer> orderQueue;

// volatile: a single Seller instance is shared by many threads

private volatile boolean shutdownRequest = false;

public Seller(BlockingQueue<Integer> orderQueue) {

this.orderQueue = orderQueue;

}

@Override

public void run() {

while (shutdownRequest == false) {

//1. Produce a random number for each transaction volume in each iteration

Integer quantity = (int) (Math.random() * 100);

try {

//2. Call the put method to place the order in the queue. This is a blocking call. Threads need to wait for empty positions in the queue only if the queue capacity is limited

orderQueue.put(quantity);

//3. For user convenience, print details of sales orders in the console and thread details for placing sales orders

System.out.println("Sell order by" + Thread.currentThread().getName() + ": " + quantity);

} catch (InterruptedException e) {

//4. The run method loops indefinitely, submitting orders to the queue. Another thread can interrupt this thread by calling its interrupt method.

// The resulting InterruptedException is handled by setting the shutdownRequest flag to true, which ends the loop in run

shutdownRequest = true;

}

}

}

}

/**

* Buyer

* The Buyer class implements the Runnable interface and provides a constructor that takes the order queue as a parameter

*/

static class Buyer implements Runnable{

private final BlockingQueue<Integer> orderQueue;

// volatile: a single Buyer instance is shared by many threads

private volatile boolean shutdownRequest = false;

public Buyer(BlockingQueue<Integer> orderQueue) {

this.orderQueue = orderQueue;

}

@Override

public void run() {

while (shutdownRequest == false) {

try {

//1. The run method pulls the to-do transaction from the head of the queue by calling the take method.

// If no orders are available in the queue, the take method will block,

Integer quantity = ((Integer) orderQueue.take());

//2. Print order and thread details for convenience

System.out.println("Buy order by " + Thread.currentThread().getName() + ": " + quantity);

} catch (InterruptedException e) {

shutdownRequest = true;

}

}

}

}

}

Reading through the code above should be enough to understand it.

The result is essentially that 100 threads enqueue concurrently while 100 threads dequeue concurrently.

Consumers go to sleep automatically while the queue is empty and are woken automatically once data becomes available.

ArrayBlockingQueue

ArrayBlockingQueue is a bounded, buffered wait queue.

As an array-based blocking queue, it maintains a fixed-length data buffer (an array), much as LinkedBlockingQueue maintains a linked list. ArrayBlockingQueue also keeps two integer variables that mark the positions of the queue's head and tail in the array.

ArrayBlockingQueue uses the same lock object when producers put data in and when consumers take data out, which means the two cannot truly run in parallel. This is the main way it differs from LinkedBlockingQueue.

Judging from how it is implemented, ArrayBlockingQueue could use separate locks to let producers and consumers run fully in parallel. Doug Lea probably did not do so because ArrayBlockingQueue's write and read operations are already so lightweight that a separate-lock mechanism would add complexity to the code without any performance benefit.

Another notable difference between ArrayBlockingQueue and LinkedBlockingQueue is that the former creates and destroys no extra object instances when inserting or removing elements, while the latter allocates an additional Node object for each insertion. In systems that must process large volumes of data efficiently and concurrently over long periods, the two therefore differ in their impact on GC.
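The single-lock design with head and tail indices described above can be sketched roughly as follows. This is a simplified illustration under the assumption of one ReentrantLock with two Conditions, not the actual JDK source; the class name `OneLockBuffer` and its field names are hypothetical.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical class for illustration; the real ArrayBlockingQueue offers many more methods.
class OneLockBuffer<E> {
    private final Object[] items;
    private int takeIndex; // position of the head
    private int putIndex;  // position of the next free slot (the tail)
    private int count;     // number of stored elements

    // One lock guards both put and take, so the two never run at the same time.
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Condition notFull = lock.newCondition();

    OneLockBuffer(int capacity) {
        items = new Object[capacity];
    }

    void put(E e) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == items.length) {
                notFull.await(); // producer waits for space
            }
            items[putIndex] = e;
            putIndex = (putIndex + 1) % items.length;
            count++;
            notEmpty.signal(); // wake one waiting consumer
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) {
                notEmpty.await(); // consumer waits for data
            }
            E e = (E) items[takeIndex];
            items[takeIndex] = null; // drop the reference
            takeIndex = (takeIndex + 1) % items.length;
            count--;
            notFull.signal(); // wake one waiting producer
            return e;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        OneLockBuffer<String> buffer = new OneLockBuffer<>(2);
        buffer.put("a");
        buffer.put("b");
        System.out.println(buffer.take()); // a
        System.out.println(buffer.take()); // b
    }
}
```

Because the array slots are reused, no node object is allocated per element, which matches the GC observation made above.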

import java.util.concurrent.ArrayBlockingQueue;

public class StockExchange {

public static void main(String[] args) throws Exception {

// insertBlocking(); //Insert Feature

// fetchBlocking(); //Removal characteristics

// Insert Remove Case

ArrayBlockingQueue<String> abq = new ArrayBlockingQueue<String>(10);

testProducerConsumer(abq);

}

/**

* This method demonstrates the insert blocking feature of ArrayBlockingQueue: if the queue is full, the insert will be blocked and program execution will be forced to pause.

*/

public static void insertBlocking() throws InterruptedException {

ArrayBlockingQueue<String> names = new ArrayBlockingQueue<String>(1);

new Thread(new Runnable() {

@Override

public void run() {

try {

names.put("a");

System.out.println(names.size());

// Execution blocks here: the capacity is 1 and the queue is already full

names.put("b");

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}).start();

Thread.sleep(1000);

System.out.println("Program Executes Here...");

}

/**

* This method demonstrates the blocking-take feature of ArrayBlockingQueue: if the queue is empty, the take blocks and program execution pauses.

*

*/

public static void fetchBlocking() throws InterruptedException {

ArrayBlockingQueue<String> names = new ArrayBlockingQueue<String>(1);

new Thread(new Runnable() {

@Override

public void run() {

try {

names.put("a");

names.take();

System.out.println(names.size());

names.take(); // Blocks here: the queue is empty, so there is nothing to take

names.put("b");

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}).start();

Thread.sleep(1000);

System.out.println("Program Executes Here...");

}

/**

* Use this method to test producers and consumers.

* There are two ways to keep the program from failing when it cannot get an element:

* 1. Make producers produce faster than consumers consume

* 2. Have the consumer thread pause for a while, without reporting an error, when it fails to get an element.

* @param abq

*/

public static void testProducerConsumer (ArrayBlockingQueue<String> abq) {

Thread tConsumer = new Consumer(abq);

Thread tProducer = new Producer(abq);

tConsumer.start();

tProducer.start();

}

}

/**

* Role definition: consumer

*

*/

class Consumer extends Thread {

ArrayBlockingQueue<String> abq = null;

public Consumer(ArrayBlockingQueue<String> abq) {

super();

this.abq = abq;

}

@Override

public void run() {

while(true) {

try{

String msg = abq.take();

System.out.println("Get data:===="+msg+"\t Remaining data:"+abq.size());

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

/**

* Role definition: producer

*

*/

class Producer extends Thread {

ArrayBlockingQueue<String> abq = null;

public Producer(ArrayBlockingQueue<String> abq) {

this.abq = abq;

}

@Override

public void run() {

int i = 0;

while(true) {

try {

Thread.sleep(2000);

abq.put(""+i);

System.out.println("Store data:===="+i+"\t Remaining data:"+abq.size());

i++;

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

Store data:====0    Remaining data:1

Get data:====0    Remaining data:0

Store data:====1    Remaining data:1

Get data:====1    Remaining data:0

Store data:====2    Remaining data:1

Get data:====2    Remaining data:0

The typical characteristics of blocking queues can be summarized as follows:

- Blocking queues provide methods for adding entries. Calls to these methods are blocking calls: the insertion must wait until space becomes available in the queue.

- Blocking queues provide methods for removing entries, and calls to these methods are also blocking calls: the caller waits until an entry is placed in the empty queue.

- The blocking insert and remove methods can be interrupted.

- put and take operations run in separate threads, which decouples the two kinds of operation well.

- null elements cannot be inserted into a blocking queue.

- Blocking queues may be capacity-bounded.

- Blocking queue implementations are thread-safe, but bulk operations such as addAll are not necessarily performed atomically.

- Blocking queues do not intrinsically support a close or shutdown operation to indicate that no more entries will be added.
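Since there is no built-in close operation, one common workaround is for the producer to enqueue a special end-of-stream marker (often called a poison pill). The sketch below assumes that Integer.MIN_VALUE never occurs as real data; the class name `PoisonPillDemo`, the constant `POISON`, and the method `produceAndSum` are hypothetical.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class PoisonPillDemo {
    // Marker meaning "no more entries"; a convention of this sketch, not a JDK feature.
    private static final Integer POISON = Integer.MIN_VALUE;

    // Produces 1..n, lets a consumer thread add them up, and returns the sum.
    static int produceAndSum(int n) throws InterruptedException {
        BlockingQueue<Integer> queue = new LinkedBlockingQueue<>(4);
        int[] sum = new int[1];

        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    Integer item = queue.take();
                    if (item.equals(POISON)) {
                        return; // the producer has finished
                    }
                    sum[0] += item;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        for (int i = 1; i <= n; i++) {
            queue.put(i); // waits whenever the queue is full
        }
        queue.put(POISON); // tell the consumer to stop
        consumer.join();   // join() makes the consumer's result visible here
        return sum[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(produceAndSum(10)); // 55
    }
}
```

With several consumers, one marker per consumer would be needed, because each marker is taken by exactly one thread.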