preface

Server resources are limited, so both the rate and the number of requests must be limited; otherwise a flood of requests can crash the server. Once the number of requests exceeds a given maximum, or requests arrive faster than the server can process them, the excess requests should be rejected proactively to keep the server healthy and stable.
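The preface names two separate limits. The first one, capping how many requests are in flight at the same time, can be sketched with java.util.concurrent.Semaphore. A request that cannot get a permit immediately is rejected instead of queued. ConcurrencyLimiter and its methods are hypothetical names used only for illustration. The rest of this article deals with the second limit, the request rate.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

/** Hypothetical helper: rejects a request when maxInFlight requests are already running. */
class ConcurrencyLimiter {
    private final Semaphore permits;

    ConcurrencyLimiter(int maxInFlight) {
        this.permits = new Semaphore(maxInFlight);
    }

    /** Runs handler if a slot is free; otherwise returns the rejected fallback right away. */
    <T> T execute(Supplier<T> handler, Supplier<T> rejected) {
        if (!permits.tryAcquire()) {
            return rejected.get();   // over the limit: reject instead of queueing
        }
        try {
            return handler.get();
        } finally {
            permits.release();       // free the slot when the request completes
        }
    }

    int availableSlots() {
        return permits.availablePermits();
    }
}
```

With maxInFlight = 1, a second request that arrives while the first is still being handled is rejected immediately.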

Single-node rate limiting

Single-node rate limiting restricts the traffic of a single service instance. Guava's RateLimiter is currently a widely used implementation, so let's have a look at it.

guava

dependency (Gradle)

```groovy
// https://mvnrepository.com/artifact/com.google.guava/guava
implementation group: 'com.google.guava', name: 'guava', version: '31.0.1-jre'
```

main method

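```java
import com.google.common.util.concurrent.RateLimiter;
import lombok.extern.slf4j.Slf4j;

import java.time.Duration;

@Slf4j
public class GuavaRateLimiterDemo {

    public static void main(String[] args) {
        // 1 permit per second with a 5-second warm-up period (creates a SmoothWarmingUp limiter)
        RateLimiter rateLimiter = RateLimiter.create(1D, Duration.ofSeconds(5));
        while (true) {
            // Non-blocking: returns false immediately if no permit is available
            boolean acquired = rateLimiter.tryAcquire();
            if (acquired) {
                log.info("{} obtain {}", Thread.currentThread(), System.nanoTime());
            }
        }
    }
}
```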

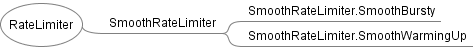

Inheritance diagram

The relationships between Guava's classes are relatively simple:

- RateLimiter: the rate limiter

- SleepingStopwatch: a stopwatch that can also sleep

- Stopwatch: an ordinary stopwatch

- Ticker: used to get the current time

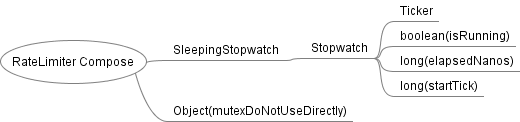

Composition diagram

RateLimiter is composed of a lock and a sleeping stopwatch (SleepingStopwatch).

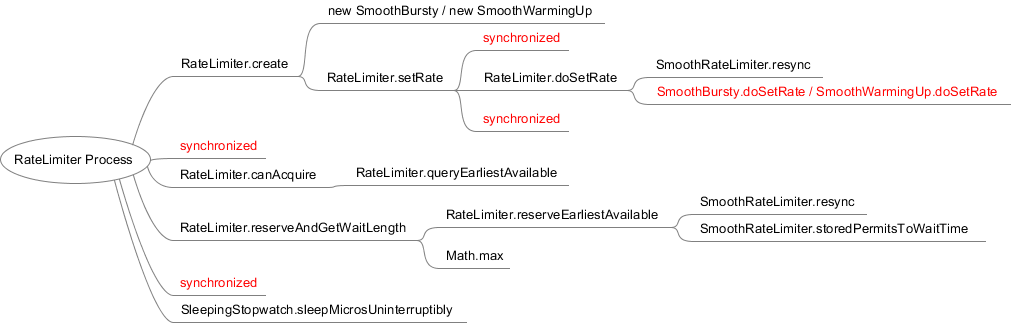

flow chart

- Create a RateLimiter object. There are two implementations to choose from: SmoothBursty and SmoothWarmingUp.

- Set the rate while holding the lock.

- Acquire the lock and query the earliest time at which a permit becomes available. If a permit cannot be obtained (within the timeout, for tryAcquire), return false. Otherwise, reserve the permit and compute how long to wait.

- Sleep until the reserved permit takes effect.

Since this relies on a pessimistic lock (synchronized), could it become a performance bottleneck under high concurrency?
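To make the steps above concrete, here is a simplified sketch of SmoothBursty-style bookkeeping. It is written from scratch and is not Guava's code. The lock guards a "next free" timestamp and a count of permits stored while idle. A caller reserves a permit under the lock, and, as in Guava, the actual sleep happens after the lock is released. The following are assumptions of this sketch:

- the name BurstySketch;
- the injectable clock;
- the one-second burst allowance, which mirrors the default of RateLimiter.create(permitsPerSecond).

```java
import java.util.concurrent.TimeUnit;
import java.util.function.LongSupplier;

/**
 * Simplified sketch of SmoothBursty-style bookkeeping (not Guava's code).
 * All state is guarded by the object's monitor, a pessimistic lock.
 */
class BurstySketch {
    private final LongSupplier clockNanos;       // assumption: injectable clock, e.g. System::nanoTime
    private final double stableIntervalNanos;    // gap between permits at the steady rate
    private final double maxStoredPermits;       // burst allowance: one second's worth of permits
    private double storedPermits;                // permits saved up while the limiter was idle
    private long nextFreeNanos;                  // earliest time the next reservation can be served

    BurstySketch(double permitsPerSecond, LongSupplier clockNanos) {
        this.clockNanos = clockNanos;
        this.stableIntervalNanos = TimeUnit.SECONDS.toNanos(1) / permitsPerSecond;
        this.maxStoredPermits = permitsPerSecond;
        this.nextFreeNanos = clockNanos.getAsLong();
    }

    /** Blocking: always reserves a permit, then sleeps (outside the lock) until it is valid. */
    void acquire() {
        long waitNanos;
        synchronized (this) {
            waitNanos = reserve(clockNanos.getAsLong());
        }
        sleep(waitNanos);
    }

    /** Non-blocking: gives up if the next permit is not available within the timeout. */
    boolean tryAcquire(long timeout, TimeUnit unit) {
        long waitNanos;
        synchronized (this) {
            long now = clockNanos.getAsLong();
            if (nextFreeNanos - unit.toNanos(timeout) > now) {
                return false;
            }
            waitNanos = reserve(now);
        }
        sleep(waitNanos);
        return true;
    }

    /** Caller holds the lock. Books one permit and returns how long the caller must wait. */
    private long reserve(long now) {
        if (now > nextFreeNanos) {   // idle time turns into stored permits
            storedPermits = Math.min(maxStoredPermits,
                    storedPermits + (now - nextFreeNanos) / stableIntervalNanos);
            nextFreeNanos = now;
        }
        long momentAvailable = nextFreeNanos;
        double fromStore = Math.min(1.0, storedPermits);
        storedPermits -= fromStore;
        // A fresh (non-stored) permit pushes nextFreeNanos forward: the *next* caller pays for it.
        nextFreeNanos += (long) ((1.0 - fromStore) * stableIntervalNanos);
        return Math.max(momentAvailable - now, 0L);
    }

    private static void sleep(long nanos) {
        try {
            TimeUnit.NANOSECONDS.sleep(nanos);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();   // preserve the interrupt for the caller
        }
    }
}
```

The lock is held only for a few arithmetic operations and never across the sleep, which keeps the critical section short.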

summary

-

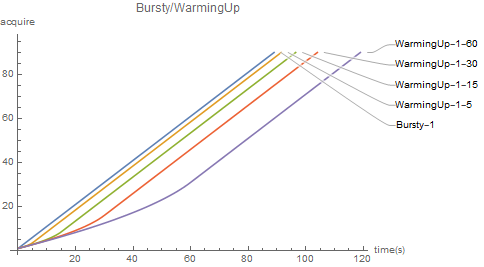

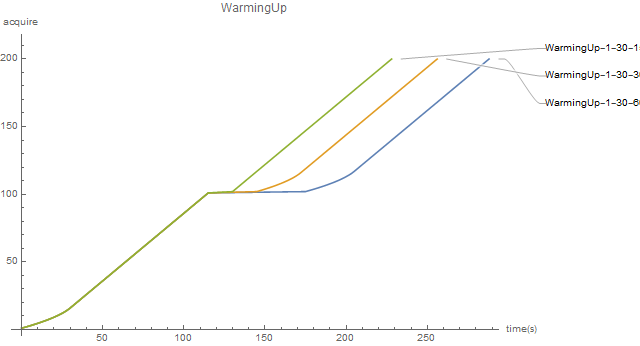

guava's RateLimiter is divided into two types: one is smoothburst, and the current limiting rate is always the same; The other is smoothwarming up, which has a preheating time. During preheating, the current limiting rate rises smoothly, and reaches the given maximum value at the end of preheating time.

-

If the WarmingUp pauses after reaching the maximum current limiting speed, the current limiter will warm up again.

-

The current limiter has a blocking version of acquire() and a non blocking version of tryAcquire().
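The smooth warm-up can be worked through numerically. The class below reproduces the parameter formulas from Guava's SmoothWarmingUp. coldFactor is fixed at 3.0 when you call RateLimiter.create(permitsPerSecond, warmupPeriod). WarmupMath is a hypothetical helper, not a Guava class, and it works in seconds instead of microseconds.

```java
/**
 * Hypothetical helper reproducing the warm-up formulas of Guava's SmoothWarmingUp,
 * expressed in seconds rather than microseconds.
 */
class WarmupMath {
    final double stableInterval;     // seconds per permit once warm
    final double coldInterval;       // seconds per permit when fully cold
    final double thresholdPermits;   // stored permits below which the stable rate applies
    final double maxPermits;         // stored permits of a fully cold limiter
    final double slope;              // extra seconds per stored permit above the threshold

    WarmupMath(double permitsPerSecond, double warmupSeconds, double coldFactor) {
        stableInterval = 1.0 / permitsPerSecond;
        coldInterval = stableInterval * coldFactor;
        thresholdPermits = 0.5 * warmupSeconds / stableInterval;
        maxPermits = thresholdPermits + 2.0 * warmupSeconds / (stableInterval + coldInterval);
        slope = (coldInterval - stableInterval) / (maxPermits - thresholdPermits);
    }

    /** Seconds charged for the next permit when storedPermits are banked. */
    double intervalAt(double storedPermits) {
        if (storedPermits <= thresholdPermits) {
            return stableInterval;
        }
        return stableInterval + (storedPermits - thresholdPermits) * slope;
    }

    /** Area of the trapezoid between thresholdPermits and maxPermits; equals the warm-up period. */
    double secondsToWarmUp() {
        return (stableInterval + coldInterval) / 2.0 * (maxPermits - thresholdPermits);
    }
}
```

Take the create(1D, Duration.ofSeconds(5)) call in the main method above. A fully cold limiter holds 5 stored permits and charges 3 s for the next permit. That charge falls linearly to 1 s as the stored permits drop to 2.5, and consuming that stretch takes exactly the 5 s warm-up period.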

eureka

Eureka happens to have a rate limiter as well: InstanceInfoReplicator uses it to limit how often instance information is synchronized to the server.

dependency (Gradle)

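```groovy
// https://mvnrepository.com/artifact/com.netflix.eureka/eureka-client
// implementation (not runtimeOnly): the demo references the class at compile time
implementation group: 'com.netflix.eureka', name: 'eureka-client', version: '1.10.17'
```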

main method

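```java
import com.netflix.discovery.util.RateLimiter;
import lombok.extern.slf4j.Slf4j;

import java.util.concurrent.TimeUnit;

@Slf4j
public class EurekaRateLimiterDemo {

    public static void main(String[] args) throws InterruptedException {
        int count = 0;
        // averageRate is interpreted per second
        RateLimiter rateLimiter = new RateLimiter(TimeUnit.SECONDS);
        while (true) {
            // acquire(burstSize, averageRate): non-blocking, returns false when the bucket is empty
            boolean acquired = rateLimiter.acquire(1, 1);
            if (acquired) {
                log.info("{} obtain {}", Thread.currentThread(), System.nanoTime());
                // After 100 permits, pause 15 s so the bucket can refill
                if (++count > 100) {
                    Thread.sleep(15000);
                    count = 0;
                }
            }
        }
    }
}
```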

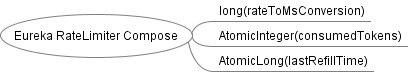

Composition diagram

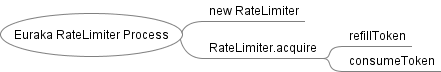

flow chart

- Create a RateLimiter.

- Refill the token bucket.

- Consume a token.

summary

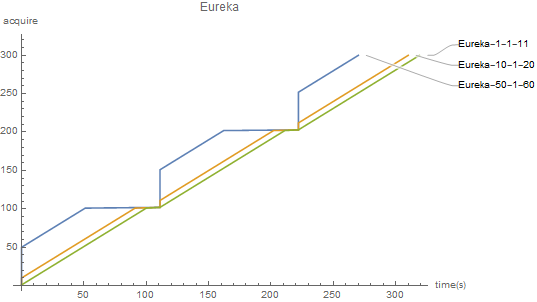

- Eureka's rate limiter has two main parameters: burstSize and averageRate.
  - burstSize is the capacity of the token bucket, that is, how many requests can be let through in a burst.
  - While the bucket still holds tokens, acquire() consumes one and returns true.
  - Once the bucket is empty, requests pass only as fast as tokens are refilled, which is averageRate per time unit.

In the figure, the first number is burstSize, the second is averageRate and the third is the sleep time. For example, Eureka-50-1-60 means burstSize=50, averageRate=1 (acquire/s) and sleepTime=60 (s).

- Eureka's rate limiter synchronizes threads optimistically, using while loops around compareAndSet instead of a lock.
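The refill-then-consume flow and the CAS-based synchronization can be sketched as follows. This is a simplified standalone class modeled on the approach described above, not Eureka's source. Three details are assumptions of this sketch:

- CasTokenBucket is a hypothetical name.
- The caller passes the current time explicitly, so the behavior is easy to test.
- averageRate is taken to be per second.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical CAS-based token bucket; counts consumed tokens rather than remaining ones. */
class CasTokenBucket {
    private final AtomicInteger consumedTokens = new AtomicInteger();   // tokens currently used up
    private final AtomicLong lastRefillTime = new AtomicLong();         // ms timestamp of the last refill

    /** burstSize = bucket capacity; averageRatePerSecond = refill rate (assumed per second). */
    boolean acquire(int burstSize, long averageRatePerSecond, long nowMillis) {
        refill(burstSize, averageRatePerSecond, nowMillis);
        return consume(burstSize);
    }

    private void refill(int burstSize, long averageRatePerSecond, long nowMillis) {
        long refillTime = lastRefillTime.get();
        long newTokens = (nowMillis - refillTime) * averageRatePerSecond / 1000;
        if (newTokens <= 0) {
            return;
        }
        // First call starts the clock at now; later calls advance only by the time worth
        // of whole tokens, so fractional progress carries over to the next refill.
        long newRefillTime = refillTime == 0 ? nowMillis : refillTime + newTokens * 1000 / averageRatePerSecond;
        // Only the thread that wins this CAS performs the refill.
        if (lastRefillTime.compareAndSet(refillTime, newRefillTime)) {
            consumedTokens.updateAndGet(level -> (int) Math.max(0, Math.min(level, burstSize) - newTokens));
        }
    }

    private boolean consume(int burstSize) {
        while (true) {                                  // optimistic retry loop
            int level = consumedTokens.get();
            if (level >= burstSize) {
                return false;                           // bucket is empty
            }
            if (consumedTokens.compareAndSet(level, level + 1)) {
                return true;
            }
        }
    }
}
```

Only the thread whose compareAndSet on lastRefillTime succeeds adds tokens. Two threads that observe the same elapsed time therefore cannot both refill the bucket.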

Distributed rate limiting

Distributed rate limiting applies one limit across multiple service instances, which all share a single rate.

redis

Redis + Lua is currently a widely used approach. Redis stores the rate-limiting state, and a Lua script implements the rate-limiting algorithm.

This approach requires some understanding of Lua. The script below is the one used by Spring Cloud Gateway's Redis rate limiter.

request_rate_limiter.lua

```lua
local tokens_key = KEYS[1]
local timestamp_key = KEYS[2]
--redis.log(redis.LOG_WARNING, "tokens_key " .. tokens_key)

local rate = tonumber(ARGV[1])       -- tokens added per second
local capacity = tonumber(ARGV[2])   -- bucket size (maximum burst)
local now = tonumber(ARGV[3])        -- current time, in seconds
local requested = tonumber(ARGV[4])  -- tokens needed by this request

-- Seconds needed to fill an empty bucket; both keys expire after twice that.
local fill_time = capacity/rate
local ttl = math.floor(fill_time*2)

--redis.log(redis.LOG_WARNING, "rate " .. ARGV[1])
--redis.log(redis.LOG_WARNING, "capacity " .. ARGV[2])
--redis.log(redis.LOG_WARNING, "now " .. ARGV[3])
--redis.log(redis.LOG_WARNING, "requested " .. ARGV[4])
--redis.log(redis.LOG_WARNING, "filltime " .. fill_time)
--redis.log(redis.LOG_WARNING, "ttl " .. ttl)

-- A missing key means the bucket is full.
local last_tokens = tonumber(redis.call("get", tokens_key))
if last_tokens == nil then
  last_tokens = capacity
end
--redis.log(redis.LOG_WARNING, "last_tokens " .. last_tokens)

local last_refreshed = tonumber(redis.call("get", timestamp_key))
if last_refreshed == nil then
  last_refreshed = 0
end
--redis.log(redis.LOG_WARNING, "last_refreshed " .. last_refreshed)

-- Refill for the elapsed time (capped at capacity), then try to take the requested tokens.
local delta = math.max(0, now-last_refreshed)
local filled_tokens = math.min(capacity, last_tokens+(delta*rate))
local allowed = filled_tokens >= requested
local new_tokens = filled_tokens
local allowed_num = 0
if allowed then
  new_tokens = filled_tokens - requested
  allowed_num = 1
end

--redis.log(redis.LOG_WARNING, "delta " .. delta)
--redis.log(redis.LOG_WARNING, "filled_tokens " .. filled_tokens)
--redis.log(redis.LOG_WARNING, "allowed_num " .. allowed_num)
--redis.log(redis.LOG_WARNING, "new_tokens " .. new_tokens)

if ttl > 0 then
  redis.call("setex", tokens_key, ttl, new_tokens)
  redis.call("setex", timestamp_key, ttl, now)
end

-- return { allowed_num, new_tokens, capacity, filled_tokens, requested, new_tokens }
return { allowed_num, new_tokens }
```

This was also my first contact with Lua. Lua is used heavily in OpenResty, so if you want to use OpenResty well there is no way around it; you just have to work through it.
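The script is pure arithmetic around two GET and two SETEX calls, so its logic can be mirrored in plain Java and checked without a Redis server. The sketch below uses a hypothetical TokenBucketStep.evaluate. The values the script would read from tokens_key and timestamp_key are passed in, with null standing for a missing key. The new values are returned instead of written.

```java
/**
 * Hypothetical pure-Java mirror of request_rate_limiter.lua.
 * Inputs the script reads with GET are parameters; outputs it writes with SETEX are fields.
 */
final class TokenBucketStep {
    final boolean allowed;   // allowed_num == 1
    final double newTokens;  // value written back to tokens_key
    final long ttlSeconds;   // expiry applied to tokens_key and timestamp_key

    private TokenBucketStep(boolean allowed, double newTokens, long ttlSeconds) {
        this.allowed = allowed;
        this.newTokens = newTokens;
        this.ttlSeconds = ttlSeconds;
    }

    static TokenBucketStep evaluate(double rate, double capacity, long now, double requested,
                                    Double lastTokens, Long lastRefreshed) {
        double fillTime = capacity / rate;                               // seconds to fill an empty bucket
        long ttl = (long) Math.floor(fillTime * 2);
        double last = lastTokens == null ? capacity : lastTokens;        // missing key: full bucket
        long refreshed = lastRefreshed == null ? 0L : lastRefreshed;     // missing key: time 0
        long delta = Math.max(0L, now - refreshed);
        double filled = Math.min(capacity, last + delta * rate);         // refill, capped at capacity
        boolean allowed = filled >= requested;
        double newTokens = allowed ? filled - requested : filled;
        return new TokenBucketStep(allowed, newTokens, ttl);
    }
}
```

Use the parameters from the main method further below (rate 1, capacity 50, 1 token per request). With these, 50 requests in the same second are allowed and the 51st is rejected. One second later, a single token has been refilled. Because now is passed in whole seconds, refills also happen in whole-second steps. The script rewrites timestamp_key on every call, whether or not the request is allowed.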

dependency (Gradle)

```groovy
// lettuce
implementation group: 'io.lettuce', name: 'lettuce-core', version: '6.1.4.RELEASE'
```

main method

```java
import io.lettuce.core.RedisClient;
import io.lettuce.core.ScriptOutputType;
import io.lettuce.core.api.StatefulRedisConnection;
import lombok.extern.slf4j.Slf4j;

import java.io.ByteArrayOutputStream;
import java.io.InputStream;
import java.time.Instant;
import java.util.Arrays;
import java.util.List;
import java.util.Objects;

@Slf4j
public class RateLimiter {

    private volatile StatefulRedisConnection<String, String> connection;
    private volatile String scriptSha1;

    private final String uri;
    // How many requests per second do you want a user to be allowed to do?
    private final int replenishRate;
    // How much bursting do you want to allow?
    private final int burstCapacity;
    // How many tokens are requested per request?
    private final int requestedTokens;

    public RateLimiter(String uri, int replenishRate, int burstCapacity, int requestedTokens) {
        this.uri = uri;
        this.replenishRate = replenishRate;
        this.burstCapacity = burstCapacity;
        this.requestedTokens = requestedTokens;
        getConnection();
        getScriptSha1();
    }

    public boolean acquire(String id) {
        List<String> keys = getKeys(id);
        // The arguments to the Lua script; the timestamp is Unix time in seconds.
        List<String> scriptArgs = Arrays.asList(String.valueOf(replenishRate), String.valueOf(burstCapacity),
                String.valueOf(Instant.now().getEpochSecond()), String.valueOf(requestedTokens));
        // allowed, tokens_left = redis.eval(SCRIPT, keys, args)
        List<Long> result = execute(keys, scriptArgs);
        return !result.isEmpty() && result.get(0) == 1L;
    }

    private List<String> getKeys(String id) {
        // Use `{}` around the id so both keys share a Redis Cluster hash tag (same slot).
        // Make a unique key per user; the token bucket needs two Redis keys.
        String prefix = "request_rate_limiter.{" + id;
        String tokenKey = prefix + "}.tokens";
        String timestampKey = prefix + "}.timestamp";
        return Arrays.asList(tokenKey, timestampKey);
    }

    private List<Long> execute(List<String> keys, List<String> scriptArgs) {
        try {
            String sha1 = getScriptSha1();
            if (sha1.isEmpty()) {
                return Arrays.asList(1L, -1L);   // script not loaded: fail open (allow)
            }
            return getConnection().sync().evalsha(sha1, ScriptOutputType.MULTI,
                    keys.toArray(new String[0]), scriptArgs.toArray(new String[0]));
        } catch (Exception e) {
            log.warn("Error in requesting rate limit information", e);
            return Arrays.asList(1L, -1L);       // Redis error: fail open (allow)
        }
    }

    /** Double-checked locking (DCL): connect lazily, exactly once. */
    private StatefulRedisConnection<String, String> getConnection() {
        StatefulRedisConnection<String, String> connection = this.connection;
        if (Objects.isNull(connection)) {
            synchronized (this) {
                connection = this.connection;
                if (Objects.isNull(connection)) {
                    RedisClient redisClient = RedisClient.create(this.uri);
                    connection = redisClient.connect();
                    this.connection = connection;
                }
            }
        }
        return connection;
    }

    /** DCL for the script SHA1; a failed load ("") is not cached, so the next call retries. */
    private String getScriptSha1() {
        String sha1 = this.scriptSha1;
        if (Objects.isNull(sha1)) {
            synchronized (this) {
                sha1 = this.scriptSha1;
                if (Objects.isNull(sha1)) {
                    sha1 = doLoadScript();
                    if (!sha1.isEmpty()) {
                        this.scriptSha1 = sha1;
                    }
                }
            }
        }
        return sha1;
    }

    /** Reads request_rate_limiter.lua from the classpath and registers it with SCRIPT LOAD. */
    private String doLoadScript() {
        try (InputStream inputStream = getClass().getResourceAsStream("/request_rate_limiter.lua");
             ByteArrayOutputStream baos = new ByteArrayOutputStream()) {
            if (Objects.isNull(inputStream)) {
                return "";
            }
            byte[] buffer = new byte[256];
            int len;
            while ((len = inputStream.read(buffer)) != -1) {
                baos.write(buffer, 0, len);
            }
            return getConnection().sync().scriptLoad(baos.toByteArray());
        } catch (Exception e) {
            log.warn("doLoadScript failed", e);
            return "";
        }
    }

    public static void main(String[] args) throws InterruptedException {
        int count = 0;
        // replenishRate = 1 token/s, burstCapacity = 50, 1 token per request
        RateLimiter rateLimiter = new RateLimiter("redis://:civic@localhost/10", 1, 50, 1);
        while (true) {
            boolean acquired = rateLimiter.acquire("xxx");
            if (acquired) {
                log.info("{} obtain {}", Thread.currentThread(), System.nanoTime());
                // After 100 permits, pause 60 s so the bucket can refill
                if (++count > 100) {
                    Thread.sleep(60000);
                    count = 0;
                }
            }
        }
    }
}
```

flow chart

The process is relatively simple:

- Create the RateLimiter: initialize the RedisClient, obtain a StatefulRedisConnection and load the script into Redis.

- Build the keys and arguments, call Lettuce's evalsha method to run the rate-limiting Lua script, and read the response.

- Decide whether the request is permitted from allowed_num, the first element of the returned { allowed_num, new_tokens }.

summary

Testing shows the following:

- The Redis Lua algorithm behaves the same way as Eureka's single-node algorithm: both are token buckets.

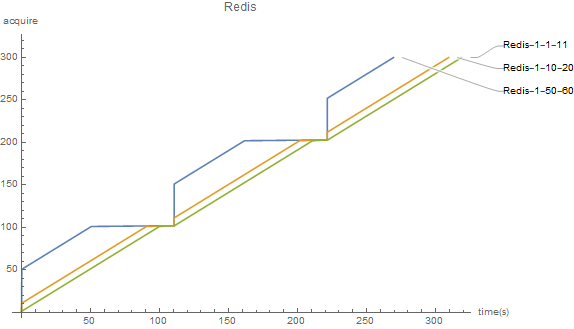

In the figure, the first number is averageRate, the second is burstSize and the third is the sleep time. For example, Eureka-1-50-60 means averageRate=1 (acquire/s), burstSize=50 and sleepTime=60 (s).

- The Redis rate limiter relies on the atomicity of Lua script execution for synchronization: Redis runs the whole script without interleaving commands from other clients.