Preface

The previous post summarized CountDownLatch and CyclicBarrier. The concurrency control underneath both of them relies on AQS (AbstractQueuedSynchronizer). In day-to-day work there are few scenarios where you write a concurrency tool directly on top of AQS, so this post does not go through the AQS source in detail. It only summarizes where AQS is used and the basic principles behind it.

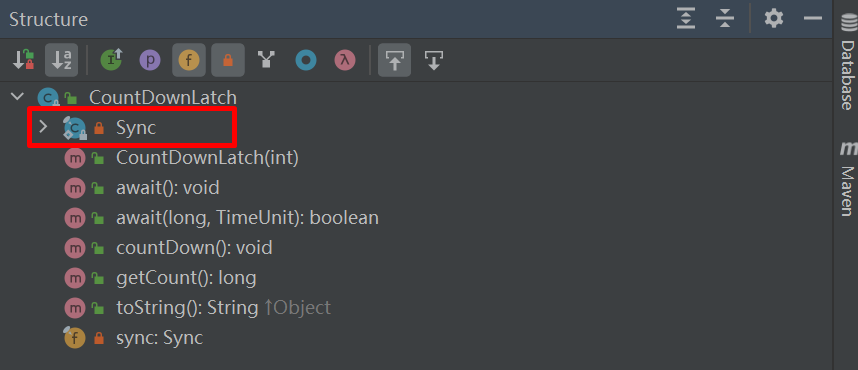

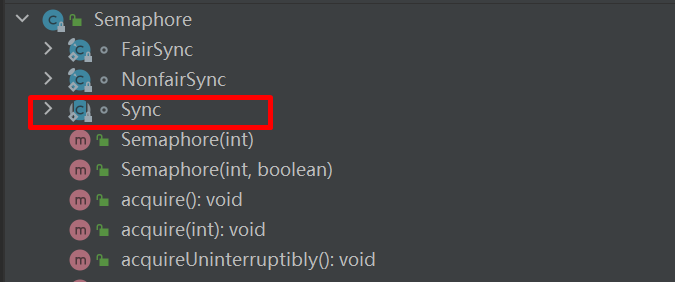

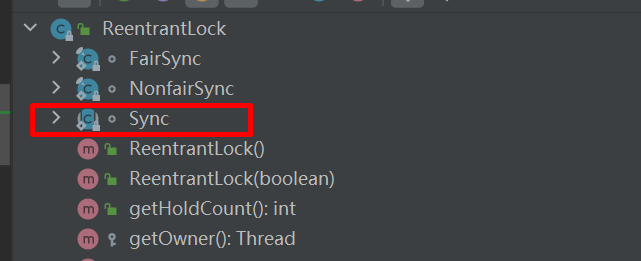

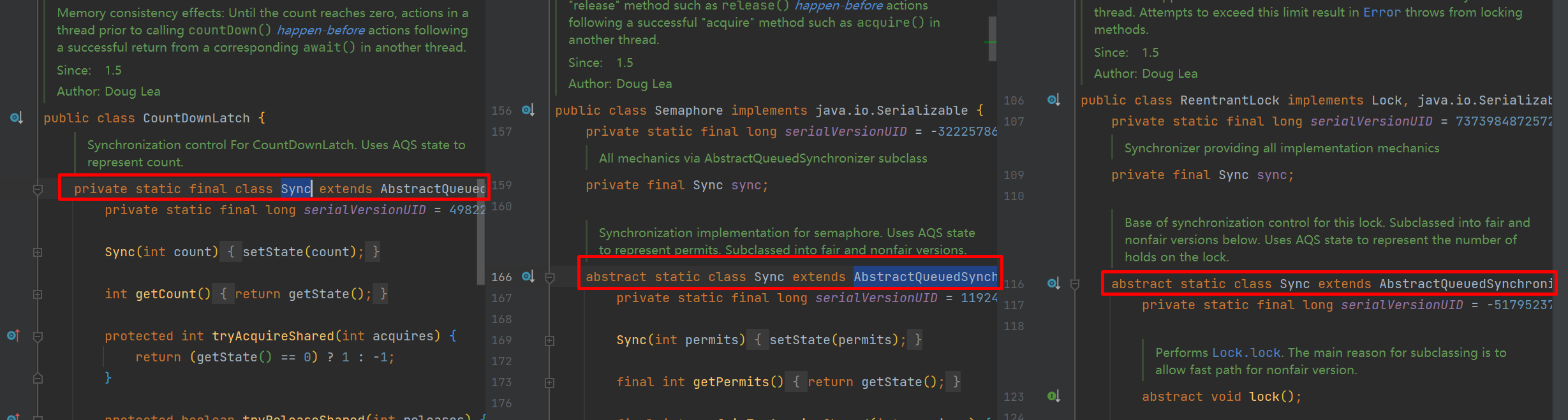

The thread-collaboration tools summarized earlier, such as CountDownLatch, Semaphore and ReentrantLock, share a common feature: each has something like an internal gate that controls how many threads may pass and when they may pass.

A Sync inner class in every tool

Looking at the source structure of these tools, we see the following:

CyclicBarrier implements its thread collaboration through ReentrantLock. The other three (CountDownLatch, Semaphore and ReentrantLock) each contain an inner class Sync, and that class extends AbstractQueuedSynchronizer.

Simple principles of AQS

We will not analyze the source code of AQS line by line; we will only outline how it works.

Three core parts

AQS has three core parts, and they are the key to understanding how it works.

state variable

/**

* The synchronization state.

*/

private volatile int state;

A volatile variable that is the key to controlling concurrency. Its meaning differs between implementation classes. For example, in Semaphore, state means "the number of permits remaining"; in CountDownLatch, state means "the count that still has to be counted down"; in ReentrantLock, state is the number of holds on the lock (it grows with each reentrant acquisition), and a value of 0 means the lock is not held by any thread.

volatile guarantees visibility but not the atomicity of a read-modify-write, so every modification of state still has to be made thread-safe. For this, the AQS abstract class provides a method that updates the variable via CAS:

/**

* Atomically sets synchronization state to the given updated

* value if the current state value equals the expected value.

* This operation has memory semantics of a {@code volatile} read

* and write.

*

* @param expect the expected value

* @param update the new value

* @return {@code true} if successful. False return indicates that the actual

* value was not equal to the expected value.

*/

protected final boolean compareAndSetState(int expect, int update) {

// See below for intrinsics setup to support this

return unsafe.compareAndSwapInt(this, stateOffset, expect, update);

}
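To make the CAS retry pattern concrete, here is a minimal sketch. `PermitCounter` and `PermitCounterDemo` are hypothetical names invented for this illustration and are not part of the JDK; only `getState`, `setState` and `compareAndSetState` come from AQS.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical class for illustration only; not a JDK class.
class PermitCounter extends AbstractQueuedSynchronizer {
    PermitCounter(int permits) {
        setState(permits); // plain volatile write, fine during construction
    }

    // Takes one permit if any is left, retrying whenever another thread wins the CAS.
    boolean tryTake() {
        for (;;) {
            int current = getState();              // volatile read
            if (current == 0) {
                return false;                      // nothing left to take
            }
            if (compareAndSetState(current, current - 1)) {
                return true;                       // our update was applied atomically
            }
            // CAS failed: state changed in between, so re-read and retry
        }
    }

    int remaining() {
        return getState();
    }
}

class PermitCounterDemo {
    public static void main(String[] args) throws InterruptedException {
        PermitCounter counter = new PermitCounter(1000);
        Thread[] workers = new Thread[4];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < 250; j++) {
                    counter.tryTake();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        System.out.println(counter.remaining()); // prints 0: 4 * 250 takes use up all 1000 permits
    }
}
```

With a plain `setState(getState() - 1)` instead of the CAS loop, two threads could read the same value and one decrement would be lost.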

FIFO queue

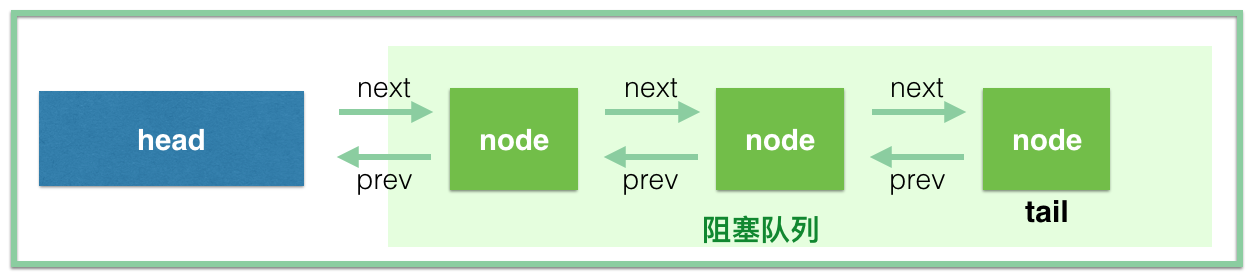

AQS maintains a queue that holds the threads waiting to acquire the lock. When the lock is released, AQS picks a suitable thread from this FIFO queue to acquire it. The inner class Node in AQS is the element of this queue, which is maintained as a doubly linked list.

static final class Node {

/** Marker to indicate a node is waiting in shared mode */

static final Node SHARED = new Node();

/** Marker to indicate a node is waiting in exclusive mode */

static final Node EXCLUSIVE = null;

/** waitStatus value to indicate thread has cancelled */

static final int CANCELLED = 1;

/** waitStatus value to indicate successor's thread needs unparking */

static final int SIGNAL = -1;

/** waitStatus value to indicate thread is waiting on condition */

static final int CONDITION = -2;

/**

* waitStatus value to indicate the next acquireShared should

* unconditionally propagate

*/

static final int PROPAGATE = -3;

/**

* Status field, taking on only the values:

* SIGNAL: The successor of this node is (or will soon be)

* blocked (via park), so the current node must

* unpark its successor when it releases or

* cancels. To avoid races, acquire methods must

* first indicate they need a signal,

* then retry the atomic acquire, and then,

* on failure, block.

* CANCELLED: This node is cancelled due to timeout or interrupt.

* Nodes never leave this state. In particular,

* a thread with cancelled node never again blocks.

* CONDITION: This node is currently on a condition queue.

* It will not be used as a sync queue node

* until transferred, at which time the status

* will be set to 0. (Use of this value here has

* nothing to do with the other uses of the

* field, but simplifies mechanics.)

* PROPAGATE: A releaseShared should be propagated to other

* nodes. This is set (for head node only) in

* doReleaseShared to ensure propagation

* continues, even if other operations have

* since intervened.

* 0: None of the above

*

* The values are arranged numerically to simplify use.

* Non-negative values mean that a node doesn't need to

* signal. So, most code doesn't need to check for particular

* values, just for sign.

*

* The field is initialized to 0 for normal sync nodes, and

* CONDITION for condition nodes. It is modified using CAS

* (or when possible, unconditional volatile writes).

*/

volatile int waitStatus;

/**

* Link to predecessor node that current node/thread relies on

* for checking waitStatus. Assigned during enqueuing, and nulled

* out (for sake of GC) only upon dequeuing. Also, upon

* cancellation of a predecessor, we short-circuit while

* finding a non-cancelled one, which will always exist

* because the head node is never cancelled: A node becomes

* head only as a result of successful acquire. A

* cancelled thread never succeeds in acquiring, and a thread only

* cancels itself, not any other node.

*/

volatile Node prev;

/**

* Link to the successor node that the current node/thread

* unparks upon release. Assigned during enqueuing, adjusted

* when bypassing cancelled predecessors, and nulled out (for

* sake of GC) when dequeued. The enq operation does not

* assign next field of a predecessor until after attachment,

* so seeing a null next field does not necessarily mean that

* node is at end of queue. However, if a next field appears

* to be null, we can scan prev's from the tail to

* double-check. The next field of cancelled nodes is set to

* point to the node itself instead of null, to make life

* easier for isOnSyncQueue.

*/

volatile Node next;

/**

* The thread that enqueued this node. Initialized on

* construction and nulled out after use.

*/

volatile Thread thread;

/**

* Link to next node waiting on condition, or the special

* value SHARED. Because condition queues are accessed only

* when holding in exclusive mode, we just need a simple

* linked queue to hold nodes while they are waiting on

* conditions. They are then transferred to the queue to

* re-acquire. And because conditions can only be exclusive,

* we save a field by using special value to indicate shared

* mode.

*/

Node nextWaiter;

/**

* Returns true if node is waiting in shared mode.

*/

final boolean isShared() {

return nextWaiter == SHARED;

}

/**

* Returns previous node, or throws NullPointerException if null.

* Use when predecessor cannot be null. The null check could

* be elided, but is present to help the VM.

*

* @return the predecessor of this node

*/

final Node predecessor() throws NullPointerException {

Node p = prev;

if (p == null)

throw new NullPointerException();

else

return p;

}

Node() { // Used to establish initial head or SHARED marker

}

Node(Thread thread, Node mode) { // Used by addWaiter

this.nextWaiter = mode;

this.thread = thread;

}

Node(Thread thread, int waitStatus) { // Used by Condition

this.waitStatus = waitStatus;

this.thread = thread;

}

}

The specific diagram is shown below (the picture is from the javadoop website)
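The queue itself is private to AQS, but some of its effects can be observed from outside. The sketch below is only an illustration under that assumption: `QueueInspectionDemo` and `observeQueue` are hypothetical names, while `ReentrantLock.getQueueLength()` is a real method that the JDK documents as an estimate.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class; it only observes the wait queue through ReentrantLock's public API.
class QueueInspectionDemo {
    // Returns the queue length seen while the calling thread holds the lock.
    static int observeQueue(int waiters) {
        ReentrantLock lock = new ReentrantLock();
        Thread[] threads = new Thread[waiters];
        int observed;
        lock.lock();                               // the caller owns the lock
        try {
            for (int i = 0; i < waiters; i++) {
                threads[i] = new Thread(() -> {
                    lock.lock();                   // cannot acquire, so the thread is enqueued and parked
                    lock.unlock();
                });
                threads[i].start();
            }
            // getQueueLength() is an estimate, so poll until every waiter has been enqueued
            while (lock.getQueueLength() < waiters) {
                Thread.yield();
            }
            observed = lock.getQueueLength();
        } finally {
            lock.unlock();                         // releasing lets the queued threads proceed one by one
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return observed;
    }

    public static void main(String[] args) {
        System.out.println(observeQueue(3)); // prints 3
    }
}
```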

Methods to acquire and release locks

The remaining key part is the methods for acquiring and releasing. They depend on the state variable, and their logic differs between the JUC flow-control tools. For example, in Semaphore, acquire obtains permits: it checks whether enough permits remain in state and, if so, subtracts the requested number from state. release returns permits, that is, it adds the given number to state. In CountDownLatch, await checks the value of state and blocks the thread if it is not zero, while countDown counts down by subtracting one from state. So the acquire and release logic is different for each tool.

There are several methods in AQS that can be implemented by subclasses

| Method | Effect |
|---|---|
| tryAcquire(int arg) | Try to acquire in exclusive mode |
| tryRelease(int arg) | Try to release in exclusive mode |
| tryAcquireShared(int arg) | Try to acquire in shared mode |
| tryReleaseShared(int arg) | Try to release in shared mode |
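The classes discussed in this post mostly use the shared pair of methods, so as a complement here is a minimal sketch of the exclusive pair. `SimpleMutex` and `SimpleMutexDemo` are hypothetical names; the design follows the well-known non-reentrant mutex pattern and is an illustration, not the implementation of any JDK lock.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical non-reentrant mutex: state 0 = unlocked, state 1 = locked.
class SimpleMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int ignored) {
            if (compareAndSetState(0, 1)) {        // only one thread can flip 0 -> 1
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;                          // AQS will enqueue and park the caller
        }

        @Override
        protected boolean tryRelease(int ignored) {
            if (getState() == 0 || getExclusiveOwnerThread() != Thread.currentThread()) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0);                           // the owner is the only writer here, so no CAS is needed
            return true;                           // tells AQS to wake the next queued thread
        }

        boolean isLocked() {
            return getState() != 0;
        }
    }

    private final Sync sync = new Sync();

    void lock()        { sync.acquire(1); }
    boolean tryLock()  { return sync.tryAcquire(1); }
    void unlock()      { sync.release(1); }
    boolean isLocked() { return sync.isLocked(); }
}

class SimpleMutexDemo {
    private static int counter = 0;

    public static void main(String[] args) throws InterruptedException {
        SimpleMutex mutex = new SimpleMutex();
        Thread[] workers = new Thread[4];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < 10_000; j++) {
                    mutex.lock();
                    try {
                        counter++;                 // protected by the mutex
                    } finally {
                        mutex.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        System.out.println(counter); // prints 40000
    }
}
```

Note that the subclass only decides whether an acquire or release succeeds; queueing, parking and waking are all inherited from AQS.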

Use of AQS in CountDownLatch

sync in CountDownLatch

The constructor for CountDownLatch is as follows

/**

* Constructs a {@code CountDownLatch} initialized with the given count.

*

* @param count the number of times {@link #countDown} must be invoked

* before threads can pass through {@link #await}

* @throws IllegalArgumentException if {@code count} is negative

*/

public CountDownLatch(int count) {

if (count < 0) throw new IllegalArgumentException("count < 0");

//Calls the constructor of the inner class Sync and passes in the count value

this.sync = new Sync(count);

}

The constructor calls the constructor of the inner class Sync and passes in the count value.

The source of the inner class Sync in CountDownLatch is shown below

private static final class Sync extends AbstractQueuedSynchronizer {

private static final long serialVersionUID = 4982264981922014374L;

//Initialize, set the value of state

Sync(int count) {

setState(count);

}

int getCount() {

return getState();

}

//Sync in CountDownLatch implements the tryAcquireShared method of AQS

//The logic of CountDownLatch: while state has not reached zero, this returns -1 and the thread enters the waiting queue

protected int tryAcquireShared(int acquires) {

return (getState() == 0) ? 1 : -1;

}

//CountDownLatch overrides tryReleaseShared of AQS

protected boolean tryReleaseShared(int releases) {

// Decrement count; signal when transition to zero

//Spin in a for loop until the CAS succeeds

for (;;) {

//Get the current state

int c = getState();

if (c == 0)

return false;

int nextc = c-1;//state-1

if (compareAndSetState(c, nextc))//Update the value of state by cas

return nextc == 0;//If the state becomes 0 after updating, it returns true

}

}

}

await in CountDownLatch

The await method for CountDownLatch is as follows

/**

* The await method of CountDownLatch calls acquireSharedInterruptibly of the internal AQS.

* A thread that calls it while state is not zero enters the wait queue and waits for state to become zero.

*/

public void await() throws InterruptedException {

sync.acquireSharedInterruptibly(1);

}

await directly calls the acquireSharedInterruptibly method of sync. This method is already implemented in AQS, so subclasses do not need to implement it themselves.

The acquireSharedInterruptibly source code in AQS is as follows

/**

* acquireSharedInterruptibly in AQS

*/

public final void acquireSharedInterruptibly(int arg)

throws InterruptedException {

if (Thread.interrupted())

throw new InterruptedException();

if (tryAcquireShared(arg) < 0)//CountDownLatch implements this method; it returns -1 while state is not 0

doAcquireSharedInterruptibly(arg);//Puts the waiting thread into the queue

}

/**

* acquireSharedInterruptibly above calls this method.

* There is no need to read it closely: it puts the waiting thread into the queue and parks it.

*/

private void doAcquireSharedInterruptibly(int arg)

throws InterruptedException {

final Node node = addWaiter(Node.SHARED);

boolean failed = true;

try {

for (;;) {

final Node p = node.predecessor();

if (p == head) {

int r = tryAcquireShared(arg);

if (r >= 0) {

setHeadAndPropagate(node, r);

p.next = null; // help GC

failed = false;

return;

}

}

if (shouldParkAfterFailedAcquire(p, node) &&

parkAndCheckInterrupt())//Suspends the thread through LockSupport.park

throw new InterruptedException();

}

} finally {

if (failed)

cancelAcquire(node);

}

}

You can see that the operation of putting a waiting thread into the queue is implemented in AQS, while the decision of when a thread has to queue is left to the subclass that uses AQS as a tool. The same idea can be seen in Semaphore and ReentrantLock.
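The interrupt handling in the code above can be observed from outside. The following sketch is only an illustration: `InterruptibleAwaitDemo` and `awaitIsInterrupted` are hypothetical names, and the latch is deliberately never counted down so that the waiting thread can only leave `await()` through an interrupt.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical demo: shows that a thread blocked in await() reacts to interrupt().
class InterruptibleAwaitDemo {
    static boolean awaitIsInterrupted() {
        CountDownLatch latch = new CountDownLatch(1);      // never counted down in this demo
        AtomicBoolean sawInterrupt = new AtomicBoolean(false);
        Thread waiter = new Thread(() -> {
            try {
                latch.await();                             // state != 0, so the thread queues and parks
            } catch (InterruptedException e) {
                sawInterrupt.set(true);                    // thrown by acquireSharedInterruptibly
            }
        });
        waiter.start();
        // Whether this arrives before or during the park, await() ends with InterruptedException
        waiter.interrupt();
        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sawInterrupt.get();
    }

    public static void main(String[] args) {
        System.out.println(awaitIsInterrupted()); // prints true
    }
}
```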

countDown method in CountDownLatch

public void countDown() {

sync.releaseShared(1);

}

releaseShared method in AQS

public final boolean releaseShared(int arg) {

//The if condition calls CountDownLatch's own implementation of tryReleaseShared; see the comments in the code above

if (tryReleaseShared(arg)) {

doReleaseShared();//Implemented in AQS: wakes the first waiting thread, and in shared mode the wake-up propagates to the waiters behind it

return true;

}

return false;

}
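The effect of `tryReleaseShared` on state can be watched through `getCount()`. This is a small sketch, with `LatchStateDemo` and `countsAfterEachCountDown` as hypothetical names; it also shows that an extra `countDown()` after zero leaves the count unchanged, which matches the `c == 0` branch in the source above.

```java
import java.util.Arrays;
import java.util.concurrent.CountDownLatch;

// Hypothetical demo: records the latch count after each countDown() call.
class LatchStateDemo {
    static long[] countsAfterEachCountDown(int initial, int calls) {
        CountDownLatch latch = new CountDownLatch(initial);
        long[] counts = new long[calls];
        for (int i = 0; i < calls; i++) {
            latch.countDown();                 // releaseShared(1) -> tryReleaseShared CAS loop
            counts[i] = latch.getCount();      // reads the AQS state
        }
        return counts;
    }

    public static void main(String[] args) {
        // Two real decrements, then one call that finds state already at 0
        System.out.println(Arrays.toString(countsAfterEachCountDown(2, 3))); // prints [1, 0, 0]
    }
}
```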

Use of AQS in Semaphore

From here on, the related AQS code is not repeated.

When introducing Semaphore, we said that it can be created as fair or unfair. Semaphore therefore contains two AQS implementations, NonfairSync (unfair) and FairSync (fair), which are used in a way similar to CountDownLatch.

acquire in Semaphore

public void acquire() throws InterruptedException {

sync.acquireSharedInterruptibly(1);//This in turn calls the tryAcquireShared method implemented by Sync in Semaphore

}

Semaphore has both a fair and an unfair implementation. Take the unfair one as an example first

//java.util.concurrent.Semaphore.NonfairSync#tryAcquireShared

protected int tryAcquireShared(int acquires) {

//Delegates to the nonfairTryAcquireShared method of the inner class Sync in Semaphore

return nonfairTryAcquireShared(acquires);

}

final int nonfairTryAcquireShared(int acquires) {

//spin

for (;;) {

int available = getState();

//Subtract the requested number of permits from state; the CAS below applies the update

int remaining = available - acquires;

if (remaining < 0 ||

compareAndSetState(available, remaining))

return remaining;

}

}

release in Semaphore

The source in Semaphore is as follows. Because the fair and unfair versions release permits in the same way, Semaphore places the implementation in the inner class Sync

//java.util.concurrent.Semaphore#release(int)

public void release(int permits) {

if (permits < 0) throw new IllegalArgumentException();

//This calls releaseShared in AQS, which in turn calls tryReleaseShared in Semaphore

sync.releaseShared(permits);

}

//java.util.concurrent.Semaphore.Sync#tryReleaseShared

//As you can see, this is a simple spin + CAS operation that sets state = state + releases

protected final boolean tryReleaseShared(int releases) {

for (;;) {

int current = getState();

int next = current + releases;

if (next < current) // overflow

throw new Error("Maximum permit count exceeded");

if (compareAndSetState(current, next))

return true;

}

}
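The arithmetic on state described above can be checked with the public Semaphore API. This is a small single-threaded sketch; `SemaphorePermitsDemo` and `run` are hypothetical names, and the non-blocking `tryAcquire(int)` is used so that the failing case returns instead of queueing.

```java
import java.util.Arrays;
import java.util.concurrent.Semaphore;

// Hypothetical demo: follows the permit count (the AQS state) through acquire and release.
class SemaphorePermitsDemo {
    // Returns {permits after acquire, 1 if the second acquire succeeded else 0, permits after release}
    static int[] run() {
        Semaphore semaphore = new Semaphore(3);            // unfair by default; new Semaphore(3, true) is fair
        semaphore.acquireUninterruptibly(2);               // state: 3 -> 1
        int afterAcquire = semaphore.availablePermits();
        boolean gotTwoMore = semaphore.tryAcquire(2);      // remaining would be -1, so this fails
        semaphore.release(2);                              // state: 1 -> 3
        int afterRelease = semaphore.availablePermits();
        return new int[] { afterAcquire, gotTwoMore ? 1 : 0, afterRelease };
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run())); // prints [1, 0, 3]
    }
}
```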

Implementing your own collaboration tool with AQS

An inner class Sync extends AbstractQueuedSynchronizer

import java.util.concurrent.locks.AbstractQueuedSynchronizer;

/**

* author:liman

* createtime:2021/11/30

* comment:A simple AQS example

* A bare-bones collaboration tool implemented with AQS

*/

public class SelfCountDownLatchDemo {

private final Sync sync = new Sync();

public void await() {

sync.acquireShared(0);

}

public void signal() {

sync.releaseShared(0);

}

/**

* The inner class extends AQS and implements the shared acquire and release methods

*/

private class Sync extends AbstractQueuedSynchronizer {

@Override

protected int tryAcquireShared(int arg) {

return (getState() == 1) ? 1 : -1;

}

@Override

protected boolean tryReleaseShared(int arg) {

setState(1);

return true;

}

}

public static void main(String[] args) throws InterruptedException {

SelfCountDownLatchDemo selfCountDownLatchDemo = new SelfCountDownLatchDemo();

for (int i = 0; i < 10; i++) {

new Thread(()->{

System.out.println(Thread.currentThread().getName()+" tries to pass the custom latch......");

selfCountDownLatchDemo.await();

System.out.println(Thread.currentThread().getName()+" continues running");

}).start();

}

//Main thread sleeps for 5 seconds

Thread.sleep(5000);

selfCountDownLatchDemo.signal();//Open the latch and wake up all waiting threads

//The latch is already open, so this thread is not blocked and runs straight through

new Thread(()->{

System.out.println(Thread.currentThread().getName()+" tries to pass the custom latch......");

selfCountDownLatchDemo.await();

System.out.println(Thread.currentThread().getName()+" continues running");

},"main-sub-thread").start();

}

}

Run Results

Summary

AQS provides the core components (the state variable, the FIFO queue, and the acquire/release framework); subclasses implement the specified methods to build their own thread-collaboration logic. The next article summarizes Future and Callable.

References

javadoop - A line-by-line source analysis of AbstractQueuedSynchronizer (1)

javadoop - A line-by-line source analysis of AbstractQueuedSynchronizer (2)

javadoop - A line-by-line source analysis of AbstractQueuedSynchronizer (3)