Hello, I'm Dabin. I recently read through a lot of interview write-ups, so I took the time to summarize the common Java concurrent programming interview questions and share them with you here~

First, let me share a GitHub repository with more than 200 classic computer science books, covering C, C++, Java, Python, front end, databases, operating systems, computer networks, data structures and algorithms, machine learning, programming life, and more. Feel free to star it. The next time you are looking for a book you can search the repository directly, and it is updated continuously~

GitHub address: https://github.com/Tyson0314/java-books

If GitHub is not accessible, you can use the Gitee repository instead.

Gitee address: https://gitee.com/tysondai/java-books

Thread pool

Thread pool: a pool that manages threads.

Why use thread pools?

- Reduce resource consumption. Reduce the consumption caused by thread creation and destruction by reusing the created threads.

- Improve response speed. When the task arrives, it can be executed immediately without waiting for the thread to be created.

- Improve thread manageability. Manage threads in a unified way to prevent the system from creating a large number of similar threads and consuming memory.

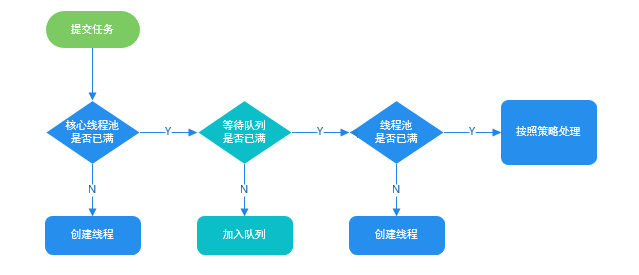

How does a thread pool execute tasks?

Creating a new thread requires acquiring a global lock, so the thread pool is designed to avoid that lock as much as possible. Once the ThreadPoolExecutor has warmed up (the number of running threads is greater than or equal to corePoolSize), most submitted tasks are placed in the BlockingQueue instead of triggering the creation of a new thread.

An analogy helps to visualize how a thread pool executes tasks:

- Core threads are compared to regular employees of the company

- Non core threads are compared to outsourced employees

- Blocking queues are compared to demand pools

- Submitting a task is like raising a demand
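The order "core threads first, then the queue, then non-core threads" can be observed directly. The following is a minimal sketch, not taken from the original article: the class name PoolFlowDemo and the sizes (2 core threads, 4 maximum threads, a queue of capacity 2) are assumptions chosen only for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolFlowDemo {
    /** Submits `tasks` blocking tasks and returns {current pool size, queued task count}. */
    static int[] submitAndInspect(int tasks) {
        // Assumed sizes for illustration: corePoolSize=2, maximumPoolSize=4, queue capacity=2.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4, 30L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(2));
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until the pool has been inspected
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return new int[] {pool.getPoolSize(), pool.getQueue().size()};
        } finally {
            release.countDown(); // let all tasks finish
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Up to 6 tasks fit: 2 on core threads, 2 in the queue, 2 on non-core threads.
        for (int tasks = 1; tasks <= 6; tasks++) {
            int[] r = submitAndInspect(tasks);
            System.out.println(tasks + " tasks -> threads=" + r[0] + ", queued=" + r[1]);
        }
    }
}
```

With these sizes, the third and fourth tasks wait in the queue, and only the fifth and sixth cause non-core threads (the "outsourced employees") to be created.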

What are the thread pool parameters?

General constructor for ThreadPoolExecutor:

public ThreadPoolExecutor(int corePoolSize, int maximumPoolSize, long keepAliveTime, TimeUnit unit, BlockingQueue<Runnable> workQueue, ThreadFactory threadFactory, RejectedExecutionHandler handler);

- corePoolSize: when a new task arrives and the number of threads in the pool is below the core size, a new thread is created to run the task; otherwise the task is placed in the blocking queue. If the number of live threads in the pool stays above corePoolSize for long periods, consider increasing corePoolSize.

- maximumPoolSize: when the blocking queue is full and the number of threads in the pool is below the maximum, a new thread is created to run the task; otherwise the new task is handled by the rejection policy. Non-core threads are like temporarily borrowed resources: they should exit once they have been idle longer than keepAliveTime, to avoid wasting resources.

- BlockingQueue: stores tasks waiting to run.

- keepAliveTime: how long a non-core thread stays alive after it becomes idle. By default this parameter only applies to non-core threads. Setting it to 0 means that surplus idle threads are terminated immediately.

- TimeUnit: the time unit of keepAliveTime. Possible values: TimeUnit.DAYS, TimeUnit.HOURS, TimeUnit.MINUTES, TimeUnit.SECONDS, TimeUnit.MILLISECONDS, TimeUnit.MICROSECONDS, TimeUnit.NANOSECONDS.

- ThreadFactory: every thread the pool creates is produced by the thread factory. ThreadFactory defines a single method, newThread, which is called whenever the pool needs to create a new thread.

    public class MyThreadFactory implements ThreadFactory {
        private final String poolName;

        public MyThreadFactory(String poolName) {
            this.poolName = poolName;
        }

        public Thread newThread(Runnable runnable) {
            // Pass the pool name to the constructor to distinguish the threads of different pools.
            // MyAppThread is a custom Thread subclass that is not shown here.
            return new MyAppThread(runnable, poolName);
        }
    }

- RejectedExecutionHandler: when both the queue and the thread pool are full, new tasks are handled by the rejection policy:

  - AbortPolicy: the default policy; throws RejectedExecutionException directly.
  - DiscardPolicy: discards the task silently without processing it.
  - DiscardOldestPolicy: discards the task at the head of the queue and then executes the current task.
  - CallerRunsPolicy: the task is run by the thread that submitted it.
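To make the rejection policies concrete, here is a small sketch that saturates a pool and then submits one more task. The class name RejectionDemo, the helper saturatedPool and the sizes (one thread, one queue slot) are assumptions made for this example only.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

class RejectionDemo {
    // Builds a pool with one thread and a one-slot queue, then fills both with blocked tasks.
    private static ThreadPoolExecutor saturatedPool(RejectedExecutionHandler handler,
                                                    CountDownLatch release) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(1),
                Executors.defaultThreadFactory(),
                handler);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        pool.execute(blocker); // occupies the only thread
        pool.execute(blocker); // occupies the only queue slot
        return pool;
    }

    /** Returns true if AbortPolicy rejects a task submitted to the saturated pool. */
    static boolean abortPolicyRejects() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = saturatedPool(new ThreadPoolExecutor.AbortPolicy(), release);
        try {
            pool.execute(() -> { });
            return false;
        } catch (RejectedExecutionException e) {
            return true;
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    /** Returns the name of the thread that ran the overflow task under CallerRunsPolicy. */
    static String callerRunsThreadName() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = saturatedPool(new ThreadPoolExecutor.CallerRunsPolicy(), release);
        AtomicReference<String> ranOn = new AtomicReference<>();
        try {
            pool.execute(() -> ranOn.set(Thread.currentThread().getName()));
            return ranOn.get();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("AbortPolicy rejected: " + abortPolicyRejects());
        System.out.println("CallerRunsPolicy ran on: " + callerRunsThreadName());
    }
}
```

CallerRunsPolicy slows the submitter down instead of dropping work, which acts as a simple form of back-pressure.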

How to set the thread pool size?

If the thread pool has too few threads, the system responds slowly when a large number of requests arrive, which hurts the user experience; tasks may even pile up in the task queue until the JVM runs out of memory (OOM).

If the thread pool has too many threads, many threads compete for CPU time at once, which causes heavy context switching (the CPU allocates time slices to threads and saves a thread's state when its slice is used up so that it can resume next time). This increases the execution time of each thread and lowers overall efficiency.

CPU-intensive tasks (N+1): these tasks mainly consume CPU resources. The number of threads can be set to N (the number of CPU cores) + 1. The extra thread compensates for occasional pauses (a thread blocking on an I/O operation, waiting for a lock, or sleeping). When a thread blocks it releases the CPU, and the extra thread can then make use of the otherwise idle CPU time.

I/O-intensive tasks (2N): the system spends most of its time on I/O operations, and a thread waiting for I/O is blocked and releases the CPU, which can then be handed to other threads. For I/O-intensive workloads we can therefore configure more threads. The calculation is: optimal number of threads = number of CPU cores * (1 / CPU utilization) = number of CPU cores * (1 + (I/O time / CPU time)). In general it can be set to 2N.
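The two rules of thumb are easy to turn into code. This is only a sketch of the formulas above; the class and method names (PoolSizing, cpuBound, ioBound) are made up for the example, and real sizing should be confirmed by measurement.

```java
class PoolSizing {
    /** CPU-intensive rule of thumb: N + 1. */
    static int cpuBound(int cores) {
        return cores + 1;
    }

    /** I/O-intensive formula: N * (1 + ioTime / cpuTime). */
    static int ioBound(int cores, double ioTime, double cpuTime) {
        return (int) Math.round(cores * (1 + ioTime / cpuTime));
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("cores = " + cores);
        System.out.println("CPU-intensive pool size = " + cpuBound(cores));
        // When I/O time equals CPU time, the formula gives exactly 2N.
        System.out.println("I/O-intensive pool size = " + ioBound(cores, 1.0, 1.0));
    }
}
```

For example, with 8 cores and tasks that wait on I/O three times as long as they compute, the formula gives 8 * (1 + 3) = 32 threads.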

What are the types of thread pools? Applicable scenarios?

Common thread pools include FixedThreadPool, SingleThreadExecutor, CachedThreadPool and ScheduledThreadPool. These are all ExecutorService instances.

FixedThreadPool

A thread pool with a fixed number of threads. At most nThreads threads are active at any point in time to execute tasks.

public static ExecutorService newFixedThreadPool(int nThreads) {

return new ThreadPoolExecutor(nThreads, nThreads, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>());

}

Because it uses the unbounded queue LinkedBlockingQueue (capacity Integer.MAX_VALUE), a running pool never rejects tasks, that is, RejectedExecutionHandler.rejectedExecution() is never called.

maximumPoolSize has no effect here, so it is set to the same value as corePoolSize.

keepAliveTime has no effect either and is set to 0L, because all threads in this pool are core threads, and core threads are not reclaimed (unless executor.allowCoreThreadTimeOut(true) is set).

Applicable scenario: CPU-intensive tasks. When worker threads keep the CPU busy for long periods, a fixed pool keeps the number of threads as small as possible, so it suits long-running tasks. Note that FixedThreadPool never rejects tasks, so a large backlog of tasks can lead to OOM.

SingleThreadExecutor

A thread pool with only one thread.

public static ExecutorService newSingleThreadExecutor() {

return new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>());

}

It uses the unbounded queue LinkedBlockingQueue. The pool has only one running thread. New tasks are put into the work queue, and after the thread finishes a task it loops to fetch the next one from the queue, which guarantees that tasks run sequentially.

Applicable scenario: serial execution of tasks, one at a time. A large backlog of tasks can also lead to OOM.

CachedThreadPool

A thread pool that creates new threads as needed.

public static ExecutorService newCachedThreadPool() {

return new ThreadPoolExecutor(0, Integer.MAX_VALUE, 60L, TimeUnit.SECONDS, new SynchronousQueue<Runnable>());

}

If the main thread submits tasks faster than the pool threads can process them, CachedThreadPool keeps creating new threads. In extreme cases this can exhaust CPU and memory resources.

A SynchronousQueue, which has no capacity, is used as the work queue. When the pool has idle threads, a task handed over via SynchronousQueue.offer(Runnable task) is processed by an idle thread; otherwise a new thread is created to process it.

Applicable scenario: running a large number of short-lived small tasks concurrently. CachedThreadPool allows up to Integer.MAX_VALUE threads, so a large number of threads may be created, resulting in OOM.

ScheduledThreadPoolExecutor

Runs tasks after a given delay, or executes them periodically. It is rarely used in real projects because there are other options, such as Quartz.

The task queue, DelayQueue, wraps a PriorityQueue, which sorts the queued tasks. Tasks with an earlier time run first (that is, the ScheduledFutureTask with the smaller time field runs first). If the times are equal, the task submitted first runs first (the ScheduledFutureTask with the smaller sequenceNumber field runs first).

Steps for executing a periodic task:

- A thread takes a due ScheduledFutureTask from the DelayQueue (DelayQueue.take()). A task is due when its time is less than or equal to the current system time;

- The thread executes this ScheduledFutureTask;

- It sets the time field of the ScheduledFutureTask to the next execution time;

- It puts the ScheduledFutureTask with the updated time back into the DelayQueue (DelayQueue.add()).

Applicable scenarios: scenarios where tasks are executed periodically and the number of threads needs to be limited.
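A periodic task can be sketched with the public ScheduledExecutorService API. The class name ScheduledDemo and the chosen period are assumptions for this example; it only shows the usage pattern, not the internal queue described above.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ScheduledDemo {
    /** Runs a task every `periodMillis` ms until it has run at least `times` times. */
    static int runPeriodically(int times, long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        AtomicInteger runs = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(times);
        // Initial delay 0, then one execution per period.
        scheduler.scheduleAtFixedRate(() -> {
            runs.incrementAndGet();
            done.countDown();
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            scheduler.shutdownNow(); // cancels the periodic task
        }
        return runs.get();
    }

    public static void main(String[] args) {
        System.out.println("executions: " + runPeriodically(3, 100));
    }
}
```

The returned count can be slightly larger than the requested number, because one more execution may start before the scheduler is shut down.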

Processes and threads

A process is an application running in memory. Each process has its own independent memory space, and multiple threads can be started within one process.

A thread is a smaller unit of execution than a process: an independent flow of control within a process. A process can start multiple threads, and each thread can execute a different task concurrently.

Thread life cycle

New: the thread is built and start() has not been called yet.

Runnable: includes the ready and running states of the operating system.

Blocked: usually entered passively. The thread lost the competition for a resource (such as a monitor lock) and is suspended until the resource is released and it is woken up. A blocked thread gives up the CPU but keeps its memory.

Waiting: a thread in this state waits for another thread to perform a specific action (a notification or an interrupt).

TIMED_WAITING: unlike WAITING, the thread returns by itself after a specified time.

Terminated: the thread has finished executing.

Image source: The Art of Java Concurrent Programming
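Some of these states can be observed with Thread.getState(). The sketch below is an illustration only (the class name ThreadStateDemo is made up); it captures NEW, TIMED_WAITING and TERMINATED for a thread that sleeps until it is interrupted.

```java
import java.util.ArrayList;
import java.util.List;

class ThreadStateDemo {
    /** Records the states of a sleeping thread before start, during sleep and after termination. */
    static List<Thread.State> observeStates() {
        List<Thread.State> seen = new ArrayList<>();
        Thread t = new Thread(() -> {
            try {
                Thread.sleep(60_000); // long sleep; ended early by the interrupt below
            } catch (InterruptedException e) {
                // interrupted on purpose, so the thread simply finishes
            }
        });
        seen.add(t.getState()); // NEW: created but start() not yet called
        t.start();
        while (t.getState() != Thread.State.TIMED_WAITING) {
            Thread.yield(); // wait until the thread is actually sleeping
        }
        seen.add(t.getState()); // TIMED_WAITING: inside Thread.sleep
        t.interrupt();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        seen.add(t.getState()); // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observeStates());
    }
}
```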

What about thread interrupts?

A thread interrupt means that a running thread is interrupted by another thread. The biggest difference from stop is that stop forcibly terminates the thread, while an interrupt only sends an interrupt signal to the target thread. If the target thread never checks for and handles the signal, it will not terminate. Whether it exits or executes other logic is up to the target thread.

There are three important methods related to thread interruption:

1. java.lang.Thread#interrupt

Calling interrupt() on the target thread sends it an interrupt signal and sets its interrupt flag.

2. java.lang.Thread#isInterrupted()

Checks whether the target thread has been interrupted. The interrupt flag is not cleared.

3. java.lang.Thread#interrupted

A static method that checks whether the current thread has been interrupted and clears the interrupt flag.

    private static void test2() {
        Thread thread = new Thread(() -> {
            while (true) {
                Thread.yield();

                // Respond to the interrupt
                if (Thread.currentThread().isInterrupted()) {
                    System.out.println("The Java technology stack thread was interrupted; exiting.");
                    return;
                }
            }
        });
        thread.start();
        thread.interrupt();
    }
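The difference between isInterrupted() and the static interrupted(), and the way a sleeping thread reacts to an interrupt, can be checked with a short sketch. The class and method names here (InterruptFlagDemo, flagSemantics, sleepingThreadIsWoken) are assumptions for illustration.

```java
import java.util.concurrent.atomic.AtomicBoolean;

class InterruptFlagDemo {
    /** Returns {isInterrupted(), first interrupted(), second interrupted()} for the current thread. */
    static boolean[] flagSemantics() {
        Thread.currentThread().interrupt();                      // set the flag on the current thread
        boolean first = Thread.currentThread().isInterrupted();  // true, flag is left untouched
        boolean second = Thread.interrupted();                   // true, and the flag is cleared
        boolean third = Thread.interrupted();                    // false, flag was already cleared
        return new boolean[] {first, second, third};
    }

    /** Returns true if a sleeping thread receives InterruptedException when interrupted. */
    static boolean sleepingThreadIsWoken() {
        AtomicBoolean caught = new AtomicBoolean();
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(60_000);
            } catch (InterruptedException e) {
                caught.set(true); // sleep ends early with an exception
            }
        });
        sleeper.start();
        sleeper.interrupt();
        try {
            sleeper.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return caught.get();
    }

    public static void main(String[] args) {
        boolean[] flags = flagSemantics();
        System.out.println(flags[0] + " " + flags[1] + " " + flags[2]);
        System.out.println("sleeper woken: " + sleepingThreadIsWoken());
    }
}
```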

What are the ways to create threads?

- Extend the Thread class

- Implement the Runnable interface

- Implement the Callable interface and wrap it in a FutureTask

- Use the Executor framework to create a thread pool

The code for creating a thread by extending Thread is as follows. The run() method is a callback that the JVM invokes after it has created the operating-system-level thread. It should not be called manually: calling it directly is just an ordinary method call on the current thread.

    /**
     * @author: Programmer Dabin
     * @time: 2021-09-11 10:15
     */
    public class MyThread extends Thread {
        public MyThread() {
        }

        @Override
        public void run() {
            for (int i = 0; i < 10; i++) {
                System.out.println(Thread.currentThread() + ":" + i);
            }
        }

        public static void main(String[] args) {
            MyThread mThread1 = new MyThread();
            MyThread mThread2 = new MyThread();
            MyThread myThread3 = new MyThread();
            mThread1.start();
            mThread2.start();
            myThread3.start();
        }
    }

Code for creating a thread with Runnable:

    /**
     * @author: Programmer Dabin
     * @time: 2021-09-11 10:04
     */
    public class RunnableTest {
        public static void main(String[] args) {
            Runnable1 r = new Runnable1();
            Thread thread = new Thread(r);
            thread.start();
            System.out.println("Main thread:[" + Thread.currentThread().getName() + "]");
        }
    }

    class Runnable1 implements Runnable {
        @Override
        public void run() {
            System.out.println("Current thread:" + Thread.currentThread().getName());
        }
    }

Advantages of implementing the Runnable interface over extending the Thread class:

- It avoids the limitation of single inheritance in Java

- Thread pools accept tasks that implement Runnable or Callable, so a Runnable task can be handed to a pool directly instead of being tied to a dedicated Thread subclass

Code for creating a thread with Callable:

    import java.util.concurrent.Callable;
    import java.util.concurrent.ExecutionException;
    import java.util.concurrent.FutureTask;

    /**
     * @author: Programmer Dabin
     * @time: 2021-09-11 10:21
     */
    public class CallableTest {
        public static void main(String[] args) {
            Callable1 c = new Callable1();

            // The result of the asynchronous calculation
            FutureTask<Integer> result = new FutureTask<>(c);

            new Thread(result).start();

            try {
                // Wait for the task to complete and return the result
                int sum = result.get();
                System.out.println(sum);
            } catch (InterruptedException | ExecutionException e) {
                e.printStackTrace();
            }
        }
    }

    class Callable1 implements Callable<Integer> {
        @Override
        public Integer call() throws Exception {
            int sum = 0;
            for (int i = 0; i <= 100; i++) {
                sum += i;
            }
            return sum;
        }
    }

Code for creating threads with an Executor:

    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    /**
     * @author: Programmer Dabin
     * @time: 2021-09-11 10:44
     */
    public class ExecutorsTest {
        public static void main(String[] args) {
            // Get the ExecutorService instance. Avoid this factory method in production;
            // create the thread pool manually instead.
            ExecutorService executorService = Executors.newCachedThreadPool();
            // Submit the task
            executorService.submit(new RunnableDemo());
            // Shut the pool down so that the JVM can exit promptly
            executorService.shutdown();
        }
    }

    class RunnableDemo implements Runnable {
        @Override
        public void run() {
            System.out.println("Dabin");
        }
    }

What is a thread deadlock?

Multiple threads are blocked at the same time, each (or some of them) waiting for a resource to be released. Because the threads are blocked indefinitely, the program cannot terminate normally.

For example, thread A holds resource 2 and thread B holds resource 1. Each requests the resource held by the other at the same time, so the two threads wait for each other and enter a deadlock state.

The following example illustrates thread deadlock. The code comes from The Beauty of Concurrent Programming.

    public class DeadLockDemo {
        private static Object resource1 = new Object(); // Resource 1
        private static Object resource2 = new Object(); // Resource 2

        public static void main(String[] args) {
            new Thread(() -> {
                synchronized (resource1) {
                    System.out.println(Thread.currentThread() + "get resource1");
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                    System.out.println(Thread.currentThread() + "waiting get resource2");
                    synchronized (resource2) {
                        System.out.println(Thread.currentThread() + "get resource2");
                    }
                }
            }, "Thread 1").start();

            new Thread(() -> {
                synchronized (resource2) {
                    System.out.println(Thread.currentThread() + "get resource2");
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                    System.out.println(Thread.currentThread() + "waiting get resource1");
                    synchronized (resource1) {
                        System.out.println(Thread.currentThread() + "get resource1");
                    }
                }
            }, "Thread 2").start();
        }
    }

The code output is as follows:

    Thread[Thread 1,5,main]get resource1
    Thread[Thread 2,5,main]get resource2
    Thread[Thread 1,5,main]waiting get resource2
    Thread[Thread 2,5,main]waiting get resource1

Thread A (Thread 1 in the output) acquires the monitor lock of resource1 via synchronized (resource1) and then sleeps for 1s via Thread.sleep(1000), giving thread B (Thread 2) the chance to run and acquire the monitor lock of resource2. When both threads wake up, each tries to acquire the other's resource, so the two threads wait for each other forever, which is a deadlock.

How do thread deadlocks occur? How to avoid it?

Four necessary conditions for deadlock generation:

- Mutual exclusion: a resource can only be used by one process at a time

- Hold and wait: a process that is blocked while requesting a resource keeps the resources it has already obtained (it does not release its locks)

- No preemption: resources a process has obtained cannot be forcibly taken away before the process has finished using them

- Circular wait: several processes form a closed chain in which each waits for a resource held by the next

Methods to avoid deadlock:

- The first condition, "mutual exclusion", cannot be broken, because the whole point of locking is to ensure mutual exclusion

- Request all resources at once, which breaks the "hold and wait" condition

- When a thread that already holds some resources fails to obtain another one, it actively releases the resources it holds, which breaks the "no preemption" condition

- Request resources in a fixed order, which breaks the "circular wait" condition
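The last method, acquiring locks in a fixed order, is the simplest fix for the kind of deadlock shown in DeadLockDemo. The following sketch is a rewrite under that assumption (the class name LockOrderingDemo and the 50 ms pause are choices made for the example): both threads take resource1 before resource2, so no circular wait can form.

```java
import java.util.concurrent.atomic.AtomicInteger;

class LockOrderingDemo {
    private static final Object resource1 = new Object();
    private static final Object resource2 = new Object();

    /** Runs two threads that both lock resource1 first, then resource2; returns how many finished. */
    static int runWithFixedOrder() {
        AtomicInteger completed = new AtomicInteger();
        Runnable task = () -> {
            synchronized (resource1) {          // always the first lock
                try {
                    Thread.sleep(50);           // widen the window in which a deadlock could occur
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                synchronized (resource2) {      // always the second lock
                    completed.incrementAndGet();
                }
            }
        };
        Thread t1 = new Thread(task, "Thread 1");
        Thread t2 = new Thread(task, "Thread 2");
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println("threads completed: " + runWithFixedOrder());
    }
}
```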

What is the difference between thread run and start?

The start() method starts the thread. When it is the thread's turn to execute, run() is called automatically. Calling run() directly does not start a new thread; it is equivalent to the main thread executing the run() method of the Thread object sequentially.

A thread's start() method can only be called once; calling it again throws java.lang.IllegalThreadStateException. The run() method has no such restriction.
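Both points can be verified with a few lines. In this sketch (class and method names are made up for the example), the task records the name of the thread it runs on, once via run() and once via start().

```java
class RunVsStartDemo {
    /** Returns {thread name seen when calling run(), thread name seen when calling start()}. */
    static String[] namesSeenByRunAndStart() {
        String[] seen = new String[2];

        Thread direct = new Thread(() -> seen[0] = Thread.currentThread().getName(), "worker");
        direct.run(); // ordinary method call: executes on the calling thread

        Thread started = new Thread(() -> seen[1] = Thread.currentThread().getName(), "worker");
        started.start(); // creates a new thread that then calls run()
        try {
            started.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen;
    }

    /** Returns true if calling start() twice throws IllegalThreadStateException. */
    static boolean secondStartThrows() {
        Thread t = new Thread(() -> { });
        t.start();
        try {
            t.start();
            return false;
        } catch (IllegalThreadStateException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        String[] names = namesSeenByRunAndStart();
        System.out.println("run() executed on: " + names[0]);
        System.out.println("start() executed on: " + names[1]);
        System.out.println("second start() throws: " + secondStartThrows());
    }
}
```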

What methods do threads have?

join

Suppose main creates a thread named thread and then calls thread.join() or thread.join(long millis). The main thread gives up the CPU and enters the WAITING or TIMED_WAITING state, and continues only after thread has finished executing (or the timeout has elapsed).

    public final void join() throws InterruptedException {
        join(0);
    }

yield

Thread.yield() must be called by the current thread. The current thread gives up its CPU time slice but does not release any locks; it moves from the running state back to the ready state so that the OS can choose a thread to run again. Purpose: to let threads of the same priority take turns, although this is not guaranteed. In practice, yield() may not achieve the intended concession, because the yielding thread may be selected again by the thread scheduler. Thread.yield() does not block. It is similar to sleep(), except that the caller cannot specify how long to pause.

    public static native void yield(); // static method

sleep

Thread.sleep(long millis) must be called by the current thread. The current thread enters the TIMED_WAITING state, releasing the CPU but not any object locks, and resumes after the specified time. Purpose: a simple way to give other threads a chance to run.

    public static native void sleep(long millis) throws InterruptedException; // static method

volatile underlying principle

volatile is a lightweight synchronization mechanism. volatile ensures the visibility of variables to all threads and does not guarantee atomicity.

- When writing to a volatile variable, the JVM will send a LOCK prefix instruction to the processor to write the data of the cache line where the variable is located back to the system memory.

- Because of the cache coherence protocol, each processor checks whether its cached values are stale by sniffing the data propagated on the bus. When a processor finds that the memory address corresponding to one of its cache lines has been modified, it marks that cache line as invalid. When the processor next operates on this data, it re-reads the data from system memory into its cache.

MESI (cache consistency protocol): when the CPU writes data, if it is found that the operating variable is a shared variable, that is, there is a copy of the variable in other CPUs, it will send a signal to inform other CPUs to set the cache line of the variable to invalid state. Therefore, when other CPUs need to read the variable, it will be re read from memory.

volatile keyword has two functions:

- It ensures the visibility when different threads operate on shared variables, that is, when a thread modifies the value of a variable, the new value is immediately visible to other threads.

- Instruction reordering is prohibited.

Instruction reordering is an optimization: without changing the result of a single-threaded program, instructions are reordered to improve efficiency and parallelism as much as possible. When generating the instruction sequence, the Java compiler inserts memory barrier instructions at appropriate places to prohibit certain processor reorderings. Inserting a memory barrier tells the CPU and the compiler that operations before the barrier must complete before it, and operations after the barrier must execute after it. When a volatile field is written, the Java memory model inserts a write barrier after the write operation, which flushes the preceding writes to memory.
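A typical use of volatile is a stop flag that one thread writes and another reads. The sketch below (the class name VolatileFlagDemo and the timings are assumptions) shows that pattern, and also uses AtomicInteger for a counter, since volatile alone does not make count++ atomic.

```java
import java.util.concurrent.atomic.AtomicInteger;

class VolatileFlagDemo {
    // volatile makes the write by the main thread visible to the worker thread
    private static volatile boolean running;

    /** Starts a spinning worker, clears the flag, and reports whether the worker stopped. */
    static boolean workerStopsAfterFlagCleared() {
        running = true;
        Thread worker = new Thread(() -> {
            while (running) {
                // busy work; the loop re-reads the volatile flag on every iteration
            }
        });
        worker.start();
        try {
            Thread.sleep(50);
            running = false;      // visible to the worker because the field is volatile
            worker.join(5_000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    /** Two threads increment a shared AtomicInteger; the result is always exact. */
    static int atomicCount(int perThread) {
        AtomicInteger counter = new AtomicInteger();
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                counter.incrementAndGet(); // atomic read-modify-write, unlike volatile count++
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerStopsAfterFlagCleared());
        System.out.println("atomic count: " + atomicCount(10_000));
    }
}
```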

AQS principle

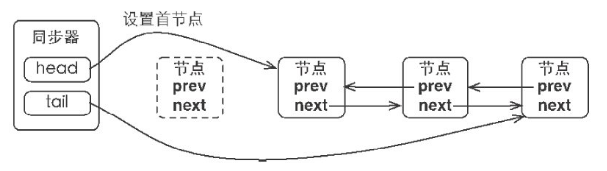

AQS (AbstractQueuedSynchronizer, the abstract queued synchronizer) defines a synchronizer framework for multi-threaded access to shared resources. Many concurrency tools are built on it, such as ReentrantLock, Semaphore and CountDownLatch.

AQS uses a volatile int member variable, state, to represent the synchronization state, and modifies it with CAS. When a thread calls the lock method and state is 0, no thread holds the lock on the shared resource, so the thread can acquire the lock and set state to 1. If state is not 0, another thread is currently using the shared resource, and other threads must join the synchronization queue and wait.

    private volatile int state; // shared variable; volatile ensures visibility between threads

The synchronizer relies on an internal synchronization queue (a FIFO doubly linked queue) to manage the synchronization state. When the current thread fails to acquire the synchronization state, the synchronizer wraps the thread and its wait mode (exclusive or shared) in a Node, appends the node to the synchronization queue, and the thread spins and then blocks. When the synchronization state is released, the thread in the successor of the head node is woken up to try to acquire the synchronization state again.
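To see how state and CAS fit together, here is a minimal non-reentrant mutex built on AbstractQueuedSynchronizer. It is a simplified sketch in the spirit of the description above, not the implementation of ReentrantLock; the class name SimpleMutex and the helper countWithMutex are made up for the example.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class SimpleMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int ignored) {
            // CAS state from 0 (free) to 1 (held); failure makes AQS enqueue the thread
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;
        }

        @Override
        protected boolean tryRelease(int ignored) {
            if (getState() == 0) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0); // volatile write; AQS then wakes the successor of the head node
            return true;
        }
    }

    private final Sync sync = new Sync();

    void lock() {
        sync.acquire(1);
    }

    void unlock() {
        sync.release(1);
    }

    /** Increments a plain int from several threads, guarded by the mutex. */
    static int countWithMutex(int threads, int perThread) {
        SimpleMutex mutex = new SimpleMutex();
        int[] counter = new int[1];
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int n = 0; n < perThread; n++) {
                    mutex.lock();
                    try {
                        counter[0]++;
                    } finally {
                        mutex.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println(countWithMutex(4, 10_000));
    }
}
```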

What are the uses of synchronized?

- On an instance method: acts on the current object instance. The lock of the current instance must be acquired before entering the synchronized code

- On a static method: acts on the current class. The lock of the current Class object must be acquired before entering the synchronized code. Both a synchronized static method and a synchronized(Xxx.class) block lock the Class object

- On a code block: specifies the lock object explicitly. The lock of the given object must be acquired before entering the synchronized block
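The three forms look like this in code. This is an illustrative sketch (the class name SynchronizedForms and its counters are invented for the example); each counter is protected by a different lock.

```java
class SynchronizedForms {
    private static int staticCount;   // guarded by SynchronizedForms.class
    private int instanceCount;        // guarded by this
    private int blockCount;           // guarded by lock
    private final Object lock = new Object();

    // Instance method: locks the current object instance (this)
    synchronized void incrementInstance() {
        instanceCount++;
    }

    // Static method: locks the Class object (SynchronizedForms.class)
    static synchronized void incrementStatic() {
        staticCount++;
    }

    // Code block: locks the explicitly given object
    void incrementBlock() {
        synchronized (lock) {
            blockCount++;
        }
    }

    /** Runs all three increments from several threads; returns {instance, static, block} counts. */
    static int[] runAll(int threads, int perThread) {
        SynchronizedForms shared = new SynchronizedForms();
        synchronized (SynchronizedForms.class) {
            staticCount = 0; // reset so that repeated calls start from zero
        }
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int n = 0; n < perThread; n++) {
                    shared.incrementInstance();
                    incrementStatic();
                    shared.incrementBlock();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new int[] {shared.instanceCount, staticCount, shared.blockCount};
    }

    public static void main(String[] args) {
        int[] r = runAll(4, 1_000);
        System.out.println(r[0] + " " + r[1] + " " + r[2]);
    }
}
```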

What are the functions of synchronized?

Atomicity: ensures that threads access the synchronized code mutually exclusively;

Visibility: ensures that changes to shared variables are seen in time. This follows from two rules in the Java memory model: before a variable is unlocked, its value must be synchronized back to main memory; and when a variable is locked, its value in working memory is cleared, so before the execution engine uses the variable it must reload the value from main memory with a load or assign operation;

Ordering: effectively solves the reordering problem, that is, "an unlock operation happens-before every subsequent lock operation on the same lock".

The underlying implementation principle of synchronized?

synchronized code blocks are implemented with the monitorenter and monitorexit instructions. The monitorenter instruction marks the start of the synchronized block, and the monitorexit instruction marks its end. When monitorenter is executed, the thread tries to acquire the lock, that is, the monitor (a reference to the monitor is kept in the object header of every Java object; this is how the synchronized lock is acquired, and it is why any Java object can be used as a lock).

The monitor contains a counter. When the counter is 0 the lock can be acquired, and the counter is then incremented by 1. Correspondingly, executing monitorexit decrements the counter by 1, and when it reaches 0 the lock is released. If acquiring the object lock fails, the current thread blocks and waits until the lock is released by the other thread.

A synchronized method has no monitorenter or monitorexit instructions. Instead it carries the ACC_SYNCHRONIZED flag, which marks the method as synchronized. The JVM uses the ACC_SYNCHRONIZED access flag to identify a synchronized method and performs the corresponding synchronized call.

How does ReentrantLock achieve reentrancy?

ReentrantLock defines its own internal synchronizer, Sync. When a thread cannot get the lock, CAS is used to append it to a doubly linked queue. Each time the lock is requested, the lock checks whether the thread that currently owns it is the same as the requesting thread. If it is, the synchronization state is incremented by 1, indicating that the lock has been acquired multiple times by the current thread.
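Reentrancy can be observed through ReentrantLock.getHoldCount(), which reports how many times the current thread holds the lock. A minimal sketch (the class name ReentrancyDemo is made up for the example):

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancyDemo {
    private static final ReentrantLock lock = new ReentrantLock();

    /** Acquires the lock twice on the same thread and returns the hold count at that point. */
    static int nestedHoldCount() {
        lock.lock();
        try {
            lock.lock(); // same thread: succeeds immediately and increments the state
            try {
                return lock.getHoldCount();
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock(); // every lock() needs a matching unlock()
        }
    }

    /** Returns true if the lock is free again after the nested acquisition. */
    static boolean releasedAfterwards() {
        nestedHoldCount();
        return !lock.isLocked();
    }

    public static void main(String[] args) {
        System.out.println("hold count while nested: " + nestedHoldCount());
        System.out.println("released afterwards: " + releasedAfterwards());
    }
}
```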

Difference between ReentrantLock and synchronized

- With the synchronized keyword, the lock is released automatically when the thread leaves the synchronized block, while a ReentrantLock must be released manually.

- synchronized is an unfair lock, while ReentrantLock can be configured as a fair lock.

- A thread waiting to acquire a ReentrantLock can be interrupted and give up waiting, while a thread waiting on synchronized waits indefinitely.

- ReentrantLock can acquire the lock with a timeout: the thread tries to acquire the lock before the given deadline and returns if the deadline passes without success.

- ReentrantLock's tryLock() method tries to acquire the lock without blocking. It returns immediately: true if the lock was acquired, false otherwise.
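The ReentrantLock-only features in this list (non-blocking tryLock, tryLock with a timeout, interruptible waiting, fairness) can be sketched as follows. The class name TryLockDemo and the 50 ms timeout are assumptions for the example.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {
    /** While the caller holds the lock, another thread tries tryLock() and a timed tryLock(). */
    static boolean[] contend() {
        ReentrantLock lock = new ReentrantLock(true); // true = fair lock
        boolean[] results = new boolean[2];
        lock.lock();
        try {
            Thread other = new Thread(() -> {
                results[0] = lock.tryLock(); // returns immediately
                if (results[0]) {
                    lock.unlock();
                }
                try {
                    results[1] = lock.tryLock(50, TimeUnit.MILLISECONDS); // waits up to 50 ms
                    if (results[1]) {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            other.start();
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
        return results;
    }

    /** A thread blocked in lockInterruptibly() gives up when it is interrupted. */
    static boolean waitingCanBeInterrupted() {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean gaveUp = new AtomicBoolean();
        lock.lock();
        try {
            Thread waiter = new Thread(() -> {
                try {
                    lock.lockInterruptibly();
                    lock.unlock();
                } catch (InterruptedException e) {
                    gaveUp.set(true); // stopped waiting for the lock
                }
            });
            waiter.start();
            waiter.interrupt();
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
        return gaveUp.get();
    }

    public static void main(String[] args) {
        boolean[] r = contend();
        System.out.println("tryLock: " + r[0] + ", timed tryLock: " + r[1]);
        System.out.println("interruptible wait gave up: " + waitingCanBeInterrupted());
    }
}
```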

The difference between wait() and sleep()

Similarities:

- Both pause the current thread and give other threads a chance to run

- Both throw InterruptedException if the thread is interrupted while waiting

Differences:

- wait() is a method of the Object superclass; sleep() is a method of the Thread class

- Lock handling differs: wait() releases the lock, while sleep() does not

- The wake-up conditions differ: wait() returns after notify() or notifyAll(), an interrupt, or the specified timeout, while sleep() returns when the specified time has elapsed (or on an interrupt)

- To call obj.wait() the thread must first hold the lock of the object, while Thread.sleep() has no such requirement
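The usual wait()/notifyAll() pattern looks like the sketch below: the waiting thread holds the lock, checks a condition in a loop, and releases the lock while it waits. The class name WaitNotifyDemo and the method handOff are made up for the example.

```java
class WaitNotifyDemo {
    /** Hands `payload` from the calling thread to a consumer thread and returns what it received. */
    static String handOff(String payload) {
        Object lock = new Object();
        String[] box = new String[1];       // guarded by lock
        String[] received = new String[1];

        Thread consumer = new Thread(() -> {
            synchronized (lock) {            // wait() requires holding the object's lock
                while (box[0] == null) {     // loop guards against spurious wakeups
                    try {
                        lock.wait();         // releases the lock while waiting
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                }
                received[0] = box[0];
            }
        });
        consumer.start();

        synchronized (lock) {
            box[0] = payload;
            lock.notifyAll();                // wakes the consumer if it is already waiting
        }
        try {
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return received[0];
    }

    public static void main(String[] args) {
        System.out.println(handOff("hello"));
    }
}
```

Because the condition is checked before waiting, the hand-off works regardless of whether the producer or the consumer gets the lock first.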

The difference between wait(),notify() and suspend(),resume()

- wait() causes the thread to enter a blocking wait state and releases the lock

- notify() wakes up one waiting thread; it is generally used together with wait().

- suspend() puts the thread into a blocked state from which it does not recover automatically. The corresponding resume() must be called to make the thread runnable again.

- resume() is used together with suspend().

suspend() is deprecated and not recommended. After suspend() is called, the thread does not release the resources it holds (such as locks); it stays suspended while still holding them, which easily causes deadlock.

What's the difference between Runnable and Callable?

- The method of the Callable interface is call(); the method of the Runnable interface is run().

- call() has a return value and supports generics; run() has no return value.

- call() may throw checked exceptions; run() cannot throw checked exceptions, so they must be handled inside the method.

What is the difference between volatile and synchronized?

- volatile can only be applied to variables; synchronized can be applied to methods and code blocks.

- volatile ensures visibility; synchronized ensures both atomicity and visibility.

- volatile prohibits instruction reordering around the variable; synchronized does not prevent reordering inside the synchronized block.

- volatile does not cause blocking; synchronized does.

How to control the execution sequence of threads?

Suppose there are three threads: T1, T2 and T3. How do you ensure that T2 executes after T1 and T3 executes after T2?

You can use the join method to solve this problem. For example, if thread A calls the join method of thread B, then A waits (giving up the CPU) until B has finished executing, and only then continues.

The code is as follows:

    public class ThreadTest {
        public static void main(String[] args) {
            Thread spring = new Thread(new SeasonThreadTask("spring"));
            Thread summer = new Thread(new SeasonThreadTask("summer"));
            Thread autumn = new Thread(new SeasonThreadTask("autumn"));

            try {
                // The spring thread starts
                spring.start();
                // The main thread waits for the spring thread to finish before continuing
                spring.join();
                // The summer thread starts
                summer.start();
                // The main thread waits for the summer thread to finish before continuing
                summer.join();
                // The autumn thread starts last
                autumn.start();
                // The main thread waits for the autumn thread to finish before continuing
                autumn.join();
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }

    class SeasonThreadTask implements Runnable {
        private String name;

        public SeasonThreadTask(String name) {
            this.name = name;
        }

        @Override
        public void run() {
            for (int i = 1; i < 4; i++) {
                System.out.println(this.name + " is coming: " + i);
                try {
                    Thread.sleep(100);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }
    }

Output:

    spring is coming: 1
    spring is coming: 2
    spring is coming: 3
    summer is coming: 1
    summer is coming: 2
    summer is coming: 3
    autumn is coming: 1
    autumn is coming: 2
    autumn is coming: 3

Is optimistic locking always better?

Optimistic locking avoids the exclusive hold on an object that pessimistic locking imposes and improves concurrency, but it also has disadvantages:

- It can only guarantee an atomic operation on a single shared variable. When several variables must be updated together, a single CAS is not enough, whereas a mutex handles this easily regardless of how many objects are involved or how coarse they are.

- Spinning for a long time is expensive. If the CAS keeps failing and the thread keeps retrying, it wastes a lot of CPU.

- ABA problem. CAS decides whether the memory value has changed by comparing it with the expected value, but this check is not rigorous. If the value was originally A, was changed to B by another thread, and was then changed back to A, CAS concludes that nothing changed even though other threads modified it. This matters in scenarios that depend on the intermediate states. The solution is to introduce a version number that is incremented on every update.
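The ABA problem and the version-number fix can be reproduced in a single thread. The sketch below is illustrative only (the class name `AbaDemo` and its methods are invented for this example). It uses string literals so that the reference comparison performed by the atomic classes behaves predictably, and it uses the JDK's AtomicStampedReference as the "value plus version number" holder.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

public class AbaDemo {
    // Plain CAS: the A -> B -> A change goes unnoticed
    public static boolean plainCasAfterAba() {
        AtomicReference<String> ref = new AtomicReference<>("A");
        String observed = ref.get();          // a slow thread reads "A"
        ref.compareAndSet("A", "B");          // meanwhile: A -> B
        ref.compareAndSet("B", "A");          // and back:  B -> A
        return ref.compareAndSet(observed, "C"); // succeeds, change not detected
    }

    // Stamped CAS: the stamp (version) has moved on, so the stale CAS fails
    public static boolean stampedCasAfterAba() {
        AtomicStampedReference<String> ref = new AtomicStampedReference<>("A", 0);
        int[] stampHolder = new int[1];
        String observed = ref.get(stampHolder); // reads "A" with stamp 0
        int observedStamp = stampHolder[0];
        ref.compareAndSet("A", "B", 0, 1);      // A -> B, stamp 0 -> 1
        ref.compareAndSet("B", "A", 1, 2);      // B -> A, stamp 1 -> 2
        // The value matches but the stamp is now 2, not 0
        return ref.compareAndSet(observed, "C", observedStamp, observedStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println(plainCasAfterAba());   // true
        System.out.println(stampedCasAfterAba()); // false
    }
}
```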

What is a daemon thread?

A daemon thread is a background thread that serves other threads, for example by periodically performing tasks or waiting to handle events. The JVM does not wait for daemon threads: it exits once all non-daemon (user) threads have finished. In Java, the garbage collection thread is a typical daemon thread.
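As a small sketch (the class name `DaemonDemo` and the "heartbeat" thread are hypothetical), a thread is marked as a daemon with `setDaemon(true)` before it is started:

```java
public class DaemonDemo {
    // Starts a background thread that loops forever
    public static Thread startHeartbeat() {
        Thread t = new Thread(() -> {
            while (true) {
                try {
                    Thread.sleep(200); // pretend to do periodic background work
                } catch (InterruptedException e) {
                    return;
                }
            }
        }, "heartbeat");
        t.setDaemon(true); // must be called before start()
        t.start();
        return t;
    }

    public static void main(String[] args) {
        Thread t = startHeartbeat();
        System.out.println(t.getName() + " is daemon: " + t.isDaemon());
        // main returns here; the JVM can exit even though the loop never ends
    }
}
```

Calling `setDaemon` after `start()` throws IllegalThreadStateException, which is why the flag is set first.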

Inter-thread communication

volatile

volatile is a lightweight synchronization mechanism. volatile ensures the visibility of variables to all threads and does not guarantee atomicity.

synchronized

synchronized ensures both visibility and mutual exclusion when threads access a variable.

Waiting for notification mechanism

wait() and notify() are methods of Object. To call them on an object, a thread must first hold that object's lock. When a thread calls wait(), it releases the lock and is placed in the object's wait queue. When a notifying thread calls notify() on the same object, the waiting thread does not return from wait() immediately: the notifying thread must first release the lock (by leaving its synchronized block), and the waiting thread must then reacquire it. In other words, a thread returns from wait() only after it has obtained the lock again.

The wait/notify mechanism relies on synchronization to ensure that, when the waiting thread returns from wait(), it can see the changes the notifying thread made to the object's variables.
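A minimal sketch of the wait/notify pattern is a one-slot mailbox. The class `WaitNotifyDemo` and its methods are invented for illustration. The waiting side checks its condition in a loop while holding the lock, and the notifying side changes the state and notifies while holding the same lock.

```java
public class WaitNotifyDemo {
    private final Object lock = new Object();
    private String message; // guarded by lock

    // Blocks until a message is available, then removes and returns it
    public String take() throws InterruptedException {
        synchronized (lock) {
            while (message == null) { // loop guards against spurious wakeups
                lock.wait();          // releases the lock while waiting
            }
            String m = message;
            message = null;
            return m;
        }
    }

    // Stores a message and wakes up waiting threads
    public void put(String m) {
        synchronized (lock) {
            message = m;
            lock.notifyAll(); // waiters return only after this block releases the lock
        }
    }

    public static String demo() throws InterruptedException {
        WaitNotifyDemo box = new WaitNotifyDemo();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = box.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        box.put("hello");
        consumer.join();
        return received[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // hello
    }
}
```

The example works whichever thread runs first: if `put` happens before `take`, the condition is already true and the consumer never waits.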

ThreadLocal

Thread local variables. When using ThreadLocal to maintain a variable, ThreadLocal provides an independent copy of the variable for each thread using the variable, so each thread can change its copy independently without affecting other threads.

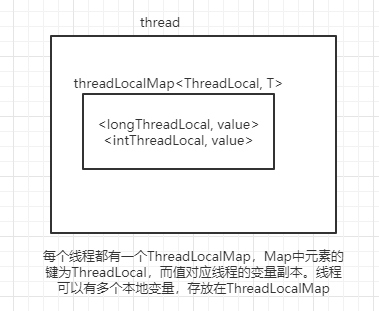

ThreadLocal principle

Each thread has a ThreadLocalMap (a nested class of ThreadLocal). The key of each entry in the map is a ThreadLocal, and the value is the thread's copy of the variable.

Calling threadLocal.set() -> getMap(Thread) -> returns the current thread's ThreadLocalMap<ThreadLocal, value> -> map.set(this, value), where this is the ThreadLocal.

    public void set(T value) {
        Thread t = Thread.currentThread();
        ThreadLocalMap map = getMap(t);
        if (map != null)
            map.set(this, value);
        else
            createMap(t, value);
    }

    ThreadLocalMap getMap(Thread t) {
        return t.threadLocals;
    }

    void createMap(Thread t, T firstValue) {
        t.threadLocals = new ThreadLocalMap(this, firstValue);
    }

Calling get() -> getMap(Thread) -> returns the current thread's ThreadLocalMap<ThreadLocal, value> -> map.getEntry(this) -> returns the value.

    public T get() {
        Thread t = Thread.currentThread();
        ThreadLocalMap map = getMap(t);
        if (map != null) {
            ThreadLocalMap.Entry e = map.getEntry(this);
            if (e != null) {
                @SuppressWarnings("unchecked")
                T result = (T)e.value;
                return result;
            }
        }
        return setInitialValue();
    }

The type of threadLocals is ThreadLocalMap, and the keys of that map are ThreadLocal objects, because each thread can have multiple ThreadLocal variables, such as longLocal and stringLocal.

    public class ThreadLocalDemo {
        ThreadLocal<Long> longLocal = new ThreadLocal<>();

        public void set() {
            longLocal.set(Thread.currentThread().getId());
        }

        public Long get() {
            return longLocal.get();
        }

        public static void main(String[] args) throws InterruptedException {
            ThreadLocalDemo threadLocalDemo = new ThreadLocalDemo();
            threadLocalDemo.set();
            System.out.println(threadLocalDemo.get());

            // The new thread sets and reads its own copy
            Thread thread = new Thread(() -> {
                threadLocalDemo.set();
                System.out.println(threadLocalDemo.get());
            });
            thread.start();
            thread.join();

            // The main thread's copy is unchanged
            System.out.println(threadLocalDemo.get());
        }
    }

ThreadLocal is not meant to solve concurrent access to shared resources: each thread only has its own copy, and nothing is shared. ThreadLocal is therefore suitable as a per-thread context variable, which simplifies passing parameters within a thread.

Why can ThreadLocal leak memory?

Each thread holds a ThreadLocalMap. The key of each entry is the ThreadLocal, held through a weak reference, while the value is held through a strong reference. Once nothing else references the ThreadLocal, the key can be collected during GC, but the value is reclaimed only when the entry is cleaned up or the thread ends. Threads are usually reused through a thread pool to save resources, so Thread objects live for a long time and the strong reference chain Thread -> ThreadLocalMap -> Entry -> value persists. As tasks run, more and more values accumulate and cannot be released, which causes a memory leak.

Solution: each time you finish using a ThreadLocal, call its remove() method to delete the corresponding key-value pair manually and avoid the leak.

    currentTime.set(System.currentTimeMillis());
    result = joinPoint.proceed();
    Log log = new Log("INFO", System.currentTimeMillis() - currentTime.get());
    currentTime.remove();
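To see why remove() matters with pooled threads, here is a hedged sketch (the class `ThreadLocalCleanupDemo`, the `CONTEXT` variable, and the "request-1" value are all made up for illustration). A single-thread executor reuses the same worker thread, so a value left behind by one task is still there when the next task runs. Putting remove() in a finally block clears it even if the task throws.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class ThreadLocalCleanupDemo {
    private static final ThreadLocal<String> CONTEXT = new ThreadLocal<>();

    // Runs two tasks on the same pooled thread and returns what the second one sees
    public static String valueSeenByNextTask(boolean cleanUp) throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Runnable first = () -> {
                CONTEXT.set("request-1");
                try {
                    // ... business logic that reads CONTEXT would go here ...
                } finally {
                    if (cleanUp) {
                        CONTEXT.remove(); // clear the entry before the thread is reused
                    }
                }
            };
            pool.submit(first).get();

            Callable<String> second = CONTEXT::get;
            return pool.submit(second).get();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(valueSeenByNextTask(false)); // request-1 (stale value)
        System.out.println(valueSeenByNextTask(true));  // null
    }
}
```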

What are ThreadLocal usage scenarios?

Applicable scenario of ThreadLocal: each thread needs its own separate instance, and that instance must be shared across multiple methods. In other words, ThreadLocal fits when you need isolation between threads and sharing between methods at the same time. For example, in a Java web application each thread can have its own Session instance, which can be implemented with ThreadLocal.

Classification of locks

Fair lock and unfair lock

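A fair lock grants the lock in the order in which threads requested it; a non-fair lock does not guarantee this order. synchronized is a non-fair lock. ReentrantLock is non-fair by default but can be configured as fair through its constructor. Fair locks generally have lower throughput.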

    public ReentrantLock() {
        sync = new NonfairSync();
    }

    public ReentrantLock(boolean fair) {
        sync = fair ? new FairSync() : new NonfairSync();
    }
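A short usage sketch of the two constructors follows. The wrapper class `FairLockDemo` and its `newLock` helper are hypothetical; `isFair()` is the ReentrantLock method that reports which policy was chosen.

```java
import java.util.concurrent.locks.ReentrantLock;

public class FairLockDemo {
    // Creates a lock with the requested fairness policy
    public static ReentrantLock newLock(boolean fair) {
        return fair ? new ReentrantLock(true) : new ReentrantLock();
    }

    public static void main(String[] args) {
        ReentrantLock defaultLock = newLock(false);
        ReentrantLock fairLock = newLock(true);
        System.out.println(defaultLock.isFair()); // false
        System.out.println(fairLock.isFair());    // true

        fairLock.lock();
        try {
            // critical section
        } finally {
            fairLock.unlock(); // always release in finally
        }
    }
}
```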

Shared and exclusive locks

The main difference is that with an exclusive lock only one thread can hold the synchronization state at a time, while with a shared lock multiple threads can hold it at the same time. For example, a read operation can be performed by several threads simultaneously, while a write operation can be performed by only one thread at a time, and all other operations are blocked.
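One way to observe the difference is with the JDK's ReentrantReadWriteLock, whose read lock is shared and whose write lock is exclusive. In this sketch (the class `ReadWriteLockDemo` and the method `probeWhileReading` are made up for illustration), the main thread holds the read lock while a second thread tries to take each lock without blocking.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class ReadWriteLockDemo {
    // Returns {secondReaderGotIn, writerGotIn} while another thread holds the read lock
    public static boolean[] probeWhileReading() throws InterruptedException {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] result = new boolean[2];

        rw.readLock().lock(); // the calling thread holds the shared (read) lock
        try {
            Thread other = new Thread(() -> {
                // Shared: a second reader can enter at the same time
                result[0] = rw.readLock().tryLock();
                if (result[0]) {
                    rw.readLock().unlock();
                }
                // Exclusive: a writer cannot enter while any reader holds the lock
                result[1] = rw.writeLock().tryLock();
                if (result[1]) {
                    rw.writeLock().unlock();
                }
            });
            other.start();
            other.join();
        } finally {
            rw.readLock().unlock();
        }
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] r = probeWhileReading();
        System.out.println("second reader acquired: " + r[0]); // true
        System.out.println("writer acquired: " + r[1]);        // false
    }
}
```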

Pessimistic lock and optimistic lock

Pessimistic locking acquires the lock every time the resource is accessed and releases it after the synchronized code has run. synchronized and ReentrantLock are pessimistic locks.

Optimistic locking does not lock the resource. All threads can read and modify it: if there is no conflict the modification succeeds, otherwise the thread retries. The most common implementation of optimistic locking is CAS.

Generally speaking, optimistic locking is implemented in one of two ways:

- Version number. This is the most common implementation. A numeric version field is added to the data, usually as a column in the database table. The version is read together with the data and incremented on every update. When an update is submitted, the current version of the row is compared with the version that was read earlier: if they are equal the update is applied, otherwise the data is considered stale.

- Timestamp. A timestamp column is added to the table and used like the version field above. When an update is submitted, the row's current timestamp is compared with the timestamp read before the update: if they match the update proceeds, otherwise there is a version conflict.
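The version-number approach can be sketched in memory without a database. Everything here is hypothetical: the class `VersionedRecord`, its fields, and the table and column names in the SQL comment are only meant to mirror the "update only if the version still matches" check described above.

```java
public class VersionedRecord {
    private int balance;
    private int version;

    public VersionedRecord(int balance) {
        this.balance = balance;
        this.version = 0;
    }

    // Returns {balance, version}, like selecting both columns in one read
    public synchronized int[] read() {
        return new int[] { balance, version };
    }

    // Mimics a statement such as (hypothetical table and columns):
    //   UPDATE account SET balance = ?, version = version + 1
    //   WHERE id = ? AND version = ?
    // The update is applied only if nobody changed the row since it was read.
    public synchronized boolean update(int newBalance, int expectedVersion) {
        if (version != expectedVersion) {
            return false; // stale data: the caller must re-read and retry
        }
        balance = newBalance;
        version++;
        return true;
    }

    public static void main(String[] args) {
        VersionedRecord record = new VersionedRecord(100);
        int[] seenByA = record.read(); // both clients read version 0
        int[] seenByB = record.read();

        System.out.println(record.update(seenByA[0] - 30, seenByA[1])); // true
        System.out.println(record.update(seenByB[0] - 50, seenByB[1])); // false
        System.out.println(record.read()[0]);                           // 70
    }
}
```

The synchronized keyword here only stands in for the atomicity a database gives a single UPDATE statement; the optimistic part is the version comparison.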

Applicable scenarios:

- Pessimistic locks are suitable for scenarios with many write operations.

- Optimistic locking is suitable for scenarios with many read operations. Not locking can improve the performance of read operations.

CAS

What is CAS?

The full name of CAS is Compare And Swap, which is the main implementation of optimistic lock. CAS realizes variable synchronization between multiple threads without using locks. CAS is used in AQS inside ReentrantLock and in atomic classes.

CAS algorithm involves three operands:

- The memory value V that needs to be read and written.

- Value A for comparison.

- New value B to write.

Only when the value of V equals A is V atomically updated to the new value B. Otherwise nothing is written, and the caller typically retries until the update succeeds.

Take AtomicInteger as an example. Its getAndIncrement() method is implemented with CAS. The key call is compareAndSwapInt(obj, offset, expect, update): if the value in obj equals expect, no other thread has changed the variable and it is updated to update; if it does not, the operation is retried until the update succeeds.
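The retry loop can be written out with the public compareAndSet method of AtomicInteger. This is a sketch of the idea, not the JDK's internal code; the class `CasLoopDemo` and the method `casIncrement` are names invented for this example.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class CasLoopDemo {
    // Increment using an explicit CAS retry loop; returns the previous value
    public static int casIncrement(AtomicInteger counter) {
        while (true) {
            int expect = counter.get();  // A: the value we believe is current
            int update = expect + 1;     // B: the new value to write
            if (counter.compareAndSet(expect, update)) {
                return expect;           // V was still A, so it is now B
            }
            // Another thread changed V in between: read again and retry
        }
    }

    // Several threads increment the same counter without any lock
    public static int run(int threads, int perThread) throws InterruptedException {
        AtomicInteger counter = new AtomicInteger(0);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    casIncrement(counter);
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(4, 10000)); // 40000
    }
}
```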

Problems with CAS?

CAS has three major problems:

- ABA problem. CAS checks whether the memory value has changed before updating it. However, if the value was A, then became B, and then became A again, CAS finds that the value has not changed even though it actually has. The solution is to attach a version number to the variable and increment it on every update, so that the change sequence A-B-A becomes 1A-2B-3A.

  Since JDK 1.5, the AtomicStampedReference class solves the ABA problem by atomically updating a reference together with a version stamp.

- Long spin time and high overhead. If a CAS operation keeps failing for a long time, the thread keeps spinning, which puts a heavy load on the CPU.

- Only atomic operations on a single shared variable are guaranteed. CAS makes an operation on one shared variable atomic, but it cannot guarantee atomicity for an operation that spans multiple shared variables.

  Since JDK 1.5, the AtomicReference class guarantees atomicity for reference objects, so multiple variables can be placed in one object and updated with a single CAS.
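As a sketch of placing multiple variables in one object, the example below keeps two related values (a lower and an upper bound) in an immutable holder and swaps the whole holder with one CAS. The classes `RangeHolder` and `Range` and the rule "lower must not exceed upper" are assumptions chosen for illustration.

```java
import java.util.concurrent.atomic.AtomicReference;

public class RangeHolder {
    // Immutable pair: both bounds are replaced together by a single CAS
    static final class Range {
        final int lower;
        final int upper;

        Range(int lower, int upper) {
            this.lower = lower;
            this.upper = upper;
        }
    }

    private final AtomicReference<Range> range =
            new AtomicReference<>(new Range(0, 10));

    // Sets the lower bound; refuses values above the current upper bound
    public boolean setLower(int newLower) {
        while (true) {
            Range current = range.get();
            if (newLower > current.upper) {
                return false;
            }
            Range next = new Range(newLower, current.upper);
            if (range.compareAndSet(current, next)) {
                return true;
            }
            // Another thread replaced the range: re-check against the new one
        }
    }

    // Sets the upper bound; refuses values below the current lower bound
    public boolean setUpper(int newUpper) {
        while (true) {
            Range current = range.get();
            if (newUpper < current.lower) {
                return false;
            }
            Range next = new Range(current.lower, newUpper);
            if (range.compareAndSet(current, next)) {
                return true;
            }
        }
    }

    public int lower() { return range.get().lower; }

    public int upper() { return range.get().upper; }

    public static void main(String[] args) {
        RangeHolder holder = new RangeHolder();
        System.out.println(holder.setLower(5)); // true
        System.out.println(holder.setUpper(3)); // false, 3 is below the lower bound 5
        System.out.println(holder.lower() + ".." + holder.upper()); // 5..10
    }
}
```

With two separate AtomicInteger fields, two threads could each pass the check and together produce lower > upper; swapping one immutable object avoids that.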

Concurrent tools

Several very useful concurrency tool classes are provided in the concurrency package of JDK. CountDownLatch, CyclicBarrier and Semaphore tool classes provide a means of concurrent process control.

CountDownLatch

CountDownLatch lets one thread wait until other threads have finished their tasks before it continues, similar to thread.join(). A common scenario is to start several threads that work on a task in parallel and to wait until all of them have finished before performing a final step, such as aggregating statistics.

    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.TimeUnit;

    public class CountDownLatchDemo {
        static final int N = 4;
        static CountDownLatch latch = new CountDownLatch(N);

        public static void main(String[] args) throws InterruptedException {
            for (int i = 0; i < N; i++) {
                new Thread(new Thread1()).start();
            }
            // The thread calling await() is suspended until the count reaches 0.
            // If the count has not reached 0 when the timeout expires, execution continues anyway.
            latch.await(1000, TimeUnit.MILLISECONDS);
            System.out.println("task finished");
        }

        static class Thread1 implements Runnable {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread().getName() + " starts working");
                    Thread.sleep(1000);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    latch.countDown();
                }
            }
        }
    }

Output:

    Thread-0 starts working
    Thread-1 starts working
    Thread-2 starts working
    Thread-3 starts working
    task finished

CyclicBarrier

CyclicBarrier (a synchronization barrier) makes a group of threads wait for each other until all of them have reached a certain point, after which they all continue together.

    public CyclicBarrier(int parties, Runnable barrierAction) {}

    public CyclicBarrier(int parties) {}

The parameter parties is the number of threads that must reach the barrier; the parameter barrierAction is the task that is executed once all of them have arrived.

    import java.util.concurrent.BrokenBarrierException;
    import java.util.concurrent.CyclicBarrier;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.TimeUnit;

    public class CyclicBarrierTest {
        // Number of requests
        private static final int threadCount = 10;
        // Number of threads to be synchronized
        private static final CyclicBarrier cyclicBarrier = new CyclicBarrier(5);

        public static void main(String[] args) throws InterruptedException {
            // Create the thread pool
            ExecutorService threadPool = Executors.newFixedThreadPool(10);
            for (int i = 0; i < threadCount; i++) {
                final int threadNum = i;
                Thread.sleep(1000);
                threadPool.execute(() -> {
                    try {
                        test(threadNum);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    } catch (BrokenBarrierException e) {
                        e.printStackTrace();
                    }
                });
            }
            threadPool.shutdown();
        }

        public static void test(int threadNum) throws InterruptedException, BrokenBarrierException {
            System.out.println("threadnum:" + threadNum + " is ready");
            try {
                // Wait up to 60 seconds for the other threads to reach the barrier
                cyclicBarrier.await(60, TimeUnit.SECONDS);
            } catch (Exception e) {
                System.out.println("-----CyclicBarrierException------");
            }
            System.out.println("threadnum:" + threadNum + " is finished");
        }
    }

The output is as follows. It shows that a CyclicBarrier can be reused:

    threadnum:0 is ready
    threadnum:1 is ready
    threadnum:2 is ready
    threadnum:3 is ready
    threadnum:4 is ready
    threadnum:4 is finished
    threadnum:3 is finished
    threadnum:2 is finished
    threadnum:1 is finished
    threadnum:0 is finished
    threadnum:5 is ready
    threadnum:6 is ready
    ...

When all parties have reached the barrier, the barrierAction (if one was passed to the constructor) is executed once by the last thread to arrive, and then the waiting threads are released.
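The barrier action and the reuse of the barrier can be seen together in a small sketch. The class `BarrierActionDemo` and its `run` method are hypothetical. Each worker passes the same barrier once per round, and the action counts how many times the barrier was tripped.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

public class BarrierActionDemo {
    // Returns how many times the barrier action ran
    public static int run(int parties, int rounds) throws InterruptedException {
        AtomicInteger trips = new AtomicInteger();
        // The action runs once each time all parties have arrived
        CyclicBarrier barrier = new CyclicBarrier(parties, () -> trips.incrementAndGet());

        Thread[] workers = new Thread[parties];
        for (int i = 0; i < parties; i++) {
            workers[i] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // the same barrier is reused every round
                    }
                } catch (InterruptedException | BrokenBarrierException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        return trips.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(3, 2)); // 2
    }
}
```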

Difference between CyclicBarrier and CountDownLatch

Both CyclicBarrier and CountDownLatch can implement waiting between threads.

CountDownLatch is used for a thread to wait for other threads to execute tasks before executing. CyclicBarrier is used for a group of threads to wait for each other to a certain state, and then this group of threads execute at the same time.

The counter of CountDownLatch can only be used once, while the counter of CyclicBarrier can be reset using the reset() method, which can be used to handle more complex business scenarios.

Semaphore

Semaphore is similar to a lock. It limits the number of threads that can access a particular resource at the same time.

    import java.util.concurrent.Semaphore;

    public class SemaphoreDemo {
        public static void main(String[] args) {
            final int N = 7;
            Semaphore s = new Semaphore(3);
            for (int i = 0; i < N; i++) {
                new Worker(s, i).start();
            }
        }

        static class Worker extends Thread {
            private Semaphore s;
            private int num;

            public Worker(Semaphore s, int num) {
                this.s = s;
                this.num = num;
            }

            @Override
            public void run() {
                try {
                    // At most three workers hold a permit at the same time
                    s.acquire();
                    System.out.println("worker" + num + " using the machine");
                    Thread.sleep(1000);
                    System.out.println("worker" + num + " finished the task");
                    s.release();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }
    }

The results are as follows. Permits are not necessarily acquired in the order in which the threads requested them, because a Semaphore created with new Semaphore(3) is non-fair:

    worker0 using the machine
    worker1 using the machine
    worker2 using the machine
    worker2 finished the task
    worker0 finished the task
    worker3 using the machine
    worker4 using the machine
    worker1 finished the task
    worker6 using the machine
    worker4 finished the task
    worker3 finished the task
    worker6 finished the task
    worker5 using the machine
    worker5 finished the task

Atomic class

Basic type atomic class

Update base types atomically

- AtomicInteger: integer atomic class

- AtomicLong: long integer atomic class

- AtomicBoolean: Boolean atomic class

Common methods of AtomicInteger class:

    public final int get()                         // get the current value
    public final int getAndSet(int newValue)       // get the current value and set a new value
    public final int getAndIncrement()             // get the current value, then increment it
    public final int getAndDecrement()             // get the current value, then decrement it
    public final int getAndAdd(int delta)          // get the current value, then add delta to it
    boolean compareAndSet(int expect, int update)  // if the current value equals expect, atomically set it to update
    public final void lazySet(int newValue)        // eventually set to newValue; other threads may still read the old value for a short time afterwards

AtomicInteger class mainly uses CAS (compare and swap) to ensure atomic operations, so as to avoid the high overhead of locking.

Array type atomic class

Update an element in an array in an atomic way

- AtomicIntegerArray: integer array atomic class

- AtomicLongArray: long integer array atomic class

- AtomicReferenceArray: reference type array atomic class

Common methods of AtomicIntegerArray class:

    public final int get(int i)                           // get the value of the element at index i
    public final int getAndSet(int i, int newValue)       // return the current value at index i and set it to newValue
    public final int getAndIncrement(int i)               // get the value at index i, then increment that element
    public final int getAndDecrement(int i)               // get the value at index i, then decrement that element
    public final int getAndAdd(int i, int delta)          // get the value at index i, then add delta to that element
    boolean compareAndSet(int i, int expect, int update)  // if the element at index i equals expect, atomically set it to update
    public final void lazySet(int i, int newValue)        // eventually set the element at index i to newValue; other threads may still read the old value for a short time afterwards
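A brief usage sketch of some of these methods follows. The class `AtomicArrayDemo` is a made-up name; the calls are the AtomicIntegerArray methods listed above.

```java
import java.util.concurrent.atomic.AtomicIntegerArray;

public class AtomicArrayDemo {
    // Applies a few atomic updates and returns the final contents
    public static int[] run() {
        // The constructor copies the given array
        AtomicIntegerArray arr = new AtomicIntegerArray(new int[] { 1, 2, 3 });

        arr.getAndIncrement(0);        // returns 1, element 0 becomes 2
        arr.getAndAdd(1, 10);          // returns 2, element 1 becomes 12
        arr.compareAndSet(2, 3, 30);   // element 2 is 3, so it becomes 30
        arr.compareAndSet(2, 3, 99);   // element 2 is no longer 3, nothing changes

        return new int[] { arr.get(0), arr.get(1), arr.get(2) };
    }

    public static void main(String[] args) {
        int[] result = run();
        System.out.println(result[0] + ", " + result[1] + ", " + result[2]); // 2, 12, 30
    }
}
```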

Reference type atomic class

- AtomicReference: reference type atomic class

- AtomicStampedReference: reference type atomic class with a version number. This class associates an integer stamp with a reference, so the reference and its version can be updated together atomically, which solves the ABA problem that can occur with plain CAS updates.

- AtomicMarkableReference: reference type atomic class with a mark. This class associates a boolean mark with a reference.