Overview

1. Importing jar packages

2. Testing

3. Exception handling

First, set up HBase, then start the ZooKeeper, Hadoop, and HBase clusters.

1. Importing jar packages

Prerequisites:

1. CentOS 7

2. ZooKeeper cluster

3. Hadoop 2.7.3 cluster

4. HBase 2.0.0 cluster

5. Eclipse

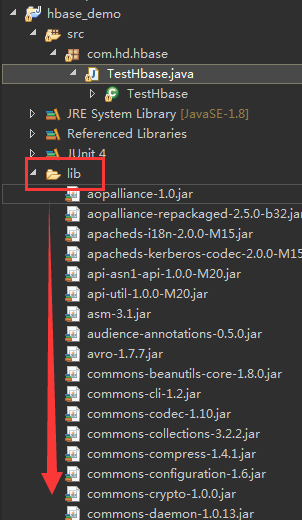

Create a Java project in Eclipse and add a new lib folder to it to hold the jar packages.

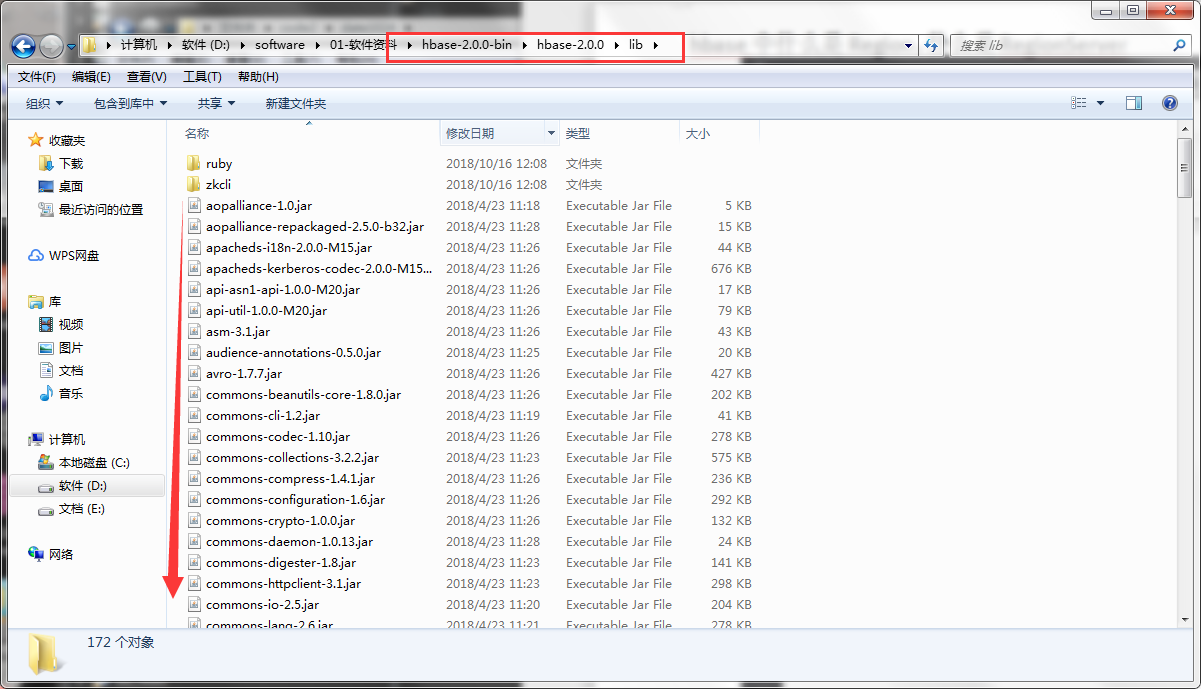

Import all the jar packages from the lib directory of the HBase installation into the project.

2. Testing

Next, create a package and, inside it, a TestHbase class:

    package com.hd.hbase;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    public class TestHbase {

        private Configuration conf = null;
        private Connection conn = null;
        private Admin admin = null;
        private Table table = null;

@Before

public void createConf() throws IOException{

//Solve exceptions, you can do without

System.setProperty("hadoop.home.dir","D:\\software\\01-Software information\\hadoop-common-2.2.0-bin-master");

conf = HBaseConfiguration.create();

//Setting up the zk cluster address, you need to modify the hosts file under windows

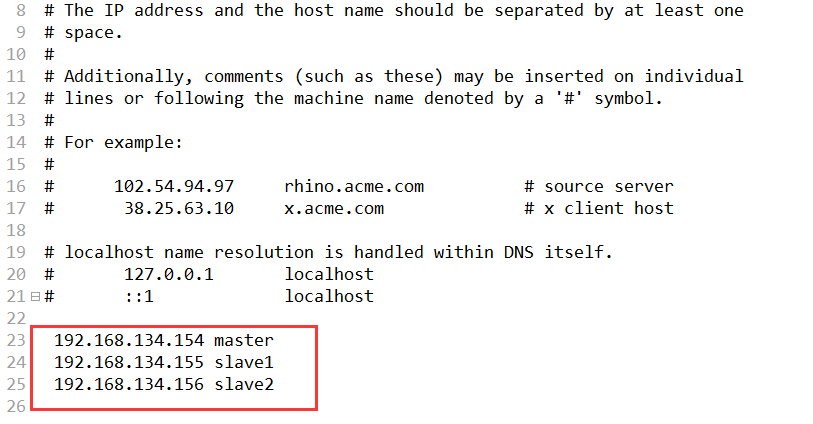

conf.set("hbase.zookeeper.quorum","master:2181,slave1:2181,slave2:2181");

//Connect

conn = ConnectionFactory.createConnection(conf);

}

        @Test
        public void createTable() throws IOException {
            // Get the table administration object
            admin = conn.getAdmin();
            // Define the table (HTableDescriptor and HColumnDescriptor still work in HBase 2.0.0 but are deprecated)
            HTableDescriptor hTableDescriptor = new HTableDescriptor(TableName.valueOf("person"));
            // Define the column family
            HColumnDescriptor hColumnDescriptor = new HColumnDescriptor("info");
            // Add the column family to the table
            hTableDescriptor.addFamily(hColumnDescriptor);
            // Create the table
            admin.createTable(hTableDescriptor);
        }

        @Test
        public void put() throws IOException {
            // Get the table object
            table = conn.getTable(TableName.valueOf("person"));
            // Create a Put for row key "p1"
            Put put = new Put(Bytes.toBytes("p1"));
            // Add the column info:name with the value "haha"
            put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("haha"));
            // Write the Put to the table
            table.put(put);
        }

        @Test
        public void get() throws IOException {
            // Get the table object
            table = conn.getTable(TableName.valueOf("person"));
            // Create a Get for row key "p1"
            Get get = new Get(Bytes.toBytes("p1"));
            // Restrict the Get to the info:name column
            get.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"));
            // Execute the Get and obtain the result
            Result result = table.get(get);
            // Extract the value from the result
            String valStr = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));
            System.out.println(valStr);
        }

        @Test
        public void scan() throws IOException {
            // Get the table object
            table = conn.getTable(TableName.valueOf("person"));
            // Create a Scan instance
            Scan scan = new Scan();
            // Restrict the scan to the info:name column
            scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"));
            // Run the scan; try-with-resources closes the scanner when done
            try (ResultScanner rss = table.getScanner(scan)) {
                // Iterate over the results and print each value
                for (Result result : rss) {
                    String valStr = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));
                    System.out.println(valStr);
                }
            }
        }

        @Test
        public void del() throws IOException {
            // Get the table object
            table = conn.getTable(TableName.valueOf("person"));
            // Create a Delete for row key "p1" (removes the whole row)
            Delete del = new Delete(Bytes.toBytes("p1"));
            // Execute the deletion
            table.delete(del);
        }

        @After
        public void close() throws IOException {
            // Release the admin, table, and connection objects
            if (admin != null) {
                admin.close();
            }
            if (table != null) {
                table.close();
            }
            if (conn != null) {
                conn.close();
            }
        }
    }
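HBase stores row keys, column names, and values as raw byte arrays, which is why every string in the tests is converted to bytes before it is passed to the client. The JDK-only sketch below (the class and method names are hypothetical, not part of HBase) shows that round trip with an explicit UTF-8 charset, the encoding that `Bytes.toBytes(String)` and `Bytes.toString(byte[])` use. `String.getBytes()` with no argument uses the platform default charset instead.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical illustration class; it does not depend on HBase.
class Utf8RoundTrip {

    // Encode a string as UTF-8 bytes, independent of the platform charset.
    static byte[] encode(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Decode UTF-8 bytes back to a string; a null array (for example a
    // missing cell) yields null instead of a NullPointerException.
    static String decode(byte[] bytes) {
        return bytes == null ? null : new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] rowKey = encode("p1");
        System.out.println(Arrays.toString(rowKey)); // prints [112, 49]
        System.out.println(decode(encode("haha")));  // prints haha
    }
}
```

Using one fixed encoding on both the write and the read side keeps row keys comparable across machines with different default charsets.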

Modifying the hosts file on Windows

Open the directory C:\Windows\System32\drivers\etc.

At the end of the hosts file, add the IP address and host name of each node in your HBase cluster.
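To confirm that the hosts entries took effect, one option is to check that every host name in the quorum string resolves from the client machine. The following is a minimal sketch using only the JDK. The class and method names are hypothetical, and the quorum string is the one used in the test class.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of HBase: checks that every host in a
// ZooKeeper quorum string can be resolved from this machine.
class QuorumHostCheck {

    // Splits "host1:2181,host2:2181" into ["host1", "host2"].
    static List<String> hostsOf(String quorum) {
        List<String> hosts = new ArrayList<>();
        for (String entry : quorum.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            int colon = trimmed.indexOf(':');
            hosts.add(colon < 0 ? trimmed : trimmed.substring(0, colon));
        }
        return hosts;
    }

    // Returns the hosts that the operating system cannot resolve.
    static List<String> unresolved(String quorum) {
        List<String> missing = new ArrayList<>();
        for (String host : hostsOf(quorum)) {
            try {
                InetAddress.getByName(host);
            } catch (UnknownHostException e) {
                missing.add(host);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String quorum = "master:2181,slave1:2181,slave2:2181";
        List<String> missing = unresolved(quorum);
        if (missing.isEmpty()) {
            System.out.println("All quorum hosts resolve.");
        } else {
            System.out.println("Add these hosts to the hosts file: " + missing);
        }
    }
}
```

If any host is reported as missing, the HBase client will most likely fail to connect until the corresponding line is added to the hosts file.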

Then double-click a method name to select it, right-click, and choose Run As > JUnit Test. Repeat this for each method to test them one at a time.

3. Exception handling

In the test class, the line

System.setProperty("hadoop.home.dir","D:\\software\\01-Software information\\hadoop-common-2.2.0-bin-master");

resolves the exception that the winutils.exe file cannot be found in the bin directory:

    2018-10-26 19:19:10,309 ERROR [main] util.Shell (Shell.java:getWinUtilsPath(400)) - Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
        at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:382)
        at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:397)
        at org.apache.hadoop.util.Shell.<clinit>(Shell.java:390)
        at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:80)

Even if this exception is left unhandled, the client can still connect to HBase and operate normally, but it is better to fix it. The bin directory of Hadoop 2.7.3 does not contain this file, so I found a Hadoop 2.2.0 build online that does. Link: https://pan.baidu.com/s/1koyq-8D5Z7u88DBtHGNe7g

Extraction code: 4ut9

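After unpacking, the archive contains only a bin directory. Pass the path of the unpacked folder (the one that contains bin) to System.setProperty(), because Hadoop looks for bin\winutils.exe under hadoop.home.dir.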
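Before setting the property, it can help to verify that the chosen folder really has the expected layout. The sketch below uses only the JDK. The class name is hypothetical, and the folder is taken from the first command-line argument rather than hard-coded.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: verifies the directory layout before setting hadoop.home.dir.
class WinutilsCheck {

    // True if <hadoopHome>/bin/winutils.exe exists as a regular file.
    static boolean hasWinutils(Path hadoopHome) {
        return Files.isRegularFile(hadoopHome.resolve("bin").resolve("winutils.exe"));
    }

    public static void main(String[] args) {
        // Pass the unpacked folder as the first argument; defaults to the current directory.
        Path home = Paths.get(args.length > 0 ? args[0] : ".").toAbsolutePath();
        if (hasWinutils(home)) {
            System.setProperty("hadoop.home.dir", home.toString());
            System.out.println("hadoop.home.dir set to " + home);
        } else {
            System.out.println("No bin/winutils.exe found under " + home);
        }
    }
}
```

If the check fails, the path most likely points at the bin directory itself or at a parent folder one level too high.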