In the last article I showed how to integrate Kafka with Spring Cloud Stream and run a demo. If this were only an article promoting Spring Cloud Stream, I could stop there. In practice, though, engineering is never simply a question of whether the technology works. Real development is full of small details, and many of them are pitfalls. In this article I will go through a few of them, using examples to show that the complexity of engineering usually shows up in the tedious practical steps.

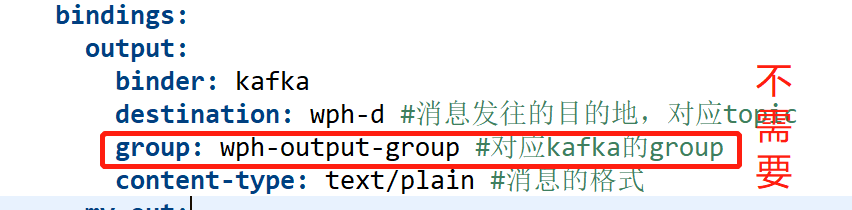

1. Group configuration

The `group` property does not need to be configured for the binding that sends messages: it applies to consumers only.

This is stated in the source code comments of

org.springframework.cloud.stream.config.BindingProperties

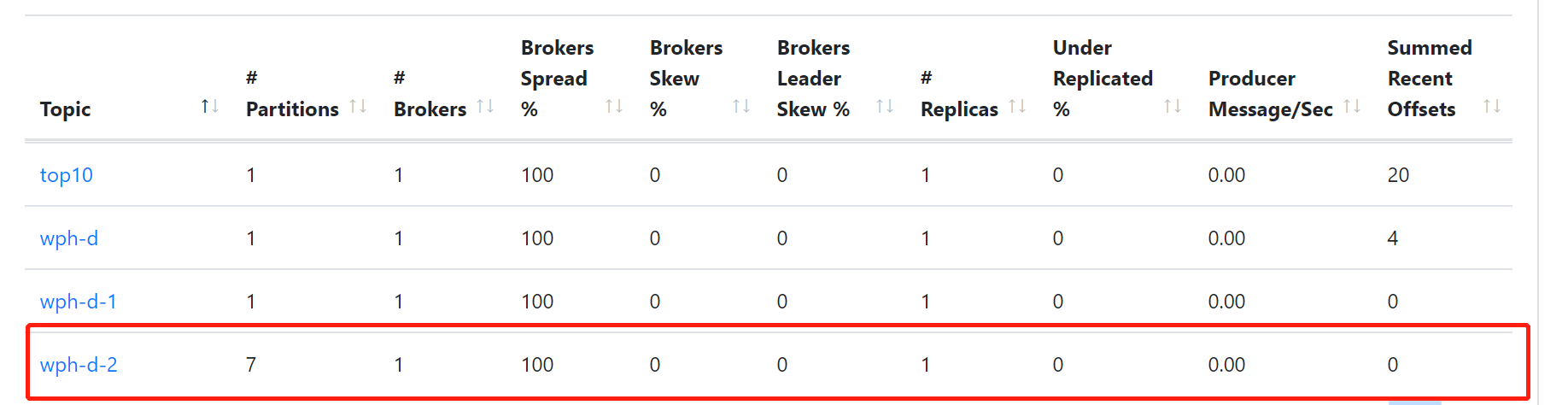
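To see why `group` only makes sense on the consuming side, here is a small plain-Java simulation (not Spring or Kafka code) of the Kafka consumer-group rule: every group receives every record once, and inside a group each partition is read by exactly one member. The class name, the group names and the simple `partition % members` assignment are assumptions for illustration; Kafka's real partition assignors are more sophisticated.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical simulation of Kafka consumer-group delivery (not Spring or Kafka code).
 * Every group sees every record; inside a group each partition has exactly one owner.
 */
public class GroupDeliverySketch {

    /** Returns, per group, how many records each member receives. */
    static Map<String, Map<String, Integer>> deliver(int partitions, int recordsPerPartition,
                                                     Map<String, List<String>> groups) {
        Map<String, Map<String, Integer>> result = new TreeMap<>();
        for (Map.Entry<String, List<String>> group : groups.entrySet()) {
            List<String> members = group.getValue();
            Map<String, Integer> counts = new TreeMap<>();
            for (String member : members) {
                counts.put(member, 0);
            }
            for (int p = 0; p < partitions; p++) {
                // Simplified assignment (assumption): partition p goes to member p % size
                String owner = members.get(p % members.size());
                counts.merge(owner, recordsPerPartition, Integer::sum);
            }
            result.put(group.getKey(), counts);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<String>> groups = new TreeMap<>();
        groups.put("billing", List.of("billing-1", "billing-2")); // one group, two instances
        groups.put("audit", List.of("audit-1"));                  // a second, independent group
        System.out.println(deliver(4, 10, groups));
        // {audit={audit-1=40}, billing={billing-1=20, billing-2=20}}
    }
}
```

With 4 partitions of 10 records each, the two `billing` instances split the load 20/20 while the single `audit` member gets all 40: adding a group multiplies delivery, adding a member to a group divides it. A producer takes part in neither, which is why the output binding has no use for `group`.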

2. Change the number of partitions of a topic

The configuration file is as follows:

```yaml
bindings:
  output:
    binder: kafka
    destination: wph-d-2        # Destination the message is sent to, i.e. the topic
    content-type: text/plain    # Message format
    producer:
      partitionCount: 7
```

`partitionCount` sets the number of partitions; the default value is 1. If the topic already exists, changing `partitionCount` does not modify it, and when the topic has fewer partitions than requested (5 instead of 7 here), the binder reports the following error:

```
Caused by: org.springframework.cloud.stream.provisioning.ProvisioningException: The number of expected partitions was: 7, but 5 have been found instead.Consider either increasing the partition count of the topic or enabling `autoAddPartitions`
    at org.springframework.cloud.stream.binder.kafka.provisioning.KafkaTopicProvisioner.createTopicAndPartitions(KafkaTopicProvisioner.java:384) ~[spring-cloud-stream-binder-kafka-core-3.0.0.M4.jar:3.0.0.M4]
    at org.springframework.cloud.stream.binder.kafka.provisioning.KafkaTopicProvisioner.createTopicIfNecessary(KafkaTopicProvisioner.java:325) ~[spring-cloud-stream-binder-kafka-core-3.0.0.M4.jar:3.0.0.M4]
    at org.springframework.cloud.stream.binder.kafka.provisioning.KafkaTopicProvisioner.createTopic(KafkaTopicProvisioner.java:302) ~[spring-cloud-stream-binder-kafka-core-3.0.0.M4.jar:3.0.0.M4]
    ... 14 common frames omitted
```

As the error message suggests, enable `autoAddPartitions` on the Kafka binder:

```yaml
kafka:
  binder:
    brokers:                  # Address of the Kafka broker(s)
      - localhost:9092
    autoAddPartitions: true
```

After a restart, you can see that the number of partitions has been changed to 7.
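The decision the binder makes at startup can be summarised as a tiny function. This is a hypothetical model derived from the error message above, not the code of `KafkaTopicProvisioner`; in particular, the assumption that a topic which already has at least `partitionCount` partitions is left unchanged, and the fact that every other binder setting is ignored, are simplifications.

```java
/**
 * Hypothetical model of the partition check behind the ProvisioningException above.
 * Not the real KafkaTopicProvisioner; other binder settings are ignored.
 */
public class PartitionCheckSketch {

    /** Returns the partition count the topic ends up with, or throws like the binder did. */
    static int provision(int existingPartitions, int partitionCount, boolean autoAddPartitions) {
        if (existingPartitions >= partitionCount) {
            // Assumption: enough partitions already, so the topic is left as it is
            return existingPartitions;
        }
        if (autoAddPartitions) {
            // autoAddPartitions: true lets the binder grow the topic to partitionCount
            return partitionCount;
        }
        throw new IllegalStateException("The number of expected partitions was: " + partitionCount
                + ", but " + existingPartitions + " have been found instead.");
    }

    public static void main(String[] args) {
        System.out.println(provision(5, 7, true)); // 7: the situation after the restart
        try {
            provision(5, 7, false);                // the original configuration
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```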

The `autoAddPartitions` property is defined in org.springframework.cloud.stream.binder.kafka.properties.KafkaBinderConfigurationProperties

The `partitionCount` property is defined in org.springframework.cloud.stream.binder.ProducerProperties

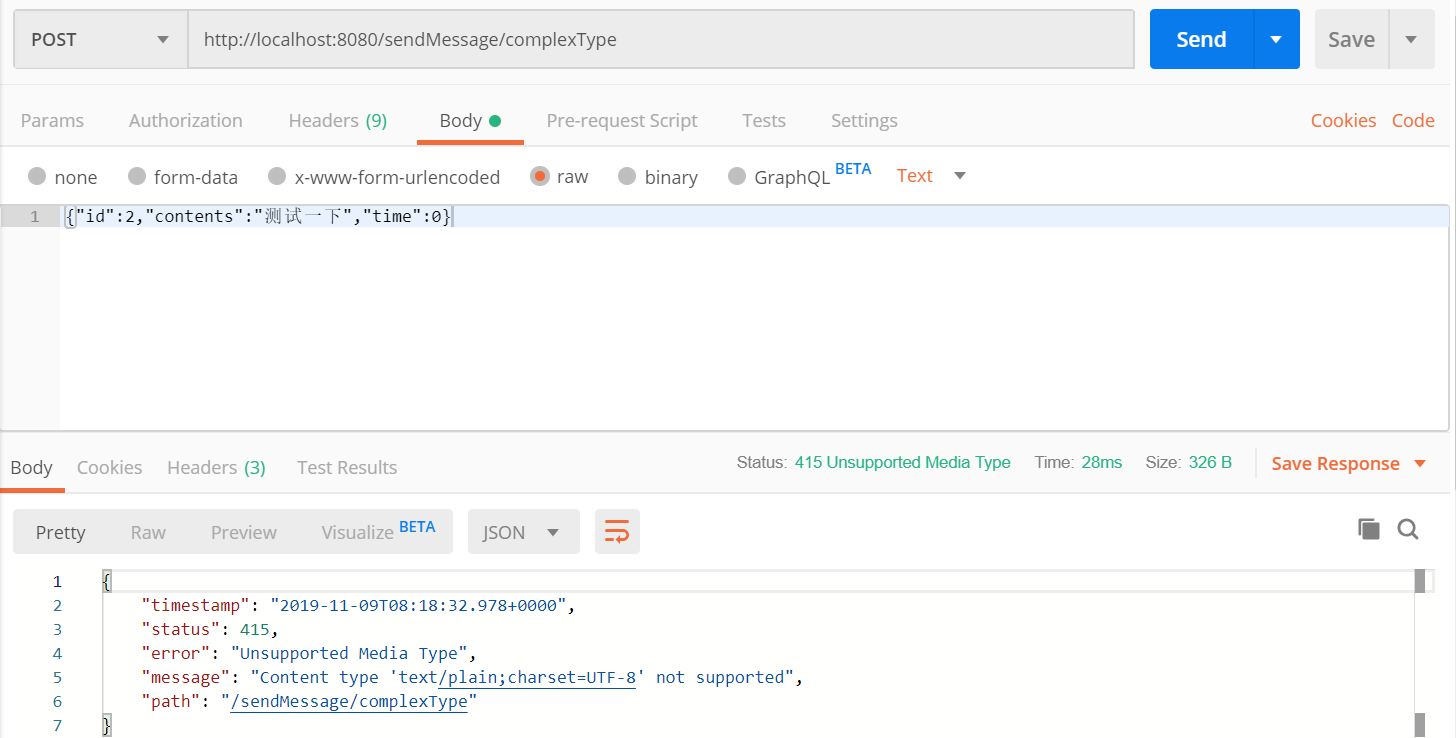

3. Error when sending JSON

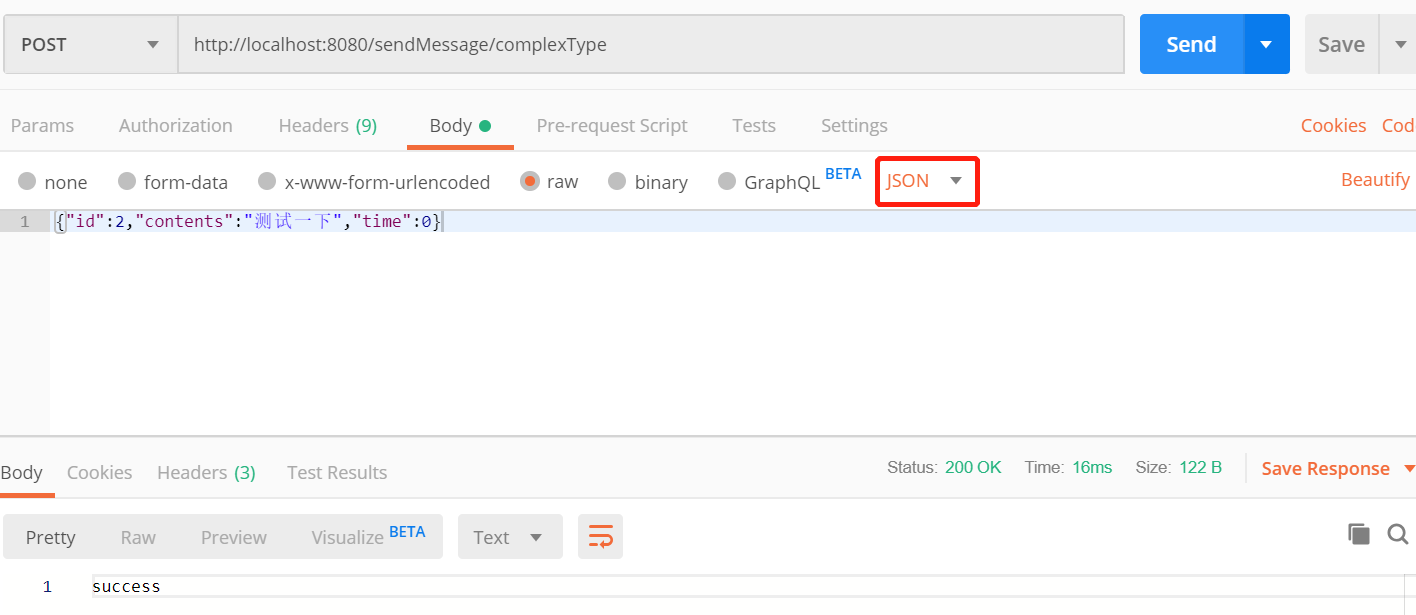

Sending a request to /sendMessage/complexType with Postman fails.

The error message on the server side is:

Resolved [org.springframework.web.HttpMediaTypeNotSupportedException: Content type 'text/plain;charset=UTF-8' not supported]

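The cause is the format of the request body: Postman sent it as `text/plain`, but the endpoint expects JSON. Change the body type in Postman to JSON so that the request is sent with `Content-Type: application/json`.

After that, the message is sent successfully.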
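If you would rather reproduce the request from code than from Postman, the JDK's `java.net.http` client (Java 11+) makes the required header explicit. The host and port (`localhost:8080`) and the JSON field names are assumptions, since the article shows neither the server port nor the fields of `ChatMessage`; the sketch only builds the request, and the line that would send it is left as a comment.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class ComplexTypeRequestSketch {

    // Assumption: the demo application listens on localhost:8080
    static final String ENDPOINT = "http://localhost:8080/sendMessage/complexType";

    /** Builds a POST whose Content-Type matches what the @RequestBody endpoint accepts. */
    static HttpRequest buildRequest(String json) {
        return HttpRequest.newBuilder()
                .uri(URI.create(ENDPOINT))
                // Sending text/plain here is what triggered HttpMediaTypeNotSupportedException
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical ChatMessage fields: adjust to the real class
        String json = "{\"name\":\"wph\",\"message\":\"hello\"}";
        HttpRequest request = buildRequest(json);
        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().firstValue("Content-Type").orElse("none"));
        // To actually send it (needs java.net.http.HttpClient and HttpResponse):
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
    }
}
```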

4. Sending JSON correctly and converting it to an object

To transmit JSON, set the content type of the sending end to `application/json` (strictly speaking this line can be omitted, because the default content type is already `application/json`, as discussed below):

```yaml
bindings:
  output:
    binder: kafka
    destination: wph-d-2            # Destination the message is sent to, i.e. the topic
    content-type: application/json  # Message format
```

Then send the message through the producer:

```java
@RequestMapping(value = "/sendMessage/complexType", method = RequestMethod.POST)
public String publishMessageComplextType(@RequestBody ChatMessage payload) {
    logger.info(payload.toString());
    producer.getMysource().output().send(
            MessageBuilder.withPayload(payload).setHeader("type", "chatMessage").build());
    return "success";
}
```

Note that each field of `ChatMessage` must have a getter or a setter; either one is enough. Otherwise, when the JSON is converted into an object, that field is not populated.
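The getter-or-setter rule can be made visible with the JDK's `java.beans.Introspector`, which only reports a field as a bean property when it has a getter or a setter. This is a stand-in for illustration: Jackson, which performs the JSON conversion here, has its own introspection (annotations, visibility settings), but it starts from the same bean-property idea. The fields of this `ChatMessage` are hypothetical.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.util.ArrayList;
import java.util.List;

public class BeanPropertySketch {

    // Hypothetical ChatMessage: the article does not show its real fields
    public static class ChatMessage {
        private String name;    // getter only
        private String message; // setter only
        private long time;      // no accessor at all

        public String getName() { return name; }

        public void setMessage(String message) { this.message = message; }
    }

    /** Lists the bean properties the JDK introspector can see (getter or setter present). */
    static List<String> beanProperties(Class<?> type) {
        try {
            // Stop at Object.class so the inherited getClass() is not reported as "class"
            BeanInfo info = Introspector.getBeanInfo(type, Object.class);
            List<String> names = new ArrayList<>();
            for (PropertyDescriptor pd : info.getPropertyDescriptors()) {
                names.add(pd.getName());
            }
            return names;
        } catch (IntrospectionException e) {
            throw new IllegalArgumentException("Cannot introspect " + type, e);
        }
    }

    public static void main(String[] args) {
        // "name" and "message" are listed; "time" is not
        System.out.println(beanProperties(ChatMessage.class));
    }
}
```

`time` has neither accessor, so it never shows up as a property, which matches the observation that such a field does not receive a value.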

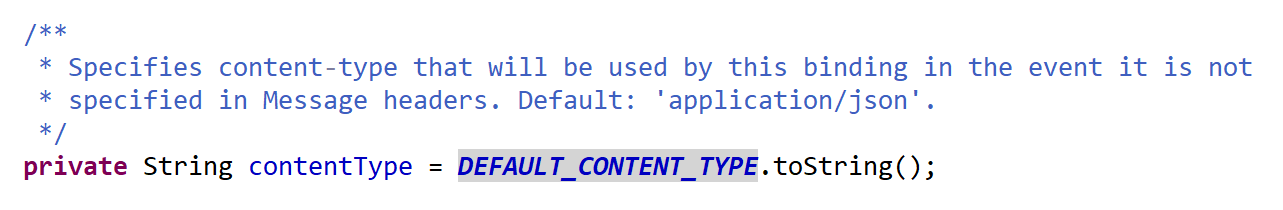

On the subscribing side, `content-type` does not need to be configured in application.yml, because the default value is `application/json`. This can be seen in the comments of the org.springframework.cloud.stream.config.BindingProperties class.
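The fallback amounts to "use the configured `content-type`, otherwise `application/json`". A minimal sketch, assuming properties are read from a flat map using only the kebab-case key `spring.cloud.stream.bindings.<binding>.content-type`; Spring's relaxed binding also accepts `contentType` and nested YAML, which this hypothetical helper ignores.

```java
import java.util.Map;

public class ContentTypeDefaultSketch {

    // Default content type of a binding when none is configured
    static final String DEFAULT_CONTENT_TYPE = "application/json";

    /** Looks up spring.cloud.stream.bindings.<binding>.content-type, falling back to the default. */
    static String effectiveContentType(Map<String, String> props, String binding) {
        String value = props.get("spring.cloud.stream.bindings." + binding + ".content-type");
        return (value == null || value.isBlank()) ? DEFAULT_CONTENT_TYPE : value;
    }

    public static void main(String[] args) {
        Map<String, String> props = Map.of(
                "spring.cloud.stream.bindings.output.content-type", "text/plain");
        System.out.println(effectiveContentType(props, "output")); // text/plain
        System.out.println(effectiveContentType(props, "input"));  // application/json
    }
}
```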

As on the sending side, each field of `ChatMessage` needs a getter or a setter; one of the two is enough.

The listener that receives the JSON and converts it into a `ChatMessage` object is as follows:

```java
@StreamListener(target = Sink.INPUT, condition = "headers['type']=='chatMessage'")
public void handle(ChatMessage message) {
    logger.info(message.toString());
}
```
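The `condition` on `@StreamListener` filters on the `type` header that the producer set with `setHeader("type", "chatMessage")`. The routing idea can be sketched in plain Java as a list of (predicate, handler) pairs. This is a hypothetical analogue of the SpEL condition, not how Spring evaluates it, and the class and record names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Predicate;

/** Hypothetical plain-Java analogue of condition = "headers['type']=='chatMessage'". */
public class HeaderRoutingSketch {

    record Message(Map<String, String> headers, String payload) {}

    record Route(Predicate<Map<String, String>> condition, Consumer<Message> handler) {}

    private final List<Route> routes = new ArrayList<>();

    void register(Predicate<Map<String, String>> condition, Consumer<Message> handler) {
        routes.add(new Route(condition, handler));
    }

    /** Passes the message to every handler whose header condition matches; returns how many ran. */
    int dispatch(Message message) {
        int invoked = 0;
        for (Route route : routes) {
            if (route.condition().test(message.headers())) {
                route.handler().accept(message);
                invoked++;
            }
        }
        return invoked;
    }

    public static void main(String[] args) {
        HeaderRoutingSketch router = new HeaderRoutingSketch();
        List<String> handled = new ArrayList<>();
        router.register(h -> "chatMessage".equals(h.get("type")), m -> handled.add(m.payload()));

        router.dispatch(new Message(Map.of("type", "chatMessage"), "hello")); // matches
        router.dispatch(new Message(Map.of("type", "other"), "ignored"));     // no handler runs
        System.out.println(handled); // [hello]
    }
}
```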

Pitfall: if the sending end's content type is set to `text/plain` and the subscribing end's content type is `application/json`, the subscriber reports this error:

Caused by: java.lang.IllegalStateException: argument type mismatch Endpoint [com.wphmoon.kscsclient.Consumer]

In the reverse case, with `application/json` on the sending end and `text/plain` on the subscribing end, I tested it: the message is received and converted into a `ChatMessage` object without any problem.