Table of Contents

- What is nginx?

- nginx application scenario

- Installing Nginx in Windows

- Advantages and disadvantages of nginx

- nginx implements reverse proxy

- What is Load Balancing

- Load Balancing Strategy

- nginx for load balancing

- nginx implements specified weights

- Downtime fault tolerance of nginx

- nginx solves cross-domain problems

- nginx anti-theft chain (hotlink protection)

What is nginx?

Nginx is a high-performance HTTP server and reverse proxy server, as well as a mail (IMAP/POP3) proxy server. It was developed by the Russian programmer Igor Sysoev. According to official tests, nginx can support 50,000 concurrent connections while consuming very little CPU, memory, and other resources and running very stably, which is why many well-known companies now use it.

nginx application scenario

1. HTTP server. Nginx can provide HTTP service on its own and can act as a static web server.

2. Virtual host. Multiple websites can be hosted on a single server, for example the virtual hosts used by personal websites.

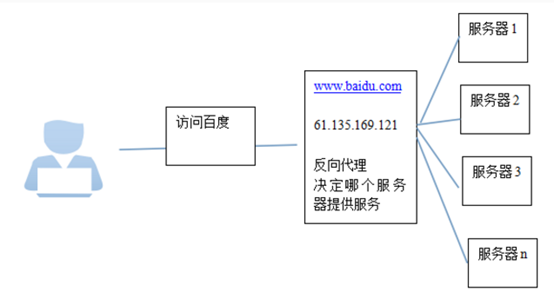

3. Reverse proxy and load balancing. When traffic grows to the point where a single server can no longer satisfy user requests, a cluster of servers is needed, with nginx in front as the reverse proxy. The servers then share the load evenly, so that no server goes down from overload while another sits idle.
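The first two scenarios can be sketched in one fragment of the http block. This is only a minimal sketch, not a complete nginx.conf: the host names site-a.example.com and site-b.example.com and the directories html/site-a and html/site-b are hypothetical and would need to be replaced with real values.

```nginx
http {
    include mime.types;

    # Virtual host 1: static files for a hypothetical site-a.example.com
    server {
        listen      80;
        server_name site-a.example.com;

        location / {
            root  html/site-a;            # assumed directory, relative to the nginx prefix
            index index.html index.htm;
        }
    }

    # Virtual host 2: a second site on the same machine and port,
    # selected by the Host header of the request
    server {
        listen      80;
        server_name site-b.example.com;

        location / {
            root  html/site-b;            # assumed directory
            index index.html index.htm;
        }
    }
}
```

Both server blocks listen on port 80; nginx picks one by comparing the request's Host header with each server_name.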

Installing Nginx in Windows

Software download link

Link: https://pan.baidu.com/s/1XN3oVTXXiUMFgwqUrRoilg

Extraction code: b6cy


Unzip: nginx-windows

Double-click: nginx.exe

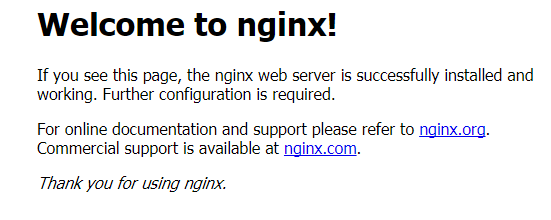

Open the browser and enter localhost in the address bar.

If the nginx welcome page is displayed, the installation was successful.

Advantages and disadvantages of nginx

It uses little memory, handles a large number of concurrent connections, and responds quickly.

It can act as an HTTP server, virtual host, reverse proxy, and load balancer.

Its configuration is simple.

As a reverse proxy, it can hide the real server's IP address.

nginx implements reverse proxy

    http {
        include       mime.types;
        default_type  application/octet-stream;

        #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
        #                  '$status $body_bytes_sent "$http_referer" '
        #                  '"$http_user_agent" "$http_x_forwarded_for"';

        #access_log  logs/access.log  main;

        sendfile        on;
        #tcp_nopush     on;

        #keepalive_timeout  0;
        keepalive_timeout  65;

        #gzip  on;

        server {
            listen       80;          # port number
            server_name  localhost;   # together: requests to localhost:80 are handled by the location below

            #charset koi8-r;

            #access_log  logs/host.access.log  main;

            location / {
                proxy_pass https://www.baidu.com;   # address to forward to; note the trailing semicolon
                index  index.html index.htm;        # default index files
            }

            #error_page  404              /404.html;

            # redirect server error pages to the static page /50x.html
            #
            error_page   500 502 503 504  /50x.html;
            location = /50x.html {
                root   html;
            }

            # proxy the PHP scripts to Apache listening on 127.0.0.1:80
            #
            #location ~ \.php$ {
            #    proxy_pass   http://127.0.0.1;
            #}

            # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
            #
            #location ~ \.php$ {
            #    root           html;
            #    fastcgi_pass   127.0.0.1:9000;
            #    fastcgi_index  index.php;
            #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
            #    include        fastcgi_params;
            #}

            # deny access to .htaccess files, if Apache's document root
            # concurs with nginx's one
            #
            #location ~ /\.ht {
            #    deny  all;
            #}
        }
    }
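When requests are proxied, the back-end sees nginx as the client. A common addition, shown here only as a sketch, is to forward the original host and client address in request headers. The back-end address 127.0.0.1:8080 is an assumption for illustration and does not come from the configuration above.

```nginx
server {
    listen      80;
    server_name localhost;

    location / {
        proxy_pass http://127.0.0.1:8080;   # hypothetical back-end

        # Pass the original request details on to the back-end
        proxy_set_header Host            $host;
        proxy_set_header X-Real-IP       $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

The back-end application still has to read these headers itself; nginx only sets them on the proxied request.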

What is Load Balancing

Load balancing builds on the existing network structure. It provides an inexpensive, effective, and transparent way to expand the bandwidth of network devices and servers, increase throughput, strengthen network data processing capacity, and improve the flexibility and availability of the network.

Load balancing (Load Balance in English) means distributing work across multiple operating units, such as web servers, FTP servers, key enterprise application servers, and other mission-critical servers, so that they complete the work together.

Load Balancing Strategy

1. Round robin (default)

Each request is assigned in turn, in order of arrival, to a different back-end server. If a back-end server goes down, it is automatically taken out of rotation.

    upstream backserver {
        server 192.168.0.14;
        server 192.168.0.15;
    }

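2. Specified weight (weight)

The weight sets the polling probability: the share of requests a server receives is proportional to its weight. This is useful when the back-end servers differ in performance. (With equal weights, as in this example, requests are split evenly.)

    upstream backserver {
        server 192.168.0.14 weight=10;
        server 192.168.0.15 weight=10;
    }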

3. IP binding (ip_hash)

Each request is assigned according to a hash of the client's IP address, so a given visitor keeps reaching the same back-end server as long as it is available, which solves the session problem.

    upstream backserver {
        ip_hash;
        server 192.168.0.14:88;
        server 192.168.0.15:80;
    }
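One practical detail about ip_hash, sketched here under the assumption that 192.168.0.15 has to be taken out for maintenance: the nginx documentation recommends marking such a server with the down parameter instead of deleting its line, so that the existing hashing of client IP addresses is preserved.

```nginx
upstream backserver {
    ip_hash;
    server 192.168.0.14:88;
    server 192.168.0.15:80 down;   # temporarily out of service (assumed for illustration)
}
```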

nginx for load balancing

The upstream block is where the addresses of the load-balanced servers are configured.

The following configuration load-balances requests to localhost:80 across the servers on ports 8080 and 8081.

    upstream backserver {
        server 127.0.0.1:8080;
        server 127.0.0.1:8081;
    }

    server {
        listen       80;
        server_name  localhost;

        location / {
            proxy_pass http://backserver;   # must match the name of the upstream block
            index  index.html index.htm;
        }
    }

Because nginx uses the round-robin strategy by default, successive requests are served by the two servers in turn: 8080 -> 8081 -> 8080 -> ...

nginx implements specified weights

Advantage of assigning weights: the more capable server does more of the work.

    upstream backserver {
        server 127.0.0.1:8080 weight=1;
        server 127.0.0.1:8081 weight=3;
    }

    server {
        listen       80;
        server_name  www.itmayiedu.com;

        location / {
            proxy_pass http://backserver;
            index  index.html index.htm;
        }
    }

Requests are distributed to the 8080 and 8081 servers in a ratio of 1 to 3.

Downtime fault tolerance of nginx

We have two back-end servers, so it can happen that the 8080 server is down when a request is sent to it. To keep the service available, nginx can hand the request to the 8081 server instead.

It is like the architect being off sick today, so you end up responsible for all of the project's problems.

    upstream backserver {
        server 127.0.0.1:8080 weight=1;
        server 127.0.0.1:8081 weight=3;
    }

    server {
        listen       80;
        server_name  www.itmayiedu.com;

        location / {
            proxy_pass http://backserver;
            index  index.html index.htm;
            proxy_connect_timeout 1;   # timeout for connecting to the back-end (seconds)
            proxy_send_timeout    1;   # timeout for sending the request to the back-end (seconds)
            proxy_read_timeout    1;   # timeout for reading the response from the back-end (seconds)
        }
    }

Now, with the 8080 server down, every request is served by the 8081 server, no matter how many times you access the site.
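The failover behaviour can also be tuned per server. The following is a sketch rather than a recommended setting: max_fails, fail_timeout, and proxy_next_upstream are standard nginx parameters, but the values 2 and 10s and the list of retry conditions are assumptions chosen for illustration.

```nginx
upstream backserver {
    # After 2 failed attempts within 10s, treat the server as unavailable for 10s
    server 127.0.0.1:8080 weight=1 max_fails=2 fail_timeout=10s;
    server 127.0.0.1:8081 weight=3 max_fails=2 fail_timeout=10s;
}

server {
    listen      80;
    server_name www.itmayiedu.com;

    location / {
        proxy_pass http://backserver;

        proxy_connect_timeout 1s;
        proxy_send_timeout    1s;
        proxy_read_timeout    1s;

        # Conditions under which the request is passed to the next server
        proxy_next_upstream error timeout http_502 http_503 http_504;
    }
}
```

By default nginx already retries the next server on error and timeout; listing the 5xx codes extends the retry to those responses as well.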

nginx solves cross-domain problems

What is a cross-domain problem

When I was at work, I overheard an interview next door in which this question was asked. The candidate did not seem to understand the cross-domain problem very well and ended up asking the interviewer to explain it.

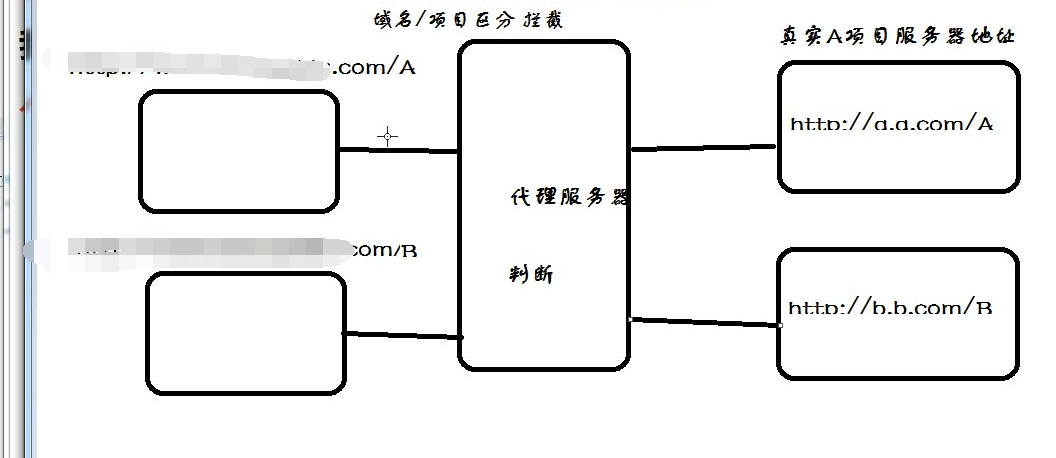

Cross-domain in brief: page a wants to fetch a resource from page b. If the protocol, domain name, port, or subdomain of the two pages differs, the access is cross-domain. For security reasons, browsers generally restrict cross-domain access, that is, they do not allow cross-domain requests for resources. Note: the cross-domain restriction is enforced by the browser. It is important to understand this!

For example, the two sites http://a.a.com:81/A and http://b.b.com:81/B are cross-domain by the definition above. How can nginx be used to solve the problem?

Suppose the requests are sent to www.baidu.com/A and www.baidu.com/B instead.

    server {
        listen       80;
        server_name  www.baidu.com;

        location /A {   # note the /A path here
            proxy_pass http://a.a.com:81/A;
            index  index.html index.htm;
        }

        location /B {
            proxy_pass http://b.b.com:81/B;
            index  index.html index.htm;
        }
    }

A request to www.baidu.com/A reaches http://a.a.com:81/A.

A request to www.baidu.com/B reaches http://b.b.com:81/B.

How, then, can a page at www.baidu.com/A access http://b.b.com:81/B?

The answer is simple: although http://a.a.com:81/A and http://b.b.com:81/B are cross-domain with respect to each other, www.baidu.com/A and www.baidu.com/B are not, because they share the same protocol, domain name, and port.

So a page served from www.baidu.com/A can request www.baidu.com/B directly, which solves the cross-domain problem.
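A different approach, mentioned here only as a hedged alternative to the proxy solution, is to let the server that hosts the resource tell the browser that cross-domain access is allowed. The sketch below assumes that b.b.com is itself served by an nginx instance you control and that its content lives under html; neither assumption comes from the article.

```nginx
server {
    listen      81;
    server_name b.b.com;

    location /B {
        # Tell the browser that pages from http://a.a.com:81 may read these responses
        add_header Access-Control-Allow-Origin "http://a.a.com:81";

        root html;   # assumed content location
    }
}
```

This covers simple GET requests only; requests that trigger a browser preflight would need further handling that is not shown here.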

nginx anti-theft chain (hotlink protection)

Hotlink protection (the "anti-theft chain") protects website resources such as videos and pictures from being stolen. Typically, someone opens the browser's developer tools with F12, finds the direct link to a resource, and uses it on their own site. Preventing this is what hotlink protection is for.

For example, an image in my project could be hotlinked directly with the tag <img src="http://www.baidu.com/iamg.jpg">.

We only need to add the following to the nginx configuration:

    location ~ .*\.(jpg|jpeg|JPG|png|gif|icon)$ {
        # Requests for files with these suffixes get a 403 unless the Referer is valid
        valid_referers blocked http://www.baidu.com www.baidu.com;
        if ($invalid_referer) {
            return 403;
        }
    }
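A variant of the same rule is sketched below for comparison. It is an illustration, not the author's configuration: adding none and the wildcard *.baidu.com, and matching suffixes case-insensitively with ~*, are assumptions about what a site might want.

```nginx
location ~* \.(jpg|jpeg|png|gif|ico)$ {
    # none:    requests without a Referer header (for example, the URL typed directly)
    # blocked: Referer present but stripped by a proxy or firewall
    valid_referers none blocked www.baidu.com *.baidu.com;

    if ($invalid_referer) {
        return 403;
    }
}
```

Without none, a request that carries no Referer header at all is treated as invalid and also receives 403.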