Preface

The palest ink is better than the best memory. I recently had some time to organize what I have learned, and I will publish a series of summaries, starting with audio and video, an area I am less familiar with. 2016 was called the "first year of live streaming", so the technology is hardly new, but I only started learning it recently. If you spot any mistakes, please point them out. This article covers only part of live-streaming technology and is not finished yet.

Overview of Technology


Live Streaming Technology

- In general, live-streaming technology is divided into capture, pre-processing, encoding, transmission, decoding and rendering.


Flow chart example:

(Figure: live-streaming process flow chart)

Audio and Video Capture

Introduction to Capture

Capturing audio and video is the first stage of the live-streaming architecture and the source of the live video. Video capture is also used in many other scenarios, such as QR code scanning. Audio and video capture has two parts:

- Video capture

- Audio capture

In iOS development, audio and video can be captured together. The capture APIs are wrapped in the AVFoundation framework and are easy to use.

Capture steps

- Import the AVFoundation framework

- Create a capture session

- The session connects the input sources to the outputs

- Input sources: camera and microphone

- Outputs: deliver the captured audio and video data

- Session: connects the inputs to the outputs

- Configure the video input and output

- AVCaptureDeviceInput: input from the camera (or microphone)

- AVCaptureVideoDataOutput: a delegate can be set to process the data coming from the input, and options such as discarding late frames can be configured

- Add the inputs and outputs to the session

Capture code

- Create a custom CCVideoCapture class (a subclass of NSObject) to handle audio and video capture

- The CCVideoCapture code is as follows:

//
//  CCVideoCapture.m
//  01. Video capture
//
//  Created by zerocc on 2017/3/29.
//  Copyright 2017 zerocc. All rights reserved.
//

#import "CCVideoCapture.h"
#import <AVFoundation/AVFoundation.h>

@interface CCVideoCapture () <AVCaptureVideoDataOutputSampleBufferDelegate, AVCaptureAudioDataOutputSampleBufferDelegate>
@property (nonatomic, strong) AVCaptureSession *session;
@property (nonatomic, strong) AVCaptureVideoPreviewLayer *layer;
@property (nonatomic, strong) AVCaptureConnection *videoConnection;
@end

@implementation CCVideoCapture

- (void)startCapturing:(UIView *)preView {
    // =============== Capturing video ===============
    // 1. Create the session
    AVCaptureSession *session = [[AVCaptureSession alloc] init];
    self.session = session;
    // Batch the configuration below; it takes effect on commitConfiguration
    [session beginConfiguration];
    session.sessionPreset = AVCaptureSessionPreset1280x720;

    // 2. Set up the audio and video inputs
    NSError *error = nil;
    AVCaptureDevice *videoDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    AVCaptureDeviceInput *videoInput = [[AVCaptureDeviceInput alloc] initWithDevice:videoDevice error:&error];
    if (videoInput && [session canAddInput:videoInput]) {
        [session addInput:videoInput];
    } else {
        NSLog(@"Cannot add video input: %@", error);
    }
    AVCaptureDevice *audioDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
    AVCaptureDeviceInput *audioInput = [[AVCaptureDeviceInput alloc] initWithDevice:audioDevice error:&error];
    if (audioInput && [session canAddInput:audioInput]) {
        [session addInput:audioInput];
    } else {
        NSLog(@"Cannot add audio input: %@", error);
    }

    // 3. Set up the audio and video outputs (each delivers data on its own serial queue)
    AVCaptureVideoDataOutput *videoOutput = [[AVCaptureVideoDataOutput alloc] init];
    dispatch_queue_t videoQueue = dispatch_queue_create("Video Capture Queue", DISPATCH_QUEUE_SERIAL);
    [videoOutput setSampleBufferDelegate:self queue:videoQueue];
    // Drop frames that arrive while the delegate is still busy with the previous one
    [videoOutput setAlwaysDiscardsLateVideoFrames:YES];
    if ([session canAddOutput:videoOutput]) {
        [session addOutput:videoOutput];
    }
    AVCaptureAudioDataOutput *audioOutput = [[AVCaptureAudioDataOutput alloc] init];
    dispatch_queue_t audioQueue = dispatch_queue_create("Audio Capture Queue", DISPATCH_QUEUE_SERIAL);
    [audioOutput setSampleBufferDelegate:self queue:audioQueue];
    if ([session canAddOutput:audioOutput]) {
        [session addOutput:audioOutput];
    }

    // 4. Keep the video connection so the delegate can tell video data from audio data.
    //    The connection only exists once the output has been added to the session;
    //    its default orientation is landscape, so switch it to portrait.
    AVCaptureConnection *videoConnection = [videoOutput connectionWithMediaType:AVMediaTypeVideo];
    self.videoConnection = videoConnection;
    if (videoConnection.isVideoOrientationSupported) {
        videoConnection.videoOrientation = AVCaptureVideoOrientationPortrait;
    } else {
        NSLog(@"Setting the orientation is not supported");
    }
    [session commitConfiguration];

    // 5. Add the preview layer
    AVCaptureVideoPreviewLayer *layer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:session];
    self.layer = layer;
    layer.frame = preView.bounds;
    [preView.layer insertSublayer:layer atIndex:0];

    // 6. Start capturing
    [session startRunning];
}

// Called when a video frame is dropped (e.g. because of alwaysDiscardsLateVideoFrames)
- (void)captureOutput:(AVCaptureOutput *)captureOutput didDropSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection {
}

// Called on videoQueue / audioQueue for every captured sample buffer
- (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection {
    if (self.videoConnection == connection) {
        NSLog(@"Acquisition of video data");
    } else {
        NSLog(@"Acquisition of audio data");
    }
}

- (void)stopCapturing {
    [self.session stopRunning];
    [self.layer removeFromSuperlayer];
}

@end

Audio and Video Coding

Introduction to Audio and Video Compression Coding

- Uncompressed video is huge: it is cumbersome to store, let alone to transmit over a network.

- Encoding compresses audio and video data to reduce its volume, which makes pushing streams (upload), pulling streams (playback) and storage far more efficient.

- Take video recording as an example: how much space does one second of raw (unencoded) video need?

- Video needs at least 16 frames per second to play without stuttering (30 FPS is typical in development)

- Assume a resolution of 1280 × 720

- With RGB, each pixel takes 3 × 8 = 24 bits

- Result: (1280 × 720 × 30 × 24) bits / (8 × 1024 × 1024) ≈ 79 MB per second

- How much space does one second of audio take?

- Sampling rate: 44.1 kHz

- Bits per sample (quantization): 16

- Channels: 2 (stereo)

- The audio bit rate is sampling rate × bits per sample × channels = 44,100 × 16 × 2 = 1,411,200 bit/s, so one second of audio is about 1.4 Mbit (176,400 bytes, roughly 172 KB).

- Redundant information in audio and video

- Raw video contains redundant information such as spatial, temporal and visual redundancy. Removing it greatly reduces the amount of video data and makes the video easier to store and transmit. This process of removing redundancy is called video compression coding.

- Digital audio signals contain redundancy that human perception can ignore, such as time-domain, frequency-domain and auditory redundancy.
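The two size estimates above are simple products. The sketch below, in plain C (it also compiles inside an Objective-C file), reproduces them; the function names are hypothetical helpers, not part of any framework.

```c
#include <stdint.h>

/* Bits per second of uncompressed video: width x height pixels,
   bits_per_pixel (24 for 8-bit RGB) and frames per second. */
uint64_t raw_video_bits_per_second(uint64_t width, uint64_t height,
                                   uint64_t bits_per_pixel, uint64_t fps) {
    return width * height * bits_per_pixel * fps;
}

/* Bits per second of uncompressed PCM audio:
   sample rate x bits per sample x channels. */
uint64_t raw_audio_bits_per_second(uint64_t sample_rate, uint64_t bits_per_sample,
                                   uint64_t channels) {
    return sample_rate * bits_per_sample * channels;
}
```

For 1280 × 720, 24-bit RGB at 30 FPS this gives 663,552,000 bit/s, i.e. 82,944,000 bytes, which is the ≈79 MB per second quoted above; stereo 16-bit audio at 44.1 kHz gives 1,411,200 bit/s (176,400 bytes).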

Audio and video encoding methods:

- Hardware encoding: encoding on hardware other than the CPU, such as the GPU or a dedicated DSP chip provided by the system.

- Software encoding: encoding on the CPU (which easily makes the phone heat up).

How each platform handles it:

- iOS: hardware encoding is well supported and can be used directly. Since iOS 8.0, Apple has opened the system's hardware encoding and decoding to apps through the VideoToolbox framework.

- Android: hardware encoding is harder, because it is difficult to find a unified library that works across the many devices (software encoding is usually recommended).

Coding standards:

Video coding: the H.26x series, the MPEG series (developed by MPEG, the Moving Picture Experts Group, under ISO, the International Organization for Standardization), VC-1, VP8, VP9, etc.

- H.261: mainly used in older video conferencing and video telephony products

- H.263: mainly used in video conferencing, video telephony and network video

- H.264: also known as MPEG-4 Part 10, AVC (Advanced Video Coding). It is a video compression standard and a widely used format for high-precision video recording, compression and distribution.

- H.265: High Efficiency Video Coding (HEVC), the successor to H.264/MPEG-4 AVC. It supports 4K and even ultra-high-definition TV, with resolutions up to 8192 × 4320 (8K). It is where development is heading, but at the time of writing encoding software for it was not yet widespread.

- MPEG-1 Part 2: mainly used on VCDs; some online video also uses this format.

- MPEG-2 Part 2: equivalent to H.262, used in DVD, SVCD and most digital video broadcasting systems.

- MPEG-4 Part 2: can be used for network transmission, broadcasting and media storage.

Audio coding: AAC, Opus, MP3, AC-3, etc.

Video compression coding: H.264 (AVC)

Sequence (GOP, Group of Pictures)

- In H.264, images are organized into sequences; a sequence is the data stream produced by encoding a run of images.

- The first image of a sequence is called an IDR (Instantaneous Decoding Refresh) image. IDR images are always I-frames.

- H.264 introduced IDR images so the decoder can resynchronize. When the decoder reaches an IDR image, it immediately empties the reference frame queue, outputs or discards all decoded data, looks up the parameter sets again and starts a new sequence.

- This way, if a serious error occurred in the previous sequence, the decoder gets a chance to resynchronize here.

- Images after an IDR image are never decoded using data from images before that IDR image.

- A sequence is the data stream produced by encoding a run of images whose content differs little.

- When there is little motion, a sequence can be very long: little motion means the picture content barely changes, so the encoder can emit one I-frame as the random-access reference point and then keep emitting P-frames and B-frames.

- When there is a lot of motion, a sequence may be short, for example one I-frame followed by three or four P-frames.
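To make the resynchronization idea concrete, here is a toy model in plain C: a string holds one character per frame in decoding order, with 'I' standing for an IDR frame. Because nothing after an IDR references anything before it, a decoder that joins mid-stream or hits an error must skip ahead to the next IDR. The representation and function names are hypothetical; a real decoder inspects NAL unit types rather than characters.

```c
#include <stddef.h>

/* Toy model (hypothetical): types[i] is the type of frame i in decoding
   order: 'I' = IDR frame, 'P'/'B' = predicted frame. */

/* Index of the first IDR at or after pos, or -1 if there is none.
   A decoder joining the stream at pos, or recovering from an error there,
   can only resume decoding from this frame. */
long next_idr(const char *types, size_t n, size_t pos) {
    for (size_t i = pos; i < n; i++) {
        if (types[i] == 'I') return (long)i;
    }
    return -1;
}

/* Number of frames in the GOP (sequence) that starts at the IDR at index
   start: everything up to, but not including, the next IDR. */
size_t gop_length(const char *types, size_t n, size_t start) {
    long next = next_idr(types, n, start + 1);
    return (next < 0 ? n : (size_t)next) - start;
}
```

With IPPPIPPIPP, a decoder that starts at frame 1 has to wait for frame 4, and the three GOPs are 4, 3 and 3 frames long. Longer GOPs save bits but lengthen this wait.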

The H.264 standard defines three frame types:

- I-frame: a fully coded frame, also called a key frame

- P-frame: a frame that references the preceding I-frame (or P-frame) and contains only the coded differences

- B-frame: a frame coded with reference to both the preceding and the following frames (I and P)
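Because a B-frame references a later frame, that later I- or P-frame has to be decoded first, so decoding order differs from display order. The plain C sketch below illustrates this under a simplified assumption (each B-frame depends only on the nearest I/P anchor on each side); the function name is hypothetical, and real encoders may use more complex reference structures.

```c
#include <stddef.h>

/* Simplified model: types[i] is the type ('I', 'P' or 'B') of frame i in
   display order. Each B-frame is assumed to reference the nearest I/P
   anchor before and after it, so it can only be decoded after the later
   anchor. Writes display indices in decoding order to out (room for n
   entries) and returns the number written. */
size_t decode_order(const char *types, size_t n, size_t *out) {
    size_t written = 0;
    size_t pending_b = 0;  /* first display index not yet written; all pending frames are B-frames */
    for (size_t i = 0; i < n; i++) {
        if (types[i] != 'B') {
            out[written++] = i;                     /* the anchor is decoded first... */
            for (size_t k = pending_b; k < i; k++)  /* ...then the B-frames shown before it */
                out[written++] = k;
            pending_b = i + 1;
        }
    }
    for (size_t k = pending_b; k < n; k++)          /* trailing B-frames with no later anchor */
        out[written++] = k;
    return written;
}
```

For the display order IBBPBBP it yields 0, 3, 1, 2, 6, 4, 5: each P-frame is decoded before the two B-frames that precede it on screen.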

H.264 compression method

- Grouping: several frames are put into a group (a GOP, i.e. a sequence). To guard against large motion changes, a group should not contain too many frames.

- Defining frames: each frame in the group is assigned one of three types: I-frame, P-frame or B-frame.

- Predicting frames: the I-frame serves as the base frame from which P-frames are predicted; B-frames are then predicted from the I- and P-frames.

- Transmitting data: finally, the I-frame data and the prediction differences are stored and transmitted.

- The core algorithms of H.264 are intra-frame compression and inter-frame compression.

- Intra-frame compression, also called spatial compression, is the algorithm that produces I-frames. It considers only the data within the current frame and ignores redundancy between adjacent frames, much like still-image compression.

- Inter-frame compression, also called temporal compression, is the algorithm that produces P- and B-frames. It compresses data across frames along the time axis: by comparing a frame with its neighbors and recording only the differences, it greatly reduces the amount of data.
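A toy illustration of the inter-frame idea in plain C: store only the per-pixel difference from the previous frame and rebuild the frame from it. This is deliberately simplified and is not how H.264 codes residuals (it uses motion-compensated prediction, transforms and entropy coding); it only shows why a frame that barely changes leaves little to record. Function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Encoder side (toy): residual = current frame - previous frame, per pixel. */
void frame_residual(const uint8_t *prev, const uint8_t *cur, int16_t *residual, size_t n) {
    for (size_t i = 0; i < n; i++) {
        residual[i] = (int16_t)((int)cur[i] - (int)prev[i]);
    }
}

/* How many pixels actually changed, i.e. how much is left to record. */
size_t count_changed(const int16_t *residual, size_t n) {
    size_t changed = 0;
    for (size_t i = 0; i < n; i++) {
        if (residual[i] != 0) changed++;
    }
    return changed;
}

/* Decoder side (toy): rebuild the current frame from the reference and the residual. */
void reconstruct(const uint8_t *prev, const int16_t *residual, uint8_t *out, size_t n) {
    for (size_t i = 0; i < n; i++) {
        out[i] = (uint8_t)((int)prev[i] + residual[i]);
    }
}
```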

Layered design

- The H.264 design is conceptually divided into two layers: the VCL (Video Coding Layer) represents the video content efficiently, and the NAL (Network Abstraction Layer) packages and transmits the data in the form the network requires. Efficient coding and network friendliness are thus handled by the VCL and the NAL respectively.

- The NAL packages data into the formats required by different networks, adapting the bit strings produced by the VCL to a variety of networks and environments.

NAL packaging

- An H.264 stream consists of a series of NAL units (NALUs).

- Each frame's data is written into one or more NALUs for transmission or storage.

- A NALU consists of a header and a body.

- In a byte stream, each NALU is preceded by a start code, usually 00 00 00 01, which marks the beginning of a new NALU. The byte right after the start code is the one-byte NAL header.

- The NALU body carries VCL-encoded data or other information (such as parameter sets).
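The byte that follows the start code is the NAL unit header, made of three bit fields: forbidden_zero_bit (1 bit), nal_ref_idc (2 bits) and nal_unit_type (5 bits). A minimal plain C sketch of splitting it; the struct and function names are hypothetical.

```c
#include <stdint.h>

/* The one-byte H.264 NAL unit header that follows the start code:
   forbidden_zero_bit (1 bit) | nal_ref_idc (2 bits) | nal_unit_type (5 bits). */
typedef struct {
    unsigned forbidden_zero_bit;  /* must be 0 in a valid stream */
    unsigned nal_ref_idc;         /* 0 = not used as a reference */
    unsigned nal_unit_type;       /* e.g. 1 = non-IDR slice, 5 = IDR slice, 7 = SPS, 8 = PPS */
} NalHeader;

NalHeader parse_nal_header(uint8_t b) {
    NalHeader h;
    h.forbidden_zero_bit = (b >> 7) & 0x01;
    h.nal_ref_idc = (b >> 5) & 0x03;
    h.nal_unit_type = b & 0x1F;  /* the "low five bits" used to spot SPS/PPS */
    return h;
}
```

For example, 0x67 decodes to nal_unit_type 7 (SPS) and 0x68 to 8 (PPS).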

Packaging process

- In a byte stream, a NALU may be preceded by the 4-byte start code 0x00000001 or the 3-byte start code 0x000001. This prefix is called the start code.

- Extract the SPS (Sequence Parameter Set) and the PPS (Picture Parameter Set), the non-VCL NAL units that describe the format. Generally, the first data the encoder outputs are the SPS and PPS.

- Locate each NALU by searching for its start code.

- Identify the SPS and PPS by type and extract them: the low five bits of the first byte after the start code are 7 for an SPS and 8 for a PPS.

- The SPS and PPS are carried in a CMVideoFormatDescriptionRef; the encoder callback below reads it with CMSampleBufferGetFormatDescription.

- Extract the video image data into a CMBlockBuffer: the encoded I-frame, followed by the B-frame and P-frame data.
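Locating NALUs by their start code and reading the type from the low five bits of the next byte can be sketched in plain C as follows. It accepts both the 3-byte and 4-byte start codes and assumes a well-formed stream (emulation prevention guarantees that a start code never appears inside a NALU payload); the function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Finds the next start code (00 00 01 or 00 00 00 01) at or after pos.
   Returns the index of the first byte after it (the NAL header byte) and
   stores the start code length in *sc_len; returns -1 if there is none. */
long find_start_code(const uint8_t *buf, size_t len, size_t pos, size_t *sc_len) {
    for (size_t i = pos; i + 3 <= len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0) {
            if (buf[i + 2] == 1) { *sc_len = 3; return (long)(i + 3); }
            if (i + 4 <= len && buf[i + 2] == 0 && buf[i + 3] == 1) {
                *sc_len = 4;
                return (long)(i + 4);
            }
        }
    }
    return -1;
}

/* Walks an Annex B buffer and records each nal_unit_type
   (7 = SPS, 8 = PPS, 5 = IDR slice). Returns the number of NALUs found. */
size_t list_nal_types(const uint8_t *buf, size_t len, unsigned *types, size_t max) {
    size_t count = 0, sc_len = 0;
    long p = find_start_code(buf, len, 0, &sc_len);
    while (p >= 0 && (size_t)p < len && count < max) {
        types[count++] = buf[p] & 0x1F;
        p = find_start_code(buf, len, (size_t)p + 1, &sc_len);
    }
    return count;
}
```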

Next, create a custom CCH264Encoder class that hardware-encodes the raw video into H.264 with VideoToolbox and writes it to a file; the code is as follows:

//
//  CCH264Encoder.h
//  01. Video capture
//
//  Created by zerocc on 2017/4/4.
//  Copyright 2017 zerocc. All rights reserved.
//

#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

@interface CCH264Encoder : NSObject
- (void)prepareEncodeWithWidth:(int)width height:(int)height;
- (void)encoderFram:(CMSampleBufferRef)sampleBuffer;
- (void)endEncode;
@end

//
//  CCH264Encoder.m
//  01. Video capture
//
//  Created by zerocc on 2017/4/4.
//  Copyright 2017 zerocc. All rights reserved.
//

#import "CCH264Encoder.h"
#import <VideoToolbox/VideoToolbox.h>

// Forward declaration: the callback is passed to VTCompressionSessionCreate before it is defined
static void didComparessionCallback(void * CM_NULLABLE outputCallbackRefCon, void * CM_NULLABLE sourceFrameRefCon, OSStatus status, VTEncodeInfoFlags infoFlags, CM_NULLABLE CMSampleBufferRef sampleBuffer);

@interface CCH264Encoder () {
    int _spsppsFound;
}
// VTCompressionSessionRef is a Core Foundation (C) type, not an Objective-C object: it is already
// a pointer (no *), and `strong` only applies to Objective-C objects, so it is declared `assign`.
@property (nonatomic, assign) VTCompressionSessionRef compressionSession;
@property (nonatomic, assign) int frameIndex;
@property (nonatomic, strong) NSFileHandle *fileHandle;
- (void)writeH264Data:(NSData *)data;
@end

@implementation CCH264Encoder

#pragma mark - lazyload
- (NSFileHandle *)fileHandle {
    if (!_fileHandle) {
        // Raw H.264 elementary stream: video only, no audio
        NSString *filePath = [[NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) firstObject] stringByAppendingPathComponent:@"video.h264"];
        NSFileManager *fileManager = [NSFileManager defaultManager];
        // Start from an empty file on every run
        if ([fileManager fileExistsAtPath:filePath]) {
            [fileManager removeItemAtPath:filePath error:nil];
        }
        [fileManager createFileAtPath:filePath contents:nil attributes:nil];
        _fileHandle = [NSFileHandle fileHandleForWritingAtPath:filePath];
    }
    return _fileHandle;
}

/**
 Prepare the encoder
 @param width  Width of the captured frames
 @param height Height of the captured frames
 */
- (void)prepareEncodeWithWidth:(int)width height:(int)height {
    // 0. Frame counter used to build presentation timestamps
    self.frameIndex = 0;

    // 1. Create the VTCompressionSessionRef
    //    NULL: default allocator; width/height: video size; kCMVideoCodecType_H264: codec;
    //    NULL, NULL, NULL: encoder specification, source image buffer attributes, compressed data allocator;
    //    didComparessionCallback: C function called with every encoded frame;
    //    self: handed to the callback as outputCallbackRefCon
    OSStatus status = VTCompressionSessionCreate(NULL, width, height, kCMVideoCodecType_H264, NULL, NULL, NULL, didComparessionCallback, (__bridge void *)(self), &_compressionSession);
    if (status != noErr) {
        NSLog(@"VTCompressionSessionCreate failed: %d", (int)status);
        return;
    }

    // 2. Set properties
    // 2.1 Real-time encoding (low latency, suitable for live streaming)
    VTSessionSetProperty(_compressionSession, kVTCompressionPropertyKey_RealTime, kCFBooleanTrue);
    // 2.2 Expected frame rate
    VTSessionSetProperty(_compressionSession, kVTCompressionPropertyKey_ExpectedFrameRate, (__bridge CFTypeRef)(@24));
    // 2.3 Average bit rate: 1,500,000 bits per second
    VTSessionSetProperty(_compressionSession, kVTCompressionPropertyKey_AverageBitRate, (__bridge CFTypeRef)(@1500000));
    // 2.4 Hard data-rate limit, given as [bytes, seconds]: at most 1500000 / 8 bytes per 1 second
    VTSessionSetProperty(_compressionSession, kVTCompressionPropertyKey_DataRateLimits, (__bridge CFTypeRef)(@[@(1500000 / 8), @1]));
    // 2.5 Maximum key frame (I-frame) interval, i.e. the GOP size
    VTSessionSetProperty(_compressionSession, kVTCompressionPropertyKey_MaxKeyFrameInterval, (__bridge CFTypeRef)(@20));

    // 3. Prepare to encode
    VTCompressionSessionPrepareToEncodeFrames(_compressionSession);
}

/**
 Encode one frame
 @param sampleBuffer Captured video frame
 */
- (void)encoderFram:(CMSampleBufferRef)sampleBuffer {
    // Take the image buffer (CVImageBufferRef) out of the CMSampleBufferRef
    CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    // pts = frameIndex / 24 s; advance the counter so every frame gets its own timestamp
    CMTime pts = CMTimeMake(self.frameIndex, 24);
    self.frameIndex++;
    CMTime duration = kCMTimeInvalid;
    VTEncodeInfoFlags flags;
    // Send the image buffer to the hardware encoder. Arguments: session, imageBuffer,
    // presentation timestamp, duration (invalid = unknown), per-frame properties (NULL),
    // sourceFrameRefCon (2nd callback parameter), infoFlagsOut
    OSStatus status = VTCompressionSessionEncodeFrame(self.compressionSession, imageBuffer, pts, duration, NULL, NULL, &flags);
    if (status != noErr) {
        NSLog(@"VTCompressionSessionEncodeFrame failed: %d", (int)status);
    }
}

#pragma mark - Encoded data callback (C function)
static void didComparessionCallback(void * CM_NULLABLE outputCallbackRefCon, void * CM_NULLABLE sourceFrameRefCon, OSStatus status, VTEncodeInfoFlags infoFlags, CM_NULLABLE CMSampleBufferRef sampleBuffer) {
    // Skip failed or dropped frames
    if (status != noErr || sampleBuffer == NULL || !CMSampleBufferDataIsReady(sampleBuffer)) {
        return;
    }
    // A C function has no `self`: recover the CCH264Encoder passed to VTCompressionSessionCreate
    CCH264Encoder *encoder = (__bridge CCH264Encoder *)(outputCallbackRefCon);

    // 1. Key frame check: a sample without the NotSync attachment is a sync (key) frame
    CFArrayRef attachments = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, true);
    CFDictionaryRef dict = CFArrayGetValueAtIndex(attachments, 0);
    BOOL isKeyFrame = !CFDictionaryContainsKey(dict, kCMSampleAttachmentKey_NotSync);

    // 2. On the first key frame, write the SPS/PPS, which hold the parameters needed to parse the stream
    if (isKeyFrame && !encoder->_spsppsFound) {
        encoder->_spsppsFound = 1;
        // 2.1 Get the CMFormatDescriptionRef from the CMSampleBufferRef
        CMFormatDescriptionRef format = CMSampleBufferGetFormatDescription(sampleBuffer);
        // 2.2 Parameter set 0 is the SPS, parameter set 1 is the PPS
        const uint8_t *spsOut;
        size_t spsSize, spsCount;
        CMVideoFormatDescriptionGetH264ParameterSetAtIndex(format, 0, &spsOut, &spsSize, &spsCount, NULL);
        const uint8_t *ppsOut;
        size_t ppsSize, ppsCount;
        CMVideoFormatDescriptionGetH264ParameterSetAtIndex(format, 1, &ppsOut, &ppsSize, &ppsCount, NULL);
        // 2.3 Wrap them in NSData and write them (writeH264Data: prepends the 0x00000001 start code)
        [encoder writeH264Data:[NSData dataWithBytes:spsOut length:spsSize]];
        [encoder writeH264Data:[NSData dataWithBytes:ppsOut length:ppsSize]];
    }

    // 3. Get the encoded data and write it to the file
    // 3.1 Get the CMBlockBufferRef
    CMBlockBufferRef blockBuffer = CMSampleBufferGetDataBuffer(sampleBuffer);
    // 3.2 Get the total length and a pointer to the start of the data
    size_t totalLength = 0;
    char *dataPointer = NULL;
    if (CMBlockBufferGetDataPointer(blockBuffer, 0, NULL, &totalLength, &dataPointer) != kCMBlockBufferNoErr) {
        return;
    }
    // 3.3 One frame may be split into several NALUs (slices), each prefixed by a 4-byte length
    static const size_t H264HeaderLength = 4;
    size_t bufferOffset = 0;
    while (bufferOffset + H264HeaderLength < totalLength) {
        // 3.4 Read the 4-byte NALU length
        uint32_t NALULength = 0;
        memcpy(&NALULength, dataPointer + bufferOffset, H264HeaderLength);
        // The length is big-endian (network/file byte order); convert it to host order (little-endian on iOS)
        NALULength = CFSwapInt32BigToHost(NALULength);
        // 3.5 Copy the NALU payload that follows the length field
        NSData *data = [NSData dataWithBytes:(dataPointer + bufferOffset + H264HeaderLength) length:NALULength];
        // 3.6 Write it to the file with a start code in front
        [encoder writeH264Data:data];
        // 3.7 Move on to the next NALU
        bufferOffset += H264HeaderLength + NALULength;
    }
}

- (void)writeH264Data:(NSData *)data {
    // 1. Start code 00 00 00 01
    const char bytes[] = "\x00\x00\x00\x01";
    // 2. sizeof(bytes) also counts the string literal's terminating '\0', so subtract 1 to get 4 bytes
    NSData *headerData = [NSData dataWithBytes:bytes length:(sizeof(bytes) - 1)];
    [self.fileHandle writeData:headerData];
    [self.fileHandle writeData:data];
}

- (void)endEncode {
    if (!_compressionSession) {
        return;
    }
    // Flush the frames still inside the encoder, then tear the session down
    VTCompressionSessionCompleteFrames(_compressionSession, kCMTimeInvalid);
    VTCompressionSessionInvalidate(_compressionSession);
    CFRelease(_compressionSession);
    _compressionSession = NULL;
}

@end
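The while loop in the callback above reads a 4-byte big-endian length in front of each NALU and writes a start code in its place. The same conversion as a standalone plain C function, assuming 4-byte length prefixes as the callback does; the function names are hypothetical, and the shifts in read_be32 stand in for memcpy plus CFSwapInt32BigToHost so the code does not depend on the host byte order.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Reads a 4-byte big-endian value regardless of host byte order. */
static uint32_t read_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Converts length-prefixed NALUs (4-byte big-endian lengths) into an
   Annex B byte stream by replacing each length with 00 00 00 01.
   The output has the same size, so out must hold len bytes.
   Returns the number of bytes written, or 0 on malformed input. */
size_t avcc_to_annexb(const uint8_t *in, size_t len, uint8_t *out) {
    static const uint8_t start_code[4] = {0, 0, 0, 1};
    size_t offset = 0;
    while (offset + 4 <= len) {
        uint32_t nalu_len = read_be32(in + offset);
        if (nalu_len > len - offset - 4) return 0;  /* NALU runs past the buffer */
        memcpy(out + offset, start_code, 4);
        memcpy(out + offset + 4, in + offset + 4, nalu_len);
        offset += 4 + nalu_len;
    }
    return offset == len ? len : 0;  /* leftover bytes mean a truncated length field */
}
```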


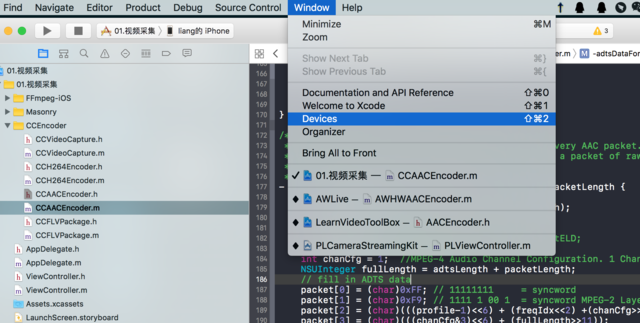

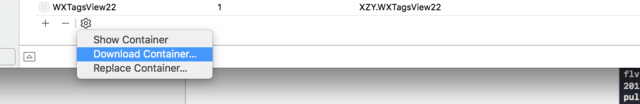

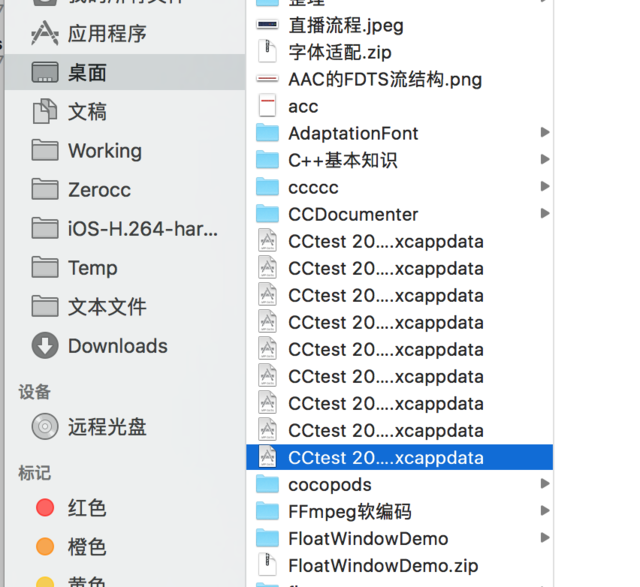

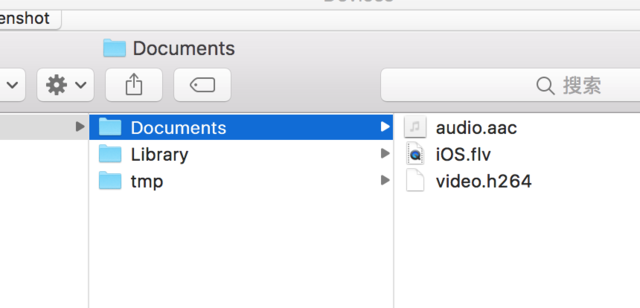

Debugging steps

- Download VLC Player and use it to open the video.h264 file that the encoder writes to the app's Documents directory.

(Figure: VLC download page)

Audio Coding: AAC

- AAC audio comes in two formats, ADIF and ADTS:

- ADIF: Audio Data Interchange Format. With this format the start of the audio data is well defined: ADIF has a single header at the beginning, so decoding must start from that clearly defined beginning and cannot begin in the middle of the stream. It is therefore mostly used for files on disk.

- ADTS: Audio Data Transport Stream. It is a bitstream with sync words, so decoding can start anywhere in the stream, similar to the MP3 stream format. Voice and live systems require high real-time performance; the basic flow is: capture audio, encode locally, upload, process on the server, download, decode locally. The rest of this section uses this format.

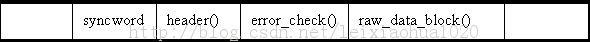

- A raw AAC bitstream (also called a "bare stream") consists of one ADTS frame after another, each beginning with a sync word. The sync word is 0xFFF (binary "1111 1111 1111"). To parse an AAC stream, search the stream for 0xFFF to split it into ADTS frames, then parse the header fields of each frame. An ADTS frame consists of:

- ADTS frame header: a fixed header and a variable header. It defines key information such as the sampling rate, number of channels and frame length, which is needed to parse and decode the frame payload.

- ADTS frame payload: mainly the raw AAC frame.

- The figure shows the simplified structure of an ADTS frame; the blank rectangles on either side represent the data before and after the frame.
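Splitting an AAC stream into ADTS frames can be sketched in plain C: check the 12-bit sync word 0xFFF at the current position, read aac_frame_length (13 bits, header included) and jump ahead by that amount. This simplified version stops at the first bad sync word instead of scanning forward to resynchronize; the function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Counts complete ADTS frames in buf. Each frame must start with the
   12-bit sync word 0xFFF; aac_frame_length (13 bits spread over header
   bytes 3 to 5, counting from 0) is the frame size including its header.
   Stops at the first bad sync word or truncated frame. */
size_t count_adts_frames(const uint8_t *buf, size_t len) {
    size_t pos = 0, frames = 0;
    while (pos + 7 <= len) {
        if (buf[pos] != 0xFF || (buf[pos + 1] & 0xF0) != 0xF0) break;  /* sync word 0xFFF */
        size_t frame_len = ((size_t)(buf[pos + 3] & 0x03) << 11)
                         | ((size_t)buf[pos + 4] << 3)
                         | ((size_t)buf[pos + 5] >> 5);
        if (frame_len < 7 || pos + frame_len > len) break;  /* corrupt or truncated */
        pos += frame_len;
        frames++;
    }
    return frames;
}
```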

ADTS Content and Structure

The ADTS header is normally 7 bytes long (9 bytes when a CRC is present, i.e. protection_absent = 0) and is divided into two parts: adts_fixed_header() and adts_variable_header().

- The fixed header adts_fixed_header() consists of:

(Figure: adts_fixed_header() structure)

- syncword: sync word, 12 bits "1111 1111 1111"

- ID: MPEG version, 0 for MPEG-4, 1 for MPEG-2

- layer: audio coding layer (always 00)

- protection_absent: whether the CRC error check is absent (1 = no CRC)

- profile: which AAC profile is used (the MPEG-4 audio object type minus 1, e.g. 1 for AAC LC)

- sampling_frequency_index: index of the sampling rate (e.g. 4 for 44.1 kHz)

- channel_configuration: number of channels

- The variable header adts_variable_header() consists of:

(Figure: adts_variable_header() structure)

- aac_frame_length: length of the ADTS frame, header included

- adts_buffer_fullness: 0x7FF indicates a variable-bitrate stream

- number_of_raw_data_blocks_in_frame: number of raw AAC frames in the ADTS frame, minus 1
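Reading the header fields listed above out of the first 7 bytes can be sketched in plain C as follows; the bit positions follow the order of the fixed and variable headers (the one-bit private_bit, original_copy, home and copyright fields are skipped). The struct and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    unsigned id;                        /* 0 = MPEG-4, 1 = MPEG-2 */
    unsigned layer;                     /* always 0 */
    unsigned protection_absent;         /* 1 = no CRC */
    unsigned profile;                   /* audio object type - 1 (1 = AAC LC) */
    unsigned sampling_frequency_index;  /* 4 = 44.1 kHz */
    unsigned channel_configuration;
    unsigned frame_length;              /* aac_frame_length, header included */
    unsigned buffer_fullness;           /* 0x7FF = variable bit rate */
    unsigned raw_data_blocks;           /* number_of_raw_data_blocks_in_frame */
    unsigned header_size;               /* 7 without CRC, 9 with CRC */
} AdtsHeader;

/* Parses the header at p. Returns 0 on success, -1 if there are fewer
   than 7 bytes or no sync word. */
int parse_adts_header(const uint8_t *p, size_t len, AdtsHeader *h) {
    if (len < 7 || p[0] != 0xFF || (p[1] & 0xF0) != 0xF0) return -1;
    /* fixed header (p[2] bit 1 is private_bit) */
    h->id = (p[1] >> 3) & 0x1;
    h->layer = (p[1] >> 1) & 0x3;
    h->protection_absent = p[1] & 0x1;
    h->profile = (p[2] >> 6) & 0x3;
    h->sampling_frequency_index = (p[2] >> 2) & 0xF;
    h->channel_configuration = ((p[2] & 0x1) << 2) | ((p[3] >> 6) & 0x3);
    /* variable header (p[3] bits 5..2 are original_copy, home and copyright bits) */
    h->frame_length = ((unsigned)(p[3] & 0x3) << 11) | ((unsigned)p[4] << 3) | ((unsigned)p[5] >> 5);
    h->buffer_fullness = ((unsigned)(p[5] & 0x1F) << 6) | ((unsigned)p[6] >> 2);
    h->raw_data_blocks = p[6] & 0x3;
    h->header_size = h->protection_absent ? 7 : 9;
    return 0;
}
```

Fed the header FF F9 50 40 0D 7F FC (what the adtsDataForPacketLength: method in the encoder produces for a 100-byte packet), it reports ID 1 (MPEG-2), profile 1 (AAC LC), sampling index 4 (44.1 kHz), 1 channel and a 107-byte frame.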

Create a custom CCAACEncoder class that encodes the raw PCM audio into AAC (the code asks AudioToolbox for Apple's software AAC encoder) and writes ADTS frames to a file; the code is as follows:

//
//  CCAACEncoder.h
//  01. Video capture
//
//  Created by zerocc on 2017/4/4.
//  Copyright 2017 zerocc. All rights reserved.
//

#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>
#import <AudioToolbox/AudioToolbox.h>

@interface CCAACEncoder : NSObject
- (void)encodeAAC:(CMSampleBufferRef)sampleBuffer;
- (void)endEncodeAAC;
@end

//
//  CCAACEncoder.m
//  01. Video capture
//
//  Created by zerocc on 2017/4/4.
//  Copyright 2017 zerocc. All rights reserved.
//

#import "CCAACEncoder.h"

// Status returned by the input callback when this call's PCM has been used up (any non-zero value works)
static const OSStatus kCCNoMoreInputData = -1;

// Forward declaration: the callback is passed to AudioConverterFillComplexBuffer before it is defined
static OSStatus inInputDataProc(AudioConverterRef inAudioConverter, UInt32 *ioNumberDataPackets, AudioBufferList *ioData, AudioStreamPacketDescription **outDataPacketDescription, void *inUserData);

@interface CCAACEncoder ()
@property (nonatomic, assign) AudioConverterRef audioConverter;
@property (nonatomic, assign) uint8_t *aacBuffer;
@property (nonatomic, assign) NSUInteger aacBufferSize;
@property (nonatomic, assign) char *pcmBuffer;
@property (nonatomic, assign) size_t pcmBufferSize;
@property (nonatomic, assign) UInt32 pcmBytesPerFrame;
@property (nonatomic, strong) NSFileHandle *audioFileHandle;
- (NSData *)adtsDataForPacketLength:(NSUInteger)packetLength;
@end

@implementation CCAACEncoder

- (void)dealloc {
    if (_audioConverter) {
        AudioConverterDispose(_audioConverter);
    }
    free(_aacBuffer);
}

- (NSFileHandle *)audioFileHandle {
    if (!_audioFileHandle) {
        NSString *audioFile = [[NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject] stringByAppendingPathComponent:@"audio.aac"];
        [[NSFileManager defaultManager] removeItemAtPath:audioFile error:nil];
        [[NSFileManager defaultManager] createFileAtPath:audioFile contents:nil attributes:nil];
        _audioFileHandle = [NSFileHandle fileHandleForWritingAtPath:audioFile];
    }
    return _audioFileHandle;
}

- (instancetype)init {
    if (self = [super init]) {
        _audioConverter = NULL;
        _pcmBufferSize = 0;
        _pcmBuffer = NULL;
        _aacBufferSize = 1024;
        _aacBuffer = malloc(_aacBufferSize * sizeof(uint8_t));
        memset(_aacBuffer, 0, _aacBufferSize);
    }
    return self;
}

- (void)encodeAAC:(CMSampleBufferRef)sampleBuffer {
    CFRetain(sampleBuffer);
    // 1. Create the audio converter on the first call
    if (!_audioConverter) {
        // 1.1 Input format: the PCM format of the captured sample buffer
        AudioStreamBasicDescription inputAudioStreamBasicDescription = *CMAudioFormatDescriptionGetStreamBasicDescription((CMAudioFormatDescriptionRef)CMSampleBufferGetFormatDescription(sampleBuffer));
        _pcmBytesPerFrame = inputAudioStreamBasicDescription.mBytesPerFrame;
        // 1.2 Output format: AAC LC, same sample rate, mono, 1024 frames per packet
        AudioStreamBasicDescription outputAudioStreamBasicDescription = {
            .mSampleRate = inputAudioStreamBasicDescription.mSampleRate,
            .mFormatID = kAudioFormatMPEG4AAC,
            .mFormatFlags = kMPEG4Object_AAC_LC,
            .mBytesPerPacket = 0,
            .mFramesPerPacket = 1024,
            .mBytesPerFrame = 0,
            .mChannelsPerFrame = 1,
            .mBitsPerChannel = 0,
            .mReserved = 0
        };
        // 1.3 Pick Apple's software AAC encoder from the installed encoders
        static AudioClassDescription description;
        UInt32 encoderSpecifier = kAudioFormatMPEG4AAC;
        UInt32 size;
        AudioFormatGetPropertyInfo(kAudioFormatProperty_Encoders, sizeof(encoderSpecifier), &encoderSpecifier, &size);
        unsigned int count = size / sizeof(AudioClassDescription);
        AudioClassDescription descriptions[count];
        AudioFormatGetProperty(kAudioFormatProperty_Encoders, sizeof(encoderSpecifier), &encoderSpecifier, &size, descriptions);
        for (unsigned int i = 0; i < count; i++) {
            if ((kAudioFormatMPEG4AAC == descriptions[i].mSubType) && (kAppleSoftwareAudioCodecManufacturer == descriptions[i].mManufacturer)) {
                memcpy(&description, &(descriptions[i]), sizeof(description));
            }
        }
        // 1.4 Create the converter
        OSStatus createStatus = AudioConverterNewSpecific(&inputAudioStreamBasicDescription, &outputAudioStreamBasicDescription, 1, &description, &_audioConverter);
        if (createStatus != noErr) {
            NSLog(@"AudioConverterNewSpecific failed: %d", (int)createStatus);
            CFRelease(sampleBuffer);
            return;
        }
    }

    // 2. Get the PCM data of this sample buffer
    CMBlockBufferRef blockBuffer = CMSampleBufferGetDataBuffer(sampleBuffer);
    CFRetain(blockBuffer);
    OSStatus status = CMBlockBufferGetDataPointer(blockBuffer, 0, NULL, &_pcmBufferSize, &_pcmBuffer);
    if (status != kCMBlockBufferNoErr) {
        NSLog(@"CMBlockBufferGetDataPointer failed: %d", (int)status);
        CFRelease(blockBuffer);
        CFRelease(sampleBuffer);
        return;
    }

    // 3. Prepare the output buffer for one AAC packet
    memset(_aacBuffer, 0, _aacBufferSize);
    AudioBufferList outAudioBufferList = {0};
    outAudioBufferList.mNumberBuffers = 1;
    outAudioBufferList.mBuffers[0].mNumberChannels = 1;
    outAudioBufferList.mBuffers[0].mDataByteSize = (UInt32)_aacBufferSize;
    outAudioBufferList.mBuffers[0].mData = _aacBuffer;
    AudioStreamPacketDescription outPacketDescription = {0};
    UInt32 ioOutputDataPacketSize = 1;

    // 4. Encode: the converter pulls the PCM through inInputDataProc
    status = AudioConverterFillComplexBuffer(_audioConverter, inInputDataProc, (__bridge void *)(self), &ioOutputDataPacketSize, &outAudioBufferList, &outPacketDescription);
    // When the input callback runs out of data it returns an error, but a packet that was already produced is still returned
    if ((status == noErr || status == kCCNoMoreInputData) && ioOutputDataPacketSize > 0) {
        // 5. Prepend the 7-byte ADTS header to the raw AAC packet and write it
        NSData *rawAAC = [NSData dataWithBytes:outAudioBufferList.mBuffers[0].mData length:outAudioBufferList.mBuffers[0].mDataByteSize];
        NSData *adtsHeader = [self adtsDataForPacketLength:rawAAC.length];
        NSMutableData *fullData = [NSMutableData dataWithData:adtsHeader];
        [fullData appendData:rawAAC];
        [self.audioFileHandle writeData:fullData];
    } else if (status != noErr && status != kCCNoMoreInputData) {
        NSLog(@"AudioConverterFillComplexBuffer failed: %d", (int)status);
    }
    CFRelease(blockBuffer);
    CFRelease(sampleBuffer);
}

/**
 AudioConverter input callback: hands the current sample buffer's PCM to the converter
 @param inAudioConverter         The converter
 @param ioNumberDataPackets      In: packets requested; out: packets supplied
 @param ioData                   Buffer list to point at the PCM data
 @param outDataPacketDescription Not used for PCM input
 @param inUserData               The CCAACEncoder passed to AudioConverterFillComplexBuffer
 @return noErr, or kCCNoMoreInputData once this buffer's PCM has been consumed
 */
static OSStatus inInputDataProc(AudioConverterRef inAudioConverter, UInt32 *ioNumberDataPackets, AudioBufferList *ioData, AudioStreamPacketDescription **outDataPacketDescription, void *inUserData) {
    CCAACEncoder *encoder = (__bridge CCAACEncoder *)(inUserData);
    if (encoder.pcmBufferSize == 0 || encoder.pcmBytesPerFrame == 0) {
        // No PCM left for this call: report zero packets and stop
        *ioNumberDataPackets = 0;
        return kCCNoMoreInputData;
    }
    // Point the converter at the PCM data (for linear PCM, one packet = one frame)
    ioData->mBuffers[0].mData = encoder.pcmBuffer;
    ioData->mBuffers[0].mDataByteSize = (UInt32)encoder.pcmBufferSize;
    *ioNumberDataPackets = (UInt32)(encoder.pcmBufferSize / encoder.pcmBytesPerFrame);
    // Mark the data as consumed so the next call reports "no more data"
    encoder.pcmBuffer = NULL;
    encoder.pcmBufferSize = 0;
    return noErr;
}

/**
 Build the 7-byte ADTS header placed in front of each raw AAC packet
 (the converter outputs raw AAC without ADTS headers).
 Assumes AAC LC, 44.1 kHz, 1 channel.
 */
- (NSData *)adtsDataForPacketLength:(NSUInteger)packetLength {
    int adtsLength = 7;
    char *packet = malloc(sizeof(char) * adtsLength);
    int profile = 2;  // AAC LC (MPEG-4 audio object type 2, written as profile - 1)
    int freqIdx = 4;  // 44.1 kHz
    int chanCfg = 1;  // MPEG-4 channel configuration 1: one front-center channel
    NSUInteger fullLength = adtsLength + packetLength;  // aac_frame_length includes the header
    // Fill in the ADTS header
    packet[0] = (char)0xFF;  // 11111111: syncword (high 8 bits)
    packet[1] = (char)0xF9;  // 1111 1 00 1: syncword (low 4 bits), ID = 1 (MPEG-2), layer = 00, protection_absent = 1
    packet[2] = (char)(((profile - 1) << 6) + (freqIdx << 2) + (chanCfg >> 2));
    packet[3] = (char)(((chanCfg & 3) << 6) + (fullLength >> 11));
    packet[4] = (char)((fullLength & 0x7FF) >> 3);
    packet[5] = (char)(((fullLength & 7) << 5) + 0x1F);  // high 5 bits of buffer fullness 0x7FF
    packet[6] = (char)0xFC;  // low 6 bits of buffer fullness, number_of_raw_data_blocks_in_frame = 0
    return [NSData dataWithBytesNoCopy:packet length:adtsLength freeWhenDone:YES];
}

- (void)endEncodeAAC {
    if (_audioConverter) {
        AudioConverterDispose(_audioConverter);
        _audioConverter = NULL;
    }
}

@end
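adtsDataForPacketLength: above hardcodes freqIdx = 4 (44.1 kHz) and chanCfg = 1, while the converter's output sample rate is copied from the captured input, which is not guaranteed to be 44.1 kHz on every device. A sketch, in plain C, of deriving sampling_frequency_index from a sample rate using the standard ADTS/MPEG-4 frequency table; the names are hypothetical, and feeding it the input ASBD's mSampleRate is an assumption about how it would be wired in.

```c
/* Standard ADTS / MPEG-4 sampling_frequency_index table: the position of a
   rate in this array is its index (3 = 48000 Hz, 4 = 44100 Hz). */
static const int kAdtsSampleRates[] = {
    96000, 88200, 64000, 48000, 44100, 32000,
    24000, 22050, 16000, 12000, 11025, 8000, 7350
};

/* Returns the index for sample_rate (e.g. an ASBD's mSampleRate),
   or -1 if the rate is not in the table. */
int adts_frequency_index(double sample_rate) {
    int rate = (int)(sample_rate + 0.5);
    int count = (int)(sizeof kAdtsSampleRates / sizeof kAdtsSampleRates[0]);
    for (int i = 0; i < count; i++) {
        if (kAdtsSampleRates[i] == rate) return i;
    }
    return -1;
}
```

A result of -1 means the rate cannot be described by this table, so the header above would not be valid for it.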

- Testing audio and video

- Import CCH264Encoder and CCAACEncoder into the CCVideoCapture class

#import "CCVideoCapture.h"
#import <AVFoundation/AVFoundation.h>
#import "CCH264Encoder.h"
#import "CCAACEncoder.h"

@interface CCVideoCapture () <AVCaptureVideoDataOutputSampleBufferDelegate, AVCaptureAudioDataOutputSampleBufferDelegate>
@property (nonatomic, strong) AVCaptureSession *session;
@property (nonatomic, strong) AVCaptureVideoPreviewLayer *layer;
@property (nonatomic, strong) AVCaptureConnection *videoConnection;
@property (nonatomic, strong) CCH264Encoder *videoEncoder;
@property (nonatomic, strong) CCAACEncoder *audioEncoder;
@end

@implementation CCVideoCapture

#pragma mark - lazyload
- (CCH264Encoder *)videoEncoder {
    if (!_videoEncoder) {
        _videoEncoder = [[CCH264Encoder alloc] init];
    }
    return _videoEncoder;
}

- (CCAACEncoder *)audioEncoder {
    if (!_audioEncoder) {
        _audioEncoder = [[CCAACEncoder alloc] init];
    }
    return _audioEncoder;
}

- (void)startCapturing:(UIView *)preView {
    // =============== Prepare encoding ===============
    // The portrait connection delivers 720x1280 frames from the 1280x720 preset
    [self.videoEncoder prepareEncodeWithWidth:720 height:1280];

    // =============== Capturing video ===============
    // 1. Create the session
    AVCaptureSession *session = [[AVCaptureSession alloc] init];
    session.sessionPreset = AVCaptureSessionPreset1280x720;
    self.session = session;
    . . .
}

- (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection {
    // The delegate already runs on the serial video/audio capture queues, so encode directly
    if (self.videoConnection == connection) {
        [self.videoEncoder encoderFram:sampleBuffer];
        NSLog(@"Acquisition of video data");
    } else {
        [self.audioEncoder encodeAAC:sampleBuffer];
        NSLog(@"Acquisition of audio data");
    }
}

- (void)stopCapturing {
    // Stop delivering sample buffers before tearing the encoders down
    [self.session stopRunning];
    [self.videoEncoder endEncode];
    [self.audioEncoder endEncodeAAC];
    [self.layer removeFromSuperlayer];
}

To be continued
