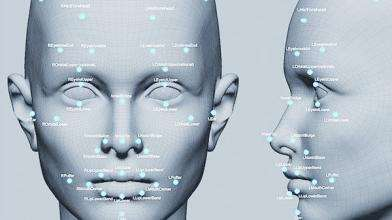

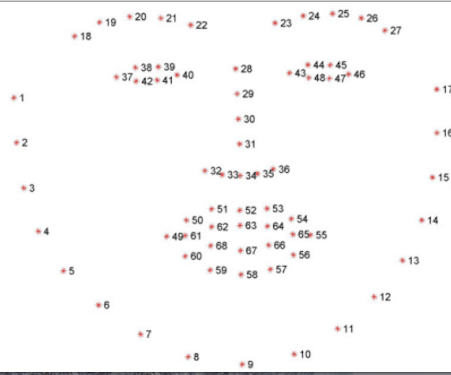

The previous article, Face Frame Detection, showed how to detect face locations in a video stream. Building on that, this article goes one step further and extracts the 68 key points of each face. We obtained the face location first because the key point detector needs it as input.

Take another look at the picture.

That's what we need to get. Today we will detect the 68 key points of each face with Dlib, and then draw those 68 key points onto the video stream.

Enough talk; let's look at the result first.

The volute is still very handsome!

Dlib introduction

Dlib is an open-source C++ toolkit containing machine learning algorithms. It helps you build sophisticated machine learning software to solve practical problems. Dlib is widely used in industry and academia, in areas including robotics, embedded devices, mobile phones, and large high-performance computing environments (this description is quoted).

Dlib Integration

The Dlib distribution consists of a .a static library and a folder of header files. You can search for a prebuilt one on Google, cross-compile one with CMake, or use mine: Dlib and its model (password: q6z4)

(1) Add Dlib to your project

After downloading my library and model, you will see this.

Then drag it straight into your project.

Note

After dragging it in, the dlib folder must not stay in the project. Remove it from the project, otherwise the build will fail. I was stuck on this for a long time. Remember to choose Remove Reference, not Move to Trash.

It should look like this

(2) Configure the project

(1) Build Settings

Search for Preprocessor Macros

Add these macros

- DLIB_JPEG_SUPPORT

- DLIB_NO_GUI_SUPPORT

- NDEBUG

- DLIB_USE_BLAS

- DLIB_USE_LAPACK
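A mistyped macro name in Build Settings fails silently, so it can help to check which of the macros above the compiler actually sees. The following is a minimal sketch in plain C++ (it also compiles inside a .mm file); the function name `missingDlibMacros` is hypothetical and not part of Dlib.

```cpp
#include <string>
#include <vector>

// Returns the names of the macros listed in this article that are NOT
// defined in the current translation unit. Hypothetical helper, not a Dlib API.
std::vector<std::string> missingDlibMacros() {
    std::vector<std::string> missing;
#ifndef DLIB_JPEG_SUPPORT
    missing.push_back("DLIB_JPEG_SUPPORT");
#endif
#ifndef DLIB_NO_GUI_SUPPORT
    missing.push_back("DLIB_NO_GUI_SUPPORT");
#endif
#ifndef NDEBUG
    missing.push_back("NDEBUG");
#endif
#ifndef DLIB_USE_BLAS
    missing.push_back("DLIB_USE_BLAS");
#endif
#ifndef DLIB_USE_LAPACK
    missing.push_back("DLIB_USE_LAPACK");
#endif
    return missing;
}
```

Calling this once at startup and logging the result should give an empty list when the project is configured as described; any name that shows up was not picked up from Preprocessor Macros.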


(2) Header Search Paths

Just drag your directory in

(3) Dependency Libraries

Accelerate.framework

AssetsLibrary.framework

The others are used by OpenCV. If you are not familiar with them, read the previous article, Face Frame Detection

(3) Writing code

Note that we must not import the dlib headers and the OpenCV headers in the same file, because some of their macro definitions and methods conflict. For that reason we use a separate class to detect the key points of faces.
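The idea of keeping the two libraries apart can be sketched in plain C++: the public interface mentions only simple types, and the library-specific code lives in one implementation file. This is only an illustration of the separation, not the article's wrapper. The names `LandmarkPoint`, `FaceBox` and `LandmarkDetector` are hypothetical, and the stand-in implementation simply returns the corners of the box where the real one would call Dlib.

```cpp
#include <memory>
#include <vector>

// Public interface: plain types only, nothing from dlib or OpenCV.
struct LandmarkPoint { double x; double y; };
struct FaceBox { double x, y, width, height; };

class LandmarkDetector {
public:
    LandmarkDetector();
    ~LandmarkDetector();
    std::vector<LandmarkPoint> detect(const FaceBox& face) const;
private:
    struct Impl;                  // defined only in the implementation file
    std::unique_ptr<Impl> impl_;
};

// Implementation file: the only place that would include the dlib headers.
struct LandmarkDetector::Impl {
    // Stand-in for the real detector: returns the four corners of the box
    // (top-left, top-right, bottom-right, bottom-left).
    std::vector<LandmarkPoint> run(const FaceBox& f) const {
        return { {f.x, f.y},
                 {f.x + f.width, f.y},
                 {f.x + f.width, f.y + f.height},
                 {f.x, f.y + f.height} };
    }
};

LandmarkDetector::LandmarkDetector() : impl_(new Impl()) {}
LandmarkDetector::~LandmarkDetector() = default;

std::vector<LandmarkPoint> LandmarkDetector::detect(const FaceBox& face) const {
    return impl_->run(face);
}
```

Code that uses OpenCV then includes only the interface part and never sees a Dlib header, which is the same role the Foundation arrays play in the Objective-C wrapper.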

(1) Create a new FaceDlibWrapper class, rename its .m file to .mm, and override the init method

    #import <dlib/image_processing.h>
    #import <dlib/image_io.h>

    // sp is a dlib::shape_predictor instance variable of this class.
    - (instancetype)init {
        self = [super init];
        if (self) {
            // Initialize the detector from the model file in the app bundle
            NSString *modelFileName = [[NSBundle mainBundle] pathForResource:@"shape_predictor_68_face_landmarks" ofType:@"dat"];
            std::string modelFileNameCString = [modelFileName UTF8String];
            dlib::deserialize(modelFileNameCString) >> sp;
        }
        return self;
    }

(2) Detection methods

    // The return type is spelled out in full only to make the structure clear; a plain NSArray would also work.
    - (NSArray <NSArray <NSValue *> *>*)detecitonOnSampleBuffer:(CMSampleBufferRef)sampleBuffer inRects:(NSArray<NSValue *> *)rects {
        dlib::array2d<dlib::bgr_pixel> img;

        // MARK: magic
        CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
        CVPixelBufferLockBaseAddress(imageBuffer, kCVPixelBufferLock_ReadOnly);

        size_t width = CVPixelBufferGetWidth(imageBuffer);
        size_t height = CVPixelBufferGetHeight(imageBuffer);
        // rows may be padded, so index with bytes per row instead of width * 4
        size_t bytesPerRow = CVPixelBufferGetBytesPerRow(imageBuffer);
        unsigned char *baseBuffer = (unsigned char *)CVPixelBufferGetBaseAddress(imageBuffer);

        // set_size expects rows, cols format
        img.set_size(height, width);

        // copy sample buffer image data into dlib image format
        img.reset();
        size_t position = 0;
        while (img.move_next()) {
            dlib::bgr_pixel& pixel = img.element();

            // assuming BGRA format here
            size_t row = position / width;
            size_t column = position % width;
            size_t bufferLocation = row * bytesPerRow + column * 4;
            unsigned char b = baseBuffer[bufferLocation];
            unsigned char g = baseBuffer[bufferLocation + 1];
            unsigned char r = baseBuffer[bufferLocation + 2];
            // the alpha byte at bufferLocation + 3 is not needed

            pixel = dlib::bgr_pixel(b, g, r);
            position++;
        }

        // unlock the buffer until we need it again
        CVPixelBufferUnlockBaseAddress(imageBuffer, kCVPixelBufferLock_ReadOnly);

        // convert the face bounds list to dlib format
        std::vector<dlib::rectangle> convertedRectangles = [self convertCGRectValueArray:rects];

        NSMutableArray *facesLandmarks = [NSMutableArray arrayWithCapacity:0];
        for (unsigned long j = 0; j < convertedRectangles.size(); ++j) {
            dlib::rectangle oneFaceRect = convertedRectangles[j];

            // detect all landmarks
            dlib::full_object_detection shape = sp(img, oneFaceRect);

            // shape holds the 68 points we need. Because dlib clashes with OpenCV,
            // we convert them to a Foundation array.
            NSMutableArray *landmarks = [NSMutableArray arrayWithCapacity:0];
            for (unsigned long i = 0; i < shape.num_parts(); i++) {
                dlib::point p = shape.part(i);
                [landmarks addObject:[NSValue valueWithCGPoint:CGPointMake(p.x(), p.y())]];
            }
            [facesLandmarks addObject:landmarks];
        }
        return facesLandmarks;
    }

    - (std::vector<dlib::rectangle>)convertCGRectValueArray:(NSArray<NSValue *> *)rects {
        std::vector<dlib::rectangle> myConvertedRects;
        for (NSValue *rectValue in rects) {
            CGRect rect = [rectValue CGRectValue];
            long left = rect.origin.x;
            long top = rect.origin.y;
            long right = left + rect.size.width;
            long bottom = top + rect.size.height;
            dlib::rectangle dlibRect(left, top, right, bottom);

            myConvertedRects.push_back(dlibRect);
        }
        return myConvertedRects;
    }
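One detail of the rectangle conversion is worth spelling out. A `dlib::rectangle` stores inclusive edges (its width is `right - left + 1`), while a `CGRect` stores an origin and a size. The sketch below shows a stricter conversion in plain C++, using stand-in structs so it can be tried without Dlib or CoreGraphics. `RectF`, `PixelRect` and `toInclusiveRect` are hypothetical names, and clamping to the image bounds is an extra assumption that the article's code does not make.

```cpp
#include <algorithm>
#include <cmath>

// Stand-in for CGRect: origin plus size, in pixels (hypothetical type).
struct RectF { double x, y, width, height; };

// Stand-in for dlib::rectangle: all four edges are inclusive pixel indices.
struct PixelRect { long left, top, right, bottom; };

// Converts an origin/size rectangle to inclusive edges and clamps the
// result so that it stays inside an image of the given size.
PixelRect toInclusiveRect(const RectF& r, long imageWidth, long imageHeight) {
    long left   = static_cast<long>(std::floor(r.x));
    long top    = static_cast<long>(std::floor(r.y));
    // Subtract one because the right and bottom edges are inclusive.
    long right  = static_cast<long>(std::floor(r.x + r.width)) - 1;
    long bottom = static_cast<long>(std::floor(r.y + r.height)) - 1;

    left   = std::max(left, 0L);
    top    = std::max(top, 0L);
    right  = std::min(right, imageWidth - 1);
    bottom = std::min(bottom, imageHeight - 1);
    return PixelRect{left, top, right, bottom};
}
```

For a 100 x 50 box at (10, 20) in a 640 x 480 frame this gives left 10, top 20, right 109, bottom 69. The one-pixel difference rarely matters for landmark detection, but the clamping may help when a face sits partly outside the frame.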

(3) Get the key points and draw them in the stream

Continuing from the previous article, we draw the 68 key points in the same place where the face frames are drawn.

    #pragma mark - AVCaptureSession Delegate -
    - (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection
    {
        NSMutableArray *bounds = [NSMutableArray arrayWithCapacity:0];
        for (AVMetadataFaceObject *faceobject in self.currentMetadata) {
            AVMetadataObject *face = [output transformedMetadataObjectForMetadataObject:faceobject connection:connection];
            [bounds addObject:[NSValue valueWithCGRect:face.bounds]];
        }

        UIImage *image = [self imageFromPixelBuffer:sampleBuffer];
        cv::Mat mat;
        UIImageToMat(image, mat);

        // Pass in the camera frame and the array of face rects to get the key points
        NSArray *facesLandmarks = [_dr detecitonOnSampleBuffer:sampleBuffer inRects:bounds];

        // Draw the 68 key points
        for (NSArray *landmarks in facesLandmarks) {
            for (NSValue *point in landmarks) {
                CGPoint p = [point CGPointValue];
                cv::rectangle(mat, cv::Rect(p.x, p.y, 4, 4), cv::Scalar(255, 0, 0, 255), -1);
            }
        }

        for (NSValue *rect in bounds) {
            CGRect r = [rect CGRectValue];
            // Draw the face frame
            cv::rectangle(mat, cv::Rect(r.origin.x, r.origin.y, r.size.width, r.size.height), cv::Scalar(255, 0, 0, 255));
        }

        // Performance is not taken into account here.
        dispatch_async(dispatch_get_main_queue(), ^{
            self.cameraView.image = MatToUIImage(mat);
        });
    }
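The drawing loop treats all 68 points alike. If you want to tell them apart, for example to colour each facial feature differently, the index of each point identifies the feature it belongs to. Below is a minimal sketch of that mapping in plain C++, assuming the index layout commonly documented for the `shape_predictor_68_face_landmarks` model; "right" and "left" are from the subject's point of view. The function name `landmarkRegion` is hypothetical.

```cpp
#include <string>

// Maps a landmark index (0..67) to the facial feature it belongs to,
// following the usual 68-point layout. Hypothetical helper, not a Dlib API.
std::string landmarkRegion(int index) {
    if (index < 0 || index > 67) return "invalid";
    if (index <= 16) return "jaw";            // 0-16
    if (index <= 21) return "right_eyebrow";  // 17-21
    if (index <= 26) return "left_eyebrow";   // 22-26
    if (index <= 35) return "nose";           // 27-35
    if (index <= 41) return "right_eye";      // 36-41
    if (index <= 47) return "left_eye";       // 42-47
    if (index <= 59) return "outer_lip";      // 48-59
    return "inner_lip";                       // 60-67
}
```

In the drawing loop, the position of a point within its `landmarks` array is the index to pass in, since the wrapper adds the points in the order Dlib returns them.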